This is the continuation of the work that was started in R7 Guilin. In Guilin, the MVP implementation added proper multi-tenancy support for read and write operations on PNFs. In Honolulu, this support will be extended to all entity types in AAI. Because of limited bandwidth, the other components (CDS, Policy, SDC) have been descoped and pushed to the I release.

Requirement:

REQ-463

SO:

SO-3029

A&AI:

AAI-2971

SDC (out of scope for R8)

SDC-3188

CDS (out of scope for R8)

CCSDK-2537

Policy (out of scope for R8)

POLICY-2717

Abstract

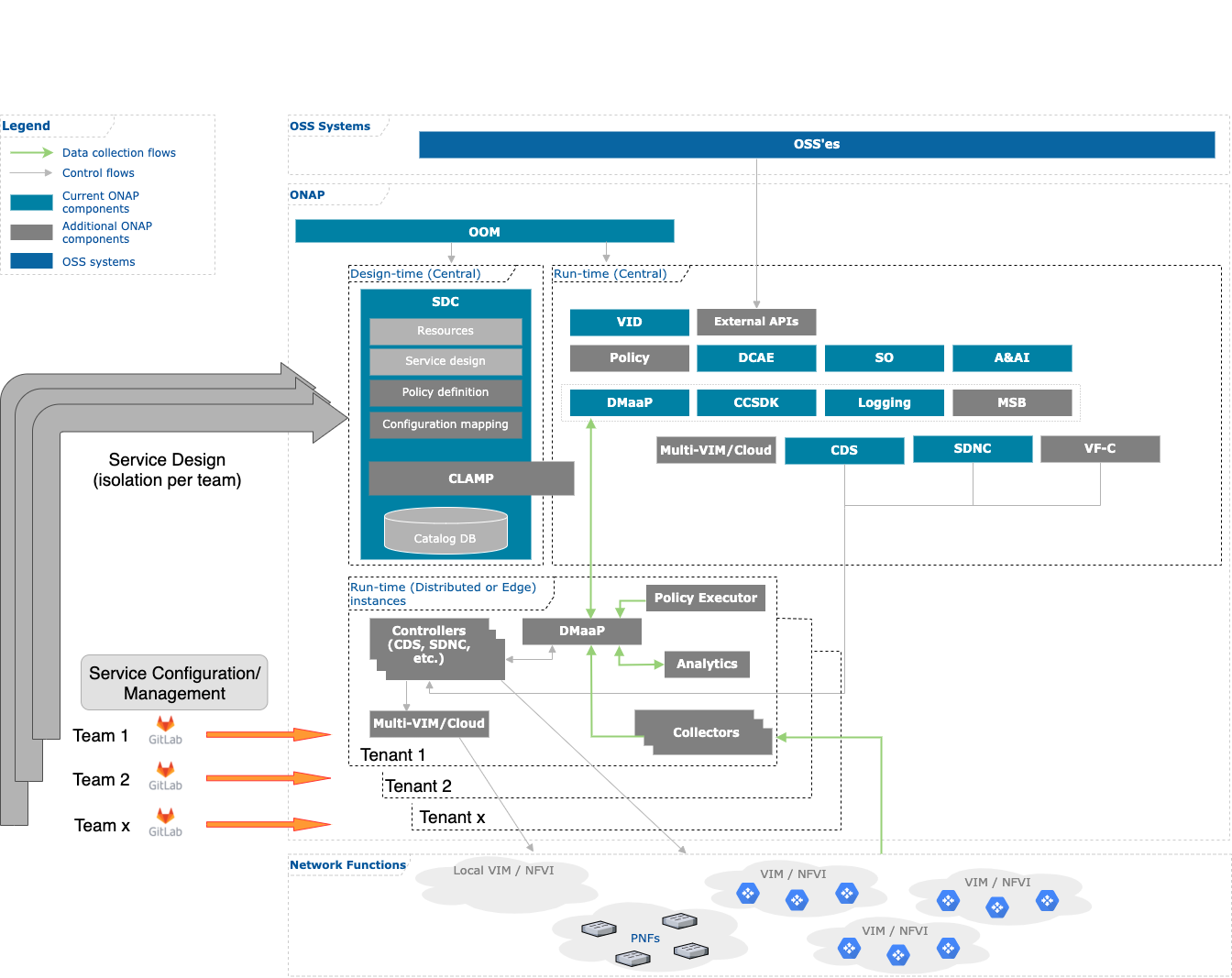

In order to deal with E2E service orchestration across different network domains, some ONAP components need to be logically centralized, such as the inventory, the entry point for service orchestration, and the common models for services and resources.

Examples of such scenarios :

- Services deployed into the data centres, and 'infrastructure' type services within the datacenter

- E2E services such as 5G network slicing

- Services spanning across different domains (wireline, wireless, data centre, underlay and overlay services)

- Any business needs requiring E2E view and interactions between those different services and resources

However, those different services are often authored, deployed and operated by different groups of people within service providers, which in turn brings other challenges :

- Different groups of people requiring the right level of access to author, make changes to and distribute service & resources models (SDC)

- Who can change which models, not simply by role.

- Visibility of those models, which may be 'shared' or usable by other groups, or even restricting visibility of those models by group (avoiding dependencies)

- Different groups of people instantiating, updating, and operating service instances while other groups may either not be allowed to change them, or even view them (SO)

- Visibility and control over inventory data by the groups who 'own' this data (A&AI)

- Authoring, updating and deployment of :

- Controller logic for different services and resources (CDS, SDNC, APPC, etc)

- Data collection parameters & mappings, inventory scope for collectors (DCAE Collectors)

- Analytics applications, their configuration and the management of different instances (DCAE Analytics)

- Policies (Policy) - which apply to certain network domains only, and control of execution of those policies

- Management of which consumers/producers can publish/subscribe to different message bus topics (DMaaP)

For each of the ONAP components, these requirements translate into different implementation approaches :

- Single logical instance, with multi-tenancy built in - examples : SDC, A&AI, SO ?, DMaaP ?

- Several logical instances, where deployment/configuration of those instances can be handled independently - examples : Controllers, Collectors, Policy executors.

Context

The NSO platform has both core components (i.e. AAI, SO, SDC, etc.) and end-user defined, deployed and managed components.

Candidate components for running in individual namespaces (where multi-tenancy is achieved by running several independent instances) :

- Collectors - Outbound (SNMP Poll, gRPC initiated by NSO, recurring queries, SSH configuration gets) or Inbound (SNMP Traps, gRPC initiated by device, syslog, etc.) data coming from Network Element's network interfaces/networks.

- Controllers - Outbound (mostly, using SSH, NETCONF, Restconf, gRPC) for configuration on Network Element's management interfaces/networks.

- Analytics applications - which primarily use Kafka, but also external data sources, to process data coming from Collectors and publish events

- Policy executors - to act upon certain network events to trigger configuration/orchestration flows, mostly consuming from DMaaP/Kafka and triggering actions mostly using REST, gRPC or DMaaP/Kafka.

In order to be efficient in building, testing and operating those components, the different teams need as much flexibility as possible within the platform, while also maintaining a certain level of isolation (especially with regard to data).

Objective

- Common ONAP components implement the right access controls for different end users/tenants to

- Build & distribute the SDC artifacts

- Build & distribute CDS blueprints

- Build & distribute custom operational SO workflows

- Build & distribute Policies

- Build & distribute Control loops

- Access / manage the AAI inventory data for objects they own

- Publish & consume messages on DMaaP / Kafka topics they have access to

- Build, deploy & manage DCAE collectors

- Build, deploy & manage DCAE analytics applications

- Build, deploy & manage CDS executors

For each of those the level of maturity varies.

SDC

For any artifacts developed & distributed through SDC there is already a partial implementation through user roles in the ONAP Portal framework - however it may not be extensive enough to meet the operational requirements we have.

The Portal framework has different role definitions and memberships for different users - however there may be a need for a group/tenant notion as well. This covers items in orange above (partially meeting the need w/ ONAP)

AAI

The AAI authentication framework (red) is not extensive enough to allow any fine grained control over which users can access which elements of the Inventory. Because of this, access to the AAI APIs must be restricted until proper AAA is in place.

DCAE

DCAE components (collectors & analytics) in green - deployed outside of the DCAE Controller framework (i.e. using Gitlab pipelines w/ Helm charts) can be controlled by the tenants, based on the roles they have in the tenant space on Kubernetes clusters and their group membership in Gitlab - so this is mostly covered already.

CDS

CDS artifact authoring & distribution should be done through the integration with SDC (orange). For the execution of the blueprints (green), in order to provide access to the execution logs, and provide end-user teams with full control over the lifecycle of the executors - a model similar to the DCAE controllers & analytics applications is used - controlled through Kubernetes roles and Gitlab group membership for their authoring & deployment.

Detailed assessment / perceived impacts

CDS (or other controllers)

Who is responsible for the routing (which instance of CDS would be used for a given service/resource) ?

Possible patterns

- Run fully independent controller instances, and whichever components have to run controller actions need to know which blueprint processor they should talk to

- Upon creation/initial service instantiation, assign a CDS instance and inventory it.

- This requires support in the inventory (A&AI) to identify

- This may mean impacts to components calling controllers, having to look up that inventory binding

- Which component deals with the assignment of that CDS instance ? i.e. CDS ? or simply supply it.

- This requires a mechanism to define, and provide visibility to which Controller instance is used for a given service or resource

- Ideas :

- Similar to a controller-resource registry (applicable at different levels - service, generic-vnf, pnf, etc.)

- Challenges

- As a whole unit (Controller blueprint processor/logic interpreter, controller data store (CDS DB, MDSAL), externalized executors, etc.)

- Complete scaling unit for controllers :

TBC : Impacts on SDNC, APP-C and VF-C ?
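The controller-resource registry idea above can be sketched as follows. This is a minimal illustration only, not an existing A&AI or CDS API: the `ControllerRegistry` class, the lookup order in `LEVELS`, and the instance names used in the usage note are all hypothetical, and the in-memory dictionary stands in for a binding that would actually be stored in the inventory.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical lookup order: the most specific inventory level wins.
LEVELS = ("pnf", "generic-vnf", "service")


@dataclass
class ControllerRegistry:
    """Illustrative in-memory stand-in for controller bindings kept in the inventory."""

    # Maps (level, object id) to the name of the assigned controller instance.
    bindings: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def assign(self, level: str, object_id: str, controller: str) -> None:
        """Record which controller instance handles the given object."""
        if level not in LEVELS:
            raise ValueError(f"unknown level: {level}")
        self.bindings[(level, object_id)] = controller

    def resolve(self, path: Dict[str, str]) -> Optional[str]:
        """Return the controller bound at the most specific level present in `path`.

        `path` maps a level name to the object id at that level,
        e.g. {"service": "svc-1", "pnf": "pnf-9"}.
        """
        for level in LEVELS:
            object_id = path.get(level)
            if object_id is not None and (level, object_id) in self.bindings:
                return self.bindings[(level, object_id)]
        return None
```

For example, after `assign("service", "svc-1", "cds-wireless")` and `assign("pnf", "pnf-9", "cds-datacenter")`, resolving `{"service": "svc-1", "pnf": "pnf-9"}` returns the PNF-level binding, while a PNF without its own binding falls back to the service-level one. Any component calling a controller would need this kind of lookup before each call.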

Policy

Policy is a framework - there are design & runtime components. It supports having multiple policy executors, but all deployed in a single namespace only.

How would we achieve having run-time components run in different namespaces ?

- Centralized :

- PAP

- Policy API

- Distributed / by namespace :

- All the PDP's (X/D/A/*)

Current limitations :

- <requires review of the implementation/architecture> - Bruno Sakoto

- <requires looking at Apex and how it would handle this> - Bruno Sakoto

Other impacts :

- If controllers are distributed, Policy executors need to fetch from A&AI which controller instance to use for a given resource

- Or, we use K8S's namespace/DNS - but this has limitations and would be inconsistent with SO's way of dealing with this.
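To make the namespace/DNS option concrete, here is a small sketch of how a Policy executor could derive a controller endpoint from a tenant namespace. It assumes the default Kubernetes cluster domain (`cluster.local`) and a hypothetical service name, `cds-blueprints-processor`, neither of which is specified on this page.

```python
def controller_dns_name(namespace: str,
                        service: str = "cds-blueprints-processor",
                        cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name of a controller service in a tenant namespace.

    Follows the standard Kubernetes service naming scheme
    <service>.<namespace>.svc.<cluster-domain>. The default service name
    is a placeholder chosen for illustration.
    """
    if not namespace:
        raise ValueError("namespace must not be empty")
    return f"{service}.{namespace}.svc.{cluster_domain}"
```

The sketch also shows one limitation: the caller still has to know which namespace owns the resource, so the mapping from resource to tenant has to come from somewhere, and this convention only supports one controller instance per service name per namespace.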

SDC

A&AI

An example of A&AI multi-tenancy is the owning-entity concept - which defines which group of people owns specific A&AI resources or services.

This concept exists today for service-instance object only, but should be extended - as an example - for PNFs :

In this example, you could have:

- PNF objects 'owned' by an infrastructure team (owning-entity : datacenter-team)

- 'Infrastructure' type services (i.e. underlay networking), designed and deployed by that same team, on those PNFs (owning-entity : datacenter-team)

- Services deployed by tenants of the data center, which require configuration on those PNFs to be deployed (owning-entity : wireless-core-team)

This requires A&AI to have a minimal level of relationship information if we want to enforce any RBAC, to understand who should be allowed to change/update/delete the service instances or PNF objects.

This exists today for service-instance objects, but not PNFs.

Other scenarios in which those can be useful :

- Updates of PNF objects, such as when doing OS upgrades, hardware replacements (serial number updates), etc. should only be allowed by the groups that own those PNFs
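The ownership rule described above can be sketched as a simple check. This is an illustration under stated assumptions, not the A&AI implementation: the `InventoryObject` and `Caller` types and the `is_allowed` function are hypothetical, and the choice to leave reads open to every caller is an assumption made for this sketch (a stricter model could also restrict visibility).

```python
from dataclasses import dataclass
from typing import FrozenSet

# Assumption for this sketch: read-only methods are open to all callers.
READ_METHODS = frozenset({"GET"})


@dataclass(frozen=True)
class InventoryObject:
    object_type: str    # e.g. "pnf" or "service-instance"
    object_id: str
    owning_entity: str  # e.g. "datacenter-team"


@dataclass(frozen=True)
class Caller:
    name: str
    owning_entities: FrozenSet[str]  # groups the caller belongs to


def is_allowed(caller: Caller, obj: InventoryObject, method: str) -> bool:
    """Allow writes only when the caller belongs to the object's owning entity."""
    if method.upper() in READ_METHODS:
        return True
    return obj.owning_entity in caller.owning_entities
```

With a PNF owned by `datacenter-team`, a caller from `wireless-core-team` could read the object but not update or delete it, while a member of `datacenter-team` could update the serial number after a hardware replacement.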

Multi-tenancy model

There are various aspects which need to be covered, and the current proposed model is as follows (to be refined) :