Project Name:

- Proposed name for the project: ONAP Operations Manager

- Proposed name for the repository: oom

Project description:

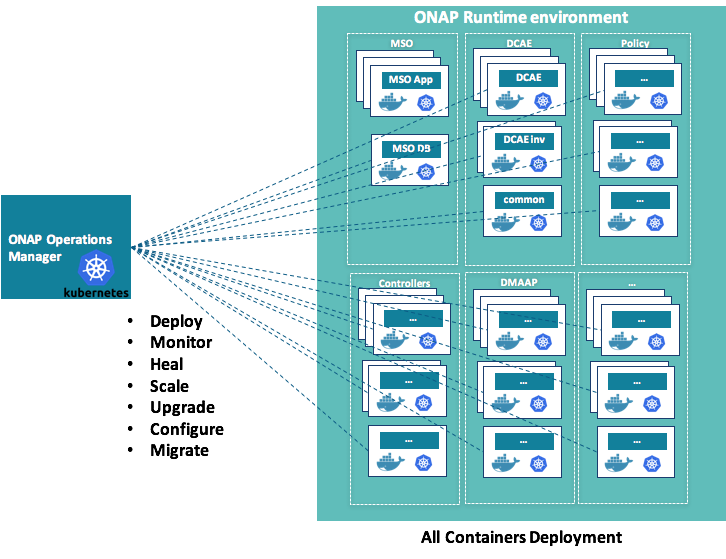

This proposal introduces the ONAP Operations Manager (OOM) to efficiently deploy, manage, and operate the ONAP platform, its components (e.g. MSO, DCAE, SDC, etc.), and its infrastructure (VMs, containers). The OOM addresses the current lack of a consistent platform-wide method for managing software components, their health, resiliency, and other lifecycle management functions. With OOM, service providers will have a single dashboard/UI to deploy and un-deploy the entire (or partial) ONAP platform, view the different instances being managed and the state of each component, monitor actions that have been taken as part of a control loop (e.g., scale in-out, self-heal), and trigger other control actions like capacity augments across data centers.

The primary benefits of this approach are as follows:

- Flexible Platform Deployment - While current ONAP deployment automation enables the entire ONAP to be created, more flexibility is needed to support the dynamic nature of getting ONAP instantiated, tested and operational. Specifically, we need the capability to repeatedly deploy, un-deploy, and make changes to different environments (dev, system test, DevOps, production), for either the platform as a whole or individual components. To this end, we are introducing the ONAP Operations Manager, with orchestration capabilities, into the deployment, un-deployment and change management process associated with the platform.

- State Management of ONAP platform components – Our initial health checking of Components and software modules is done manually and lacks consistency. We propose that key modules/services in each ONAP Component be able to self-register with, or be discovered by, the ONAP Operations Manager, which in turn performs regular health checks and determines the state of the Components/software.

- Platform Operations Orchestration / Control Loop Actions – Currently there is a lack of event-triggered corrective actions defined for platform components. The ONAP Operations Manager will enable DevOps to view events and to manually trigger corrective actions. The actions might be simple initially – stop, start or restart the platform component. Over time, more advanced control loop automation, triggered by policy, will be built into the ONAP Operations Manager.
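The state management and control loop ideas above can be illustrated with a minimal Python sketch. It is not OOM code: `Component`, `ComponentRegistry`, `register`, and `run_checks` are hypothetical names chosen for illustration, and the health checks and restart actions are stand-in callables rather than real probes. The sketch registers components, records their state after each health check, and applies the simplest corrective action (restart) when a check fails.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Component:
    """A managed platform component (hypothetical model, not an OOM type)."""
    name: str
    health_check: Callable[[], bool]   # returns True when the component is healthy
    restart: Callable[[], None]        # simplest corrective action
    state: str = "UNKNOWN"


@dataclass
class ComponentRegistry:
    """Tracks registered components, their state, and a log of events."""
    components: Dict[str, Component] = field(default_factory=dict)
    events: List[str] = field(default_factory=list)

    def register(self, component: Component) -> None:
        self.components[component.name] = component
        self.events.append(f"registered {component.name}")

    def run_checks(self, auto_heal: bool = False) -> Dict[str, str]:
        """Run every health check once; optionally restart failed components."""
        for comp in self.components.values():
            healthy = comp.health_check()
            comp.state = "UP" if healthy else "DOWN"
            if not healthy:
                self.events.append(f"{comp.name} failed health check")
                if auto_heal:
                    comp.restart()
                    self.events.append(f"restarted {comp.name}")
        return {name: c.state for name, c in self.components.items()}


# Stand-in components: "sdc" is healthy, "mso" is not.
restarted: List[str] = []
registry = ComponentRegistry()
registry.register(Component("sdc", lambda: True, lambda: restarted.append("sdc")))
registry.register(Component("mso", lambda: False, lambda: restarted.append("mso")))
states = registry.run_checks(auto_heal=True)
```

With `auto_heal=False` the same loop only records events, which corresponds to the initial mode described above where DevOps users view events and trigger corrective actions manually.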

Proposed ONAP Operations Manager Functional Architecture:

- UI/Dashboard – this provides DevOps users a view of the inventory, events and state of what is being managed by the ONAP Operations Manager, and the ability to manually trigger corrective actions on a component. The users can also deploy ONAP instances, a component, or a change to a software module within a component.

- API handler – this supports NB API calls from external clients and from the UI/Dashboard

- Inventory & data store – tracks the inventory, events, health, and state of the ONAP instances and individual components

- ONAP Lifecycle Manager – this is a model-driven orchestration engine for deploying/un-deploying instances and components. It will trigger downstream plugin actions such as instantiating VMs, creating containers, and stop/restart actions. The target implementation should aim at TOSCA as the master information model for deploying/managing ONAP Platform components.

- SB Interface Layer – this is a collection of plugins that support the actions and interactions the ONAP Operations Manager needs to perform on ONAP instances and other external cloud-related resources. Plugins may include OpenStack, Docker, Kubernetes, Chef, Ansible, etc.

- Service & Configuration Registry – this function performs the registration and discovery of components/software to be managed, as well as the subsequent health checks on each registered component/software

- Hierarchical OOM architecture for scaling and specialization - OOM's architecture allows for a hierarchical implementation for scale as volume/load and scope of the underlying ONAP platform instance(s) increases. (see attached deck for more information on OOM's hierarchical architecture - Slides 7-8: https://wiki.onap.org/pages/worddav/preview.action?fileName=ONAP_Operations_Manager_Proposalv2.pdf&pageId=3246809.)
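To make the relationship between the Lifecycle Manager, the inventory, and the SB Interface Layer more concrete, here is a small Python sketch. All class and method names (`SouthboundPlugin`, `FakeContainerPlugin`, `LifecycleManager`, `register_plugin`) are assumptions made for illustration; the proposal does not define a plugin API, and the fake plugin returns strings instead of calling a real cloud or container platform.

```python
from abc import ABC, abstractmethod
from typing import Dict


class SouthboundPlugin(ABC):
    """Hypothetical contract each SB plugin would implement."""

    @abstractmethod
    def deploy(self, component: str) -> str: ...

    @abstractmethod
    def undeploy(self, component: str) -> str: ...


class FakeContainerPlugin(SouthboundPlugin):
    """Stand-in plugin; a real one would talk to Docker, Kubernetes, etc."""

    def deploy(self, component: str) -> str:
        return f"container:{component}:created"

    def undeploy(self, component: str) -> str:
        return f"container:{component}:removed"


class LifecycleManager:
    """Dispatches lifecycle actions to plugins and records what runs where."""

    def __init__(self) -> None:
        self._plugins: Dict[str, SouthboundPlugin] = {}
        self.inventory: Dict[str, str] = {}  # component name -> target platform

    def register_plugin(self, target: str, plugin: SouthboundPlugin) -> None:
        self._plugins[target] = plugin

    def deploy(self, component: str, target: str) -> str:
        plugin = self._plugins[target]       # KeyError if no plugin is registered
        result = plugin.deploy(component)
        self.inventory[component] = target
        return result

    def undeploy(self, component: str) -> str:
        target = self.inventory.pop(component)
        return self._plugins[target].undeploy(component)


manager = LifecycleManager()
manager.register_plugin("containers", FakeContainerPlugin())
deploy_result = manager.deploy("sdc", "containers")
inventory_after_deploy = dict(manager.inventory)
undeploy_result = manager.undeploy("sdc")
```

Keeping the plugin contract narrow is one way to let new targets be added without changing the orchestration logic; a model-driven engine would derive the `deploy` calls from a model rather than from direct method calls as shown here.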

Scope:

- In scope: ONAP Platform lifecycle management & automation, i.e.

- Platform Deployment: Automated deployment/un-deployment of ONAP instance(s) / Automated deployment/un-deployment of individual platform components

- Platform Monitoring & healing: Monitor platform state, Platform health checks, fault tolerance and self-healing

- Platform Scaling: Platform horizontal scalability

- Platform Upgrades: Platform upgrades

- Platform Configurations: Manage overall platform components configurations

- Platform migrations: Manage migration of platform components

- Out of scope:

Architecture Alignment:

- How does this project fit into the rest of the ONAP Architecture?

- The ONAP Operations Manager (OOM) is used to deploy, manage and automate ONAP platform components operations.

- How does this align with external standards/specifications?

- At target, TOSCA should be used to model platform deployments and operations.

- Are there dependencies with other open source projects?

- Open source technologies could be Cloudify, Kubernetes, Docker, and others.

- The OOM will have dependency on the current proposed "Common Controller Framework" (CommServ 2) project and will leverage its software framework.

- The OOM will need to work with the MSB project to provide a comprehensive Service Registry function. At this point, the two projects seem to need to manage the service registry at two different levels, i.e. MSB at the micro/macro service endpoint level vs OOM at the micro-service/component level.

- The OOM will have inter-dependencies with DCAE (specifically the DCAE Controller). OOM will be built on the CCF software framework for ONAP platform management. DCAE Controller will be updated to use the same CCF software framework. In addition, OOM ("Root Node") and DCAE Controller ("DCAE Node") form the initial hierarchy of managers for ONAP platform management (as depicted in Slides 7-8 of this deck: https://wiki.onap.org/pages/worddav/preview.action?fileName=ONAP_Operations_Manager_Proposalv2.pdf&pageId=3246809). OOM Root Node is the Tier 1 manager responsible for the entire platform and delegates to the Tier 2 DCAE Controller/DCAE Node to be responsible for managing DCAE and its analytics and collector microservices.

- The current proposed "System Integration and Testing" (Integration) Project might have a dependency on this project - use OOM to deploy/undeploy/change the test environments, including creation of the container layer.

- This project also has a dependency on the LF infrastructure (seed code from the ci-management project)

Initial Implementation Proposal: ONAP on Containers

Description:

This milestone describes a deployment and orchestration option for the ONAP platform components (MSO, SDNC, DCAE, etc.) based on Docker containers and the open-source Kubernetes container management system. This solution removes the need for VMs to be deployed on the servers hosting ONAP components and allows Docker containers to directly run on the host operating system. As ONAP uses Docker containers presently, minimal changes to existing ONAP artifacts will be required.

The primary benefits of this approach are as follows:

- Life-cycle Management. Kubernetes is a comprehensive system for managing the life-cycle of containerized applications. Its use as a platform manager will ease the deployment of ONAP, provide fault tolerance and horizontal scalability, and enable seamless upgrades.

- Hardware Efficiency. As opposed to VMs that require a guest operating system be deployed along with the application, containers provide similar application encapsulation with neither the computing, memory and storage overhead nor the associated long term support costs of those guest operating systems. An informal goal of the project is to be able to create a development deployment of ONAP that can be hosted on a laptop.

- Deployment Speed. Eliminating the guest operating system results in containers coming into service much faster than a VM equivalent. This advantage can be particularly useful for ONAP where rapid reaction to inevitable failures will be critical in production environments.

- Cloud Provider Flexibility. A Kubernetes deployment of ONAP enables hosting the platform on multiple hosted cloud solutions like Google Compute Engine, AWS EC2, Microsoft Azure, CenturyLink Cloud, IBM Bluemix and more.

In no way does this project impair or undervalue the VM deployment methodology currently used in ONAP. Selection of an appropriate deployment solution is left to the ONAP user.

The ONAP on Containers project is part of the ONAP Operations Manager project and focuses on (as shown in green):

- Converting ONAP components deployment to docker containers

- Orchestrating ONAP components lifecycle using Kubernetes

As part of the OOM project, it will manage the lifecycle of individual containers on the ONAP runtime environment.

Challenges

Although the current structure of ONAP lends itself to a container-based manager, there are challenges that need to be overcome to complete this project:

- Duplicate containers – The VM structure of ONAP hides the internal container structure of each component, including the existence of duplicate containers such as MariaDB.

- DCAE - The DCAE component is not containerized and also includes its own VM orchestration system. A possible solution is to not use the DCAE Controller but to port this controller’s policies to Kubernetes directly, such as scaling CDAP nodes to match offered capacity.

- Ports - Flattening the containers also exposes port conflicts between the containers, which need to be resolved.

- Permanent Volumes - One or more permanent volumes need to be established to hold non-ephemeral configuration and state data.

- Configuration Parameters - Currently ONAP configuration parameters are stored in multiple files; a solution to coordinate these configuration parameters is required. Kubernetes ConfigMaps may provide a solution, or at least a partial solution, to this problem.

- Container Dependencies – ONAP has built-in temporal dependencies between containers on startup. Supporting these dependencies will likely result in multiple Kubernetes deployment specifications.
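Two of the challenges above, port conflicts and startup dependencies, lend themselves to a short Python sketch. The container names, port numbers, and dependency edges below are made up for illustration and do not describe the actual ONAP deployment; the helper names `find_port_conflicts` and `startup_order` are likewise hypothetical. The ordering uses the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later).

```python
from collections import defaultdict
from graphlib import TopologicalSorter
from typing import Dict, Iterable, List, Set


def find_port_conflicts(ports_by_container: Dict[str, Iterable[int]]) -> Dict[int, List[str]]:
    """Return every port claimed by more than one container once flattened."""
    owners: Dict[int, List[str]] = defaultdict(list)
    for container, ports in ports_by_container.items():
        for port in ports:
            owners[port].append(container)
    return {port: sorted(names) for port, names in owners.items() if len(names) > 1}


def startup_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Order containers so that each one starts after its dependencies."""
    return list(TopologicalSorter(depends_on).static_order())


# Illustrative data only: two database containers that both want port 3306.
ports = {
    "mso-mariadb": [3306],
    "sdnc-db": [3306],
    "portal": [8989],
}
conflicts = find_port_conflicts(ports)

# Illustrative dependencies: each key starts after the containers in its set.
dependencies = {
    "mso": {"mso-mariadb"},
    "sdnc": {"sdnc-db"},
    "mso-mariadb": set(),
    "sdnc-db": set(),
}
order = startup_order(dependencies)
```

A check like `find_port_conflicts` could run before deployment specifications are generated, and the groups implied by `startup_order` are one possible way to decide how the components split across multiple deployment specifications.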

Scope:

- In scope: ONAP Operations Manager implementation using Docker containers and Kubernetes, i.e.

- Platform Deployment: Automated deployment/un-deployment of ONAP instance(s) / Automated deployment/un-deployment of individual platform components using Docker containers and Kubernetes

- Platform Monitoring & healing: Monitor platform state, platform health checks, fault tolerance and self-healing using Docker containers and Kubernetes

- Platform Scaling: Platform horizontal scalability using Docker containers and Kubernetes

- Platform Upgrades: Platform upgrades using Docker containers and Kubernetes

- Platform Configurations: Manage overall platform component configurations using Docker containers and Kubernetes

- Platform migrations: Manage migration of platform components using Docker containers and Kubernetes

- Out of scope: support of container networking for VNFs. The project is about containerization of the ONAP platform itself.

Architecture Alignment:

- How does this project fit into the rest of the ONAP Architecture?

- Please Include architecture diagram if possible

- What other ONAP projects does this project depend on?

- ONAP Operations Manager (OOM) [formerly called ONAP Controller]: The ONAP on Containers project is a sub-project of OOM focusing on Docker/Kubernetes management of the ONAP platform components

- The current proposed "System Integration and Testing" (Integration) Project might have a dependency on this project - use OOM to deploy/undeploy/change the test environments, including creation of the container layer.

- This project also has a dependency on the LF infrastructure (seed code from the ci-management project)

- How does this align with external standards/specifications?

- N/A

- Are there dependencies with other open source projects?

- Docker

- Kubernetes

Resources:

- Primary Contact Person:

- Mike Elliott (Amdocs)

- Committers and Contributors:

Other Information:

- link to seed code:

- Vendor Neutral

- if the proposal is coming from an existing proprietary codebase, have you ensured that all proprietary trademarks, logos, product names, etc., have been removed?

- Meets Board policy (including IPR)

Use the above information to create a key project facts section on your project page

Key Project Facts

Project Name:

- JIRA project name: ONAP Operations Manager

- JIRA project prefix: oom

Repo name: oom

Lifecycle State: Approved

Primary Contact: Mike Elliott (Amdocs)

Project Lead: Mike Elliott (Amdocs)

Mailing list tag: oom

Committers: OOM Team

Link to TSC approval:

Link to approval of additional submitters:

22 Comments

HuabingZhao

When I went through this proposal, I noticed that there might be some overlap between this proposal and MSB in the "registry" functionality.

Based on my understanding, OOM mainly aims at the deployment and management of the ONAP Platform components, so the intention of registration and health checking in OOM is the management of these components, such as reporting alarms based on the check result, restarting/healing a component when it is down, and scaling a component out or in according to its service load.

For MSB, registration info is used for service request routing, so the info should include service name, service exposed URL, version, service instance endpoint (IP:Port), service protocol, service TTL, service load balancing method, etc.

Service health check of MSB is used to maintain the correct health status of service in the service registry so the service consumer will not get a failed service provider instance, and Service API Gateway uses the Service information in the registry to route the service requests to the service provider instance.

In conclusion, the intention and focus of Registration/health check of MSB and OOM are different, so the derived APIs/Data model may also be different.

Despite the difference, is there potential to normalize and combine these two efforts?

John Ng

I posted the following comment on the MSB project page. I am posting it here as well so other members interested in OOM will see it and continue the discussion ... for OOM's service registry/health-checking, we are proposing to reuse the open source software Consul (https://www.consul.io/). It essentially accomplishes the service registration, discovery and health checking functions. Also, we see the service registration/health-checking functions as needed beyond microservices. We need to monitor and manage the entire ONAP platform - ONAP component software, microservices, databases, and eventually the underlying connectivity across the platform - many of which will have multiple instances as we scale out and distribute them geographically to achieve HA. Onboarding of microservices is currently being performed by the DCAE controller for analytics applications and data collectors (we see analytics and collectors as our initial microservices). We see this onboarding functionality moving beyond DCAE into the overall ONAP platform as we support non-DCAE microservices.

HuabingZhao

Hi John,

I duplicated the following response from MSB project page to here as well :-)

I fully agree that "we see the service registration/health-checking functions as needed beyond microservices", and that's exactly what MSB is providing. There is no difference in the sense of service registration/health-checking/routing/load balancing for "normal" service and "micro" service.

Actually, in this context, the term "microservice" just means that the service's scope is tightly organized around one business capability, so it is smaller than a "normal" service. Just like a microservice, a "bigger" service is also a process that needs to expose a service endpoint to be accessed by its consumers. So both "micro" and "normal" services need the service registration/discovery/routing/load balancing mechanism provided by MSB. However, according to the ONAP architecture principles, all services should evolve to become service-based, with a clear, concise function addressed by each service, following the principles of microservices. https://wiki.onap.org/display/DW/Draft+Architecture+Principles

MSB already provides a common mechanism to register service instances and monitor their health/state, whether it is a "micro" service or a "normal" service. MSB also supports different services from L4 to L7, such as TCP, UDP, HTTP/S (REST), HTTP/S (UI), etc. The information can be used by MSB for gateway, service routing and load balancing.

Besides, I'd like to clarify that the "service" mentioned in OOM and MSB are different, the two project proposals use the same term to express different meanings, which might cause confusion.

OOM aims to provide lifecycle management for ONAP components, while MSB facilitates the routing/LB of services, which are defined by a specific port. So the "services" managed by OOM are at the process level; in contrast, the "services" managed by MSB are of smaller granularity, at the endpoint level. It is common for a component (process) to have multiple endpoints (services).

OOM checks the health of ONAP components at the process level and manages their lifecycle accordingly. MSB takes care of the health of a service at the endpoint level and makes sure the service request from the consumer is routed to an available service endpoint.

While OOM and MSB manage the process and the service separately at different levels, these two projects do need to cooperate. As I understand it, after OOM starts, stops, or scales an ONAP component, it broadcasts a message for that specific component. MSB will consume that message and manage the service at the endpoint level for service request routing and load balancing.

John Ng

Hi Huabing. Thank you for your response. We see OOM registering and health-checking each ONAP component and its subcomponents as services. For example, for SO, that might include the API handler, the Camunda orchestration engine, and MariaDB - each being a separate service to be registered with Consul. Also, I mentioned above that OOM's scope includes managing ONAP's microservices (DCAE analytics and collectors being the initial ones). I want to point that out again. I have attached the deck that was shared at the Middletown NJ Developers Meeting in May (filename: ONAP_Operations_Manager_Proposal.pdf). Please feel free to review and comment. Thanks.

John Ng

Huabing: The MSB project mentions the use of Consul. Could you share with us how you are using Consul - for registration, health-checks, URL-to-IP/port look ups? Do you make use of the Consul agent for these capabilities? Thanks.

HuabingZhao

Yes, we do. We do use Consul as the solution for all those functionalities you mentioned at end point service level. We also have an abstract layer on top of Consul to make all these functionalities agnostic for a specific solution provider and add those functionalities which are needed to be extended beyond Consul API.

Christopher Rath

We have been planning to put an abstraction layer above our use of Consul as well for the Common Controller Framework project for use by OOM and DCAE. If you already have this available in MSB it would make sense to merge MSB into CCF so all controllers can reuse it.

John Ng

Huabing, Chris: let's agree to combine these efforts on registration and discovery and work out the best technical path forward. ok? thanks.

HuabingZhao

As I was saying in my previous response, the "services" mentioned in OOM and MSB are at different levels. So they might need to be independent, at least logically. Let's find a time to schedule a meeting to discuss more details and figure out how to consolidate these efforts.

John Ng

Huabing: do you want me to schedule a call for discussion? when is a good time of day for you? we need to involve folks from MSB, CCFSDK, and OOM projects.

HuabingZhao

John,

Very sorry for having missed this comment. Currently I'm busy with MSB project creation stuff. I'll contact you when I'm available.

Thanks,

Huabing

Catherine Lefevre

I would also like us to review the following JIRA items as part of this proposal if they are not solved earlier. Thank you.

COMMON-10

APPC-1

COMMON-12

COMMON-13

COMMON-5

COMMON-6

COMMON-4

COMMON-3

UCA-4

UCA-2

maopeng zhang

The seed code links are almost all from other projects, right? What are the links for this project? Thank you.

David Sauvageau

hi maopeng zhang updated link: https://gerrit.onap.org/r/gitweb?p=oom.git

thanks!

Manoj Nair

Will OOM support deploying ONAP Components in a PaaS environment like Openshift/Azure or Public Cloud like Amazon EC2? As per the presentation on 07/11, Cloudify is used for various deployment options ranging from K8S, Openstack etc. Would like to know if the option is limited to these two platforms.

David Sauvageau

I believe infrastructure deployment should not be limited to any of the options you mentioned. Rather, a key architecture principle of OOM is that we will make ONAP deployable on any infrastructure, and that each infrastructure is an option.

So, Docker will be the container environment, K8S will be used as an option to deploy within a data center, and Cloudify will be used as an option to deploy the infrastructure itself. That being said, we definitely need to limit the number of variations we will work on for the initial release in order to keep focus. I believe Docker+K8S will be the initial focus so that deployment can occur on any infrastructure. Deployment of the K8S cluster itself using Cloudify was discussed as the stretch target for the Amsterdam release.

Michael O'Brien

Just a marker for us to attend the next policy group meeting on 2 Aug 2017 at 0800 EDT to answer a couple of OOM questions posed yesterday (I went through the notes). I will attend it for OOM.

07-19-2017 Policy Weekly Meeting

The Logging project is relying on OOM to get an Elasticsearch/Kibana solution up - I could not find a weekly meeting yet.

Logging Enhancements Project Proposal

Pamela Dragosh

Thanks Michael O'Brien, that would be helpful for us. I will add it to the agenda if you are OK with that.

Pamela Dragosh

Michael O'Brien, just to confirm: would you be available for tomorrow's Policy weekly meeting? I have some time slotted to discuss OOM integration.

Michael O'Brien

Pamela, Yes, very good, I will be there tomorrow morning

/michael

Notes:

- policy meet 20170802

tasks

- continue move to 1.1

- record oom meetings

- verify dependency definition between services (static like compose or dynamic?)

- look at breaking up pods into microservices at the api layer

- pypdp is out in 1.1.

- MSB integration from policy perspective (precludes direct OOM integration)

- expand on pluggable SBI multiVIM for VFModule instantiation

- record k8s bootstrap and demo - and do a quick demo

- policy does not need dcae as yet - but DMaaP from MessageRouter will be required - which are up

- integration with CLAMP?

- CLI integration?

- tosca support (how we will implement)

- CI Test integration with OOM?

Michael O'Brien

Kibana Statistics

Dhananjaya rao N

Hi All,

I am new to ONAP and am trying to deploy it in an OpenShift environment using OOM (version 1.0). I executed the "createConfig.sh" script and the config-init pod was created, but its status is "Error" and the /dockerdata-nfs/ directory is empty.

# kubectl get pods --namespace=onap --show-all

Below are the prints from /var/log/messages

Sep 19 17:13:42 RHOPEN1 atomic-openshift-node: E0919 17:13:42.435927 2351 docker_manager.go:761] Logging security options: {key:seccomp value:unconfined msg:}

Sep 19 17:13:42 RHOPEN1 dockerd-current: time="2017-09-19T17:13:42.436362241+05:30" level=info msg="{Action=start, LoginUID=4294967295, PID=2351}"

Sep 19 17:13:42 RHOPEN1 kernel: XFS (dm-16): Mounting V5 Filesystem

Sep 19 17:13:42 RHOPEN1 kernel: XFS (dm-16): Ending clean mount

Sep 19 17:13:42 RHOPEN1 systemd: Started docker container 5adcabf868b2e15ad97b84bea643663f8d6caa048bef727e064d2633ab9f3334.

Sep 19 17:13:42 RHOPEN1 systemd: Starting docker container 5adcabf868b2e15ad97b84bea643663f8d6caa048bef727e064d2633ab9f3334.

Sep 19 17:13:42 RHOPEN1 kernel: SELinux: mount invalid. Same superblock, different security settings for (dev mqueue, type mqueue)

Sep 19 17:13:42 RHOPEN1 oci-register-machine[4103]: 2017/09/19 17:13:42 Register machine: prestart 5adcabf868b2e15ad97b84bea643663f8d6caa048bef727e064d2633ab9f3334 4085 /var/lib/docker/devicemapper/mnt/18ae7ca9cdd1c0eac58677136385187fa860a072c92a7297271ed1bde461fe33/rootfs

Sep 19 17:13:42 RHOPEN1 systemd-machined: New machine 5adcabf868b2e15ad97b84bea643663f.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: prestart /var/lib/docker/devicemapper/mnt/18ae7ca9cdd1c0eac58677136385187fa860a072c92a7297271ed1bde461fe33/rootfs

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker/overlay2]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker/overlay]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/overlay2]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/overlay]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/devicemapper]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/containers/]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/container/storage/lvm]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/container/storage/devicemapper]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/container/storage/overlay]: No such file or directory. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Could not find mapping for mount [/var/lib/docker/devicemapper] from host to conatiner. Skipping.

Sep 19 17:13:42 RHOPEN1 oci-umount: umounthook <info>: Could not find mapping for mount [/var/lib/docker/containers] from host to conatiner. Skipping.

Sep 19 17:13:42 RHOPEN1 kernel: IPv6: ADDRCONF(NETDEV_UP): eth0: link is not ready

Sep 19 17:13:42 RHOPEN1 kernel: IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

Sep 19 17:13:42 RHOPEN1 NetworkManager[793]: <info> [1505821422.7092] device (vethbcce07e6): link connected

Sep 19 17:13:42 RHOPEN1 NetworkManager[793]: <info> [1505821422.7104] manager: (vethbcce07e6): new Veth device (/org/freedesktop/NetworkManager/Devices/12)

Sep 19 17:13:42 RHOPEN1 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl add-port br0 vethbcce07e6

Sep 19 17:13:42 RHOPEN1 kernel: device vethbcce07e6 entered promiscuous mode

Sep 19 17:13:42 RHOPEN1 NetworkManager[793]: <info> [1505821422.7206] device (vethbcce07e6): enslaved to non-master-type device ovs-system; ignoring

Sep 19 17:13:42 RHOPEN1 atomic-openshift-node: I0919 17:13:42.749989 2351 docker_manager.go:2177] Determined pod ip after infra change: "config-init_onap(c8f94fdc-9d2f-11e7-b1d4-005056b0a166)": "10.128.0.38"

Sep 19 17:13:42 RHOPEN1 atomic-openshift-node: I0919 17:13:42.752553 2351 provider.go:119] Refreshing cache for provider: *credentialprovider.defaultDockerConfigProvider

Sep 19 17:13:42 RHOPEN1 atomic-openshift-node: I0919 17:13:42.752733 2351 docker.go:290] Pulling image oomk8s/config-init:1.0.1 without credentials

Sep 19 17:13:42 RHOPEN1 dockerd-current: time="2017-09-19T17:13:42.753090797+05:30" level=info msg="{Action=create, LoginUID=4294967295, PID=2351}"

Sep 19 17:13:43 RHOPEN1 atomic-openshift-node: I0919 17:13:43.210481 2351 kubelet.go:2328] SyncLoop (PLEG): "config-init_onap(c8f94fdc-9d2f-11e7-b1d4-005056b0a166)", event: &pleg.PodLifecycleEvent{ID:"c8f94fdc-9d2f-11e7-b1d4-005056b0a166", Type:"ContainerStarted", Data:"5adcabf868b2e15ad97b84bea643663f8d6caa048bef727e064d2633ab9f3334"}

Sep 19 17:13:45 RHOPEN1 atomic-openshift-node: I0919 17:13:45.772029 2351 conversion.go:133] failed to handle multiple devices for container. Skipping Filesystem stats

Sep 19 17:13:45 RHOPEN1 atomic-openshift-node: I0919 17:13:45.772071 2351 conversion.go:133] failed to handle multiple devices for container. Skipping Filesystem stats

Sep 19 17:13:52 RHOPEN1 atomic-openshift-node: I0919 17:13:52.807160 2351 kube_docker_client.go:317] Pulling image "oomk8s/config-init:1.0.1": "Trying to pull repository registry.access.redhat.com/oomk8s/config-init ... "

Sep 19 17:13:55 RHOPEN1 dockerd-current: time="2017-09-19T17:13:55.210457429+05:30" level=error msg="Not continuing with pull after error: unknown: Not Found"

Sep 19 17:13:55 RHOPEN1 atomic-openshift-node: I0919 17:13:55.824542 2351 conversion.go:133] failed to handle multiple devices for container. Skipping Filesystem stats

Sep 19 17:13:55 RHOPEN1 atomic-openshift-node: I0919 17:13:55.824577 2351 conversion.go:133] failed to handle multiple devices for container. Skipping Filesystem stats

Sep 19 17:14:00 RHOPEN1 dockerd-current: time="2017-09-19T17:14:00.546926953+05:30" level=error msg="Not continuing with pull after error: unknown: Not Found"

Sep 19 17:14:02 RHOPEN1 atomic-openshift-node: I0919 17:14:02.807174 2351 kube_docker_client.go:317] Pulling image "oomk8s/config-init:1.0.1": "Trying to pull repository docker.io/oomk8s/config-init ... "

Sep 19 17:14:05 RHOPEN1 atomic-openshift-node: I0919 17:14:05.888096 2351 conversion.go:133] failed to handle multiple devices for container. Skipping Filesystem stats

Sep 19 17:14:05 RHOPEN1 atomic-openshift-node: I0919 17:14:05.888126 2351 conversion.go:133] failed to handle multiple devices for container. Skipping Filesystem stats

Sep 19 17:14:12 RHOPEN1 atomic-openshift-node: I0919 17:14:12.524350 2351 kube_docker_client.go:320] Stop pulling image "oomk8s/config-init:1.0.1": "Status: Image is up to date for docker.io/oomk8s/config-init:1.0.1"

Sep 19 17:14:12 RHOPEN1 dockerd-current: time="2017-09-19T17:14:12.529490716+05:30" level=info msg="{Action=create, LoginUID=4294967295, PID=2351}"

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Mounting V5 Filesystem

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Ending clean mount

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Unmounting Filesystem

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Mounting V5 Filesystem

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Ending clean mount

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Unmounting Filesystem

Sep 19 17:14:12 RHOPEN1 atomic-openshift-node: E0919 17:14:12.765919 2351 docker_manager.go:761] Logging security options: {key:seccomp value:unconfined msg:}

Sep 19 17:14:12 RHOPEN1 dockerd-current: time="2017-09-19T17:14:12.766398036+05:30" level=info msg="{Action=start, LoginUID=4294967295, PID=2351}"

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Mounting V5 Filesystem

Sep 19 17:14:12 RHOPEN1 kernel: XFS (dm-17): Ending clean mount

Sep 19 17:14:12 RHOPEN1 systemd: Started docker container 5ce7ece03a9b0639d1589a33005092042e908101dfea3a2e535899c68fd7253a.

Sep 19 17:14:12 RHOPEN1 systemd: Starting docker container 5ce7ece03a9b0639d1589a33005092042e908101dfea3a2e535899c68fd7253a.

Sep 19 17:14:12 RHOPEN1 kernel: SELinux: mount invalid. Same superblock, different security settings for (dev mqueue, type mqueue)

Sep 19 17:14:12 RHOPEN1 oci-register-machine[4277]: 2017/09/19 17:14:12 Register machine: prestart 5ce7ece03a9b0639d1589a33005092042e908101dfea3a2e535899c68fd7253a 4270 /var/lib/docker/devicemapper/mnt/6fd42ecbf565f4137d7be23c933a1a98cfe62a1b8504c28420e709a47b5dcfc0/rootfs

Sep 19 17:14:12 RHOPEN1 systemd-machined: New machine 5ce7ece03a9b0639d1589a3300509204.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: prestart /var/lib/docker/devicemapper/mnt/6fd42ecbf565f4137d7be23c933a1a98cfe62a1b8504c28420e709a47b5dcfc0/rootfs

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker/overlay2]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker/overlay]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/overlay2]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/overlay]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/devicemapper]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/docker-latest/containers/]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/container/storage/lvm]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/container/storage/devicemapper]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Failed to canonicalize path [/var/lib/container/storage/overlay]: No such file or directory. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Could not find mapping for mount [/var/lib/docker/devicemapper] from host to conatiner. Skipping.

Sep 19 17:14:12 RHOPEN1 oci-umount: umounthook <info>: Could not find mapping for mount [/var/lib/docker/containers] from host to conatiner. Skipping.

Sep 19 17:14:13 RHOPEN1 systemd-machined: Machine 5ce7ece03a9b0639d1589a3300509204 terminated.

Sep 19 17:14:13 RHOPEN1 oci-register-machine[4324]: 2017/09/19 17:14:13 Register machine: poststop 5ce7ece03a9b0639d1589a33005092042e908101dfea3a2e535899c68fd7253a 0 /var/lib/docker/devicemapper/mnt/6fd42ecbf565f4137d7be23c933a1a98cfe62a1b8504c28420e709a47b5dcfc0/rootfs

Sep 19 17:14:13 RHOPEN1 kernel: XFS (dm-17): Unmounting Filesystem

Sep 19 17:14:13 RHOPEN1 atomic-openshift-node: I0919 17:14:13.471190 2351 kubelet.go:2328] SyncLoop (PLEG): "config-init_onap(c8f94fdc-9d2f-11e7-b1d4-005056b0a166)", event: &pleg.PodLifecycleEvent{ID:"c8f94fdc-9d2f-11e7-b1d4-005056b0a166", Type:"ContainerDied", Data:"5ce7ece03a9b0639d1589a33005092042e908101dfea3a2e535899c68fd7253a"}

Sep 19 17:14:13 RHOPEN1 docker: I0919 11:44:13.479834 1 factory.go:211] Replication controller "onap/" has been deleted

Sep 19 17:14:13 RHOPEN1 ovs-vsctl: ovs|00001|vsctl|INFO|Called as ovs-vsctl --if-exists del-port vethbcce07e6

Sep 19 17:14:13 RHOPEN1 kernel: device vethbcce07e6 left promiscuous mode

Sep 19 17:14:13 RHOPEN1 atomic-openshift-node: I0919 17:14:13.566681 2351 docker_manager.go:1507] Killing container "5adcabf868b2e15ad97b84bea643663f8d6caa048bef727e064d2633ab9f3334 onap/config-init" with 30 second grace period

Sep 19 17:14:13 RHOPEN1 dockerd-current: time="2017-09-19T17:14:13.567056917+05:30" level=info msg="{Action=stop, LoginUID=4294967295, PID=2351}"

Sep 19 17:14:13 RHOPEN1 systemd-machined: Machine 5adcabf868b2e15ad97b84bea643663f terminated.

Sep 19 17:14:13 RHOPEN1 oci-register-machine[4381]: 2017/09/19 17:14:13 Register machine: poststop 5adcabf868b2e15ad97b84bea643663f8d6caa048bef727e064d2633ab9f3334 0 /var/lib/docker/devicemapper/mnt/18ae7ca9cdd1c0eac58677136385187fa860a072c92a7297271ed1bde461fe33/rootfs

Sep 19 17:14:13 RHOPEN1 kernel: XFS (dm-16): Unmounting Filesystem

Can someone guide me on how to install ONAP in an OpenShift environment?

Thanks,

Dhananjay