This is a potential draft of a project proposal template. It is not final or to be used until the TSC approves it.

Project Name:

- Proposed name for the project: DCAE

- Proposed name for the top level repository name: dcaegen2

- Note: For the 4Q17 release, one of the major goals for DCAE is to replace the "old" controller that is currently in gerrit.onap.org with a new controller that follows the Common Controller Framework. The switch will maintain external compatibility (with other ONAP components), but it will NOT be backward compatible internally. That is, subcomponents built for the old controller will not work with the new controller, and vice versa. We propose to use a new top-level name, "dcaegen2", for repos of subcomponents that are compatible with the new controller, because this appears to be the cleanest way to avoid confusion. The existing "dcae" top level still hosts repos of subcomponent projects that are compatible with the old controller. Eventually we will phase out the "dcae" tree.


Project description:

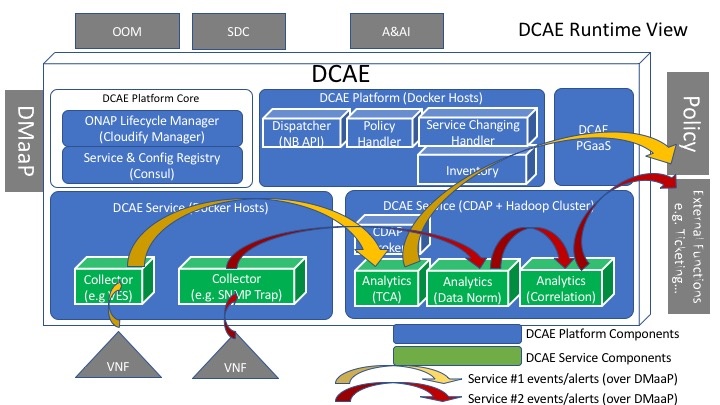

DCAE is the umbrella name for a number of components that collectively fulfill the role of Data Collection, Analytics, and Events generation for ONAP. The DCAE architecture targets flexible, pluggable, microservice-oriented, model-based component deployment and service composition. DCAE also supports multi-site collection and analytics operations, which are essential for large ONAP deployments.

DCAE components generally fall into two categories: DCAE Platform components and DCAE Service components. The DCAE Platform consists of components that are needed for any deployment. They form the foundation for deploying and managing DCAE Service components, which are deployed on demand based on specific collection and analytics needs.

DCAE Platform

The DCAE Platform consists of a growing list of components. For the R1 release, this includes the Cloudify Manager, Consul, Dispatcher, Policy Handler, Service Changing Handler, Service Infrastructure (Docker host and CDAP cluster), and CDAP Broker. Their roles and functions are described below:

- Cloudify Manager: Cloudify, through its extensive set of plugins, can orchestrate resources based on relationship topologies, at many levels and across different technologies. It is the lifecycle management engine of DCAE: resource deployment, change, allocation, configuration, and similar operations are all done through Cloudify. [Note: This component is part of the Common Controller SDK (ccsdk) project. How DCAE uses it is described further below under deployment.]

- Consul: Consul is a tool for service discovery, distributed health checking (fault detection), and key-value (KV) storage. DCAE uses Consul as its service and configuration registry. [Note: This component is part of the Common Controller SDK (ccsdk) project. How DCAE uses it is described further below under deployment.]

- Service infrastructure: The DCAE platform supports two kinds of infrastructure: Docker container hosts and CDAP/Hadoop clusters. The former runs containerized applications and services; the latter runs CDAP/Hadoop-based big data analytics.

- Dispatcher: The Dispatcher is the northbound (NB) API provider for DCAE Services. Service-related triggers, such as deploying/undeploying services or changing configurations, all arrive at the Dispatcher, which enriches each request, invokes the appropriate Blueprints, and calls Cloudify Manager plugins to complete the necessary changes in virtual resources.

- Inventory: Inventory tracks DCAE related resource information such as various Blueprints and templates that are used by Cloudify Manager to deploy and configure components, as well as inventory information extracted from A&AI that is related to but not really part of DCAE, such as the relationships between virtual network resources and their physical infrastructures.

- PGaaS (PostgreSQL as a Service): Inventory is backed by a PostgreSQL database for data storage.

- Policy Handler and Service Changing Handler: They are the interfacing modules for specific external components such as Policy, SDC, etc.

- CDAP Broker: The CDAP Broker interfaces between CDAP and Cloudify Manager, enabling Cloudify to carry out various CDAP operations on the CDAP cluster.
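As a minimal sketch of how a component might read its settings from the Service & Config Registry, the Python below decodes a response from Consul's KV HTTP API (`GET /v1/kv/<key>`), which returns a JSON list of entries whose `Value` field is base64-encoded. The key `example-collector` and the assumption that each stored value is a JSON document are hypothetical; this does not describe how DCAE actually lays out its keys in Consul.

```python
import base64
import json


def decode_consul_kv(response_body: str) -> dict:
    """Decode a Consul `GET /v1/kv/<key>` response body into {key: config}.

    Consul returns a JSON list of entries. Each entry names its key under
    "Key" and carries the stored bytes base64-encoded under "Value".
    This sketch assumes (hypothetically) that every stored value is JSON.
    """
    config = {}
    for entry in json.loads(response_body):
        raw = entry.get("Value")
        if raw is None:  # entries such as folder keys can have a null Value
            continue
        decoded = base64.b64decode(raw).decode("utf-8")
        config[entry["Key"]] = json.loads(decoded)
    return config


# Simulated response for a hypothetical component key "example-collector".
stored = json.dumps({"port": 8080, "output_topic": "example-topic"})
body = json.dumps([
    {"Key": "example-collector",
     "Value": base64.b64encode(stored.encode("utf-8")).decode("ascii")},
])
print(decode_consul_kv(body))
```

With the `?recurse` query parameter, Consul returns every key under a prefix in the same list format, which the loop above already handles.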

The DCAE platform is expected to be deployed through the ONAP Operations Manager (OOM). There are two ways to deploy a DCAE system: 1. the OOM deploys all DCAE components (both Platform and Service components); or 2. the OOM deploys only the core DCAE platform functions, namely the Lifecycle Manager (Cloudify) and the Service & Config Registry (Consul), and this platform core then deploys all other DCAE Platform components. Note that the platform core is based on the same Common Controller SDK software tools that OOM itself uses. Because of its multi-level responsibility structure, option 2 is better suited to larger DCAE systems that span multiple sites and cross security and infrastructure technology boundaries. We also use option 2 as the example in the DCAE Service component deployment description.
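To make the split of responsibilities between the two options concrete, here is a small Python sketch that maps each component to the entity that deploys it. The component names come from the platform list above; the grouping and the function `deployment_plan` are illustrative only and do not correspond to any OOM or DCAE configuration format.

```python
from enum import Enum
from typing import Dict, List

# Component names from the platform description; grouping is illustrative.
PLATFORM_CORE = ("Cloudify Manager", "Consul")
OTHER_PLATFORM = ("Dispatcher", "Inventory", "PGaaS", "Policy Handler",
                  "Service Changing Handler", "CDAP Broker")


class DeploymentOption(Enum):
    OOM_DEPLOYS_ALL = 1   # option 1: OOM deploys every DCAE component
    OOM_DEPLOYS_CORE = 2  # option 2: OOM deploys only the platform core


def deployment_plan(option: DeploymentOption,
                    service_components: List[str]) -> Dict[str, str]:
    """Map each component to the entity responsible for deploying it."""
    # The platform core is deployed by OOM under both options.
    plan = {name: "OOM" for name in PLATFORM_CORE}
    # Everything else is deployed by OOM (option 1) or by the core (option 2).
    deployer = ("OOM" if option is DeploymentOption.OOM_DEPLOYS_ALL
                else "DCAE platform core")
    for name in OTHER_PLATFORM + tuple(service_components):
        plan[name] = deployer
    return plan


for option in DeploymentOption:
    print(option.name, deployment_plan(option, ["VES collector", "TCA"]))
```

Under option 2, only two entries are owned by OOM; everything else, including Service components added later, is owned by the platform core, which is what enables the multi-level structure described above.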

DCAE Services

DCAE provides data collection and analysis services to ONAP. Different analysis services require different collection and analytics components. These components are distinct from DCAE Platform components because they are dynamically deployed and managed based on service needs; moreover, they are deployed and managed by the DCAE Platform itself. These are the DCAE Service components.

Under DCAE, collectors and analytics, either as Docker containers or as CDAP applications, are on-boarded to the DCAE Platform as Blueprints. At deployment time, triggered by API calls through the Dispatcher and other interfacing Platform modules, the Cloudify Manager deploys the required DCAE Service resources onto the Docker host or CDAP cluster selected by the DCAE Platform. The illustration below depicts DCAE deploying two services (yellow and red). The required DCAE Service components are deployed onto the DCAE Platform. In this example, the yellow service consists of a VNF Event Streaming (VES) collector and a Threshold Crossing Analytics (TCA) component, while the red service consists of an SNMP Trap collector, followed by a data normalization step and a Correlation analytics component.

In addition, the DCAE Platform also configures the data paths between the Service components, e.g. by setting up DMaaP topics and configurations.

The figure below further illustrates how performance measurement and fault management data (i.e. VNF events, SNMP traps, and alert events) flow through the DCAE Service components and eventually leave DCAE to reach downstream components such as ONAP Policy, or other external systems such as ticketing.
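The yellow and red service compositions, and the DMaaP data paths the Platform sets up between their components, can be sketched as a linear chain in which each hop gets its own topic. This is a hedged illustration only: the `Link` type, the `wire_service` function, and the topic naming scheme are hypothetical. Real topic names are set up by the DCAE Platform.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Link:
    """One data path: `publisher` writes to `topic`, `subscriber` reads it."""
    publisher: str
    topic: str
    subscriber: str


def wire_service(service: str, chain: List[str],
                 sink: Optional[str] = None) -> List[Link]:
    """Create one topic per hop in a linear chain of Service components.

    If `sink` is given (e.g. a downstream consumer such as Policy), the last
    component in the chain also publishes to it.
    """
    hops = chain + [sink] if sink else chain
    links = []
    for upstream, downstream in zip(hops, hops[1:]):
        # Hypothetical naming scheme, for illustration only.
        topic = f"{service}.{upstream}-to-{downstream}"
        links.append(Link(upstream, topic, downstream))
    return links


yellow = wire_service("yellow", ["ves-collector", "tca"], sink="policy")
red = wire_service("red", ["snmptrap-collector", "normalizer", "correlation"])
for link in yellow + red:
    print(f"{link.publisher} -> {link.topic} -> {link.subscriber}")
```

A linear chain is the simplest case; a composition where one collector feeds several analytics would need a graph rather than a list, but the idea of one configured topic per data path stays the same.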

Portal/GUI

(This section addresses related TSC comments; it is not part of the DCAE project scope.)

A dedicated DCAE Portal/GUI is not part of the DCAE project under the current scope. In the future, if such a portal is deemed necessary, it may be developed under DCAE or under the Portal or VID project. At present, certain aspects of DCAE operational status can be displayed using a combination of the native GUIs/portals of the open source tools used by DCAE (e.g. the CDAP GUI, the Cloudify portal), the CLAMP cockpit for a more service-level, end-to-end view in which DCAE is only one part, or CLI-style and REST API interaction for status probing.
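As one example of REST-style status probing, the Python sketch below summarizes a response from Consul's health endpoint (`GET /v1/health/service/<name>`), which lists each instance of a service with its node, service registration, and health checks. The service and node names in the sample response are hypothetical, and treating unknown statuses as critical is a choice made for this sketch.

```python
import json

# Consul check states ordered from best to worst.
_SEVERITY = {"passing": 0, "warning": 1, "critical": 2}


def summarize_health(response_body: str) -> list:
    """Summarize a Consul `GET /v1/health/service/<name>` response.

    Returns (node, address:port, worst check status) per service instance.
    Unknown statuses are treated as critical; instances with no checks
    are reported as "unknown" (both are choices made for this sketch).
    """
    summary = []
    for entry in json.loads(response_body):
        node, service = entry["Node"], entry["Service"]
        statuses = [check["Status"] for check in entry.get("Checks", [])]
        worst = max(statuses, key=lambda s: _SEVERITY.get(s, 2),
                    default="unknown")
        # An empty Service.Address means the service uses the node's address.
        address = service.get("Address") or node["Address"]
        summary.append((node["Node"], f"{address}:{service['Port']}", worst))
    return summary


# Simulated response for a hypothetical "ves-collector" service.
body = json.dumps([{
    "Node": {"Node": "dcae-docker-host-1", "Address": "10.0.0.5"},
    "Service": {"Service": "ves-collector", "Address": "", "Port": 8080},
    "Checks": [{"Status": "passing"}, {"Status": "warning"}],
}])
print(summarize_health(body))
```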

Project Scope:

Because of the large potential scope of DCAE, the components proposed for the 4Q17 R1 release are prioritized as follows:

- DCAE Platform: This is the top priority because everything in DCAE depends on it. All platform components listed above fall under this priority.

- DCAE Services: collectors and analytics needed to support the 4Q17 use cases and Open-O harmonization. We have identified the VES and SNMP Trap collectors, and the Threshold Crossing and Data Normalization analytics. We expect the list to grow and be finalized once the ONAP R1 use cases and their control loops are fully defined.

- Other collectors, analytics, and functions that have been identified as valuable by the community will be included based on resource availability in this and future releases of ONAP.

- DCAE Portal and design studio

- Additional normalization of OpenECOMP/OpenO data collection needs and realization

- More TOSCA model based artifact (component, VNF, and data) on-boarding

- Catalog of artifact models

- VNF/PNF data collection

- MultiVIM interfacing

- Event triggered life cycle management

- Cloud computing Infrastructure event collection and analytics

- Predictive analytics

- AI/ML

Architecture Alignment:

How does this project fit into the rest of the ONAP Architecture?

DCAE performs a vital function within the ONAP architecture. DCAE collects performance metrics and fault data from VNFs, PNFs, and the computing infrastructure, performs local and global analytics, and generates events that are provided to downstream ONAP components (e.g. Policy) for further action. DCAE follows the TOSCA-model-based ONAP Operations Manager and Common Controller SDK architecture for component on-boarding and for closed-loop-triggered or event-triggered (e.g. A&AI) collector and analytics deployment, configuration, and scaling.

- What other ONAP projects does this project depend on?

- A&AI, Policy, Micro Services, Modeling, CLAMP, SDC, OOM, CCSDK, DMaaP, Common Services, MultiVIM, Integration, Holmes

- How does this align with external standards/specifications?

- TOSCA

- VES (OPNFV)

- Are there dependencies with other open source projects?

- CDAP, Cloudify, Consul, Hadoop, Elasticsearch, PostgreSQL, MariaDB

Resources:

Project Technical Lead

- Current

- Vijay Venkatesh Kumar (AT&T)

- Past

- Lusheng Ji (AT&T)

Names, gerrit IDs, and company affiliations of the committers

| Name | Gerrit ID | Company | Email | Time Zone | DCAE Component Focus |
|---|---|---|---|---|---|
| Lusheng Ji | wrider | AT&T | lji@research.att.com | New Jersey, USA EST/EDT | |
| Vijay Venkatesh Kumar | vv770d | AT&T | | New Jersey, USA EST/EDT | collectors, controller |
| Tony Hansen | TonyLHansen | AT&T | tony@att.com | New Jersey, USA EST/EDT | database, storage, analytics |
| Mike Hwang | researchmike | AT&T | mhwang@research.att.com | New Jersey, USA EST/EDT | controller |
| Yan Yang | yangyan | China Mobile | yangyanyj@chinamobile.com | Beijing, China, UTC+8 | collectors |
| Xinhui Li | xinhuili | VMware | lxinhui@vmware.com | Beijing, China, UTC+8 | collectors |

Contributors

Names and affiliations of any other contributors

| Company | Name | Email | DCAE Component/Repo Focus | Commitment (%) |
|---|---|---|---|---|
| AT&T | Alok Gupta | ag1367@att.com | VES | |
| | Gayathri Patrachari | | Collector | 50% |
| | Alexei Nekrassov | nekrassov@att.com | Analytics | 50% |
| | Tommy Carpenter | tommy@research.att.com | CDAP Broker, Config binding service | >50% |
| | Jack Lucas | jflucas@research.att.com | Deployment Handler, Cloudify Manager | >50% |
| | Alexander V Shatov | alexs@research.att.com | Policy Handler | >50% |
| | David Ladue | dl3158@att.com | SNMP Trap Collector | 50% |
| | Gokul Singraju | gs244f@att.com | VES | |
| Intel | Maryam Tahhan | | VES | |
| | Tim Verrall | | VES | |
| China Mobile | Yuan Liu | | | |
| Huawei | Avinash S | | | |
| *Actual contribution TBD for the contributors below* | | | | |
| AT&T | Jerry Robinson | | | |
| BOCO | Jingbo Liu | liujingbo@boco.com.cn | | |
| | Zhangxiong Zhou | | | |
| Deutsche Telekom | Mark Fiedler | | | |
| Futurewei | Xin Miao | xin.miao@huawei.com | | |
| IBM | Yusuf Mirza | | | |
| | David Parfett | | | |
| | Mathew Thomas | | | |
| | Janki Vora | | | |
| | Amandeep Singh | | | |
| Orange | Vincent Colas | | | |
| | Olivier Augizeau | | | |
| | Pawel Pawlak | | | |
| Reliance Jio | Aayush Bhatnagar | Aayush.Bhatnagar@ril.com | | |
| | Yog Vashishth | yog.vashishth@ril.com | | |
| | Adityakar Jha | Adityakar.Jha@ril.com | | |
| Tech Mahindra | Sandeep Singh | | | |
| | Abhinav Singh | | | |
| VMware | Sumit Verdi | | | |

- Project Roles (include RACI chart, if applicable)

Other Information

- link to seed code (if applicable)

- Vendor Neutral

- if the proposal is coming from an existing proprietary codebase, have you ensured that all proprietary trademarks, logos, product names, etc., have been removed?

The current seed code has already been scanned and cleaned up to remove all proprietary trademarks, logos, etc., except for references to "openecomp", which are to be replaced by "onap".

Subsequent modifications to the existing seed code should continue to follow the same scanning and clean-up principles.


- Meets Board policy (including IPR)

Use the above information to create a key project facts section on your project page

Key Project Facts

Project Name:

- JIRA project name: Data Collection Analytics and Events

- JIRA project prefix: dcaegen2

Lifecycle State:

- Mature code that needs enhancement/integration: VES collector, TCA analytics, Dispatcher, Service Changing Handler, Inventory PGaaS, CDAP infrastructure, CDAP Broker

- Incubation: Policy Handler, SNMP Trap collector, additional use case specific collectors/analytics/Blueprints, ESaaS

Primary Contact: John F. Murray (AT&T), Lusheng Ji (AT&T)

Project Lead: Lusheng Ji (AT&T)

Mailing list tag: dcaegen2

Repo structure and names:

| Category | Component Repository Name | Maven Group ID | Component Description |
|---|---|---|---|
| Platform | dcaegen2/platform/blueprints | org.onap.dcaegen2.platform.blueprints | Blueprints for the DCAE controller |
| | dcaegen2/platform/plugins | org.onap.dcaegen2.platform.plugins | Plugins for the DCAE controller |
| | dcaegen2/platform/cdapbroker | org.onap.dcaegen2.platform.cdapbroker | CDAP Broker |
| | dcaegen2/platform/cli | org.onap.dcaegen2.platform.cli | CLI tool for onboarding through the new DCAE controller |
| | dcaegen2/platform/deployment-handler | org.onap.dcaegen2.platform.deployment-handler | Deployment handler |
| | dcaegen2/platform/servicechange-handler | org.onap.dcaegen2.platform.servicechange-handler | Service change handler |
| | dcaegen2/platform/inventory-api | org.onap.dcaegen2.platform.inventory-api | DCAE inventory API service |
| | dcaegen2/platform/policy-handler | org.onap.dcaegen2.platform.policy-handler | Policy handler |
| | dcaegen2/platform/configbinding | org.onap.dcaegen2.platform.configbinding | Config binding API service |
| | dcaegen2/platform/registrator | org.onap.dcaegen2.platform.registrator | Registrator |
| | ccsdk/storage/pgaas | org.onap.ccsdk.storage.pgaas | Postgres as a service |
| | ccsdk/storage/esaas | org.onap.ccsdk.storage.esaas | Elasticsearch as a service |
| Service | dcaegen2/collectors/ves | org.onap.dcaegen2.collectors.ves | VNF Event Streaming collector |
| | dcaegen2/collectors/snmptrap | org.onap.dcaegen2.collectors.snmptrap | SNMP Trap collector (TBD) |
| | dcaegen2/analytics/tca | org.onap.dcaegen2.analytics.tca | Threshold crossing analytics |
| Deployments | dcaegen2/deployments | org.onap.dcaegen2.deployments | Hosts configurations and blueprints for different deployments |
| Utils | dcaegen2/utils | org.onap.dcaegen2.utils | Hosts utility/tool code used across components |

Link to approval of additional submitters:

Comments

Xiaojun XIE

Thanks for the proposal. I have a few questions:

Lusheng Ji

So far those two collectors have been identified as the most relevant for supporting the use cases of the 4Q17 release. Many more collectors have been developed for data collection, for example syslog and file copy, but unfortunately we do not have the bandwidth to include more in this release. There is only a little over two months of development time.

For collecting from existing VIMs, special-purpose collectors have been developed, such as the Ceilometer, vCenter, and Contrail collectors, each speaking the specific telemetry and performance/fault measurement API of the target system. These collectors will be included in future releases at a pace prioritized by community interest and development resource availability.

All these collectors follow the same usage pattern as the VES and SNMP collectors. That is, they are on-boarded to the ONAP system by providing model specifications (i.e. TOSCA YAML files), so that the DCAE controller can deploy them dynamically as virtual resources into the sites that best suit the collection goals; they can be configured by the controller, at both deployment time and runtime, based on the overall closed-loop control flow and the corresponding policies; and they interface with the DCAE analytics microservices via the DMaaP data movement bus.

Rebecca Lantz

Hello Lusheng Ji, is there an update on a Ceilometer and/or Gnocchi collector being added to DCAE? Has anyone started PoC work on this?

Srinivasa Addepalli

There is some work going on connecting Prometheus to ONAP, initially for HPA telemetry, but eventually would be extended to talk to DCAE via VES.

Edge Scoping MVP for Casablanca - ONAP Enhancements (section: Aggregated Infrastructure Telemetry Streams (Aligns with HPA requirements, Combining efforts with HPA))

Srini

Vijay Venkatesh Kumar

#1 - The VES specification supports batch events, so batching is already supported by the VES Collector.

#2 - That is handled by a separate collector/microservice (not currently part of ONAP or of this new project proposal). The collector uses the RESTful API provided by Ceilometer to collect stats periodically.

Xiaojun XIE

Thanks for the clarification.

Cang Chen

I have gone through the VES code and did not find the batch collector. Is the batch collector not implemented yet, or is it exactly EventReceipt::receiveMultipleEvents?

Olivier Augizeau

The SNMP Trap collector has been identified. For underlay/WAN scenarios, more collectors have to be considered:

A time series database (e.g. InfluxDB) could be added to the list of potentially interesting databases.

Catherine Lefevre

I have added a comment regarding the Vendor Neutral section.

Suggested tools or anything else equivalent: FOSSology, Blackduck suite, Sonar, CheckMarx.

I have updated the JIRA project name/prefix to align with jira.onap.org

Cang Chen

What is dcaegen2/controller/sch?

Vijay Venkatesh Kumar

sch is an abbreviation for Service chain handler (used in the CLAMP flow); the primary function of this component will be to interface with SDC to get the updated service configuration into DCAE.

Alok Gupta

Batch processing is currently not supported in the VES (evel) agent code. However, the requirements can be found in the requirements documents, and the DCAE collector does support it. We will add it in the next release.

Yan Yang

In the VoLTE use case, we need to collect data from compute nodes, VMs, and VNFs; this data may include performance data, alarm data, etc. I don't know how this data is collected in DCAE, or whether these different levels of data can be collected by the VES Collector.

Vijay Venkatesh Kumar

Hi Yan - It depends on what type of interface you are looking at. The VES specification also includes a performance/measurement domain (in addition to fault, syslog, etc.). Internally, DCAE employs different collectors for measurement stats based on the interfacing protocol (SFTP/rsync/HTTP). Newer VNFs being on-boarded are aligning with the VES spec and support a RESTful interface (in this case, measurement events are handled by the same VES collector).

Yan Yang

Thanks for the clarification and I have two other questions:

1. As far as I know, the data passed to the VES collector is collected through the VES agent, but I'm not sure whether VNFs allow the agent to be embedded. In fact, many VNFs do not allow an agent to be embedded. If a VNF does not allow embedding a VES agent, how do we collect its data, and are there other ways to do so in DCAE?

2. Do you know how the VES agent is implemented, and whether it works by calling shell commands?

Xin Miao

Hi, Yan,

According to my understanding, if the VNF is defined and created through ONAP, then the VES agent should be implemented by design. For any existing third-party VNF to be registered or on-boarded through ONAP and made available in the ONAP domain, a VES agent (acting as a data adapter interfacing with the VES collector) needs to be implemented for each such type of VNF. It is the VNF developer's call how to implement the agent.

Please correct me if I am wrong.

Yuan Liu

Does the DCAE provide the GUI function to show the collected data / events, like performance, alarm, fault, etc.?

Christopher Rath

No, it does not. The role of DCAE is to collect data from VNFs, perform analytics, and post the results of those analytics for other systems to pick up for closed loop, reporting, and longer-term analytics, (e.g. export to a data lake).

Yuan Liu

Thanks. I guess the analytics apps may provide a GUI to show the results. If the portal needs to show something, I guess it can read the data from the data lake.

Alok Gupta

The VES agent currently in ONAP Git is a C library that VNF vendors can use to generate events from their VNFs in VES format. The DCAE collector receives these events, checks them for security and syntax, and provides an acknowledgement.

An example of this integration can be found in the vFW and vLB, two VNFs in ONAP. For additional help or questions, please reach out to me. DCAE has lots of sub-components that can be customized by service providers. I am currently working on a PoC/demo that will support VES 5.0 events: vFW/vLB => DCAE Collector => DMaaP => InfluxDB => Grafana Dashboard. We will have the PoC/demo completed by mid-June 2017. This framework could be used for experimenting with or building new sub-systems in DCAE.
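The collector-side "security and syntax" check mentioned above can be sketched roughly as follows. The DCAE VES collector itself is not reproduced here. This simplified Python version assumes HTTP Basic authentication (the agent sends a user name and password), a small required subset of `commonEventHeader` fields, and a `202 Accepted` status on success; a real collector validates against the full VES JSON schema.

```python
import base64
import hmac
import json

# Subset of commonEventHeader fields this sketch insists on (an assumption).
REQUIRED_HEADER_FIELDS = ("domain", "eventId", "eventName", "sourceName",
                          "sequence", "startEpochMicrosec", "lastEpochMicrosec")


def check_request(auth_header, body, user, password):
    """Return an HTTP status: 401 bad credentials, 400 bad syntax, 202 accepted."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    expected = f"Basic {token}".encode("utf-8")
    # Constant-time comparison of the Authorization header.
    if auth_header is None or not hmac.compare_digest(
            auth_header.encode("utf-8"), expected):
        return 401
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400
    if not isinstance(payload, dict):
        return 400
    if "eventList" in payload:      # batch of events
        events = payload["eventList"]
    elif "event" in payload:        # single event
        events = [payload["event"]]
    else:
        return 400
    if not isinstance(events, list) or not events:
        return 400
    for event in events:
        header = event.get("commonEventHeader") if isinstance(event, dict) else None
        if not isinstance(header, dict) or any(
                f not in header for f in REQUIRED_HEADER_FIELDS):
            return 400
    return 202


auth = "Basic " + base64.b64encode(b"ves:secret").decode("ascii")
good = json.dumps({"event": {"commonEventHeader":
                             {f: "x" for f in REQUIRED_HEADER_FIELDS}}})
print(check_request(auth, good, "ves", "secret"))
```

Checking credentials before parsing the body means unauthenticated senders cannot probe the validator; the acknowledgement is simply the returned status code.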

Yan Yang

Thank you for your detailed explanation. If the VNF does not invoke the C library, or the C library is not loaded into the VNF guest OS, the data will not be collected; is that right? And is the performance data about VMs and compute nodes collected by the C library VES agent or by another agent?

Srinivasa Addepalli

Hi Alok,

Where is this library (the VES agent)? I tried searching for VES in the Gerrit project list and only found two projects: dcae/collectors/ves and dcaegen2/collectors/ves. Thanks in advance.

Thanks

Srini

Alok Gupta

Srini:

The VES library and requirements are currently at:

Hosted in the ONAP Gerrit site: https://gerrit.onap.org/r/gitweb?p=demo.git;a=tree;f=vnfs;hb=HEAD

VES Documentation: https://github.com/att/evel-test-collector/tree/master/docs/att_interface_definition

Alok

Srinivasa Addepalli

Hi Alok,

Thanks for the pointers. I have yet to go through this thoroughly, but I am missing the end-to-end picture; any clarification would be good. I understand that the evel_initialize(..) function takes the VES collector FQDN, the port on which it is listening, the user name and password expected by the VES collector to authenticate the VES agent, etc. From the README document, I also understand that it is the application's responsibility to obtain this information and pass it to this initialize function. Since this configuration varies per deployment, it is not expected to be part of the VNF image itself. Normally, VIMs use the user-data component of cloud-init to pass deployment-specific information such as this. How does this work in ONAP? How do VNFs learn about the VES collector? Who informs them? What guidelines should VNF developers follow? Are there end-to-end sequence diagrams anywhere?

Thanks

Srini
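The question above is left open in this thread. The pattern it describes (deployment-specific collector settings delivered at boot, for example via cloud-init user-data, then read by the VNF) can be sketched in Python as follows. The JSON layout, the file path mentioned in the comment, and the listener URL path are all hypothetical. The parsed values would then be passed to the agent's initialization call (evel_initialize in the C library), whose exact parameters are defined by that library and are not reproduced here.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class CollectorConfig:
    """Deployment-specific VES collector settings (hypothetical format)."""
    fqdn: str
    port: int
    username: str
    password: str
    secure: bool = True

    def event_url(self, api_version: str = "v5") -> str:
        # Assumed VES listener path; confirm against the collector's API spec.
        scheme = "https" if self.secure else "http"
        return f"{scheme}://{self.fqdn}:{self.port}/eventListener/{api_version}"


def load_collector_config(text: str) -> CollectorConfig:
    """Parse settings that cloud-init user-data could have written to disk."""
    raw = json.loads(text)
    missing = [k for k in ("fqdn", "port", "username", "password") if k not in raw]
    if missing:
        raise ValueError(f"missing collector settings: {missing}")
    return CollectorConfig(
        fqdn=raw["fqdn"],
        port=int(raw["port"]),
        username=raw["username"],
        password=raw["password"],
        secure=bool(raw.get("secure", True)),
    )


# In a VNF this text might be read from a file such as /etc/ves/collector.json
# (a hypothetical path written by cloud-init at boot).
cfg = load_collector_config(
    '{"fqdn": "ves.example.net", "port": 8443, "username": "vnf", "password": "s3cret"}'
)
print(cfg.event_url())
```

Keeping these values out of the image and failing fast on missing settings is the property the question asks for; who writes the file in an ONAP deployment is exactly the part that still needs an answer.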

Alok Gupta

Srini:

In one of the DCAE calls I presented a VES overview. The presentation can be found in the meeting notes published by Vijay: https://wiki.onap.org/download/attachments/6592895/ONAP_DCAE_WeeklyMeeting_062917.docx?version=2&modificationDate=1498753255000&api=v2

If you have questions or need an overview, please feel free to set up a call, or join the next DCAE discussion meeting on Thursday at 9:00 AM EST.

Alok

Laurentiu Soica

Is the PoC/demo ready? Otherwise, is there a demo or documentation available for VNF monitoring through a dashboard/GUI?

Catherine Lefevre

I would also like to consider the following JIRA items as part of this proposal if they are not solved earlier:

DCAE-9

DCAE-5

DCAE-10, if the following epic is approved: COMMON-10 - Deploy automatically an ONAP high availability environment (Open)

DCAE-6

DCAE-3

Lusheng Ji

DCAE-9, DCAE-6, and DCAE-3 JIRA items will be addressed under the new controller framework.

DCAE-10 appears to be more of an ONAP system-wide issue, not just a DCAE one. It relates to how the overall ONAP system is deployed and how COMMON-10 is addressed in the integration of other OAM-related projects. DCAE will try to follow the overall ONAP approach for HA.

Christopher Rath

Some clarifications from questions on the Initial Project Proposal Feedback page.

Olivier Augizeau

If "The definition of standard collectors should really be a separate project", which ONAP project is supposed to deal with the data collectors?

Lusheng Ji

DCAE (sub)components can be divided into two camps: platform and services/applications. Platform components are present in all deployments; service/application components are deployed based on the control loops needed. Individual collectors and analytics belong to the services/applications space. Within OpenECOMP/ONAP we have not yet explicitly separated them into individual projects (for example, a DCAE platform project, an SNMP Trap collector project, a threshold crossing analysis project, etc.). This is partly due to how DCAE was historically structured, and partly because we are targeting the first release, the first time that the DCAE platform and the collection/analytics components will work together in a complete ONAP closed loop under the Common Controller Framework. The component developers need to work very closely together. That is why this proposal presents DCAE as an umbrella project covering the platform and the collectors and analytics needed for the use cases. In the future it would make sense to have separate projects for the platform vs. individual collectors and analytics. By then, the documentation, a platform for CI/CD testing and integration, and sample collector/analytics code will all be in place.

Christopher Rath

In response to latest comments from the TSC:

Lusheng Ji

Also in response to TSC comments:

xinhuili

On the Multi-VIM side, to expose VIM-layer FCAPS and monitoring data, we need to support both agent-based and agentless data collection in R1. The agentless approach will be used to collect data from the REST API exposed by the Multi-VIM service. Considering the tight schedule, the data can be VES-compatible, or we can discuss adopting another event format acceptable to DCAE.

Lusheng Ji

Yes, VES would be good for this purpose. What needs further definition is the exact data format specification, how to model the format, and what kind of analytics processing is needed.

Jason Hunt

To document the concerns I raised during the meeting:

Lusheng Ji

Thanks for your comments.

On the first point: DCAE supports two deployment approaches. For the target R1 use case deployments, I would agree with you. The OOM-does-all model is simpler, and, as you pointed out, there is a central place for all state info for all ONAP pieces. However, I would also argue that there are tradeoffs between the two approaches. The second option is meant for larger and more complex deployments. For example, when a collector is deployed at a mobile edge site on the West Coast, it may not be able to access the OOM Consul at an East Coast site because of firewall blocking, whereas a regional site that hosts the DCAE Consul can be accessed from the edge. In this case, the DCAE Consul could also broker the DCAE component info to the OOM. For R1, we have not yet decided which way to go. I think part of the beauty of OOM is that it can be used recursively.

As for MSB, just for the Service & Config Registry purpose, I think the synergy is best realized between OOM and MSB, not between DCAE and MSB. Under option #2, because the DCAE "Service & Config Registry" component is really OOM code just run by DCAE, whatever OOM leverages from MSB, DCAE would use too. On the other hand, if we go with deployment option #1, there won't even be a "Service & Config Registry" under DCAE. There may be other mutually beneficial aspects between MSB and DCAE; the TSC also provided comments along this line. We will review MSB in depth to identify those opportunities.

Jason Hunt

Thank you for the reply. I agree that there will need to be a good discussion on how to handle distributed ONAP components and managing them.

Yan Yang

In the VoLTE use case, FCAPS and monitoring data from VNFs and the VIM layer are needed in R1. This use case involves the interfaces between DCAE and VF-C and between DCAE and Multi-VIM, so these interfaces are needed in DCAE R1, not in a future release.

DCAE should provide a southbound REST API that other projects will use to push relevant data to DCAE for analysis and closed-loop control. In addition, the interface data format also needs to be defined in R1.

Lusheng Ji

For interfacing with VF-C or other ONAP components, DCAE publishes events to the DMaaP Message Bus. Other components can subscribe to those topics to get the data. The devil is always in the details, such as exactly what data and format are needed and what kind of processing or analysis is needed. We need to set up a more detailed discussion.

The VES collector provides the SB REST API for VNFs to push data into DCAE. Please check out VES project under OPNFV (https://wiki.opnfv.org/display/PROJ/VNF+Event+Stream) for more info.
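For a component such as VF-C that wants to consume DCAE output, the publish/subscribe interaction described above boils down to two HTTP calls against the DMaaP Message Router. The Python sketch below only builds the URLs and decodes a subscribe response; it assumes the Message Router REST conventions of `POST /events/{topic}` to publish and `GET /events/{topic}/{consumerGroup}/{consumerId}` (with `timeout` and `limit` query parameters) to read, returning a JSON array of message strings. The host, port 3904, topic, group, and consumer names are hypothetical.

```python
import json
from urllib.parse import quote, urlencode


def publish_url(base: str, topic: str) -> str:
    """URL for publishing to a topic: POST {base}/events/{topic}."""
    return f"{base.rstrip('/')}/events/{quote(topic, safe='')}"


def subscribe_url(base, topic, group, consumer_id, timeout_ms=15000, limit=None):
    """URL for a long-poll read: GET {base}/events/{topic}/{group}/{id}."""
    params = {"timeout": timeout_ms}
    if limit is not None:
        params["limit"] = limit
    path = "/".join(quote(part, safe="") for part in (topic, group, consumer_id))
    return f"{base.rstrip('/')}/events/{path}?{urlencode(params)}"


def decode_messages(response_body: str) -> list:
    """The subscribe response is a JSON array of message strings; parse
    each one as JSON when possible and keep it as text otherwise."""
    messages = []
    for item in json.loads(response_body):
        if isinstance(item, str):
            try:
                item = json.loads(item)
            except json.JSONDecodeError:
                pass
        messages.append(item)
    return messages


base = "http://mr.example.org:3904"
print(publish_url(base, "example.DCAE_OUTPUT"))
print(subscribe_url(base, "example.DCAE_OUTPUT", "vfc-group", "c1", limit=10))
```

Consumers sharing a consumer group split the messages between them, so each subscribing component would normally use its own group.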

Yan Yang

First, is the SB REST API for VNFs also suitable for the VIM layer to push data into DCAE, or does it need to be extended?

Let me clarify my question again. In the VoLTE use case, VF-C needs to push FCAPS data to DCAE for analysis and closed-loop control, so the interfaces between DCAE and VF-C are needed in R1.

Xin Miao

Lusheng Ji

Is SNMP Collector code going to be opened in this release?

Lusheng Ji

Yes, it is planned to be part of this release.

Manoj Nair

As I understand it, DCAE currently enables closed-loop control and overall service assurance in ONAP. I have also seen a rich toolset with the VES collector, CDAP, Cloudify orchestration of blueprints, etc. I would like to know whether any capability is planned just to visually monitor the alarms or telemetry data as received by the collector. Is there any plan to create a portal/dashboard or toolset for logging the alarms/metrics and visualizing them? Currently I guess this is possible only through an analytics application that captures the events from the collector and aggregates them for consumption by a visualization tool.

Michael O'Brien

See the rudimentary TCA/MEASUREMENT tracking stats in the vFirewall demo:

Tutorial: Verifying and Observing a deployed Service Instance

/michael

Geora Barsky

I understand that DCAE subscribes to a DMaaP topic to receive A&AI events. We are looking to implement similar functionality via Robot for the vCPE use cases.

Could you please share where we can find your implementation of the DMaaP subscriber module?

Thanks

Geora

Alok Gupta

Laurentiu Soica:

A demo was completed last June and shown at the OPNFV Summit in Beijing. You can find it on YouTube by searching for VES and ONAP.

Please let me know if you have additional questions.

Laurentiu Soica

Thanks. Basically, I am trying to understand the integration between DMaaP and InfluxDB, in order to store in InfluxDB the data received from the VES agents deployed on VNF VMs.

If my understanding is correct:

In an ONAP deployment where VES agents report data to DCAE, how can I integrate this monitoring solution (InfluxDB + Grafana)?

Thanks,

Laurentiu Soica

GOKUL SINGARAJU

DMaaP is the message bus for publishing and consuming message data. VES agents report events directly to the DCAE collector, which validates them and publishes the processed messages on DMaaP. The VES event messages in the DCAE logs are also parsed by a Python script (Analytics), which saves the relevant data in InfluxDB. A Grafana dashboard displays live data from InfluxDB.
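The parsing step between the VES events and InfluxDB can be sketched as a conversion into InfluxDB line protocol (`measurement,tag=value field=value timestamp`). This is not the demo's actual script: it assumes numeric counters sit directly in the `measurementsForVfScalingFields` block and ignores nested arrays, whereas real VES measurement events nest most counters (for example, per-vNIC counters) in arrays. The function names and the `source`/`domain` tag names are hypothetical.

```python
def _escape(value: str) -> str:
    """Escape commas, spaces and equals signs, as line protocol requires
    in tag keys, tag values and field keys."""
    return value.replace(",", "\\,").replace(" ", "\\ ").replace("=", "\\=")


def ves_to_line_protocol(event: dict, measurement: str = "ves") -> str:
    """Convert one VES measurement event to a single line-protocol row.

    Assumption: numeric counters sit directly in "measurementsForVfScalingFields";
    nested arrays are ignored. `measurement` should be a plain identifier.
    """
    ev = event["event"]
    header = ev["commonEventHeader"]
    block = ev.get("measurementsForVfScalingFields", {})
    tags = "source={},domain={}".format(_escape(header["sourceName"]), _escape(header["domain"]))
    fields = ",".join(
        "{}={}".format(_escape(key), float(value))
        for key, value in sorted(block.items())
        # bool is a subclass of int, so exclude it explicitly
        if isinstance(value, (int, float)) and not isinstance(value, bool)
    )
    if not fields:
        raise ValueError("event has no top-level numeric measurement fields")
    # VES timestamps are microseconds; line protocol defaults to nanoseconds.
    timestamp_ns = int(header["lastEpochMicrosec"]) * 1000
    return "{},{} {} {}".format(measurement, tags, fields, timestamp_ns)
```

Rows like this can be POSTed to the `/write?db=<database>` HTTP endpoint of InfluxDB 1.x, after which Grafana can query them with an InfluxDB data source.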

The demo runs on Ubuntu 16.04 Linux.

Sample VNF VES agents (vFirewall, vLoadBalancer) send data to the DCAE collector.

The demo is an integrated end-to-end service assurance demo featuring:

- VES agent collecting data
- DCAE collector (source code not provided)
- Grafana dashboard with an InfluxDB backend
- iPerf traffic injection, traffic threshold-crossing alarm, and mail alert
- Manual firewall traffic mitigation
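The threshold-crossing alarm can be illustrated with a minimal sketch. The ONSET/ABATED labels follow the closed-loop event status names used in ONAP, as we understand them, but the hysteresis scheme, the function name, and the thresholds are assumptions for illustration; this is not the code of the demo or of the TCA microservice.

```python
from typing import Iterable, List, Tuple


def threshold_crossings(samples: Iterable[float], threshold: float,
                        clear_threshold: float) -> List[Tuple[int, str]]:
    """Return (index, status) pairs for alarm state changes.

    ONSET is emitted when a sample rises above `threshold`; ABATED is emitted
    when, after an ONSET, a sample falls below `clear_threshold`. A lower clear
    threshold (hysteresis) avoids alarm flapping when traffic hovers near the limit.
    """
    if clear_threshold > threshold:
        raise ValueError("clear_threshold must not exceed threshold")
    events = []
    active = False
    for i, value in enumerate(samples):
        if not active and value > threshold:
            active = True
            events.append((i, "ONSET"))
        elif active and value < clear_threshold:
            active = False
            events.append((i, "ABATED"))
    return events
```

For example, with `threshold=100` and `clear_threshold=60`, the traffic samples `[10, 50, 120, 130, 95, 70, 40, 150]` yield an ONSET at index 2, an ABATED at index 6, and a new ONSET at index 7; the dip to 95 and 70 does not clear the alarm.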

https://github.com/att/evel-library/files/1438898/demowk.zip

GOKUL SINGARAJU

Sangeeth G

Hello Everyone,

I am facing an issue with the DCAE deployment. The DCAE VMs are bootstrapped via Cloudify, and the bootup task is failing.

The Designate services are running on the OpenStack side, but I am still seeing the issue. Any suggestions?

2018-03-14 06:19:56 CFY <local> [floatingip_vm00_a9ef0.create] Task succeeded 'neutron_plugin.floatingip.create'

2018-03-14 06:19:56 CFY <local> [private_net_56b12.create] Task started 'neutron_plugin.network.create'

2018-03-14 06:19:56 LOG <local> [private_net_56b12.create] INFO: Using external resource network: oam_onap_3Ctf

2018-03-14 06:19:56 CFY <local> [private_net_56b12.create] Task succeeded 'neutron_plugin.network.create'

2018-03-14 06:19:57 CFY <local> [key_pair_2c3fe] Configuring node

2018-03-14 06:19:57 CFY <local> [security_group_7561d] Configuring node

2018-03-14 06:19:57 CFY <local> [floatingip_vm00_a9ef0] Configuring node

2018-03-14 06:19:57 CFY <local> [private_net_56b12] Configuring node

2018-03-14 06:19:57 CFY <local> [key_pair_2c3fe] Starting node

2018-03-14 06:19:57 CFY <local> [floatingip_vm00_a9ef0] Starting node

2018-03-14 06:19:57 CFY <local> [security_group_7561d] Starting node

2018-03-14 06:19:57 CFY <local> [private_net_56b12] Starting node

2018-03-14 06:19:58 CFY <local> [dns_vm00_059b8] Creating node

2018-03-14 06:19:58 CFY <local> [dns_cm_e2faf] Creating node

2018-03-14 06:19:58 CFY <local> [dns_cm_e2faf.create] Sending task 'dnsdesig.dns_plugin.aneeded'

2018-03-14 06:19:58 CFY <local> [dns_vm00_059b8.create] Sending task 'dnsdesig.dns_plugin.aneeded'

2018-03-14 06:19:58 CFY <local> [dns_cm_e2faf.create] Task started 'dnsdesig.dns_plugin.aneeded'

2018-03-14 06:19:58 CFY <local> [dns_vm00_059b8.create] Task started 'dnsdesig.dns_plugin.aneeded'

2018-03-14 06:19:58 CFY <local> [dns_cm_e2faf.create] Task failed 'dnsdesig.dns_plugin.aneeded' -> 'dns'

2018-03-14 06:19:58 CFY <local> 'install' workflow execution failed: Workflow failed: Task failed 'dnsdesig.dns_plugin.aneeded' -> 'dns'

Workflow failed: Task failed 'dnsdesig.dns_plugin.aneeded' -> 'dns'

Andrew Gauld

Can you see if the dnsdesig plugin raised an exception? It would typically have some explanatory text like "Failed to list DNS zones", "Failed to list DNS record sets", or "Failed to create DNS record set for ...". It can also log the error "DNS zone ... not available for this tenant."

Sangeeth G

Hi Andrew,

Thanks for the response. I don't see anything in the container logs, by the way. Is there anywhere specific you want me to check?

Andrew Gauld

I asked our local bootstrap person to look at this. His suggestion is that, coming from Cloudify, "... → 'dns'" as an error usually indicates a Python KeyError with a key of 'dns'. This would happen if authenticating to the OpenStack Keystone identity service succeeded but the response did not contain the expected entry for Designate. In particular, it expects the service type for Designate to be "dns" (all lower case). In the OpenStack Horizon dashboard, under Project/Compute/Access & Security, on the "API Access" tab, you should be able to see the entry in the "SERVICE" column. (The dashboard seems to capitalize the first letter in its display, so "dns" will appear as "Dns".)
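The "→ 'dns'" form matches how Python renders a KeyError: `str(KeyError('dns'))` is `'dns'`, quotes included. The sketch below reproduces that failure mode by indexing a Keystone v3 service catalog (a list of services, each with a `type` and a list of `endpoints`) with a plain, case-sensitive dict lookup. The helper names are hypothetical and this is not the dnsdesig plugin's actual code; it only shows why a missing or differently spelled service type surfaces as that message.

```python
def endpoints_by_type(catalog: list) -> dict:
    """Index a Keystone v3 service catalog by service type (exact, case-sensitive)."""
    return {service["type"]: service.get("endpoints", []) for service in catalog}


def lookup_endpoints(catalog: list, service_type: str) -> list:
    # Plain dict indexing: raises KeyError(service_type) if the type is absent.
    return endpoints_by_type(catalog)[service_type]


def explain(catalog: list, service_type: str) -> str:
    """Turn the bare KeyError into a readable diagnosis."""
    try:
        found = lookup_endpoints(catalog, service_type)
    except KeyError as exc:
        return "missing service type " + str(exc)
    return "{} endpoint(s) for {!r}".format(len(found), service_type)
```

With a catalog whose Designate entry is typed `"DNS"`, `explain(catalog, "dns")` returns `missing service type 'dns'`, the same `'dns'` text Cloudify prints after the arrow.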

Sangeeth G

Oh yes, that's right. I see "Dns" as the service endpoint under API Access, which does not seem to match Cloudify's 'dns'.

Is there any way to override this?

Andrew Gauld

Good. You can see the entry. The display may not match what's really there, since the display may be showing "Dns" when what's really there is "dns". You may be able to use the OpenStack command line interface (I think it may require admin access) to look at keystone's service catalog, and that should show the services and their endpoints and regions, exactly as they are entered. You want to see that, for the region you're using, there is a public URL for the service type of "dns".

Sangeeth G

I have already verified from the OpenStack CLI that the service type is lowercase 'dns' and has a public URL; Horizon just displays the first character in uppercase.

Andrew Gauld

Good. Then the only other possibility I know of would be a mismatched name of the "region". The name of the region for the service type has to match the name of the region in the bootstrap configuration. If that's not it, I'm out of ideas.
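The region requirement can be sketched as an exact-match lookup over the Keystone v3 catalog, assuming each endpoint carries `interface`, `url`, and `region_id` (Keystone v3 token catalogs also include a `region` name, used here as a fallback). The function names are hypothetical; the point is that both the service type and the region comparison are case-sensitive, so a region spelled differently in the bootstrap configuration finds nothing.

```python
from typing import List, Optional


def _region_of(endpoint: dict) -> Optional[str]:
    # Keystone v3 endpoints carry "region_id"; fall back to "region" if absent.
    return endpoint.get("region_id", endpoint.get("region"))


def public_url(catalog: list, service_type: str, region: str) -> Optional[str]:
    """Return the public URL for `service_type` in `region`, or None.

    Both comparisons are exact and case-sensitive, so "RegionOne" in the
    bootstrap config will not match a catalog region spelled "regionone".
    """
    for service in catalog:
        if service.get("type") != service_type:
            continue
        for endpoint in service.get("endpoints", []):
            if endpoint.get("interface") == "public" and _region_of(endpoint) == region:
                return endpoint.get("url")
    return None


def regions_for(catalog: list, service_type: str) -> List[str]:
    """List the regions that do offer `service_type`, to help spot a mismatch."""
    found = set()
    for service in catalog:
        if service.get("type") == service_type:
            for endpoint in service.get("endpoints", []):
                region = _region_of(endpoint)
                if region is not None:
                    found.add(region)
    return sorted(found)
```

When `public_url` returns `None`, printing `regions_for(catalog, "dns")` next to the region configured for the bootstrap usually makes a case mismatch obvious.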

Sangeeth G

Thanks, Andrew. That was helpful. The problem was with the region name, uppercase here again. I changed it and the deployment proceeded, but it then exited with a credential issue. Is there something specific I need to use? I used the same key for DCAE that I used for all the other ONAP VMs.

When I log in separately, I still see the same issue.

+ nc -z -v -w5 10.53.172.109 22

10.53.172.109: inverse host lookup failed: Unknown host

(UNKNOWN) [10.53.172.109] 22 (ssh) : Connection refused

+ echo .

+ nc -z -v -w5 10.53.172.109 22

10.53.172.109: inverse host lookup failed: Unknown host

(UNKNOWN) [10.53.172.109] 22 (ssh) open

+ sleep 10

Installing Cloudify Manager on 10.53.172.109.

+ echo 'Installing Cloudify Manager on 10.53.172.109.'

++ sed s/PVTIP=//

++ grep PVTIP

++ ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no -i ./key600 centos@10.53.172.109 'echo PVTIP=`curl --silent http://169.254.169.254/2009-04-04/meta-data/local-ipv4`'

Warning: Permanently added '10.53.172.109' (ECDSA) to the list of known hosts.

Permission denied (publickey,gssapi-keyex,gssapi-with-mic).

+ PVTIP=

Cannot access specified machine at 10.53.172.109 using supplied credentials

+ '[' '' = '' ']'

+ echo Cannot access specified machine at 10.53.172.109 using supplied credentials

+ exit

root@onap-dcae-bootstrap:~#

Andrew Gauld

Glad to hear you're making progress.

This looks like a straightforward ssh issue. It looks like you've got good connectivity to the VM. Other possibilities are that the VM image isn't a standard CentOS 7 image and uses some login other than "centos" for administration (an Ubuntu image, for example, would not work), or that the private key (onap.pem) doesn't match the public key the image was booted with (which your comment seems to indicate you've checked). You might also look at the VM's overview and log in the Horizon dashboard to verify that the public key used was the one you expect and that there weren't any obvious problems booting the VM.
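The bootstrap log earlier in this thread separates two layers: the `nc -z` loop eventually reports port 22 open, and only the later `ssh` step fails with `Permission denied (publickey,...)`. A minimal Python sketch of the reachability half, mirroring the `nc -z` / `sleep 10` retry loop, is below; the function name and retry defaults are assumptions. A `True` result only proves TCP connectivity, which is why a key or login-name mismatch is the remaining suspect.

```python
import socket
import time


def wait_for_port(host: str, port: int, attempts: int = 30, delay: float = 10.0,
                  timeout: float = 5.0) -> bool:
    """Poll until a TCP connection to host:port succeeds, like the `nc -z` loop.

    A True result only proves the port is reachable; it says nothing about
    whether SSH authentication with a given key and user will succeed.
    """
    for attempt in range(attempts):
        try:
            with socket.create_connection((host, port), timeout=timeout):
                return True
        except OSError:
            # Refused or timed out: wait before the next attempt, except after the last one.
            if attempt + 1 < attempts:
                time.sleep(delay)
    return False
```

For example, `wait_for_port("10.53.172.109", 22)` would succeed in the situation shown in the log, even though the subsequent ssh login fails.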

Sangeeth G

Yes. The key name attached via OpenStack is the same as for the other ONAP VMs I brought up, and the image also looks good. I brought up a test instance using the CentOS image with a new key I created, and I can log in as the centos user without any issue. The problem appears only when I use the same key that I used for all the other ONAP VMs.

Andrew Gauld

I'm out of suggestions.

Sangeeth G

Is there an option to use a separate key only for the DCAE VMs?

Andrew Gauld

As far as I'm aware, this is all standard OpenStack stuff. You create SSH key pairs according to your preferences or local organization policies, upload the public keys to OpenStack with names that help you keep track of them, specify those public key names when the VMs are created, and then connect to the VMs using the matching private keys. The bootstrap just needs to know the name you gave the public key in OpenStack and where to find the matching private key file.
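One way to confirm that a local key file matches the key pair registered in OpenStack under a given name is to compare fingerprints. To our understanding, OpenStack reports a colon-separated MD5 fingerprint for SSH key pairs (`openstack keypair show <name>`), and `ssh-keygen -l -E md5 -f <file>` prints the same kind of fingerprint locally. The sketch below computes it in Python from an OpenSSH public key line; the function name is hypothetical. For a private key, `ssh-keygen -y -f <private-key-file>` prints the matching public key line, which can be passed to the same function.

```python
import base64
import hashlib


def md5_fingerprint(public_key_line: str) -> str:
    """Colon-separated MD5 fingerprint of an OpenSSH public key line.

    The line looks like "ssh-rsa AAAAB3... comment"; the fingerprint is taken
    over the base64-decoded key blob (the second whitespace-separated field).
    """
    parts = public_key_line.split()
    if len(parts) < 2:
        raise ValueError("expected '<type> <base64-blob> [comment]'")
    blob = base64.b64decode(parts[1])
    digest = hashlib.md5(blob).hexdigest()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))
```

If the fingerprint of the public key derived from the private key differs from the one OpenStack shows for the key name used at boot, ssh will reject the login with exactly the `Permission denied (publickey,...)` error seen in the bootstrap log.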

Sangeeth G

Oops. My bad. Maybe I wasn't asking the question right.

I will explain in detail.

What I would like to know is this: if I create a new key in OpenStack specifically for the DCAE VMs, will I be able to use it in the DCAE config file? When I looked at the DCAE bootstrap VM, the key files are pub.txt (public key) and priv.txt (private key), and it looks like these are used for everything. So if I add the new public and private keys I created and use them to bootstrap the DCAE VMs, won't that affect communication between the DCAE VMs and the non-DCAE VMs in ONAP?

Thanks again for providing support.

Andrew Gauld

I don't know of any cases where using separate ssh keys would be a problem.

Rebecca Lantz

Are the SNMP Collector and Mapper available for use in the Beijing release?

Lusheng Ji

Rebecca, both the SNMP Trap collector and the Mapper are stretch goals for DCAE Beijing. As things currently stand, we are very hopeful that both will be done. Thanks. Lusheng

SOUMYA PATTANAYAK

Hi,

Is there any document or set of steps available for building and deploying dcaegen2 (all platform and service components) on my local VM?