Project Name:

- Proposed name for the project: Multi VIM/Cloud for Infrastructure Providers

- Proposed name for the repository: multicloud

Project description:

Motivation

- ONAP needs underlying virtualized infrastructure to deploy, run, and manage network services and VNFs.

- Service providers look for flexibility and choice in selecting virtual and cloud infrastructure implementations, for example, on-premises private cloud, public cloud, or hybrid cloud implementations, and related network backends.

- ONAP needs to maintain platform backward compatibility with every new release.

Goal

- Multi-VIM/Cloud project aims to enable ONAP to deploy and run on multiple infrastructure environments, for example, OpenStack and its different distributions (e.g., vanilla OpenStack, Wind River), public and private clouds (e.g., VMware, Azure), and microservice containers.

- Multi-VIM/Cloud project will provide a Cloud Mediation Layer supporting multiple infrastructures and network backends so as to effectively prevent vendor lock-in.

- Multi-VIM/Cloud project decouples the evolution of the ONAP platform from the evolution of the underlying cloud infrastructure, and minimizes the impact on deployed ONAP instances when the underlying cloud infrastructure is upgraded independently.

Scope:

The scope of Multi-VIM/Cloud project is a pluggable and extensible framework that:

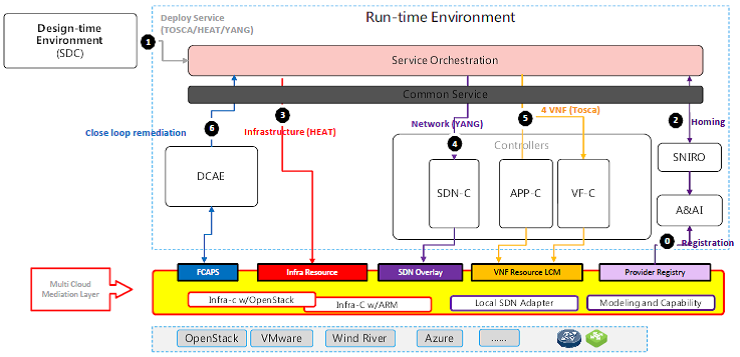

- provides a Multi-VIM/Cloud Mediation Layer which includes the following functional modules:

- Provider Registry to register infrastructure sites/locations/regions, along with their attributes and capabilities, in A&AI

- Infra Resource to manage resource requests (compute, storage, and memory) from SO, DCAE, or other ONAP components, so that VMs are created and VNFs are instantiated on the right infrastructure

- SDN Overlay to configure the overlay network via local SDN controllers for the corresponding cloud infrastructure

- VNF Resource LCM to perform VM lifecycle management as requested by the VNFM (APP-C or VF-C)

- FCAPS to report infrastructure resource metrics (utilization, availability, health, performance) to DCAE Collectors for Closed Loop Remediation

- provides a common northbound interface (NBI) / Multi-Cloud APIs of the functional modules to be consumed by SO, SDN-C, APP-C, VF-C, DCAE etc.

- provides a common abstraction model

- provides the ability to

- handle differences in models

- generate or extend NBI based on the functional model of underlying infrastructure

- implement adapters for different providers.
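The framework described above (a Provider Registry, a common northbound interface, and per-provider adapters) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the project's design: the class and method names (`ProviderAdapter`, `MediationLayer`, `register_region`, `create_vm`) and the `VmRequest` fields are hypothetical, and `FakeOpenStackAdapter` stands in for an adapter that would call Nova or Heat.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class VmRequest:
    """Provider-neutral compute request (hypothetical common abstraction model)."""
    name: str
    vcpus: int
    memory_mb: int
    image: str


class ProviderAdapter(ABC):
    """Hypothetical plugin interface that each cloud provider adapter implements."""

    @abstractmethod
    def create_vm(self, region: str, request: VmRequest) -> dict:
        """Create a VM in the given region and return a provider-neutral result."""


class FakeOpenStackAdapter(ProviderAdapter):
    # Stand-in only: a real adapter would translate the request into Nova/Heat calls.
    def create_vm(self, region, request):
        return {"id": f"os-{region}-{request.name}", "status": "BUILD", "provider": "openstack"}


class MediationLayer:
    """Single northbound entry point that dispatches to registered adapters."""

    def __init__(self):
        self._adapters = {}  # cloud type -> adapter instance
        self._regions = {}   # region id -> cloud type (the Provider Registry role)

    def register_adapter(self, cloud_type, adapter):
        self._adapters[cloud_type] = adapter

    def register_region(self, region_id, cloud_type):
        # Refuse regions whose cloud type has no adapter plugged in.
        if cloud_type not in self._adapters:
            raise ValueError(f"no adapter registered for {cloud_type!r}")
        self._regions[region_id] = cloud_type

    def create_vm(self, region_id, request):
        # Look up which provider backs the region, then delegate to its adapter.
        cloud_type = self._regions[region_id]
        return self._adapters[cloud_type].create_vm(region_id, request)


layer = MediationLayer()
layer.register_adapter("openstack", FakeOpenStackAdapter())
layer.register_region("RegionOne", "openstack")
result = layer.create_vm("RegionOne", VmRequest("vnf1", 2, 4096, "ubuntu"))
print(result)
```

In this sketch, consumers only call `MediationLayer.create_vm`; supporting another provider means registering another adapter, without changing any caller.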

Across the project, any differentiated functionality will be implemented in a way that lets ONAP users decide whether or not to use it.

Multi-VIM/Cloud project will align with the Common Controller Framework to enable reuse by different ONAP elements.

Deliverables of Release One:

In R1, we target the following:

- Maintain OpenStack APIs as the primary interface (Nova, Neutron, etc.) to mitigate the risk and impact to other projects

- As of this date, we expect to support Vanilla OpenStack based on Ocata, and commercial OpenStack based on Mitaka (see below)

- Other OpenStack distributions should in theory work, but validating them requires the respective vendors (Red Hat, Mirantis, Canonical, etc.) to commit resources within the scope of R1

- Provide support for four cloud providers, aligned with the R1 use cases

- Vanilla OpenStack, VMware Integrated OpenStack, Wind River Titanium Server, and Microsoft Azure (Azure to provide HEAT to ARM translator)

- Minimal goal: any single cloud provider from above across multi-sites (TIC edge and TIC core)

- including implementation of the adapters for above clouds

- Stretch goal: mix-match of different cloud providers across multi-sites

Architecture Alignment:

- How does this project fit into the rest of the ONAP Architecture?

The proposed Multi-VIM/Cloud Mediation Layer consists of five functional modules, which interact with SO, SDN-C, APP-C, VF-C, DCAE, and A&AI respectively. It will act as the single access point through which these components access the cloud and virtual infrastructure. Furthermore, it will interact with the SDN-C component to configure the overlay network via local SDN controllers for both intra-DC and inter-DC connectivity of the corresponding cloud infrastructure. Thus it is also the single access point for SDN-C to work with other local SDN controllers. Applications/VNFs can be homed to the different cloud providers through the standard ONAP methods. For automated homing (SNIRO), different cloud providers can register attributes that differentiate their cloud platforms (e.g., reliability, latency, other capabilities) in A&AI, and application placement policies/constraints can request these specific properties (e.g., reliability > 0.999).
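The automated homing step can be illustrated with a small filter over registered region attributes. The sketch below assumes, hypothetically, that each cloud region's attributes are available as a flat dictionary (as a provider might register them in A&AI) and that a placement policy is a list of `(attribute, operator, value)` constraints; actual SNIRO policies and A&AI schemas are richer and differ in shape.

```python
import operator

# Hypothetical region attributes, as cloud providers might register them in A&AI.
REGIONS = [
    {"region": "edge-1", "provider": "vmware", "reliability": 0.9995, "latency_ms": 5},
    {"region": "core-1", "provider": "openstack", "reliability": 0.9990, "latency_ms": 20},
    {"region": "azure-east", "provider": "azure", "reliability": 0.9999, "latency_ms": 40},
]

# Comparison operators a placement policy may use.
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt,
       "<=": operator.le, "==": operator.eq}


def candidate_regions(regions, constraints):
    """Return ids of regions whose attributes satisfy every (attribute, op, value) constraint.

    A region that does not advertise a constrained attribute is excluded.
    """
    return [
        r["region"]
        for r in regions
        if all(attr in r and OPS[op](r[attr], value) for attr, op, value in constraints)
    ]


# Placement policy: reliability > 0.999 and latency below 30 ms.
matches = candidate_regions(REGIONS, [("reliability", ">", 0.999), ("latency_ms", "<", 30)])
print(matches)
```

Here `core-1` is excluded because its reliability equals, rather than exceeds, 0.999, and `azure-east` fails the latency constraint.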

Is there any overlap with other ONAP components or other Open Source projects?

To the best of our knowledge, there is no overlap with other ONAP components or other open source projects.

What other ONAP projects does this project depend on?

Consumers of Multi-VIM/Cloud – SO, SDN-C, APP-C, VF-C, DCAE

Producers for Multi-VIM/Cloud – DCAE, A&AI

Dependencies – Modeling

Alignment of Reusable APIs – Common Controller Framework

Indirect Impact and Collaboration – SNIRO, SDC

- How does this align with external standards/specifications?

- Support existing functions

- Information/data models by ONAP modeling project

- Compliant with ETSI NFV architecture framework

- VIM, NFVI, Vi-Vnfm, and Or-Vi

- Are there dependencies with other open source projects?

Cassandra, OpenStack Java SDK, AWS Java SDK, Azure, and bare metal.

Resources:

- PTL

Bin Yang, biny993, bin.yang@windriver.com, Wind River

- Names, Gerrit IDs, emails, and company affiliations of the committers

- Anbing Zhang, zhanganbing@chinamobile.com, China Mobile

- Bin Hu, bh526r, bh526r@att.com, AT&T

- Bin Yang, biny993, bin.yang@windriver.com, Wind River

- Ethan Lynn, ethanlynnl@vmware.com, VMware

- Xinhui Li, xinhuili, lxinhui@vmware.com, VMware

- Names and affiliations of any other contributors

- Alex Vul, alex.vul@intel.com, Intel

- Alon Strikovsky, alon.Strikovsky@amdocs.com, Amdocs

- Anil Vishnoi, vishnoianil@gmail.com,

- Andrew Philip, aphilip@microsoft.com, Microsoft

- Arash Hekmat, arash.hekmat@amdocs.com, Amdocs

- Bin Sun, bins@vmware.com, VMware

- Claude Noshpitz, cn5542@att.com, AT&T

- Dominic Lunanuova, dgl@research.att.com, AT&T

- Gautam S, GAUTAMS@amdocs.com, Amdocs

- Gil Hellmann, gil.hellmann@windriver.com, Wind River

- Haibin Huang, haibin.haung@intel.com, Intel

- Hong Hui Xiao, honghui_xiao@yeah.net,

- Huang Zhuoyao, haunt.zhuoyao@zte.com.cn, ZTE

- Isaku Yamahata, isaku.yamahata@intel.com, Intel

- Jinhua Fu, fu.jinhua@zte.com.cn, ZTE

- John Murray, jm2932@att.com, AT&T

- Kanagaraj Manickam, mkr1481, kanagaraj.manickam@huawei.com, Huawei

- Liang Ke, lokyse@163.com,

- Madhu Nunna, mnunna@mirantis.com, Mirantis

- Manoj K Nair, manoj.k.nair@netcracker.com, NetCracker Technology

- Maopeng Zhang, zhang.maopeng1@zte.com.cn, ZTE

- Marcin Bednarz, mbednarz@mirantis.com, Mirantis

- Matti Hiltunen, hiltunen@att.com, AT&T

- Michael O'Brien, frank.obrien@amdocs.com, Amdocs

- Piyush Garg, piyush.garg1@amdocs.com, Amdocs

- Ram Koya, rk541m@att.com, AT&T

- Ramesh Tammana, ramesht@vmware.com, VMware

- Ramki Krishnan, ramkik@vmware.com, VMware

- Ramu N, rams.nsm@gmail.com,

- Robert Tao, roberttao@huawei.com, Huawei

- Sandeep Shah, ss00473517@techmahindra.com, Tech Mahindra

- Satish Addagadda, sa482b@att.com, AT&T

- Shimon Seretensky, shimon.seretensky@amdocs.com, Amdocs

- Srinivasa Addepalli, srinivasa.r.addepalli@intel.com, Intel

- Sumit Verdi, sverdi@vmware.com, VMware

- Tapan Majhi, Tapan.Majhi@amdocs.com, Amdocs

- Tom Tofigh, ttofigh@gmail.com,

- Tyler Smith, ts4124@att.com, AT&T

- Varun Gudisena, vg411h@att.com, AT&T

- Victor Gao, victor.gao@huawei.com, Huawei

- Virginie Dotta, vdotta@fr.ibm.com, IBM

- Yang Xu, yang.xu3@huawei.com, Huawei

- Project Roles (include RACI chart, if applicable)

Other Information:

- link to seed code (if applicable)

OPEN-O

- seed code for Multi VIM/Cloud framework: https://gerrit.open-o.org/r/multivimdriver-broker

- seed code for OpenStack: https://gerrit.open-o.org/r/multivimdriver-openstack

- seed code for VMware: https://gerrit.open-o.org/r/multivimdriver-vmware-vio

ECOMP

- seed code for Multi VIM/Cloud framework: https://github.com/att/AJSC/tree/master/cdp-pal/cdp-pal-common

- seed code for OpenStack: https://github.com/att/AJSC/tree/master/cdp-pal/cdp-pal-openstack

- Vendor Neutral

- if the proposal is coming from an existing proprietary codebase, have you ensured that all proprietary trademarks, logos, product names, etc., have been removed?

- Meets Board policy (including IPR)

Key Project Facts

Project Name:

- JIRA project name: multicloud

- JIRA project prefix: multicloud

Repo name:

- org.onap.multicloud/framework

- org.onap.multicloud/openstack

- org.onap.multicloud/vmware

- org.onap.multicloud/azure

IRC: http://webchat.freenode.net/?channels=onap-multicloud

Lifecycle State: incubation

PTL: Bin Yang, Wind River

mailing list tag: multicloud

Committers:

Contributors:

- alex.vul@intel.com

- alon.Strikovsky@amdocs.com

- Anton.Snitser@amdocs.com

- arash.hekmat@amdocs.com

- bins@vmware.com

- claude.noshpitz@att.com

- EyalH@Amdocs.com

- frank.obrien@amdocs.com

- fu.jinhua@zte.com.cn

- gautams@amdocs.com

- gil.hellmann@windriver.com

- haibin.haung@intel.com

- hiltunen@att.com

- haunt.zhuoyao@zte.com.cn

- honghui_xiao@yeah.net

- isaku.yamahata@intel.com

- jm2932@att.com

- kanagaraj.manickam@huawei.com

- lokyse@163.com

- ramesht@vmware.com

- ramkik@vmware.com

- rk541m@att.com

- sa482b@att.com

- Shimon.Seretensky@amdocs.com

- srinivasa.r.addepalli@intel.com

- sverdi@vmware.com

- tapan.majhi@amdocs.com

- ttofigh@gmail.com

- ts4124@att.com

- vg411h@att.com

- rams.nsm@gmail.com

- roberttao@huawei.com

- vdotta@fr.ibm.com

- victor.gao@huawei.com

- vishnoianil@gmail.com

- yang.xu3@huawei.com

- zhang.maopeng1@zte.com.cn

Link to TSC approval:

Link to approval of additional submitters:

63 Comments

Oliver Spatscheck

I am somewhat concerned with the focus on Wind River OpenStack. Why can't we just support OpenStack? Currently the seed code works on Rackspace (OpenStack). Internally, most partner companies use a variety of OpenStack setups, so I would suggest that we support generic OpenStack in release 1. Otherwise we really limit the value of this project.

Roger Maitland

Given that MSO directly uses the OpenStack APIs, what is your plan to introduce the multi-VIM layer as a separate component without impacting the current ONAP functionality? Are you planning to refactor the Camunda Java code within MSO to use your new multi-VIM APIs?

danny lin

Hi, Oliver,

The goal of the project here is to support multiple cloud infrastructure providers and maximize the value for service providers. The scope is to build a common VIM framework that allows plugging in any choice of implementation (VMware, Wind River, or Azure) and makes sure they run seamlessly within ONAP. The project actually assumes generic OpenStack support as the baseline (e.g., the Mitaka or Ocata release), and then enables different providers to offer unique and differentiated functionalities. Across the project, any differentiated functionality will be implemented in a way that lets the ONAP user decide whether or not to use it.

But your point is well taken: we should state explicitly in the project scope that baseline OpenStack is also supported.

Brian Freeman

Can we leverage another open source project for the actual interfaces to the clouds, so that maintaining those APIs as they change over time is left to a cloud/mediation layer group? It seems worthwhile to be able to plug in an open source mediation layer whose purpose is to support all clouds (those mentioned plus AWS and Oracle).

danny lin

Hi Roger,

See reply above. The intent is to use a baseline OpenStack version (e.g., Mitaka or Ocata), but also allow for additional plugins/extensions. The specific implementation details will be worked out by the project committers but it will be done in a way that doesn’t force the user to use those plug-ins / extensions if they don’t want.

Roger Maitland

How about jclouds (https://jclouds.apache.org/), a Java based multi-cloud toolkit that supports a set of interesting VIMs?

danny lin

Brian, that's an interesting point; in our project discussions, we also viewed this as somewhat of a cloud mediation layer. The vision for the project is really to enable multiple cloud providers, and the VIM is just a means to that end. The NFVI under a VIM is where each cloud provider adds value. Maybe it makes sense for this project to also take care of the cloud/mediation layer? I'd be interested to hear your thoughts.

Gil Hellmann

Brian & Roger, thanks for your interest in our project proposal and for taking the time to provide feedback and suggestions; the team truly appreciates it. You are of course also welcome to join our team. To add to Danny's comment, from looking at the project you suggest as a potential starting point, I can see that it is a "multi-cloud toolkit for the Java platform that gives you the freedom to create applications that are portable across clouds while giving you full control to use cloud-specific features". While this looks like a very good project, it is not what our project is about. For example, jclouds is meant to replace the direct use of REST APIs, which would be very limiting in our context. Also, it is a layer that allows Java applications to run on top of different clouds, which again would limit us significantly if everything that interfaces with it needs to be in Java. I may be wrong in my understanding of the jclouds project; I am not claiming to be an expert in it, and these comments are based only on the project documentation and description. Your feedback and comments are welcome and highly appreciated.

Brian Freeman

Our initial roadmap was to go in and change SO, APPC, and DCAE to use a mediation layer rather than going directly to the clouds, so that the same APIs in ONAP could be mediated out to the cloud providers. The mediation layer dealt with differences in models, etc. We have some internal code (adapters in cdp-pal that were not yet open-sourced) that we could use for this, but we thought it would make sense to look at other open source code bases that could map the same common ONAP model to multiple cloud providers (OpenStack and its versions, VMware, Azure, IBM, AWS, Oracle, Rackspace, etc.). jclouds looks interesting, and they list Cloudify as a user of jclouds, so I'm not sure how jclouds fits into the ecosystem, but this is the type of discussion we need to have.

Gil Hellmann

Brian, not sure if you are aware that in OPEN-O we had a multi-VIM driver (smaller in scope than what we want to achieve here, but a good start), which exists and supports OpenStack and VMware. We can look at using it as seed code, or decide whether it is better to start fresh or with existing ECOMP code as seed code. I also agree that these types of discussions are good and beneficial. Investing some time in planning at the start and agreeing on the approach and architecture will go a long way later.

DeWayne Filppi

Just FYI, Cloudify doesn't use jclouds. It did long ago, but now it's Python and approaches multi-VIM via a plugin philosophy rather than a least-common-denominator philosophy.

danny lin

Brian and Gil, these are excellent suggestions. It looks like the initial ECOMP roadmap of a common cloud mediation layer aligns very well with how we envision this multi-VIM/multi-cloud project: we see a common interface layer at the northbound side of this architectural module, and within the module, the ability to register, discover, and execute any participating cloud provider, including the ability to advertise or match the capabilities of the cloud provider to the needs of applications and services, under a common model. It feels more and more like the cloud mediation layer can be included in the scope of this project. I definitely agree we should discuss this. I will set up some time for us after we get through this week's crazy project proposal submission and the OS Summit.

Brian Freeman

Gil, I have looked at the VIM code but I'm not an expert at mediation. if you look at the cdp-pal source under the cdp-pal-common section you can get a sense of where I am coming from in terms of a standard model (for some reason cdp-pal calls it "rime") that would then be translated to specific cloud APIs.

(https://github.com/att/AJSC/tree/master/cdp-pal) - APPC uses this library to talk to Openstack but not the other components in ONAP.

Since we only open-sourced cdp-pal-openstack and not the Azure, AWS, or VMware plugins, it's a leap of faith to see how we mapped from a common model to the cloud-specific models.

I'm not saying that we should use cdp-pal but I do think we need to make sure we have an approach with a common abstraction model for the cloud.

Does Multi-VIM have a common abstraction model? Does it fit with other multi-cloud APIs? I keep hearing that Cloudify has one as well, and we have some exposure to Cloudify in some other work we are doing.

to be a universal, abstract mechanism to access any cloud infrastructure, the actual data objects of the underlying cloud implementation must be translated to a common, well-defined model that is defined by rime. This means that any client that accesses a cloud infrastructure implementation using rime does so using rime's definitions of data types. This ensures that the behavior is always the same as seen by the client regardless of the underlying implementation.
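The translation into a common model described above can be sketched as one translator function per provider, all producing the same data type. In this sketch, `Server` and its state vocabulary are hypothetical; `from_nova` reads fields from the OpenStack Nova server representation (`id`, `name`, `status`, `addresses`), while `from_provider_b` handles an invented record format standing in for any other cloud.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    """Common, provider-neutral server record (hypothetical model)."""
    id: str
    name: str
    state: str           # one of RUNNING, PENDING, STOPPED, ERROR, UNKNOWN
    ip_addresses: tuple


# Mapping from Nova server status values to the common state vocabulary.
NOVA_STATES = {"ACTIVE": "RUNNING", "BUILD": "PENDING", "SHUTOFF": "STOPPED", "ERROR": "ERROR"}


def from_nova(server):
    """Translate an OpenStack Nova server representation into the common model."""
    # Nova groups addresses by network name: {"net": [{"addr": ..., "version": 4}, ...]}.
    ips = tuple(a["addr"] for addrs in server.get("addresses", {}).values() for a in addrs)
    return Server(server["id"], server["name"], NOVA_STATES.get(server["status"], "UNKNOWN"), ips)


def from_provider_b(vm):
    """Translate a hypothetical second provider's VM record into the common model."""
    states = {"running": "RUNNING", "starting": "PENDING", "stopped": "STOPPED"}
    return Server(vm["vmId"], vm["displayName"], states.get(vm["powerState"], "UNKNOWN"),
                  tuple(vm.get("privateIps", [])))


nova = {"id": "a1", "name": "vnf-a", "status": "ACTIVE",
        "addresses": {"private": [{"addr": "10.0.0.5", "version": 4}]}}
other = {"vmId": "b2", "displayName": "vnf-b", "powerState": "starting", "privateIps": ["10.1.0.7"]}

servers = [from_nova(nova), from_provider_b(other)]
for s in servers:
    print(s.name, s.state, s.ip_addresses)
```

A client consuming `Server` objects sees the same fields and states regardless of which provider produced them, which is the property the quoted rime description aims for.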

Ramprasad Koya

CDP-PAL-COMMON currently supports Baremetal, Openstack, Vmware, Azure and AWS. Please take a look at the seed code https://github.com/att/AJSC/tree/master/cdp-pal that really aligns with what we are looking here right out of the gate.

Gil Hellmann

Ram, looking at the repository that you provided, I can only see OpenStack support under cdp-pal-openstack. Am I missing something? In any case, we need to look deeper into this, as it does look promising and may be a good starting point. Thanks for sharing.

Victor Morales

Do we have an IRC channel? These comments are great, but interactive discussions may be quicker.

Gil Hellmann

Victor, stay tuned, coming soon. Hopefully very soon.

xinhuili

Victor, you are welcome to /join #onap-multicloud for more discussion there.

Victor Morales

+1 I'll be there(irc: electrocucaracha), thanks

Brian Freeman

I do not think the role of MultiVIM/Cloud / Mediation is to mediate between SDNC Controllers. I think the term "local" means internal to a cloud provider, so in this case the MultiVIM would be the common API into Neutron and other networking functions inside the cloud. We cannot dictate how the local controllers within a cloud provider interact. For instance, Gluon is a mechanism that a cloud provider could use to arbitrate between local controllers.

I think you might want to remove this bullet since it is outside of ONAP's domain.

jamil chawki

I think we need to limit the scope to multi cloud and to remove

allows global SDN Controller to choose and work with multiple local SDN Controller backends

Bin Hu

Brian, thank you for comment. I removed the bullet which caused the confusion.

Bin Hu

Jamil, thank you for comment. Multi Cloud includes the multiple local SDN controllers within cloud providers.

I think the confusion came from the bullet "enables multiple local SDN Controller backends to interoperate collaboratively and simultaneously", which might be misunderstood to mean that the Mediation Layer will "federate" between local SDN controllers. This is not the intention. I removed this confusing point. Thanks. Bin

zhang ab

In R1, why is the demo use case supported by only a single cloud provider? What are the difficulties in supporting multiple simultaneous cloud providers?

Gil Hellmann

Zhang, thanks for the question. As the R1 release schedule is very aggressive and there are a lot of unknowns, the team felt that while our ultimate goal is to support simultaneous cloud providers (private on-premises and public), it may be challenging for R1 due to the tight schedule. So for R1 we suggested that supporting a single cloud is a must, and a stretch goal for R1 is multiple clouds. The plan is definitely to get to the point where we support multiple clouds simultaneously.

zhang ab

In R1, which baseline OpenStack version will be supported: Mitaka, Ocata, or another version?

Gil Hellmann

This has not been decided yet, and it will probably be more than one. We are looking to align with OpenStack version(s) that are also widely adopted by the commercial vendor community, so we have a path forward to support the two use cases for R1. Many vendors tend to pick certain releases of OpenStack rather than all of them; for example, Mitaka is widely adopted among commercial vendors, while Liberty and Ocata are less so. As a past example, the multi-VIM driver project in OPEN-O supported the latest OpenStack versions at the time, Kilo and Newton, so ONAP will probably follow the same lines: maybe Mitaka & Ocata, Newton & Ocata, or even Newton & Pike.

danny lin

To add to Gil's comment, the current thinking is Mitaka or Ocata. Any input/suggestion/feedback welcomed.

virginie dotta

Danny,

Also, do you target support for OpenStack releases or for distributions from vendors (Red Hat, Mirantis, ...)?

I mean, most customers prefer to install their OpenStack environment using a distro rather than installing it component by component.

But a distro installation means more features, like an installer, security, high availability...

Do we have inputs on the rackspace Openstack environment ?

From our side, we have installed a "vanilla" openstack environment, component by component based on Ocata release, so we can share our experience.

Gil Hellmann

Virginie, thanks for your comment and for your interest in our project proposal.

All the "standard" / "vanilla" upstream OpenStack APIs will be supported, which means any OpenStack distribution from the same major version release, for example Mitaka or Ocata, should work. For the project itself, we will validate "standard" / "vanilla" upstream OpenStack from openstack.org as well as commercial OpenStack distributions. As for which commercial OpenStack distributions will be validated/supported, it will be up to each commercial vendor to join the project as a contributor and help with the validation effort for their commercial OpenStack distribution.

As for additional OpenStack extensions that may be available with some commercial distributions, the idea of the multi-VIM/Cloud project is to create a flexible mediation layer that allows the infrastructure layer (VIM/Cloud) to expose those extensions/capabilities to the NFVO layer, and at the same time allows the upper layer to decide whether or not to use them. So the upper layer is not forced to use extensions such as HA, security, etc. The multi-VIM/Cloud project will provide a standardized API/method for using that functionality, regardless of the VIM/Cloud type that runs on the infrastructure.

Of course, we would love to hear about, and learn from, your experience using "vanilla" OpenStack.

Claude Noshpitz

It may be useful to talk more about scoping; in particular, to what extent are we expecting MultiVIM to handle areas where there are significant semantic differences among cloud-service providers?

One example would be around networking models, where CSPs may offer complex, interwoven facilities – private subnets, intra/inter-site routing, cross-tenant peering, policy-driven load balancing, VPN or other tunnel gateways, and so on – in contrast to the relatively straightforward Neutron model.

Commercial capabilities could be seen as quite different from those of an on-premises cloud, where there could be integration with non-standard WAN orchestration or proprietary storage pools.

It seems like the lowest-common-denominator approach, which treats anything "special" as an extension, is the right entry path. But at some point extensions can become common dependencies, affecting interoperability and accessibility to the broader community.

This becomes even more interesting as we start to look at services that enrich basic cloud resources – enhanced persistence and analytics, "serverless" deployment, special-purpose hardware, CDN distribution, specialized application monitoring.

As a starting point, it may be helpful to define a means of interrogating cloud service platforms so as to identify their capabilities in terms of a standardized taxonomy. Even if it's not all that rich to begin with, at least we could differentiate over time without breaking client code.

None of this is meant to bias the moving-forward discussion in one direction or another, just to point out that there's a broad range of potential "mediated" resources and services, and that we could probably sharpen the definitions around the MultiVIM project to ensure that we leave ourselves space to evolve the solution.
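One way to read the suggestion of a standardized capability taxonomy is sketched below: providers advertise capabilities as keys, only keys in an agreed taxonomy are exposed to clients, vendor extensions live under a separate `x-` prefix, and anything unknown is ignored rather than rejected, so the taxonomy can grow without breaking existing client code. The taxonomy entries, the `x-` convention, the `acme` vendor, and the `CapabilityProfile` class are all hypothetical.

```python
# Hypothetical, agreed-upon capability keys (the "standardized taxonomy").
STANDARD_TAXONOMY = frozenset({
    "compute.sriov", "compute.gpu", "network.vpn_gateway",
    "network.load_balancer", "storage.block",
})


class CapabilityProfile:
    """Client-side view of what a cloud platform advertises (hypothetical)."""

    def __init__(self, advertised):
        # Keep standard keys and "x-" vendor extensions; silently drop anything else,
        # so a platform advertising a not-yet-standard key does not break clients.
        self._standard = {k: bool(v) for k, v in advertised.items() if k in STANDARD_TAXONOMY}
        self._extensions = {k: v for k, v in advertised.items() if k.startswith("x-")}

    def supports(self, capability):
        # Capabilities that are not advertised are treated as unsupported.
        return self._standard.get(capability, False)

    def extensions(self):
        return sorted(self._extensions)


profile = CapabilityProfile({
    "compute.sriov": True,
    "network.vpn_gateway": False,
    "x-acme.serverless": True,        # hypothetical vendor extension
    "network.quantum_tunnel": True,   # not in the taxonomy: ignored
})
print(profile.supports("compute.sriov"), profile.supports("network.load_balancer"))
print(profile.extensions())
```

The design choice here is additive evolution: clients ask only about keys they know, so new standard keys or vendor extensions can appear later without changing existing callers.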

danny lin

Claude, I agree with your point. There needs to be some level of normalization into a standard taxonomy, which is also aligned with the overall information modeling defined for ONAP. The functional module "Provider Registry" is intended to cover this aspect. Details need to be fleshed out. In release 1, the objective is likely to be that we try our best not to break the existing client code base, while adding the ability to define, register, and discover certain cloud platform capabilities, driven by the needs of the use cases we decide to support.

Oleg Kaplan

Gil, you keep deleting Amdocs committers. Amdocs decided to add two committers to this project.

Tapan.Majhi@amdocs.com, Amdocs

You are not on the list of approvers for additional committers; otherwise I would have contacted you first to discuss.

Please clarify on what basis/process you deleted Amdocs names from the list of committers.

Gil Hellmann

Oleg, I have sent you an e-mail with the explanation about the process to become a committer in the project. The process is defined by the LF which the committer team has decided to follow it.

Before you added the two people, Liron had already added them, and Danny Lin, who is the current project lead, had already replied with a detailed e-mail about the process for becoming a committer. I have attached his e-mail to my reply to you.

The approvers for adding additional committers are the committers themselves. I am one of the contributors and the one who maintains the project wiki page during the incubation phase. Please note that I have not removed your nominees from the resource list; I just moved them to the contributors section until the committers approve them. This follows the process the team agreed on, which was discussed during the team's weekly meeting, which you are welcome to join ([multicloud] ONAP7, Wed UTC 13:00 / China 21:00 / Eastern 09:00 / Pacific 06:00). This is one way to present your request to add additional committers and get them approved.

DeWayne Filppi

To get very specific from the SO perspective:

1. I shop for a cloud that meets my needs in A&AI (which was placed there by multi-vim)

2. I take some identifier I get from A&AI to multi-vim and request an instance with particular attributes (e.g. flavor, key name,...)

3. Multi-vim sends me a routable IP address.

I assume I'd request network, storage, routing, etc... similarly. Close at all to the vision?
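The three-step flow above can be simulated end to end. Everything here is a stand-in: the A&AI records, their field names, `FakeMultiVim`, and its `create_instance` call are hypothetical, and the returned address is treated as the instance's IP, which is only one possible reading of "routable IP address". Addresses come from the documentation range 192.0.2.0/28.

```python
import ipaddress

# Step 1 stand-in: cloud regions registered in A&AI by multi-VIM (hypothetical records).
AAI_CLOUD_REGIONS = [
    {"cloud_owner": "CloudOwnerA", "region_id": "RegionOne", "flavors": {"m1.small", "m1.large"}},
    {"cloud_owner": "CloudOwnerB", "region_id": "RegionTwo", "flavors": {"m1.small"}},
]


def shop_for_cloud(flavor):
    """SO picks the first region offering the flavor and returns its identifier."""
    for region in AAI_CLOUD_REGIONS:
        if flavor in region["flavors"]:
            return region["cloud_owner"], region["region_id"]
    raise LookupError(f"no region offers {flavor}")


class FakeMultiVim:
    """Stand-in for the multi-VIM northbound API (steps 2 and 3)."""

    def __init__(self, cidr):
        # Iterator over the usable host addresses of the subnet.
        self._hosts = ipaddress.ip_network(cidr).hosts()

    def create_instance(self, cloud_id, name, flavor, key_name):
        # A real implementation would dispatch to the provider adapter for cloud_id.
        return {"cloud": cloud_id, "name": name, "flavor": flavor,
                "key_name": key_name, "ip": str(next(self._hosts))}


cloud_id = shop_for_cloud("m1.large")                                    # step 1
multivim = FakeMultiVim("192.0.2.0/28")
instance = multivim.create_instance(cloud_id, "vfw-1", "m1.large", "so-key")  # step 2
print(instance["cloud"], instance["ip"])                                 # step 3
```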

danny lin

By routable IP address, do you mean the IP address to the API end point of multi-VIM/Cloud? If so, I would think such IP would be stored inside A&AI. But I could be wrong on this.

On your second part of the question, we are aligned to that vision. Over time, multi-VIM/Cloud is responsible for instantiating compute, networking, and storage resource within a given cloud provider environment. We need to talk to SO team to iron out the specific call flow.

Thanks

Eric Debeau

We need to clarify the API definition. The Multi-VIM will be used by SO, APPC, VFC for Infra Resource and VNF LCM.

SO, APPC, and VFC will not use the OpenStack API directly to request resource creation (using the Heat or Nova API). SO, APPC & VFC will call the Multi-VIM API (to be defined).

SO, APPC, and VFC require some specific parameters (coming from AAI) to route requests to the right VIM.

It is key to define the multi-VIM northbound API for the various components that will consume it.

Sandeep Shah

Hi Danny/DeWayne - On your last comments regarding speaking with SO team to establish VIM call flows, please advise if this meeting has occurred and if there is any document on call flows that I can refer to? Thank you very much!

danny lin

Hi Sandeep,

Yes, there was a meeting with Seshu, PTL of the SO project, last week. In a nutshell, in R1 MultiCloud will ensure backward compatibility with existing ONAP components; specifically, in the context of SO integration, this means providing support for the HEAT API. In parallel, the SO team is discussing with the APP-C and VF-C teams whether it makes sense to delegate some of the virtual resource instantiation operations to APP-C/VF-C.

Danny
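For illustration, a HEAT-based request that SO might pass through the multi-cloud layer could look like the body below. This is a sketch, assuming the standard Heat orchestration API (`POST /v1/{tenant_id}/stacks` with `stack_name`, `template`, `parameters`, and `timeout_mins`) and a minimal HOT template with a single `OS::Nova::Server`; the stack, image, flavor, and network names are placeholders, and no request is actually sent.

```python
import json


def heat_stack_create_body(stack_name, image, flavor, network):
    """Build a body for Heat's POST /v1/{tenant_id}/stacks with an inline HOT template."""
    template = {
        "heat_template_version": "2016-10-14",  # Newton-era HOT version
        "parameters": {"image": {"type": "string"}, "flavor": {"type": "string"}},
        "resources": {
            "vnf_server": {
                "type": "OS::Nova::Server",
                "properties": {
                    "image": {"get_param": "image"},
                    "flavor": {"get_param": "flavor"},
                    "networks": [{"network": network}],
                },
            }
        },
    }
    return {"stack_name": stack_name, "template": template,
            "parameters": {"image": image, "flavor": flavor}, "timeout_mins": 30}


body = heat_stack_create_body("vfw-stack", "ubuntu-16.04", "m1.medium", "oam-net")
print(body["stack_name"], body["template"]["resources"]["vnf_server"]["type"])
# The body is plain JSON-serializable data, ready to be sent by an HTTP client.
payload = json.dumps(body)
```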

Manoj Nair

I would appreciate it if you could clarify the following:

Gil Hellmann

Hi Manoj,

Thanks for your interest in the project. I will try to answer some of your questions.

Srinivasa Addepalli

Good discussion on NFVI feature awareness (hardware features such as SGX, AES-NI, and PCI accelerators, or software features such as DPDK). I am wondering which component of ONAP is responsible for discovering the compute nodes and their capabilities. Normally, VIMs do the discovery, keep the compute node capabilities, and use them during VM placement to ensure that VMs are placed on the right compute nodes. In ONAP too, are VIMs responsible for discovering and keeping these capabilities? What is the role of ONAP in this regard? I guess ONAP needs to indicate what capabilities a VM requires. What else is required? I guess that requires changes to various components. Is that something being worked out? I am interested in participating in those discussions.

Thanks

Srini

Manoj Nair

I guess this capability is typically implemented in a resource orchestrator or VNFM. But in the ONAP context, this may be one characteristic that can be supported in the Optimization Framework (SNIRO), which supports decisions on resource allocation/availability/placement. Currently there are two functions planned in the Optimization Framework: Homing/Allocation and Change Management. You could check whether a compute node capability check is planned in the Optimization Framework. There was also a mail on the ONAP mailing list proposing EPA as a use case to be considered in Release 2, but I have not seen it documented.

danny lin

Srinivasa and Manoj,

As part of the Multi-VIM/Cloud project, the NFVi infrastructure capabilities are discovered by the VIM and then registered within ONAP. The initial thinking is that they will be sent to either A&AI or SNIRO (now the Optimization Framework). So this is aligned with both of your comments.

For release R1 though, EPA is not in the scope, nor is the platform capability discovery. Would love to hear your ideas and/or contributions to help move this forward within this project in R2 and beyond

Danny

Srinivasa Addepalli

Thank you Danny.

You said "As part of Multi-VIM/Cloud project, the NFVi infrastructure capabilities are discovered by the VIM and then registered within ONAP". When I read a little about the Optimization Framework, I understood that it mainly addresses capability registration for multiple cloud providers and for the multiple sites of each cloud provider. OF chooses the site on which the VNF-C is to be placed, but the actual choice of compute node for the VNF-C is up to the VIM. So far, I have assumed that individual compute nodes are not registered. Are you saying that OF or A&AI will have information on all compute nodes of all sites the ONAP deployment manages? Or are you saying that the VIM only provides meta-information about the capabilities of the various sites to OF?
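
To make the two-level split in this question concrete (OF choosing a site from site-level meta-information, the VIM choosing the compute node), here is a minimal illustrative sketch. The `Site` and `ComputeNode` types, the capability names, and both selection functions are hypothetical; they are not ONAP or OpenStack APIs, and the "union of node capabilities" summary is only one possible form of site meta-information.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ComputeNode:
    # In this model, individual nodes are known only to the VIM.
    name: str
    capabilities: set[str]


@dataclass
class Site:
    # Hypothetical site record as seen by the orchestrator.
    name: str
    nodes: list[ComputeNode] = field(default_factory=list)

    def capability_summary(self) -> set[str]:
        """Site-level meta-information: the union of all node capabilities."""
        summary: set[str] = set()
        for node in self.nodes:
            summary |= node.capabilities
        return summary


def choose_site(sites: list[Site], required: set[str]) -> Site | None:
    """Level 1 (orchestrator, e.g. OF): first site whose summary covers the request."""
    for site in sites:
        if required <= site.capability_summary():
            return site
    return None


def choose_node(site: Site, required: set[str]) -> ComputeNode | None:
    """Level 2 (VIM scheduler): first compute node in the site that has everything."""
    for node in site.nodes:
        if required <= node.capabilities:
            return node
    return None
```

One consequence worth noting: a union-style summary can over-promise. A site whose DPDK and SGX capabilities sit on different nodes passes the site-level check, yet no single node can host a VM that needs both, which is one argument for registering richer per-node (or per-flavor) information.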

I guess TOSCA templates would be used by ONAP for service orchestration. If so, more TOSCA node properties probably need to be introduced to give hints about VDUs that take advantage of various hardware features. Where does the work on defining these properties happen? In the OASIS TOSCA forum?

Srini

Manoj Nair

OSM already supports EPA parameters (non-TOSCA) as part of its model; they are documented here. I have also seen documentation in the Open-O project supporting EPA parameters here.

Srinivasa Addepalli

Thanks, Manoj. I did not find TOSCA properties related to EPA in the Open-O document you referred to. Is there any other document?

Srinivasa Addepalli

Not sure why I did not find the EPA requirements section in the first place, but I have found it now. Thanks.

Srinivasa Addepalli

OpenStack Nova expects a flavor to be given when creating a virtual compute server, as shown here: https://developer.openstack.org/api-ref/compute/#create-server. Virtual compute information, both basic properties such as memory and the extended properties mentioned in OpenStack EPA, is part of the flavor record. Multiple flavors are created (again via Nova APIs) to meet various virtual compute requirements.
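
For reference, the Nova calls involved can be sketched as plain request bodies: a flavor is created with `POST /flavors`, EPA-style extra specs are attached with `POST /flavors/{flavor_id}/os-extra_specs`, and the server then references the flavor through `flavorRef` in `POST /servers`. This sketch only builds the JSON bodies (no HTTP, no authentication); the flavor name, image ID, and sizes are made-up example values, and real requests may need more fields (for example `networks`, which newer compute API microversions require when creating a server).

```python
from __future__ import annotations

import json


def flavor_body(name: str, ram_mb: int, vcpus: int, disk_gb: int) -> dict:
    """Body for POST /flavors (Nova expects ram in MiB and disk in GiB)."""
    return {"flavor": {"name": name, "ram": ram_mb, "vcpus": vcpus, "disk": disk_gb}}


def extra_specs_body(specs: dict[str, object]) -> dict:
    """Body for POST /flavors/{flavor_id}/os-extra_specs (values sent as strings)."""
    return {"extra_specs": {key: str(value) for key, value in specs.items()}}


def server_body(name: str, image_ref: str, flavor_ref: str) -> dict:
    """Minimal body for POST /servers: the flavor is referenced by ID, not embedded."""
    return {"server": {"name": name, "imageRef": image_ref, "flavorRef": flavor_ref}}


if __name__ == "__main__":
    # Example values only; a real flavor ID comes back in the POST /flavors response.
    print(json.dumps(flavor_body("vnat-numa", 2048, 6, 10), indent=2))
    print(json.dumps(extra_specs_body({"hw:numa_nodes": 2}), indent=2))
    print(json.dumps(server_body("vnat-1", "IMAGE_ID", "FLAVOR_ID"), indent=2))
```

The point the sketch makes is structural: the server request carries only a flavor reference, so every distinct combination of EPA properties has to exist as a flavor before the VM can be created.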

In TOSCA, as described here https://wiki.open-o.org/display/AR/VNF+Guidelines?preview=/4983260/4983258/VNF%2520TOSCA%2520Template%2520Requirements%2520for%2520OpenO.docx, each virtual compute resource property is specified explicitly, as shown here:

```yaml
topology_template:
  node_templates:
    vdu_vNat:
      capabilities:
        virtual_compute:
          properties:
            virtual_memory:
              numa_enabled: true
              virtual_mem_size: 2 GB
            requested_additional_capabilities:
              numa:
                support_mandatory: true
                requested_additional_capability_name: numa
                target_performance_parameters:
                  hw:numa_nodes: "2"
                  hw:numa_cpus.0: "0,1"
                  hw:numa_mem.0: "1024"
                  hw:numa_cpus.1: "2,3,4,5"
                  hw:numa_mem.1: "1024"
```

It means some component has to convert the TOSCA instance properties to flavors (in the case of an OpenStack VIM) before the VM is created. In the case of a Kubernetes VIM, these TOSCA instance properties would need to be converted to nodeSelectors. Since each VIM has its own way of expressing affinity, I guess Multi-VIM is responsible for this conversion. If so, I would expect the Multi-VIM northbound API to take this information in a generic fashion. Is that a correct understanding? Is this documented anywhere, and is there any draft API documentation?
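
For the OpenStack case, the conversion described above can be sketched as a function that takes the `virtual_compute` properties from the TOSCA snippet (already parsed into a Python dict, for example with a YAML loader) and derives Nova flavor fields plus extra specs. This is a minimal sketch, not the VF-C or Multi-VIM implementation: the function names are hypothetical, the vCPU count is inferred from the `hw:numa_cpus.*` entries (defaulting to 1 when there are none), `support_mandatory` is ignored, and "GB" is treated as 1024 MiB even though TOSCA formally distinguishes GB from GiB.

```python
from __future__ import annotations


def parse_mem_mb(size: str) -> int:
    """Convert a TOSCA-style size such as '2 GB' to MiB (assumes binary units)."""
    value, unit = size.split()
    factors = {"MB": 1, "MIB": 1, "GB": 1024, "GIB": 1024}
    return int(float(value) * factors[unit.upper()])


def parse_cpu_list(spec: str) -> set[int]:
    """Parse a Nova-style CPU list such as '0,1' or '2-5' (no '^' exclusions)."""
    cpus: set[int] = set()
    for part in spec.split(","):
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return cpus


def tosca_to_flavor(props: dict) -> dict:
    """Derive Nova flavor fields and extra specs from virtual_compute properties."""
    ram_mb = parse_mem_mb(props["virtual_memory"]["virtual_mem_size"])

    # Every target_performance_parameters entry is passed through as an extra spec.
    extra_specs: dict[str, str] = {}
    for cap in props.get("requested_additional_capabilities", {}).values():
        params = cap.get("target_performance_parameters", {})
        extra_specs.update({k: str(v) for k, v in params.items()})

    cpus: set[int] = set()
    numa_mem = 0
    for key, value in extra_specs.items():
        if key.startswith("hw:numa_cpus."):
            cpus |= parse_cpu_list(value)
        elif key.startswith("hw:numa_mem."):
            numa_mem += int(value)
    if numa_mem and numa_mem != ram_mb:
        # Nova expects the per-node hw:numa_mem.N values to add up to the flavor RAM.
        raise ValueError(f"NUMA memory {numa_mem} MB != flavor RAM {ram_mb} MB")

    # Assumption: without NUMA CPU lists, fall back to a single vCPU.
    return {"ram": ram_mb, "vcpus": len(cpus) or 1, "extra_specs": extra_specs}
```

Applied to the vdu_vNat example, this yields a 2048 MiB, 6-vCPU flavor whose extra specs are the five `hw:numa_*` entries. A Kubernetes plugin would need a different translation target (such as node labels for nodeSelectors), which is why the mapping belongs in a VIM-specific layer.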

ramki krishnan

Multi-VIM/Cloud supports the existing OpenStack APIs as default functional modules. This way, existing ONAP modules that already use OpenStack need minimal code changes. The details are captured in these documents (Multi VIM/Cloud Documents) and in the source code.

Thanks,

Ramki

Srinivasa Addepalli

I understand from your response that the northbound API of the Multi-VIM component is the same as the OpenStack Nova/Neutron/Glance/Cinder API. Is it literally the same as the OpenStack API, or does it only look similar? I guess the URI endpoints would be different, as ONAP has its own URI namespace and the VIM instance also needs to be passed as a parameter. Would everything else be the same as the OpenStack API? In Open-O, I understand that the Multi-VIM broker has a NB API slightly different from the OpenStack API, as shown here: https://wiki.open-o.org/display/MUL/Documentation?preview=/4981059/4982233/MultiVIMDriverAPISpecification-v0.5.3.doc. The Open-O Multi-VIM broker is mentioned as seed code, hence the query.

If the Multi-VIM NB API is slightly different from the OpenStack API, could you please point out where I can find information on the changes or the new API definition? Let me know if it is in the works.

Edit: I found this API document: https://wiki.onap.org/download/attachments/13599038/MultiVIM-onap-r1.doc?version=1&modificationDate=1503059219000&api=v2.

Since Multi-VIM is going to expose an API similar to OpenStack's, I guess the OpenStack plugin in the Multi-VIM component may be a simple passthrough. For other VIMs, such as Kubernetes with KubeVirt, has any analysis been done on whether this NB API can be translated easily to other VIM technologies?
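
If the OpenStack plugin is indeed a simple passthrough, its core job reduces to stripping an ONAP-side prefix that carries the VIM instance and forwarding the rest of the path to that VIM's native endpoint. The sketch below assumes a hypothetical northbound path of the form `/api/multicloud/v0/{vim_id}/{service}/{rest}` and a hypothetical endpoint table; the actual R1 URI layout is the one defined in the API document linked above.

```python
from __future__ import annotations

from urllib.parse import urljoin

# Hypothetical northbound prefix (illustration only, not the R1 URI layout).
NB_PREFIX = "/api/multicloud/v0/"

# Hypothetical catalog: VIM instance id -> native endpoint per service.
ENDPOINTS: dict[str, dict[str, str]] = {
    "ownerA_regionOne": {
        "compute": "https://nova.region-one.example:8774/v2.1/",
        "network": "https://neutron.region-one.example:9696/",
    },
}


def to_native_url(nb_path: str, endpoints: dict[str, dict[str, str]] = ENDPOINTS) -> str:
    """Map a northbound path onto the selected VIM's native API URL."""
    if not nb_path.startswith(NB_PREFIX):
        raise ValueError(f"not a Multi-VIM path: {nb_path}")
    vim_id, service, *rest = nb_path[len(NB_PREFIX):].split("/", 2)
    try:
        base = endpoints[vim_id][service]
    except KeyError as exc:
        raise LookupError(f"unknown VIM instance or service: {vim_id}/{service}") from exc
    # Passthrough: everything after the service segment is forwarded unchanged.
    return urljoin(base, rest[0] if rest else "")
```

For a non-OpenStack plugin, the final `urljoin` step is exactly what would have to be replaced by a real translation of request and response bodies, which is where the analysis asked about above matters.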

Thanks

Srini

ramki krishnan

Hi Srinivas,

For APIs, you have the right document. The APIs will be finalized by the M3 milestone. Kubernetes is beyond R1; the evolving community discussion on use cases and architecture beyond R1 is captured in Drafted Resources for Multi VIM/Cloud Team Review.

Thanks for your interest; we would love your participation in the Multi-VIM/Cloud community meetings and discussions.

Thanks,

Ramki

Srinivasa Addepalli

My new role from September will allow me to participate in this.

I am trying to understand the needs and requirements. Please confirm whether this matches the working group's understanding.

My understanding and thoughts:

Multi-VIM has multiple VIM technology plugins (drivers), the ability to support multiple cloud operators, and the ability to support multiple sites (regions) for each cloud operator.

Multi-VIM defines the concept of a VIM-Instance. Each VIM-Instance is associated (registered) with a VIM technology, an operator (that manages the VIM-Instance), and a region (where the VIM-Instance is deployed). In summary, a VIM-Instance identifier is the combination of a cloud identifier and a region identifier. My understanding is that this information is registered with A&AI. Is this registration done by the Multi-VIM component? If so, how does Multi-VIM know about the cloud operators, regions, and VIM technologies? Does that come from some ONAP management entity?
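
The model in the two paragraphs above (one plugin per VIM technology, and each VIM-Instance identified by cloud owner plus region) can be sketched as a small registry. Everything here is an illustrative assumption rather than the Multi-VIM code base: the `VimInstance` type, the `owner_region` identifier format, the registration functions, and the fake plugin. The sketch only shows how a request could be routed by VIM-Instance to the right technology plugin.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class VimInstance:
    cloud_owner: str  # operator that manages the VIM-Instance
    region: str       # site/region where it is deployed
    vim_type: str     # VIM technology, e.g. "openstack"

    @property
    def vim_id(self) -> str:
        # Assumed format: cloud owner and region joined by "_".
        return f"{self.cloud_owner}_{self.region}"


class VimPlugin(Protocol):
    def create_server(self, vim: VimInstance, spec: dict) -> str: ...


class FakeOpenStackPlugin:
    """Stand-in plugin; a real one would call Nova on the instance's endpoint."""

    def create_server(self, vim: VimInstance, spec: dict) -> str:
        return f"openstack:{vim.vim_id}:{spec['name']}"


PLUGINS: dict[str, VimPlugin] = {}
INSTANCES: dict[str, VimInstance] = {}


def register_plugin(vim_type: str, plugin: VimPlugin) -> None:
    PLUGINS[vim_type] = plugin


def register_instance(vim: VimInstance) -> str:
    # Per the understanding above, this record would live in A&AI; here it is a dict.
    INSTANCES[vim.vim_id] = vim
    return vim.vim_id


def create_server(vim_id: str, spec: dict) -> str:
    """Route the request to the plugin that handles this instance's VIM technology."""
    vim = INSTANCES[vim_id]
    return PLUGINS[vim.vim_type].create_server(vim, spec)
```

Who calls `register_instance` (Multi-VIM itself, or an ONAP management entity feeding it operator and region data) is exactly the open question raised above; the sketch is neutral on that.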

Flavor management: Each cloud operator defines flavors based on the compute hardware it has and the combinations of features it thinks its customers require. For example, AWS provides the following flavors (AWS calls them instance types): http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/instance-types.html. Operators using OpenStack also define flavors once (or change them very infrequently). Essentially, operators define flavors statically, and they may not expose an API that lets external entities (in this case ONAP) define new flavors. Each cloud operator also defines which flavors are available in which regions. EPA-specific parameter values become part of each flavor.

With the above in mind, wouldn't it be easier for the ONAP operator to define flavors and statically map ONAP flavors to cloud operator flavors, instead of creating flavors dynamically (as indicated earlier in an email chain)?

Tacker and OpenBaton seem to have defined TOSCA properties called 'flavor' and 'flavor-type', respectively, to represent the flavor to be used by VDUs. Since Tacker only supports one OpenStack operator, the same OpenStack flavor records are represented in TOSCA templates (or in the TOSCA instance parameter YAML file). In the case of ONAP with Multi-VIM and Multi-Cloud, I guess ONAP needs to define its own flavors and provide a static mapping (and in the future even automatic mapping) of these ONAP flavors to operator-specific flavors. This way, there is not much complexity, at least for the initial release, yet EPA is supported because it is left to the VIM technologies and operators.
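
The static mapping proposed above could be as simple as a lookup table from ONAP-level flavor names to each cloud's native flavor per region. All flavor names and owner/region keys below are made-up examples (the AWS and OpenStack names merely resemble real instance types and default flavors), and nothing here is an existing ONAP data model.

```python
from __future__ import annotations

# Hypothetical ONAP-level flavor catalog, defined once by the ONAP operator.
# Key: (ONAP flavor, cloud owner, region) -> that cloud's native flavor name.
FLAVOR_MAP: dict[tuple[str, str, str], str] = {
    ("onap.small", "aws", "us-east-1"): "m5.large",
    ("onap.small", "ownerA", "regionOne"): "m1.small",
    ("onap.numa-2node", "ownerA", "regionOne"): "epa.numa2",
}


def resolve_flavor(onap_flavor: str, cloud_owner: str, region: str) -> str:
    """Return the operator-specific flavor for an ONAP flavor, or fail loudly."""
    try:
        return FLAVOR_MAP[(onap_flavor, cloud_owner, region)]
    except KeyError:
        raise LookupError(
            f"{onap_flavor!r} is not offered by {cloud_owner}/{region}"
        ) from None


def regions_offering(onap_flavor: str) -> list[tuple[str, str]]:
    """Candidate (owner, region) pairs for placement, derived from the static map."""
    return sorted((owner, region) for (f, owner, region) in FLAVOR_MAP if f == onap_flavor)
```

A side benefit of such a table is that it also tells a placement component which regions can host a given ONAP flavor, so EPA-heavy flavors naturally restrict site selection without ONAP creating anything in the VIM.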

If it makes sense, let me know. I can present this in your meetings.

Thanks

Srini

ramki krishnan

Hi Srinivas,

Standardized workload representation across multiple clouds is an apt topic for us; we would love to hear your thoughts.

Another related aspect to consider: can we examine a standardized representation for service/application policies with performance awareness? See my related OpenStack Summit presentation (https://www.openstack.org/videos/video/dell-developing-a-policy-driven-platform-aware-and-devops-friendly-nova-scheduler).

Our weekly meetings are usually packed with R1 issues, but we can certainly arrange a separate slot for this discussion. Are you coming to Paris? If so, we could have a face-to-face discussion.

On your question of how the multi-cloud instance is chosen in R1: it depends on the use case. For vCPE it is VID; for the VoLTE use case it is SO/VF-C (under final discussion). Beyond R1, we are looking at converging all these paths, with the right multi-cloud instance across distributed DCs being chosen by the Optimization Framework.

Thanks,

Ramki

(ramkik@vmware.com)

Bin Yang

To your question about "It means some component has to convert from TOSCA instance properties to flavors (in case of OpenStack VIM) before VM is created": when deploying a VNF with EPA features described in TOSCA, VF-C will do that, and MultiVIM exposes APIs that allow VF-C to create flavors dynamically. I hope that answers your questions.

Kubernetes VIM is not in R1 scope yet.

Srinivasa Addepalli

Yes you did.

In TOSCA, at least for now, each NFVI property is specified as an individual property, so, as you mentioned, flavor records need to be created dynamically. Does VF-C remove the flavor record once the Multi-VIM 'create server' API returns, or is there some other trigger for removing it? If the latter, does Multi-VIM have any role in informing VF-C that the flavor record is no longer required by the underlying VIM?

Thanks

Srini

Bin Yang

For now, flavor management belongs to the consumer of Multi-VIM; e.g., VF-C manages the flavor (create/delete).
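
Since the consumer (for example VF-C) owns the flavor lifecycle, one way it could decide when a dynamically created flavor can be deleted is to track which servers were booted from it. The sketch below is hypothetical: `FlavorTracker` and its methods are not part of VF-C or Multi-VIM, and it assumes deletion is deferred until the last server using the flavor is gone, rather than done right after 'create server' returns.

```python
from __future__ import annotations

from collections import defaultdict


class FlavorTracker:
    """Hypothetical consumer-side bookkeeping for dynamically created flavors."""

    def __init__(self) -> None:
        # flavor_id -> ids of servers currently booted from that flavor
        self._users: defaultdict[str, set[str]] = defaultdict(set)

    def server_created(self, flavor_id: str, server_id: str) -> None:
        self._users[flavor_id].add(server_id)

    def server_deleted(self, flavor_id: str, server_id: str) -> bool:
        """Record a server deletion; return True when the flavor is now unused."""
        users = self._users.get(flavor_id)
        if users is None:
            return False
        users.discard(server_id)
        if not users:
            del self._users[flavor_id]
            return True  # caller may now issue DELETE /flavors/{flavor_id}
        return False
```

The alternative (deleting the flavor immediately after the server is created) needs no bookkeeping at all, but it depends on how the underlying VIM treats servers whose flavor has been removed, so the deferred approach is the more conservative choice in this sketch.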

George Zhao

First, all the links in the Other Information section are out of date.

I would like to understand the multi-cloud aspect a little more: all I see in this proposal is OpenStack, so where is the "multi" part?

thanks,

George

Manoj Nair

Can you please clarify "Multi-VIM/Cloud project will align with the Common Controller Framework to enable reuse by different ONAP elements." in the Scope above? Does it mean that, going forward, Multi-Cloud plans to leverage the Common Controller Framework for its R2+ implementation? Is it aligned with, or different from, what is being presented to the architecture committee here?

ramki krishnan

We are fully aligned. Slide 2 of the presentation to the architecture committee lists the background material on “ONAP Multi Cloud Architectural vision for R2 and beyond” presented at the ONAP Paris Workshop. In this context, a presentation of particular interest is “Architectural options for Multi-vendor SDN Controller and Multi Cloud Deployments in a DC”: https://wiki.onap.org/download/attachments/11928197/ONAP-mc-sdn.pdf?version=1&modificationDate=1506518708000&api=v2.

Arun Gupta

The GitHub repositories are:

https://github.com/onap/multicloud-openstack

https://github.com/onap/multicloud-openstack-vmware

https://github.com/onap/multicloud-framework

https://github.com/onap/multicloud-openstack-windriver

https://github.com/onap/multicloud-azure

Fei Zhang

Hi, Bin and All,

One basic and simple question: is the MultiCloud Mediation Layer a mandatory framework in ONAP, or optional?

Best regards,

Fei

Bin Yang

Optional for now.