Name of Use Case:

VoLTE

Use Case Authors:

AT&T, China Mobile, Huawei, ZTE, Nokia, Jio, VMWare, Wind River, BOCO

Description:

A Mobile Service Provider (SP) plans to deploy VoLTE services based on SDN/NFV. The SP is able to onboard the service via ONAP. Specific sub-use cases are:

- Service onboarding

- Service configuration

- Service termination

- Auto-scaling based on fault and/or performance

- Fault detection & correlation, and auto-healing

- Data correlation and analytics to support all sub use cases

ONAP will perform these functions reliably, which includes:

- the reliability, performance and serviceability of the ONAP platform itself

- security of the ONAP platform

- policy driven configuration management using standard APIs or scripting languages like chef/ansible (stretch goal)

- automated configuration audit and change management (stretch goal)

To connect the different data centers, ONAP will also have to interface with legacy systems and physical functions to establish VPN connectivity in a brownfield deployment.

Users and Benefit:

SPs benefit from the VoLTE use case in the following respects:

- Service agility: easier design of both VNFs and network services, VNF onboarding, and agile service deployment.

- Resource efficiency: through the ONAP platform, resources can be utilized more efficiently, as services are deployed and scaled automatically on demand.

- Operation automation and intelligence: through the ONAP platform, especially its integration with DCAE and the policy framework, VoLTE VNFs and the service as a whole are expected to be managed with much less human intervention and should therefore be more robust and intelligent.

VoLTE users benefit from the network service provided by SPs via ONAP, as their user experience will be improved, especially during peak traffic periods.

VNF:

Utilize vendors' VNFs in the ONAP platform.

| TIC Location | VNFs | Intended VNF Provider | VNF CSAR |
|---|---|---|---|
| Edge | vSBC | Huawei | vSBC_Huawei.csar |
| Edge | vPCSCF | Huawei | vPCRF_Huawei.csar |
| Edge | vSPGW | ZTE/Huawei | vSPGW_ZTE.csar |
| Core | vPCRF | Huawei | vPCRF_Huawei.csar |
| Core | VI/SCSCF | Nokia | VI/SCSCF_Nokia.csar |
| Core | vTAS | Nokia | Confirmed |
| Core | vHSS | Huawei | vHSS_Huawei.csar |
| Core | vMME | ZTE/Huawei | vMME_Huawei.csar |

Note: The above captures the currently committed VNF providers; we are open to adding more VNF providers.

Note: The committed VNF providers will be responsible for providing licensing support and technical assistance for VNF interworking issues, while the core ONAP use case testing team will focus on platform validation.

NFVI+VIM:

Utilize vendors' NFVI+VIMs in the ONAP platform.

| TIC Location | NFVI+VIMs | Intended VIM Provider | Notes |
|---|---|---|---|
| Edge | Titanium Cloud (OpenStack based) | Wind River | Confirmed |
| Edge | VMware Integrated OpenStack | VMware | Confirmed |
| Core | Titanium Cloud (OpenStack based) | Wind River | Confirmed |
| Core | VMware Integrated OpenStack | VMware | Confirmed |

Note: The above captures the currently committed VIM providers; we are open to adding more VIM providers.

Note: The committed VIM providers will be responsible for providing licensing support and technical assistance for VIM integration issues, while the core ONAP use case testing team will focus on platform validation.

Network equipment:

Network equipment vendors.

| Network equipment | Intended provider | Notes |
|---|---|---|
| Bare Metal Host | Huawei, ZTE | Confirmed |
| WAN/SPTN Router | Huawei/ZTE | Confirmed |
| DC Gateway | Huawei, ZTE | Confirmed |
| TOR | Huawei, ZTE | Confirmed |
| Wireless Access Point | Raisecom | Confirmed |
| VoLTE Terminal Devices | Raisecom | Confirmed |

Note: The above captures the currently committed HW providers; we are open to adding more HW providers.

Note: The committed HW providers will be responsible for providing licensing support and technical assistance for HW integration issues, while the core ONAP use case testing team will focus on platform validation.

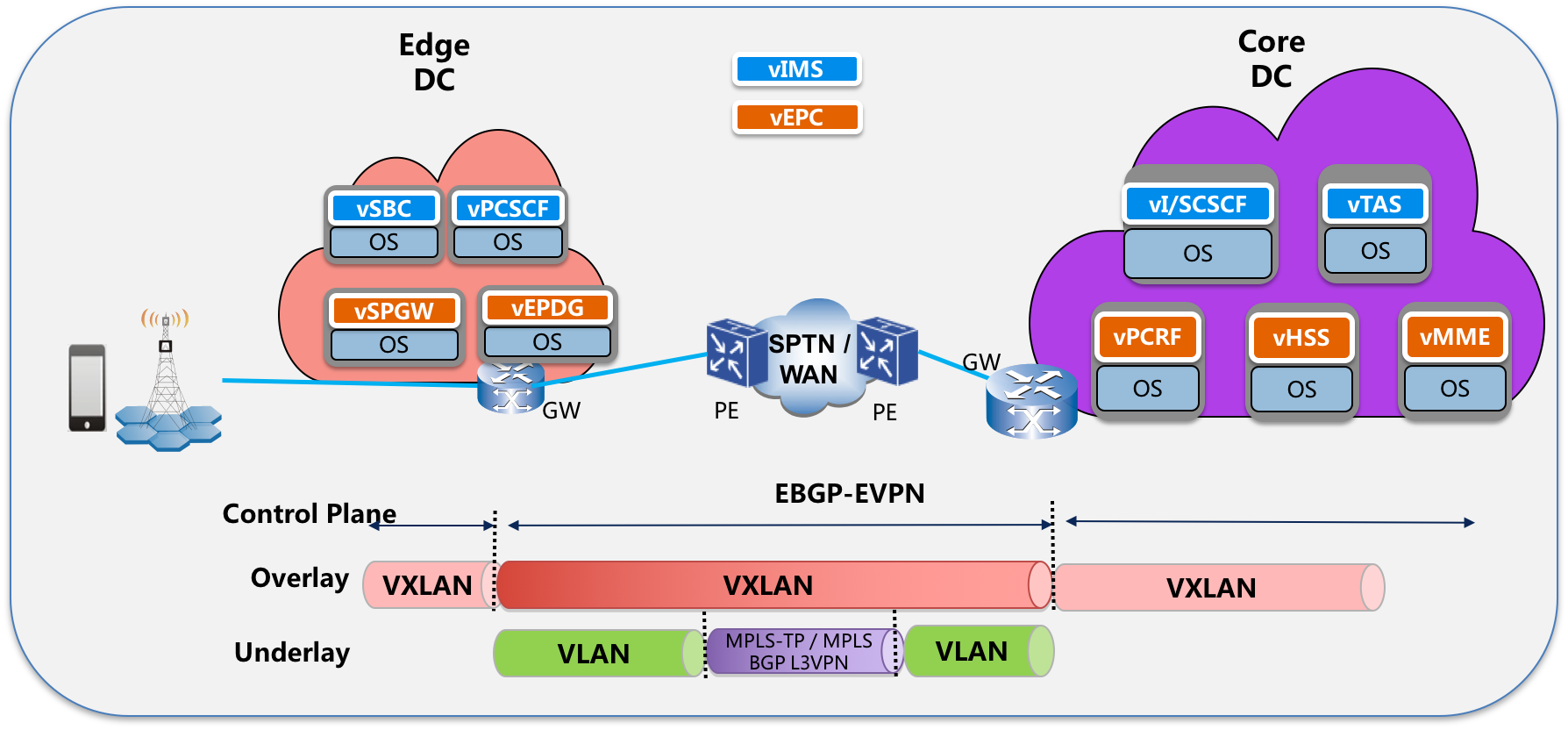

Topology Diagram:

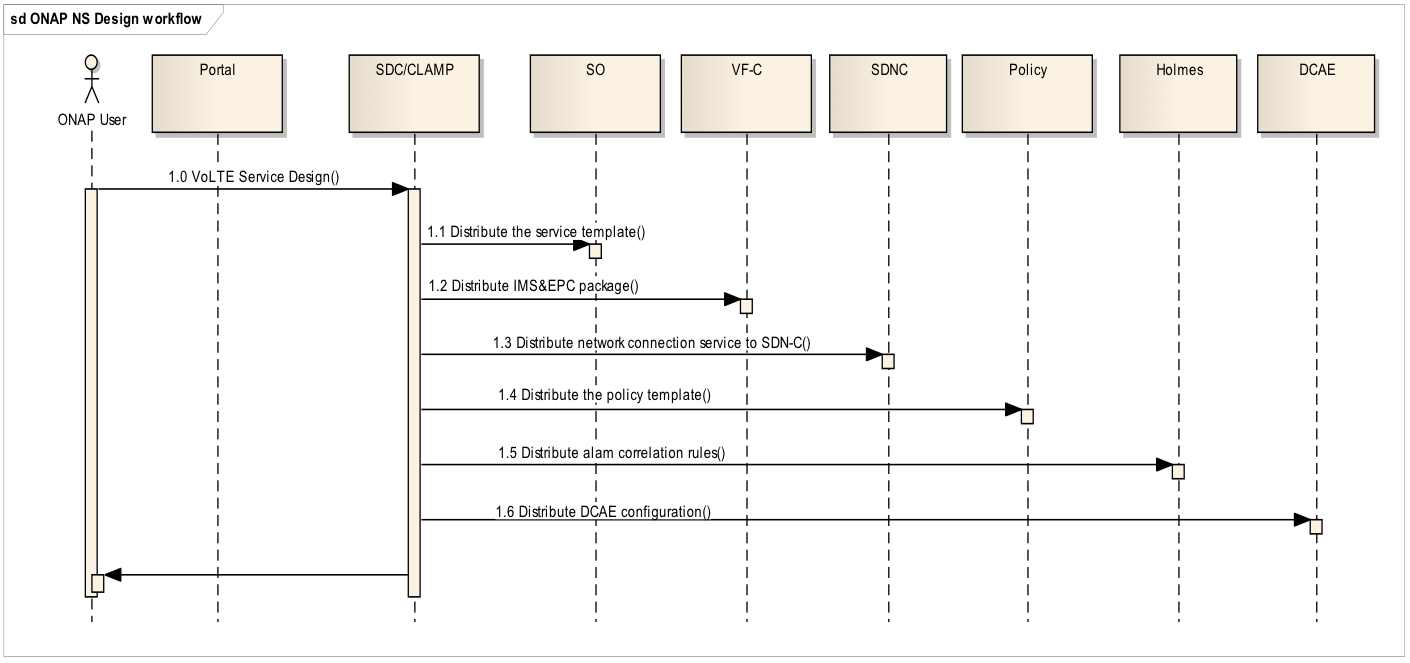

Work Flows:

Customer ordering

- Design

- 1.0 The ONAP user uses SDC, via the Portal, to import and design the VoLTE E2E model/templates. The E2E models should include the vIMS + vEPC network services, SDN-WAN connection services, relevant auto-healing policy and alarm correlation rules, etc. All of these should be tied together as an E2E VoLTE model.

SDC/CLAMP should distribute all of the above models to the related components at run time when the user needs to instantiate the E2E VoLTE service.

- 1.1 Distribute the E2E model to SO.

- 1.2 Distribute the vIMS+vEPC NS to VF-C.

- 1.3 Distribute the SDN-WAN connection service to SDN-C.

- 1.4 Distribute the auto-healing policy to the Policy engine.

- 1.5 Distribute the alarm correlation rules to the Holmes engine.

- 1.6 Design and distribute the DCAE configuration from CLAMP.

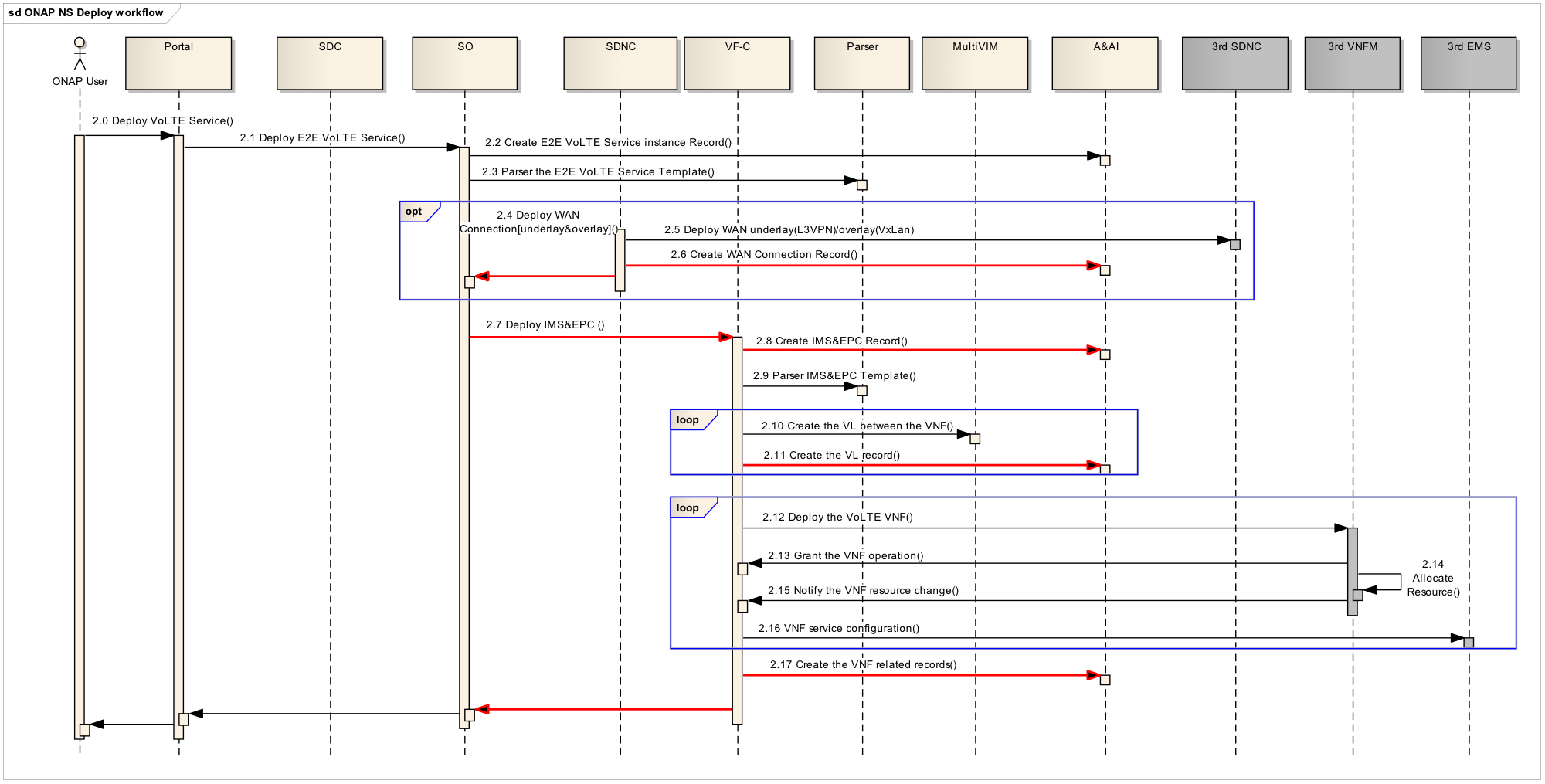
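The distribution steps 1.1 to 1.6 can be read as a simple routing table from design-time artifacts to run-time components. The sketch below is only an illustration of that reading: the artifact names and the `undelivered` helper are hypothetical and are not part of any SDC or CLAMP API.

```python
# Hypothetical mapping of design-time artifacts to the run-time components
# named in steps 1.1-1.6. Artifact names are illustrative only.
DISTRIBUTION_PLAN = [
    ("1.1", "e2e-service-model", "SO"),
    ("1.2", "vims-vepc-network-service", "VF-C"),
    ("1.3", "sdn-wan-connection-service", "SDN-C"),
    ("1.4", "auto-healing-policy", "Policy"),
    ("1.5", "alarm-correlation-rules", "Holmes"),
    ("1.6", "dcae-configuration", "DCAE"),
]


def artifacts_for(component):
    """Return the artifacts a given component should receive."""
    return [artifact for _, artifact, target in DISTRIBUTION_PLAN if target == component]


def undelivered(received):
    """List (step, artifact, target) entries that are not yet acknowledged.

    `received` maps a component name to the set of artifacts it has confirmed.
    """
    return [
        (step, artifact, target)
        for step, artifact, target in DISTRIBUTION_PLAN
        if artifact not in received.get(target, set())
    ]
```

A check such as `undelivered({"SO": {"e2e-service-model"}})` would then list the five remaining distributions before instantiation is attempted.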

- Instantiation

- 2.0 The user clicks the button on the Portal to deploy the service.

- 2.1 The Portal sends a request to SO to deploy the VoLTE service.

- 2.2 SO talks with A&AI to create the new E2E instance in the A&AI inventory.

- 2.3 SO parses the E2E model via the TOSCA parser provided by the Modeling project.

- 2.4 SO sends a request to SDN-C to deploy the SDN-WAN connection service, including underlay and overlay.

- 2.5 SDN-C talks with the 3rd party SDN controller to provision the MPLS BGP L3VPN for the underlay and to set up the VXLAN tunnel based on the EVPN protocol for the overlay.

- 2.6 While deploying the network connection service, SDN-C creates the related instances in the A&AI inventory.

- 2.7 SO sends a request to VF-C to deploy the vIMS+vEPC network service.

- 2.8 VF-C talks with A&AI to create the NS instances in the A&AI inventory.

- 2.9 VF-C parses the NS model via the TOSCA parser to decompose the NS into VNFs and to identify the relationships between VNFs.

- 2.10 VF-C talks with Multi-VIM to create virtual network connections between VNFs if needed.

- 2.11 VF-C creates the related virtual link instances in the A&AI inventory.

- 2.12 VF-C sends requests to the S-VNFM/G-VNFM to deploy each VNF according to the mapping between VNFs and VNFMs.

- 2.13 In line with the ETSI workflow, the VNFM sends a resource grant request to VF-C; VF-C responds to the VNFM with the grant result and the related VIM information (such as URL, username, password, etc.).

- 2.14 The VNFM calls the VIM API to deploy the VNF into the VIM.

- 2.15 The VNFM sends a notification to VF-C about the changes of virtual resources, including VDU/VL/CP/VNFC, etc.

- 2.16 VF-C talks with the 3rd party EMS via the EMS driver to perform the service configuration.

- 2.17 VF-C creates/updates the related records in the A&AI inventory.

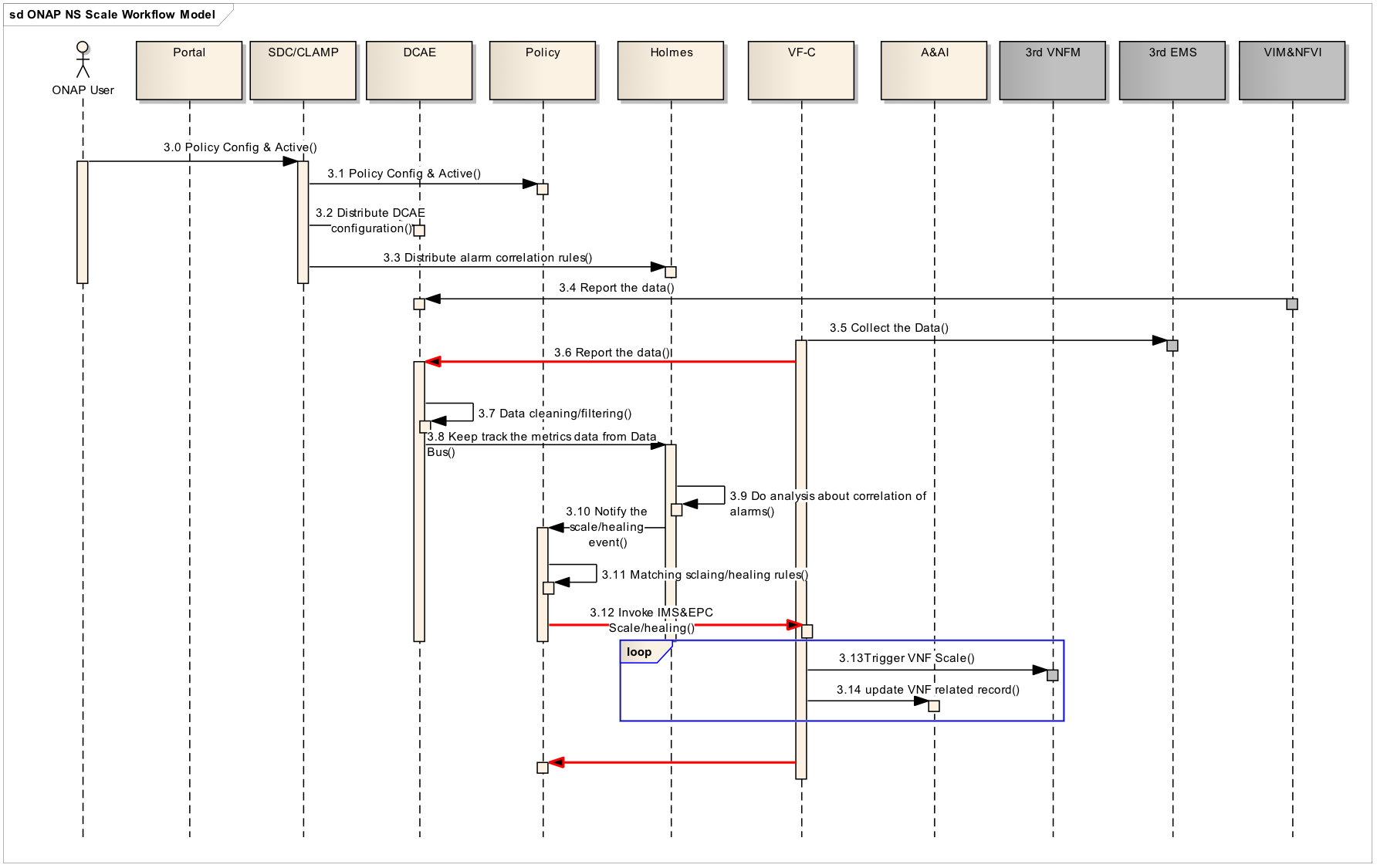
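The ordering of the instantiation steps above can be sketched as a small simulation. This is a minimal sketch under stated assumptions: `Inventory` is a stand-in for A&AI, `instantiate_volte` and all instance names are hypothetical, and no real ONAP API is called. It follows the step numbering as listed (WAN connection before the network service).

```python
from dataclasses import dataclass, field


@dataclass
class Inventory:
    """Stand-in for A&AI: records which instances have been created."""
    records: list = field(default_factory=list)

    def create(self, kind, name):
        self.records.append((kind, name))


def instantiate_volte(service_name, vnfs, inventory):
    """Walk the main instantiation steps and return them as a trace."""
    trace = []
    # 2.2: SO registers the E2E service instance.
    inventory.create("e2e-service", service_name)
    trace.append("2.2 SO -> A&AI: create E2E instance")
    # 2.4-2.6: SDN-C provisions the WAN connection, underlay then overlay.
    for layer in ("underlay-l3vpn", "overlay-vxlan"):
        inventory.create("connection", f"{service_name}-{layer}")
        trace.append(f"2.4-2.6 SDN-C: provision {layer}")
    # 2.7-2.8: VF-C registers the vIMS+vEPC network service.
    inventory.create("network-service", f"{service_name}-vims-vepc")
    trace.append("2.7-2.8 VF-C -> A&AI: create NS instance")
    # 2.12-2.17: each VNF is deployed through its VNFM and then recorded.
    for vnf in vnfs:
        inventory.create("vnf", vnf)
        trace.append(f"2.12-2.17 VNFM: deploy {vnf}")
    return trace


inventory = Inventory()
trace = instantiate_volte("volte-demo", ["vSBC", "vMME"], inventory)
```

Printing `trace` gives one line per step group, which makes it easy to compare an alternative ordering against the sequence diagram.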

- VNF Auto-Scaling/Auto-healing

- 3.0 When the user instantiates the service, CLAMP needs to instantiate the related control loop in the run-time environment.

- 3.1 SDC distributes the auto-healing policy rules to the Policy engine and the alarm correlation rules to the Holmes engine.

- 3.2 The CLAMP portal talks with DCAE to deploy the related analytics applications/collectors tied to the services.

- 3.3 CLAMP distributes the alarm correlation rules to Holmes.

- 3.4 At run time, Multi-VIM reports FCAPS metrics data from the VIM/NFVI to DCAE in real time or periodically.

- 3.5 VF-C integrates with the 3rd party EMS; the EMS reports VNF service-level FCAPS data to VF-C in real time or periodically.

- 3.6 VF-C transfers the VNF service-level FCAPS metrics to DCAE in line with DCAE’s data structure requirements.

- 3.7 DCAE filters/cleans the data and can send the related events to the data bus.

- 3.8 Holmes keeps track of the events published to the data bus.

- 3.9 Holmes performs the alarm correlation analysis based on the imported rules.

- 3.10 Holmes sends the result, the root cause, to the event bus.

- 3.11 The Policy engine subscribes to the related topic on the event bus. After receiving auto-healing/scaling trigger events, it matches the events against the existing rules.

- 3.12 Policy invokes the VF-C APIs to perform the auto-healing/scaling action once events match the scaling/healing rules.

- 3.13/3.14 VF-C updates/creates the related instance information in the A&AI inventory according to the resource changes.

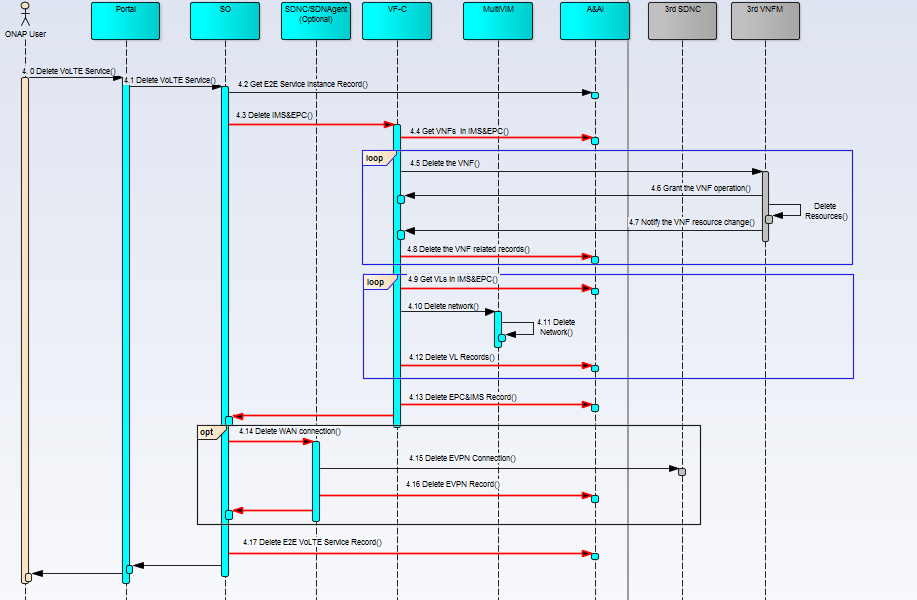
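Steps 3.8 to 3.12 describe a publish/subscribe chain: alarms arrive on the bus, a correlator publishes a root cause, and a policy matcher turns the root cause into an action. The following is a minimal in-memory sketch of that chain. The `EventBus` class, the topic names, the rule dictionaries and the alarm names are all assumptions made for illustration; they do not reflect the actual Holmes, Policy or message-bus interfaces.

```python
from collections import defaultdict


class EventBus:
    """Tiny in-memory publish/subscribe bus (stand-in for the ONAP data bus)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        for handler in list(self._subscribers[topic]):
            handler(event)


def make_correlator(bus, correlation_rules):
    """Holmes-like step (3.8-3.10): map a symptom alarm to its root cause."""
    def on_alarm(alarm):
        root = correlation_rules.get(alarm["name"], alarm["name"])
        bus.publish("root-cause", {"name": root, "vnf": alarm["vnf"]})
    return on_alarm


def make_policy(actions, healing_rules):
    """Policy-like step (3.11-3.12): match root causes against healing rules."""
    def on_root_cause(event):
        action = healing_rules.get(event["name"])
        if action is not None:
            # A real deployment would invoke a VF-C API here.
            actions.append((action, event["vnf"]))
    return on_root_cause


bus = EventBus()
actions = []
bus.subscribe("alarms", make_correlator(bus, {"link-down": "host-failure"}))
bus.subscribe("root-cause", make_policy(actions, {"host-failure": "heal"}))
bus.publish("alarms", {"name": "link-down", "vnf": "vSBC"})
bus.publish("alarms", {"name": "fan-warning", "vnf": "vSBC"})  # no matching rule
```

After the two alarms are published, only the correlated `host-failure` event produces an action; the unmatched alarm passes through without triggering healing.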

- Termination

- 4.0 The ONAP user triggers the termination action via the Portal.

- 4.1 The Portal talks with SO to delete the VoLTE service.

- 4.2 SO checks with A&AI whether the instance exists.

- 4.3 SO talks with VF-C to request deletion of the vIMS/vEPC network services.

- 4.4 VF-C checks with A&AI whether the vIMS/vEPC instances exist.

- 4.5 VF-C talks with the S-VNFM/G-VNFM to request deletion of the VNFs and release of resources.

- 4.6/4.7 In line with the ETSI workflow, the VNFM deletes/releases virtual resources once granted by VF-C and notifies VF-C of the changes (release) of virtual resources.

- 4.8 VF-C updates/deletes the related resource instances in the A&AI inventory.

- 4.9 VF-C checks with A&AI whether the VL instances exist.

- 4.10 VF-C talks with Multi-VIM to request deletion of the virtual networks connected to the VNFs.

- 4.11 Multi-VIM deletes the related virtual network resources, such as networks, sub-networks and ports.

- 4.12 VF-C updates/deletes the related VL resource instances in the A&AI inventory.

- 4.13 VF-C updates/deletes the related NS instances in the A&AI inventory.

- 4.14 SO talks with SDN-C to request deletion of the SDN-WAN connection between clouds and release of resources, including overlay and underlay.

- 4.15 SDN-C talks with the 3rd party SDN controller to release the connection resources.

- 4.16 SDN-C updates/deletes the related connection resource instances in the A&AI inventory.

- 4.17 SO updates/deletes the related E2E service instances in the A&AI inventory.
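The termination flow combines two ideas: check that an instance exists before acting on it (steps 4.2, 4.4, 4.9) and delete in dependency order (VNFs, then virtual links, then the NS, then the WAN connection, then the E2E service). A minimal sketch of that pattern follows; the inventory is modelled as a plain dictionary and the kind names are hypothetical, not A&AI object types.

```python
# Teardown order as implied by steps 4.5-4.17; the kind names are illustrative.
TEARDOWN_ORDER = ["vnf", "virtual-link", "network-service", "wan-connection", "e2e-service"]


def terminate(inventory):
    """Delete everything in `inventory` in teardown order.

    `inventory` maps a resource kind to a set of instance names. Kinds with no
    recorded instances are skipped, mirroring the existence checks in the flow.
    """
    deleted = []
    for kind in TEARDOWN_ORDER:
        names = inventory.get(kind)
        if not names:
            continue  # nothing recorded for this kind, so nothing to release
        for name in sorted(names):
            deleted.append((kind, name))
        inventory[kind] = set()
    return deleted
```

Calling `terminate` twice on the same inventory returns an empty list the second time, which is the behaviour the existence checks are meant to guarantee.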

Control Automation:

Open Loop

- Auto ticket creation based on the policy (stretch goal)

Closed Loop

- Auto-scaling (stretch goal)

When a large-scale event such as a concert or contest is coming, the service traffic may increase continuously, the service monitoring data may grow, or other similar factors may cause the virtual resources located at the TIC edge to become constrained. ONAP should automatically trigger VNF actions to scale out horizontally, adding more virtual resources on the data plane to cope with the traffic. Conversely, when the event is over and the traffic goes down, ONAP should trigger VNF actions to scale in and reduce resources.

- Fault detection & correlation, and auto-healing

While the VoLTE service is in use, fault alarms can be raised at various layers of the system, including the hardware, resource and service layers. ONAP should detect these fault alarms and report them to the system, which performs alarm correlation to identify the root cause of a series of alarms and takes corrective actions for auto-healing accordingly.

After the fault is detected and its root cause correlated, ONAP should perform the auto-healing action specified by a given policy to bring the system back to normal.

More details about the VoLTE use case control loop.
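The auto-scaling behaviour described above (scale out under load, scale in when traffic drops) amounts to a threshold decision per monitoring sample. The sketch below is one possible form of such a rule. The threshold values, the instance limits and the function name are assumptions chosen for illustration; the use case does not specify them.

```python
def scaling_decision(utilization, instances, *, high=0.8, low=0.3,
                     min_instances=1, max_instances=8):
    """Return 'scale_out', 'scale_in' or 'none' for one monitoring sample.

    `utilization` is a fraction between 0 and 1. The gap between `low` and
    `high` keeps the loop from oscillating around a single threshold.
    """
    if utilization >= high and instances < max_instances:
        return "scale_out"
    if utilization <= low and instances > min_instances:
        return "scale_in"
    return "none"
```

For example, a sample of 0.9 with two instances yields `scale_out`, while the same load at the instance limit yields `none`, leaving the decision to another policy.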

Configuration flows (Stretch goal)

- Create (or onboard vendor-provided) application configuration Gold Standard files on the Chef/Ansible server

- Create a Chef cookbook or Ansible playbook (or onboard vendor-provided artifacts) to audit and optionally update the configuration on the VNF VM(s)

- Install the Chef client on the VM (Ansible does not require a client)

- After every upgrade, or once an application misconfiguration is detected, trigger an audit with the update option to update the configuration based on the Gold Standard

- After the audit update, re-run the audit and run a health check to verify that the application is running as expected

- Provide a configuration change alert to Operations via the control loop dashboard
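The audit steps above boil down to comparing the running configuration with the Gold Standard, optionally correcting it, and then auditing again. The following sketch shows that loop on plain dictionaries. It is not a Chef cookbook or Ansible playbook; the function names and the configuration keys in the usage note are hypothetical.

```python
def audit_config(gold, actual):
    """Return {key: (expected, found)} for every deviation from the Gold Standard."""
    drift = {}
    for key, expected in gold.items():
        found = actual.get(key)
        if found != expected:
            drift[key] = (expected, found)
    return drift


def audit_and_update(gold, actual, update=False):
    """Audit `actual` against `gold`; with update=True, fix drift and re-audit.

    Returns (drift_before, drift_after). `actual` is modified in place when
    `update` is set.
    """
    drift = audit_config(gold, actual)
    if update and drift:
        for key, (expected, _found) in drift.items():
            actual[key] = expected
        # Re-run the audit to confirm that the update removed the drift.
        return drift, audit_config(gold, actual)
    return drift, drift
```

With `gold = {"sip_port": 5060}` and `actual = {"sip_port": 5061}`, an audit with `update=True` reports the deviation once and an empty result on the re-run.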

Platform Requirements:

- Support for commercial VNFs

- Support for commercial S-VNFM/EMS

- Support for Multiple Cloud Infrastructure Platforms or VIMs

- Cross-DC NFV and SDN orchestration

- Telemetry collection for both resource and service layer

- Fault correlation application

- Policy for scaling/healing

Project Impact:


- Modeling/Models

Provide a TOSCA parser that can parse NS/VNF or E2E models.

Modeling will need to be added to describe how VNFs are to be instantiated, removed, healed (restart, rebuild) and scaled, how related metrics are gathered, and how events are received.

Modeling will need to be added to describe the connection service (underlay/overlay) between the cloud Edge and Core.

- SDC

Design the vIMS/vEPC network service, TOSCA based. The initial VNF templates are provided by the VNF vendor.

Design the SDN-WAN network connection service.

Design the auto-healing policy.

Design the alarm correlation rules.

Design the workflow/DG used by SO/VF-C/SDN-C.

Design the E2E service, tying all of the above together.

- SO

E2E service lifecycle management: decompose the E2E service and talk with VF-C/SDN-C to instantiate services in the NFV and SDN domains respectively.

- DCAE

Support metrics data collection for the VoLTE case and receipt of events as per the new model.

Support NB APIs to notify/report metrics data collected from multiple components in real time.

- VF-C

Network service (vIMS+vEPC) lifecycle management.

Integrate with the S-VNFM/G-VNFM for VNF lifecycle management.

Integrate with the EMS to collect VNF-level FCAPS metrics data and perform application configuration.

Integrate with Multi-VIM for virtual resource management.

Transfer FCAPS data to DCAE in line with DCAE’s requirements.

- SDN-C

Network connection service lifecycle management.

Integrate with 3rd party SDN controllers to set up the MPLS BGP L3VPN for the underlay.

Integrate with 3rd party SDN controllers to set up the VXLAN tunnel based on the EVPN protocol for the overlay.

- A&AI

Support the new data model.

Support the integration with newly added components.

- Policy

Support defining and executing auto-healing policy rules.

Support integration with VF-C for execution of auto-healing policy rules.

- Multi-VIM

Support aggregating multiple OpenStack versions to provide common NB APIs, including resource management and FCAPS.

- Holmes

Integration with CLAMP to support alarm correlation rule definition, distribution and LCM.

Integration with DCAE for FCAPS metrics as input to Holmes.

Support for alarm correction execution.

- CLAMP

Support auto-healing control loop lifecycle management (design, distribution, deletion, etc.).

Integration with Policy for auto-healing rules.

Integration with Holmes for alarm correlation rules.

- VNF-SDK

Support onboarding of the VNFs included in the VoLTE case, including packaging, validation and testing.

- OOM

Support deploying and operating newly added components in ONAP, such as VF-C/Holmes.

- MSB

Support routing requests to components correctly.

- Use Case UI

Support VoLTE lifecycle management actions, monitor service instance status, and display FCAPS metrics data in real time.

- ESR

Support registering 3rd party VIMs, VNFMs, EMSs and SDN controllers with ONAP.

Priorities:

1 means the highest priority.

| Functional Platform Requirement | Priority | Basic/stretch goal (default: basic goal) |
|---|---|---|
| VNF onboarding | 2 | |
| Service Design | 1 | |
| Service Composition | 1 | |
| Network Provisioning | 1 | |
| Deployment automation | 1 | |
| Termination automation | 1 | |
| Policy driven/optimal VNF placement | 3 | stretch |
| Performance monitoring and analysis | 2 | |
| Resource dedication | 3 | stretch |
| Control Loops | 2 | |
| Capacity based scaling | 3 | stretch |
| Triggered Healthcheck | 2 | |
| Health monitoring and analysis | 2 | |
| Data collection | 2 | |
| Data analysis | 2 | |
| Policy driven scaling | 3 | stretch |
| Policy based healing | 2 | |
| Configuration audit | 3 | stretch |
| Multi Cloud Support | 2 | |
| Framework for integration with OSS/BSS | 3 | stretch |
| Framework for integration with vendor provided VNFM (if needed) | 1 | |
| Framework for integration with external controller | 1 | |
| Non-functional Platform Requirement | | |
| Provide Tools for Vendor Self-Service VNF Certification (VNF SDK) | NA | NA |
| ONAP platform Fault Recovery | NA | NA |
| Security | NA | NA |
| Reliability | NA | NA |
| Disaster Recovery | NA | NA |
| ONAP Change Management/Upgrade Control/Automation | NA | NA |

Work Commitment:


| Work Item | ONAP Member Committed to work on VoLTE |
|---|---|
| Modeling | CMCC, Huawei, ZTE, BOCO |
| SDC | CMCC, ZTE |
| SO | CMCC, Huawei, ZTE |
| SDN-C/SDN-Agent | CMCC, Huawei, ZTE |
| DCAE/Holmes/CLAMP | CMCC, ZTE, BOCO, Huawei, Jio |
| VF-C | CMCC, Huawei, ZTE, BOCO, Nokia, Jio |
| A&AI | Huawei, ZTE, BOCO |
| Policy | ZTE |
| Multi-VIM | VMWare, Wind River |
| Portal | CMCC |

25 Comments

Yan Yang

If you want to know about the previous discussion of the VoLTE use case, please refer to (obsolete) Use Case: VoLTE (vIMS + vEPC)

Brian Freeman

Why does the sequence diagram show Holmes standing alone with no linkage to DCAE? I thought the R1 goal was for Holmes to be used as a microservice as part of DCAE. While deploying Holmes standalone is an option a service provider could choose, I would think it wouldn't be the option chosen for the R1 use case. The obsolete Use Case VoLTE didn't show Holmes but rather showed DCAE and Policy.

Chengli Wang

I added Holmes to the diagram as a standalone component just to state the functional requirements on Holmes clearly. It does not represent the deployed instances.

Brian Freeman

On the VXLAN tunnels, aren't the VNFs already using GTP tunnels to communicate, so you wouldn't need another tunnel protocol? vSPGW should be able to establish a GPRS Tunneling Protocol Version 2 for Control plane (GTPv2-C) tunnel to vMME over IP without the need for a VXLAN tunnel. Wouldn't the networking be simpler with just GTP over the MPLS VPN in the WAN?

Chengli Wang

We prefer the overlay solution to be service agnostic. We can use it for the residential/enterprise vCPE cases if they want, but in those two vCPE cases we cannot use GTP.

Brian Freeman

My comment was on the VoLTE case not the vCPE use case. 3GPP uses GTP tunnels between elements so that's why I don't understand the need for the VXLAN between the edge and core data centers.

Chengli Wang

Sorry for the misleading description on my part.

I mean that our proposed solution for the connection service between clouds, VXLAN based on the EVPN protocol, is a more common solution for connecting two DCs. The solution can also be used in other scenarios, not only for VoLTE.

My understanding is that your suggested solution, the GTP tunnel, is a service-layer tunnel. It is specific to vEPC and not suitable for other cases.

We may deploy different services/VNFs in DCs in the real world. When we need to deal with the interconnection between DCs, a common solution is better than a special one.

Cheng (Ian) Liu

I would like to follow up on this one. I assume that, in order to reuse the current 3GPP-specified VoLTE PNFs/VNFs, we need to keep the GTP tunnel between the S/P-GW and MME for the control plane signaling. Are you suggesting that this service-specific GTP tunnel will be encapsulated inside the VXLAN tunnel between the two DCs?

Chengli Wang

Sorry for the late response. Correct: I think the solution for the DCI connection does not care about the specific service packets. Service packets should be encapsulated inside the VXLAN tunnel.

user-67d6f

Dear team,

Could you please add the details of the 3rd party VNFM and EMS, similar to how the VNFs and VIMs are listed on this page?

Also, how these external systems are onboarded into ONAP is missing; kindly add that as well. Would it use ESR/A&AI?

Thanks

Chengli Wang

The choice of 3rd party VNFM/EMS depends on which vendor the VNF comes from. As an example, if Huawei is interested in providing the vSBC for the VoLTE case test, they also need to bring their specific VNFM and EMS with it.

Once our committee decides how to map VNFs to vendors, we will also have the decision on their VNFM and EMS systems.

For the last question, I think we should leverage the function provided by ESR/A&AI. They should have all the information about the 3rd party systems, such as URL, username, password... I can add some words to the Project Impact part, thanks.

user-67d6f

Thanks for adding the impact on ESR, which should meet the need.

Would it be good to address the ones below:

Chengli Wang

Thanks for your suggestion. I suggest this wiki page focus only on the main flow involved in the VoLTE case and leave the details to the projects themselves.

I think we can refine this part once we settle the choice of vendor components.

Brian Freeman

Do we have any example documentation on the REST API calls needed by 3rd party controllers? I want to demonstrate, using the REST API Call Node, how we can sketch out the interactions, and having documentation on the types of REST calls would be useful. Something like CreateVPN, CreateVRF, UpdateVRF (setting import/export policies), etc.

Chengli Wang

I only have part of the APIs you need to refer to. As an example, we can use the API below to let the local controller know which virtual networks inside the DC need to connect with the remote DC network.

POST local-controller-ip:/v2.0/l3-dci-connects

{
  "l3-dci-connect": {
    "id": "CDD702C3-7719-4FE6-A5AD-3A9C9E265309",
    "name": "PODX-routerY",
    "description": "VPC A connect VPC B",
    "router_id": "CBB702C3-6789-1234-A5AD-3A9C9E265309",
    "local_subnets": ["8a41319d-87cf-4cd6-8957-f4a1066c63a8"],
    "local_network_all": false,
    "evpn_irts": ["1:5000"],
    "evpn_erts": ["1:5000"],
    "l3_vni": "5001"
  }
}
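As a rough illustration of how a client might assemble the request body shown above, the sketch below builds the same structure in Python and serializes it to JSON. The function name is hypothetical, generating the `id` on the client side is an assumption, and using one route target for both import and export simply mirrors the example values; no request is sent.

```python
import json
import uuid


def build_l3_dci_connect(name, router_id, local_subnets, route_target, l3_vni,
                         description=""):
    """Build a body shaped like the l3-dci-connects example (field names as posted)."""
    return {
        "l3-dci-connect": {
            # Assumption: the caller generates the identifier.
            "id": str(uuid.uuid4()).upper(),
            "name": name,
            "description": description,
            "router_id": router_id,
            "local_subnets": list(local_subnets),
            "local_network_all": False,
            # Assumption: same route target for import and export, as in the example.
            "evpn_irts": [route_target],
            "evpn_erts": [route_target],
            "l3_vni": str(l3_vni),
        }
    }


body = build_l3_dci_connect(
    name="PODX-routerY",
    router_id="CBB702C3-6789-1234-A5AD-3A9C9E265309",
    local_subnets=["8a41319d-87cf-4cd6-8957-f4a1066c63a8"],
    route_target="1:5000",
    l3_vni=5001,
    description="VPC A connect VPC B",
)
payload = json.dumps(body, indent=2)
```

Serializing through `json.dumps` also guards against the trailing-comma and stray-whitespace problems that hand-written JSON tends to pick up.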

Brian Freeman

Does this table look accurate with respect to the sources of the data? For a couple of them I wasn't sure of the source.

SDNC-G created from Data Center Name (PODX) and DC Gateway router (Y). Pertinent DC inventory should be in SDNC-G and in AAI as part of cloud site inventory/setup

Should be service provider optional coding of the string

Array of Neutron network GUIDs (it's the Neutron network, not the subnet, right?). This should come from OpenStack Neutron data. It should be available in SO from the original VIM create of the VNFs, or via a query from SDNC to MultiVIM to OpenStack to get the network data.

MACD should permit adding/deleting neutron networks

evpn_irts

import route target array

["1:5000"]

Is this part of VNF design or part of SDNCG assigned MPLS VPN Data from the WAN configuration ?

MACD should permit adding / deleting route targets

evpn_erts

export route target array

Brian Freeman

Also - would the import/export always be an Any to Any VPN or would you need to support Hub and Spoke down the road ?

Chengli Wang

For the environment that will be set up in the ONAP lab, I think edge DC to core DC (point to point) is enough.

Chengli Wang

The description can be set to any human-readable string that clarifies what the resources are used for. A and B are just examples and not critical.

For the "router_id", I think it can be queried from AAI. It should be part of the resource instances created when deploying the network services.

For the "evpn_irts" and "evpn_erts" part, maybe it can be covered by configuration. We can provide more API examples if we need to update route targets. To simplify the case in R1, we do not suggest covering that much.

Gil Bullard

In the Instantiation sequence diagram, I cannot tell where automated "Homing" would occur. Is it intended that "homing" of the VoLTE VNFs would occur as part of the "parse" step 2.3? Or is the intent that "homing" takes place as part of the lifecycle manager functionality within the VF-Cs, sometime after step 2.7?

I would think that, in order to establish the WAN, SO would need to know which Cloud Zones will host each VoLTE VNF instance, so would I be right to assume step 2.3? If so, that would mean that the SO flow would need to decompose VoLTE into its component VNFs to feed to the homing service.

If on the other hand "homing" in this use case would be expected to take place at the vIMS and vEPC levels by the respective VF-Cs, then would the WAN setup need to be moved until after the VF-C operations? I would also think that, in order to setup the WAN, SO would need to know the network assignments (IPs, L2 tags) that presumably would be assigned by the VF-C level processing?

Chengli Wang

Hi Gil, I missed the 'Homing' details in the sequence flow. Maybe we can suggest that the VF-C team keep following this issue. I think the interaction with Homing will happen after 2.13 in the instantiation diagram.

In 2.13 the VNFM sends a grant request to VF-C; VF-C then talks with Homing and gets the result indicating which VIM should be granted for deploying the VNF. Maybe Homing can select the VIM based on the location principle and the usage/availability of virtual resources of each VIM.

I think you are right; I need to update the diagram regarding the operation order of VF-C (instantiating NSs/VNFs via the S-VNFM) and SDN-C (provisioning the overlay solution), as SDN-C needs some information, such as the sub-network created by VF-C to connect the VNFs, when it provisions the overlay.
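To make the homing idea in this reply concrete, here is a minimal sketch of selecting a VIM by location and free capacity when a grant request arrives. The data layout, the `free_vcpus` metric and the function name are assumptions for illustration only; they are not the interface of any ONAP homing service.

```python
def choose_vim(vims, location, required_vcpus):
    """Pick the VIM at `location` with the most free vCPUs that fits the request.

    `vims` is a list of dicts with hypothetical keys: name, location, free_vcpus.
    Returns the VIM name, or None when no VIM at that location has capacity.
    """
    candidates = [
        vim for vim in vims
        if vim["location"] == location and vim["free_vcpus"] >= required_vcpus
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda vim: vim["free_vcpus"])["name"]
```

Given two edge VIMs with 16 and 64 free vCPUs, a request for 8 vCPUs at the edge would be granted on the larger one, and a request for 128 would return `None`.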

Gil Bullard

Attached is a deck that proposes a delineation between the "Service Orchestration" and "Resource Orchestration" functionality in the context of VoLTE. The deck illustrates this delineation using the complex example of a needed migration of a VNF instance that is shared by service instances of multiple Service Types. This example used in the deck is certainly not a R1 example or even R2, but rather is presented as a high level overview of how the delineation proposed would be manifested for complex scenarios. The example is intended to provide an overview of the delineation proposed between the Service Orchestration and Resource Orchestration functions in the context of this complex example, and is not intended to be a detailed analysis of the steps involved by ONAP in performing a VNF migration specific to the VoLTE Service and VNFs.

Gil Bullard

Team,

I posted to the Drafts for discussion sub-page an updated version of the PPT file that I quickly covered on the August 2nd "[onap-discuss] ONAP VoLTE SDC" call (10pm China Time/10am Eastern US Time). It contains a proposal on how to support this near term need that we face together.

I intend that this proposal has an advantage of minimizing rework and misalignment of Release 1 and Release 2. Note the SO functionality in this proposal aligns with that which is being proposed in the vCPE Residential Broadband Use Case.

You will note that in this PPT I have also proposed a “design time” approach that results in a “flat” VoLTE model in the Release 2 timeframe through “compile time nesting”. Please do not conclude from this that I do not appreciate the business benefit of modeling in ONAP complex, multi-tiered services. I do appreciate that need, and I agree that ONAP needs to be able to support such complex, “n-tiered” services. In fact, I am proposing in this deck one way to accomplish such support, at least in the near term, by “flattening” the orchestration of such services.

Please also do not conclude from this “flattening” proposal that I am pushing back on the concept of “upper level” run-time service orchestration flows recursively delegating to “lower level” service orchestration flows, in an “n-tier” construct. In fact, I find an attractive elegance to recursive flows like this. However, I am proposing to avoid such an “n-tier” flow approach in the near term because I do envision that such an implementation would add significant complexity to ONAP orchestration “rainy day handling”, certainly in trouble-shooting. In addition, though I would like to see ONAP eventually support “rainy day handling” for such an “n-tier” orchestration architecture, I would prefer to first develop some underlying common “rainy day handling” infrastructure that each flow level can leverage. I foresee that such a task will take some significant design work. Because of that I propose the “compile time nesting” as a way to “flatten” the orchestration and to simplify this rainy day handling, at least in the near term “Release 2” timeframe.

I look forward to others' comments and feedback.

Jie Feng

Brian Freeman, below is the ZTE WAN and SPTN Swagger API:

swagger: '2.0'
info:
  version: "1.0.0"
  title: L3VPN API
  description: |
    L3VPN API
schemes:
  - http
host: 127.0.0.1:8080
basePath: /api/sdnc/v1
paths:
  /l3vpns:
    post:
      description: |
        Create L3VPN
      consumes:
        - application/json
      parameters:
        - name: controller-id
          type: string
          in: header
          required: true
          description: Controller uuid
        - name: request
          in: body
          required: true
          schema:
            $ref: '#/definitions/L3vpnCreateList'
      responses:
        200:
          description: Create L3VPN succesful
        400:
          description: Create L3VPN failed
definitions:
  L3vpnCreateList:
    type: object
    properties:
      network-instances:
        type: array
        items:
          $ref: '#/definitions/L3vpnCreate'
      interfaces:
        type: array
        items:
          $ref: '#/definitions/NeInterface'
  L3vpnCreate:
    type: object
    properties:
      node-id:
        type: string
        description: NE ID in controller
      network-instance:
        type: array
        items:
          $ref: '#/definitions/L3vpnInstance'
  L3vpnInstance:
    type: object
    properties:
      name:
        type: string
      l3vpn:
        $ref: '#/definitions/L3vpn'
  L3vpn:
    type: object
    properties:
      route-distinguisher:
        $ref: '#/definitions/RouteDistinguisher'
      ipv4:
        $ref: '#/definitions/RouteTargetConfig'
  RouteDistinguisher:
    type: object
    properties:
      config:
        $ref: '#/definitions/RdConfig'
  RdConfig:
    type: object
    properties:
      rd:
        type: string
        description: route distinguisher, like 1:200:1
  RouteTargetConfig:
    type: object
    properties:
      unicast:
        $ref: '#/definitions/UnicastConfig'
  UnicastConfig:
    type: object
    properties:
      route-targets:
        $ref: '#/definitions/RouteTargets'
  RouteTargets:
    type: object
    properties:
      config:
        $ref: '#/definitions/RouteTargetsConfig'
  RouteTargetsConfig:
    type: object
    properties:
      rts:
        type: array
        items:
          $ref: '#/definitions/Rt'
  Rt:
    type: object
    properties:
      rt:
        type: string
        description: route target, like "1:200:1"
      rt-type:
        type: string
        description: export, import or both
  NeInterface:
    type: object
    properties:
      node-id:
        type: string
      interface:
        $ref: '#/definitions/Interface'
  Interface:
    type: object
    properties:
      name:
        type: string
      ipv4:
        $ref: '#/definitions/InterfaceIpv4'
  InterfaceIpv4:
    type: object
    properties:
      address:
        $ref: '#/definitions/IpAddress'
      bind-network-instance-name:
        type: string
        description: Name of L3VPN
  IpAddress:
    type: object
    properties:
      ip:
        type: string
      netmask:
        type: string

Json example:

{
  "network-instances": [
    {
      "node-id": "10.1.1.1",
      "network-instance": [
        {
          "name": "HL5_HL4-1",
          "l3vpn": {
            "route-distinguisher": { "config": { "rd": "0:200:1" } },
            "ipv4": {
              "unicast": {
                "route-targets": {
                  "config": {
                    "rts": [ { "rt": "0:200:1", "rt-type": "both" } ]
                  }
                }
              }
            }
          }
        }
      ]
    }
  ],
  "interfaces": [
    {
      "node-id": "10.1.1.1",
      "interface": [
        {
          "name": "gei_1/2",
          "ipv4": {
            "address": { "ip": "20.1.1.1", "netmask": "255.255.255.0" },
            "bind-network-instance-name": "VRF:HL5_HL4-1"
          }
        }
      ]
    }
  ]
}

Bertrand Low

Hi there, I would appreciate clarification for the following questions. Thanks in advance.

1) Is there a repository for the complete list of resources involved in this blueprint?

2) Related to above, are there Directed Graphs specifically for VoLTE?

3) Where is the volte-api used between the SO and SDNC? Has it been combined with generic-resource-api?

4) How does the 3rd party external controller register with ONAP? Is there a particular component that it needs to register with? Does SDNC communicate with it via the RestapiCall plugin?