Keywords: centralized, role-based access, tracking, streaming, reporting

| Feature / Requirement | Description |
|---|---|
| F1 | Centralized logs: all logs are streamed to one place, ready for search, visualization and reporting |
| F2 | Role-based access: logs are accessible from outside the container, with no need to SSH into a pod or tail each microservice's logs |
| F3 | Tracking: if a unique ID is passed across microservices, a transaction's pass/failure can be traced through the system; requestID, InvocationID and Timestamp are indexed |
| F4 | Reporting: track multiple transaction patterns and surface emergent or correlated behavior |
| F5 | Control over log content: collect, index, filter |
| F6 | Machine-readable, JSON-oriented Elasticsearch storage of logs |
| R1 | Logs share the same (currently 29-field) format |
| R2 | The logging library requires minimal changes for use: Spring AOP provides MARKER entry/exit logs without code changes |
| R3 | Logs are streamed to a central ELK stack |
| R4 | The ELK stack provides tracing, dashboards and a query API |

ONAP consists of many components and containers, and consequently writes to many logfiles. The volume of logger output may be enormous, especially when debugging. Large, disparate logfiles are difficult to monitor and analyze, and tracing requests across many files, file systems and containers is untenable without tooling.

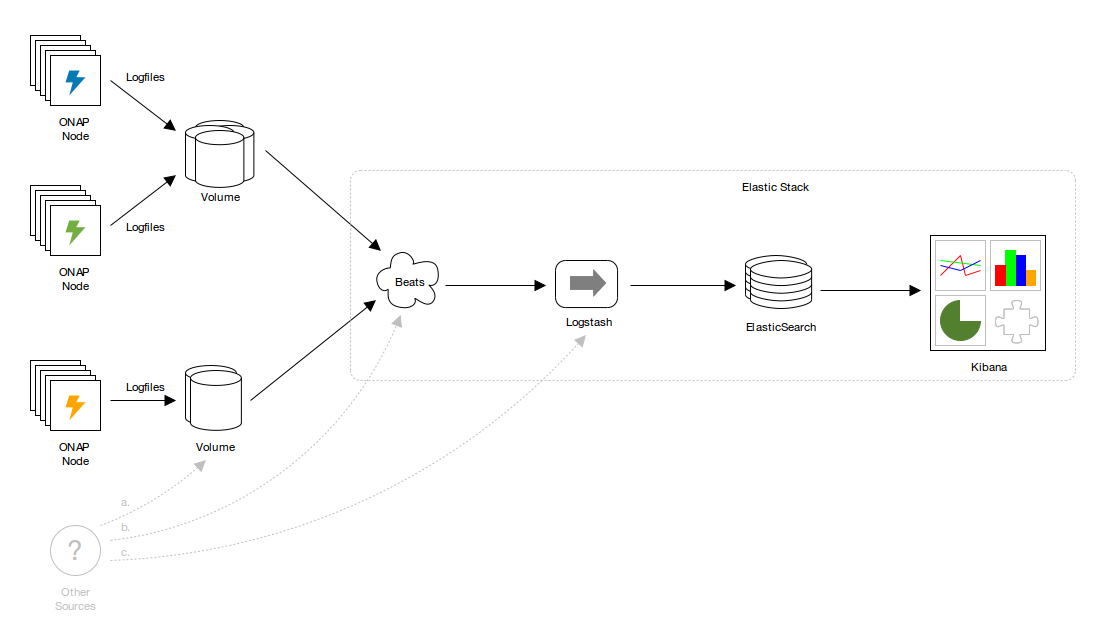

The problem of decentralized logger output is addressed by analytics pipelines such as Elastic Stack (ELK). Elastic Stack consumes logs, indexes their contents in Elasticsearch, and makes them accessible, queryable and navigable via a sophisticated UI, Kibana Discover. This elevates the importance of standardization and machine-readability. Logfiles can remain browsable, but output can be simplified.

Logger configurations in ONAP are diverse and idiosyncratic. Addressing these issues will prevent costs from being externalized to consumers such as analytics. It also affords the opportunity to remedy any issues with the handling and propagation of contextual information such as transaction identifiers (presently passed as X-ONAP-RequestID). This propagation is critical to tracing requests as they traverse ONAP and related systems, and is the basis for many analytics functions.

Rationalized logger configuration and output also paves the way for other high-performance logger transports, including publishing directly to analytics via SYSLOG (RFC3164, RFC5425, RFC5426) and streams, and mechanisms for durability.

Each change is believed to be individually beneficial:

In scope:

Out of scope:

All changes augment ONAP application logging guidelines on the ONAP wiki.

Commits go to two repositories:

https://gerrit.onap.org/r/#/q/status:merged+project:+logging-analytics

https://gerrit.onap.org/r/#/q/status:merged+project:+oom

All logfiles to default to locations beneath /var/log, and beneath /var/log/ONAP in the case of core ONAP components.

All logfile locations namespaced by component and (if applicable) subcomponent by default:

`/var/log/ONAP/<component>[/<subcomponent>]/*.log`

All logger provider configuration documents to default to locations beneath /etc/onap.

All logger provider configuration document locations namespaced by component and (if applicable) subcomponent by default:

`/etc/onap/<component>[/<subcomponent>]/<provider>.xml`
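A minimal Java sketch of the two default-location conventions above, assuming a POSIX filesystem. The class and method names (`OnapLogLocations`, `logDirectory`, `configDocument`) are hypothetical; only the directory layout comes from the text.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: derives default locations from component/subcomponent.
final class OnapLogLocations {
    static final Path LOG_ROOT = Paths.get("/var/log/ONAP");
    static final Path CONFIG_ROOT = Paths.get("/etc/onap");

    // Namespaces a root directory by component and, if present, subcomponent.
    private static Path namespaced(Path root, String component, String subcomponent) {
        Path dir = root.resolve(component);
        return (subcomponent == null || subcomponent.isEmpty()) ? dir : dir.resolve(subcomponent);
    }

    // Directory that holds the component's *.log files.
    static Path logDirectory(String component, String subcomponent) {
        return namespaced(LOG_ROOT, component, subcomponent);
    }

    // Logger provider configuration document, e.g. provider "logback" -> logback.xml.
    static Path configDocument(String component, String subcomponent, String provider) {
        return namespaced(CONFIG_ROOT, component, subcomponent).resolve(provider + ".xml");
    }

    public static void main(String[] args) {
        System.out.println(logDirectory("component1", "subcomponent1"));
        System.out.println(configDocument("component1", null, "logback"));
    }
}
```

On a POSIX system this prints `/var/log/ONAP/component1/subcomponent1` and `/etc/onap/component1/logback.xml`. Deriving both paths from the same two names is what lets shippers and operators find logs and configuration without per-component knowledge.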

With the various simplifications discussed in this document, the logger provider configuration for most components can be rationalized.

Proposals include:

A reductive example for the Logback provider, omitting some finer details:

```xml
<configuration scan="true" scanPeriod="5 seconds" debug="false">
  <property name="component" value="component1"></property>
  <property name="subcomponent" value="subcomponent1"></property>
  <property name="outputDirectory" value="/var/log/ONAP/${component}/${subcomponent}" />
  <property name="outputFilename" value="all" />
  <!-- Tab-delimited fields; tabs and newlines inside values are escaped so each entry stays on one line.
       %nopexception stops Logback appending its own unescaped stack trace; the escaped %rootException column carries it instead. -->
  <property name="defaultPattern" value="%nopexception%logger
\t%date{yyyy-MM-dd'T'HH:mm:ss.SSSXXX,UTC}
\t%level
\t%replace(%replace(%message){'\t','\\\\t'}){'\n','\\\\n'}
\t%replace(%replace(%mdc){'\t','\\\\t'}){'\n','\\\\n'}
\t%replace(%replace(%rootException){'\t','\\\\t'}){'\n','\\\\n'}
\t%replace(%replace(%marker){'\t','\\\\t'}){'\n','\\\\n'}
\t%thread
\t%n"/>
  <property name="queueSize" value="256"/>
  <property name="maxFileSize" value="50MB"/>
  <property name="maxHistory" value="30"/>
  <property name="totalSizeCap" value="10GB"/>

  <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
    <encoder>
      <charset>UTF-8</charset>
      <pattern>${defaultPattern}</pattern>
    </encoder>
  </appender>

  <appender name="consoleAsync" class="ch.qos.logback.classic.AsyncAppender">
    <queueSize>${queueSize}</queueSize>
    <appender-ref ref="console" />
  </appender>

  <appender name="file" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>${outputDirectory}/${outputFilename}.log</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
      <fileNamePattern>${outputDirectory}/${outputFilename}.%d{yyyy-MM-dd}.%i.log.zip</fileNamePattern>
      <maxFileSize>${maxFileSize}</maxFileSize>
      <maxHistory>${maxHistory}</maxHistory>
      <totalSizeCap>${totalSizeCap}</totalSizeCap>
    </rollingPolicy>
    <encoder>
      <charset>UTF-8</charset>
      <pattern>${defaultPattern}</pattern>
    </encoder>
  </appender>

  <appender name="fileAsync" class="ch.qos.logback.classic.AsyncAppender">
    <queueSize>${queueSize}</queueSize>
    <appender-ref ref="file" />
  </appender>

  <!-- The root logger accepts only a level; additivity does not apply to it. -->
  <root level="info">
    <appender-ref ref="consoleAsync"/>
    <!--<appender-ref ref="fileAsync"/>-->
  </root>

  <logger name="org.onap.example.component1.subcomponent2" level="debug" additivity="false">
    <appender-ref ref="fileAsync" />
  </logger>
</configuration>
```

Encourage Logback for Java-based components, porting any components that agree to switch.

All configuration locations to be overridable by a system or file property passed to (or read by) the JVM. (No specific convention is suggested, because choosing one would only divert attention from the importance of having a convention.)
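One possible shape for such an override, sketched in plain Java: the JVM-supplied property wins, otherwise the `/etc/onap` default applies. The property name `onap.logging.config` and the class `ConfigLocationResolver` are hypothetical placeholders, since the text deliberately leaves the convention open. (For Logback itself, the standard `logback.configurationFile` system property already plays this role.)

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical resolver: system property override first, default location second.
final class ConfigLocationResolver {
    static final String OVERRIDE_PROPERTY = "onap.logging.config"; // hypothetical name

    static Path resolve(String component, String subcomponent, String provider) {
        String override = System.getProperty(OVERRIDE_PROPERTY);
        if (override != null && !override.isBlank()) {
            return Paths.get(override); // explicit override passed to the JVM
        }
        // Default: /etc/onap/<component>[/<subcomponent>]/<provider>.xml
        Path dir = Paths.get("/etc/onap", component);
        if (subcomponent != null && !subcomponent.isEmpty()) {
            dir = dir.resolve(subcomponent);
        }
        return dir.resolve(provider + ".xml");
    }

    public static void main(String[] args) {
        // e.g. java -Donap.logging.config=/tmp/logback-debug.xml ConfigLocationResolver
        System.out.println(resolve("component1", "subcomponent1", "logback"));
    }
}
```

Reading a file-based property instead would only change where `override` comes from; the fallback logic stays the same.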

We've already discussed key aspects of extensibility:

The MDCs in ONAP application logging guidelines are closely matched to existing MDC usage in seed ONAP components, but there may be arbitrarily many others, and there is no need to list them in configuration. Itemizing them would mean changing every configuration document whenever a new MDC is introduced.

The change is to serialize MDCs in the Logback provider `<pattern/>` with a single expression that emits whatever is present:

`%replace(%replace(%mdc){'\t','\\\\t'}){'\n','\\\\n'}`
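The following plain-Java sketch approximates what that single expression emits, without depending on Logback: every entry present, rendered as `key=value` pairs joined by `, ` (the shape visible in the sample output further down), with real tabs and newlines turned into the two-character sequences `\t` and `\n`. `MdcField` is a hypothetical name; this mimics, but is not, Logback's own converter.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical approximation of %replace(%replace(%mdc){tab}){newline}.
final class MdcField {
    // Replace real tab/newline characters with backslash-t / backslash-n.
    static String escape(String s) {
        return s.replace("\t", "\\t").replace("\n", "\\n");
    }

    // Serialize whatever entries are present; no per-key configuration needed.
    static String serialize(Map<String, String> mdc) {
        StringJoiner joiner = new StringJoiner(", ");
        mdc.forEach((k, v) -> joiner.add(k + "=" + v));
        return escape(joiner.toString());
    }

    public static void main(String[] args) {
        Map<String, String> mdc = new LinkedHashMap<>();
        mdc.put("RequestID", "abc-123");
        mdc.put("key3", "value3\nwith\nnewlines");
        System.out.println(serialize(mdc)); // RequestID=abc-123, key3=value3\nwith\nnewlines
    }
}
```

Because the serializer iterates over whatever the map holds, a newly introduced MDC shows up in the output with no configuration change.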

Markers can be used to characterize log entries. Their use is widespread, and future ONAP components and/or customizations might emit Markers with their log messages. If and when they do, we want them indexed for analytics and, as with MDCs, we don't want to have to declare them in logger provider configuration in advance.

The change is to add the serialization of Markers to `<pattern/>` in the Logback provider configuration:

`%replace(%replace(%marker){'\t','\\\\t'}){'\n','\\\\n'}`
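As a hypothetical illustration of the entry/exit markers mentioned in requirement R2, the sketch below wraps a call in plain Java, standing in for Spring AOP around-advice. The `ENTRY`/`EXIT` marker names, the `MarkerTrace` class and the four-column line are assumptions made for brevity, not the full ONAP layout.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical stand-in for around-advice that tags entry/exit lines with markers.
final class MarkerTrace {
    enum Marker { ENTRY, EXIT }

    // Captured lines: logger \t level \t message \t marker (a subset of the real layout).
    static final List<String> LINES = new ArrayList<>();

    private static void log(String logger, Marker marker, String message) {
        // Escape tabs/newlines so the message stays in its own column.
        String safe = message.replace("\t", "\\t").replace("\n", "\\n");
        LINES.add(String.join("\t", logger, "INFO", safe, marker.name()));
    }

    // Runs the call between ENTRY and EXIT lines; EXIT is logged even if the call throws.
    static <T> T traced(String logger, String method, Supplier<T> call) {
        log(logger, Marker.ENTRY, "Entering " + method);
        try {
            return call.get();
        } finally {
            log(logger, Marker.EXIT, "Exiting " + method);
        }
    }

    public static void main(String[] args) {
        int sum = traced("org.onap.example.component1.Calc", "add", () -> 2 + 3);
        System.out.println(sum);
        LINES.forEach(System.out::println);
    }
}
```

The marker simply becomes another column value, so a new marker name needs no provider configuration to be indexed.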

Regularizing output locations and line format simplifies shipping and indexing of logs, and enables many options for analytics. See Default logfile locations, MDCs, Markers, Machine-readable output, and Elastic Stack reference configuration.

Shipper and indexing performance and durability depend on logs that can be parsed quickly and reliably.

The proposal is:

For example (tab- and newline-separated, MDCs, a nested exception, a marker, with newlines self-consciously added between each attribute for readability):

```
org.onap.example.component1.subcomponent1.LogbackTest
\t2017-06-06T16:09:03.594Z
\tERROR
\tHere's an error, that's usually bad
\tkey1=value1, key2=value2 with space, key5=value5"with"quotes, key3=value3\nwith\nnewlines, key4=value4\twith\ttabs
\tjava.lang.RuntimeException: Here's Johnny \n\tat org.onap.example.component1.subcomponent1.LogbackTest.main(LogbackTest.java:24) \nWrapped by: java.lang.RuntimeException: Little pigs, little pigs, let me come in \n\tat org.onap.example.component1.subcomponent1.LogbackTest.main(LogbackTest.java:27)
\tAMarker1
\tmain
```
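To show why this escaping matters to a shipper, here is a minimal Java sketch that parses one raw line: with tabs escaped inside values, splitting on real tabs always yields exactly one value per pattern column. The column labels and the `LogLineParser` name are hypothetical; `trim()` drops the space that precedes each tab in the sample above. Note that the escaping is not fully reversible: a value that already contained a literal backslash-t is unescaped to a tab.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical parser for the tab-delimited line format.
final class LogLineParser {
    // Labels for the pattern's columns, in order.
    static final String[] FIELDS = {
        "logger", "timestamp", "level", "message", "mdc", "exception", "marker", "thread"
    };

    // Reverse the \t and \n escaping applied by %replace.
    static String unescape(String s) {
        return s.replace("\\n", "\n").replace("\\t", "\t");
    }

    static Map<String, String> parse(String line) {
        // The pattern ends with "\t%n": drop trailing whitespace, then split on real tabs.
        String[] parts = line.stripTrailing().split("\t", -1);
        if (parts.length != FIELDS.length) {
            throw new IllegalArgumentException("expected " + FIELDS.length + " fields, got " + parts.length);
        }
        Map<String, String> out = new LinkedHashMap<>();
        for (int i = 0; i < FIELDS.length; i++) {
            out.put(FIELDS[i], unescape(parts[i].trim()));
        }
        return out;
    }

    public static void main(String[] args) {
        String line = "org.onap.example.component1.subcomponent1.LogbackTest \t2017-06-06T16:09:03.594Z"
                + " \tERROR \tHere's an error, that's usually bad \tkey1=value1, key2=value2 with space"
                + " \t \tAMarker1 \tmain \t\n";
        Map<String, String> fields = parse(line);
        System.out.println(fields.get("level") + " | " + fields.get("marker") + " | " + fields.get("thread"));
    }
}
```

Rejecting lines with the wrong field count, rather than guessing, keeps malformed input from silently shifting values into the wrong index fields.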

On the basis of regularized provider configuration and output, and the work of the ONAP Operations Manager project, a complete Elastic Stack pipeline can be deployed automatically.

This achieves two things:

The proposal is:

Reliable propagation of transaction identifiers is critical to tracing requests through ONAP.

This is normally achieved:

This also requires the generation of transaction IDs in initiating components.

Update X-ECOMP-RequestID to X-ONAP-RequestID throughout.
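A minimal sketch of transaction-ID handling at a component boundary, assuming Java 11+ (`java.net.http`): reuse the inbound `X-ONAP-RequestID` if present, generate one if this component initiates the transaction, and copy it onto outbound requests. The `RequestIdPropagation` class is hypothetical, and a `ThreadLocal` stands in for the logging provider's MDC. Header lookup here is case-sensitive for brevity, whereas HTTP header names are case-insensitive.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.Map;
import java.util.Optional;
import java.util.UUID;

// Hypothetical propagation helper; ThreadLocal stands in for the MDC.
final class RequestIdPropagation {
    static final String HEADER = "X-ONAP-RequestID";
    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();

    // Inbound: reuse the caller's ID, or generate one when initiating a transaction.
    static String accept(Map<String, String> inboundHeaders) {
        String id = Optional.ofNullable(inboundHeaders.get(HEADER))
                .filter(s -> !s.isBlank())
                .orElseGet(() -> UUID.randomUUID().toString());
        CURRENT.set(id);
        return id;
    }

    // Outbound: copy the current ID onto the downstream request (call accept() first).
    static HttpRequest outbound(URI target) {
        return HttpRequest.newBuilder(target)
                .header(HEADER, CURRENT.get())
                .GET()
                .build();
    }

    // Clear at the end of the request so pooled threads don't leak IDs.
    static void clear() {
        CURRENT.remove();
    }

    public static void main(String[] args) {
        String id = accept(Map.of(HEADER, "abc-123"));
        HttpRequest req = outbound(URI.create("http://example.invalid/downstream"));
        System.out.println(id + " -> " + req.headers().firstValue(HEADER).orElse("missing"));
        clear();
    }
}
```

Building the request does not send it, so the sketch runs without a network; a real component would also put the same ID into the MDC so that every log line carries it.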

Existing logging guidelines:

Logging providers:

EELF:

Elastic Stack:

ONAP Operations Manager: ONAP Operations Manager Project

OPNFV: https://wiki.opnfv.org/display/doctor

Kubernetes: https://kubernetes.io/docs/concepts/cluster-administration/logging/