Kubernetes clusters using ONAP Multicloud K8s Plugin Project for R4/R5 do not report hardware features to A&AI.

Consequently, during the CNF/VNF life cycle, CNFs/VNFs that require or recommend specific hardware at instantiation cannot be dynamically placed on the cluster and node that provide the needed hardware capabilities.

This Frankfurt Release epic () adds the ability to discover, report, and use hardware features during the CNF/VNF life cycle to accelerate use cases using ONAP.

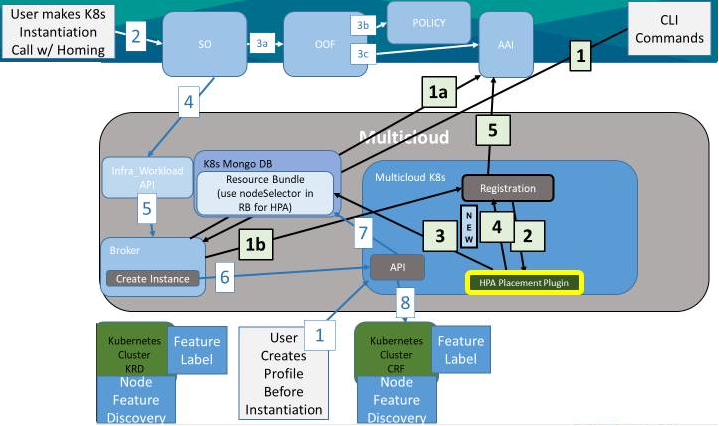

The figure shows a K8s cluster registering with the K8s plugin, during which the cluster's capabilities are transmitted.

Discovery of HPA features will be done using the Node Feature Discovery (NFD) add-on for Kubernetes (https://github.com/kubernetes-sigs/node-feature-discovery). NFD will be deployed as a DaemonSet, with a pod on each node, and will discover hardware features on each Kubernetes node and label the node with feature labels (https://github.com/kubernetes-sigs/node-feature-discovery#feature-labels).

For SR-IOV, add the Network Controller class "0200" to the NFD-Worker config file at deployment so that network cards on the nodes are labeled.

For HPA features not labeled by NFD, investigate the creation of Feature Detector Hooks (User Specific Features).

The green flow in the figure registers HPA features with A&AI.

Registration of the K8s cluster will happen in the ESR UI (see https://onap.readthedocs.io/en/latest/guides/onap-user/cloud_site/openstack/index.html and use k8s for cloud-type). ESR will then send a POST request to:

http://{{MSB_IP}}:{{MSB_PORT}}/api/v1/{{cloud-owner}}/{{cloud-region-id}}/registry

The Multicloud K8s Plugin registrationHandler will receive this POST and register the new Kubernetes cluster with the K8s Plugin using the connectivity code/API.
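As a minimal sketch of the registration call, the following Python builds the URL from the template quoted above and prepares a POST request without sending it. The function names are hypothetical, and the request body is a placeholder: what ESR actually sends is an open question on this page.

```python
import json
import urllib.request


def registry_url(msb_ip: str, msb_port: int, cloud_owner: str, cloud_region_id: str) -> str:
    """Fill in the registry URL template used for cluster registration."""
    return f"http://{msb_ip}:{msb_port}/api/v1/{cloud_owner}/{cloud_region_id}/registry"


def build_registration_request(url: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) the POST request.

    The payload is a placeholder; the real ESR body is not specified here.
    """
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # Example values only; substitute the real MSB address and cloud identifiers.
    url = registry_url("10.0.0.1", 30280, "k8s-owner", "k8s-region-1")
    request = build_registration_request(url, {})
    print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would send it; that step is left out so the sketch can be run without a live MSB.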

We need to check what is sent from ESR to the registry URL. Currently the K8s Plugin uses a kubeconfig file to access different K8s clusters. We will either need to generate a kubeconfig file (does ESR send enough information to do this? If not, we might need to extend ESR) or access the cluster using another form of authentication (check the K8s code to see whether this is possible, perhaps with an auth token).

The registrationHandler will then query the k8s cluster under registration using the Node App or Node Plugin code to get a list of nodes and the labels for each node.
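The node query can be illustrated with a short Python sketch that takes a Kubernetes node list in its JSON form (the shape returned by `kubectl get nodes -o json`) and keeps only the NFD feature labels for each node. The function name is hypothetical and this is not the plugin's actual Node App or Node Plugin code.

```python
from typing import Dict

# Prefix used by NFD for the feature labels shown on this page.
NFD_PREFIX = "feature.node.kubernetes.io/"


def nfd_labels_by_node(node_list: dict) -> Dict[str, Dict[str, str]]:
    """Map each node name to the subset of its labels created by NFD."""
    result: Dict[str, Dict[str, str]] = {}
    for item in node_list.get("items", []):
        metadata = item.get("metadata", {})
        labels = metadata.get("labels") or {}
        result[metadata.get("name", "")] = {
            key: value for key, value in labels.items() if key.startswith(NFD_PREFIX)
        }
    return result


if __name__ == "__main__":
    # Hand-written sample in the NodeList JSON shape; not real cluster output.
    sample = {
        "items": [
            {
                "metadata": {
                    "name": "node-1",
                    "labels": {
                        "kubernetes.io/hostname": "node-1",
                        "feature.node.kubernetes.io/network-sriov.capable": "true",
                    },
                }
            }
        ]
    }
    print(nfd_labels_by_node(sample))
```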

Reporting of HPA features to A&AI is done by the registrationHandler. The node data will be mapped to the cloud-region/tenant/flavors schema for that cluster and reported to A&AI. The registrationHandler plans to use the A&AI module under development for Frankfurt to do this: it will send a request to the A&AI module instructing it to update the cloud-region, create a tenant, and create flavors that match the node feature labels in that cloud-region.

Convert node feature labels to flavor properties

The table below is an example of how to convert node feature labels to flavor properties.

| Node feature label | OpenStack flavor properties |
|---|---|
| feature.node.kubernetes.io/network-sriov.capable=True<br>feature.node.kubernetes.io/network-sriov.configured=True<br>feature.node.kubernetes.io/pci-1200_8086.present=True | Comments: The other parameters can be set to defaults because the node feature labels cannot provide them. |

The latest mapping table is available in HPA Policies and Mappings + K8s.
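To make the conversion step more concrete, here is a hedged Python sketch that extracts the information carried by the three labels in the table above. It assumes PCI labels use the `pci-<class>_<vendor>.present` layout seen in `pci-1200_8086.present`. The output keys (`sriov_capable`, `sriov_configured`, `pci_devices`) are hypothetical intermediate names, not the real flavor property schema, which is defined by the mapping table referenced above.

```python
import re
from typing import Dict, Optional, Tuple

NFD_PREFIX = "feature.node.kubernetes.io/"
# Matches names such as "pci-1200_8086.present" (class 1200, vendor 8086).
_PCI_NAME = re.compile(r"^pci-([0-9a-fA-F]{4})_([0-9a-fA-F]{4})\.present$")


def parse_pci_name(name: str) -> Optional[Tuple[str, str]]:
    """Return (class, vendor) for a PCI feature name, or None if it is not one."""
    match = _PCI_NAME.match(name)
    return (match.group(1), match.group(2)) if match else None


def flavor_hints(labels: Dict[str, str]) -> Dict[str, object]:
    """Collect what the labels can tell us; key names here are hypothetical."""
    hints: Dict[str, object] = {
        "sriov_capable": False,
        "sriov_configured": False,
        "pci_devices": [],
    }
    for key, value in sorted(labels.items()):
        # Ignore non-NFD labels and labels whose value is not true/True.
        if not key.startswith(NFD_PREFIX) or value.strip().lower() != "true":
            continue
        name = key[len(NFD_PREFIX):]
        if name == "network-sriov.capable":
            hints["sriov_capable"] = True
        elif name == "network-sriov.configured":
            hints["sriov_configured"] = True
        else:
            pci = parse_pci_name(name)
            if pci is not None:
                hints["pci_devices"].append({"class": pci[0], "vendor": pci[1]})
    return hints


if __name__ == "__main__":
    example = {
        "feature.node.kubernetes.io/network-sriov.capable": "True",
        "feature.node.kubernetes.io/network-sriov.configured": "True",
        "feature.node.kubernetes.io/pci-1200_8086.present": "True",
    }
    print(flavor_hints(example))
```

Any flavor parameter that the labels cannot supply would still have to be filled with a default, as the table comment notes.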

CNF and VNF helm charts will be updated with nodeSelector requirements & recommendations in line with node labels created by NFD during feature discovery.

Example Node Features:

```
# Hardware Feature Labels:
feature.node.kubernetes.io/cpu-cpuid.AESNI=true,
feature.node.kubernetes.io/cpu-cpuid.AVX2=true,
feature.node.kubernetes.io/cpu-cpuid.AVX=true,
feature.node.kubernetes.io/cpu-cpuid.FMA3=true,
feature.node.kubernetes.io/cpu-cpuid.IBPB=true,
feature.node.kubernetes.io/cpu-cpuid.STIBP=true,
feature.node.kubernetes.io/cpu-hardware_multithreading=true,
feature.node.kubernetes.io/cpu-pstate.turbo=true,
feature.node.kubernetes.io/cpu-rdt.RDTCMT=true,
feature.node.kubernetes.io/cpu-rdt.RDTMON=true,
feature.node.kubernetes.io/memory-numa=true,
feature.node.kubernetes.io/network-sriov.capable=true,
feature.node.kubernetes.io/pci-0300_102b.present=true, # GPU Present
feature.node.kubernetes.io/pci-0200_8086.present=true, # Network Card Present
feature.node.kubernetes.io/storage-nonrotationaldisk=true

# Software Feature Labels:
feature.node.kubernetes.io/kernel-config.NO_HZ=true,
feature.node.kubernetes.io/kernel-config.NO_HZ_FULL=true,
feature.node.kubernetes.io/kernel-version.full=3.10.0-957.el7.x86_64,
feature.node.kubernetes.io/kernel-version.major=3,
feature.node.kubernetes.io/kernel-version.minor=10,
feature.node.kubernetes.io/kernel-version.revision=0,
feature.node.kubernetes.io/system-os_release.ID=centos,
feature.node.kubernetes.io/system-os_release.VERSION_ID.major=7,
feature.node.kubernetes.io/system-os_release.VERSION_ID.minor=,
feature.node.kubernetes.io/system-os_release.VERSION_ID=7
```
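Using labels like those listed above, the following Python sketch shows one way the scheduling part of a pod spec could be generated for a chart. In Kubernetes a plain `nodeSelector` is a hard requirement, so the sketch expresses recommendations with preferred node affinity instead; that choice, the function name, and the fixed weight of 1 are assumptions, not something this page prescribes.

```python
import json
from typing import Dict


def scheduling_fragment(required: Dict[str, str], recommended: Dict[str, str]) -> dict:
    """Build the nodeSelector/affinity part of a pod spec from NFD labels."""
    fragment: dict = {}
    if required:
        # Hard requirement: the pod is only scheduled on nodes with these labels.
        fragment["nodeSelector"] = dict(required)
    if recommended:
        # Soft preference: the scheduler favors matching nodes but may use others.
        fragment["affinity"] = {
            "nodeAffinity": {
                "preferredDuringSchedulingIgnoredDuringExecution": [
                    {
                        "weight": 1,
                        "preference": {
                            "matchExpressions": [
                                {"key": key, "operator": "In", "values": [value]}
                            ]
                        },
                    }
                    for key, value in sorted(recommended.items())
                ]
            }
        }
    return fragment


if __name__ == "__main__":
    spec = scheduling_fragment(
        required={"feature.node.kubernetes.io/network-sriov.capable": "true"},
        recommended={"feature.node.kubernetes.io/cpu-cpuid.AVX2": "true"},
    )
    print(json.dumps(spec, indent=2))
```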

Where needed, add resource requests and limits to the ResourceBundle charts, for example:

```yaml
resources:
  requests:
    memory: "64Mi"
    cpu: "250m"
  limits:
    memory: "128Mi"
    cpu: "500m"
```

https://github.com/onap/multicloud-k8s/tree/master/kud/demo/firewall

https://github.com/onap/multicloud-k8s/tree/master/kud/tests/vnfs/edgex/helm/edgex

For CPU pinning and similar features, investigate CMK (CPU Manager for Kubernetes; see the links at the bottom of the page) and specifying a nodeSelector of "cmk.intel.com/cmk-node": "true".

See Deploying vFw and EdgeXFoundry Services on Kubernets Cluster with ONAP for the K8s instantiation flow. See VFW deployment with HPA (https://docs.onap.org/en/latest/submodules/integration.git/docs/docs_vfwHPA.html?highlight=hpa) for the HPA use case.

If we follow the process for deploying vFW for K8s on ONAP, the only difference for HPA would be the addition of homing (see the VFW deployment with HPA docs above) to the SO instantiation request. This enables a call to OOF, which uses A&AI and Policy to select the correct cluster.

Policy and OOF will use the same path to discover the capabilities of K8s nodes as is used for OpenStack. We are using the same data model (OpenStack cloud-region/tenant/flavors) from A&AI. OOF will use A&AI information to home particular CNF workloads to cloud-regions that contain the requested features. When the K8s plugin instantiates these workloads, Kubernetes will read the nodeSelector preferences and place each workload on nodes that contain the features needed to accelerate its function.
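The homing idea can be illustrated with a deliberately simplified Python sketch: a cloud-region is a candidate if at least one of its flavors offers every requested feature. This is not OOF's algorithm or the A&AI data model; the function name, the feature strings, and the representation of flavors as sets of feature names are all assumptions made for illustration.

```python
from typing import Dict, List, Set


def candidate_regions(region_flavors: Dict[str, List[Set[str]]], required: Set[str]) -> List[str]:
    """Return the cloud-regions with at least one flavor covering all required features."""
    return sorted(
        region
        for region, flavors in region_flavors.items()
        if any(required <= features for features in flavors)
    )


if __name__ == "__main__":
    # Hypothetical inventory: region id -> list of flavors, each a set of features.
    inventory = {
        "k8s-region-1": [{"network-sriov.capable", "cpu-cpuid.AVX2"}],
        "k8s-region-2": [{"cpu-cpuid.AVX2"}],
    }
    print(candidate_regions(inventory, {"network-sriov.capable"}))
```

Once a cluster has been chosen this way, node-level placement inside the cluster is left to Kubernetes through the nodeSelector settings in the charts.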

There are currently no known gaps in OOF/Policy that would prevent this from working.

Background Links:

Deploying vFw and EdgeXFoundry Services on Kubernets Cluster with ONAP

vFW K8S examples mapping to AAI

Setting up Closed Loop for K8S vFW - initial pass

https://github.com/intel/CPU-Manager-for-Kubernetes

https://github.com/kubernetes-sigs/node-feature-discovery

https://kubernetes.io/docs/tasks/configure-pod-container/assign-cpu-resource/

https://kubernetes.io/docs/tasks/administer-cluster/topology-manager/

https://kubernetes.io/docs/tasks/configure-pod-container/assign-memory-resource/

https://kubernetes.io/docs/tasks/administer-cluster/extended-resource-node/