
Developer Setup

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

cd oom/kubernetes

# do a make if anything is modified in your charts

sudo make all

#sudo make onap

ubuntu@ip-172-31-19-23:~/oom/kubernetes$ sudo helm upgrade -i onap local/onap --namespace onap --set log.enabled=false

# wait and check in another terminal for all containers to terminate

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-log

onap onap-log-elasticsearch-7557486bc4-5mng9 0/1 CrashLoopBackOff 9 29m

onap onap-log-kibana-fc88b6b79-nt7sd 1/1 Running 0 35m

onap onap-log-logstash-c5z4d 1/1 Terminating 0 4h

onap onap-log-logstash-ftxfz 1/1 Terminating 0 4h

onap onap-log-logstash-gl59m 1/1 Terminating 0 4h

onap onap-log-logstash-nxsf8 1/1 Terminating 0 4h

onap onap-log-logstash-w8q8m 1/1 Terminating 0 4h

sudo helm upgrade -i onap local/onap --namespace onap --set portal.enabled=false

sudo vi portal/charts/portal-sdk/resources/config/deliveries/properties/ONAPPORTALSDK/logback.xml

sudo make portal

sudo make onap

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-log

sudo helm upgrade -i onap local/onap --namespace onap --set log.enabled=true

sudo helm upgrade -i onap local/onap --namespace onap --set portal.enabled=true

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-log

onap onap-log-elasticsearch-7557486bc4-2jd65 0/1 Init:0/1 0 31s

onap onap-log-kibana-fc88b6b79-5xqg4 0/1 Init:0/1 0 31s

onap onap-log-logstash-5vq82 0/1 Init:0/1 0 31s

onap onap-log-logstash-gvr9z 0/1 Init:0/1 0 31s

onap onap-log-logstash-qqzq5 0/1 Init:0/1 0 31s

onap onap-log-logstash-vbp2x 0/1 Init:0/1 0 31s

onap onap-log-logstash-wr9rd 0/1 Init:0/1 0 31s

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-portal

onap onap-portal-app-8486dc7ff8-nbps7 0/2 Init:0/1 0 9m

onap onap-portal-cassandra-8588fbd698-4wthv 1/1 Running 0 9m

onap onap-portal-db-7d6b95cd94-9x4kf 0/1 Running 0 9m

onap onap-portal-db-config-dpqkq 0/2 Init:0/1 0 9m

onap onap-portal-sdk-77cd558c98-5255r 0/2 Init:0/1 0 9m

onap onap-portal-widget-6469f4bc56-g8s62 0/1 Init:0/1 0 9m

onap onap-portal-zookeeper-5d8c598c4c-czpnz 1/1 Running 0 9m |
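The "wait and check in another terminal" step in the session above can be scripted as a polling loop. The following is a minimal sketch, not part of the OOM tooling: `wait_until_gone` and `list_pods` are hypothetical names, and `list_pods` is stubbed here so the loop can be run without a cluster. On a real system it would presumably wrap `kubectl get pods --all-namespaces`.

```shell
# Stub for demonstration only: pretends the logstash pod disappears on the third poll.
# On a real cluster this would be: list_pods() { kubectl get pods --all-namespaces; }
polls_file=$(mktemp)
echo 0 > "$polls_file"
list_pods() {
  n=$(cat "$polls_file")
  n=$((n + 1))
  echo "$n" > "$polls_file"
  if [ "$n" -lt 3 ]; then
    echo "onap onap-log-logstash-c5z4d 1/1 Terminating 0 4h"
  fi
  echo "onap onap-portal-db-7d6b95cd94-9x4kf 0/1 Running 0 9m"
}

# wait_until_gone PATTERN [TRIES] [DELAY_SECONDS]
# Polls list_pods until no line matches PATTERN; returns 1 if TRIES is exhausted.
wait_until_gone() {
  pattern=$1
  tries=${2:-60}
  delay=${3:-5}
  i=0
  while [ "$i" -lt "$tries" ]; do
    if ! list_pods | grep "$pattern" > /dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

result=timeout
if wait_until_gone onap-log 10 0; then
  result=gone
fi
polls=$(cat "$polls_file")
echo "$result after $polls polls"
```

Once the loop returns successfully, the follow-up `helm upgrade ... --set log.enabled=true` could be issued from the same script instead of a second terminal.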

Downgrade docker if required

| Code Block |

|---|

sudo apt-get autoremove -y docker-engine |

Change max-pods from default 110 pod limit

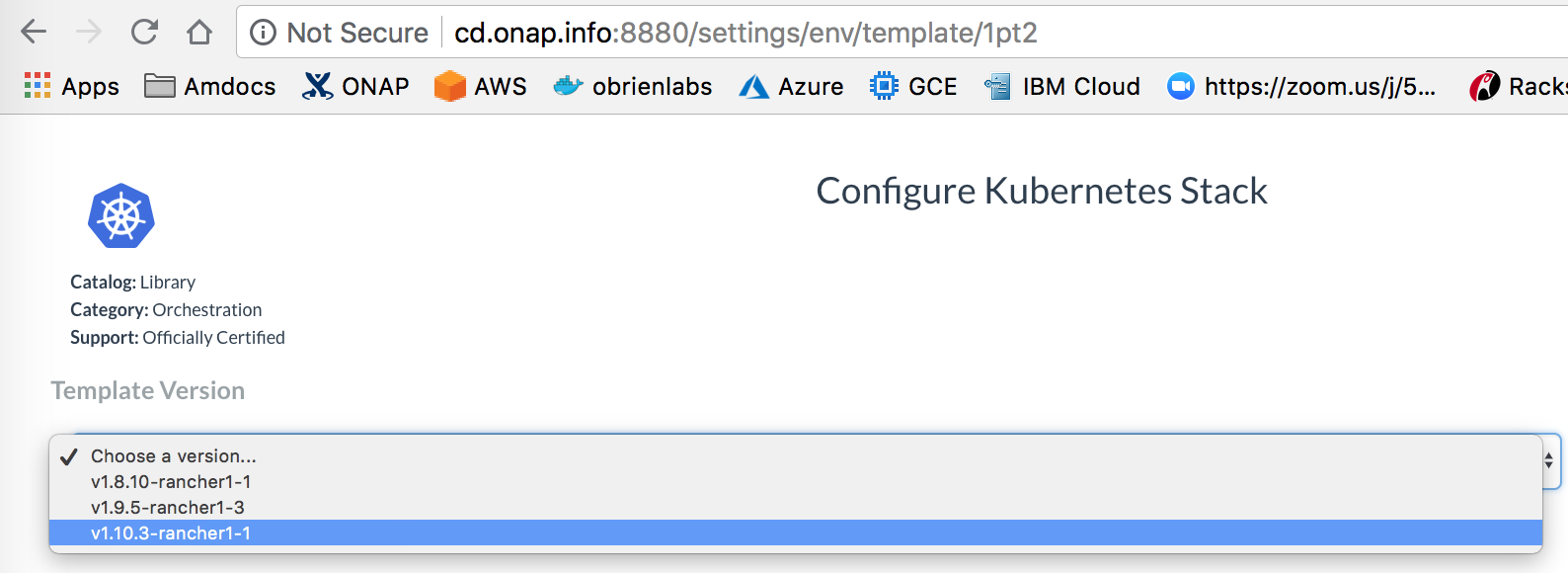

Rancher ships with a limit of 110 pods per node; you can override this in the Kubernetes template for 1.10.

Manual procedure: edit the Kubernetes template (1pt2) before using it to create an environment (1a7).

Add --max-pods=500 to the "Additional Kubelet Flags" box on the v1.10.13 version of the Kubernetes template, reached from the "Manage Environments" dropdown on the left of the Rancher console on port 8880.


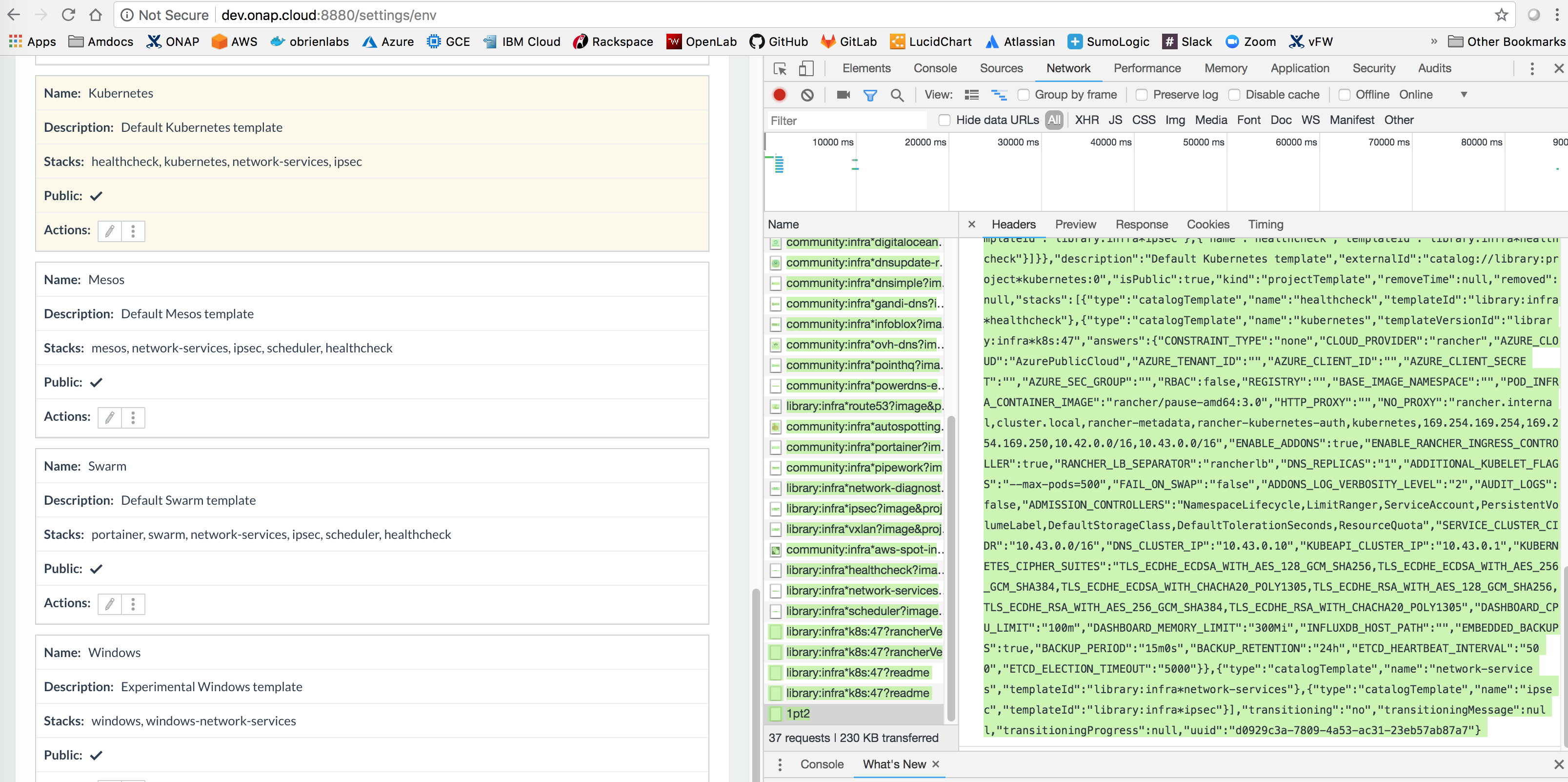

Alternatively, capture the REST PUT call and add it around line 111 of the script: https://git.onap.org/logging-analytics/tree/deploy/rancher/oom_rancher_setup.sh#n111

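Automated (in progress): as captured below, curl sends the request as a POST (its default when --data-binary is given) and the API rejects it with a 405.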

| Code Block | ||

|---|---|---|

| ||

ubuntu@ip-172-31-27-183:~$ curl 'http://127.0.0.1:8880/v2-beta/projecttemplates/1pt2' --data-binary '{"id":"1pt2","type":"projectTemplate","baseType":"projectTemplate","name":"Kubernetes","state":"active","accountId":null,"created":"2018-09-05T14:12:24Z","createdTS":1536156744000,"data":{"fields":{"stacks":[{"name":"healthcheck","templateId":"library:infra*healthcheck"},{"answers":{"CONSTRAINT_TYPE":"none","CLOUD_PROVIDER":"rancher","AZURE_CLOUD":"AzurePublicCloud","AZURE_TENANT_ID":"","AZURE_CLIENT_ID":"","AZURE_CLIENT_SECRET":"","AZURE_SEC_GROUP":"","RBAC":false,"REGISTRY":"","BASE_IMAGE_NAMESPACE":"","POD_INFRA_CONTAINER_IMAGE":"rancher/pause-amd64:3.0","HTTP_PROXY":"","NO_PROXY":"rancher.internal,cluster.local,rancher-metadata,rancher-kubernetes-auth,kubernetes,169.254.169.254,169.254.169.250,10.42.0.0/16,10.43.0.0/16","ENABLE_ADDONS":true,"ENABLE_RANCHER_INGRESS_CONTROLLER":true,"RANCHER_LB_SEPARATOR":"rancherlb","DNS_REPLICAS":"1","ADDITIONAL_KUBELET_FLAGS":"","FAIL_ON_SWAP":"false","ADDONS_LOG_VERBOSITY_LEVEL":"2","AUDIT_LOGS":false,"ADMISSION_CONTROLLERS":"NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,ResourceQuota","SERVICE_CLUSTER_CIDR":"10.43.0.0/16","DNS_CLUSTER_IP":"10.43.0.10","KUBEAPI_CLUSTER_IP":"10.43.0.1","KUBERNETES_CIPHER_SUITES":"TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305","DASHBOARD_CPU_LIMIT":"100m","DASHBOARD_MEMORY_LIMIT":"300Mi","INFLUXDB_HOST_PATH":"","EMBEDDED_BACKUPS":true,"BACKUP_PERIOD":"15m0s","BACKUP_RETENTION":"24h","ETCD_HEARTBEAT_INTERVAL":"500","ETCD_ELECTION_TIMEOUT":"5000"},"name":"kubernetes","templateVersionId":"library:infra*k8s:47"},{"name":"network-services","templateId":"library:infra*network-services"},{"name":"ipsec","templateId":"library:infra*ipsec"}]}},"description":"Default Kubernetes template","externalId":"catalog://library:project*kubernetes:0","isPublic":true,"kind":"projectTemplate","removeTime":null,"removed":null,"stacks":[{"type":"catalogTemplate","name":"healthcheck","templateId":"library:infra*healthcheck"},{"type":"catalogTemplate","answers":{"CONSTRAINT_TYPE":"none","CLOUD_PROVIDER":"rancher","AZURE_CLOUD":"AzurePublicCloud","AZURE_TENANT_ID":"","AZURE_CLIENT_ID":"","AZURE_CLIENT_SECRET":"","AZURE_SEC_GROUP":"","RBAC":false,"REGISTRY":"","BASE_IMAGE_NAMESPACE":"","POD_INFRA_CONTAINER_IMAGE":"rancher/pause-amd64:3.0","HTTP_PROXY":"","NO_PROXY":"rancher.internal,cluster.local,rancher-metadata,rancher-kubernetes-auth,kubernetes,169.254.169.254,169.254.169.250,10.42.0.0/16,10.43.0.0/16","ENABLE_ADDONS":true,"ENABLE_RANCHER_INGRESS_CONTROLLER":true,"RANCHER_LB_SEPARATOR":"rancherlb","DNS_REPLICAS":"1","ADDITIONAL_KUBELET_FLAGS":"--max-pods=600","FAIL_ON_SWAP":"false","ADDONS_LOG_VERBOSITY_LEVEL":"2","AUDIT_LOGS":false,"ADMISSION_CONTROLLERS":"NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,ResourceQuota","SERVICE_CLUSTER_CIDR":"10.43.0.0/16","DNS_CLUSTER_IP":"10.43.0.10","KUBEAPI_CLUSTER_IP":"10.43.0.1","KUBERNETES_CIPHER_SUITES":"TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305","DASHBOARD_CPU_LIMIT":"100m","DASHBOARD_MEMORY_LIMIT":"300Mi","INFLUXDB_HOST_PATH":"","EMBEDDED_BACKUPS":true,"BACKUP_PERIOD":"15m0s","BACKUP_RETENTION":"24h","ETCD_HEARTBEAT_INTERVAL":"500","ETCD_ELECTION_TIMEOUT":"5000"},"name":"kubernetes","templateVersionId":"library:infra*k8s:47"},{"type":"catalogTemplate","name":"network-services","templateId":"library:infra*network-services"},{"type":"catalogTemplate","name":"ipsec","templateId":"library:infra*ipsec"}],"transitioning":"no","transitioningMessage":null,"transitioningProgress":null,"uuid":null}' --compressed

{"id":"9107b9ce-0b61-4c22-bc52-f147babb0ba7","type":"error","links":{},"actions":{},"status":405,"code":"Method not allowed","message":"Method not allowed","detail":null,"baseType":"error"} |
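Since the page describes the template update as a REST PUT, one possible direction for the automation is to fetch the template, rewrite only the kubelet flags, and send the result back with an explicit PUT. The sketch below covers just the rewrite step, which can be tried offline; `set_max_pods` is a hypothetical helper and the sample JSON is a cut-down stand-in for the real template. The commented curl pipeline is an untested assumption about how the pieces might fit together.

```shell
# set_max_pods N: read a project-template JSON document on stdin and set every
# ADDITIONAL_KUBELET_FLAGS answer to --max-pods=N (any existing flags are overwritten).
set_max_pods() {
  sed "s/\"ADDITIONAL_KUBELET_FLAGS\":\"[^\"]*\"/\"ADDITIONAL_KUBELET_FLAGS\":\"--max-pods=$1\"/g"
}

# Cut-down sample standing in for the full template body.
sample='{"answers":{"DNS_REPLICAS":"1","ADDITIONAL_KUBELET_FLAGS":"","FAIL_ON_SWAP":"false"}}'
patched=$(printf '%s\n' "$sample" | set_max_pods 500)
echo "$patched"

# Assumed, untested usage against the Rancher console on port 8880:
# curl -s 'http://127.0.0.1:8880/v2-beta/projecttemplates/1pt2' \
#   | set_max_pods 500 \
#   | curl -X PUT -H 'Content-Type: application/json' --data-binary @- \
#       'http://127.0.0.1:8880/v2-beta/projecttemplates/1pt2'
```

A text substitution keeps the sketch dependency-free; a JSON-aware tool would be a safer choice if other flags need to be preserved.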

Results

A single AWS VM (244G RAM, 32 vCores) with the 110 pod limit workaround applied ran 164 pods, including both secondary DCAEGEN2 orchestrations at 30 and 55 min. Most of the remaining 8 container failures are known or in-progress issues.

| Code Block | ||

|---|---|---|

| ||

ubuntu@ip-172-31-20-218:~$ free

total used free shared buff/cache available

Mem: 251754696 111586672 45000724 193628 95167300 137158588

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep onap | wc -l

164

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep onap | grep -E '1/1|2/2' | wc -l

155

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep -E '0/|1/2' | wc -l

8

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep -E '0/|1/2'

onap dep-dcae-ves-collector-59d4ff58f7-94rpq 1/2 Running 0 4m

onap onap-aai-champ-68ff644d85-rv7tr 0/1 Running 0 59m

onap onap-aai-gizmo-856f86d664-q5pvg 1/2 CrashLoopBackOff 10 59m

onap onap-oof-85864d6586-zcsz5 0/1 ImagePullBackOff 0 59m

onap onap-pomba-kibana-d76b6dd4c-sfbl6 0/1 Init:CrashLoopBackOff 8 59m

onap onap-pomba-networkdiscovery-85d76975b7-mfk92 1/2 CrashLoopBackOff 11 59m

onap onap-pomba-networkdiscoveryctxbuilder-c89786dfc-qnlx9 1/2 CrashLoopBackOff 10 59m

onap onap-vid-84c88db589-8cpgr 1/2 CrashLoopBackOff 9 59m

|

Operations

Get failed/pending containers

| Code Block | ||||

|---|---|---|---|---|

| ||||

kubectl get pods --all-namespaces | grep -E "0/|1/2" | wc -l |
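The grep pattern above only catches `0/N` and `1/2` states. A slightly more general variant compares the two halves of the READY column, so a `2/3` pod is also counted. This is a minimal sketch: `count_not_ready` is a hypothetical helper, and the sample lines are taken from the listings on this page so it runs without a cluster.

```shell
# count_not_ready: read `kubectl get pods --all-namespaces` output on stdin and
# print how many pods have fewer ready containers than total containers.
count_not_ready() {
  awk '$3 ~ /^[0-9]+\/[0-9]+$/ {
    split($3, ready, "/")
    if (ready[1] != ready[2]) n++
  } END { print n + 0 }'
}

sample='NAMESPACE NAME READY STATUS RESTARTS AGE
onap dep-dcae-ves-collector-59d4ff58f7-94rpq 1/2 Running 0 4m
onap onap-aai-champ-68ff644d85-rv7tr 0/1 Running 0 59m
onap onap-vid-84c88db589-8cpgr 1/2 CrashLoopBackOff 9 59m
onap onap-log-kibana-fc88b6b79-nt7sd 1/1 Running 0 35m'

not_ready=$(printf '%s\n' "$sample" | count_not_ready)
echo "$not_ready"
```

On a live cluster the input would come from `kubectl get pods --all-namespaces | count_not_ready`. Note that finished job pods also report `0/1`, so they are counted too.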

| Code Block |

|---|

kubectl cluster-info

# get pods/containers

kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default nginx-1389790254-lgkz3 1/1 Running 1 5d

kube-system heapster-4285517626-x080g 1/1 Running 1 6d

kube-system kube-dns-638003847-tst97 3/3 Running 3 6d

kube-system kubernetes-dashboard-716739405-fnn3g 1/1 Running 2 6d

kube-system monitoring-grafana-2360823841-hr824 1/1 Running 1 6d

kube-system monitoring-influxdb-2323019309-k7h1t 1/1 Running 1 6d

kube-system tiller-deploy-737598192-x9wh5 1/1 Running 1 6d

# get port mappings

kubectl get services --all-namespaces -o wide

# open a shell in a pod

kubectl -n default exec -it nginx-1389790254-lgkz3 -- /bin/bash

# get logs

kubectl -n default logs -f nginx-1389790254-lgkz3 |

Exec

kubectl -n onap-aai exec -it aai-resources-1039856271-d9bvq -- bash

Bounce/Fix a failed container

Periodically, a container higher up in a dependency tree is not restarted in time to pick up its running child containers; this is usually the kibana container.

To fix this, or any other failed container, delete the pod in question and Kubernetes will bring up a replacement.

| Code Block | ||

|---|---|---|

| ||

root@a-onap-auto-20180412-ref:~# kubectl get services --all-namespaces | grep log

onap dev-vfc-catalog ClusterIP 10.43.210.8 <none> 8806/TCP 5d

onap log-es NodePort 10.43.77.87 <none> 9200:30254/TCP 5d

onap log-es-tcp ClusterIP 10.43.159.93 <none> 9300/TCP 5d

onap log-kibana NodePort 10.43.41.102 <none> 5601:30253/TCP 5d

onap log-ls NodePort 10.43.180.165 <none> 5044:30255/TCP 5d

onap log-ls-http ClusterIP 10.43.13.180 <none> 9600/TCP 5d

root@a-onap-auto-20180412-ref:~# kubectl get pods --all-namespaces | grep log

onap dev-log-elasticsearch-66cdc4f855-wmpkz 1/1 Running 0 5d

onap dev-log-kibana-5b6f86bcb4-drpzq 0/1 Running 1076 5d

onap dev-log-logstash-6d9fdccdb6-ngq2f 1/1 Running 0 5d

onap dev-vfc-catalog-7d89bc8b9d-vxk74 2/2 Running 0 5d

root@a-onap-auto-20180412-ref:~# kubectl delete pod dev-log-kibana-5b6f86bcb4-drpzq -n onap

pod "dev-log-kibana-5b6f86bcb4-drpzq" deleted

root@a-onap-auto-20180412-ref:~# kubectl get pods --all-namespaces | grep log

onap dev-log-elasticsearch-66cdc4f855-wmpkz 1/1 Running 0 5d

onap dev-log-kibana-5b6f86bcb4-drpzq 0/1 Terminating 1076 5d

onap dev-log-kibana-5b6f86bcb4-gpn2m 0/1 Pending 0 12s

onap dev-log-logstash-6d9fdccdb6-ngq2f 1/1 Running 0 5d

onap dev-vfc-catalog-7d89bc8b9d-vxk74 2/2 Running 0 5d |
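The manual lookup above can be narrowed down by filtering on the RESTARTS column. The sketch below only prints the delete commands rather than running them, so the list can be reviewed first; `print_bounce_cmds` and the threshold of 100 restarts are hypothetical choices, and the sample lines come from the session above.

```shell
# print_bounce_cmds THRESHOLD: read `kubectl get pods --all-namespaces` output on stdin
# and print a delete command for every pod that is not fully ready and has restarted
# more than THRESHOLD times.
print_bounce_cmds() {
  awk -v max="$1" '$3 ~ /^[0-9]+\/[0-9]+$/ {
    split($3, ready, "/")
    if (ready[1] != ready[2] && $5 + 0 > max + 0)
      printf "kubectl delete pod %s -n %s\n", $2, $1
  }'
}

sample='onap dev-log-elasticsearch-66cdc4f855-wmpkz 1/1 Running 0 5d
onap dev-log-kibana-5b6f86bcb4-drpzq 0/1 Running 1076 5d
onap dev-log-logstash-6d9fdccdb6-ngq2f 1/1 Running 0 5d'

cmds=$(printf '%s\n' "$sample" | print_bounce_cmds 100)
echo "$cmds"
```

Printing instead of executing is deliberate: a pod that is still initializing can look the same as a stuck one for its first few minutes.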

Remove containers stuck in terminating

A helm namespace delete, a kubectl delete, or a helm purge may not remove everything when PVs are left hanging. In that case, force-delete the remaining pods:

| Code Block | ||

|---|---|---|

| ||

# after running

sudo helm delete --purge onap

# force-delete any pod still stuck in Terminating

kubectl delete pods <pod> --grace-period=0 --force -n onap |
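When several pods are stuck, the `<pod>` placeholder has to be filled in once per pod. A minimal sketch of generating those commands from a pod listing follows; `print_force_delete_cmds` is a hypothetical helper, and the sample lines are reused from the kibana example on this page. The commands are printed, not executed.

```shell
# print_force_delete_cmds: read `kubectl get pods --all-namespaces` output on stdin and
# print a forced delete command for every pod whose STATUS column is Terminating.
print_force_delete_cmds() {
  awk '$4 == "Terminating" {
    printf "kubectl delete pods %s --grace-period=0 --force -n %s\n", $2, $1
  }'
}

sample='onap dev-log-elasticsearch-66cdc4f855-wmpkz 1/1 Running 0 5d
onap dev-log-kibana-5b6f86bcb4-drpzq 0/1 Terminating 1076 5d
onap dev-log-kibana-5b6f86bcb4-gpn2m 0/1 Pending 0 12s'

force_cmds=$(printf '%s\n' "$sample" | print_force_delete_cmds)
echo "$force_cmds"
```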

Persistent Volumes

Several applications in ONAP require persistent configuration or storage outside of the stateless docker containers managed by Kubernetes. In this case Kubernetes can act as a direct wrapper of native docker volumes or provide its own extended dynamic persistence for use cases where we are running scaled pods on multiple hosts.

https://kubernetes.io/docs/concepts/storage/persistent-volumes/

The SDNC clustering poc - https://gerrit.onap.org/r/#/c/25467/23

For example, the following issue has a patch that exposes a directory to the container, much like a docker volume or a volume in docker-compose. The difficulty is mixing emptyDir (sharing directories between containers) with exposing directories outside the pod to the host filesystem or NFS.

https://jira.onap.org/browse/LOG-52

This is only one way to create a static PV in Kubernetes:

https://jira.onap.org/secure/attachment/10436/LOG-50-expose_mso_logs.patch
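For comparison with the patch, a statically provisioned volume can also be declared as a PersistentVolume backed by a host directory plus a claim bound to it by name. The sketch below only generates the manifest text. `make_static_pv`, the volume name, the 2Gi size and the `/dockerdata-nfs/onap/log` path are illustrative assumptions, not values taken from the ONAP charts.

```shell
# make_static_pv NAME SIZE HOST_PATH: print a hostPath PersistentVolume and a claim
# in the onap namespace that binds to it explicitly via volumeName.
make_static_pv() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1
spec:
  capacity:
    storage: $2
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  hostPath:
    path: $3
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1-claim
  namespace: onap
spec:
  storageClassName: ""
  volumeName: $1
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $2
EOF
}

manifest=$(make_static_pv log-pv 2Gi /dockerdata-nfs/onap/log)
echo "$manifest"
```

The output could be piped to `kubectl apply -f -`. The empty `storageClassName` keeps a default storage class from provisioning a dynamic volume for the claim instead.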

Token

Thanks Joey

root@ip-172-31-27-86:~# kubectl describe secret $(kubectl get secrets | grep default | cut -f1 -d ' ')

Name: default-token-w1jq0

Namespace: default

Labels: <none>

Annotations: kubernetes.io/service-account.name=default

kubernetes.io/service-account.uid=478eae11-f0f4-11e7-b936-022346869a82

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 7 bytes

token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZmF1bHQtdG9rZW4tdzFqcTAiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGVmYXVsdCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ3OGVhZTExLWYwZjQtMTFlNy1iOTM2LTAyMjM0Njg2OWE4MiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OmRlZmF1bHQifQ.Fjv6hA1Kzurr-Cie5EZmxMOoxm-3Uh3zMGvoA4Xu6h2U1-NBp_fw_YW7nSECnI7ttGz67mxAjknsgfze-1JtgbIUtyPP31Hp1iscaieu5r4gAc_booBdkV8Eb8gia6sF84Ye10lsS4nkmmjKA30BdqH9qjWspChLPdGdG3_RmjApIHEOjCqQSEHGBOMvY98_uO3jiJ_XlJBwLL4uydjhpoANrS0xlS_Evn0evLdits7_piklbc-uqKJBdZ6rWyaRbkaIbwNYYhg7O-CLlUVuExynAAp1J7Mo3qITNV_F7f4l4OIzmEf3XLho4a1KIGb76P1AOvSrXgTzBq0Uvh5fUw |
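If only the token value is needed, for example to paste into a dashboard login, it can be cut out of the describe output with a one-line filter. This is a minimal sketch: `extract_token` is a hypothetical helper, and the sample uses a placeholder value rather than a real token.

```shell
# extract_token: read `kubectl describe secret` output on stdin and print the token value.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Placeholder sample; a real token is a much longer three-part string.
sample='Name: default-token-w1jq0
Type: kubernetes.io/service-account-token
ca.crt: 1025 bytes
namespace: 7 bytes
token: header.payload.signature'

token=$(printf '%s\n' "$sample" | extract_token)
echo "$token"
```

On a cluster the same filter would be applied to the command shown above, as in `kubectl describe secret <name> | extract_token`.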

Auto Scaling

Using the example on page 122 of Kubernetes Up & Running.

| Code Block |

|---|

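# create the deployment (with older kubectl, `kubectl run` creates a Deployment labelled run=nginx)

kubectl run nginx --image=nginx:1.7.12

kubectl get deployments nginx

# scale to 3 replicas and verify

kubectl scale deployments nginx --replicas=3

kubectl get deployments nginx

# list the replica set and pods behind the deployment

kubectl get replicasets --selector=run=nginx

kubectl get pods --all-namespaces

# scale up to 64 replicas

kubectl scale deployments nginx --replicas=64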

|

Developer Deployment

Deployment Integrity

...