I was really frustrated trying to figure out why my Kubernetes configmap wasn't behaving as expected. In my personal setup, everything worked fine, but in the new Windriver integration lab, it seemed to ignore my file permission settings and inject a read-only version into the container.

After banging my head against the wall for a while, I realized there was a version difference between the two environments. My personal setup was running Kubernetes 1.8.5, while the Windriver environment was on 1.8.10. Kubernetes 1.8.9 included a fix that changed how configmap, secret, downwardAPI and projected volumes are mounted, and that change explained the behavior I was seeing.

Fix impact:

Secret, configMap, downwardAPI and projected volumes will be mounted as read-only volumes. Applications that attempt to write to these volumes will receive read-only filesystem errors. Previously, applications were allowed to make changes to these volumes, but those changes were reverted at an arbitrary interval by the system. Applications should be re-configured to write derived files to another location.

Here is the bug that led me to the discovery: https://github.com/coreos/bugs/issues/2384

The upstream issue: https://github.com/kubernetes/kubernetes/issues/60814

And the changelog: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.8.md#changelog-since-v188


This was the ticket I was working on at the time, and I know that SO has a similar problem where the container tries to modify or move a file or directory that is injected via a configmap.

OOM-900 - portal-cassandra missing pv and pvc (Closed)

Note that setting the defaultMode: or mode: of a volume to something writable is no longer honored.
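One way to follow the changelog's advice (write derived files to another location) is to copy the configmap contents into a writable volume, such as an emptyDir, when the container starts. Below is a minimal sketch of such an entrypoint helper; the function name and the mount paths in the usage line are hypothetical, not taken from the OOM charts.

```shell
#!/bin/sh
# Sketch only: copy_config and the paths used with it are hypothetical.
# The configmap mount is read-only on K8s >= 1.8.9; the destination is
# assumed to be a writable volume such as an emptyDir.

# Copy the visible configmap keys from a read-only mount into a writable directory.
copy_config() {
  src="$1"
  dst="$2"
  mkdir -p "$dst" || return 1
  # The glob skips the hidden ..data / ..<timestamp> entries the kubelet creates;
  # -L dereferences the per-key symlinks so regular files are copied.
  cp -RL "$src"/* "$dst"/ || return 1
  # The copies belong to the container user, so they can be made writable.
  chmod -R u+w "$dst"
}
```

For example, an entrypoint could run `copy_config /config-ro /config && exec "$@"`, with the configmap mounted at /config-ro and an emptyDir at /config (both paths assumed). The application then modifies or moves files under /config instead of the read-only mount.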

This problem was raised at the PTL meeting. I was supposed to create a ticket for LF IT, but instead I wrote a simple script to gather the manifests from a set of ONAP images.

The goal of this page is to have a public place to track progress on best practices for making package manifests contain useful information.

Some of the stuff that I feel should be there:

- uniquely identify the package (name, semver version) and what it was built from (e.g. git URL + commit SHA)

- contain a license tag and a license file (in the package, not in the manifest)

- handle copyrights too; I'm unsure how those should be published (maybe there is some Java/Maven way I'm not aware of)

We already have all this information at hand; it just doesn't get transferred to the jar packages.
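To check the collected manifests against this wish list, a small helper like the sketch below could report which attributes a manifest lacks. It is hypothetical and not part of the script mentioned on this page. Implementation-Title and Implementation-Version are standard JAR manifest attributes, but there is no standard attribute name for a git URL or commit, so any name you pass for those is your own choice.

```shell
#!/bin/sh
# Hypothetical helper: print each attribute name (given as arguments) that is
# missing from the MANIFEST.MF content read on stdin.
missing_attrs() {
  manifest="$(cat)"
  for attr in "$@"; do
    # Attributes are "Name: value" lines, so match the name at line start.
    # MANIFEST.MF uses CRLF line endings; this prefix match is unaffected.
    printf '%s\n' "$manifest" | grep -q "^$attr:" || printf '%s\n' "$attr"
  done
}
```

For example, `missing_attrs Implementation-Title Implementation-Version Git-Commit < some.jar-manifest` prints the names not found, where Git-Commit is a made-up attribute name and some.jar-manifest stands for any of the collected manifest files.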

This is the image list used for the analysis. I think it gives a representative sample, but if someone feels that other images should be included, let me know and I'll re-run it.

$ cat onap-dockers-sample
nexus3.onap.org:10001/onap/aai-graphadmin:1.9.2
nexus3.onap.org:10001/onap/aai-resources:1.9.3
nexus3.onap.org:10001/onap/aai-schema-service:1.9.3
nexus3.onap.org:10001/onap/aai-traversal:1.9.3
nexus3.onap.org:10001/onap/babel:1.9.2
nexus3.onap.org:10001/onap/ccsdk-blueprintsprocessor:1.2.1
nexus3.onap.org:10001/onap/ccsdk-cds-ui-server:1.2.1
nexus3.onap.org:10001/onap/ccsdk-commandexecutor:1.2.1
nexus3.onap.org:10001/onap/ccsdk-py-executor:1.2.1
nexus3.onap.org:10001/onap/ccsdk-sdclistener:1.2.1
nexus3.onap.org:10001/onap/dmaap/datarouter-node:2.1.9
nexus3.onap.org:10001/onap/dmaap/datarouter-prov:2.1.9
nexus3.onap.org:10001/onap/dmaap/dmaap-mr:1.3.0
nexus3.onap.org:10001/onap/model-loader:1.9.1
nexus3.onap.org:10001/onap/sdc-backend-all-plugins:1.9.5
nexus3.onap.org:10001/onap/sdc-backend-init:1.9.5
nexus3.onap.org:10001/onap/sdc-frontend:1.9.5
nexus3.onap.org:10001/onap/sdc-helm-validator:1.3.0
nexus3.onap.org:10001/onap/sdc-onboard-backend:1.9.5
nexus3.onap.org:10001/onap/sdc-workflow-backend:1.7.0
nexus3.onap.org:10001/onap/sdc-workflow-frontend:1.7.0
nexus3.onap.org:10001/onap/so/api-handler-infra:1.9.2
nexus3.onap.org:10001/onap/so/bpmn-infra:1.9.2
nexus3.onap.org:10001/onap/so/catalog-db-adapter:1.9.2
nexus3.onap.org:10001/onap/so/openstack-adapter:1.9.2
nexus3.onap.org:10001/onap/so/request-db-adapter:1.9.2
nexus3.onap.org:10001/onap/so/sdc-controller:1.9.2
nexus3.onap.org:10001/onap/so/sdnc-adapter:1.9.2
nexus3.onap.org:10001/onap/so/so-admin-cockpit:1.8.2
nexus3.onap.org:10001/onap/so/so-cnf-adapter:1.9.2
nexus3.onap.org:10001/onap/so/so-etsi-nfvo-ns-lcm:1.8.2
nexus3.onap.org:10001/onap/so/so-etsi-sol003-adapter:1.8.2
nexus3.onap.org:10001/onap/so/so-etsi-sol005-adapter:1.8.3
nexus3.onap.org:10001/onap/so/so-nssmf-adapter:1.9.1
nexus3.onap.org:10001/onap/so/so-oof-adapter:1.8.3
nexus3.onap.org:10001/onap/vid:8.0.2

The manifests of all the jars found in the images listed above are attached below (directories correspond to images with / switched to _, and manifests have the same name as the jars in the image, with a -manifest suffix).
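The gathering script itself isn't shown on this page. A rough equivalent that follows the naming convention just described might look like the sketch below; it is a reconstruction for illustration, not the original script, and it assumes docker, tar, unzip and find are available on the machine running it.

```shell
#!/bin/sh
# Sketch only: reconstructs the idea, not the original script.

# Output directory for an image: every "/" in the image name becomes "_".
image_dir() {
  printf '%s\n' "$1" | tr '/' '_'
}

# Manifest file name for a jar: the jar's file name plus a "-manifest" suffix.
manifest_name() {
  printf '%s-manifest\n' "$(basename "$1")"
}

# Export an image's filesystem and save META-INF/MANIFEST.MF of every jar in it.
dump_manifests() {
  image="$1"
  out="$(image_dir "$image")"
  rootfs="$(mktemp -d)" || return 1
  mkdir -p "$out"
  # docker create makes a stopped container (pulling the image if needed),
  # which docker export then streams as a tar archive.
  cid="$(docker create "$image")" || { rm -rf "$rootfs"; return 1; }
  # Extraction errors (e.g. device nodes when not root) are ignored.
  docker export "$cid" | tar -xf - -C "$rootfs" 2>/dev/null
  docker rm "$cid" >/dev/null
  find "$rootfs" -type f -name '*.jar' | while read -r jar; do
    target="$out/$(manifest_name "$jar")"
    # unzip -p prints the entry to stdout; drop the file if the jar has no manifest.
    unzip -p "$jar" META-INF/MANIFEST.MF > "$target" 2>/dev/null || rm -f "$target"
  done
  rm -rf "$rootfs"
}
```

It could be run over the sample list with `for img in $(cat onap-dockers-sample); do dump_manifests "$img"; done`. In this sketch, jars with the same file name in different directories of one image overwrite each other's manifest, and keeping the full jar file name (including .jar) before the -manifest suffix is an assumption about the original naming.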

Example manifests for aai-graphadmin

$ cat *-manifest
Manifest-Version: 1.0
Archiver-Version: Plexus Archiver
Built-By: jenkins
Start-Class: org.onap.aai.GraphAdminApp
Spring-Boot-Classes: BOOT-INF/classes/
Spring-Boot-Lib: BOOT-INF/lib/
Spring-Boot-Version: 1.5.21.RELEASE
Created-By: Apache Maven 3.5.4
Build-Jdk: 1.8.0_292
Main-Class: org.springframework.boot.loader.PropertiesLauncher

Manifest-Version: 1.0
Created-By: 1.8.0_212 (IcedTea)

Manifest-Version: 1.0
CLDR-Version: 21.0.1
Created-By: 1.8.0_212 (IcedTea)

Manifest-Version: 1.0
Created-By: 1.8.0_212 (IcedTea)

Manifest-Version: 1.0
Created-By: 1.8.0_212 (IcedTea)

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Implementation-Vendor-Id: com.sun
Extension-Name: javax.crypto
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea

Manifest-Version: 1.0
Created-By: 1.8.0_212 (IcedTea)

Manifest-Version: 1.0
Crypto-Strength: limited

Manifest-Version: 1.0
Premain-Class: sun.management.Agent
Agent-Class: sun.management.Agent
Created-By: 1.8.0_212 (IcedTea)

Manifest-Version: 1.0
Created-By: 1.8.0_212 (IcedTea)
Main-Class: jdk.nashorn.tools.Shell
Name: jdk/nashorn/
Name: jdk/nashorn/
Implementation-Title: Oracle Nashorn
Implementation-Version: 1.8.0_212-b04
Implementation-Vendor: Oracle Corporation

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea
Name: javax/swing/JRadioButton.class
Java-Bean: True
Name: javax/swing/JTextPane.class
Java-Bean: True
Name: javax/swing/JWindow.class
Java-Bean: True
Name: javax/swing/JRadioButtonMenuItem.class
Java-Bean: True
Name: javax/swing/JDialog.class
Java-Bean: True
Name: javax/swing/JSlider.class
Java-Bean: True
Name: javax/swing/JMenuBar.class
Java-Bean: True
Name: javax/swing/JList.class
Java-Bean: True
Name: javax/swing/JTable.class
Java-Bean: True
Name: javax/swing/JTextArea.class
Java-Bean: True
Name: javax/swing/JTabbedPane.class
Java-Bean: True
Name: javax/swing/JPanel.class
Java-Bean: True
Name: javax/swing/JApplet.class
Java-Bean: True
Name: javax/swing/JInternalFrame.class
Java-Bean: True
Name: javax/swing/JToggleButton.class
Java-Bean: True
Name: javax/swing/JSpinner.class
Java-Bean: True
Name: javax/swing/JComboBox.class
Java-Bean: True
Name: javax/swing/JOptionPane.class
Java-Bean: True
Name: javax/swing/JPasswordField.class
Java-Bean: True
Name: javax/swing/JTree.class
Java-Bean: True
Name: javax/swing/JMenuItem.class
Java-Bean: True
Name: javax/swing/JFrame.class
Java-Bean: True
Name: javax/swing/JScrollPane.class
Java-Bean: True
Name: javax/swing/JSeparator.class
Java-Bean: True
Name: javax/swing/JLabel.class
Java-Bean: True
Name: javax/swing/JToolBar.class
Java-Bean: True
Name: javax/swing/JButton.class
Java-Bean: True
Name: javax/swing/JTextField.class
Java-Bean: True
Name: javax/swing/JEditorPane.class
Java-Bean: True
Name: javax/swing/JPopupMenu.class
Java-Bean: True
Name: javax/swing/JMenu.class
Java-Bean: True
Name: javax/swing/JScrollBar.class
Java-Bean: True
Name: javax/swing/JCheckBoxMenuItem.class
Java-Bean: True
Name: javax/swing/JCheckBox.class
Java-Bean: True
Name: javax/swing/JProgressBar.class
Java-Bean: True
Name: javax/swing/JSplitPane.class
Java-Bean: True
Name: javax/swing/JFormattedTextField.class
Java-Bean: True

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Implementation-Vendor-Id: com.sun
Extension-Name: javax.crypto
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Implementation-Vendor-Id: com.sun
Extension-Name: javax.crypto
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Implementation-Vendor-Id: com.sun
Extension-Name: javax.crypto
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea

Manifest-Version: 1.0
Crypto-Strength: unlimited

Manifest-Version: 1.0
Implementation-Title: Java Runtime Environment
Implementation-Version: 1.8.0_212
Specification-Vendor: Oracle Corporation
Specification-Title: Java Platform API Specification
Specification-Version: 1.8
Created-By: 1.8.0_212 (IcedTea)
Implementation-Vendor: IcedTea
Main-Class:

I've created the following slide deck to describe the highlights of ONAP Dublin release. These include all the Use Cases, as well as Project level summaries.

I would like to invite community members to review and let me know of any misrepresentation/incorrect/missing information.

Regards,

Sriram

ONAP has grown in Casablanca and requires a lot of resources to run. I have a 6-node K8s cluster that I deployed using Rancher, but I believe the same kubelet flags can be applied to any method of spinning up and maintaining a K8s cluster (kubeadm, etc.). The nodes are OpenStack VMs that I spun up on a private lab network.

The flavour I used:

That gives me 42 cores of CPU, 192 GB RAM, 960 GB storage with which to "try" and deploy all of ONAP.

Out of the box, what I found was that after a few days one of my nodes would become unresponsive and disconnect from the cluster. Even accessing the console through Openstack was slow. The resolution for me was a hard shutdown from the Openstack UI which brought it back to life (until it eventually happened again!).

It seems that the node that died was overloaded with pods that starved the core containers that maintain connectivity to the K8s cluster (kubelet, rancher-agent, etc).

The solution I found was to use kubelet eviction flags to protect the core containers from getting starved of resources.

These flags will not enable you to run all of ONAP on fewer resources. They are there simply to prevent an overloaded K8s cluster from crashing: if you attempt to deploy too much, K8s will evict pods instead.

The resource limit story introduced in Casablanca adds minimum requirements to each application. This is a WIP and will help the community better understand how many CPU cores and how much RAM are actually required in your K8s cluster to run things.

For more information on the kubelet flags see:

https://kubernetes.io/docs/tasks/administer-cluster/out-of-resource/

Here is how I applied them to my already running K8s cluster using rancher:

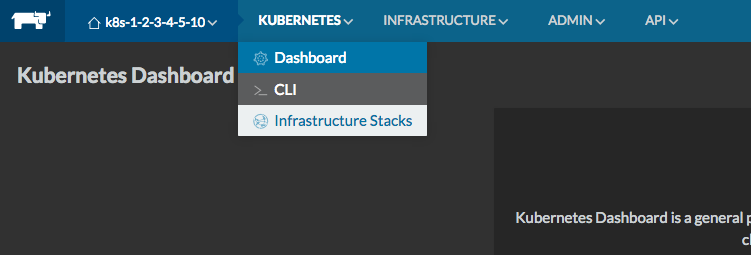

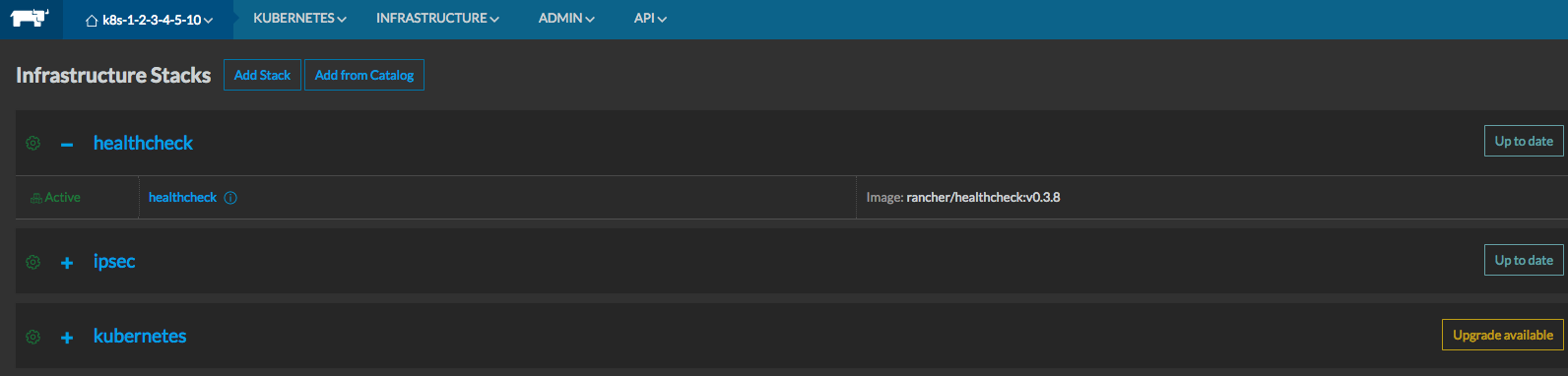

- Navigate to the Kubernetes > "Infrastructure Stacks" menu option

- Depending on the version of rancher and the K8s template version you have deployed, the button on the right side will say either "Up to date" or "Upgrade available".

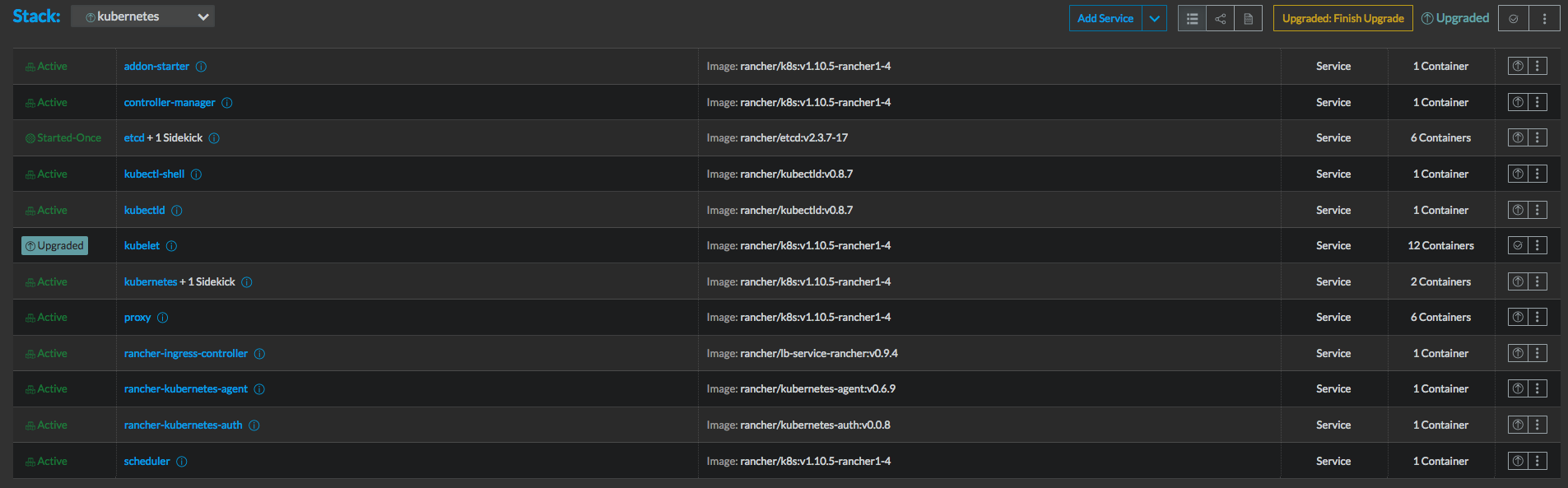

- Click the button beside "+ kubernetes stack"; it brings up a menu where you can select the environment template you want to tweak

- Find the field for Additional Kubelet Flags and input the desired amount of resources you want to reserve. I used the example from the K8s docs but you should adjust to what makes sense for your environment.

--eviction-hard=memory.available<500Mi,nodefs.available<1Gi,imagefs.available<5Gi --eviction-minimum-reclaim=memory.available=0Mi,nodefs.available=500Mi,imagefs.available=2Gi --system-reserved=memory=1.5Gi

- The stack will upgrade, adding the new startup parameters to the kubelet containers and restarting them. Click "Upgraded: Finish Upgrade" to complete the upgrade.

- Validate that the kubelet flags are now passed in as arguments to the container:

- That's it!
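For the validation step, one option (an assumption about your node setup, not necessarily how I checked it) is to read the kubelet command line from a shell on a worker node and filter for the flags that were added. The helper name is hypothetical, and it assumes the flags are passed in the --flag=value form shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the eviction/reservation flags found in a
# kubelet command line read on stdin (expects the --flag=value form).
kubelet_reservation_flags() {
  tr ' ' '\n' | grep -E '^--(eviction-hard|eviction-minimum-reclaim|system-reserved)='
}
```

On the node, `ps -ww -eo args | grep '[k]ubelet' | kubelet_reservation_flags` should print the three flags entered in Rancher; the `[k]` trick keeps grep from matching its own process.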

Hopefully this was helpful and will save people time and effort reviving dead K8s worker nodes due to resource starvation from containers that do not have any resource limits in place.
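To see how much memory these flags leave for pods, the Kubernetes documentation on reserving compute resources gives node allocatable memory as capacity minus kube-reserved, system-reserved and the hard eviction threshold. The arithmetic below is a worked example under assumptions: a node with 32 GiB of RAM (an even split of the 192 GB above across six nodes), no kube-reserved, and the --system-reserved=memory=1.5Gi and memory.available<500Mi values used in this post.

```shell
#!/bin/sh
# Allocatable = capacity - kube-reserved - system-reserved - hard eviction threshold.
# All arguments and the result are in MiB; the example inputs are assumptions.
allocatable_mi() {
  capacity="$1"
  kube_reserved="$2"
  system_reserved="$3"
  eviction_hard="$4"
  echo $((capacity - kube_reserved - system_reserved - eviction_hard))
}
```

With those assumptions, `allocatable_mi 32768 0 1536 500` prints 30732, i.e. roughly 30 GiB per node that the scheduler can place pods against.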

To help describe the major changes, improvements and features developed during the Casablanca release to interested parties outside ONAP, I've created the following slide package. As I'm focusing on audiences from outside the ONAP community, I haven't included activities that are largely internal, even though they might be very important (e.g. improvements in S3P goals). As many of the projects worked on use cases, I didn't highlight all of these changes in the project summaries.

If I've made any errors, missed anything you think should be included or included activities that aren't complete please let me know.

Cheers, Roger

This is an easy way to access any of the pod services or pods from an external network. Below are instructions on how to set up a SOCKS5 proxy server and then how to configure Firefox running on a desktop to use it.

The SOCKS5 proxy server is provided by the ssh "Dynamic port forwarding" feature. To enable it, an ssh session must be opened to a pod with the '-D' option. The instructions below were tested on ONAP Amsterdam and use the portal-vnc pod.

First connect to your portal-vnc pod.

kubectl exec -it $(kubectl get pod -lapp=portal-vnc -o jsonpath="{..metadata.name}") -- bash

On the portal-vnc pod, install openssh-server and keep its default settings:

apt update
apt install openssh-server
service ssh start

On the portal-vnc pod, add your public ssh key to root's authorized keys. For details on ssh key pairs, see https://www.digitalocean.com/community/tutorials/how-to-set-up-ssh-keys--2

mkdir -p /root/.ssh
cat >> /root/.ssh/authorized_keys << EOF
put the public key here
EOF

To be able to create an ssh session from a client outside Kubernetes, a NodePort must be created for the session to pass through. On a box running the kubectl client, create the following file, filling in NAMESPACE and NODE-PORT.

cat > portal-vnc-service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: portal-vnc-ssh
  labels:
    app: portal-vnc-ssh
  namespace: NAMESPACE
spec:
  ports:
  - name: portal-3
    nodePort: NODE-PORT
    port: 22
    protocol: TCP
    targetPort: 22
  selector:
    app: portal-vnc
  type: NodePort
EOF

Create the Service in kubernetes

kubectl create -f ./portal-vnc-service.yaml

Start the SOCKS5 proxy server by opening an ssh session to portal-vnc with dynamic port forwarding enabled (-D).

On the host where the ssh private key resides, execute the following command with the appropriate values. The address of the proxy server will be 'socks5://localhost:PROXY-PORT', where localhost is the host the ssh session is initiated from.

ssh -D <PROXY-PORT> -p <NODE-PORT> root@<KUBE-MASTER-NODE-IP>

This will behave like a regular ssh session to portal-vnc.

Welcome to Ubuntu 16.04.4 LTS (GNU/Linux 4.9.78-rancher2 x86_64)
* Documentation: https://help.ubuntu.com
* Management: https://landscape.canonical.com
* Support: https://ubuntu.com/advantage
Last login: Mon Jun 4 16:56:31 2018 from 10.42.0.0
root@portal-vnc-59679d7f99-gbrlf:~#

Closing the ssh session will close the proxy server too.

Get the service IPs from the portal-vnc pod's /etc/hosts file.

cat /etc/hosts
10.43.142.185 sdc.api.be.simpledemo.onap.org
10.43.180.235 portal.api.simpledemo.onap.org
10.43.227.25 sdc.api.simpledemo.onap.org
10.43.8.165 vid.api.simpledemo.onap.org
10.42.0.149 aai.api.simpledemo.onap.org

Then add host ip mappings to the /etc/hosts where the ssh session was initiated from.

Don't just copy and paste the IPs from this blog post. The IPs are different in each ONAP deployment.

sudo tee -a /etc/hosts > /dev/null << EOF
10.43.142.185 sdc.api.be.simpledemo.onap.org
10.43.180.235 portal.api.simpledemo.onap.org
10.43.227.25 sdc.api.simpledemo.onap.org
10.43.8.165 vid.api.simpledemo.onap.org
10.42.0.149 aai.api.simpledemo.onap.org
EOF
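Instead of copying the entries by hand, they can be pulled straight from the pod and filtered. The sketch below is one way to do that; the function name is made up, and it assumes every ONAP alias you need ends in simpledemo.onap.org.

```shell
#!/bin/sh
# Hypothetical filter: keep only /etc/hosts lines (read on stdin) that start
# with an IPv4 address and map a name under simpledemo.onap.org.
onap_host_entries() {
  grep -E '^[0-9.]+[[:space:]].*simpledemo\.onap\.org'
}
```

For example, with `POD=$(kubectl get pod -lapp=portal-vnc -o jsonpath="{..metadata.name}")`, run `kubectl exec "$POD" -- cat /etc/hosts | onap_host_entries | sudo tee -a /etc/hosts > /dev/null` on the host where the ssh session is initiated. Comment lines and the pod's own hostname entry are left out.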

The proxy server can be configured in most web browsers. Here is an easy way to configure it in Firefox: open the Firefox preferences by typing 'about:preferences' in the address bar, search for "proxy", click the Settings button that appears, and enter the SOCKS details.

The port 32003 entered in the Firefox SOCKS settings is the <PROXY-PORT> passed to -D in the ssh command above.

Once configured, enter 'http://portal.api.simpledemo.onap.org:8989/ONAPPORTAL/login.htm' and Firefox will open the ONAP portal. Firefox will have access to any of the ONAP service IPs. Firefox must run on the same host where the ssh session was initiated and where /etc/hosts was modified.

For the Beijing release of ONAP on OOM, accessing the ONAP portal from the user's own environment (laptop etc.) was a frequently requested feature.

To achieve that, we decided to expose the portal application's port 8989 through a K8s LoadBalancer object. This means your Kubernetes deployment needs to support load balancing (Rancher's default Kubernetes deployment provides it out of the box); otherwise this approach will not work.

This has some non-obvious implications in a clustered Kubernetes environment, specifically one where the K8s cluster nodes communicate with each other over a private network that isn't publicly accessible (e.g. OpenStack VMs on a private internal network).

Typically, to be able to access the K8s nodes publicly a public address is assigned. In Openstack, this is a floating IP address (or if your provider network is public/external and can attach directly to your K8S nodes, your nodes have direct public access).

When the portal-app chart is deployed, a K8S service is created that instantiates a load balancer (in its own separate container). The LB picks the private interface of one of the nodes (in a multi-node K8S cluster deployment), as in the example below (in OpenStack, 10.0.0.4 is the private IP of that specific K8s cluster node VM only).

Then, to access the portal on port 8989 from outside the OpenStack private network that the K8S cluster is connected to, the user needs to assign or obtain the floating IP address that corresponds to the private IP address of the K8S node VM where the portal service "portal-app" (as shown below) is deployed.

kubectl -n onap get services | grep "portal-app"
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                                        AGE   LABELS
portal-app   LoadBalancer   10.43.142.201   10.0.0.4      8989:30215/TCP,8006:30213/TCP,8010:30214/TCP   1d    app=portal-app,release=dev

In this example, the public floating IP associated with private IP 10.0.0.4 is 10.12.6.155. It can be obtained through the Horizon GUI or the OpenStack CLI for your tenant (run "openstack server list" and look for the K8S node where the portal-app service is deployed). This floating IP is then used in your /etc/hosts to map the fixed DNS aliases required by the ONAP Portal, as shown below.

10.12.6.155 portal.api.simpledemo.onap.org
10.12.6.155 vid.api.simpledemo.onap.org
10.12.6.155 sdc.api.fe.simpledemo.onap.org
10.12.6.155 portal-sdk.simpledemo.onap.org
10.12.6.155 policy.api.simpledemo.onap.org
10.12.6.155 aai.api.sparky.simpledemo.onap.org
10.12.6.155 cli.api.simpledemo.onap.org
10.12.6.155 msb.api.discovery.simpledemo.onap.org
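The two lookups described above (the LoadBalancer IP, then the floating IP mapped to it) can also be scripted. This is a sketch under assumptions: the kubectl and openstack CLIs are configured for your cluster and tenant, the LoadBalancer IP is reported in the service's status.loadBalancer.ingress field (where kubectl's EXTERNAL-IP column normally comes from), and the helper names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print one /etc/hosts line per alias for the given IP.
hosts_lines() {
  ip="$1"
  shift
  for alias in "$@"; do
    printf '%s %s\n' "$ip" "$alias"
  done
}

# Hypothetical helper: look up the portal-app LoadBalancer IP, then the
# floating IP whose fixed address is that private IP.
portal_floating_ip() {
  lb_ip="$(kubectl -n onap get svc portal-app \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')" || return 1
  openstack floating ip list --fixed-ip-address "$lb_ip" \
    -f value -c "Floating IP Address"
}
```

For example, `hosts_lines "$(portal_floating_ip)" portal.api.simpledemo.onap.org vid.api.simpledemo.onap.org | sudo tee -a /etc/hosts > /dev/null`, adding the remaining aliases from the list above. If your nodes carry public IPs directly, pass the EXTERNAL-IP itself to hosts_lines instead.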

NOTE:

If you are not using floating IPs in your Kubernetes deployment and directly attaching a public IP address (i.e. by using your public provider network) to your K8S Node VMs' network interface, then the output of 'kubectl -n onap get services | grep "portal-app"' will show your public IP instead of the private network's IP. Therefore, you can grab this public IP directly (as compared to trying to find the floating IP first) and map this IP in /etc/hosts.

For K8S clusters that use Rancher as their CMP (container management platform) only: if your Kubernetes nodes/VMs use floating IPs and you want the floating IP to be the Kubernetes node's "EXTERNAL-IP", make sure that the "CATTLE_AGENT_IP" argument of the "docker run" command that starts the Rancher Agent on the Kubernetes node(s) is set to the floating IP and not the VM's private IP.

Ensure you've disabled any proxy settings in the browser you use to access the portal, then simply access the familiar URL:

http://portal.api.simpledemo.onap.org:8989/ONAPPORTAL/login.htm

Other things we tried:

We tried Kubernetes port forwarding but found it a little clunky for the end user to have to run a script that opens port forwarding tunnels to each K8s pod providing a portal application widget.

We considered bringing back the VNC chart with a different image but there were many issues with resolution, lack of volume mount, /etc/hosts dynamic update, file upload that were a tall order to solve in time for the Beijing release.

The code change for the read-only configmap issue described earlier (OOM-900): https://gerrit.onap.org/r/#/c/42325/

In OOM, many of us use Rancher to deploy Kubernetes to run ONAP.

Recently, I had to update my lab environment from Rancher and Kubernetes 1.5 to Rancher v1.6 in order to upgrade the Kubernetes stack to v1.7. I followed the steps on the Rancher website with one minor tweak: I used the rancher/server:stable image tag instead of latest.

http://rancher.com/docs/rancher/latest/en/upgrading/#single-container

My rancher server container name was pensive_saha

docker stop pensive_saha
docker create --volumes-from pensive_saha --name rancher-data rancher/server:stable
docker pull rancher/server:stable
docker run -d --volumes-from rancher-data --restart=unless-stopped -p 8080:8080 rancher/server:stable
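If you don't know your Rancher server's container name (mine was pensive_saha), it can be found with docker ps. The sketch below adds a name lookup and wraps the four commands above in a function; both helpers are hypothetical, and the default tag follows my choice of stable.

```shell
#!/bin/sh
# Hypothetical helper: print the names of containers running rancher/server
# (any tag). Input: "<name> <image>" lines, e.g. from
#   docker ps --format '{{.Names}} {{.Image}}'
rancher_server_names() {
  awk '$2 == "rancher/server" || $2 ~ /^rancher\/server:/ { print $1 }'
}

# Hypothetical wrapper around the single-container upgrade steps above;
# stops at the first failing command.
upgrade_rancher_server() {
  old="$1"
  tag="${2:-stable}"
  docker stop "$old" &&
  docker create --volumes-from "$old" --name rancher-data "rancher/server:$tag" &&
  docker pull "rancher/server:$tag" &&
  docker run -d --volumes-from rancher-data --restart=unless-stopped \
    -p 8080:8080 "rancher/server:$tag"
}
```

For example, `docker ps --format '{{.Names}} {{.Image}}' | rancher_server_names` prints the candidate name, which can then be passed as `upgrade_rancher_server pensive_saha stable`.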

Once the server is back up, open the Rancher web page and navigate to Kubernetes > Infrastructure Stacks.

The "Up to date" button will say "Upgrade Available" for each stack.

For each stack component, click the button, select the most recent template from the drop-down, leave any input fields at their defaults and choose "Upgrade".

Example: upgrading the healthcheck service stack

Rancher will do its thing to each K8s stack component and after it is done there will be a button that says "Complete upgrade". Click that and the upgrade of the stack component will be complete.

You can now move on to the next component.

Once every stack's status is "Green", the upgrade is complete.
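As a final check, each node's kubelet should report the new Kubernetes version. A minimal sketch, assuming kubectl points at the upgraded cluster; the helper name is hypothetical and the version prefix is whatever you upgraded to (v1.7. here).

```shell
#!/bin/sh
# Hypothetical helper: print every node whose kubelet version does not start
# with the given prefix. Input: "<node> <kubeletVersion>" lines, e.g. from
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name} {.status.nodeInfo.kubeletVersion}{"\n"}{end}'
nodes_not_at() {
  awk -v want="$1" 'index($2, want) != 1 { print $1 }'
}
```

Piping the jsonpath output above into `nodes_not_at v1.7.` prints nothing once every node runs a v1.7 kubelet; any node it lists still needs attention.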