Developer Setup

Gerrit/Git

Quickstarts

Committing Code

| Code Block |

|---|

|

# stage your changes

git add .

git commit -m "your commit message"

# commit your staged changes with sign-off

git commit -s --amend

# add Issue-ID after Change-ID

# Submit your commit to ONAP Gerrit for review

git review

# go to https://gerrit.onap.org/r/#/dashboard/self |

Amending existing gerrit changes in review

| Code Block |

|---|

|

# add new files/changes

git add .

# don't use -m; keep the same Issue-ID: line from the original commit

git commit --amend

git review -R

# see the change set number increase - https://gerrit.onap.org/r/#/c/17203/2 |
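Before running git review it can help to confirm that the commit message still carries the lines mentioned above. The following is a minimal sketch, assuming the message should contain an Issue-ID line (as in "Issue-ID: LOG-326"), the 40-hex-digit Change-Id that the commit-msg hook adds, and the Signed-off-by line added by git commit -s. The function name check_commit_trailers is hypothetical and is not part of git or git-review.

```shell
#!/bin/sh
# Hypothetical helper: read a commit message on stdin and report missing trailers.
# Returns 0 only when Issue-ID, Change-Id and Signed-off-by are all present.
check_commit_trailers() {
    msg=$(cat)
    status=0
    # Jira issue key, e.g. "Issue-ID: LOG-326"
    printf '%s\n' "$msg" | grep -Eq '^Issue-ID: [A-Z][A-Z0-9]*-[0-9]+$' \
        || { echo "missing Issue-ID line" >&2; status=1; }
    # Gerrit Change-Id: capital I followed by 40 hex digits
    printf '%s\n' "$msg" | grep -Eq '^Change-Id: I[0-9a-f]{40}$' \
        || { echo "missing Change-Id line" >&2; status=1; }
    # Sign-off added by "git commit -s"
    printf '%s\n' "$msg" | grep -Eq '^Signed-off-by: .+ <.+@.+>$' \
        || { echo "missing Signed-off-by line" >&2; status=1; }
    return $status
}

# Usage inside a clone (not run here):
#   git log -1 --format=%B | check_commit_trailers && git review -R
```

The check only tests for presence; it does not enforce the order of the lines.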

If you get a 404 on the commit hooks, reconfigure them as described in https://lists.onap.org/pipermail/onap-discuss/2018-May/009737.html and https://lists.onap.org/g/onap-discuss/topic/unable_to_push_patch_to/28034546?p=,,,20,0,0,0::recentpostdate%2Fsticky,,,20,2,0,28034546

| Code Block |

|---|

|

curl -kLo `git rev-parse --git-dir`/hooks/commit-msg http://gerrit.onap.org/r/tools/hooks/commit-msg; chmod +x `git rev-parse --git-dir`/hooks/commit-msg

git commit --amend

git review -R |

Verify Change Set from another developer

| Code Block |

|---|

|

# clone master, cd into it, pull review

git clone ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics

cd logging-analytics/

git pull ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics refs/changes/93/67093/1 |
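The ref passed to git pull follows Gerrit's layout refs/changes/<last two digits of the change>/<change number>/<patch set>. A small sketch that builds the ref from the change number, so it does not have to be copied from the web UI; gerrit_change_ref is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the Gerrit ref for a change number and patch set.
# Usage: gerrit_change_ref <change-number> [patch-set, default 1]
gerrit_change_ref() {
    change=$1
    patchset=${2:-1}
    # The shard directory is the change number modulo 100, zero-padded to two digits.
    shard=$(printf '%02d' $((change % 100)))
    printf 'refs/changes/%s/%s/%s\n' "$shard" "$change" "$patchset"
}

# The change pulled above:
gerrit_change_ref 67093 1   # prints refs/changes/93/67093/1
```

Inside a clone this could be combined as git pull ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics "$(gerrit_change_ref 67093 1)".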

Shared Change Set across Multiple VMs

| Code Block |

|---|

|

amdocs@ubuntu:~/_dev/20180917_shared_test$ rm -rf logging-analytics/

amdocs@ubuntu:~/_dev/20180917_shared_test$ git clone ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics

amdocs@ubuntu:~/_dev/20180917_shared_test$ cd logging-analytics/

amdocs@ubuntu:~/_dev/20180917_shared_test/logging-analytics$ git review -d 67093

Downloading refs/changes/93/67093/1 from gerrit

Switched to branch "review/michael_o_brien/67093"

|

Work across multiple VMs

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

sudo git clone ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics

cd logging-analytics/

sudo git pull ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics refs/changes/39/55339/1 |

Filter gerrit reviews - Thanks Mandeep Khinda

https://gerrit.onap.org/r/#/q/is:reviewer+AND+status:open+AND+label:Code-Review%253D0

Run/verify jobs: see "Running a Command within Gerrit" on the Configuring Gerrit page

Workstation configuration

Ubuntu 16.04 on VMware Workstation 14 or Fusion 8

Note: do not use the GUI upgrade (it will cause the VM to lock up periodically); run individual apt-get commands instead

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

# start with clean VM, I use root, you can use the recommended non-root account

sudo vi /etc/hosts

# add your hostname to ::1 and 127.0.0.1 or each sudo command will hang for up to 10 sec on DNS resolution especially on ubuntu 18.04

sudo apt-get update

sudo apt-get install openjdk-8-jdk

# not in headless vm

apt-get install ubuntu-desktop

apt-get install git

apt-get install maven

# or

sudo wget http://apache.mirror.gtcomm.net/maven/maven-3/3.5.4/binaries/apache-maven-3.5.4-bin.tar.gz

sudo cp apache-maven-3.5.4-bin.tar.gz /opt

cd /opt

sudo tar -xvf apache-maven-3.5.4-bin.tar.gz

sudo vi /etc/environment

MAVEN_OPTS="-Xms8192m -Djava.net.preferIPv4Stack=true"

# restart the terminal

ubuntu@ip-172-31-78-76:~$ mvn -version

Apache Maven 3.5.4 (1edded0938998edf8bf061f1ceb3cfdeccf443fe; 2018-06-17T18:33:14Z)

Maven home: /opt/apache-maven-3.5.4

Java version: 1.8.0_171, vendor: Oracle Corporation, runtime: /usr/lib/jvm/java-8-openjdk-amd64/jre

sudo vi ~/.ssh/config

Host *

StrictHostKeyChecking no

UserKnownHostsFile=/dev/null

# scp onap gerrit cert into VM from host macbook

obrien:obrienlabs amdocs$ scp ~/.ssh/onap_rsa amdocs@192.168.211.129:~/

# switch to root

sudo su -

root@obriensystemsu0:~# cp /home/amdocs/onap_rsa .

ls /home/amdocs/.m2

cp onap_rsa ~/.ssh/id_rsa

chmod 400 ~/.ssh/id_rsa

# test your gerrit access

sudo git config --global --add gitreview.username michaelobrien

sudo git config --global user.email frank.obrien@amdocs.com

sudo git config --global user.name "Michael OBrien"

sudo git config --global gitreview.remote origin

sudo mkdir log-326-rancher-ver

cd log-326-rancher-ver/

sudo git clone ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics

cd logging-analytics/

sudo vi deploy/rancher/oom_rancher_setup.sh

sudo git add deploy/rancher/oom_rancher_setup.sh .

# setup git-review

sudo apt-get install git-review

sudo git config --global gitreview.remote origin

# upload a patch

sudo git commit -am "update rancher version to 1.6.18"

# 2nd line should be "Issue-ID: LOG-326"

sudo git commit -s --amend

sudo git review

Your change was committed before the commit hook was installed.

Amending the commit to add a gerrit change id.

remote: Processing changes: new: 1, refs: 1, done

remote: New Changes:

remote: https://gerrit.onap.org/r/55299 update rancher version to 1.6.18

remote:

To ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics

* [new branch] HEAD -> refs/publish/master

# see

https://gerrit.onap.org/r/#/c/55299/

# if you get a corrupted filesystem, run "fsck -y /dev/sda1" |
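The /etc/hosts step at the top of the block above is easy to miss. A minimal sketch of a check that the VM hostname is listed on both the 127.0.0.1 and ::1 lines; hosts_maps_loopback is a hypothetical helper name, and the second argument exists only so the check can be pointed at a file other than /etc/hosts.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if <name> appears on both loopback lines.
# Usage: hosts_maps_loopback <name> [hosts-file, default /etc/hosts]
hosts_maps_loopback() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v name="$name" '
        # Only the two loopback entries are of interest.
        $1 == "127.0.0.1" || $1 == "::1" {
            for (i = 2; i <= NF; i++) if ($i == name) found[$1] = 1
        }
        # Exit status 0 when the name was seen on both lines, 1 otherwise.
        END { exit !(found["127.0.0.1"] && found["::1"]) }
    ' "$file"
}

# Usage on the VM (not run here):
#   hosts_maps_loopback "$(hostname)" || echo "add $(hostname) to /etc/hosts"
```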

OSX 10.13

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

# turn off host checking

# install mvn

# download from http://maven.apache.org/download.cgi

sudo chown -R root:wheel apache-maven-3.5.4*

sudo vi ~/.bash_profile

# use

export PATH=$PATH:/Users/amdocs/apache-maven-3.5.4/bin |

Windows 10

On a 64GB Thinkpad P52

get maven http://maven.apache.org/download.cgi

get https://gitforwindows.org/

install PuTTY, run Pageant, and load the .ppk version of your ssh key

set up the Gerrit host entry in your .ssh/config file

PowerShell now has Unix and OpenSSH capabilities built in

or just install the Windows Subsystem for Linux https://docs.microsoft.com/en-us/windows/wsl/install-win10 and the Ubuntu 16 tools https://www.microsoft.com/en-ca/p/ubuntu/9nblggh4msv6?rtc=1&activetab=pivot:overviewtab and skip git-bash, PuTTY and Cygwin.

| Code Block |

|---|

|

michaelobrien@biometrics MINGW64 ~/_dev/intelij/onap_20180916

$ cat ~/.ssh/config

host gerrit.onap.org

Hostname gerrit.onap.org

IdentityFile ~/.ssh/onap_rsa

michaelobrien@biometrics MINGW64 ~/_dev/intelij/onap_20180916

$ git clone ssh://michaelobrien@gerrit.onap.org:29418/logging-analytics

Cloning into 'logging-analytics'...

remote: Counting objects: 1, done

remote: Finding sources: 100% (1/1)

remote: Total 1083 (delta 0), reused 1083 (delta 0)

Receiving objects: 100% (1083/1083), 1.18 MiB | 2.57 MiB/s, done.

Resolving deltas: 100% (298/298), done.

|

Note: some repos are close to the 255-character path limit (an issue only on Windows); see

| Jira |

|---|

| server | ONAP JIRA |

|---|

| serverId | 425b2b0a-557c-3c0c-b515-579789cceedb |

|---|

| key | SDC-1765 |

|---|

|
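To see how close a checkout is to the path limit mentioned in the note above, the paths can be measured before cloning on Windows. A minimal sketch, assuming the 255-character figure from the note as the default threshold; long_paths is a hypothetical helper name, and the length counted includes the directory prefix as it was passed in.

```shell
#!/bin/sh
# Hypothetical helper: list every path under <dir> longer than <limit> characters.
# Usage: long_paths [dir, default .] [limit, default 255]
long_paths() {
    dir=${1:-.}
    limit=${2:-255}
    # find prints one path per line; awk keeps only the lines over the limit.
    find "$dir" -print | awk -v limit="$limit" 'length($0) > limit'
}

# Usage from inside a clone (not run here):
#   long_paths . 200    # paths within 55 characters of the limit
```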

Java Environment

Java 8

| Code Block |

|---|

|

sudo apt-get install openjdk-8-jdk |

Java 9

| Code Block |

|---|

|

apt-cache search openjdk

sudo apt-get install openjdk-9-jdk |

Java 10

| Code Block |

|---|

|

# this one is 3rd party

sudo add-apt-repository ppa:linuxuprising/java

sudo apt update

sudo apt install oracle-java10-installer

# it is an older one

amdocs@obriensystemsu0:~$ java -version

java version "10.0.2" 2018-07-17

Java(TM) SE Runtime Environment 18.3 (build 10.0.2+13)

Java HotSpot(TM) 64-Bit Server VM 18.3 (build 10.0.2+13, mixed mode) |

Java 11

| Code Block |

|---|

|

# came out this week - use windows for now or dockerhub

PS C:\Windows\system32> java -version

java version "11" 2018-09-25

Java(TM) SE Runtime Environment 18.9 (build 11+28)

Java HotSpot(TM) 64-Bit Server VM 18.9 (build 11+28, mixed mode) |

Maven Configuration

Add ~/.m2/settings.xml from the attachment below or from oparent/settings.xml. As of oparent 1.2.1 (20180927) you will also need the following additional profile.

| View file |

|---|

| name | settings.xml |

|---|

| height | 250 |

|---|

|

| Code Block |

|---|

|

<profile>

<id>onap-settings</id>

<properties>

<onap.nexus.url>https://nexus.onap.org</onap.nexus.url>

<onap.nexus.rawrepo.baseurl.upload>https://nexus.onap.org/content/sites/raw</onap.nexus.rawrepo.baseurl.upload>

<onap.nexus.rawrepo.baseurl.download>https://nexus.onap.org/service/local/repositories/raw/content</onap.nexus.rawrepo.baseurl.download>

<onap.nexus.rawrepo.serverid>ecomp-raw</onap.nexus.rawrepo.serverid>

<!-- properties for Nexus Docker registry -->

<onap.nexus.dockerregistry.daily>nexus3.onap.org:10003</onap.nexus.dockerregistry.daily>

<onap.nexus.dockerregistry.release>nexus3.onap.org:10002</onap.nexus.dockerregistry.release>

<docker.pull.registry>nexus3.onap.org:10001</docker.pull.registry>

<docker.push.registry>nexus3.onap.org:10003</docker.push.registry>

</properties>

</profile>

# top profile above the other 8

<activeProfile>onap-settings</activeProfile> |

Test your environment

Verify docker image pushes work

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

cd logging-analytics/reference/logging-docker-root/logging-docker-demo

./build.sh

Sending build context to Docker daemon 18.04 MB

Step 1/2 : FROM tomcat:8.0.48-jre8

8.0.48-jre8: Pulling from library/tomcat

723254a2c089: Pull complete

Digest: sha256:b2cd0873b73036b3442c5794a6f79d554a4df26d95a40f5683383673a98f3330

Status: Downloaded newer image for tomcat:8.0.48-jre8

---> e072422ca96f

Step 2/2 : COPY target/logging-demo-1.2.0-SNAPSHOT.war /usr/local/tomcat/webapps/logging-demo.war

---> a571670e32db

Removing intermediate container 4b9d81978ab3

Successfully built a571670e32db

oomk8s/logging-demo-nbi latest a571670e32db Less than a second ago 576 MB

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username: obrienlabs

Password:

Login Succeeded

The push refers to a repository [docker.io/oomk8s/logging-demo-nbi]

7922ca95f4db: Pushed

7a5faefa0b46: Mounted from library/tomcat

0.0.1: digest: sha256:7c1a3db2a71d47387432d6ca8328eabe9e5353fbc56c53f2a809cd7652c5be8c size: 3048 |

Verify maven builds work

This will test access to nexus.onap.org

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

# get the clone string from https://gerrit.onap.org/r/#/admin/projects/logging-analytics

sudo wget https://jira.onap.org/secure/attachment/10829/settings.xml

mkdir ~/.m2

cp settings.xml ~/.m2

cd logging-analytics/

mvn clean install -U

[INFO] Finished at: 2018-06-22T16:11:47-05:00 |

Helm/Rancher/Kubernetes/Docker stack installation

Either install all the current versions manually or use the script in https://git.onap.org/logging-analytics/tree/deploy/rancher/oom_rancher_setup.sh

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

# fully automated (override 1.6.14 to 1.6.18)

sudo logging-analytics/deploy/rancher/oom_rancher_setup.sh -b master -s 192.168.211.129 -e onap

# or docker only if your kubernetes cluster is in a separate vm

sudo curl https://releases.rancher.com/install-docker/17.03.sh | sh |

Verify Docker can pull from nexus3

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

ubuntu@ip-10-0-0-144:~$ sudo docker login -u docker -p docker nexus3.onap.org:10001

Login Succeeded

ubuntu@ip-10-0-0-144:~$ sudo docker pull docker.elastic.co/beats/filebeat:5.5.0

5.5.0: Pulling from beats/filebeat

e6e5bfbc38e5: Pull complete

ubuntu@ip-10-0-0-144:~$ sudo docker pull nexus3.onap.org:10001/aaionap/haproxy:1.1.0

1.1.0: Pulling from aaionap/haproxy

10a267c67f42: Downloading [==============================================> ] 49.07 MB/52.58 MB |

Download and run the Eclipse installer from https://www.eclipse.org/downloads/download.php?file=/oomph/epp/oxygen/R2/eclipse-inst-linux64.tar.gz

| Code Block |

|---|

|

# run as root

sudo su -

tar -xvf eclipse-inst-linux64.tar.gz

cd eclipse-installer

./eclipse-inst |

Increase the heap allocation to -Xmx4096m in eclipse.ini

Start Eclipse with sudo /root/eclipse/jee-oxygen/eclipse/eclipse &

IntelliJ

download from https://www.jetbrains.com/idea/download/index.html#section=linux

| Code Block |

|---|

|

tar -xvf ideaIC-2018.2.3.tar.gz

cd idea-IC-182.4323.46/

cat Install-Linux-tar.txt

cd bin

./idea.sh

|

Cloning All of ONAP

optional

Use the script on https://github.com/obrienlabs/onap-root/blob/master/git_recurse.sh

IntelliJ

Add git config, add jdk reference, add maven run target

Run the maven build for logging-analytics in IntelliJ


Developer Testing

Sonar

Having trouble getting the "run-sonar" command to run sonar - it skips the modules in the pom.

Looking at verifying sonar locally using eclemma

Kubernetes DevOps

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

Installing a pod

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

# automatically via cd.sh in LOG-326

# get the dev.yaml and set any pods you want up to true as well as fill out the openstack parameters

sudo wget https://git.onap.org/oom/plain/kubernetes/onap/resources/environments/dev.yaml

sudo cp logging-analytics/deploy/cd.sh .

# or

# manually

cd oom/kubernetes/

sudo make clean

sudo make all

sudo make onap

sudo helm install local/onap -n onap --namespace onap -f onap/resources/environments/disable-allcharts.yaml --set log.enabled=true

# adding another (so)

sudo helm upgrade onap local/onap --namespace onap -f onap/resources/environments/disable-allcharts.yaml --set so.enabled=true --set log.enabled=true |

Test DNS URLS in the kubernetes ONAP namespace

| Code Block |

|---|

|

# test urls in the robot container

wget http://pomba-sdcctxbuilder.onap:9530/sdccontextbuilder/health

wget http://pomba-networkdiscoveryctxbuilder.onap:9530/ndcontextbuilder/health |

Override global policy

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

# override global docker pull policy for a single component

# set in oom/kubernetes/onap/values.yaml

# use global.pullPolicy in your -f yaml or a --set

|

Exec into a container of a pod with multiple containers

| Code Block |

|---|

|

# for

onap logdemonode-logdemonode-5c8bffb468-dhzcc 2/2 Running 0 1m

# use

kubectl exec -it logdemonode-logdemonode-5c8bffb468-dhzcc -n onap -c logdemonode bash |

Push a file into a Kubernetes container/pod

| Code Block |

|---|

|

# copy files from the vm to the robot container - to avoid building a new robot image

root@ubuntu:~/_dev/62405_logback/testsuite/robot/testsuites# kubectl cp health-check.robot onap-robot-7c84f54558-f8mw7: -n onap

root@ubuntu:~/_dev/62405_logback/testsuite/robot/testsuites# kubectl cp ../resources/pomba_interface.robot onap-robot-7c84f54558-f8mw7: -n onap

# move the files in the robot container to the proper dir

root@onap-robot-7c84f54558-f8mw7:/# cp health-check.robot /var/opt/OpenECOMP_ETE/robot/testsuites/

root@onap-robot-7c84f54558-f8mw7:/# ls

bin boot dev etc health-check.robot home lib lib64 media mnt opt pomba_interface.robot proc root run sbin share srv sys tmp usr var

root@onap-robot-7c84f54558-f8mw7:/# cp pomba_interface.robot /var/opt/OpenECOMP_ETE/robot/resources/

# retest health

root@ubuntu:~/_dev/62405_logback/oom/kubernetes/robot# ./ete-k8s.sh onap health

# and directly in the robot container

wget http://pomba-sdcctxbuilder.onap:9530/sdccontextbuilder/health

wget http://pomba-networkdiscoveryctxbuilder.onap:9530/ndcontextbuilder/health |

Restarting a container

Restarting a pod

If you change configuration such as the logback.xml in a pod, or would like to restart an entire pod such as the log and portal pods, disable the component and then enable it again:

| Code Block |

|---|

| language | bash |

|---|

| theme | Midnight |

|---|

|

cd oom/kubernetes

# do a make if anything is modified in your charts

sudo make all

#sudo make onap

ubuntu@ip-172-31-19-23:~/oom/kubernetes$ sudo helm upgrade -i onap local/onap --namespace onap --set log.enabled=false

# wait and check in another terminal for all containers to terminate

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-log

onap onap-log-elasticsearch-7557486bc4-5mng9 0/1 CrashLoopBackOff 9 29m

onap onap-log-kibana-fc88b6b79-nt7sd 1/1 Running 0 35m

onap onap-log-logstash-c5z4d 1/1 Terminating 0 4h

onap onap-log-logstash-ftxfz 1/1 Terminating 0 4h

onap onap-log-logstash-gl59m 1/1 Terminating 0 4h

onap onap-log-logstash-nxsf8 1/1 Terminating 0 4h

onap onap-log-logstash-w8q8m 1/1 Terminating 0 4h

sudo helm upgrade -i onap local/onap --namespace onap --set portal.enabled=false

sudo vi portal/charts/portal-sdk/resources/config/deliveries/properties/ONAPPORTALSDK/logback.xml

sudo make portal

sudo make onap

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-log

sudo helm upgrade -i onap local/onap --namespace onap --set log.enabled=true

sudo helm upgrade -i onap local/onap --namespace onap --set portal.enabled=true

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-log

onap onap-log-elasticsearch-7557486bc4-2jd65 0/1 Init:0/1 0 31s

onap onap-log-kibana-fc88b6b79-5xqg4 0/1 Init:0/1 0 31s

onap onap-log-logstash-5vq82 0/1 Init:0/1 0 31s

onap onap-log-logstash-gvr9z 0/1 Init:0/1 0 31s

onap onap-log-logstash-qqzq5 0/1 Init:0/1 0 31s

onap onap-log-logstash-vbp2x 0/1 Init:0/1 0 31s

onap onap-log-logstash-wr9rd 0/1 Init:0/1 0 31s

ubuntu@ip-172-31-19-23:~$ kubectl get pods --all-namespaces | grep onap-portal

onap onap-portal-app-8486dc7ff8-nbps7 0/2 Init:0/1 0 9m

onap onap-portal-cassandra-8588fbd698-4wthv 1/1 Running 0 9m

onap onap-portal-db-7d6b95cd94-9x4kf 0/1 Running 0 9m

onap onap-portal-db-config-dpqkq 0/2 Init:0/1 0 9m

onap onap-portal-sdk-77cd558c98-5255r 0/2 Init:0/1 0 9m

onap onap-portal-widget-6469f4bc56-g8s62 0/1 Init:0/1 0 9m

onap onap-portal-zookeeper-5d8c598c4c-czpnz 1/1 Running 0 9m |
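The "wait and check in another terminal" step above can be scripted as a polling loop. A minimal sketch under these assumptions: wait_until_gone is a hypothetical helper name, POLL_SECONDS is a hypothetical variable for the delay between tries, and a listing command that fails (and so prints nothing) is treated the same as "no matching pods".

```shell
#!/bin/sh
# Hypothetical helper: re-run a listing command until its output no longer
# contains <pattern>, or until <tries> attempts have been used.
# Usage: wait_until_gone <pattern> <tries> <command> [args...]
wait_until_gone() {
    pattern=$1
    tries=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        # Success as soon as the listing has no line matching the pattern.
        if ! "$@" | grep -q "$pattern"; then
            return 0
        fi
        tries=$((tries - 1))
        # Pause between polls; defaults to 5 seconds.
        sleep "${POLL_SECONDS:-5}"
    done
    return 1
}

# Usage between the "enabled=false" and "enabled=true" upgrades (not run here):
#   wait_until_gone onap-log 60 kubectl get pods --all-namespaces
```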

downgrade docker if required

| Code Block |

|---|

sudo apt-get autoremove -y docker-engine |

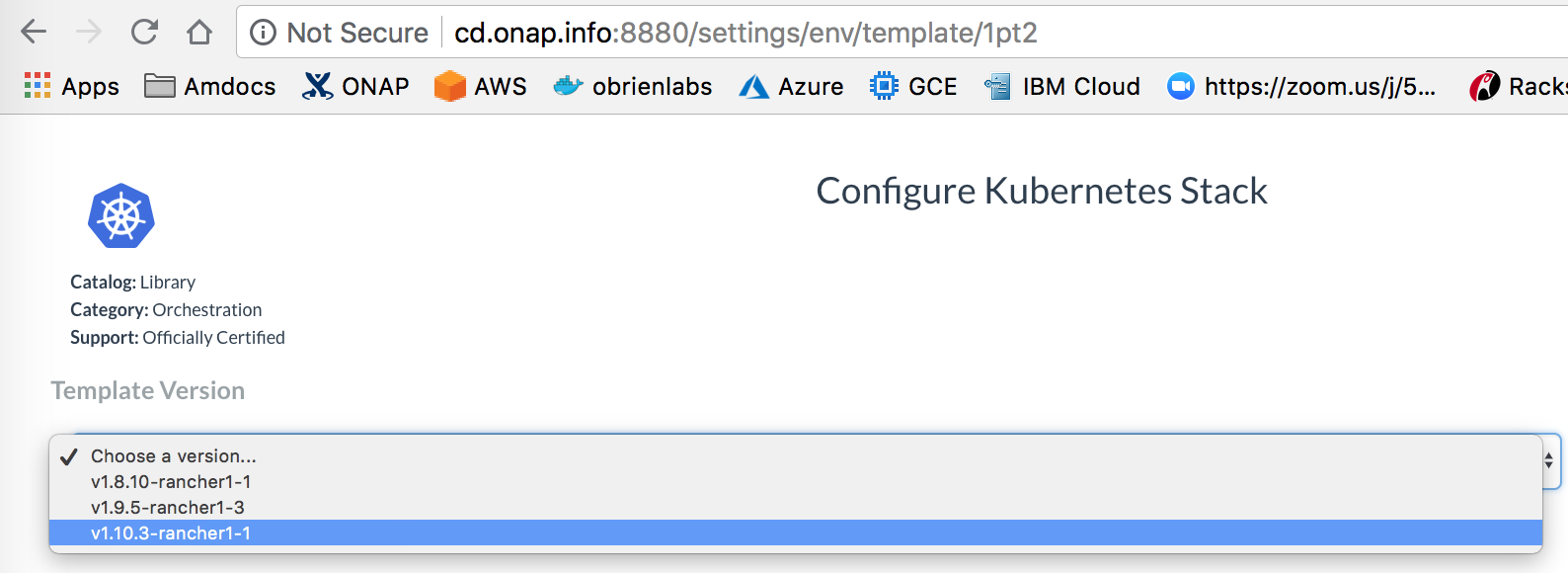

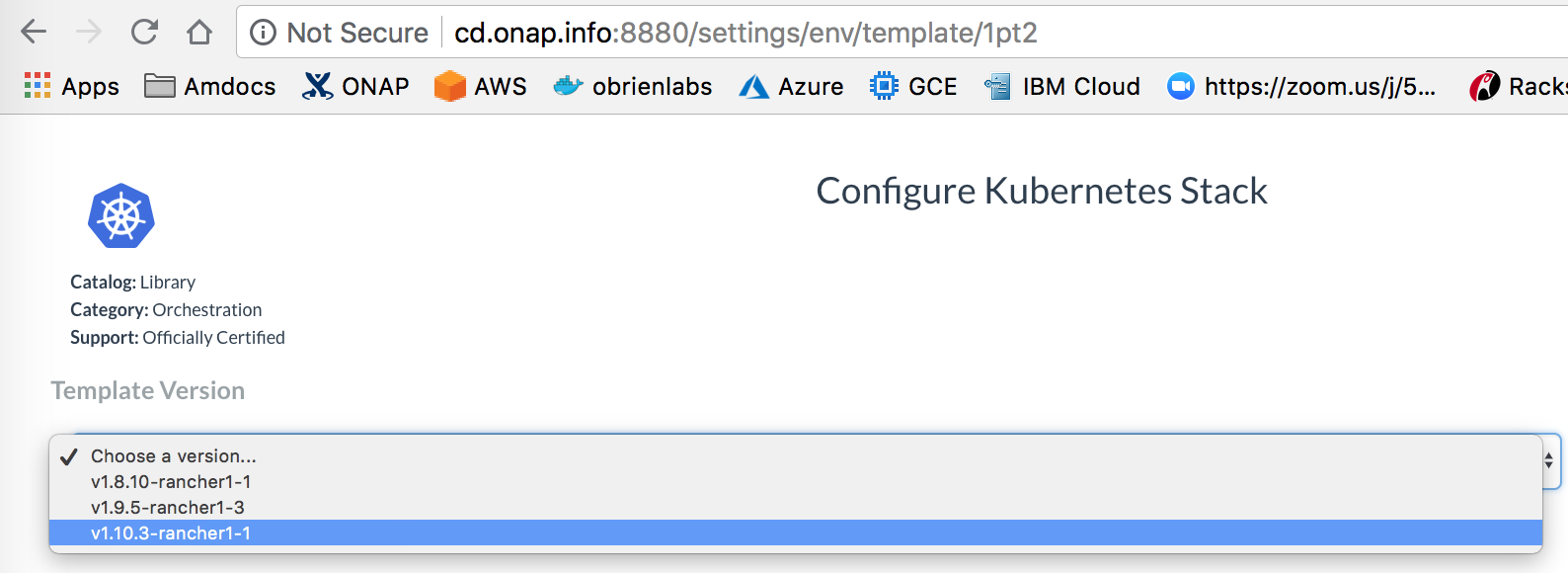

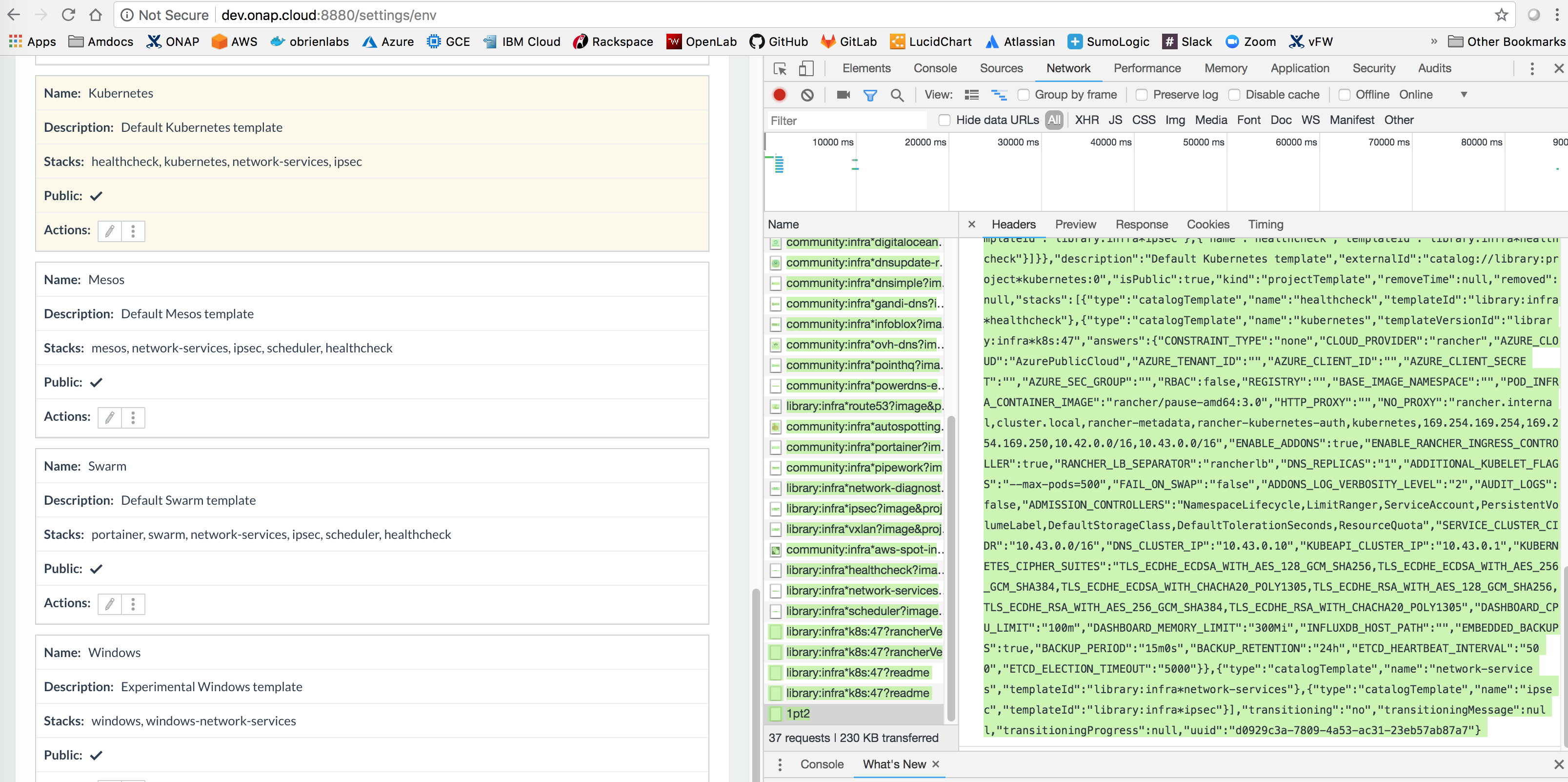

Change max-pods from default 110 pod limit

Rancher ships with a 110 pod limit - you can override this on the kubernetes template for 1.10

https://lists.onap.org/g/onap-discuss/topic/oom_110_kubernetes_pod/25213556?p=,,,20,0,0,0::recentpostdate%2Fsticky,,,20,2,0,25213556

Manual procedure: change the kubernetes template (1pt2) before using it to create an environment (1a7)

add --max-pods=500 to the "Additional Kubelet Flags" box on the v1.10.13 version of the kubernetes template from the "Manage Environments" dropdown on the left of the 8880 rancher console.

| View file |

|---|

| name | 20180905_rancher_increase_110_pod_limit.mp4 |

|---|

| height | 250 |

|---|

|

| Jira |

|---|

| server | ONAP JIRA |

|---|

| serverId | 425b2b0a-557c-3c0c-b515-579789cceedb |

|---|

| key | OOM-1137 |

|---|

|

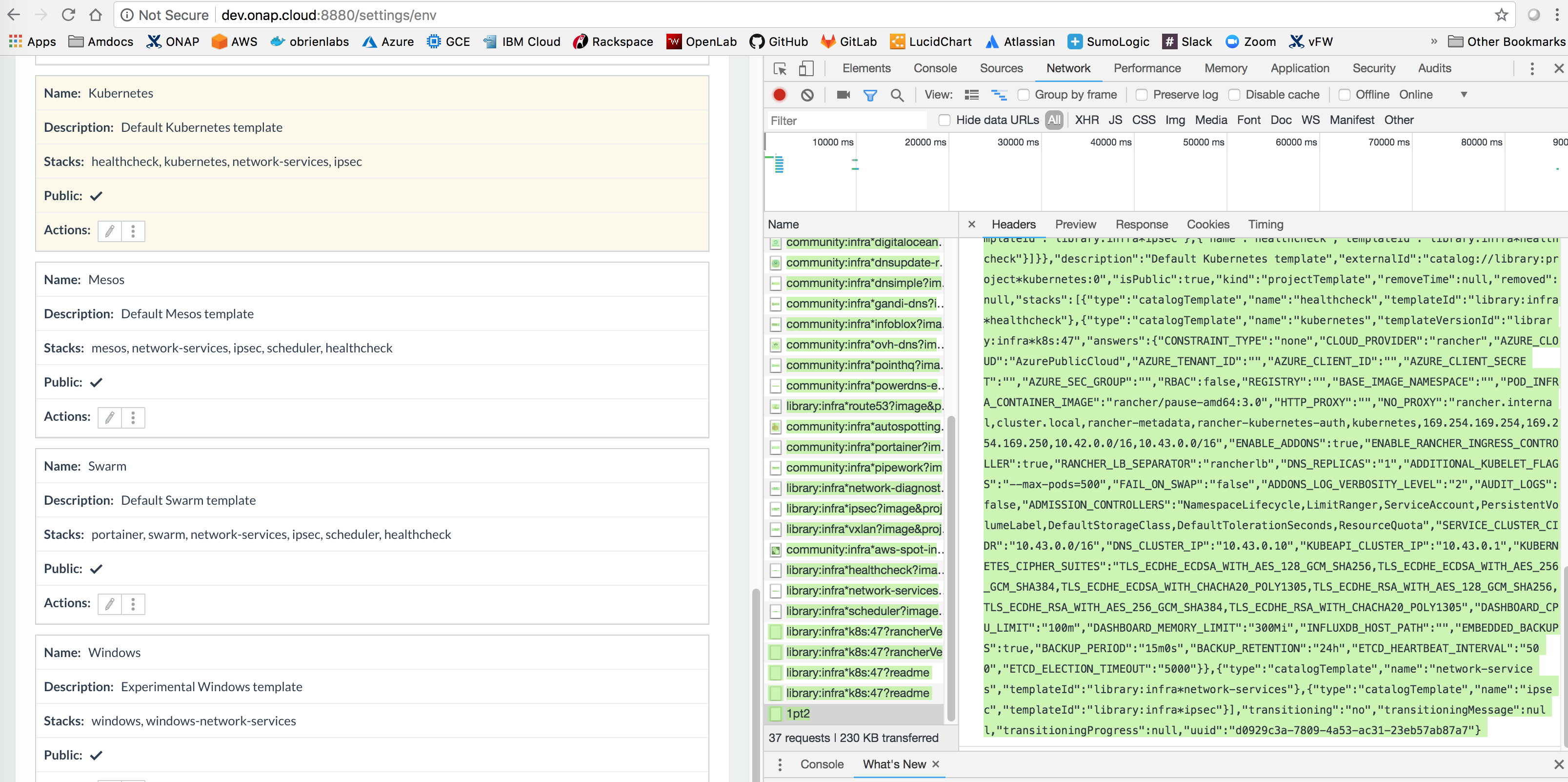

Or capture the output of the REST PUT call - and add around line 111 of the script https://git.onap.org/logging-analytics/tree/deploy/rancher/oom_rancher_setup.sh#n111

Automated - ongoing

| Code Block |

|---|

|

ubuntu@ip-172-31-27-183:~$ curl 'http://127.0.0.1:8880/v2-beta/projecttemplates/1pt2' --data-binary '{"id":"1pt2","type":"projectTemplate","baseType":"projectTemplate","name":"Kubernetes","state":"active","accountId":null,"created":"2018-09-05T14:12:24Z","createdTS":1536156744000,"data":{"fields":{"stacks":[{"name":"healthcheck","templateId":"library:infra*healthcheck"},{"answers":{"CONSTRAINT_TYPE":"none","CLOUD_PROVIDER":"rancher","AZURE_CLOUD":"AzurePublicCloud","AZURE_TENANT_ID":"","AZURE_CLIENT_ID":"","AZURE_CLIENT_SECRET":"","AZURE_SEC_GROUP":"","RBAC":false,"REGISTRY":"","BASE_IMAGE_NAMESPACE":"","POD_INFRA_CONTAINER_IMAGE":"rancher/pause-amd64:3.0","HTTP_PROXY":"","NO_PROXY":"rancher.internal,cluster.local,rancher-metadata,rancher-kubernetes-auth,kubernetes,169.254.169.254,169.254.169.250,10.42.0.0/16,10.43.0.0/16","ENABLE_ADDONS":true,"ENABLE_RANCHER_INGRESS_CONTROLLER":true,"RANCHER_LB_SEPARATOR":"rancherlb","DNS_REPLICAS":"1","ADDITIONAL_KUBELET_FLAGS":"","FAIL_ON_SWAP":"false","ADDONS_LOG_VERBOSITY_LEVEL":"2","AUDIT_LOGS":false,"ADMISSION_CONTROLLERS":"NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,ResourceQuota","SERVICE_CLUSTER_CIDR":"10.43.0.0/16","DNS_CLUSTER_IP":"10.43.0.10","KUBEAPI_CLUSTER_IP":"10.43.0.1","KUBERNETES_CIPHER_SUITES":"TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305","DASHBOARD_CPU_LIMIT":"100m","DASHBOARD_MEMORY_LIMIT":"300Mi","INFLUXDB_HOST_PATH":"","EMBEDDED_BACKUPS":true,"BACKUP_PERIOD":"15m0s","BACKUP_RETENTION":"24h","ETCD_HEARTBEAT_INTERVAL":"500","ETCD_ELECTION_TIMEOUT":"5000"},"name":"kubernetes","templateVersionId":"library:infra*k8s:47"},{"name":"network-services","templateId":"library:infra*network-services"},{"name":"ipsec","templateId":"library:infra*ipsec"}]}},"description"
:"Default Kubernetes template","externalId":"catalog://library:project*kubernetes:0","isPublic":true,"kind":"projectTemplate","removeTime":null,"removed":null,"stacks":[{"type":"catalogTemplate","name":"healthcheck","templateId":"library:infra*healthcheck"},{"type":"catalogTemplate","answers":{"CONSTRAINT_TYPE":"none","CLOUD_PROVIDER":"rancher","AZURE_CLOUD":"AzurePublicCloud","AZURE_TENANT_ID":"","AZURE_CLIENT_ID":"","AZURE_CLIENT_SECRET":"","AZURE_SEC_GROUP":"","RBAC":false,"REGISTRY":"","BASE_IMAGE_NAMESPACE":"","POD_INFRA_CONTAINER_IMAGE":"rancher/pause-amd64:3.0","HTTP_PROXY":"","NO_PROXY":"rancher.internal,cluster.local,rancher-metadata,rancher-kubernetes-auth,kubernetes,169.254.169.254,169.254.169.250,10.42.0.0/16,10.43.0.0/16","ENABLE_ADDONS":true,"ENABLE_RANCHER_INGRESS_CONTROLLER":true,"RANCHER_LB_SEPARATOR":"rancherlb","DNS_REPLICAS":"1","ADDITIONAL_KUBELET_FLAGS":"--max-pods=600","FAIL_ON_SWAP":"false","ADDONS_LOG_VERBOSITY_LEVEL":"2","AUDIT_LOGS":false,"ADMISSION_CONTROLLERS":"NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,ResourceQuota","SERVICE_CLUSTER_CIDR":"10.43.0.0/16","DNS_CLUSTER_IP":"10.43.0.10","KUBEAPI_CLUSTER_IP":"10.43.0.1","KUBERNETES_CIPHER_SUITES":"TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305","DASHBOARD_CPU_LIMIT":"100m","DASHBOARD_MEMORY_LIMIT":"300Mi","INFLUXDB_HOST_PATH":"","EMBEDDED_BACKUPS":true,"BACKUP_PERIOD":"15m0s","BACKUP_RETENTION":"24h","ETCD_HEARTBEAT_INTERVAL":"500","ETCD_ELECTION_TIMEOUT":"5000"},"name":"kubernetes","templateVersionId":"library:infra*k8s:47"},{"type":"catalogTemplate","name":"network-services","templateId":"library:infra*network-services"},{"type":"catalogTemplate","name":"ipsec","templateId":"library:infra*ipsec"}],"transitioning":"no","transitioningMessag
e":null,"transitioningProgress":null,"uuid":null}' --compressed

{"id":"9107b9ce-0b61-4c22-bc52-f147babb0ba7","type":"error","links":{},"actions":{},"status":405,"code":"Method not allowed","message":"Method not allowed","detail":null,"baseType":"error"} |
Note: the 405 response above is consistent with curl defaulting to POST when --data-binary is given without -X. The call being reproduced is a PUT, so adding -X PUT is the likely correction (not verified here).

Results

Single AWS 244G 32vCore VM with 110 pod limit workaround - 164 pods (including both secondary DCAEGEN2 orchestrations at 30 and 55 min) - most of the remaining 8 container failures are known/in-progress issues.

| Code Block |

|---|

|

ubuntu@ip-172-31-20-218:~$ free

total used free shared buff/cache available

Mem: 251754696 111586672 45000724 193628 95167300 137158588

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep onap | wc -l

164

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep onap | grep -E '1/1|2/2' | wc -l

155

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep -E '0/|1/2' | wc -l

8

ubuntu@ip-172-31-20-218:~$ kubectl get pods --all-namespaces | grep -E '0/|1/2'

onap dep-dcae-ves-collector-59d4ff58f7-94rpq 1/2 Running 0 4m

onap onap-aai-champ-68ff644d85-rv7tr 0/1 Running 0 59m

onap onap-aai-gizmo-856f86d664-q5pvg 1/2 CrashLoopBackOff 10 59m

onap onap-oof-85864d6586-zcsz5 0/1 ImagePullBackOff 0 59m

onap onap-pomba-kibana-d76b6dd4c-sfbl6 0/1 Init:CrashLoopBackOff 8 59m

onap onap-pomba-networkdiscovery-85d76975b7-mfk92 1/2 CrashLoopBackOff 11 59m

onap onap-pomba-networkdiscoveryctxbuilder-c89786dfc-qnlx9 1/2 CrashLoopBackOff 10 59m

onap onap-vid-84c88db589-8cpgr 1/2 CrashLoopBackOff 9 59m

|

Operations

Get failed/pending containers

| Code Block |

|---|

| theme | Midnight |

|---|

| linenumbers | true |

|---|

|

kubectl get pods --all-namespaces | grep -E "0/|1/2" | wc -l |
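The grep pattern above also matches a healthy pod such as 10/10 (because of the "0/") and misses cases like 2/3. A minimal sketch of a stricter filter that compares the two halves of the READY column; not_ready_pods is a hypothetical helper name, and it assumes the --all-namespaces layout where READY is the third column. Pods from completed jobs (0/1 Completed) are still listed.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get pods --all-namespaces" output on stdin
# and print namespace, name, READY and STATUS for pods that are not fully ready.
not_ready_pods() {
    awk '$3 ~ /^[0-9]+\/[0-9]+$/ {
        # READY looks like "1/2": containers ready / containers expected.
        split($3, ready, "/")
        if (ready[1] != ready[2]) print $1, $2, $3, $4
    }'
}

# Usage (not run here):
#   kubectl get pods --all-namespaces | not_ready_pods
#   kubectl get pods --all-namespaces | not_ready_pods | wc -l
```

The header line is skipped automatically because its third field is the word READY rather than a ratio.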

| Code Block |

|---|

kubectl cluster-info

# get pods/containers

kubectl get pods --all-namespaces

# get port mappings

kubectl get services --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE

default nginx-1389790254-lgkz3 1/1 Running 1 5d

kube-system heapster-4285517626-x080g 1/1 Running 1 6d

kube-system kube-dns-638003847-tst97 3/3 Running 3 6d

kube-system kubernetes-dashboard-716739405-fnn3g 1/1 Running 2 6d

kube-system monitoring-grafana-2360823841-hr824 1/1 Running 1 6d

kube-system monitoring-influxdb-2323019309-k7h1t 1/1 Running 1 6d

kube-system tiller-deploy-737598192-x9wh5 1/1 Running 1 6d

# open a shell in a pod

kubectl -n default exec -it nginx-1389790254-lgkz3 /bin/bash

# get logs

kubectl -n default logs -f nginx-1389790254-lgkz3 |

Exec

kubectl -n onap-aai exec -it aai-resources-1039856271-d9bvq bash

Bounce/Fix a failed container

Periodically one of the higher-level containers in a dependency tree will not be restarted in time to pick up its running child containers; usually this is the kibana container.

Fix this, or any failed container, by deleting the pod in question; kubernetes will bring up a replacement.

| Code Block |

|---|

|

root@a-onap-auto-20180412-ref:~# kubectl get services --all-namespaces | grep log

onap dev-vfc-catalog ClusterIP 10.43.210.8 <none> 8806/TCP 5d

onap log-es NodePort 10.43.77.87 <none> 9200:30254/TCP 5d

onap log-es-tcp ClusterIP 10.43.159.93 <none> 9300/TCP 5d

onap log-kibana NodePort 10.43.41.102 <none> 5601:30253/TCP 5d

onap log-ls NodePort 10.43.180.165 <none> 5044:30255/TCP 5d

onap log-ls-http ClusterIP 10.43.13.180 <none> 9600/TCP 5d

root@a-onap-auto-20180412-ref:~# kubectl get pods --all-namespaces | grep log

onap dev-log-elasticsearch-66cdc4f855-wmpkz 1/1 Running 0 5d

onap dev-log-kibana-5b6f86bcb4-drpzq 0/1 Running 1076 5d

onap dev-log-logstash-6d9fdccdb6-ngq2f 1/1 Running 0 5d

onap dev-vfc-catalog-7d89bc8b9d-vxk74 2/2 Running 0 5d

root@a-onap-auto-20180412-ref:~# kubectl delete pod dev-log-kibana-5b6f86bcb4-drpzq -n onap

pod "dev-log-kibana-5b6f86bcb4-drpzq" deleted

root@a-onap-auto-20180412-ref:~# kubectl get pods --all-namespaces | grep log

onap dev-log-elasticsearch-66cdc4f855-wmpkz 1/1 Running 0 5d

onap dev-log-kibana-5b6f86bcb4-drpzq 0/1 Terminating 1076 5d

onap dev-log-kibana-5b6f86bcb4-gpn2m 0/1 Pending 0 12s

onap dev-log-logstash-6d9fdccdb6-ngq2f 1/1 Running 0 5d

onap dev-vfc-catalog-7d89bc8b9d-vxk74 2/2 Running 0 5d |

A helm namespace delete, a kubectl delete, or a helm purge may not remove everything because of hanging PVs. In that case use:

| Code Block |

|---|

|

#after a kubectl delete namespace onap

sudo helm delete --purge onap

# force-delete a stuck pod (thanks melliott)

kubectl delete pods <pod> --grace-period=0 --force -n onap |

Remove a Deployment

https://git.onap.org/logging-analytics/tree/deploy/cd.sh#n57

| Code Block |

|---|

|

kubectl delete namespace onap

sudo helm delete --purge onap

kubectl delete pv --all

kubectl delete pvc --all

kubectl delete secrets --all

kubectl delete clusterrolebinding --all |

Rotate Logs

Find the directories that use the most space:

du --max-depth=1 | sort -nr

Persistent Volumes

Several applications in ONAP require persistent configuration or storage outside of the stateless docker containers managed by Kubernetes. In this case Kubernetes can act as a direct wrapper of native docker volumes or provide its own extended dynamic persistence for use cases where we are running scaled pods on multiple hosts.

https://kubernetes.io/docs/concepts/storage/persistent-volumes/

The SDNC clustering poc - https://gerrit.onap.org/r/#/c/25467/23

For example, the following has a patch that exposes a directory into the container just like a docker volume or a volume in docker-compose. The issue here is mixing emptyDir (exposing directories between containers) with exposing directories outside to the FS/NFS.

https://jira.onap.org/browse/LOG-52

This is only one way to do a static PV in K8S

https://jira.onap.org/secure/attachment/10436/LOG-50-expose_mso_logs.patch

Token

Thanks Joey

| Code Block |

|---|

|

root@ip-172-31-27-86:~# kubectl describe secret $(kubectl get secrets | grep default | cut -f1 -d ' ')

Name: default-token-w1jq0

Namespace: default

Labels: <none>

Annotations: kubernetes.io/service-account.name=default

kubernetes.io/service-account.uid=478eae11-f0f4-11e7-b936-022346869a82

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 7 bytes

token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZmF1bHQtdG9rZW4tdzFqcTAiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGVmYXVsdCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ3OGVhZTExLWYwZjQtMTFlNy1iOTM2LTAyMjM0Njg2OWE4MiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OmRlZmF1bHQifQ.Fjv6hA1Kzurr-Cie5EZmxMOoxm-3Uh3zMGvoA4Xu6h2U1-NBp_fw_YW7nSECnI7ttGz67mxAjknsgfze-1JtgbIUtyPP31Hp1iscaieu5r4gAc_booBdkV8Eb8gia6sF84Ye10lsS4nkmmjKA30BdqH9qjWspChLPdGdG3_RmjApIHEOjCqQSEHGBOMvY98_uO3jiJ_XlJBwLL4uydjhpoANrS0xlS_Evn0evLdits7_piklbc-uqKJBdZ6rWyaRbkaIbwNYYhg7O-CLlUVuExynAAp1J7Mo3qITNV_F7f4l4OIzmEf3XLho4a1KIGb76P1AOvSrXgTzBq0Uvh5fUw |

Auto Scaling

Using the example on page 122 of Kubernetes Up & Running.

| Code Block |

|---|

kubectl run nginx --image=nginx:1.7.12

kubectl get deployments nginx

kubectl scale deployments nginx --replicas=3

kubectl get deployments nginx

kubectl get replicasets --selector=run=nginx

kubectl get pods --all-namespaces

kubectl scale deployments nginx --replicas=64

|

Developer Deployment

running parts of onap using multiple yaml overrides

| Code Block |

|---|

|

# Order of multiple -f yaml overrides (discussed with Mike a couple of months ago): the files are applied in command-line order, and values in later files take precedence over earlier ones. Until now I only ran with -f disable-allcharts.yaml and some --set flags; verified in my adjusted cd.sh that the following works (aai comes up), so the command-line order for -f below looks right.

sudo helm deploy onap local/onap --namespace $ENVIRON -f onap/resources/environments/disable-allcharts.yaml -f ~/dev.yaml --set aai.enabled=true |

Deployment Integrity

ELK containers

Logstash port

Elasticsearch port

| Code Block |

|---|

|

# get pod names and the actual VM that any pod is on

ubuntu@ip-10-0-0-169:~$ kubectl get pods --all-namespaces -o wide | grep log-

onap onap-log-elasticsearch-756cfb559b-wk8c6 1/1 Running 0 2h 10.42.207.254 ip-10-0-0-227.us-east-2.compute.internal

onap onap-log-kibana-6bb55fc66b-kxtg6 0/1 Running 16 1h 10.42.54.76 ip-10-0-0-111.us-east-2.compute.internal

onap onap-log-logstash-689ccb995c-7zmcq 1/1 Running 0 2h 10.42.166.241 ip-10-0-0-111.us-east-2.compute.internal

onap onap-vfc-catalog-5fbdfc7b6c-xc84b 2/2 Running 0 2h 10.42.206.141 ip-10-0-0-227.us-east-2.compute.internal

# get nodeport

ubuntu@ip-10-0-0-169:~$ kubectl get services --all-namespaces -o wide | grep log-

onap log-es NodePort 10.43.82.53 <none> 9200:30254/TCP 2h app=log-elasticsearch,release=onap

onap log-es-tcp ClusterIP 10.43.90.198 <none> 9300/TCP 2h app=log-elasticsearch,release=onap

onap log-kibana NodePort 10.43.167.146 <none> 5601:30253/TCP 2h app=log-kibana,release=onap

onap log-ls NodePort 10.43.250.182 <none> 5044:30255/TCP 2h app=log-logstash,release=onap

onap log-ls-http ClusterIP 10.43.81.173 <none> 9600/TCP 2h app=log-logstash,release=onap

# check nodeport outside container

ubuntu@ip-10-0-0-169:~$ curl ip-10-0-0-111.us-east-2.compute.internal:30254

{

"name" : "-pEf9q9",

"cluster_name" : "onap-log",

"cluster_uuid" : "ferqW-rdR_-Ys9EkWw82rw",

"version" : {

"number" : "5.5.0",

"build_hash" : "260387d",

"build_date" : "2017-06-30T23:16:05.735Z",

"build_snapshot" : false,

"lucene_version" : "6.6.0"

},

"tagline" : "You Know, for Search"

}

# check inside docker container - for reference

ubuntu@ip-10-0-0-169:~$ kubectl exec -it -n onap onap-log-elasticsearch-756cfb559b-wk8c6 bash

[elasticsearch@onap-log-elasticsearch-756cfb559b-wk8c6 ~]$ curl http://127.0.0.1:9200

{

"name" : "-pEf9q9",

# check indexes

ubuntu@ip-172-31-54-73:~$ curl http://dev.onap.info:30254/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

yellow open logstash-2018.07.23 knMYfzh2Rdm_d5ZQ_ij00A 5 1 1953323 0 262mb 262mb

yellow open logstash-2018.07.26 DRAjpsTPQOaXv1O7XP5Big 5 1 322022 0 100.2mb 100.2mb

yellow open logstash-2018.07.24 gWR7l9LwSBOYtsGRs18A_Q 5 1 90200 0 29.1mb 29.1mb

yellow open .kibana Uv7razLpRaC5OACPl6IvdA 1 1 2 0 10.5kb 10.5kb

yellow open logstash-2018.07.27 MmqCwv1ISliZS79mvFSHSg 5 1 20406 0 7.2mb 7.2mb

# check records in elasticsearch

ubuntu@ip-172-31-54-73:~$ curl http://dev.onap.info:30254/_search?q=*

{"took":3,"timed_out":false,"_shards":{"total":21,"successful":21,"failed":0},"hits":{"total":2385953,"max_score":1.0,"hits":[{"_index":".kibana","_type":"index-pattern","_id":"logstash-*","_score":1.0,"_source":{"title":"logstash-*","timeFieldName":"@timestamp","notExpandable":true,"fields":"[{\"name\":\"@timestamp\",\"type\":\"date\",\"count\":0,\ |
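
The index listing and the search result above can be cross-checked: the docs.count values of the five listed indices add up to 2385953, the same number reported as the hit total by `_search?q=*`. The following is a minimal sketch of that check, assuming the `_cat/indices?v` column layout shown above (docs.count in the 7th column, all indices open). The function name `sum_index_docs` is hypothetical.

```shell
# Sum the docs.count column (7th field) of `_cat/indices?v` output read on stdin.
# The header row is skipped by matching its first field.
# Assumption: every index is open, so each data row has the same columns as in the capture above.
sum_index_docs() {
  awk '$1 != "health" { total += $7 } END { print total + 0 }'
}
```

A possible use is `curl -s 'http://dev.onap.info:30254/_cat/indices?v' | sum_index_docs`. Quoting the URL keeps the shell from treating the `?` as a glob character.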

Kibana port

ONAP Ports

| component | port | example |

|---|---|---|

| consul | 30270 | /ui/#/dc1/services |

Running Robot Commands

Make sure the robot container is deployed. You can run the script directly from the kubernetes folder, outside of the container - see https://git.onap.org/logging-analytics/tree/deploy/cd.sh#n297

| Code Block |

|---|

|

cd oom/kubernetes/robot

./ete-k8s.sh $ENVIRON health |

However, if you need to adjust files inside the container without rebuilding the Docker image, do the following:

| Code Block |

|---|

|

root@ubuntu:~/_dev/62405_logback/oom/kubernetes# kubectl exec -it onap-robot-7c84f54558-fxmvd -n onap bash

root@onap-robot-7c84f54558-fxmvd:/# cd /var/opt/OpenECOMP_ETE/robot/resources

root@onap-robot-7c84f54558-fxmvd:/var/opt/OpenECOMP_ETE/robot/resources# ls

aaf_interface.robot browser_setup.robot demo_preload.robot json_templater.robot music policy_interface.robot sms_interface.robot test_templates

aai clamp_interface.robot dr_interface.robot log_interface.robot nbi_interface.robot portal-sdk so_interface.robot vid

appc_interface.robot cli_interface.robot global_properties.robot mr_interface.robot oof_interface.robot portal_interface.robot ssh vnfsdk_interface.robot

asdc_interface.robot dcae_interface.robot heatbridge.robot msb_interface.robot openstack sdngc_interface.robot stack_validation

|

Pairwise Testing

AAI and Log Deployment

AAI, Log and Robot will fit on a 16G VM

Deployment Issues

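Ran into an issue running champ on a 16G VM (AAI/LOG/Robot only) with the master 20180509 build, but it runs fine on a normal cluster with the rest of ONAP. In the describe output further down, the aai-champ-readiness init container waits on aai-resources and message-router-kafka, and no message-router pod appears in the pod list of the reduced deployment.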

| Code Block |

|---|

|

19:56:05 onap onap-aai-champ-85f97f5d7c-zfkdp 1/1 Running 0 2h 10.42.234.99 ip-10-0-0-227.us-east-2.compute.internal |

http://jenkins.onap.info/job/oom-cd-master/2915/consoleFull

Jira (ONAP JIRA): OOM-1015

| Code Block |

|---|

|

Every 2.0s: kubectl get pods --all-namespaces Thu May 10 13:52:47 2018

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system heapster-76b8cd7b5-9dg8j 1/1 Running 0 10h

kube-system kube-dns-5d7b4487c9-fj2wv 3/3 Running 2 10h

kube-system kubernetes-dashboard-f9577fffd-c9nwp 1/1 Running 0 10h

kube-system monitoring-grafana-997796fcf-jdx8q 1/1 Running 0 10h

kube-system monitoring-influxdb-56fdcd96b-zpjmz 1/1 Running 0 10h

kube-system tiller-deploy-54bcc55dd5-mvbb4 1/1 Running 2 10h

onap dev-aai-babel-6b79c6bc5b-7srxz 2/2 Running 0 10h

onap dev-aai-cassandra-0 1/1 Running 0 10h

onap dev-aai-cassandra-1 1/1 Running 0 10h

onap dev-aai-cassandra-2 1/1 Running 0 10h

onap dev-aai-cdc9cdb76-mmc4r 1/1 Running 0 10h

onap dev-aai-champ-845ff6b947-l8jqt 0/1 Terminating 0 10h

onap dev-aai-champ-845ff6b947-r69bj 0/1 Init:0/1 0 25s

onap dev-aai-data-router-8c77ff9dd-7dkmg 1/1 Running 3 10h

onap dev-aai-elasticsearch-548b68c46f-djmtd 1/1 Running 0 10h

onap dev-aai-gizmo-657cb8556c-z7c2q 2/2 Running 0 10h

onap dev-aai-hbase-868f949597-xp2b9 1/1 Running 0 10h

onap dev-aai-modelloader-6687fcc84-2pz8n 2/2 Running 0 10h

onap dev-aai-resources-67c58fbdc-g22t6 2/2 Running 0 10h

onap dev-aai-search-data-8686bbd58c-ft7h2 2/2 Running 0 10h

onap dev-aai-sparky-be-54889bbbd6-rgrr5 2/2 Running 1 10h

onap dev-aai-traversal-7bb98d854d-2fhjc 2/2 Running 0 10h

onap dev-log-elasticsearch-5656984bc4-n2n46 1/1 Running 0 10h

onap dev-log-kibana-567557fb9d-7ksdn 1/1 Running 50 10h

onap dev-log-logstash-fcc7d68bd-49rv8 1/1 Running 0 10h

onap dev-robot-6cc48c696b-875p5 1/1 Running 0 10h

ubuntu@obrien-cluster:~$ kubectl describe pod dev-aai-champ-845ff6b947-l8jqt -n onap

Name: dev-aai-champ-845ff6b947-l8jqt

Namespace: onap

Node: obrien-cluster/10.69.25.12

Start Time: Thu, 10 May 2018 03:32:21 +0000

Labels: app=aai-champ

pod-template-hash=4019926503

release=dev

Annotations: kubernetes.io/created-by={"kind":"SerializedReference","apiVersion":"v1","reference":{"kind":"ReplicaSet","namespace":"onap","name":"dev-aai-champ-845ff6b947","uid":"bf48c0cd-5402-11e8-91b1-020cc142d4...

Status: Pending

IP: 10.42.23.228

Created By: ReplicaSet/dev-aai-champ-845ff6b947

Controlled By: ReplicaSet/dev-aai-champ-845ff6b947

Init Containers:

aai-champ-readiness:

Container ID: docker://46197a2e7383437ed7d8319dec052367fd78f8feb826d66c42312b035921eb7a

Image: oomk8s/readiness-check:2.0.0

Image ID: docker-pullable://oomk8s/readiness-check@sha256:7daa08b81954360a1111d03364febcb3dcfeb723bcc12ce3eb3ed3e53f2323ed

Port: <none>

Command:

/root/ready.py

Args:

--container-name

aai-resources

--container-name

message-router-kafka

State: Running

Started: Thu, 10 May 2018 03:46:14 +0000

Last State: Terminated

Reason: Error

Exit Code: 1

Started: Thu, 10 May 2018 03:34:58 +0000

Finished: Thu, 10 May 2018 03:45:04 +0000

Ready: False

Restart Count: 1

Environment:

NAMESPACE: onap (v1:metadata.namespace)

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-2jccm (ro)

Containers:

aai-champ:

Container ID:

Image: nexus3.onap.org:10001/onap/champ:1.2-STAGING-latest

Image ID:

Port: 9522/TCP

State: Waiting

Reason: PodInitializing

Ready: False

Restart Count: 0

Readiness: tcp-socket :9522 delay=10s timeout=1s period=10s #success=1 #failure=3

Environment:

CONFIG_HOME: /opt/app/champ-service/appconfig

GRAPHIMPL: janus-deps

KEY_STORE_PASSWORD: <set to the key 'KEY_STORE_PASSWORD' in secret 'dev-aai-champ-pass'> Optional: false

KEY_MANAGER_PASSWORD: <set to the key 'KEY_MANAGER_PASSWORD' in secret 'dev-aai-champ-pass'> Optional: false

SERVICE_BEANS: /opt/app/champ-service/dynamic/conf

Mounts:

/etc/localtime from localtime (ro)

/logs from dev-aai-champ-logs (rw)

/opt/app/champ-service/appconfig/auth from dev-aai-champ-secrets (rw)

/opt/app/champ-service/appconfig/champ-api.properties from dev-aai-champ-config (rw)

/opt/app/champ-service/dynamic/conf/champ-beans.xml from dev-aai-champ-dynamic-config (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-2jccm (ro)

Conditions:

Type Status

Initialized False

Ready False

PodScheduled True

Volumes:

localtime:

Type: HostPath (bare host directory volume)

Path: /etc/localtime

dev-aai-champ-config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: dev-aai-champ

Optional: false

dev-aai-champ-secrets:

Type: Secret (a volume populated by a Secret)

SecretName: dev-aai-champ-champ

Optional: false

dev-aai-champ-dynamic-config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: dev-aai-champ-dynamic

Optional: false

dev-aai-champ-logs:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

default-token-2jccm:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-2jccm

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.alpha.kubernetes.io/notReady:NoExecute for 300s

node.alpha.kubernetes.io/unreachable:NoExecute for 300s

Events: <none>

ubuntu@obrien-cluster:~$ kubectl delete pod dev-aai-champ-845ff6b947-l8jqt -n onap

pod "dev-aai-champ-845ff6b947-l8jqt" deleted |
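
In a long pod listing like the one above, the pods that need attention are easy to miss. The following is a minimal sketch of a filter, assuming the default `kubectl get pods --all-namespaces` column order (NAMESPACE NAME READY STATUS ...). The function name `not_ready_pods` is hypothetical. Note that it also reports pods in a Completed state, which may be expected for jobs.

```shell
# Print namespace/name for every pod whose READY count is incomplete or whose STATUS is not Running.
# Input: `kubectl get pods --all-namespaces` output on stdin.
# Lines whose third field is not of the form x/y (header, watch banner, blank lines) are ignored.
not_ready_pods() {
  awk '{
    n = split($3, ready, "/")
    if (n == 2 && (ready[1] != ready[2] || $4 != "Running")) print $1 "/" $2
  }'
}
```

A possible use is `kubectl get pods --all-namespaces | not_ready_pods`. On the listing above it would report only the two dev-aai-champ pods.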

Developer Use of the Logging Library

Logging With Spring AOP

See the Developer Guide section of the ONAP Application Logging Specification v1.2 (Casablanca).

Logging Without Spring AOP

| Code Block |

|---|

| language | java |

|---|

| theme | Midnight |

|---|

|

// pending annotation level weaving of the library

import javax.servlet.http.HttpServletRequest;

import org.onap.logging.ref.slf4j.ONAPLogAdapter;

import org.slf4j.LoggerFactory;

import org.springframework.stereotype.Service;

@Service("daoFacade")

public class ApplicationService implements ApplicationServiceLocal {

    @Override

    public Boolean health(HttpServletRequest servletRequest) {

        Boolean health = true;

        // TODO: check database

        final ONAPLogAdapter adapter = new ONAPLogAdapter(LoggerFactory.getLogger(this.getClass()));

        try {

            // sets the entering MDCs and logs the ENTRY marker

            adapter.entering(new ONAPLogAdapter.HttpServletRequestAdapter(servletRequest));

        } finally {

            // logs the EXIT marker

            adapter.exiting();

        }

        return health;

    }

}

// MDCs are set, for example:

this LogbackMDCAdapter (id=282)

copyOnInheritThreadLocal InheritableThreadLocal<T> (id=284)

lastOperation ThreadLocal<T> (id=287)

key "ServerFQDN" (id=273)

val "localhost" (id=272)

{InstanceUUID=aa2d5b18-e3c2-44d3-b3ae-8565113a81b9, RequestID=788cf6a6-8008-4b95-af3f-61d92d9cbb4e, ServiceName=, InvocationID=dade7e58-fa24-4b2d-84e8-d3e89af9e6e1, InvokeTimestamp=2018-07-05T14:25:05.739Z, PartnerName=, ClientIPAddress=0:0:0:0:0:0:0:1, ServerFQDN=localhost}

in

LogbackMDCAdapter.put(String, String) line: 98

MDC.put(String, String) line: 147

ONAPLogAdapter.setEnteringMDCs(RequestAdapter<?>) line: 327

ONAPLogAdapter.entering(ONAPLogAdapter$RequestAdapter) line: 156

ApplicationService.health(HttpServletRequest) line: 38

RestHealthServiceImpl.getHealth() line: 47

# fix

get() returned "" (id=201)

key "ServiceName" (id=340)

Daemon Thread [http-nio-8080-exec-12] (Suspended)

owns: NioEndpoint$NioSocketWrapper (id=113)

MDC.get(String) line: 203

ONAPLogAdapter.setEnteringMDCs(RequestAdapter<?>) line: 336

ONAPLogAdapter.entering(ONAPLogAdapter$RequestAdapter) line: 156

ApplicationService.health(HttpServletRequest) line: 38

RestHealthServiceImpl.getHealth() line: 47

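// before: ServiceName is only defaulted when the MDC is null

if (MDC.get(ONAPLogConstants.MDCs.SERVICE_NAME) == null) {

    MDC.put(ONAPLogConstants.MDCs.SERVICE_NAME, request.getRequestURI());

// after: also default it when the MDC matches EMPTY_MESSAGE (the debugger above shows get() returning "")

if (MDC.get(ONAPLogConstants.MDCs.SERVICE_NAME) == null ||

    MDC.get(ONAPLogConstants.MDCs.SERVICE_NAME).equalsIgnoreCase(EMPTY_MESSAGE)) {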

|

In progress

Jira (ONAP JIRA): LOG-552

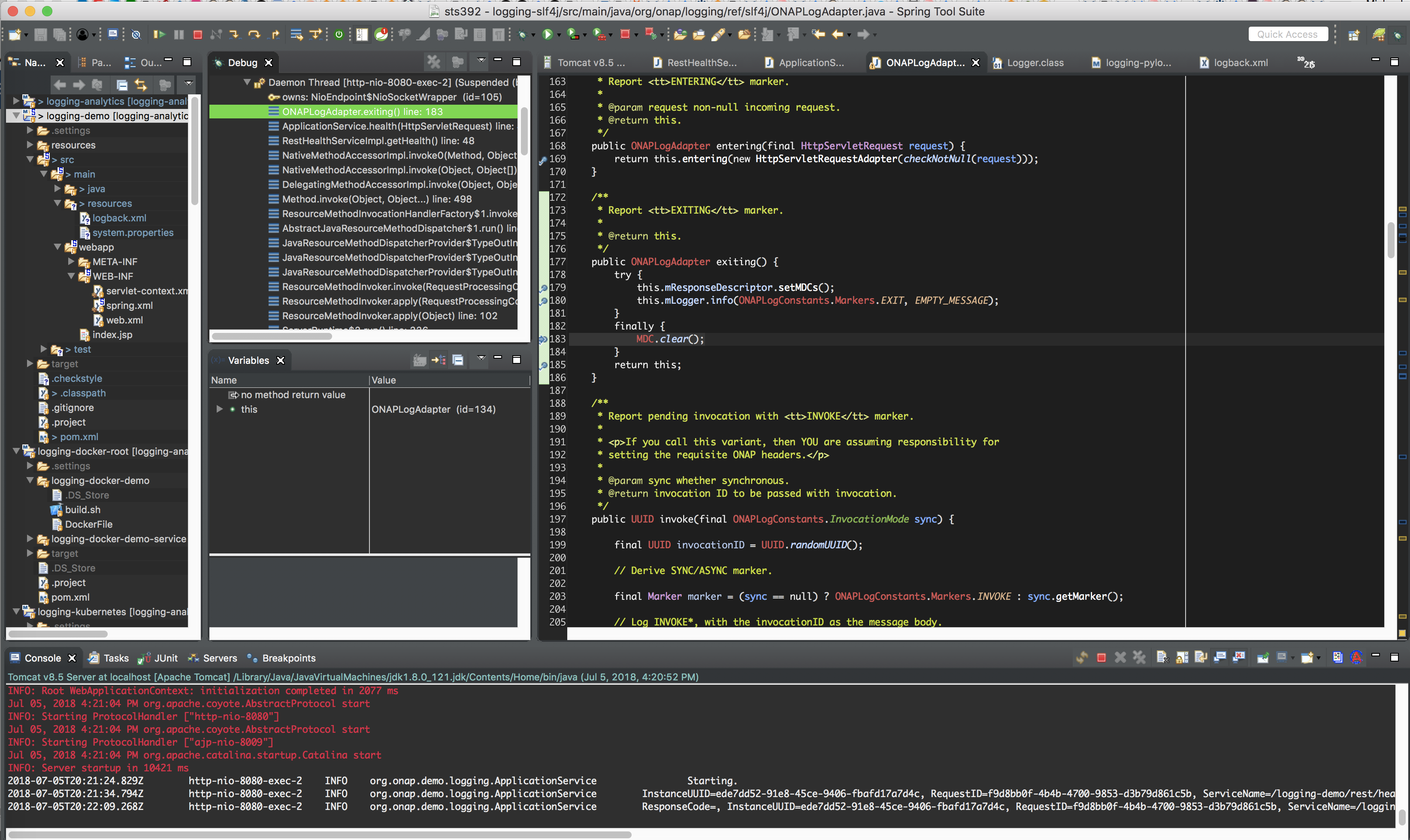

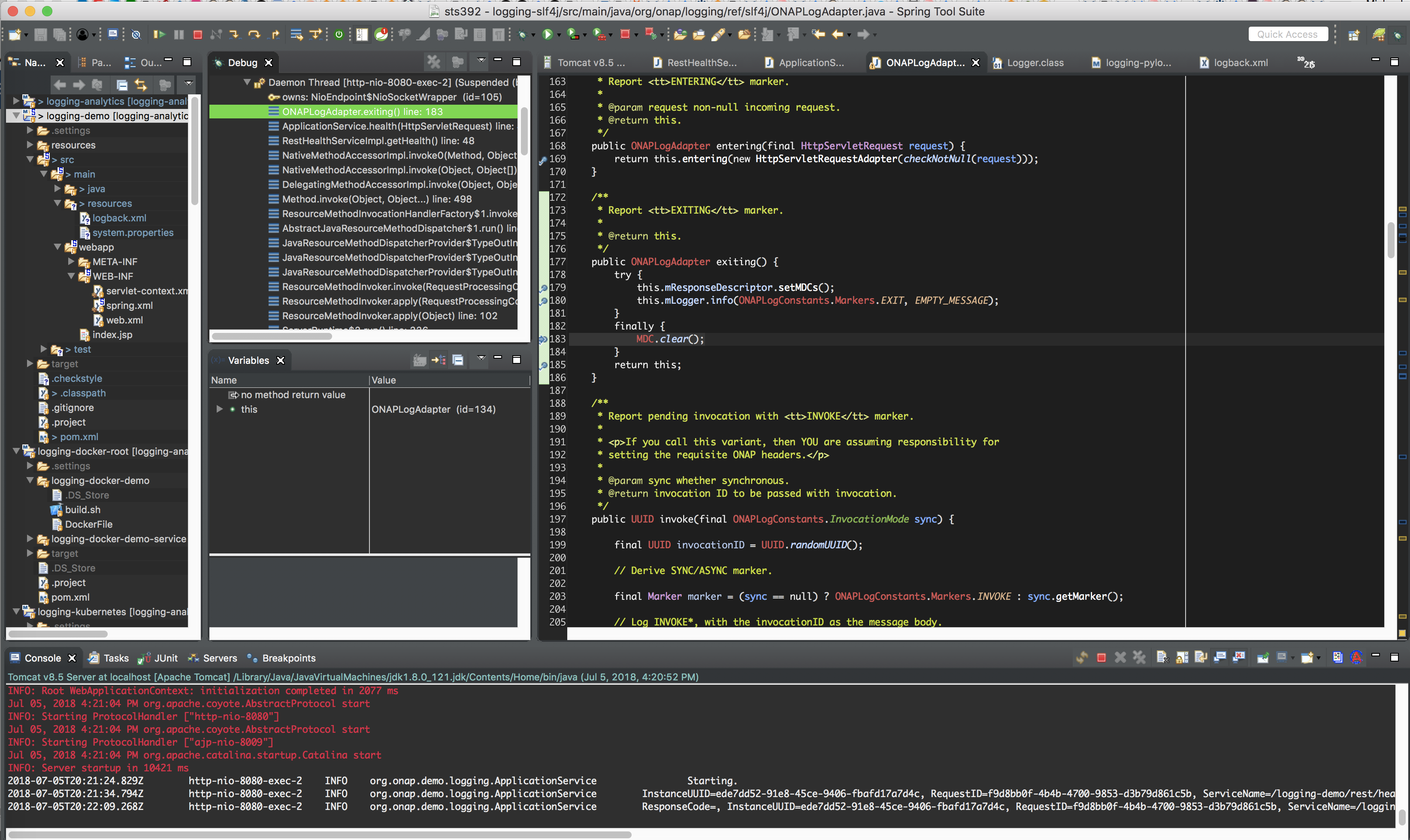

Developer Debugging

Local Tomcat via Eclipse/IntelliJ

Run as "debug" or deploy to Tomcat via Eclipse - https://git.onap.org/logging-analytics/tree/reference/logging-demo

Exercise the health endpoint, which invokes Luke Parker's logging library:

http://localhost:8080/logging-demo/rest/health/health

Hit the preset breakpoints, for example:

| Code Block |

|---|

| language | java |

|---|

| theme | Midnight |

|---|

|

this ONAPLogAdapter (id=130)

mLogger Logger (id=132)

mResponseDescriptor ONAPLogAdapter$ResponseDescriptor (id=138)

mServiceDescriptor ONAPLogAdapter$ServiceDescriptor (id=139)

request ONAPLogAdapter$HttpServletRequestAdapter (id=131)

requestID "8367757d-59c2-4e3e-80cd-b2fdc7a114ea" (id=142)

invocationID "967e4fe8-84ea-40b0-b4b9-d5988348baec" (id=170)

partnerName "" (id=171)

Tomcat v8.5 Server at localhost [Apache Tomcat]

org.apache.catalina.startup.Bootstrap at localhost:50485

Daemon Thread [http-nio-8080-exec-3] (Suspended)

owns: NioEndpoint$NioSocketWrapper (id=104)

ONAPLogAdapter.setEnteringMDCs(RequestAdapter<?>) line: 312

ONAPLogAdapter.entering(ONAPLogAdapter$RequestAdapter) line: 156

ApplicationService.health(HttpServletRequest) line: 37

RestHealthServiceImpl.getHealth() line: 47

NativeMethodAccessorImpl.invoke0(Method, Object, Object[]) line: not available [native method]

...

JavaResourceMethodDispatcherProvider$TypeOutInvoker(AbstractJavaResourceMethodDispatcher).invoke(ContainerRequest, Object, Object...) line: 161

...

ServletContainer.service(URI, URI, HttpServletRequest, HttpServletResponse) line: 388

...

CoyoteAdapter.service(Request, Response) line: 342

...

Daemon Thread [http-nio-8080-exec-5] (Running)

/Library/Java/JavaVirtualMachines/jdk1.8.0_121.jdk/Contents/Home/bin/java (Jul 4, 2018, 12:12:04 PM)

output - note there are 3 tabs (see p_mak in logback.xml) delimiting the MARKERS (ENTRY and EXIT) at the end of each line

2018-07-05T20:21:34.794Z http-nio-8080-exec-2 INFO org.onap.demo.logging.ApplicationService InstanceUUID=ede7dd52-91e8-45ce-9406-fbafd17a7d4c, RequestID=f9d8bb0f-4b4b-4700-9853-d3b79d861c5b, ServiceName=/logging-demo/rest/health/health, InvocationID=8f4c1f1d-5b32-4981-b658-e5992f28e6c8, InvokeTimestamp=2018-07-05T20:21:26.617Z, PartnerName=, ClientIPAddress=0:0:0:0:0:0:0:1, ServerFQDN=localhost ENTRY

2018-07-05T20:22:09.268Z http-nio-8080-exec-2 INFO org.onap.demo.logging.ApplicationService ResponseCode=, InstanceUUID=ede7dd52-91e8-45ce-9406-fbafd17a7d4c, RequestID=f9d8bb0f-4b4b-4700-9853-d3b79d861c5b, ServiceName=/logging-demo/rest/health/health, ResponseDescription=, InvocationID=8f4c1f1d-5b32-4981-b658-e5992f28e6c8, Severity=, InvokeTimestamp=2018-07-05T20:21:26.617Z, PartnerName=, ClientIPAddress=0:0:0:0:0:0:0:1, ServerFQDN=localhost, StatusCode= EXIT

|
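
Because the markers sit at the end of each line behind tab delimiters, a quick way to check that every ENTRY has a matching EXIT is to count the last tab-delimited field. The following is a minimal sketch, assuming the log file contains literal tab characters and that the marker is the last tab-delimited field of a line. The function name `marker_counts` is hypothetical.

```shell
# Count ENTRY and EXIT markers in log lines read on stdin.
# Assumption: fields are separated by literal tabs and the marker is the last field on the line.
marker_counts() {
  awk -F '\t' '{ count[$NF]++ } END { printf "ENTRY=%d EXIT=%d\n", count["ENTRY"], count["EXIT"] }'
}
```

A possible use is `marker_counts < application.log`, where the file name is a placeholder for whichever log file the logback.xml in use writes to.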

Remote Docker container in Kubernetes deployment

Developer Commits

Developer Reviews

PTL Activities

Releasing Images

- merge the change that updates the pom version

- magic word "please release"

- send mail to help@onap.org, and to Jessica of the LF, to release the image - include the build job and the gerrit review

- branch the repo if required, as late in the release as possible, using the gerrit UI

- after the release, prepare another review to bump the version and add -SNAPSHOT - usually to master

- examples around 20181112 in the logging-analytics repo - Jira (ONAP JIRA): LOG-838
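
The post-release version bump in the list above can be computed rather than typed by hand. The following is a minimal sketch, assuming three-part major.minor.patch versions with a plain decimal patch number and that the patch number is the part being bumped. The text above does not say which part is incremented, and the function name `next_snapshot` is hypothetical.

```shell
# Print the next development version for a released (or snapshot) version string.
# Assumption: the version looks like major.minor.patch and the patch number is incremented.
next_snapshot() {
  released=${1%-SNAPSHOT}      # drop an existing -SNAPSHOT suffix, if any
  prefix=${released%.*}        # everything before the last dot
  patch=${released##*.}        # the number after the last dot
  echo "${prefix}.$((patch + 1))-SNAPSHOT"
}
```

For example, `next_snapshot 1.2.2` prints `1.2.3-SNAPSHOT`.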

FAQ

License Files

Do we need to put license files everywhere - at the root of java, pom.xml, properties, yamls?

In practice, put them only in active code files that are interpreted or compiled, such as java, javascript, sh/bat, python and go. Leave out pom.xml and yamls.

Some file types have compile checks (properties but not sh).

From Jennie Jia:

| Code Block |

|---|

| language | xml |

|---|

| theme | FadeToGrey |

|---|

|

<checkstyle.skip>true</checkstyle.skip> |