...

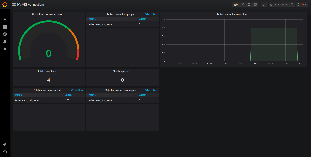

Client tests are conducted using the following architecture:

- HV-VES Client - produces a high volume of events for processing.

- Processing Consumer - consumes events from Kafka topics and creates performance metrics.

- Offset Consumer - reads Kafka offsets.

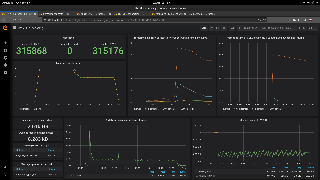

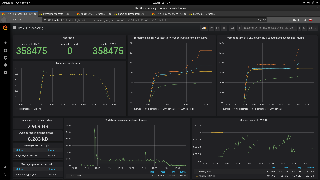

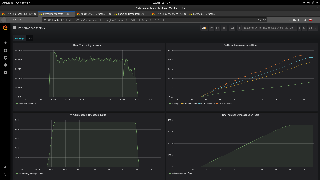

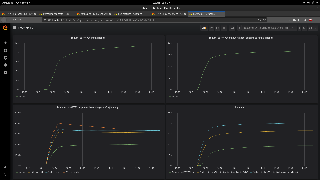

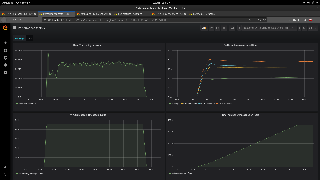

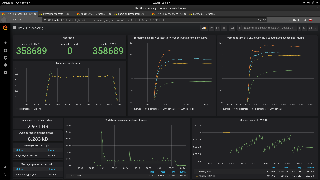

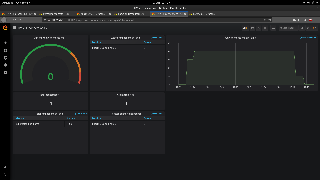

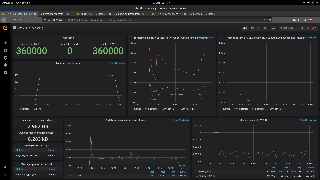

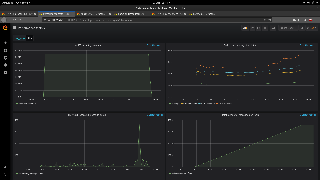

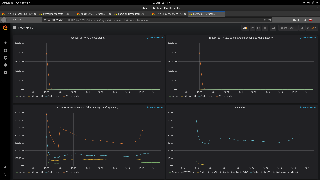

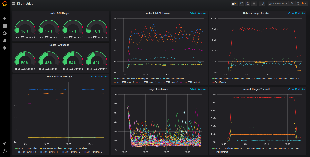

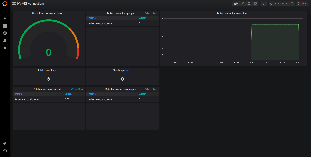

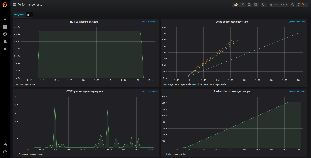

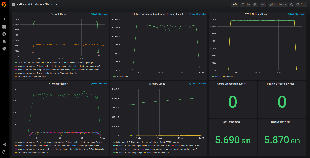

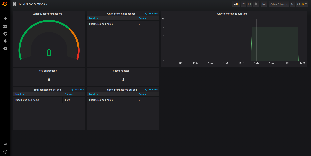

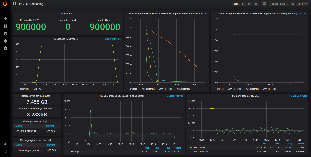

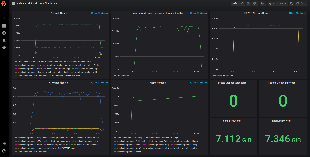

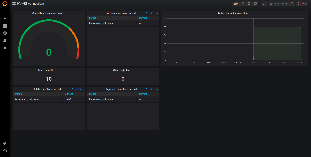

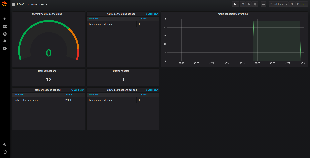

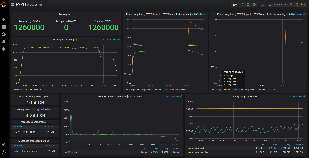

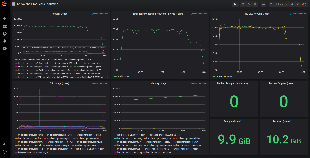

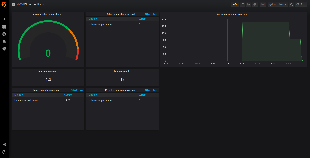

- Prometheus - requests performance metrics from HV-VES, Processing Consumer and Offset Consumer, and provides the data to Grafana.

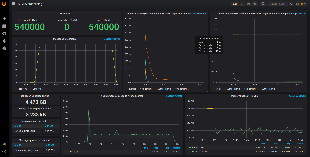

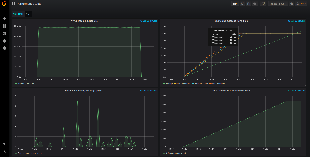

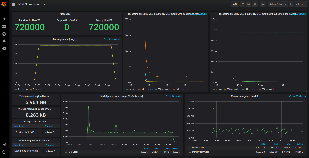

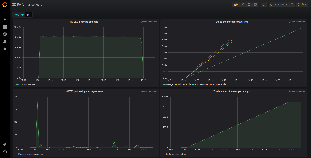

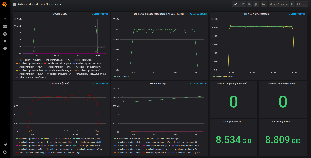

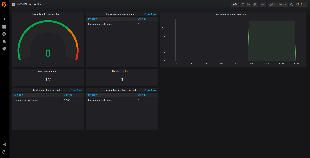

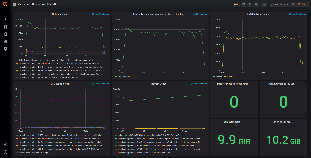

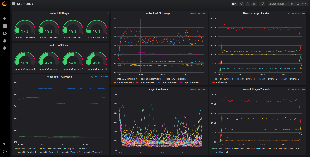

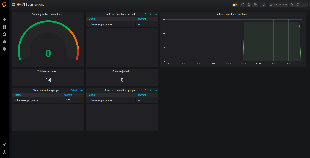

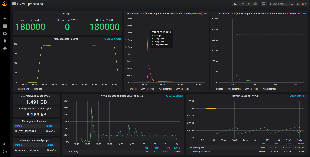

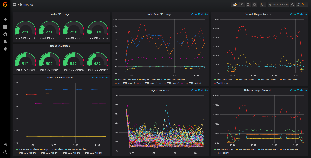

- Grafana - provides analytics and visualization of the collected metrics.

The link between HV-VES Client and HV-VES is secured with TLS (the provided scripts generate the certificates and place them in the appropriate containers).

Note: In the tests without DMaaP Kafka, the DMaaP/Kafka service was substituted with wurstmeister Kafka.

Test Setup

To execute the performance tests, run the functions of the shell script cloud-based-performance-test.sh located in the HV-VES project directory ~/tools/performance/cloud/.

First, generate certificates in the ~/tools/ssl folder using gen_certs. This step only needs to be performed during the first test setup (or if the generated files have been deleted).

```shell
./cloud-based-performance-test.sh gen_certs
```

Then call setup to send the certificates to HV-VES, deploy the Consumers, Prometheus and Grafana, and create their ConfigMaps.

```shell
./cloud-based-performance-test.sh setup
```

After that, change the HV-VES configuration in the Consul KEY/VALUE tab to the following (Consul is typically accessible on port 30270 of any Controller node, e.g. http://slave1:30270/ui/#/dc1/kv/dcae-hv-ves-collector/edit):

```json
{
  "security.sslDisable": false,
  "logLevel": "INFO",
  "server.listenPort": 6061,
  "server.idleTimeoutSec": 300,
  "cbs.requestIntervalSec": 5,
  "streams_publishes": {
    "perf3gpp": {
      "type": "kafka",
      "aaf_credentials": {
        "username": "admin",
        "password": "admin_secret"
      },
      "kafka_info": {
        "bootstrap_servers": "message-router-kafka:9092",
        "topic_name": "HV_VES_PERF3GPP"
      }
    }
  },
  "security.keys.trustStoreFile": "/etc/ves-hv/ssl/custom/trust.p12",
  "security.keys.keyStoreFile": "/etc/ves-hv/ssl/custom/server.p12",
  "security.keys.trustStorePasswordFile": "/etc/ves-hv/ssl/custom/trust.pass",
  "security.keys.keyStorePasswordFile": "/etc/ves-hv/ssl/custom/server.pass"
}
```
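If you prefer to script this step instead of editing the value in the Consul UI, Consul's KV HTTP API accepts a PUT to `/v1/kv/<key>` with the new value as the request body. The minimal sketch below only builds such a request. It assumes that the HTTP API is reachable on the same NodePort as the UI (30270) and that the key is `dcae-hv-ves-collector`, as in the UI URL above; the helper name `build_consul_put` is hypothetical.

```python
import json
import urllib.request

# Configuration from the Consul KEY/VALUE entry above.
HV_VES_CONFIG = {
    "security.sslDisable": False,
    "logLevel": "INFO",
    "server.listenPort": 6061,
    "server.idleTimeoutSec": 300,
    "cbs.requestIntervalSec": 5,
    "streams_publishes": {
        "perf3gpp": {
            "type": "kafka",
            "aaf_credentials": {"username": "admin", "password": "admin_secret"},
            "kafka_info": {
                "bootstrap_servers": "message-router-kafka:9092",
                "topic_name": "HV_VES_PERF3GPP",
            },
        }
    },
    "security.keys.trustStoreFile": "/etc/ves-hv/ssl/custom/trust.p12",
    "security.keys.keyStoreFile": "/etc/ves-hv/ssl/custom/server.p12",
    "security.keys.trustStorePasswordFile": "/etc/ves-hv/ssl/custom/trust.pass",
    "security.keys.keyStorePasswordFile": "/etc/ves-hv/ssl/custom/server.pass",
}


def build_consul_put(consul_url: str, key: str, config: dict) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a PUT request for Consul's KV API."""
    body = json.dumps(config, indent=2).encode("utf-8")
    return urllib.request.Request(
        url=f"{consul_url.rstrip('/')}/v1/kv/{key}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # Assumption: the Consul HTTP API is exposed on the same NodePort as the UI.
    req = build_consul_put("http://slave1:30270", "dcae-hv-ves-collector", HV_VES_CONFIG)
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would actually send the request.
```

Nothing is sent until `urlopen` is called, so the request can be inspected first.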

Environment and Resources

A Kubernetes cluster with 4 worker nodes, all sharing the hardware configuration shown in the table below, is deployed on the OpenStack cloud operating system. The test components, packaged as Docker containers, are deployed on this Kubernetes cluster.

| Component | Parameter | Value |
|---|---|---|
| CPU | Model | Intel(R) Xeon(R) CPU E5-2680 v4 |
| CPU | No. of cores | 24 |
| CPU | Clock speed [GHz] | 2.40 |
| RAM | Total RAM [GB] | 62.9 |

Network Performance

Pod measurement method

To check the cluster network performance, tests using iperf3 were performed. iperf3 is a tool for measuring the maximum achievable bandwidth on IP networks; it runs in two modes: server and client. The Docker image networkstatic/iperf3 was used.

The following deployment creates one pod running iperf3 in server mode on one worker, and one iperf3 client pod on each worker.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: iperf3-server
  namespace: onap
  labels:
    app: iperf3-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: iperf3-server
  template:
    metadata:
      labels:
        app: iperf3-server
    spec:
      containers:
        - name: iperf3-server
          image: networkstatic/iperf3
          args: ['-s']
          ports:
            - containerPort: 5201
              name: server
---
apiVersion: v1
kind: Service
metadata:
  name: iperf3-server
  namespace: onap
spec:
  selector:
    app: iperf3-server
  ports:
    - protocol: TCP
      port: 5201
      targetPort: server
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: iperf3-clients
  namespace: onap
  labels:
    app: iperf3-client
spec:
  selector:
    matchLabels:
      app: iperf3-client
  template:
    metadata:
      labels:
        app: iperf3-client
    spec:
      containers:
        - name: iperf3-client
          image: networkstatic/iperf3
          # Keep the client container alive so iperf3 can be run in it with kubectl exec.
          command: ['/bin/sh', '-c', 'sleep infinity']
```

After completing the previous steps, call the start function, which creates the Producers and starts the test.

```shell
./cloud-based-performance-test.sh start
```

The start function accepts the following optional arguments:

...

Example invocations of the test start:

```shell
./cloud-based-performance-test.sh start --containers 10
```

The command above starts a test that creates 10 producers, each of which sends the number of messages defined in test.properties once.

```shell
./cloud-based-performance-test.sh start --load true --containers 10 --retention-time-minutes 30
```

This invocation starts a load test: the script tries to keep the number of running producer containers at 10, with a Kafka message retention time of 30 minutes.

The test.properties file contains the Producer and Consumer configuration and allows setting the following properties:
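Kafka configures topic retention in milliseconds (the `retention.ms` topic setting), so `--retention-time-minutes 30` corresponds to 30 × 60 × 1000 = 1,800,000 ms. How the script applies the value internally is not shown on this page; the sketch below only illustrates the conversion, and the function name `retention_minutes_to_ms` is hypothetical.

```python
def retention_minutes_to_ms(minutes: int) -> int:
    """Convert a retention time in minutes to a Kafka retention.ms value."""
    if minutes <= 0:
        raise ValueError("retention time must be positive")
    return minutes * 60 * 1000


# The load-test example above, and the script's default of 60 minutes.
print(retention_minutes_to_ms(30))  # 1800000
print(retention_minutes_to_ms(60))  # 3600000
```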

...

To remove the created ConfigMaps, Consumers, Producers, Grafana and Prometheus from the Kubernetes cluster, call the clean function. Note: clean does not remove the certificates from HV-VES.

```shell
./cloud-based-performance-test.sh clean
```

To restart the test environment, which means redeploying the hv-ves pod, resetting the Kafka topic and performing setup, use reboot-test-environment.sh.

```shell
./reboot-test-environment.sh
```

Results can be accessed at the following links:

- Prometheus: http://slave1:30000/graph?g0.range_input=1h&g0.expr=hv_kafka_consumer_travel_time_seconds_count&g0.tab=1

- Grafana: http://slave1:30001/d/V94Kjlwmz/hv-ves-processing?orgId=1&refresh=5s
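The Prometheus link above opens the graph UI for `hv_kafka_consumer_travel_time_seconds_count`. The same data can also be fetched from Prometheus's HTTP API (`/api/v1/query`). The sketch below builds such a URL; it assumes the API is served on the same NodePort (30000) as the UI, and wrapping the metric in `rate(...[1m])` to turn the `_count` counter into a per-second rate is our own illustrative choice, not something the test scripts do. The helper names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlencode

PROMETHEUS_URL = "http://slave1:30000"  # same host and NodePort as the Prometheus link above


def instant_query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus HTTP API instant-query URL for a PromQL expression."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def run_query(url: str) -> list:
    """Fetch the URL and return the data.result list of the response (needs network access)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)["data"]["result"]


# Illustrative query: per-second rate of the _count counter over the last minute.
url = instant_query_url(PROMETHEUS_URL, "rate(hv_kafka_consumer_travel_time_seconds_count[1m])")
print(url)
```

`run_query(url)` returns one entry per time series, each with `metric` and `value` fields.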


To create the deployment, execute the following command:

```shell
kubectl create -f deployment.yaml
```

To find all iperf pods, execute:

```shell
kubectl -n onap get pods -o wide | grep iperf
```
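To pick one client pod per worker for the measurements below, it can help to map each iperf3-client pod to the node it runs on. The sketch below parses the JSON output of `kubectl -n onap get pods -l app=iperf3-client -o json`; the label comes from the DaemonSet above, and `metadata.name` and `spec.nodeName` are standard Pod fields. The function names are hypothetical, and `fetch_client_pods` assumes kubectl is on the PATH and configured for the cluster.

```python
import json
import subprocess


def client_pods_by_node(pod_list: dict) -> dict:
    """Map node name -> iperf3-client pod name from a `kubectl get pods -o json` document."""
    mapping = {}
    for pod in pod_list.get("items", []):
        node = pod.get("spec", {}).get("nodeName")
        if node:  # pods that are not scheduled yet have no nodeName
            mapping[node] = pod["metadata"]["name"]
    return mapping


def fetch_client_pods(namespace: str = "onap") -> dict:
    """Run kubectl and return the node -> pod mapping for the iperf3 clients."""
    out = subprocess.run(
        ["kubectl", "-n", namespace, "get", "pods", "-l", "app=iperf3-client", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return client_pods_by_node(json.loads(out))


# Example with a trimmed-down document (pod and node names are made up):
sample = {"items": [{"metadata": {"name": "iperf3-clients-abcde"}, "spec": {"nodeName": "worker1"}}]}
print(client_pods_by_node(sample))  # {'worker1': 'iperf3-clients-abcde'}
```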

To measure the connection between pods, run iperf3 on an iperf3-client pod using the following command:

```shell
kubectl -n onap exec -it <iperf-client-pod> -- iperf3 -c iperf3-server
```

To change the output format from Mbits/sec to MBytes/sec:

```shell
kubectl -n onap exec -it <iperf-client-pod> -- iperf3 -c iperf3-server -f MBytes
```

To change the measurement duration (in seconds):

```shell
kubectl -n onap exec -it <iperf-client-pod> -- iperf3 -c iperf3-server -t <time-in-second>
```

The results below were gathered with the following command:

```shell
kubectl -n onap exec -it <iperf-client-pod> -- iperf3 -c iperf3-server -f MBytes
```
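As an alternative to reading the text output, iperf3 can print a JSON report with `-J`. Assuming the usual layout of a TCP test report (`end.sum_sent` and `end.sum_received`, each with a `bits_per_second` field), the sketch below converts the sender and receiver rates to MBytes/sec. We assume 1 MByte = 2^20 bytes, so the values may round slightly differently from iperf3's own output; the function name is hypothetical.

```python
import json

MBYTE = 1024 * 1024  # assumption: iperf3's "MBytes" are binary megabytes (2**20 bytes)


def summary_mbytes_per_sec(report: dict) -> tuple:
    """Return (sender, receiver) throughput in MBytes/sec from an `iperf3 -J` TCP report."""
    end = report["end"]
    sent = end["sum_sent"]["bits_per_second"] / 8 / MBYTE
    received = end["sum_received"]["bits_per_second"] / 8 / MBYTE
    return round(sent, 1), round(received, 1)


# Synthetic report containing only the fields used above (values are not measurements).
sample = json.loads(
    '{"end": {"sum_sent": {"bits_per_second": 838860800},'
    ' "sum_received": {"bits_per_second": 830472192}}}'
)
print(summary_mbytes_per_sec(sample))  # (100.0, 99.0)
```

For a real run, save the report first, e.g. `kubectl -n onap exec <iperf-client-pod> -- iperf3 -c iperf3-server -J > worker1.json`, then pass `json.load(open("worker1.json"))` to the function.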


Results of performed tests

worker1 (136 MBytes/sec)

```
Connecting to host iperf3-server, port 5201
[  4] local 10.42.5.127 port 39752 connected to 10.43.25.161 port 5201
[ ID] Interval           Transfer     Bandwidth        Retr  Cwnd
[  4]   0.00-1.00   sec   141 MBytes   141 MBytes/sec   32    673 KBytes
[  4]   1.00-2.00   sec   139 MBytes   139 MBytes/sec    0    817 KBytes
[  4]   2.00-3.00   sec   139 MBytes   139 MBytes/sec    0    936 KBytes
[  4]   3.00-4.00   sec   138 MBytes   137 MBytes/sec    0   1.02 MBytes
[  4]   4.00-5.00   sec   138 MBytes   137 MBytes/sec    0   1.12 MBytes
[  4]   5.00-6.00   sec   129 MBytes   129 MBytes/sec    0   1.20 MBytes
[  4]   6.00-7.00   sec   129 MBytes   129 MBytes/sec    0   1.27 MBytes
[  4]   7.00-8.00   sec   134 MBytes   134 MBytes/sec    0   1.35 MBytes
[  4]   8.00-9.00   sec   135 MBytes   135 MBytes/sec    0   1.42 MBytes
[  4]   9.00-10.00  sec   135 MBytes   135 MBytes/sec   45   1.06 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth        Retr
[  4]   0.00-10.00  sec  1.32 GBytes   136 MBytes/sec   77             sender
[  4]   0.00-10.00  sec  1.32 GBytes   135 MBytes/sec                  receiver
```

worker2 (87 MBytes/sec)

```
Connecting to host iperf3-server, port 5201
[  4] local 10.42.3.188 port 35472 connected to 10.43.25.161 port 5201
[ ID] Interval           Transfer     Bandwidth         Retr  Cwnd
[  4]   0.00-1.00   sec  88.3 MBytes  88.3 MBytes/sec  121    697 KBytes
[  4]   1.00-2.00   sec  96.2 MBytes  96.3 MBytes/sec    0    796 KBytes
[  4]   2.00-3.00   sec  92.5 MBytes  92.5 MBytes/sec    0    881 KBytes
[  4]   3.00-4.00   sec  90.0 MBytes  90.0 MBytes/sec    0    957 KBytes
[  4]   4.00-5.00   sec  87.5 MBytes  87.5 MBytes/sec    0   1.00 MBytes
[  4]   5.00-6.00   sec  88.8 MBytes  88.7 MBytes/sec    0   1.06 MBytes
[  4]   6.00-7.00   sec  80.0 MBytes  80.0 MBytes/sec    0   1.12 MBytes
[  4]   7.00-8.00   sec  81.2 MBytes  81.3 MBytes/sec   25    895 KBytes
[  4]   8.00-9.00   sec  85.0 MBytes  85.0 MBytes/sec    0    983 KBytes
[  4]   9.00-10.00  sec  83.8 MBytes  83.7 MBytes/sec    0   1.03 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth         Retr
[  4]   0.00-10.00  sec   873 MBytes  87.3 MBytes/sec  146             sender
[  4]   0.00-10.00  sec   870 MBytes  87.0 MBytes/sec                  receiver
```

worker3 (135 MBytes/sec)

```
Connecting to host iperf3-server, port 5201
[  4] local 10.42.4.182 port 35288 connected to 10.43.25.161 port 5201
[ ID] Interval           Transfer     Bandwidth        Retr  Cwnd
[  4]   0.00-1.00   sec   129 MBytes   129 MBytes/sec   45   1.17 MBytes
[  4]   1.00-2.00   sec   134 MBytes   134 MBytes/sec   32   1.25 MBytes
[  4]   2.00-3.00   sec   135 MBytes   135 MBytes/sec    0   1.32 MBytes
[  4]   3.00-4.00   sec   139 MBytes   139 MBytes/sec    0   1.40 MBytes
[  4]   4.00-5.00   sec   144 MBytes   144 MBytes/sec    0   1.47 MBytes
[  4]   5.00-6.00   sec   131 MBytes   131 MBytes/sec   45   1.14 MBytes
[  4]   6.00-7.00   sec   129 MBytes   129 MBytes/sec    0   1.25 MBytes
[  4]   7.00-8.00   sec   134 MBytes   134 MBytes/sec    0   1.33 MBytes
[  4]   8.00-9.00   sec   138 MBytes   138 MBytes/sec    0   1.39 MBytes
[  4]   9.00-10.00  sec   135 MBytes   135 MBytes/sec    0   1.44 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth        Retr
[  4]   0.00-10.00  sec  1.31 GBytes   135 MBytes/sec  122             sender
[  4]   0.00-10.00  sec  1.31 GBytes   134 MBytes/sec                  receiver
```

worker0 (2282 MBytes/sec; the iperf3 client and server run on the same worker)

```
Connecting to host iperf3-server, port 5201
[  4] local 10.42.6.132 port 51156 connected to 10.43.25.161 port 5201
[ ID] Interval           Transfer     Bandwidth         Retr  Cwnd
[  4]   0.00-1.00   sec  2.13 GBytes  2185 MBytes/sec    0    536 KBytes
[  4]   1.00-2.00   sec  1.66 GBytes  1702 MBytes/sec    0    621 KBytes
[  4]   2.00-3.00   sec  2.10 GBytes  2154 MBytes/sec    0    766 KBytes
[  4]   3.00-4.00   sec  1.89 GBytes  1937 MBytes/sec    0   1.01 MBytes
[  4]   4.00-5.00   sec  1.87 GBytes  1914 MBytes/sec    0   1.39 MBytes
[  4]   5.00-6.00   sec  2.76 GBytes  2826 MBytes/sec    0   1.39 MBytes
[  4]   6.00-7.00   sec  1.81 GBytes  1853 MBytes/sec  792   1.09 MBytes
[  4]   7.00-8.00   sec  2.54 GBytes  2600 MBytes/sec    0   1.21 MBytes
[  4]   8.00-9.00   sec  2.70 GBytes  2763 MBytes/sec    0   1.34 MBytes
[  4]   9.00-10.00  sec  2.82 GBytes  2889 MBytes/sec    0   1.34 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth         Retr
[  4]   0.00-10.00  sec  22.3 GBytes  2282 MBytes/sec  792             sender
[  4]   0.00-10.00  sec  22.3 GBytes  2282 MBytes/sec                  receiver
```

Average speed (excluding worker0, where the client and server share a node): 119 MBytes/sec
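The average above is the arithmetic mean of the three cross-node sender rates. worker0 is left out because its client and server share a node, so its traffic most likely stays on the node's local virtual network instead of crossing the physical link. A quick check:

```python
from statistics import mean

# Sender bandwidth per worker in MBytes/sec, taken from the results above.
sender_rates = {"worker0": 2282, "worker1": 136, "worker2": 87, "worker3": 135}

# worker0 hosts both the iperf3 client and server, so it is excluded.
cross_node = [rate for worker, rate in sender_rates.items() if worker != "worker0"]
average = mean(cross_node)
print(f"{average:.2f} MBytes/sec")  # 119.33 MBytes/sec, reported as 119
```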

HV-VES Performance


Test Setup

Preconditions

- Installed ONAP (Frankfurt)

- Plain TCP connection between HV-VES and clients (default configuration)

- Metric port exposed on HV-VES service

To reach the metrics endpoint in HV-VES, add the following lines to the ports section of the HV-VES service configuration file:

```yaml
- name: port-t-6060
  port: 6060
  protocol: TCP
  targetPort: 6060
```
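Instead of editing the service by hand, the same port entry can be appended with a JSON Patch (RFC 6902), where the path `/spec/ports/-` means "append to the end of the ports array". The sketch below only builds the patch and the corresponding `kubectl patch` command string. The service name `dcae-hv-ves-collector` is an assumption inferred from the default hvVesAddress (`dcae-hv-ves-collector.onap:6061`) and should be checked against your deployment; the helper names are hypothetical.

```python
import json
import shlex

# Port entry from the snippet above.
METRICS_PORT = {"name": "port-t-6060", "port": 6060, "protocol": "TCP", "targetPort": 6060}


def metrics_port_patch() -> list:
    """JSON Patch that appends the metrics port to the Service's spec.ports array."""
    return [{"op": "add", "path": "/spec/ports/-", "value": METRICS_PORT}]


def kubectl_patch_command(service: str = "dcae-hv-ves-collector", namespace: str = "onap") -> str:
    """Return a shell-quoted kubectl command that applies the patch (not executed here)."""
    args = ["kubectl", "-n", namespace, "patch", "svc", service,
            "--type=json", "-p", json.dumps(metrics_port_patch())]
    return shlex.join(args)


print(kubectl_patch_command())
```

Apply the command only once: a second run would try to append the same port again.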

Before starting the tests, download the producer Docker image, which is available here:


To load the image locally, use the following command:

```shell
docker load < hv-collector-go-client.tar.gz
```

Modify the tools/performance/cloud/producer-pod.yaml file to use the above image and set imagePullPolicy to IfNotPresent:

```yaml
...
spec:
  containers:
    - name: hv-collector-producer
      image: onap/org.onap.dcaegen2.collectors.hv-ves.hv-collector-go-client:latest
      imagePullPolicy: IfNotPresent
      volumeMounts:
      ...
```

To execute the performance tests, run the functions of the shell script cloud-based-performance-test.sh located in the HV-VES project directory ~/tools/performance/cloud/.

First, generate certificates in the ~/tools/ssl folder using gen_certs. This step only needs to be performed during the first test setup (or if the generated files have been deleted).

```shell
./cloud-based-performance-test.sh gen_certs
```

Then call setup to send the certificates to HV-VES, deploy the Consumers, Prometheus and Grafana, and create their ConfigMaps.

```shell
./cloud-based-performance-test.sh setup
```

After completing the previous steps, call the start function, which creates the Producers and starts the test.

```shell
./cloud-based-performance-test.sh start
```

The start function accepts the following optional arguments:

| Argument | Description | Default |
|---|---|---|
| --load | Whether the test should keep the defined number of producers running until the script is interrupted | false |
| --containers | Number of producer containers to create | 1 |
| --properties-file | Path to the file with benchmark properties | ./test.properties |
| --retention-time-minutes | Retention time of messages in Kafka, in minutes | 60 |

Example invocations of the test start:

```shell
./cloud-based-performance-test.sh start --containers 10
```

The command above starts a test that creates 10 producers, each of which sends the number of messages defined in test.properties once.

```shell
./cloud-based-performance-test.sh start --load true --containers 10 --retention-time-minutes 30
```

This invocation starts a load test: the script tries to keep the number of running producer containers at 10, with a Kafka message retention time of 30 minutes.

The test.properties file contains the Producer and Consumer configuration and allows setting the following properties:

| Section | Property | Description | Default |
|---|---|---|---|
| Producer | hvVesAddress | HV-VES address | dcae-hv-ves-collector.onap:6061 |
| Producer | client.count | Number of clients per pod | 1 |
| Producer | message.size | Size of a single message in bytes | 16384 |
| Producer | message.count | Number of messages to be sent by each client | 1000 |
| Producer | message.interval | Interval between messages in milliseconds | 1 |
| Certificates paths | client.cert.path | Path to the cert file | /ssl/client.p12 |
| Certificates paths | client.cert.pass.path | Path to the cert's pass file | /ssl/client.pass |
| Consumer | kafka.bootstrapServers | Address of the Kafka service to consume from | message-router-kafka:9092 |
| Consumer | kafka.topics | Kafka topics to subscribe to | HV_VES_PERF3GPP |
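For a single-shot run (without `--load`), the total volume sent follows from these properties: containers × client.count × message.count messages, each message.size bytes. The sketch below assumes test.properties uses plain `key=value` lines (with `#` comments), fills in the defaults listed above, and computes that total. The parser and function names are hypothetical, and the real script may read the file differently.

```python
DEFAULTS = {
    "hvVesAddress": "dcae-hv-ves-collector.onap:6061",
    "client.count": "1",
    "message.size": "16384",
    "message.count": "1000",
    "message.interval": "1",
    "client.cert.path": "/ssl/client.p12",
    "client.cert.pass.path": "/ssl/client.pass",
    "kafka.bootstrapServers": "message-router-kafka:9092",
    "kafka.topics": "HV_VES_PERF3GPP",
}


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines over the defaults, ignoring blanks and # comments."""
    props = dict(DEFAULTS)
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def single_shot_volume(props: dict, containers: int) -> tuple:
    """Return (total messages, total message bytes) sent by one non-load test run."""
    messages = containers * int(props["client.count"]) * int(props["message.count"])
    return messages, messages * int(props["message.size"])


# The `start --containers 10` example with default properties:
msgs, size = single_shot_volume(parse_properties(""), containers=10)
print(msgs, size)  # 10000 163840000
```

The byte count covers message payloads only; protocol and TLS overhead come on top.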

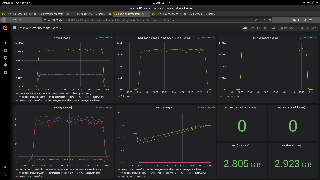

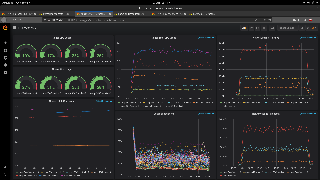

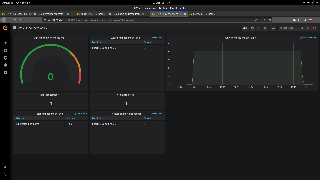

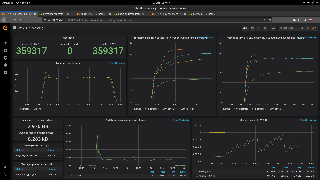

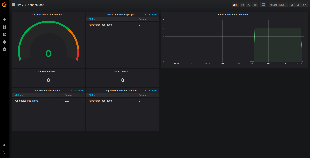

Results can be accessed at the following links:

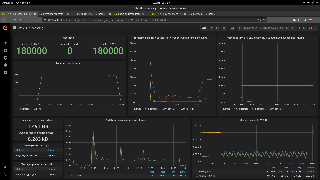

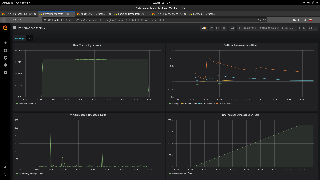

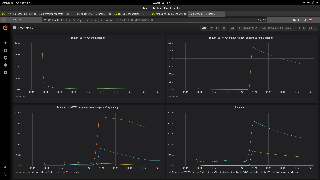

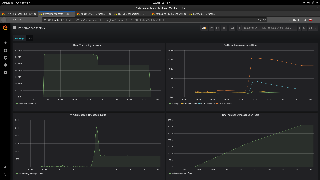

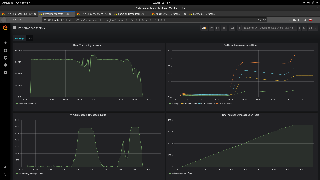

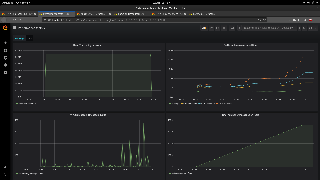

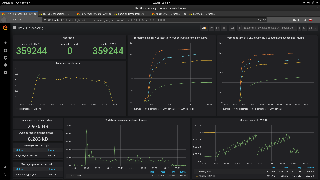

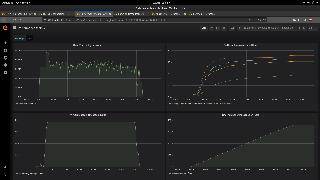

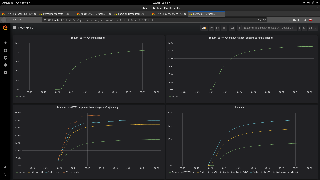

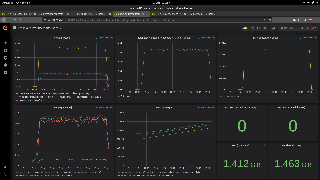

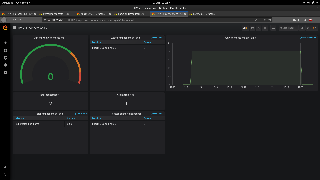

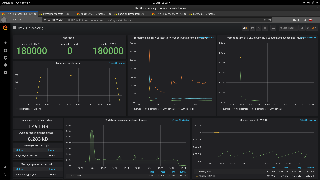

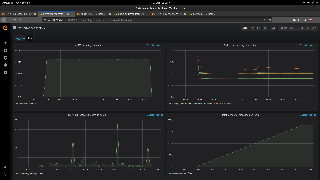

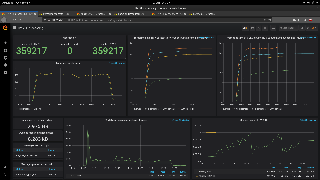

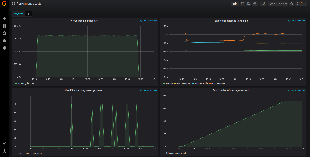

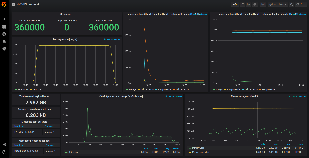

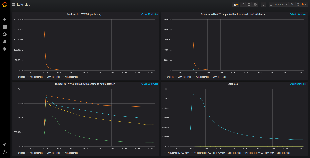

HV-VES Performance test results

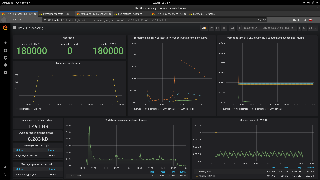

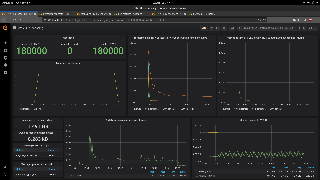

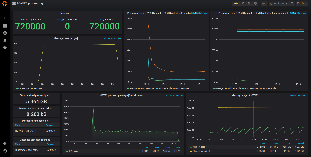

With DMaaP Kafka

Conditions

...

Raw result data, including screenshots, can be found in the following files:

- Series 1 - results_series_1_with_dmaap.zip

- Series 2 - results_series_2_with_dmaap.zip

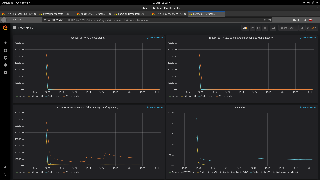

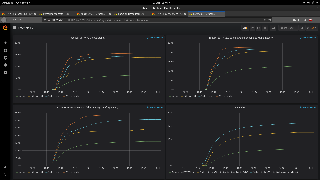

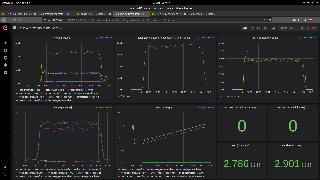

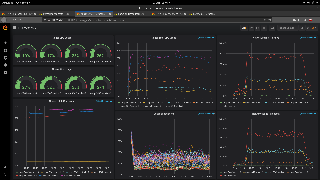

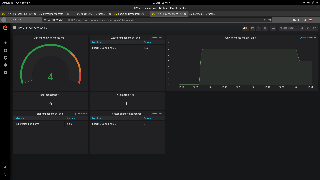

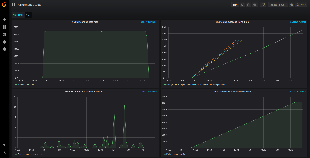

Test Results - series 1

The tables below show the test results across a wide range of container counts.

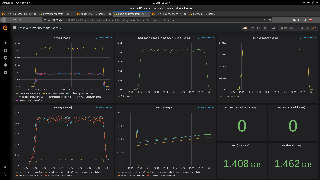

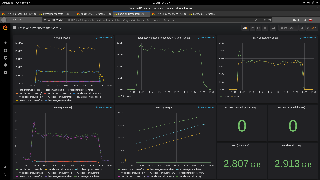

Test Results - series 2


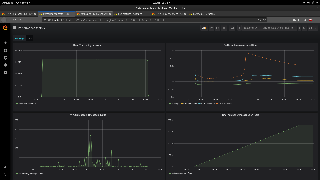

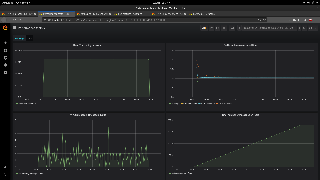

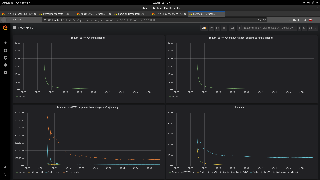

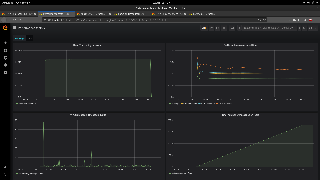

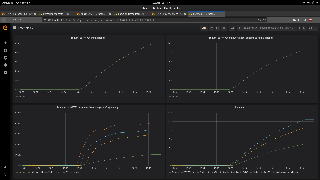

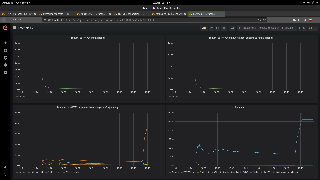

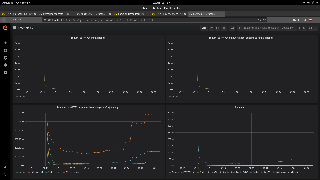

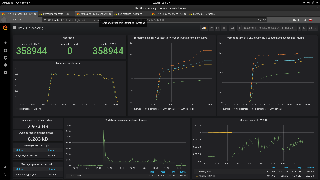

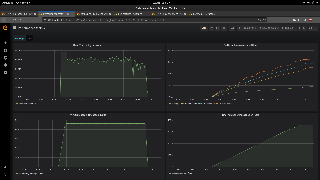

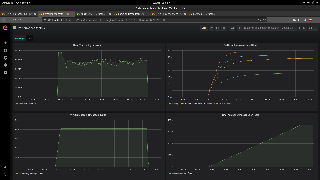

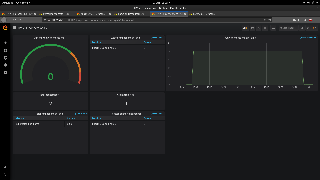

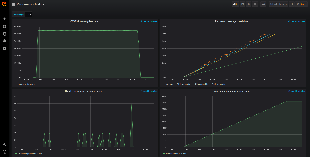

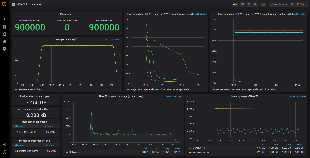

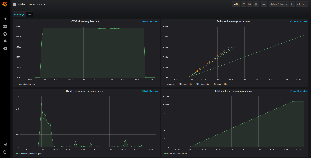

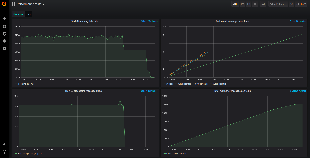

No DMaaP Kafka Setup

...

Raw result data, including screenshots, can be found in the following files:

- Series 1 - results_series_1.zip

- Series 2 - results_series_2.zip

To see custom Kafka metrics, you may want to change kafka-and-producers.json (located in the HV-VES project directory tools/performance/cloud/grafana/dashboards) to

...

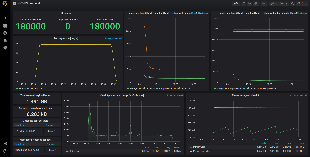

The tables below show the test results across a wide range of container counts.

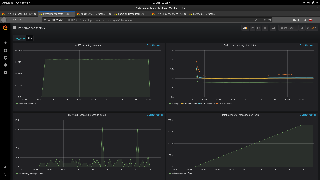

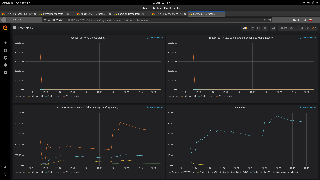

Test results - series 2

The tables below show the test results across a wide range of container counts.