
20180616: This page is deprecated. Use Cloud Native Deployment instead.

This page details the Rancher RI installation independent of the deployment target (Physical, Openstack, AWS, Azure, GCD, Bare-metal, VMware)

see ONAP on Kubernetes#HardwareRequirements

Pre-requisite

The supported versions are as follows:

| ONAP Release | Rancher | Kubernetes | Helm | Kubectl | Docker |

|---|---|---|---|---|---|

| Amsterdam | 1.6.10 | 1.7.7 | 2.3.0 | 1.7.7 | 1.12.x |

| Beijing | 1.6.14 | 1.8.10 | 2.8.2 | 1.8.10 | 17.03-ce |
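The table above can be checked against a host with a small helper. This is a minimal sketch, not part of the ONAP scripts: `version_matches` and `check_tool` are hypothetical names, and the caller is assumed to pass in the version string that each tool reports.

```shell
# Sketch: compare an installed version against a supported one from the table.
# A trailing ".x" in the expected value (as in "1.12.x") matches any patch level.
version_matches() {
  local expected="$1" actual="$2"
  case "$expected" in
    *.x)
      # "1.12.x" -> prefix "1.12." must start the actual version
      case "$actual" in
        "${expected%x}"*) return 0 ;;
        *) return 1 ;;
      esac
      ;;
    *)
      [ "$actual" = "$expected" ]
      ;;
  esac
}

# Print one status line per tool: name, actual version, verdict.
check_tool() {
  local name="$1" expected="$2" actual="$3"
  if version_matches "$expected" "$actual"; then
    echo "$name $actual OK"
  else
    echo "$name $actual MISMATCH (expected $expected)"
  fi
}
```

For the Amsterdam row, `check_tool docker 1.12.x 1.12.6` reports OK, while `check_tool helm 2.3.0 2.6.1` reports a mismatch.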

...

Rancher 1.6

...

Installation

The following applies to the amsterdam and master branches.

Scenario: installing Rancher on a clean Ubuntu 16.04 128 GB VM (single colocated server/host)

Note: amsterdam requires a different onap-parameters.yaml

Cloud Native Deployment#UndercloudInstall-Rancher/Kubernetes/Helm/Docker

| Code Block |

|---|

wget https://git.onap.org/logging-analytics/plain/deploy/rancher/oom_rancher_setup.sh |

...

Fetch the continuous deployment script (until it is merged)

| Code Block |

|---|

wget https://git.onap.org/logging-analytics/plain/deploy/cd.sh

chmod 777 cd.sh

wget https://jira.onap.org/secure/attachment/ID/aaiapisimpledemoopenecomporg.cer

wget https://jira.onap.org/secure/attachment/ID/onap-parameters.yaml

wget https://jira.onap.org/secure/attachment/ID/aai-cloud-region-put.json

./cd.sh -b master -n onap

# wait about 25-120 min depending on the speed of your network pulling docker images |
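While waiting for the images to pull, progress can be polled instead of watched by hand. The sketch below is an assumption on my part, not something cd.sh is known to provide: `count_not_running` and `wait_for_pods` are hypothetical helpers that read the STATUS column of `kubectl get pods --all-namespaces`.

```shell
# Count pods whose STATUS column is neither Running nor Completed.
# stdin: output of `kubectl get pods --all-namespaces` (header on line 1).
count_not_running() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { n++ } END { print n + 0 }'
}

# Poll once a minute until every pod is up, or give up after $1 tries.
# Limitation: an empty pod list also counts as "done", so start polling
# only after the deployment has begun creating pods.
wait_for_pods() {
  local tries="${1:-120}" out pending
  while [ "$tries" -gt 0 ]; do
    if out="$(kubectl get pods --all-namespaces 2>/dev/null)"; then
      pending="$(printf '%s\n' "$out" | count_not_running)"
      [ "$pending" -eq 0 ] && return 0
      echo "$pending pods not yet Running"
    fi
    sleep 60
    tries=$((tries - 1))
  done
  return 1
}
```

Calling `wait_for_pods 120` matches the upper end of the 25-120 minute estimate above.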

Config

Rancher Host IP or FQDN

Which IP/FQDN to use when running the oom_rancher_setup.sh script or installing Rancher manually


You can also add an entry to /etc/hosts that maps a hostname to an IP and use that name as the server. I do this for Azure.

If you cannot ping your IP, then Rancher will not be able to either.

Run ifconfig and pick the non-docker IP. I have also used the 172 docker IP in public-facing subnets to work around the lockdown of port 10250 in public (against crypto miners), but in a private subnet you can use the real IP.
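The "pick the non-docker IP" rule can be scripted. This is a rough sketch under my own assumptions: it parses `ip -4 -o addr show` rather than ifconfig, `pick_host_ip` and `can_ping` are hypothetical names, and the list of interface prefixes to skip is a guess at what a Rancher/Kubernetes host typically has.

```shell
# Print the first IPv4 address that is not on loopback or a container bridge.
# stdin: output of `ip -4 -o addr show` (one address per line).
pick_host_ip() {
  awk '$2 != "lo" && $2 !~ /^(docker|veth|br-|flannel|cni)/ {
         split($4, a, "/")   # strip the /prefix-length suffix
         print a[1]
         exit
       }'
}

# Rancher needs to reach the address too, so check that it answers a ping.
can_ping() {
  ping -c 1 -W 2 "$1" > /dev/null 2>&1
}
```

Typical use would be `ip -4 -o addr show | pick_host_ip`, followed by `can_ping` on the result before passing it to the setup script.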

For example:

| Code Block |

|---|

obrienbiometrics:logging-analytics michaelobrien$ dig beijing.onap.cloud

;; ANSWER SECTION:

beijing.onap.cloud. 299 IN A 13.72.107.69

ubuntu@a-ons-auto-beijing:~$ ifconfig

docker0 Link encap:Ethernet HWaddr 02:42:8b:f4:74:95

inet addr:172.17.0.1 Bcast:0.0.0.0 Mask:255.255.0.0

eth0 Link encap:Ethernet HWaddr 00:0d:3a:1b:5e:03

inet addr:10.0.0.4 Bcast:10.0.0.255 Mask:255.255.255.0

# I could use 172.17.0.1 only for a single colocated host

# but 10.0.0.4 is the correct IP (my public facing subnet)

# In my case I use -b beijing.onap.cloud

# but in all other cases I could use the hostname

ubuntu@a-ons-auto-beijing:~$ sudo cat /etc/hosts

127.0.0.1 a-ons-auto-beijing |

The following will be automated shortly

Run the above script on a clean Ubuntu 16.04 VM (you may need to set your hostname in /etc/hosts)

Log in on port 8880

Create a Kubernetes environment in Rancher

Add your host - run the docker agent command shown in the GUI

Wait for the Kubernetes CLI to provide a token, then copy and paste it to ~/.kube/config

Beijing Branch Installation

Rancher 1.6.14

see https://gerrit.onap.org/r/#/c/32019/2/install/rancher/oom_rancher_setup.sh

The following will be automated shortly

Run the above script on a clean Ubuntu 16.04 VM (you may need to set your hostname in /etc/hosts)

Log in on port 8880

Create a Kubernetes environment in Rancher

Add your host - run the docker agent command shown in the GUI

Wait for the Kubernetes CLI to provide a token, then copy and paste it to ~/.kube/config

Experimental Installation

Rancher 2.0

see https://gerrit.onap.org/r/#/c/32037/1/install/rancher/oom_rancher2_setup.sh

| Code Block |

|---|

./oom_rancher2_setup.sh -s amsterdam.onap.info |

Run the above script on a clean Ubuntu 16.04 VM (you may need to set your hostname in /etc/hosts)

The cluster will be created and registered for you.

Log in on port 80 and wait for the cluster to turn green, then hit the kubectl button and copy and paste the contents to ~/.kube/config
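Once ~/.kube/config is in place, it is worth confirming that the paste actually produced a usable file. The helper below is a hedged sketch (`kubeconfig_server` is a hypothetical name): it only checks that the file is non-empty and has a `server:` entry, and prints that URL.

```shell
# Print the API server URL from a kubeconfig, or fail if the file is
# missing, empty, or has no "server:" entry.
# $1 = path to kubeconfig (defaults to ~/.kube/config)
kubeconfig_server() {
  local cfg="${1:-$HOME/.kube/config}" server
  if [ ! -s "$cfg" ]; then
    echo "missing or empty: $cfg" >&2
    return 1
  fi
  # Take the first server entry and strip optional double quotes.
  server="$(awk '$1 == "server:" { gsub(/"/, "", $2); print $2; exit }' "$cfg")"
  if [ -z "$server" ]; then
    echo "no server entry in $cfg" >&2
    return 1
  fi
  echo "$server"
}
```

If the URL looks right, `kubectl cluster-info` confirms that the API server answers with the pasted credentials.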

Result

| Code Block |

|---|

root@ip-172-31-84-230:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

66e823e8ebb8 gcr.io/google_containers/defaultbackend@sha256:865b0c35e6da393b8e80b7e3799f777572399a4cff047eb02a81fa6e7a48ed4b "/server" 3 minutes ago Up 3 minutes k8s_default-http-backend_default-http-backend-66b447d9cf-t4qxx_ingress-nginx_54afe3f8-1455-11e8-b142-169c5ae1104e_0

7c9a6eeeb557 rancher/k8s-dns-sidecar-amd64@sha256:4581bf85bd1acf6120256bb5923ec209c0a8cfb0cbe68e2c2397b30a30f3d98c "/sidecar --v=2 --..." 3 minutes ago Up 3 minutes k8s_sidecar_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

72487327e65b rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_default-http-backend-66b447d9cf-t4qxx_ingress-nginx_54afe3f8-1455-11e8-b142-169c5ae1104e_0

d824193e7404 rancher/k8s-dns-dnsmasq-nanny-amd64@sha256:bd1764fed413eea950842c951f266fae84723c0894d402a3c86f56cc89124b1d "/dnsmasq-nanny -v..." 3 minutes ago Up 3 minutes k8s_dnsmasq_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

89bdd61a99a3 rancher/k8s-dns-kube-dns-amd64@sha256:9c7906c0222ad6541d24a18a0faf3b920ddf66136f45acd2788e1a2612e62331 "/kube-dns --domai..." 3 minutes ago Up 3 minutes k8s_kubedns_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

7c17fc57aef9 rancher/cluster-proportional-autoscaler-amd64@sha256:77d2544c9dfcdfcf23fa2fcf4351b43bf3a124c54f2da1f7d611ac54669e3336 "/cluster-proporti..." 3 minutes ago Up 3 minutes k8s_autoscaler_kube-dns-autoscaler-54fd4c549b-6bm5b_kube-system_51afa75f-1455-11e8-b142-169c5ae1104e_0

024269154b8b rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

48e039d15a90 rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-dns-autoscaler-54fd4c549b-6bm5b_kube-system_51afa75f-1455-11e8-b142-169c5ae1104e_0

13bec6fda756 rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_nginx-ingress-controller-vchhb_ingress-nginx_54aede27-1455-11e8-b142-169c5ae1104e_0

332073b160c9 rancher/coreos-flannel-cni@sha256:3cf93562b936004cbe13ed7d22d1b13a273ac2b5092f87264eb77ac9c009e47f "/install-cni.sh" 3 minutes ago Up 3 minutes k8s_install-cni_kube-flannel-jgx9x_kube-system_4fb9b39b-1455-11e8-b142-169c5ae1104e_0

79ef0da922c5 rancher/coreos-flannel@sha256:93952a105b4576e8f09ab8c4e00483131b862c24180b0b7d342fb360bbe44f3d "/opt/bin/flanneld..." 3 minutes ago Up 3 minutes k8s_kube-flannel_kube-flannel-jgx9x_kube-system_4fb9b39b-1455-11e8-b142-169c5ae1104e_0

300eab7db4bc rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-flannel-jgx9x_kube-system_4fb9b39b-1455-11e8-b142-169c5ae1104e_0

1597f8ba9087 rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 3 minutes ago Up 3 minutes kube-proxy

523034c75c0e rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes kubelet

788d572d313e rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes scheduler

9e520f4e5b01 rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes kube-controller

29bdb59c9164 rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes kube-api

2686cc1c904a rancher/coreos-etcd:v3.0.17 "/usr/local/bin/et..." 4 minutes ago Up 4 minutes etcd

a1fccc20c8e7 rancher/agent:v2.0.2 "run.sh --etcd --c..." 5 minutes ago Up 5 minutes unruffled_pike

6b01cf361a52 rancher/server:preview "rancher --k8s-mod..." 5 minutes ago Up 5 minutes 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp rancher-server |

OOM ONAP Deployment Script


https://gerrit.onap.org/r/32653

Helm DevOps

https://docs.helm.sh/chart_best_practices/#requirements

Kubernetes DevOps

From original ONAP on Kubernetes page

Kubernetes specific config

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Deleting All Containers

Delete all the containers (and services)
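One way to approach this with plain kubectl is to delete the ONAP namespaces, which removes the pods and services inside them. This is my own sketch and not the procedure the page originally showed: `onap_delete_commands` is a hypothetical helper, and it assumes the ONAP namespaces share an `onap` prefix (as in onap-robot and onap-vid elsewhere on this page). It only prints the commands so they can be reviewed first.

```shell
# Print a `kubectl delete namespace` command for each ONAP namespace.
# stdin: output of `kubectl get namespaces -o name`
# $1 = namespace prefix (assumed to be "onap")
onap_delete_commands() {
  local prefix="${1:-onap}" line ns
  while IFS= read -r line; do
    ns="${line##*/}"          # drop the "namespace/" or "namespaces/" prefix
    case "$ns" in
      "$prefix"|"$prefix"-*) echo "kubectl delete namespace $ns" ;;
    esac
  done
}
```

Running `kubectl get namespaces -o name | onap_delete_commands` shows what would be deleted; piping that output to `sh` executes it.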


Delete/Rerun config-init container for /dockerdata-nfs refresh

refer to the procedure as part of https://github.com/obrienlabs/onap-root/blob/master/cd.sh

Delete the config-init container and its generated /dockerdata-nfs share

There may be cases where new configuration content needs to be deployed after a pull of a new version of ONAP.

For example, after a pull brings in files like the following (20170902):

| Code Block |

|---|

root@ip-172-31-93-160:~/oom/kubernetes/oneclick# git pull

Resolving deltas: 100% (135/135), completed with 24 local objects.

From http://gerrit.onap.org/r/oom

bf928c5..da59ee4 master -> origin/master

Updating bf928c5..da59ee4

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/metadata.rb | 7 +

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/recipes/aai-resources-aai-keystore.rb | 8 +

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/CHANGELOG.md | 2 +-

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/README.md | 4 +- |

see (worked with Zoran) OOM-257 - DevOps: OOM config reset procedure for new /dockerdata-nfs content CLOSED


Container Endpoint access

Check the services view in the Kubernetes API under robot

robot.onap-robot:88 TCP

robot.onap-robot:30209 TCP

| Code Block |

|---|

kubectl get services --all-namespaces -o wide

onap-vid vid-mariadb None <none> 3306/TCP 1h app=vid-mariadb

onap-vid vid-server 10.43.14.244 <nodes> 8080:30200/TCP 1h app=vid-server |
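The externally reachable port is the second number in the PORT(S) column (30200 for vid-server above). A small sketch for pulling it out of the listing follows; `service_nodeport` is a hypothetical helper, and it scans for the first `port:nodePort/protocol` field rather than relying on a fixed column position, since the column layout differs between kubectl versions.

```shell
# Print the first NodePort of a service.
# $1 = service name; stdin = `kubectl get services --all-namespaces -o wide`
service_nodeport() {
  awk -v svc="$1" '
    $2 == svc {
      for (i = 3; i <= NF; i++) {
        # Match entries such as 8080:30200/TCP
        if ($i ~ /^[0-9]+:[0-9]+\//) {
          split($i, p, "[:/]")
          print p[2]
          exit
        }
      }
    }'
}
```

A service without a NodePort, such as vid-mariadb above, yields no output.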

Container Logs

| Code Block |

|---|

kubectl --namespace onap-vid logs -f vid-server-248645937-8tt6p

16-Jul-2017 02:46:48.707 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 22520 ms

kubectl --namespace onap-portal logs portalapps-2799319019-22mzl -f

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

onap-robot robot-44708506-dgv8j 1/1 Running 0 36m 10.42.240.80 obriensystemskub0

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-robot logs -f robot-44708506-dgv8j

2017-07-16 01:55:54: (log.c.164) server started |
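Pod names carry generated suffixes, so the name has to be looked up before each `logs` call. The following is a small sketch of that lookup; `pod_name` is a hypothetical helper that matches on namespace and name prefix in the `kubectl get pods` listing.

```shell
# Print the first pod in a namespace whose name starts with a prefix.
# $1 = namespace, $2 = pod name prefix
# stdin = `kubectl get pods --all-namespaces -o wide`
pod_name() {
  awk -v ns="$1" -v pre="$2" '$1 == ns && index($2, pre) == 1 { print $2; exit }'
}
```

For example, `kubectl --namespace onap-robot logs -f "$(kubectl get pods --all-namespaces -o wide | pod_name onap-robot robot)"` follows the robot log without copying the pod name by hand.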

A pod may be set up to log to a volume that can be inspected outside of the container. If you cannot connect to the container, you can inspect the backing volume instead. When a pod uses a volume of the emptyDir type, the log files can be found on the Kubernetes node hosting the pod. More details can be found at https://kubernetes.io/docs/concepts/storage/volumes/#emptydir

Here is an example of finding SDNC logs on a VM hosting a Kubernetes node.
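A minimal sketch of the lookup, assuming the kubelet uses its default root directory /var/lib/kubelet (a Rancher-managed kubelet may be configured differently). `emptydir_path` is a hypothetical helper, and the namespace, pod name and volume name in the comments are placeholders rather than values taken from this page.

```shell
# Build the on-node directory that backs an emptyDir volume.
# $1 = pod UID, $2 = volume name, $3 = kubelet root dir (assumed default)
emptydir_path() {
  printf '%s/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' \
    "${3:-/var/lib/kubelet}" "$1" "$2"
}

# Usage on the node hosting the pod (placeholders in angle brackets):
#   uid="$(kubectl -n <namespace> get pod <pod-name> -o jsonpath='{.metadata.uid}')"
#   ls "$(emptydir_path "$uid" <volume-name>)"
```

The volume name is the one declared under `volumes:` in the pod spec, which `kubectl get pod <pod-name> -o yaml` shows.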

Robot Logs

| Code Block |

|---|

Yogini and I needed the logs in OOM Kubernetes - they were already there, behind a robot:robot auth

http://<your_dns_name>:30209/logs/demo/InitDistribution/report.html

for example after a

oom/kubernetes/robot$ ./demo-k8s.sh distribute

find your path to the logs by using for example

root@ip-172-31-57-55:/dockerdata-nfs/onap/robot# kubectl --namespace onap-robot exec -it robot-4251390084-lmdbb bash

root@robot-4251390084-lmdbb:/# ls /var/opt/OpenECOMP_ETE/html/logs/demo/InitD

InitDemo/ InitDistribution/

path is

http://<your_dns_name>:30209/logs/demo/InitDemo/log.html#s1-s1-s1-s1-t1 |

SSH into ONAP containers

Normally I would do this via https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

| Code Block |

|---|

Get the pod name via

kubectl get pods --all-namespaces -o wide

bash into the pod via

kubectl -n onap-mso exec -it mso-1648770403-8hwcf /bin/bash |

Push Files to Pods

Trying to get an authorization file into the robot pod

| Code Block |

|---|

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/home/ubuntu

above works? |

Redeploying Code war/jar in a docker container

see building the docker image - use your own local repo or a repo on dockerhub - modify the values.yaml and delete/create your pod to switch images

example in LOG-136 - Logging RI: Code/build/tag microservice docker image IN PROGRESS

Turn on Debugging

via URL

http://cd.onap.info:30223/mso/logging/debug

via logback.xml

Attaching a debugger to a docker container

Running ONAP Portal UI Operations

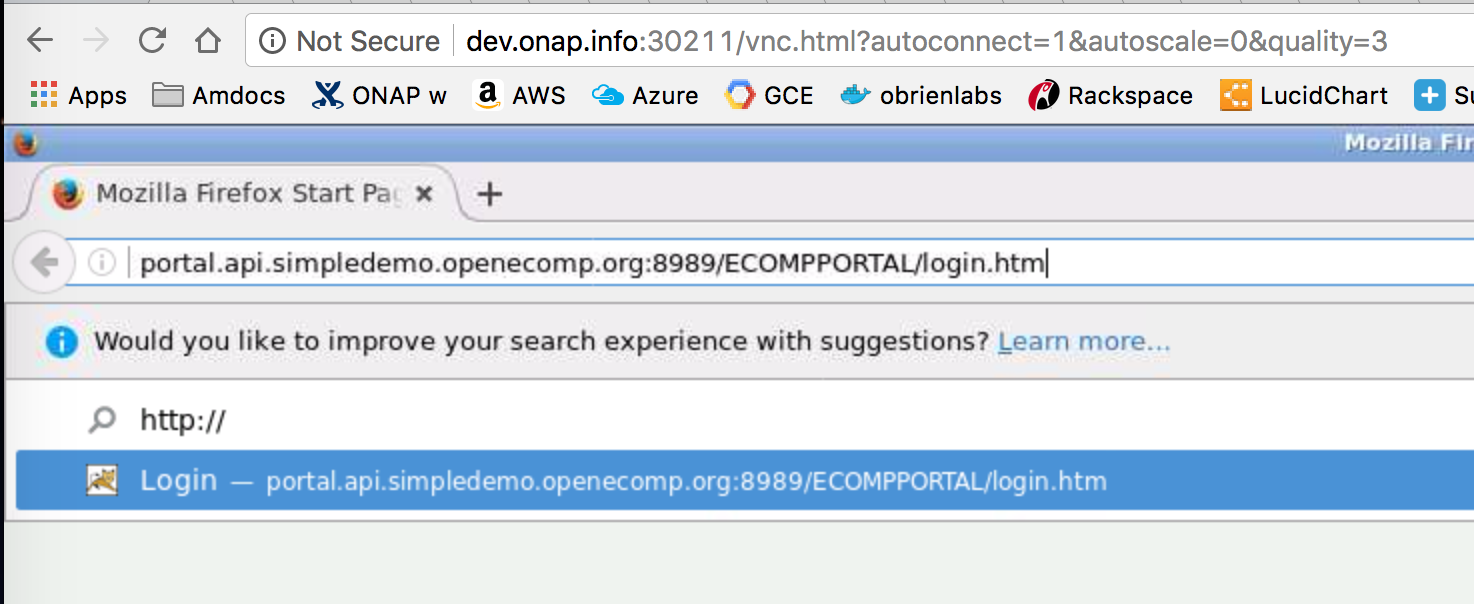

Running ONAP using the vnc-portal

see (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

or run the vnc-portal container to access ONAP using the traditional port mappings. See the following recorded video by Mike Elliot of the OOM team for an audio-visual reference

Check for the vnc-portal port (it is always 30211)

launch the vnc-portal in a browser

password is "password"

Open firefox inside the VNC vm - launch portal normally

http://portal.api.simpledemo.onap.org:8989/ONAPPORTAL/login.htm

For login details to get into the ONAP Portal, see Tutorial: Accessing the ONAP Portal

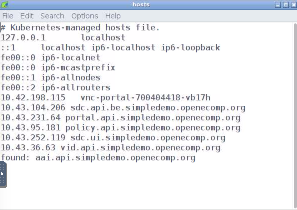

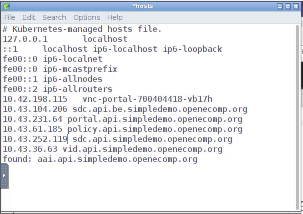

(20170906) Before running SDC - fix the /etc/hosts (thanks Yogini for catching this) - edit your /etc/hosts as follows

(change sdc.ui to sdc.api)

OOM-282 - vnc-portal requires /etc/hosts url fix for SDC sdc.ui should be sdc.api CLOSED

| before | after | notes |

|---|---|---|
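The sdc.ui to sdc.api change can be applied with sed. This is a sketch under the assumption that the edit is made to /etc/hosts inside the vnc-portal container, as OOM-282 suggests; `fix_sdc_hosts` is a hypothetical helper. It rewrites the file contents in place instead of using `sed -i`, because /etc/hosts inside a container is usually a bind mount that cannot be replaced by rename.

```shell
# Replace every "sdc.ui" with "sdc.api" in a hosts file.
# $1 = file to edit (defaults to /etc/hosts)
fix_sdc_hosts() {
  local file="${1:-/etc/hosts}" tmp status
  tmp="$(mktemp)" || return 1
  # Write the edited copy to a temp file, then overwrite the original's
  # contents so that the original inode (bind mount) is preserved.
  sed 's/sdc\.ui/sdc.api/g' "$file" > "$tmp" && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

Run it as root inside the container, then check the result with `grep sdc /etc/hosts`.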

login and run SDC

Continue with the normal ONAP demo flow at (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

Running Multiple ONAP namespaces

Run multiple environments on the same machine - TODO

Troubleshooting

Rancher fails to restart on server reboot

Having issues after a reboot of a colocated server/agent

Installing Clean Ubuntu

| Code Block |

|---|

apt-get install ssh

apt-get install ubuntu-desktop |

DNS resolution

ignore - not relevant

Search Line limits were exceeded, some dns names have been omitted, the applied search line is: default.svc.cluster.local svc.cluster.local cluster.local kubelet.kubernetes.rancher.internal kubernetes.rancher.internal rancher.internal

https://github.com/rancher/rancher/issues/9303

Config Pod fails to start with Error

Make sure your OpenStack parameters are set if you get the following error when starting up the config pod

|