...

This page details the Rancher RI installation, independent of the deployment target (Physical, OpenStack, AWS, Azure, GCD, Bare-metal, VMware).

Pre-requisite

The supported versions are as follows:

...

Delete all the containers (and services)


Delete/Rerun config-init container for /dockerdata-nfs refresh

...

For example, after a git pull brings in files like the following (20170902):

    root@ip-172-31-93-160:~/oom/kubernetes/oneclick# git pull
    Resolving deltas: 100% (135/135), completed with 24 local objects.
    From http://gerrit.onap.org/r/oom
       bf928c5..da59ee4  master     -> origin/master
    Updating bf928c5..da59ee4
    kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/metadata.rb | 7 +
    kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/recipes/aai-resources-aai-keystore.rb | 8 +
    kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/CHANGELOG.md | 2 +-
    kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/README.md | 4 +-

See OOM-257 (DevOps: OOM config reset procedure for new /dockerdata-nfs content, closed), worked out with Zoran.

Container Endpoint access

...

robot.onap-robot:30209 TCP

    kubectl get services --all-namespaces -o wide
    onap-vid   vid-mariadb   None           <none>    3306/TCP         1h   app=vid-mariadb
    onap-vid   vid-server    10.43.14.244   <nodes>   8080:30200/TCP   1h   app=vid-server
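As a rough sketch of how the externally reachable NodePorts can be pulled out of that listing: the helper below (the name `list_nodeports` is hypothetical, not part of OOM) reads the output of `kubectl get services --all-namespaces -o wide` on stdin and prints the namespace, service name and NodePort for every `port:nodePort/PROTO` entry. It assumes the first two columns are the namespace and the service name, which holds only when `--all-namespaces` is used.

```shell
# Hypothetical helper: list "namespace service nodePort" for each exposed port.
# Input: output of `kubectl get services --all-namespaces -o wide` on stdin.
list_nodeports() {
  awk '
    {
      # Scan every column after the name, so the position of PORT(S) does not matter.
      for (f = 3; f <= NF; f++) {
        n = split($f, ports, ",")          # PORT(S) may hold several comma-separated entries
        for (i = 1; i <= n; i++) {
          if (ports[i] ~ "^[0-9]+:[0-9]+/") {
            split(ports[i], parts, "[:/]") # parts[1]=port, parts[2]=nodePort, parts[3]=protocol
            print $1, $2, parts[2]
          }
        }
      }
    }'
}

# Usage (needs a configured kubectl):
#   kubectl get services --all-namespaces -o wide | list_nodeports
```

Services that expose only a cluster-internal port, such as `3306/TCP` for vid-mariadb above, produce no output line.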

Container Logs

    kubectl --namespace onap-vid logs -f vid-server-248645937-8tt6p
    16-Jul-2017 02:46:48.707 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 22520 ms
    kubectl --namespace onap-portal logs portalapps-2799319019-22mzl -f
    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide
    NAMESPACE    NAME                   READY   STATUS    RESTARTS   AGE   IP             NODE
    onap-robot   robot-44708506-dgv8j   1/1     Running   0          36m   10.42.240.80   obriensystemskub0
    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-robot logs -f robot-44708506-dgv8j
    2017-07-16 01:55:54: (log.c.164) server started
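Because pod names carry a generated suffix, the two steps above (list the pods, then ask for logs) can be combined. The following is a minimal sketch, not an OOM script: `find_pod` and `tail_pod_logs` are hypothetical names, and the `KUBECTL` variable is only an assumption that makes the binary overridable.

```shell
# Assumption: kubectl is on the PATH; override KUBECTL to point elsewhere.
KUBECTL="${KUBECTL:-kubectl}"

# find_pod NAMESPACE PREFIX
# Reads `kubectl get pods --all-namespaces` output on stdin and prints the
# first pod in NAMESPACE whose name starts with PREFIX.
find_pod() {
  awk -v ns="$1" -v prefix="$2" \
    '$1 == ns && index($2, prefix) == 1 { print $2; exit }'
}

# tail_pod_logs NAMESPACE PREFIX [LINES]
# Resolves the pod name, then prints its last LINES log lines (default 100).
tail_pod_logs() {
  pod=$("$KUBECTL" get pods --all-namespaces -o wide | find_pod "$1" "$2")
  [ -n "$pod" ] || { echo "no pod matching $2 in $1" >&2; return 1; }
  "$KUBECTL" --namespace "$1" logs --tail="${3:-100}" "$pod"
}

# Usage:
#   tail_pod_logs onap-robot robot 50
```

If several pods share the prefix, only the first one listed is used.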

A pod may be set up to log to a volume that can be inspected from outside the container. If you cannot connect to the container, you can inspect the backing volume instead. For a pod whose volume is of the emptyDir type, the log files can be found on the Kubernetes node hosting the pod. More details can be found at https://kubernetes.io/docs/concepts/storage/volumes/#emptydir

Here is an example of finding SDNC logs on a VM hosting a Kubernetes node.
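As a rough sketch of the general lookup, assuming the kubelet uses its default root directory `/var/lib/kubelet`: an emptyDir volume lives on the node under a directory named after the pod UID and the volume name. The function names below are hypothetical, and the pod and volume names in the usage comment are placeholders to be replaced with real ones.

```shell
# Assumption: kubectl is on the PATH; override KUBECTL to point elsewhere.
KUBECTL="${KUBECTL:-kubectl}"

# pod_uid NAMESPACE POD
# Prints the UID of the pod (run this where kubectl is configured).
pod_uid() {
  "$KUBECTL" --namespace "$1" get pod "$2" -o jsonpath='{.metadata.uid}'
}

# emptydir_path POD_UID VOLUME_NAME
# Prints the node-side directory backing an emptyDir volume,
# assuming the default kubelet root directory /var/lib/kubelet.
emptydir_path() {
  printf '/var/lib/kubelet/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' "$1" "$2"
}

# Usage (placeholders in angle brackets are not real names):
#   uid=$(pod_uid onap-sdnc <sdnc-pod-name>)
#   ls "$(emptydir_path "$uid" <volume-name>)"   # run on the node hosting the pod
```

The volume name is the one given under `volumes:` in the pod spec; `kubectl describe pod` shows it together with the node the pod runs on.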


Robot Logs

Yogini and I needed the logs in OOM Kubernetes. They were already there, served with robot:robot auth at http://<your_dns_name>:30209/logs/demo/InitDistribution/report.html

For example, after running

    oom/kubernetes/robot$ ./demo-k8s.sh distribute

find your path to the logs by using, for example,

    root@ip-172-31-57-55:/dockerdata-nfs/onap/robot# kubectl --namespace onap-robot exec -it robot-4251390084-lmdbb bash
    root@robot-4251390084-lmdbb:/# ls /var/opt/OpenECOMP_ETE/html/logs/demo/InitD
    InitDemo/ InitDistribution/

The path is http://<your_dns_name>:30209/logs/demo/InitDemo/log.html#s1-s1-s1-s1-t1
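The report can also be fetched from the command line. This is a minimal sketch under the assumptions stated in the text (port 30209, robot:robot basic auth, logs under /logs/demo/); the function names are hypothetical.

```shell
# robot_log_url HOST TESTCASE [PAGE]
# Builds the URL of a robot report page; PAGE defaults to report.html.
robot_log_url() {
  printf 'http://%s:30209/logs/demo/%s/%s\n' "$1" "$2" "${3:-report.html}"
}

# fetch_robot_report HOST TESTCASE
# Downloads the report using the robot:robot credentials; fails on HTTP errors.
fetch_robot_report() {
  curl --fail --silent --user robot:robot "$(robot_log_url "$1" "$2")"
}

# Usage:
#   fetch_robot_report <your_dns_name> InitDistribution > report.html
```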

SSH into ONAP containers

Normally I would get a shell with kubectl exec, as described at https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

Get the pod name via

    kubectl get pods --all-namespaces -o wide

then bash into the pod via

    kubectl -n onap-mso exec -it mso-1648770403-8hwcf /bin/bash

Push Files to Pods

Trying to get an authorization file into the robot pod

    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/home/ubuntu

Does the above work?
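One way to answer that question is to list the file inside the pod right after copying it. The sketch below is an assumption-laden helper, not an OOM script: `push_file` is a hypothetical name, it spells out the full destination path rather than relying on how `kubectl cp` treats a directory target, and `kubectl cp` itself needs `tar` to be present in the container.

```shell
# Assumption: kubectl is on the PATH; override KUBECTL to point elsewhere.
KUBECTL="${KUBECTL:-kubectl}"

# push_file LOCAL_FILE NAMESPACE POD DEST_DIR
# Copies LOCAL_FILE into DEST_DIR inside the pod, then lists it to confirm it arrived.
push_file() {
  file=$1 ns=$2 pod=$3 dest=$4
  target="$dest/$(basename "$file")"
  "$KUBECTL" cp "$file" "$ns/$pod:$target" &&
    "$KUBECTL" --namespace "$ns" exec "$pod" -- ls -l "$target"
}

# Usage:
#   push_file authorization onap-robot robot-44708506-nhm0n /home/ubuntu
```

A non-zero exit status means either the copy or the follow-up listing failed.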

Redeploying Code war/jar in a docker container

...

Check the vnc-portal port (it is always 30211).

launch the vnc-portal in a browser

...

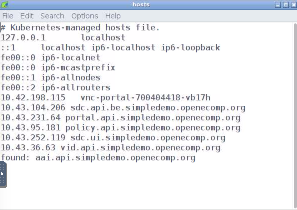

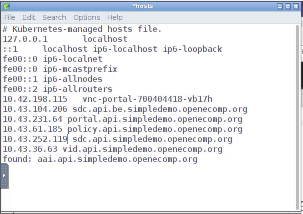

See OOM-282 (vnc-portal requires /etc/hosts url fix for SDC: sdc.ui should be sdc.api), closed.

| before | after | notes |
|---|---|---|

login and run SDC

Continue with the normal ONAP demo flow at (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

...

Having issues after a reboot of a colocated server/agent

Installing Clean Ubuntu

    apt-get install ssh
    apt-get install ubuntu-desktop

DNS resolution

ignore - not relevant

...

Make sure your OpenStack parameters are set if you get the following when starting up the config pod

|