This is a DRAFT page for collecting ideas and opinions on the possible evolution of DMaaP.

Byung-Woo Jun, Fiachra Corcoran

Message Router

Current State

DMaaP Message Router uses an HTTP REST API to serve all publish and consume transactions. Because it follows HTTP and REST conventions, clients as varied as cURL, Java applications, and even web browsers can interact with Message Router.

Message Router uses AAF for user authentication and authorization.

The Message Router service places no requirements on what publishers can put onto a topic. Messages are opaque to the service and are treated as raw bytes. Passing JSON messages is generally preferred, but this is due to higher-level features and related systems, not to the Message Router broker itself.
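As an illustration of the REST interaction described above, the following minimal Python sketch builds (but does not send) a publish request and a consume request. It assumes the `POST /events/{topic}` and `GET /events/{topic}/{consumerGroup}/{consumerId}` paths with `timeout` and `limit` query parameters, as we understand the Message Router API, and HTTP Basic authentication with AAF credentials. The base URL, port, and helper names are hypothetical and should be checked against the deployed version.

```python
import base64
import json
import urllib.parse
import urllib.request

# Hypothetical base URL: host and port depend on the deployment.
MR_BASE_URL = "https://message-router:3905"


def basic_auth(user: str, password: str) -> str:
    """Return an HTTP Basic Authorization value (assumption: AAF credentials)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_publish_request(topic: str, payload: object, user: str, password: str) -> urllib.request.Request:
    """POST /events/{topic}. The body is opaque to Message Router; JSON is only a convention."""
    url = f"{MR_BASE_URL}/events/{urllib.parse.quote(topic, safe='')}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": basic_auth(user, password)},
    )


def build_consume_request(topic: str, group: str, consumer_id: str, user: str, password: str,
                          timeout_ms: int = 15000, limit: int = 10) -> urllib.request.Request:
    """GET /events/{topic}/{group}/{consumer_id}; a successful reply implicitly acknowledges the batch."""
    path = "/".join(urllib.parse.quote(part, safe="") for part in (topic, group, consumer_id))
    query = urllib.parse.urlencode({"timeout": timeout_ms, "limit": limit})
    return urllib.request.Request(
        f"{MR_BASE_URL}/events/{path}?{query}",
        method="GET",
        headers={"Authorization": basic_auth(user, password)},
    )
```

Passing either request to `urllib.request.urlopen` would perform the actual call. Note that the consume request carries no way for the client to commit explicitly: delivery and acknowledgement happen in the same GET.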

Challenges to be addressed

The current Message Router implementation does not give the receiver control over commits, which severely limits both the delivery semantics and the performance of the bus.

The current Message Router implementation does not let the producer choose a partition key per message when sending a group of messages.
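To illustrate what a per-message partition key gives the producer, the following Python sketch splits one group send of `(key, value)` pairs by partition, so that all messages with the same key land on the same partition and keep their relative order. The helper names are hypothetical, and CRC32 is used only as a stable, dependency-free hash: Kafka's default partitioner hashes the serialized key with murmur2, so real partition numbers will differ.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition. Illustration only: Kafka's default uses murmur2, not CRC32."""
    return zlib.crc32(key) % num_partitions


def split_batch(batch: List[Tuple[bytes, bytes]],
                num_partitions: int) -> Dict[int, List[Tuple[bytes, bytes]]]:
    """Split a group send into per-partition sub-batches, preserving order within each key."""
    by_partition: Dict[int, List[Tuple[bytes, bytes]]] = defaultdict(list)
    for key, value in batch:
        by_partition[partition_for(key, num_partitions)].append((key, value))
    return dict(by_partition)


if __name__ == "__main__":
    batch = [(b"cell-1", b"alarm-a"), (b"cell-2", b"alarm-b"), (b"cell-1", b"clear-a")]
    for partition, records in sorted(split_batch(batch, 6).items()):
        print(partition, records)
```

Keying by an entity identifier (here a hypothetical cell ID) is what lets a consumer rely on ordering per entity while the topic as a whole is spread over several partitions.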

The ONAP AAF security model is bespoke to ONAP, which means off-the-shelf components from other communities generally cannot integrate with it. This is not a DMaaP problem, but it will have an impact on the target architecture if not addressed.

Topic administration in ONAP does not allow the topic retention to be configured.
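For reference, Kafka itself controls retention per topic through the `retention.ms` and `retention.bytes` topic configs (the latter applies per partition, and `-1` means no limit for both). A topic administration API could accept something like the hypothetical helper below and pass the resulting settings through to Kafka, for example via the Kafka admin client or the `config` section of a Strimzi `KafkaTopic` resource. How ONAP would expose this is still an open question.

```python
from datetime import timedelta
from typing import Dict, Optional


def retention_config(retention: Optional[timedelta] = None,
                     max_bytes_per_partition: Optional[int] = None) -> Dict[str, str]:
    """Build Kafka topic-level retention settings (hypothetical helper).

    None means "no limit", which Kafka expresses as -1. Values are strings because
    topic configs are passed to Kafka as string key/value pairs.
    """
    ms = -1 if retention is None else int(retention.total_seconds() * 1000)
    size = -1 if max_bytes_per_partition is None else max_bytes_per_partition
    return {"retention.ms": str(ms), "retention.bytes": str(size)}
```

For example, `retention_config(timedelta(days=7))` yields a 7-day time limit (`"604800000"` ms) with no size limit.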

Secure, low-latency access to data also needs further consideration.

Message Router exposes a subset of the Kafka semantics and has a client-aware architecture that relies on ZooKeeper to “move” the Kafka adapter associated with a consumer from one server to another. It does not support acknowledgements: messages are considered acknowledged once a reply to a GET has been sent successfully. If a client crashes immediately after receiving a reply, before processing the messages, those messages are never redelivered, so at-least-once semantics are broken. This makes Message Router in its current form unsuitable for mission-critical data and control flows.
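The difference between acknowledging on reply and letting the consumer commit can be shown with a small in-memory simulation. This is a hypothetical model, not Message Router code: `Partition` stands in for one topic partition plus one consumer group's committed offset, `poll_ack_on_reply` advances the offset as soon as a batch is returned (the current behaviour described above), and `poll_then_commit` advances it only after each message has been processed.

```python
from typing import Callable, List


class Partition:
    """In-memory stand-in for one partition and a consumer group's committed offset."""

    def __init__(self, messages: List[str]) -> None:
        self.messages = messages
        self.committed = 0  # offset of the next message to deliver


def poll_ack_on_reply(part: Partition, limit: int) -> List[str]:
    """Current model: the batch counts as acknowledged as soon as the reply is produced."""
    batch = part.messages[part.committed:part.committed + limit]
    part.committed += len(batch)
    return batch


def poll_then_commit(part: Partition, limit: int, process: Callable[[str], None]) -> None:
    """Consumer-controlled model: commit each message only after it was processed."""
    batch = part.messages[part.committed:part.committed + limit]
    for message in batch:
        process(message)     # if this raises, the offset is not advanced
        part.committed += 1  # cumulative commit up to and including this message


if __name__ == "__main__":
    # Client "crashes" right after the reply: m1 and m2 are never redelivered.
    lossy = Partition(["m1", "m2", "m3"])
    poll_ack_on_reply(lossy, 2)
    print("ack-on-reply next offset:", lossy.committed)  # 2

    # Same crash with commit-after-processing: m1 is redelivered on the next poll.
    safe = Partition(["m1", "m2", "m3"])

    def crash(_: str) -> None:
        raise RuntimeError("client crashed")

    try:
        poll_then_commit(safe, 2, crash)
    except RuntimeError:
        pass
    print("commit-after-processing next offset:", safe.committed)  # 0
```

The price of the second model is a possible duplicate if a crash happens between processing and commit, which is exactly what at-least-once delivery allows.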

Opportunities to be investigated

Deployment of Kafka: does adopting a component such as Strimzi add any value, for example in terms of configurability?

Security: evolving ONAP towards native OAuth 2 support would allow every component, including Kafka, to provide authentication. This requires broader analysis.
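If ONAP moved towards OAuth 2, a non-interactive component would typically obtain an access token with the client credentials grant (RFC 6749, section 4.4) and then present it as a bearer token (RFC 6750). The Python sketch below only builds the token request. The token endpoint URL, client ID, and scope are hypothetical, and whether ONAP would use this particular grant is part of the broader analysis.

```python
import base64
import urllib.parse
import urllib.request
from typing import Dict, Optional

# Hypothetical token endpoint of an OAuth 2 provider.
TOKEN_URL = "https://auth.example.org/oauth2/token"


def client_credentials_request(client_id: str, client_secret: str,
                               scope: Optional[str] = None) -> urllib.request.Request:
    """Build an RFC 6749 section 4.4 token request (not sent here)."""
    form = {"grant_type": "client_credentials"}
    if scope:
        form["scope"] = scope
    # RFC 6749 section 2.3.1: form-urlencode the ID and secret, then use HTTP Basic auth.
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    basic = base64.b64encode(pair.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=urllib.parse.urlencode(form).encode("ascii"),
        method="POST",
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Authorization": f"Basic {basic}",
        },
    )


def bearer_header(access_token: str) -> Dict[str, str]:
    """RFC 6750: attach the access token to subsequent requests."""
    return {"Authorization": f"Bearer {access_token}"}
```

The appeal of this model is that the same token can be validated by any component that trusts the provider, rather than each component integrating with a bespoke security framework.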

Message Router API: the principles behind a technology-agnostic, lightweight message API are good. However, there are now more options in the open-source ecosystem that support a broader range of the Kafka semantics. Should we consider aligning with one of the following?

- Kafka-Pixy supports gRPC and REST, with gRPC intended for production use. It supports some level of security and is Apache 2.0 licensed.

- Strimzi Kafka Bridge provides a fine-grained API to manage Kafka consumers that closely mirrors what the native Kafka adapter does. That includes individual and cumulative commits, creation and deletion of clients, partition assignment, etc. It also supports group send/receive of messages for better efficiency, as well as Kafka headers.

- Confluent REST proxy is similar in concept to the Strimzi bridge. It is stateful, so when it is scaled out the client needs visibility of the individual REST proxy instances, or a guarantee that any gateways/load balancers in front of them always route it to the same instance. That implies the same deployment constraints as the Strimzi Bridge.
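To make the comparison concrete, the Python sketch below builds (without sending) the sequence of bridge calls a consumer with explicit commits would make, plus a keyed group send. The paths and content types reflect our reading of the Strimzi Kafka Bridge documentation (the Confluent REST proxy v2 API is similar) and should be verified against the bridge version in use. The bridge address and helper names are hypothetical.

```python
import json
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical bridge address.
BRIDGE_URL = "http://kafka-bridge:8080"
V2 = "application/vnd.kafka.v2+json"
JSON_V2 = "application/vnd.kafka.json.v2+json"

# (HTTP method, URL, headers, JSON body or None)
Call = Tuple[str, str, Dict[str, str], Optional[str]]


def produce(topic: str, records: List[Tuple[str, Any]]) -> Call:
    """Group send: one POST carrying several records, each with its own partition key."""
    body = {"records": [{"key": key, "value": value} for key, value in records]}
    return ("POST", f"{BRIDGE_URL}/topics/{topic}", {"Content-Type": JSON_V2}, json.dumps(body))


def create_consumer(group: str, name: str) -> Call:
    # Auto-commit disabled so that the client decides when offsets are committed.
    body = {"name": name, "format": "json", "auto.offset.reset": "earliest",
            "enable.auto.commit": False}
    return ("POST", f"{BRIDGE_URL}/consumers/{group}", {"Content-Type": V2}, json.dumps(body))


def subscribe(group: str, name: str, topics: List[str]) -> Call:
    url = f"{BRIDGE_URL}/consumers/{group}/instances/{name}/subscription"
    return ("POST", url, {"Content-Type": V2}, json.dumps({"topics": topics}))


def poll(group: str, name: str) -> Call:
    url = f"{BRIDGE_URL}/consumers/{group}/instances/{name}/records"
    return ("GET", url, {"Accept": JSON_V2}, None)


def commit(group: str, name: str, topic: str, partition: int, next_offset: int) -> Call:
    # Kafka convention: the committed offset is the next one to read (last processed + 1).
    body = {"offsets": [{"topic": topic, "partition": partition, "offset": next_offset}]}
    url = f"{BRIDGE_URL}/consumers/{group}/instances/{name}/offsets"
    return ("POST", url, {"Content-Type": V2}, json.dumps(body))
```

Because a consumer instance lives in the bridge replica that created it (the create call returns a `base_uri` for that instance), the subscribe, poll, and commit calls must reach the same replica; this is the stateful deployment constraint noted above.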

Strimzi looks like a suitable candidate; we propose analysing two aspects:

Some analysis output here

Native broker API: for low-latency, high-volume use cases, how do we securely expose the native Kafka APIs?

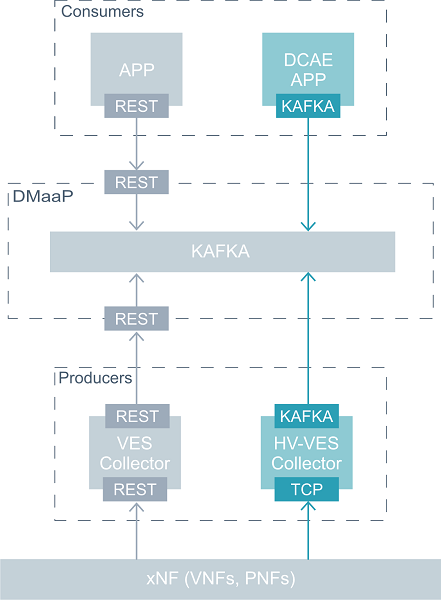

HV-VES supports reading from and writing to Kafka directly: https://docs.onap.org/projects/onap-dcaegen2/en/latest/sections/services/ves-hv/architecture.html
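One possible answer to the native-access question is to expose Kafka listeners that require TLS plus SASL authentication, which Kafka supports natively. The Python sketch below only assembles standard Java Kafka client properties for SCRAM authentication over TLS (`SASL_SSL` with `SCRAM-SHA-512`). The broker address, credentials, and truststore path are hypothetical, and SCRAM is just one option: Kafka also supports the OAUTHBEARER SASL mechanism, which may fit better depending on the outcome of the security discussion.

```python
from typing import Dict


def scram_client_properties(bootstrap: str, username: str, password: str,
                            truststore: str, truststore_password: str) -> Dict[str, str]:
    """Java Kafka client settings for SCRAM-SHA-512 over TLS.

    Assumes username and password contain no double quotes or backslashes,
    since they are embedded verbatim in the JAAS string.
    """
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{username}" password="{password}";'
    )
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL",  # TLS for transport, SASL for client authentication
        "sasl.mechanism": "SCRAM-SHA-512",
        "sasl.jaas.config": jaas,
        "ssl.truststore.location": truststore,
        "ssl.truststore.password": truststore_password,
    }


def to_properties(props: Dict[str, str]) -> str:
    """Render settings as a .properties file body, one key=value per line."""
    return "\n".join(f"{key}={value}" for key, value in sorted(props.items()))
```

The rendered text can be saved as a client `.properties` file for a Java Kafka client; other client libraries use different option names for the same settings.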

Challenges to be addressed

Opportunities to be investigated

Integration with external storage systems

- Public cloud services

- Data lakes

- NFS?

- S3?

3 Comments

Damian Nowak

I think we should allow direct access to the Kafka bus for both producers and subscribers.

Both should be able to push/pull events to/from Kafka topics natively, and have CRUD access through a REST interface. It should be possible to define architectures where both publishers and subscribers communicate natively with Kafka. HV-VES is already such a publisher, and changes have been made in the DCAE MOD project to support orchestrating native Kafka topics.

I also agree that the current Kafka implementation, using CADI/AAF, is a bottleneck. I think there is actually a SASL provider implemented in Kafka itself.

We have run performance tests with DCAE collectors (results should be available in the DCAE project) in which we replaced DMaaP-Kafka with the "wurstmeister-kafka" Docker container, probably the most popular dockerized distribution of Kafka on Docker Hub, and we immediately saw much better results, even without advanced features like partitioning.

There is clearly a bottleneck in the current DMaaP-Kafka architecture, which needs to be removed to support high-volume, low-latency data.

What about a possible integration of Keycloak, which is becoming a de facto standard for authorization in cloud-native applications?

Ciaran Johnston

Hi Damian,

Makes sense, yes, to have both native and REST access.

The CADI / AAF question is related but also requires broader consideration beyond DMaaP. There seems to be other ongoing work on multi-tenancy, and SDN-R is looking at an OAuth provider using Keycloak, which could be promising.

Byung-Woo Jun

The CADI / AAF replacement is a broader consideration. There is separate ongoing work in OOM, SECCOM and ARCCOM, and an OAuth2 provider using Keycloak makes sense. Once that is settled, we can provide more details.