- Created by Alexis de Talhouët, last modified by Martin Ouimet on Sep 07, 2018

Pre-requisite

The supported versions are as follows:

| ONAP Release | Rancher | Kubernetes | Helm | Kubectl | Docker |
|---|---|---|---|---|---|
| Amsterdam | 1.6.10 | 1.7.7 | 2.3.0 | 1.7.7 | 1.12.x |
| Beijing | 1.6.14 | 1.8.10 | 2.8.2 | 1.8.10 | 17.03-ce |
| Casablanca | 1.6.18 | 1.8.10 | 2.9.1 | 1.8.10 | 17.03-ce |

This page covers the amsterdam branch with DCAEGEN2 support, which uses different Rancher, Helm, Kubernetes and Docker versions than the later releases.

see ONAP on Kubernetes#HardwareRequirements

Installing Docker/Rancher/Helm/Kubectl

Run the following script as root to install the appropriate versions of Docker and Rancher. Rancher installs Kubernetes and Helm; the script installs the helm and kubectl clients.

Adding hosts to the kubernetes cluster is done through the rancher UI.

Related issue: OOM-715

https://gerrit.onap.org/r/#/c/32019/17/install/rancher/oom_rancher_setup.sh

Overall required resources:

| | Nbr VM | vCPUs | RAM (GB) | Disk (GB) | Swap (GB) | Floating IPs |
|---|---|---|---|---|---|---|
| Rancher | 1 | 2 | 4 | 40 | - | - |
| Kubernetes | 1 | 8 | 80-128 | 100 | 16 | - |
| DCAE | 15 | 44 | 88 | 880 | - | 15 |
| Total | 17 | 54 | 156-220 | 1020 | 16 | 15 |

| Number | Flavor | Size (vCPU/RAM/HD) |
|---|---|---|
| 1 | m1.small | 1/2/20 |
| 7 (pg, doks, dokp, cnsl, orcl) | m1.medium | 2/4/40 |
| 7 (cdap) | m1.large | 8/8/80 |
| 0 | m1.xlarge | 8/16/160 |
| 1 (oom) | m1.xxlarge (oom) | 12/64/160 |
| 1 (oom) | m1.medium | 2/4/40 |

Below is the HEAT portion of the setup in OOM, minus the 64 GB OOM VM and the 4 GB OOM-Rancher VM.

Setup infrastructure

Rancher

Create a plain Ubuntu VM in your cloud infrastructure.

The following specs are enough for Rancher

VCPUs: 2, RAM: 4 GB, Disk: 40 GB

Set up Rancher 1.6.10 (amsterdam branch only) by running this command:

docker run -d -p 8880:8080 rancher/server:v1.6.10

Navigate to Rancher UI

http://<rancher-vm-ip>:8880
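The Rancher server may take a moment to start answering after the container is launched. The following is a minimal bash sketch, not part of the original procedure, that builds the UI URL and polls it until it responds. The function names `rancher_url` and `wait_for_rancher`, the `RANCHER_PORT` variable, and the retry count and delay are assumptions chosen for illustration; the default port 8880 comes from the `-p 8880:8080` mapping in the docker run command above.

```shell
# Sketch (bash): build the Rancher UI URL and poll it until the server answers.
# RANCHER_PORT is a hypothetical override; 8880 is the host port published above.

rancher_url() {
  # $1: IP or hostname of the Rancher VM
  local host="$1"
  local port="${RANCHER_PORT:-8880}"
  printf 'http://%s:%s\n' "$host" "$port"
}

wait_for_rancher() {
  # $1: IP or hostname of the Rancher VM
  # $2: maximum number of attempts (default 30), with 10 s between attempts
  local url attempts i
  url="$(rancher_url "$1")"
  attempts="${2:-30}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail makes curl return non-zero on HTTP errors (4xx/5xx)
    if curl --silent --fail --output /dev/null "$url"; then
      echo "Rancher is reachable at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 10
  done
  echo "Rancher did not answer at $url" >&2
  return 1
}
```

For example, `wait_for_rancher <rancher-vm-ip>` would return once the UI is reachable, or fail after the configured number of attempts.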

- Set up basic access control: Admin → Access Control

- Install OpenStack as machine driver: Admin → Machine Drivers

We're now all set to create our Kubernetes host.

Kubernetes on Rancher

see related ONAP on Kubernetes#QuickstartInstallation

Video describing all the steps

- Create an environment
  - Default → Managed Environments
  - Click Add Environment
  - Fill in the Name and the Description
  - Select Kubernetes as Environment Template
  - Click Create
- Create an API key: API → Keys
  - Click Add Account API Key
  - Fill in the Name and the Description
  - Click Create
  - Back up the Access Key and the Secret Key
- Retrieve your environment ID
  - Navigate to the previously created environment
  - In the browser URL, you should see the following, containing your <env-id>:

    http://<rancher-vm-ip>:8880/env/<env-id>/kubernetes/dashboard
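If you prefer not to pick the ID out of the URL by eye, it can be extracted with shell parameter expansion. This is only a sketch: the function name `env_id_from_url` is hypothetical, and it assumes the URL has the `/env/<env-id>/` layout shown above.

```shell
# Sketch (bash): extract the environment ID from a Rancher dashboard URL.

env_id_from_url() {
  # $1: dashboard URL copied from the browser
  local url="$1" rest
  case "$url" in
    */env/*) ;;
    *)
      echo "no /env/<env-id>/ segment in: $url" >&2
      return 1
      ;;
  esac
  rest="${url#*/env/}"          # drop everything up to and including "/env/"
  printf '%s\n' "${rest%%/*}"   # keep the text before the next "/"
}
```

The result can be stored in a variable, for example `ENVIRONMENT_ID="$(env_id_from_url "$URL")"`, and reused when calling the Rancher API.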

Create the Kubernetes host on OpenStack.

Using Rancher API

Make sure to fill in the placeholders as follows:

{API_ACCESS_KEY}: The API access key created in the previous step

{API_SECRET_KEY}: The API secret key created in the previous step

{ENVIRONMENT_ID}: The <env-id> retrieved in the previous step

{OPENSTACK_INSTANCE}: The OpenStack instance name to give to your K8S VM

{OPENSTACK_IP}: The IP of your OpenStack deployment

{RANCHER_IP}: The IP of the Rancher VM created previously

{K8S_FLAVOR}: The flavor to use for the Kubernetes VM. Recommended specs: 8 vCPUs, 64 GB RAM, 100 GB disk, 16 GB swap. Swap is added because most ONAP applications are idle most of the time, so it is fine to let the host move their dirty pages to swap.

{UBUNTU_1604}: The Ubuntu 16.04 image

{PRIVATE_NETWORK_NAME}: A private network

{OPENSTACK_TENANT_NAME}: OpenStack tenant

{OPENSTACK_USERNAME}: OpenStack username

{OPENSTACK_PASSWORD}: OpenStack password

    curl -u "{API_ACCESS_KEY}:{API_SECRET_KEY}" \
    -X POST \
    -H 'Accept: application/json' \
    -H 'Content-Type: application/json' \
    -d '{
    "hostname":"{OPENSTACK_INSTANCE}",
    "engineInstallUrl":"wget https://raw.githubusercontent.com/rancher/install-docker/master/1.12.6.sh",
    "openstackConfig":{
    "authUrl":"http://{OPENSTACK_IP}:5000/v3",
    "domainName":"Default",
    "endpointType":"adminURL",
    "flavorName":"{K8S_FLAVOR}",
    "imageName":"{UBUNTU_1604}",
    "netName":"{PRIVATE_NETWORK_NAME}",
    "password":"{OPENSTACK_PASSWORD}",
    "sshUser":"ubuntu",
    "tenantName":"{OPENSTACK_TENANT_NAME}",
    "username":"{OPENSTACK_USERNAME}"}
    }' \
    'http://{RANCHER_IP}:8880/v2-beta/projects/{ENVIRONMENT_ID}/hosts/'

- Doing it manually

- Create a VM in your VIM with the appropriate specs (see point above)

- Provision it with Docker. You can find the proper version of Docker to install for Rancher 1.6 here: http://rancher.com/docs/rancher/v1.6/en/hosts/#supported-docker-versions

- In Rancher, go to Infrastructure → Hosts and click the Add Host button. Follow the instruction "Copy, paste, and run the command below to register the host with Rancher:" on the new VM.

- Let's wait a few minutes until it's ready.
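For the Rancher API route described above, filling in the placeholders by hand is error-prone. The sketch below renders the same JSON body from environment variables named after the placeholders. It is an illustration under assumptions, not the original author's tooling: the function name `rancher_host_payload` is hypothetical, the variables must be set by you, and values are inserted verbatim, so they must not contain double quotes or backslashes.

```shell
# Sketch (bash): render the JSON body of the Rancher host-creation call from
# environment variables named after the placeholders listed above.

rancher_host_payload() {
  local name
  for name in OPENSTACK_INSTANCE OPENSTACK_IP K8S_FLAVOR UBUNTU_1604 \
              PRIVATE_NETWORK_NAME OPENSTACK_TENANT_NAME \
              OPENSTACK_USERNAME OPENSTACK_PASSWORD; do
    # ${!name} is bash indirect expansion: the value of the variable named $name.
    if [ -z "${!name:-}" ]; then
      echo "missing required variable: $name" >&2
      return 1
    fi
  done
  cat <<EOF
{
  "hostname": "${OPENSTACK_INSTANCE}",
  "engineInstallUrl": "wget https://raw.githubusercontent.com/rancher/install-docker/master/1.12.6.sh",
  "openstackConfig": {
    "authUrl": "http://${OPENSTACK_IP}:5000/v3",
    "domainName": "Default",
    "endpointType": "adminURL",
    "flavorName": "${K8S_FLAVOR}",
    "imageName": "${UBUNTU_1604}",
    "netName": "${PRIVATE_NETWORK_NAME}",
    "password": "${OPENSTACK_PASSWORD}",
    "sshUser": "ubuntu",
    "tenantName": "${OPENSTACK_TENANT_NAME}",
    "username": "${OPENSTACK_USERNAME}"
  }
}
EOF
}
```

The output can be piped to curl with `-d @-` in place of the inline `-d '...'` body of the call above, which keeps the password out of the command line you type.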

- Get your kubectl config

- Click Kubernetes → CLI

- Click Generate Config

Copy and paste the generated config on your host, into

~/.kube/config

If you have multiple Kubernetes environments, you can give each file a different name instead of config, then reference all your kubectl configs in your bash_profile as follows:

    KUBECONFIG=\
    /Users/adetalhouet/.kube/k8s.adetalhouet1.env:\
    /Users/adetalhouet/.kube/k8s.adetalhouet2.env:\
    /Users/adetalhouet/.kube/k8s.adetalhouet3.env
    export KUBECONFIG
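Rather than listing every file by hand, the colon-separated value can be assembled from whatever config files are present. This is a sketch under assumptions: the function name `build_kubeconfig` is hypothetical, and it assumes your per-environment files all end in `.env`, as in the example above.

```shell
# Sketch (bash): join every *.env file in a directory into a KUBECONFIG value.

build_kubeconfig() {
  # $1: directory holding the per-environment config files (default: ~/.kube)
  local dir="${1:-$HOME/.kube}" joined="" f
  for f in "$dir"/*.env; do
    [ -f "$f" ] || continue          # skip the unexpanded glob when nothing matches
    joined="${joined:+$joined:}$f"   # add ":" only between entries
  done
  printf '%s\n' "$joined"
}
```

In a bash_profile this could be used as `KUBECONFIG="$(build_kubeconfig)"` followed by `export KUBECONFIG`.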

Install kubectl

    curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.8.0/bin/linux/amd64/kubectl
    chmod +x ./kubectl
    sudo mv ./kubectl /usr/local/bin/kubectl

Make your kubectl use this new environment

kubectl config use-context <rancher-environment-name>

After a little bit, your environment should be ready. To verify, use the following command

    $ kubectl get pods --all-namespaces
    NAMESPACE     NAME                                   READY     STATUS    RESTARTS   AGE
    kube-system   heapster-4285517626-4dst0              1/1       Running   0          4m
    kube-system   kube-dns-638003847-lx9f2               3/3       Running   0          4m
    kube-system   kubernetes-dashboard-716739405-f0kgq   1/1       Running   0          4m
    kube-system   monitoring-grafana-2360823841-0hm22    1/1       Running   0          4m
    kube-system   monitoring-influxdb-2323019309-4mh1k   1/1       Running   0          4m
    kube-system   tiller-deploy-737598192-8nb31          1/1       Running   0          4m
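To avoid scanning the listing by eye, the STATUS column can be filtered. The following sketch assumes the six-column layout shown above (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE); the function name `not_running_pods` is hypothetical. Note that it also reports pods in states such as Completed, which may be perfectly normal for one-shot jobs.

```shell
# Sketch (bash): list pods whose STATUS is not "Running".
# Reads the output of `kubectl get pods --all-namespaces` on stdin.

not_running_pods() {
  # NR > 1 skips the header line; $4 is the STATUS column.
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4 }'
}
```

Usage would be `kubectl get pods --all-namespaces | not_running_pods`; empty output means every listed pod is Running.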

Deploy OOM

Video describing all the steps

We will basically follow this guide: http://onap.readthedocs.io/en/latest/submodules/oom.git/docs/oom_user_guide.html?highlight=oom

Clone OOM amsterdam branch

git clone -b amsterdam https://gerrit.onap.org/r/p/oom.git

- Prepare configuration

Edit the onap-parameters.yaml under

oom/kubernetes/config

Update (04/01/2018):

Since c/26645 is merged, you now have the ability to deploy DCAE from OOM.

You can disable DCAE deployment through the following parameters:

- set DEPLOY_DCAE=false

- set disableDcae: true in the dcaegen2 values.yaml located at oom/kubernetes/dcaegen2/values.yaml.

To have endpoints register with MSB, add your kubectl config token. The token can be found within the text you pasted from Rancher into ~/.kube/config. Paste this token string under kubeMasterAuthToken, located at

oom/kubernetes/kube2msb/values.yaml
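The token can also be read out of the kubectl config from the command line. This sketch assumes the generated config contains a line of the form `token: "<value>"` under the user entry, which you should verify against your own file; the function name `kube_token` is hypothetical.

```shell
# Sketch (bash): print the first token found in a kubectl config file.
# Assumes a line of the form:   token: "<value>"

kube_token() {
  # $1: path to the kubectl config (default: ~/.kube/config)
  local file="${1:-$HOME/.kube/config}"
  # Match the first line whose first field is "token:", strip quotes, stop.
  awk '$1 == "token:" { gsub(/"/, "", $2); print $2; exit }' "$file"
}
```

The printed value is what goes under kubeMasterAuthToken in the kube2msb values.yaml.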

Create the config

In Amsterdam, the namespace, passed with the -n parameter, has to be onap.

Work is currently being done to make this configurable in Beijing.

    cd oom/kubernetes/config
    ./createConfig.sh -n onap

This step creates the tree structure in the VM hosting ONAP to persist the data, and adds the initial config data to it. In Amsterdam, this is hardcoded to the path /dockerdata-nfs/onap/

Work is being done to make this configurable in Beijing, see OOM-145.

Deploy ONAP

In Amsterdam, the namespace, passed with the -n parameter, has to be onap.

Work is currently being done to make this configurable in Beijing.

    cd oom/kubernetes/oneclick
    ./createAll.bash -n onap
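The two steps, creating the config and then deploying, can be wrapped in one small function that also guards against a wrong namespace. This is a hedged sketch rather than an official OOM script: the function name `deploy_onap` and the `DRY_RUN` variable are hypothetical, and only the two commands and the `-n onap` constraint come from the steps above.

```shell
# Sketch (bash): run the config and deploy steps in order for the amsterdam branch.
# Set DRY_RUN=1 to print the commands instead of running them.

deploy_onap() {
  # $1: path to the cloned oom repository
  # $2: namespace (defaults to onap, the only value accepted in Amsterdam)
  local oom_dir="$1" namespace="${2:-onap}"
  if [ "$namespace" != "onap" ]; then
    echo "Amsterdam only supports the onap namespace, got: $namespace" >&2
    return 1
  fi
  if [ -n "${DRY_RUN:-}" ]; then
    echo "cd $oom_dir/kubernetes/config && ./createConfig.sh -n $namespace"
    echo "cd $oom_dir/kubernetes/oneclick && ./createAll.bash -n $namespace"
    return 0
  fi
  # Subshells keep the caller's working directory unchanged.
  (cd "$oom_dir/kubernetes/config" && ./createConfig.sh -n "$namespace") || return 1
  (cd "$oom_dir/kubernetes/oneclick" && ./createAll.bash -n "$namespace")
}
```

Running `DRY_RUN=1 deploy_onap ./oom` first shows exactly what would be executed before anything is changed on the cluster.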

Update (04/01/2018):

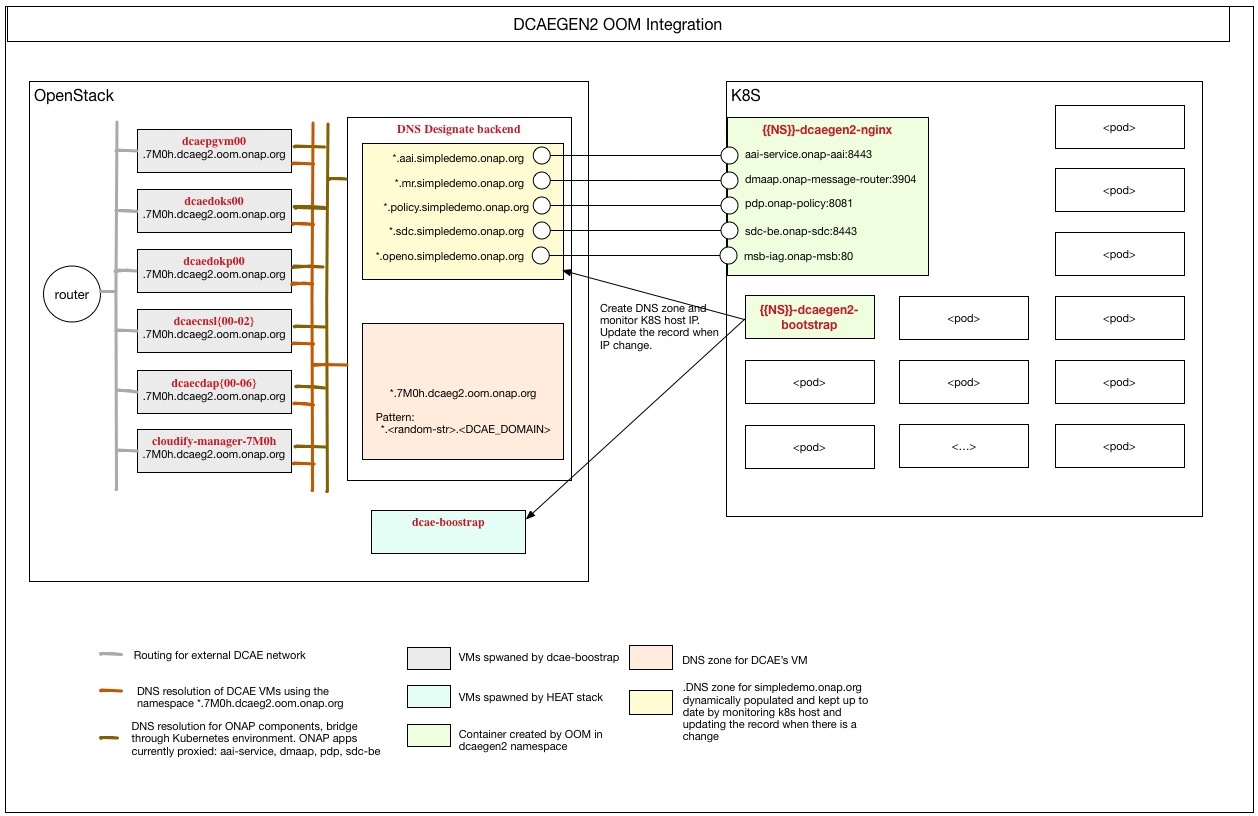

Since c/26645 is merged, two new containers are being deployed, under the onap-dcaegen2 namespace, as shown in the following diagram.

Deploying the dcaegen2 pod