2018-06-16: This page is deprecated. Use Cloud Native Deployment instead.

This page details the Rancher RI installation, independent of the deployment target (OpenStack, AWS, Azure, GCD, bare metal, VMware).

see ONAP on Kubernetes#HardwareRequirements

Pre-requisite

The supported versions are as follows:

| ONAP Release | Rancher | Kubernetes | Helm | Kubectl | Docker |
|---|---|---|---|---|---|
| Amsterdam | 1.6.10 | 1.7.7 | 2.3.0 | 1.7.7 | 1.12.x |
| Beijing | 1.6.14 | 1.8.10 | 2.8.2 | 1.8.10 | 17.03-ce |
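
The table above can also be checked mechanically. The following is a minimal sketch and not part of the ONAP scripts: the function names `supported_versions` and `version_matches` are hypothetical, and the version strings are copied from the table.

```shell
# Print the tested tool versions for an ONAP release (values from the table above).
# Output order: rancher kubernetes helm kubectl docker
supported_versions() {
  case "$1" in
    amsterdam) echo "1.6.10 1.7.7 2.3.0 1.7.7 1.12.x" ;;
    beijing)   echo "1.6.14 1.8.10 2.8.2 1.8.10 17.03-ce" ;;
    *)         echo "unknown release: $1" >&2; return 1 ;;
  esac
}

# Return 0 when the installed version starts with the expected version.
# This is a plain prefix comparison, not a full version parser.
version_matches() {
  vm_installed="$1"
  vm_expected="$2"
  vm_expected="${vm_expected%x}"     # "1.12.x"   -> "1.12."
  vm_expected="${vm_expected%-ce}"   # "17.03-ce" -> "17.03"
  case "$vm_installed" in
    "$vm_expected"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `version_matches "$(docker version --format '{{.Server.Version}}')" 17.03-ce` should succeed on a host running Docker 17.03.2-ce. Because the comparison is prefix-based, it only suits quick sanity checks.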

Rancher 1.6 Installation

The following applies to the amsterdam and master branches.

Scenario: installing Rancher on a clean Ubuntu 16.04 VM with 128 GB (single collocated server/host)

Note: amsterdam will require a different onap-parameters.yaml

Cloud Native Deployment#UndercloudInstall-Rancher/Kubernetes/Helm/Docker

wget https://git.onap.org/logging-analytics/plain/deploy/rancher/oom_rancher_setup.sh

Download the continuous deployment script and its inputs (until the script is merged):

wget https://git.onap.org/logging-analytics/plain/deploy/cd.sh

chmod 777 cd.sh

wget https://jira.onap.org/secure/attachment/ID/aaiapisimpledemoopenecomporg.cer

wget https://jira.onap.org/secure/attachment/1ID/onap-parameters.yaml

wget https://jira.onap.org/secure/attachment/ID/aai-cloud-region-put.json

./cd.sh -b master -n onap

# wait about 25-120 min, depending on how fast your network pulls the docker images
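
Rather than watching the clock during that wait, the pod list can be polled. This is a minimal sketch under stated assumptions: the function names `count_pending_pods` and `wait_for_pods` are hypothetical, the default timeout of 7200 seconds simply mirrors the 120 minute upper bound above, and the column layout is the one printed by `kubectl get pods --all-namespaces` (NAMESPACE NAME READY STATUS ...).

```shell
# Count pods that have not settled yet.
# Reads `kubectl get pods --all-namespaces` output on stdin.
# A pod counts as settled when it is Completed, or Running with all containers ready.
count_pending_pods() {
  awk 'NR > 1 {
    if ($4 == "Completed") next
    split($3, ready, "/")
    if ($4 != "Running" || ready[1] != ready[2]) n++
  } END { print n + 0 }'
}

# Poll once a minute until every pod has settled or the timeout (seconds) expires.
wait_for_pods() {
  wp_timeout="${1:-7200}"
  wp_waited=0
  while [ "$wp_waited" -lt "$wp_timeout" ]; do
    wp_pending=$(kubectl get pods --all-namespaces | count_pending_pods)
    [ "$wp_pending" -eq 0 ] && return 0
    echo "$wp_pending pods not ready after ${wp_waited}s"
    sleep 60
    wp_waited=$((wp_waited + 60))
  done
  return 1
}
```

Calling `wait_for_pods 7200` after `cd.sh` has been started returns 0 once nothing is pending and 1 on timeout.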

Config

Rancher Host IP or FQDN

Which IP or FQDN should you use when running the oom_rancher_setup.sh script or installing Rancher manually?

See OOM-715.

You can also edit your /etc/hosts to link a hostname to an IP and use this name as the server. I do this for Azure.

If you cannot ping your IP, then Rancher will not be able to either.

Run ifconfig and pick the non-docker IP there. In public-facing subnets I have also used the 172.x docker IP to work around port 10250 being locked down in public (because of crypto miners), but in a private subnet you can use the real IP.

for example

obrienbiometrics:logging-analytics michaelobrien$ dig beijing.onap.cloud

;; ANSWER SECTION:

beijing.onap.cloud. 299 IN A 13.72.107.69

ubuntu@a-ons-auto-beijing:~$ ifconfig

docker0 Link encap:Ethernet HWaddr 02:42:8b:f4:74:95

inet addr:172.17.0.1 Bcast:0.0.0.0 Mask:255.255.0.0

eth0 Link encap:Ethernet HWaddr 00:0d:3a:1b:5e:03

inet addr:10.0.0.4 Bcast:10.0.0.255 Mask:255.255.255.0

# I could use 172.17.0.1 only for a single collocated host

# but 10.0.0.4 is the correct IP (my public facing subnet)

# In my case I use -s beijing.onap.cloud

# but in all other cases I could use the hostname

ubuntu@a-ons-auto-beijing:~$ sudo cat /etc/hosts

127.0.0.1 a-ons-auto-beijing
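
The manual selection above can be sketched as a small filter. This is an illustration only: it swaps `ifconfig` for `ip -o -4 addr show` because the one-line-per-address output is easier to parse, the function name `candidate_ips` is hypothetical, and the interface prefixes it skips (`docker`, `br-`, `veth`) are assumptions about a typical Docker host.

```shell
# List "interface address" pairs that are candidates for the Rancher server IP.
# Reads `ip -o -4 addr show` output on stdin and skips loopback and
# docker-related interfaces (docker0, br-*, veth*).
candidate_ips() {
  awk '$2 != "lo" && $2 !~ /^(docker|br-|veth)/ {
    sub(/\/.*/, "", $4)   # strip the prefix length: 10.0.0.4/24 -> 10.0.0.4
    print $2, $4
  }'
}
```

Run it as `ip -o -4 addr show | candidate_ips`, then confirm with `ping -c 1 <address>` that the chosen address is reachable before passing it to the setup script.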

Experimental Installation

Rancher 2.0

see https://gerrit.onap.org/r/#/c/32037/1/install/rancher/oom_rancher2_setup.sh

./oom_rancher2_setup.sh -s amsterdam.onap.info

Run the above script on a clean Ubuntu 16.04 VM (you may need to set your hostname in /etc/hosts).

The cluster will be created and registered for you.

Log in on port 80 and wait for the cluster to turn green. Then click the kubectl button and copy the contents into ~/.kube/config.

Result

root@ip-172-31-84-230:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

66e823e8ebb8 gcr.io/google_containers/defaultbackend@sha256:865b0c35e6da393b8e80b7e3799f777572399a4cff047eb02a81fa6e7a48ed4b "/server" 3 minutes ago Up 3 minutes k8s_default-http-backend_default-http-backend-66b447d9cf-t4qxx_ingress-nginx_54afe3f8-1455-11e8-b142-169c5ae1104e_0

7c9a6eeeb557 rancher/k8s-dns-sidecar-amd64@sha256:4581bf85bd1acf6120256bb5923ec209c0a8cfb0cbe68e2c2397b30a30f3d98c "/sidecar --v=2 --..." 3 minutes ago Up 3 minutes k8s_sidecar_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

72487327e65b rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_default-http-backend-66b447d9cf-t4qxx_ingress-nginx_54afe3f8-1455-11e8-b142-169c5ae1104e_0

d824193e7404 rancher/k8s-dns-dnsmasq-nanny-amd64@sha256:bd1764fed413eea950842c951f266fae84723c0894d402a3c86f56cc89124b1d "/dnsmasq-nanny -v..." 3 minutes ago Up 3 minutes k8s_dnsmasq_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

89bdd61a99a3 rancher/k8s-dns-kube-dns-amd64@sha256:9c7906c0222ad6541d24a18a0faf3b920ddf66136f45acd2788e1a2612e62331 "/kube-dns --domai..." 3 minutes ago Up 3 minutes k8s_kubedns_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

7c17fc57aef9 rancher/cluster-proportional-autoscaler-amd64@sha256:77d2544c9dfcdfcf23fa2fcf4351b43bf3a124c54f2da1f7d611ac54669e3336 "/cluster-proporti..." 3 minutes ago Up 3 minutes k8s_autoscaler_kube-dns-autoscaler-54fd4c549b-6bm5b_kube-system_51afa75f-1455-11e8-b142-169c5ae1104e_0

024269154b8b rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-dns-6f7666d48c-9zmtf_kube-system_51b35ec8-1455-11e8-b142-169c5ae1104e_0

48e039d15a90 rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-dns-autoscaler-54fd4c549b-6bm5b_kube-system_51afa75f-1455-11e8-b142-169c5ae1104e_0

13bec6fda756 rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_nginx-ingress-controller-vchhb_ingress-nginx_54aede27-1455-11e8-b142-169c5ae1104e_0

332073b160c9 rancher/coreos-flannel-cni@sha256:3cf93562b936004cbe13ed7d22d1b13a273ac2b5092f87264eb77ac9c009e47f "/install-cni.sh" 3 minutes ago Up 3 minutes k8s_install-cni_kube-flannel-jgx9x_kube-system_4fb9b39b-1455-11e8-b142-169c5ae1104e_0

79ef0da922c5 rancher/coreos-flannel@sha256:93952a105b4576e8f09ab8c4e00483131b862c24180b0b7d342fb360bbe44f3d "/opt/bin/flanneld..." 3 minutes ago Up 3 minutes k8s_kube-flannel_kube-flannel-jgx9x_kube-system_4fb9b39b-1455-11e8-b142-169c5ae1104e_0

300eab7db4bc rancher/pause-amd64:3.0 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-flannel-jgx9x_kube-system_4fb9b39b-1455-11e8-b142-169c5ae1104e_0

1597f8ba9087 rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 3 minutes ago Up 3 minutes kube-proxy

523034c75c0e rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes kubelet

788d572d313e rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes scheduler

9e520f4e5b01 rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes kube-controller

29bdb59c9164 rancher/k8s:v1.8.7-rancher1-1 "/opt/rke/entrypoi..." 4 minutes ago Up 4 minutes kube-api

2686cc1c904a rancher/coreos-etcd:v3.0.17 "/usr/local/bin/et..." 4 minutes ago Up 4 minutes etcd

a1fccc20c8e7 rancher/agent:v2.0.2 "run.sh --etcd --c..." 5 minutes ago Up 5 minutes unruffled_pike

6b01cf361a52 rancher/server:preview "rancher --k8s-mod..." 5 minutes ago Up 5 minutes 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp rancher-server

OOM ONAP Deployment Script

See OOM-716.

https://gerrit.onap.org/r/32653

Helm DevOps

https://docs.helm.sh/chart_best_practices/#requirements

Kubernetes DevOps

From original ONAP on Kubernetes page

Kubernetes specific config

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Deleting All Containers

Delete all the containers (and services)


Delete/Rerun config-init container for /dockerdata-nfs refresh

refer to the procedure as part of https://github.com/obrienlabs/onap-root/blob/master/cd.sh

Delete the config-init container and its generated /dockerdata-nfs share

There may be cases where new configuration content needs to be deployed after a pull of a new version of ONAP.

for example after pull brings in files like the following (20170902)

root@ip-172-31-93-160:~/oom/kubernetes/oneclick# git pull

Resolving deltas: 100% (135/135), completed with 24 local objects.

From http://gerrit.onap.org/r/oom

bf928c5..da59ee4 master -> origin/master

Updating bf928c5..da59ee4

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/metadata.rb | 7 +

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/recipes/aai-resources-aai-keystore.rb | 8 +

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/CHANGELOG.md | 2 +-

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/README.md | 4 +-

See OOM-257 - DevOps: OOM config reset procedure for new /dockerdata-nfs content (closed; worked on with Zoran).

Container Endpoint access

Check the services view in the Kubernetes API under robot

robot.onap-robot:88 TCP

robot.onap-robot:30209 TCP

kubectl get services --all-namespaces -o wide

onap-vid vid-mariadb None <none> 3306/TCP 1h app=vid-mariadb

onap-vid vid-server 10.43.14.244 <nodes> 8080:30200/TCP 1h app=vid-server
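
To pull the externally reachable ports out of that listing, a filter along the following lines may help. It is a minimal sketch: the function name `list_node_ports` is hypothetical, and it assumes the column layout shown above, where PORT(S) is the fifth column (newer kubectl versions add a TYPE column, which would shift it).

```shell
# Print "namespace service nodePort" for every NodePort mapping.
# Reads `kubectl get services --all-namespaces` output on stdin
# (assumed columns: NAMESPACE NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE ...).
list_node_ports() {
  awk 'NR > 1 {
    n = split($5, ports, ",")
    for (i = 1; i <= n; i++) {
      if (ports[i] ~ /:/) {              # only "port:nodePort/proto" entries
        split(ports[i], p, "[:/]")
        print $1, $2, p[2]
      }
    }
  }'
}
```

For instance, `kubectl get services --all-namespaces | list_node_ports` would report 30200 for vid-server in the output above, while vid-mariadb is skipped because it exposes no node port.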

Container Logs

kubectl --namespace onap-vid logs -f vid-server-248645937-8tt6p

16-Jul-2017 02:46:48.707 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 22520 ms

kubectl --namespace onap-portal logs portalapps-2799319019-22mzl -f

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

onap-robot robot-44708506-dgv8j 1/1 Running 0 36m 10.42.240.80 obriensystemskub0

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-robot logs -f robot-44708506-dgv8j

2017-07-16 01:55:54: (log.c.164) server started
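
Pod names carry generated suffixes, so the lookup and the log command can be combined. The following is a sketch only; the function name `find_pod` is hypothetical and it matches on a plain name prefix.

```shell
# Print "namespace pod" for every pod whose name starts with the given prefix.
# Reads `kubectl get pods --all-namespaces` output on stdin.
find_pod() {
  awk -v prefix="$1" 'NR > 1 && index($2, prefix) == 1 { print $1, $2 }'
}

# Example use (requires a working cluster, so it is left commented out):
#   set -- $(kubectl get pods --all-namespaces | find_pod robot)
#   kubectl --namespace "$1" logs -f "$2"
```

If several pods share the prefix, the example use takes the first match, so a more specific prefix is safer.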

A pod may be set up to log to a volume that can be inspected from outside the container. If you cannot connect to the container, you can inspect the backing volume instead. For a pod that uses a volume of type emptyDir, the log files can be found on the Kubernetes node hosting the pod. More details can be found at https://kubernetes.io/docs/concepts/storage/volumes/#emptydir

here is an example of finding SDNC logs on a VM hosting a kubernetes node.
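
As a rough sketch of how such a lookup can work: the kubelet keeps emptyDir volumes under its root directory, keyed by pod UID and volume name. The function name `emptydir_path` is hypothetical, the sketch assumes the default kubelet root `/var/lib/kubelet`, and the pod and volume names in the usage note are placeholders rather than values from an actual SDNC deployment.

```shell
# Build the host directory that backs an emptyDir volume of a pod.
# Assumes the kubelet root directory is the default /var/lib/kubelet.
emptydir_path() {
  ep_pod_uid="$1"
  ep_volume="$2"
  echo "/var/lib/kubelet/pods/${ep_pod_uid}/volumes/kubernetes.io~empty-dir/${ep_volume}"
}
```

The pod UID can be read with `kubectl --namespace onap-sdnc get pod <pod-name> -o jsonpath='{.metadata.uid}'`; on the node hosting the pod, `ls "$(emptydir_path <uid> <volume-name>)"` should then list the log files.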


Robot Logs

Yogini and I needed the logs in OOM Kubernetes. They were already there, behind robot:robot auth, for example:

http://<your_dns_name>:30209/logs/demo/InitDistribution/report.html

after running

oom/kubernetes/robot$ ./demo-k8s.sh distribute

Find your path to the logs by using, for example:

root@ip-172-31-57-55:/dockerdata-nfs/onap/robot# kubectl --namespace onap-robot exec -it robot-4251390084-lmdbb bash

root@robot-4251390084-lmdbb:/# ls /var/opt/OpenECOMP_ETE/html/logs/demo/InitD

InitDemo/ InitDistribution/

The path is http://<your_dns_name>:30209/logs/demo/InitDemo/log.html#s1-s1-s1-s1-t1

SSH into ONAP containers

Normally I would get a shell via https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

Get the pod name via

kubectl get pods --all-namespaces -o wide

then bash into the pod via

kubectl -n onap-mso exec -it mso-1648770403-8hwcf /bin/bash

Push Files to Pods

Trying to get an authorization file into the robot pod

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/home/ubuntu

It is not yet verified whether the above works.
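
One way to settle whether a copy worked is to list the file inside the pod right after copying it. This is a sketch, not a tested procedure for the robot pod: the function name `push_file` and the `KUBECTL` override variable are hypothetical, and `kubectl cp` additionally needs `tar` to be present in the target container.

```shell
# Copy a file into a pod, then list it inside the pod to confirm it arrived.
# KUBECTL may be set to override the kubectl binary (for dry runs, for example).
push_file() {
  pf_src="$1"
  pf_namespace="$2"
  pf_pod="$3"
  pf_dest="$4"
  "${KUBECTL:-kubectl}" cp "$pf_src" "$pf_namespace/$pf_pod:$pf_dest" &&
  "${KUBECTL:-kubectl}" --namespace "$pf_namespace" exec "$pf_pod" -- ls -l "$pf_dest"
}
```

Running `KUBECTL=echo push_file authorization onap-robot robot-44708506-nhm0n /home/ubuntu/authorization` prints the two commands without touching a cluster; dropping the `KUBECTL=echo` prefix executes them.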

Redeploying Code war/jar in a docker container

see building the docker image - use your own local repo or a repo on dockerhub - modify the values.yaml and delete/create your pod to switch images

example in LOG-136 - Logging RI: Code/build/tag microservice docker image IN PROGRESS

Turn on Debugging

via URL

http://cd.onap.info:30223/mso/logging/debug

via logback.xml

Attaching a debugger to a docker container

Running ONAP Portal UI Operations

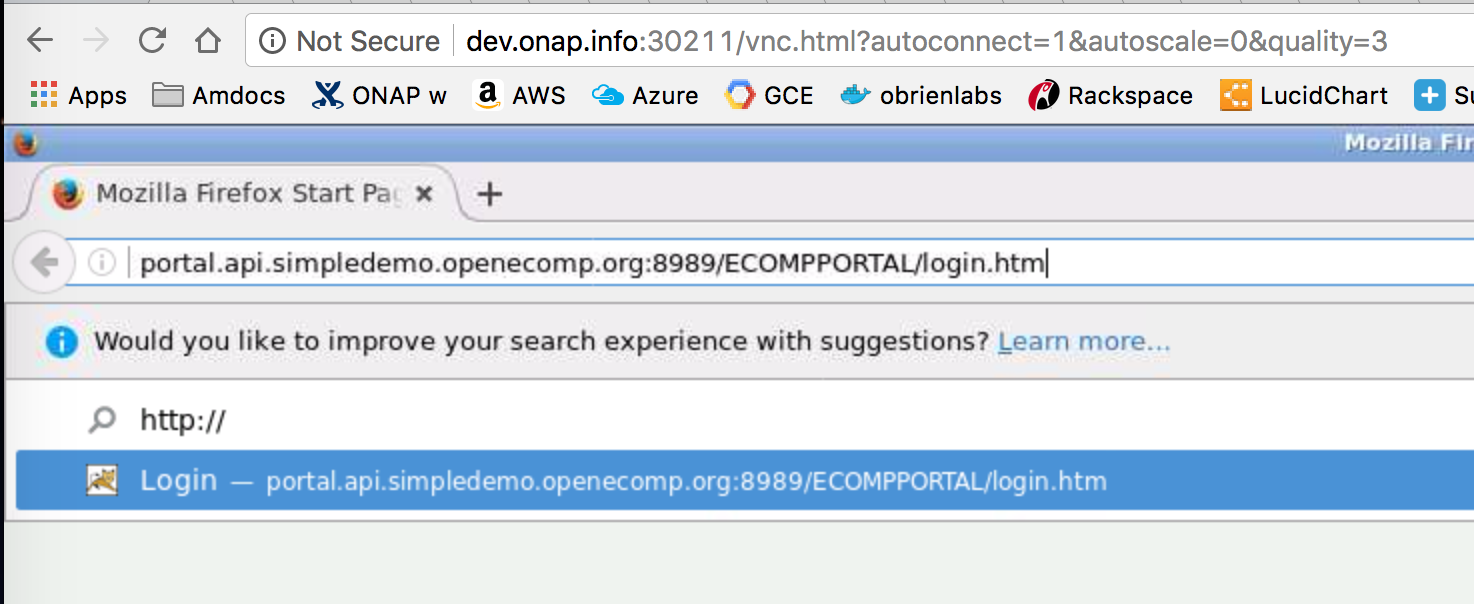

Running ONAP using the vnc-portal

see (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

or run the vnc-portal container to access ONAP using the traditional port mappings. See the following recorded video by Mike Elliot of the OOM team for an audio-visual reference

Check for the vnc-portal port via (it is always 30211)


launch the vnc-portal in a browser

password is "password"

Open firefox inside the VNC vm - launch portal normally

http://portal.api.simpledemo.onap.org:8989/ONAPPORTAL/login.htm

For login details to get into ONAPportal, see Tutorial: Accessing the ONAP Portal

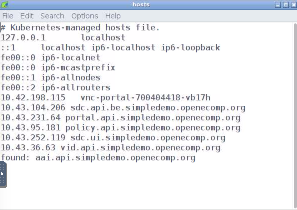

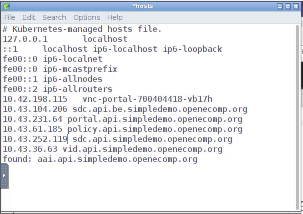

(20170906) Before running SDC - fix the /etc/hosts (thanks Yogini for catching this) - edit your /etc/hosts as follows

(change sdc.ui to sdc.api)

OOM-282 - vnc-portal requires /etc/hosts url fix for SDC sdc.ui should be sdc.api CLOSED

before | after | notes |

|---|---|---|

login and run SDC

Continue with the normal ONAP demo flow at (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

Running Multiple ONAP namespaces

Run multiple environments on the same machine - TODO

Troubleshooting

Rancher fails to restart on server reboot

Having issues after a reboot of a colocated server/agent

Installing Clean Ubuntu

apt-get install ssh

apt-get install ubuntu-desktop

DNS resolution

ignore - not relevant

Search Line limits were exceeded, some dns names have been omitted, the applied search line is: default.svc.cluster.local svc.cluster.local cluster.local kubelet.kubernetes.rancher.internal kubernetes.rancher.internal rancher.internal

https://github.com/rancher/rancher/issues/9303

Config Pod fails to start with Error

Make sure your Openstack parameters are set if you get the following starting up the config pod


45 Comments

vishwanath jayaraman

What is the link to the page for the "physical" deployment target that has the steps to be followed after Rancher has been installed?

Michael O'Brien

Works in progress - (Openstack, AWS, Azure, GCD, Bare-metal, VMware)

song wenjian

When I try to deploy the Amsterdam version, Kubernetes reports an error. The log of the kubedns container is as follows:

2018/2/24 2:24:23 I0224 06:24:23.678328 1 dns.go:174] Waiting for services and endpoints to be initialized from apiserver...

2018/2/24 2:24:24 I0224 06:24:24.178350 1 dns.go:174] Waiting for services and endpoints to be initialized from apiserver...

2018/2/24 2:24:24 E0224 06:24:24.651506 1 reflector.go:199] k8s.io/dns/vendor/k8s.io/client-go/tools/cache/reflector.go:94: Failed to list *v1.Endpoints: Get https://10.43.0.1:443/api/v1/endpoints?resourceVersion=0: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "cattle")

2018/2/24 2:24:24 E0224 06:24:24.661211 1 reflector.go:199] k8s.io/dns/vendor/k8s.io/client-go/tools/cache/reflector.go:94: Failed to list *v1.Service: Get https://10.43.0.1:443/api/v1/services?resourceVersion=0: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "cattle")

2018/2/24 2:24:24 I0224 06:24:24.678325 1 dns.go:174] Waiting for services and endpoints to be initialized from apiserver...

2018/2/24 2:24:25 F0224 06:24:25.178249 1 dns.go:168] Timeout waiting for initialization

kubectl get pods --all-namespaces -a

root@onap:/home/swj# kubectl get pods --all-namespaces -a

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system heapster-76b8cd7b5-cg9m8 1/1 Running 1 25d

kube-system kube-dns-5d7b4487c9-fdp66 2/3 CrashLoopBackOff 96 25d

kube-system kubernetes-dashboard-f9577fffd-9v8cd 0/1 CrashLoopBackOff 44 25d

kube-system monitoring-grafana-997796fcf-d9fm9 1/1 Running 1 25d

kube-system monitoring-influxdb-56fdcd96b-9n9gx 1/1 Running 1 25d

kube-system tiller-deploy-74f6f6c747-c4lcf 1/1 Running 1 24d

the docker version :

root@onap:/home/swj# docker version

Client:

Version: 1.12.6

API version: 1.24

Go version: go1.6.4

Git commit: 78d1802

Built: Tue Jan 10 20:38:45 2017

OS/Arch: linux/amd64

Server:

Version: 1.12.6

API version: 1.24

Go version: go1.6.4

Git commit: 78d1802

Built: Tue Jan 10 20:38:45 2017

OS/Arch: linux/amd64

the Rancher server version:

f2886d8b8da6 rancher/server:v1.6.10 "/usr/bin/entry /usr/" 3 hours ago Up 3 hours 3306/tcp, 0.0.0.0:8880->8080/tcp rancher_server

The command used to create Kubernetes is:

sudo docker run --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.6 http://10.68.168.231:8880/v1/scripts/284CEA53E606E7010984:1514678400000:sNDC0wowiRnVXxa63gNyHzoJYSM

So I want to know why these errors occur, or whether I made a mistake in my setup.

Thank you !!

Michael O'Brien

I've seen this question before - usually due to https blocking and/or issues behind a proxy. Try your installation on a system that is wide open and public to verify.

song wenjian

Thanks for the reply!

Does this look like a Kubernetes bug in v1.7.4?

In Rancher 1.6.10, the log of the kubernetes-controller-manager container shows this error:

2018/2/27 9:11:49 I0227 13:11:49.395253 1 controllermanager.go:107] Version: v1.7.4-rancher1

2018/2/27 9:11:49 I0227 13:11:49.398831 1 leaderelection.go:179] attempting to acquire leader lease...

2018/2/27 9:11:49 I0227 13:11:49.419563 1 leaderelection.go:189] successfully acquired lease kube-system/kube-controller-manager

2018/2/27 9:11:49 I0227 13:11:49.420103 1 event.go:218] Event(v1.ObjectReference{Kind:"Endpoints", Namespace:"kube-system", Name:"kube-controller-manager", UID:"0b834d4b-0dcb-11e8-b027-02755a989a62", APIVersion:"v1", ResourceVersion:"964683", FieldPath:""}): type: 'Normal' reason: 'LeaderElection' d8b745c52a89 became leader

2018/2/27 9:11:49 F0227 13:11:49.572074 1 controllermanager.go:176] error building controller context: failed to get supported resources from server: unable to retrieve the complete list of server APIs: apps/v1beta2: the server could not find the requested resource, autoscaling/v2beta1: the server could not find the requested resource, batch/v1beta1: the server could not find the requested resource, rbac.authorization.k8s.io/v1: the server could not find the requested resource

This error causes the error in the kube-dns container.

However, in Rancher 1.6.14, the log of the kubernetes-controller-manager container is fine:

2018/2/27 9:16:01 I0227 01:16:01.121111 1 controllermanager.go:109] Version: v1.8.5-rancher1

2018/2/27 9:16:01 I0227 01:16:01.126277 1 leaderelection.go:174] attempting to acquire leader lease...

2018/2/27 9:16:16 I0227 01:16:16.487307 1 leaderelection.go:184] successfully acquired lease kube-system/kube-controller-manager

2018/2/27 9:16:16 I0227 01:16:16.487504 1 event.go:218] Event(v1.ObjectReference{Kind:"Endpoints", Namespace:"kube-system", Name:"kube-controller-manager", UID:"4168e76e-04f3-11e8-8a13-02e3652ee5ef", APIVersion:"v1", ResourceVersion:"1957024", FieldPath:""}): type: 'Normal' reason: 'LeaderElection' 2532612f693b became leader

2018/2/27 9:16:16 I0227 01:16:16.589783 1 controller_utils.go:1041] Waiting for caches to sync for tokens controller

2018/2/27 9:16:16 I0227 01:16:16.593998 1 controllermanager.go:491] Started "cronjob"

2018/2/27 9:16:16 I0227 01:16:16.594245 1 cronjob_controller.go:98] Starting CronJob Manager

In your document, the Kubernetes version for Rancher 1.6.10 is v1.7.7, but it now automatically deploys Kubernetes v1.7.4?

Is the bug above https://github.com/kubernetes/kubernetes/issues/56574?

thank you !

Michael O'Brien

fyi, fully automated rancher install for amsterdam or master in the script under review - see instructions at the top of ONAP on Kubernetes.

https://gerrit.onap.org/r/#/c/32019

OOM-715

Suraj Kant Singh

Hi Michael,

I tried using it, and it fails to get the IP address of the ONAP host while registering, and keeps retrying. It uses the localhost IP, but even that is not used in the registration stage, so registration fails.

<snip>

. . .

Registering host for image: rancher/agent:v1.2.6 url: http://127.0.0.1:8880/v2-beta/scripts/BD815AFCE8F503E7EF44:1514678400000:U63d0je79qVfl8ZgPwTJJriw registrationToken: BD815AFCE8F503E7EF44:1514678400000:U63d0je79qVfl8ZgPwTJJriw

INFO: Running Agent Registration Process, CATTLE_URL=http://:8880/v1

INFO: Attempting to connect to: http://:8880/v1

ERROR: http://:8880/v1 is not accessible (Could not resolve host:)

ERROR: http://:8880/v1 is not accessible (Could not resolve host:)

. . .

<snip>

Praveen Ch

Hi Team,

Installed Amsterdam release using

DEMO_ARTIFACTS_VERSION: "1.1.1"

and cd.sh captured from :

https://github.com/obrienlabs/onap-root/blob/20171205/cd.sh

Images Prepulled :

https://github.com/obrienlabs/onap-root/blob/20171205/prepull_docker.sh

I see all pods running, so I tried to deploy the demo vFW:

vFWCL instantiation, testing, and debugging

However, when I try to access SDC on the VNC portal (using cs0008/demo123456!), I get the error message "permission denied".

Please find below error message .

Can you please advise how to resolve this issue.

Michael O'Brien

Hi, the onap-root repo artifacts for rancher install and cd.sh deployment have moved to onap under

https://jira.onap.org/browse/OOM-710

see deployment instructions at

ONAP on Kubernetes#ExampleEndtoEndKubernetesbasedONAPinstallanddeployment

Your sdc error may be intermittent - I will check the reference system

Beka Tsotsoria

Is docker version 17.03-ce strict requirement? According to Rancher docs 1.6.14 can support 17.12.x-ce. Does ONAP care about docker version when installing from rancher?

Alexis de Talhouët

Yes, as identified here: Re: ONAP on Kubernetes on Rancher

Beka Tsotsoria

Are you sure link is correct? I think it points to my comment above

Alexis de Talhouët

Oops, my bad. This should be better now: Pre-requisite. Basically, there is a pre-requisite section on this page that lists the right versions to use, or at least the ones we have tested and can vouch work fine with our deployment system.

Beka Tsotsoria

I'm wondering whether there is any objective reason for using Docker 17.03 and not, for example, 17.12. I'm concerned about the bugs that were fixed after 17.03, specifically this one: up until 17.06, Docker did not correctly release the volumes associated with a container being stopped, which resulted in orphaned volumes. In the case of ONAP, many orphaned volumes can be left behind after recreating components a few times.

Michael O'Brien

Yes, we need to live with bugs in the 17.03-17.12 gap - because 17.03 is the only version verified with Kubernetes support in rancher. The same reason we are still on helm 2.7 and not 2.8 because we have not verified the issues with 2.8. Also why we are on Kubernetes 1.8.10 and not 1.9

There is a specific subtree of all releases that we must follow. We must at times push this to get things like tpl function support in 2.5+ and clustering fixes in 2.8 not in 2.7, and read only config fixes (Mandeep) in k8s 1.8.10 not 1.8.6

pranjal sharma

Hello All,

I was able to create and deploy the vFirewall package (packet generator, sink, and firewall VNF) on an OpenStack cloud.

But I was not able to log in to any of the VNF VMs.

When I debugged this, I saw that I had not replaced the default public key with our local public key in the packet generator curl JSON UI.

Now I am deploying the VNF again (the same vFirewall package) on the OpenStack cloud, and I plan to supply our local public key in both the pg and sink JSON APIs.

I have some questions:

- How can we create a VNF package manually/dynamically using the SDC component (so that we can get into the VNF VM and access its capabilities)?

- I want to implement service function chaining for the deployed vFirewall. Please let me know how to proceed with that.

PS: I installed/deployed ONAP using Rancher on Kubernetes (on an OpenStack cloud platform) without the DCAE component, so I cannot use Closed Loop Automation.

Any thoughts on this will be helpful for us.

Thanks,

Pranjal

Nalini Varshney

I need help please help me out

package versions are:

Client:

Version: 17.03.2-ce

API version: 1.27

Go version: go1.7.5

Git commit: f5ec1e2

Built: Tue Jun 27 03:35:14 2017

OS/Arch: linux/amd64

Server:

Version: 17.03.2-ce

API version: 1.27 (minimum version 1.12)

Go version: go1.7.5

Git commit: f5ec1e2

Built: Tue Jun 27 03:35:14 2017

OS/Arch: linux/amd64

Experimental: false

I ran into some issues when I followed the above steps to install Rancher 1.6:

(7) Failed to connect to 10.12.5.168 port 8880: No route to host

error: Error loading config file "/root/.kube/config": yaml: line 6: did not find expected key

kubectl get pods --all-namespaces

error: Error loading config file "/root/.kube/config": yaml: line 6: did not find expected key

Suraj Kant Singh

Are you using oom_rancher_setup.sh? Did you specify the parameters correctly? Check the script's logs for the failure; they should give you a clue about what went wrong.

I have seen this error, but on the next run, after correcting the error in the script, it worked seamlessly.

Nalini Varshney

Yes, I am using oom_rancher_setup.sh, executed like this:

./oom_rancher_setup.sh -b master -s 10.12.5.168 -e onap

I am not able to ping this IP, 10.12.5.168 (the error is "no route to host").

Please let me know which server has this IP, and which IP I have to use to run this script.

Suraj Kant Singh

You have to use the IP address of the ONAP host.

Nalini Varshney

How can I get the IP address of the ONAP host?

Suraj Kant Singh

Please give the IP address of the node from which you are running this script.

Michael O'Brien

You can also edit your /etc/hosts with a hostname linked to an ip and use this name as the server - I do this for Azure.

If you cannot ping your ip then rancher will not be able to either.

Run ifconfig and pick the non-docker IP there. In public-facing subnets I have also used the 172.x docker IP to work around port 10250 being locked down in public (because of crypto miners), but in a private subnet you can use the real IP.

for example

obrienbiometrics:logging-analytics michaelobrien$ dig beijing.onap.cloud

;; ANSWER SECTION:

beijing.onap.cloud. 299 IN A 13.72.107.69

ubuntu@a-ons-auto-beijing:~$ ifconfig

docker0 Link encap:Ethernet HWaddr 02:42:8b:f4:74:95

inet addr:172.17.0.1 Bcast:0.0.0.0 Mask:255.255.0.0

eth0 Link encap:Ethernet HWaddr 00:0d:3a:1b:5e:03

inet addr:10.0.0.4 Bcast:10.0.0.255 Mask:255.255.255.0

# I could use 172.17.0.1 only for a single collocated host

# but 10.0.0.4 is the correct IP (my public facing subnet)

# In my case I use -s beijing.onap.cloud

# but in all other cases I could use the hostname

ubuntu@a-ons-auto-beijing:~$ sudo cat /etc/hosts

127.0.0.1 a-ons-auto-beijing

Nalini Varshney

Guys i need your help , i faced this problem many times

run healthcheck 3 times to warm caches and frameworks so rest endpoints report properly - see OOM-447

curl with aai cert to cloud-region PUT

curl: (7) Failed to connect to 127.0.0.1 port 30233: Connection refused

get the cloud region back

curl: (7) Failed to connect to 127.0.0.1 port 30233: Connection refused

run healthcheck prep 1

error: unable to upgrade connection: container not found ("robot")

run healthcheck prep 2

error: unable to upgrade connection: container not found ("robot")

run healthcheck for real - wait a further 5 min

error: unable to upgrade connection: container not found ("robot")

run partial vFW

report results

Mon Apr 23 10:44:12 UTC 2018

**** Done ****

please let me know, how can i solve this problem.

Michael O'Brien

this looks like cd.sh code output - see OOM-716 updates

Make sure your vm is setup correctly and has a valid kubernetes cluster

OOM-716

For those specific warnings it looks like aai is not up on 30233 - are all your aai containers up?

/michael

Barnali Sengupta

Hi All,

I am trying to install the ONAP Master branch on Rancher. I downloaded the prepull script https://jira.onap.org/secure/attachment/11261/prepull_docker.sh and tried to prepull the images before running the cd.sh script, but it just pulls a few of them and then stops there. I had to update the script myself to get all the repository and image names from the values.yaml file for each component. Please let me know if there is an updated script somewhere that I can get.

Thanks

Barnali Sengupta

Dominic Lunanuova

Additional pre-req: make

Pavel Paroulek

When running "oom_rancher_setup.sh" you should probably be root or use sudo.

Nalini Varshney

When I try to deploy ONAP using the command "helm install local/onap --name development",

this error occurs: "Error: Get https://10.43.0.1:443/version: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "cattle")"

docker version :

Client:

Version: 17.03.2-ce

API version: 1.27

Go version: go1.7.5

Git commit: f5ec1e2

Built: Tue Jun 27 03:35:14 2017

OS/Arch: linux/amd64

Server:

Version: 17.03.2-ce

API version: 1.27 (minimum version 1.12)

Go version: go1.7.5

Git commit: f5ec1e2

Built: Tue Jun 27 03:35:14 2017

OS/Arch: linux/amd64

Experimental: false

helm version :

Client: &version.Version{SemVer:"v2.6.1", GitCommit:"bbc1f71dc03afc5f00c6ac84b9308f8ecb4f39ac", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.6.1", GitCommit:"bbc1f71dc03afc5f00c6ac84b9308f8ecb4f39ac", GitTreeState:"clean"}

Borislav Glozman

Please use helm version 2.8.2 on both client and tiller.

Trinath Somanchi

I'm using Google Cloud to build ONAP with 16 vCPUS and 60 GB Memory with guidance from 1.

I'm running the script 2.

After the script runs, with 58 more apps still to be installed, the script hangs with no error.

please help me resolve this issue.

1 Cloud Native Deployment#GoogleGCE

2 https://jira.onap.org/secure/attachment/11802/oom_entrypoint.sh

Here is some error log: http://paste.openstack.org/show/722338/

Nalini Varshney

I tried to install ONAP on a bare-metal machine using these steps:

use the latest on https://jira.onap.org/browse/OOM-710

wget https://jira.onap.org/secure/attachment/LATEST_ID/oom_entrypoint.sh

chmod 777 oom_entrypoint.sh

./oom_entrypoint.sh -b master -s x.x.x.x -e onap

But I want to install only the design-time services, such as SDC and CLAMP, so in the values.yaml file I set the enabled flag to false for all other services.

Even so, all containers are created again. What can I do?

Please help me and give me your suggestions (I only want to install the design-time services).

Jeff Collins

Hi Guys,

I'm running through an Amsterdam install and I noticed the aaf/authz-service image is missing from the repo. Is this no longer needed or did something happen to the build? Everything else came up.

28s 28s 1 default-scheduler Normal Scheduled Successfully assigned aaf-1993711932-861nr to localhost

28s 28s 1 kubelet, localhost Normal SuccessfulMountVolume MountVolume.SetUp succeeded for volume "aaf-data"

28s 28s 1 kubelet, localhost Normal SuccessfulMountVolume MountVolume.SetUp succeeded for volume "default-token-zkcwn"

26s 26s 1 kubelet, localhost spec.initContainers{aaf-readiness} Normal Pulling pulling image "oomk8s/readiness-check:1.0.0"

25s 25s 1 kubelet, localhost spec.initContainers{aaf-readiness} Normal Pulled Successfully pulled image "oomk8s/readiness-check:1.0.0"

25s 25s 1 kubelet, localhost spec.initContainers{aaf-readiness} Normal Created Created container

25s 25s 1 kubelet, localhost spec.initContainers{aaf-readiness} Normal Started Started container

21s 21s 1 kubelet, localhost spec.containers{aaf} Normal BackOff Back-off pulling image "nexus3.onap.org:10001/onap/aaf/authz-service:latest"

27s 5s 7 kubelet, localhost Warning DNSSearchForming Search Line limits were exceeded, some dns names have been omitted, the applied search line is: onap-aaf.svc.cluster.local svc.cluster.local cluster.local kubelet.kubernetes.rancher.internal kubernetes.rancher.internal rancher.internal

24s 5s 2 kubelet, localhost spec.containers{aaf} Normal Pulling pulling image "nexus3.onap.org:10001/onap/aaf/authz-service:latest"

22s 3s 2 kubelet, localhost spec.containers{aaf} Warning Failed Failed to pull image "nexus3.onap.org:10001/onap/aaf/authz-service:latest": rpc error: code = 2 desc = error pulling image configuration: unknown blob

22s 3s 3 kubelet, localhost Warning FailedSync Error syncing pod

https://nexus3.onap.org/repository/docker.snapshot/v2/onap/aaf/authz-service/manifests/latest

{"errors":[{"code":"BLOB_UNKNOWN","message":"blob unknown to registry","detail":"sha256:3537adf54de3c9c2d06a4e15396314884600864a3d92b34bc77d2f30739dd730"}]}

mudassar rana

Hi Guys ,

I am installing the Amsterdam release. A few of the pods didn't come up.

root@infyonap:/home/onap# kubectl get pods --all-namespaces -a | grep -v "Running"

NAMESPACE NAME READY STATUS RESTARTS AGE

onap config 0/1 Completed 0 10h

onap-aaf aaf-1993711932-gn0m9 0/1 ErrImagePull 0 8h

onap-appc appc-1828810488-zqrlt 1/2 ErrImagePull 0 8h

onap-appc appc-dgbuilder-2298093128-58kkl 0/1 Init:0/1 23 8h

onap-consul consul-agent-3312409084-dtz8p 0/1 Error 33 8h

onap-sdnc dmaap-listener-3967791773-m80d3 0/1 Init:0/1 23 8h

onap-sdnc sdnc-1507781456-zbnt1 1/2 ErrImagePull 0 8h

onap-sdnc sdnc-dgbuilder-4011443503-1sszk 0/1 Init:0/1 23 8h

onap-sdnc sdnc-portal-516977107-w888x 0/1 Init:0/1 23 8h

onap-sdnc ueb-listener-1749146577-0dd3s 0/1 Init:0/1 23 8h

aaf → unknown blob while pulling the image

sdnc → gets stuck while downloading images and finally the connection times out

appc → gets stuck while downloading images and finally the HTTP request is cancelled

consul-agent → the image is pulled, but the container fails with "Back-off restarting failed container"

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

9h 5m 36 kubelet, infyonap spec.containers{consul-server} Normal Pulled Successfully pulled image "docker.io/consul:latest"

9h 5m 36 kubelet, infyonap spec.containers{consul-server} Normal Created Created container

9h 5m 36 kubelet, infyonap spec.containers{consul-server} Normal Started Started container

9h 5m 11 kubelet, infyonap Warning FailedSync Error syncing pod

9h 5m 11 kubelet, infyonap spec.containers{consul-server} Warning BackOff Back-off restarting failed container

9h 5m 37 kubelet, infyonap spec.containers{consul-server} Normal Pulling pulling image "docker.io/consul:latest"

9h 5m 88 kubelet, infyonap Warning DNSSearchForming Search Line limits were exceeded, some dns names have been omitted, the applied search line is: onap-consul.svc.cluster.local svc.cluster.local cluster.local kubelet.kubernetes.rancher.internal kubernetes.rancher.internal rancher.internal

Please help me out with this issue

Jeff Collins

Looks like you are hitting the same errors I am. See the post above... I'm not sure why the images are missing and I'm also seeing the same error for consul-agent.

Michael O'Brien

Guys, I would look into the Amsterdam branch - we last left it with several known failed containers - but beijing and master (casablanca) are our focus now. Do you need to run amsterdam? It is a hybrid deploy with DCAE still running in heat via heatbridge. In beijing everything is containerized - I would move to that branch or master, where the deployment is a lot more stable.

/michael

Jeff Collins

Thanks Michael - I'll drop working with Amsterdam and switch to the master branch. I had only started there because it looked like it had better documentation on how to customize and bring it up. Thanks for your input!

Sunny Gupta

Hi,

I installed the Beijing release and around 140 containers are in Running state except the ones below:

Also the healthcheck is failing for SO and Policy. On debugging it further I found the error below from SO:

Any idea how it can be debugged further?

-Sunny

Sunny Gupta

On debugging further I found out that the connection from the so container to mariadb is failing with the error below:

org.jboss.jca.core.connectionmanager.pool.mcp.SemaphoreConcurrentLinkedDequeManagedConnectionPool.fillTo(SemaphoreConcurrentLinkedDequeManagedConnectionPool.java:1136)

at org.jboss.jca.core.connectionmanager.pool.mcp.PoolFiller.run(PoolFiller.java:97)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.sql.SQLException: ost '10.42.119.3' is not allowed to connect to this MariaDB server^@^@^@^@

at org.mariadb.jdbc.internal.util.ExceptionMapper.get(ExceptionMapper.java:138)

at org.mariadb.jdbc.internal.util.ExceptionMapper.throwException(ExceptionMapper.java:71)

at org.mariadb.jdbc.Driver.connect(Driver.java:109)

at org.jboss.jca.adapters.jdbc.local.LocalManagedConnectionFactory.createLocalManagedConnection(LocalManagedConnectionFactory.java:321)

... 6 more

Caused by: org.mariadb.jdbc.internal.util.dao.QueryException: ost '10.42.119.3' is not allowed to connect to this MariaDB server^@^@^@^@

at org.mariadb.jdbc.internal.packet.read.ReadInitialConnectPacket.<init>(ReadInitialConnectPacket.java:89)

at org.mariadb.jdbc.internal.protocol.AbstractConnectProtocol.handleConnectionPhases(AbstractConnectProtocol.java:437)

at org.mariadb.jdbc.internal.protocol.AbstractConnectProtocol.connect(AbstractConnectProtocol.java:374)

at org.mariadb.jdbc.internal.protocol.AbstractConnectProtocol.connectWithoutProxy(AbstractConnectProtocol.java:763)

at org.mariadb.jdbc.internal.util.Utils.retrieveProxy(Utils.java:469)

at org.mariadb.jdbc.Driver.connect(Driver.java:104)

... 7 more

Kindly suggest a fix, and let me know if anybody else is facing a similar issue.

-Sunny

Nalini Varshney

I tried to install ONAP using the entrypoint.sh script. My system configuration is RAM: 128GB, CPU: 32, Ubuntu 16.04.

But I hit the errors below when the script was trying to create pods:

kube-system heapster-4285517626-7qr37 0/1 Pending 0 30m <none> <none>

kube-system monitoring-grafana-2360823841-f6zzq 0/1 Pending 0 45m <none> <none>

kube-system monitoring-influxdb-2323019309-46vtt 0/1 Pending 0 46m <none> <none>

kube-system tiller-deploy-737598192-47276 0/1 Pending 0 46m <none> <none>

onap-aaf aaf-1993711932-n27wm 0/1 Init:0/1 0 51m 10.42.47.201 ubuntu-xenial

onap-aaf aaf-cs-1310404376-6767f 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-aai aai-resources-2398553481-sm56v 0/2 PodInitializing 0 51m 10.42.139.45 ubuntu-xenial

onap-aai aai-service-749944520-bpdzt 0/1 Init:Error 0 51m 10.42.228.188 ubuntu-xenial

onap-aai aai-traversal-2677319478-xgrlj 0/2 Init:Error 0 51m 10.42.190.136 ubuntu-xenial

onap-aai model-loader-service-911950978-n181r 0/2 ContainerCreating 0 51m <none> ubuntu-xenial

onap-aai search-data-service-2471976899-4dc9g 0/2 ContainerCreating 0 51m <none> ubuntu-xenial

onap-aai sparky-be-1779663793-qfvrg 0/2 ContainerCreating 0 51m <none> ubuntu-xenial

onap-appc appc-1828810488-7v380 0/2 PodInitializing 0 51m 10.42.213.115 ubuntu-xenial

onap-appc appc-dbhost-2793739621-g7xcz 0/1 Pending 0 29m <none> <none>

onap-appc appc-dgbuilder-2298093128-6d1vs 0/1 Pending 0 29m <none> <none>

onap-clamp clamp-2211988013-pb75q 0/1 Init:Error 0 51m 10.42.140.87 ubuntu-xenial

onap-clamp clamp-mariadb-1812977665-sc73x 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-cli cli-2960589940-6d3bg 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-consul consul-agent-3312409084-q7rw3 0/1 CrashLoopBackOff 1 52m 10.42.203.7 ubuntu-xenial

onap-consul consul-server-1173049560-80qrz 0/1 CrashLoopBackOff 1 52m 10.42.4.234 ubuntu-xenial

onap-consul consul-server-1173049560-jwt89 0/1 CrashLoopBackOff 1 52m 10.42.193.70 ubuntu-xenial

onap-consul consul-server-1173049560-xd33b 0/1 Error 1 52m 10.42.233.250 ubuntu-xenial

onap-dcaegen2 nginx-1230103904-g93c1 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-esr esr-esrgui-1816310556-g8xz3 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-esr esr-esrserver-1044617554-4256b 0/1 ErrImagePull 0 51m <none> ubuntu-xenial

onap-kube2msb kube2msb-registrator-4293827076-dpfm3 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-log elasticsearch-1942187295-r10vg 0/1 PodInitializing 0 51m 10.42.226.128 ubuntu-xenial

onap-log kibana-3372627750-rjs6c 0/1 Init:0/1 0 51m 10.42.25.115 ubuntu-xenial

onap-log logstash-1708188010-b3qt4 0/1 Init:Error 0 51m 10.42.163.224 ubuntu-xenial

onap-message-router dmaap-3126594942-crv5t 0/1 Pending 0 38m <none> <none>

onap-message-router global-kafka-3848542622-1kc2q 0/1 PodInitializing 0 52m 10.42.5.242 ubuntu-xenial

onap-message-router zookeeper-624700062-c4gk5 0/1 Pending 0 42m <none> <none>

onap-msb msb-consul-3334785600-kt10j 0/1 Pending 0 33m <none> <none>

onap-msb msb-discovery-196547432-83r9w 0/1 Pending 0 43m <none> <none>

onap-msb msb-eag-1649257109-l8nxx 0/1 Pending 0 43m <none> <none>

onap-msb msb-iag-1033096170-0ddb7 0/1 Pending 0 43m <none> <none>

onap-mso mariadb-829081257-37fcx 0/1 Error 0 52m 10.42.40.216 ubuntu-xenial

onap-mso mso-681186204-715gr 0/2 Pending 0 40m <none> <none>

onap-multicloud framework-4234260520-rkjbk 0/1 ImagePullBackOff 0 51m 10.42.33.87 ubuntu-xenial

onap-multicloud multicloud-ocata-629200416-nm145 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-multicloud multicloud-vio-1286525177-2d78l 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-multicloud multicloud-windriver-458734915-s3jm6 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-policy brmsgw-2284221413-q7q9v 0/1 Pending 0 29m <none> <none>

onap-policy drools-534015681-srhm3 0/2 Pending 0 34m <none> <none>

onap-policy mariadb-559003789-x2xhs 0/1 Pending 0 30m <none> <none>

onap-policy nexus-687566637-1mzfg 0/1 Pending 0 30m <none> <none>

onap-policy pap-4181215123-qb41x 0/2 Init:1/2 0 52m 10.42.91.233 ubuntu-xenial

onap-policy pdp-2622241204-3sbw6 0/2 Pending 0 33m <none> <none>

onap-portal portalapps-1783099045-l602w 0/2 Init:1/2 0 52m 10.42.107.114 ubuntu-xenial

onap-portal portaldb-1451233177-gqpn5 0/1 Pending 0 34m <none> <none>

onap-portal portalwidgets-2060058548-ds9lz 0/1 PodInitializing 0 52m 10.42.210.167 ubuntu-xenial

onap-portal vnc-portal-1252894321-c7ht9 0/1 Pending 0 34m <none> <none>

onap-robot robot-2176399604-h32k9 0/1 Pending 0 40m <none> <none>

onap-sdc sdc-be-2336519847-dkrxk 0/2 Init:Error 0 51m 10.42.64.109 ubuntu-xenial

onap-sdc sdc-cs-1151560586-l346c 0/1 Init:Error 0 51m 10.42.166.234 ubuntu-xenial

onap-sdc sdc-es-3319302712-fqnn3 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-sdc sdc-fe-2862673798-sps3t 0/2 Init:0/1 0 51m 10.42.139.66 ubuntu-xenial

onap-sdc sdc-kb-1258596734-k17bm 0/1 Init:Error 0 51m 10.42.40.111 ubuntu-xenial

onap-sdnc dmaap-listener-3967791773-84wxc 0/1 Init:Error 0 52m 10.42.217.152 ubuntu-xenial

onap-sdnc sdnc-1507781456-ztgg0 0/2 PodInitializing 0 52m 10.42.163.203 ubuntu-xenial

onap-sdnc sdnc-dbhost-3029711096-25h9k 0/1 Pending 0 41m <none> <none>

onap-sdnc sdnc-dgbuilder-4011443503-c3vbl 0/1 Init:Error 0 52m 10.42.245.219 ubuntu-xenial

onap-sdnc sdnc-portal-516977107-fqzrs 0/1 Init:Error 0 52m 10.42.25.137 ubuntu-xenial

onap-sdnc ueb-listener-1749146577-q8p3m 0/1 Init:Error 0 52m 10.42.181.247 ubuntu-xenial

onap-uui uui-4267149477-xd9nt 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-uui uui-server-3441797946-l4cxx 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-catalog-840807183-7mr5s 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-emsdriver-2936953408-4mcjx 0/1 ImagePullBackOff 0 51m 10.42.62.236 ubuntu-xenial

onap-vfc vfc-gvnfmdriver-2866216209-j3n6f 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-hwvnfmdriver-2588350680-73skn 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-jujudriver-406795794-66211 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-nokiavnfmdriver-1760240499-32gtc 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-nslcm-3756650867-wnzmv 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-resmgr-1409642779-n4wrx 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-vnflcm-3340104471-mxrrm 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-vnfmgr-2823857741-bs45x 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-vnfres-1792029715-qdvq6 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-workflow-3450325534-swk4b 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-workflowengineactiviti-4110617986-xb1xm 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-ztesdncdriver-1452986549-2h6jj 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vfc vfc-ztevmanagerdriver-2080553526-bc914 0/1 ImagePullBackOff 0 51m <none> ubuntu-xenial

onap-vid vid-mariadb-3318685446-ctfcx 0/1 Pending 0 40m <none> <none>

onap-vid vid-server-421936131-w6p7k 0/2 PodInitializing 0 52m 10.42.176.246 ubuntu-xenial

Please help me figure out the issue and how I can resolve it.

Borislav Glozman

Please try to deploy according to instructions here:

http://onap.readthedocs.io/en/latest/submodules/oom.git/docs/oom_setup_kubernetes_rancher.html

http://onap.readthedocs.io/en/latest/submodules/oom.git/docs/oom_user_guide.html

Nalini Varshney

Thank you Borislav Glozman for responding, but I am trying to install the ONAP Amsterdam version, and the links above are for Beijing.

I followed the link below for that:

Cloud Native Deployment#UndercloudInstall-Rancher/Kubernetes/Helm/Docker

There I tried the Full Entrypoint Install section.

I need to install an all-in-one setup. I have a machine with RAM: 128GB, CPU: 72, Disk: 3TB.

Michael O'Brien

All your pods are failing - this points to a config issue in your VM, network or firewall.

Do a kubectl describe pod on a couple of them to see some details.

Check that you can pull images from nexus3 - try a manual docker pull outside kubernetes to verify.
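A minimal sketch of that check, assuming a Bash shell on the Kubernetes host. The helper name `failed_images` is hypothetical (it is not part of any ONAP script); it only extracts the image names from "Failed to pull image" events so that each one can be retried with a manual docker pull.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl describe pod` output on stdin and print
# each image named in a "Failed to pull image" event, once per image.
failed_images() {
  sed -n 's/.*Failed to pull image "\([^"]*\)".*/\1/p' | sort -u
}

# Intended use on a live host (not run here; pod and namespace are placeholders):
#   kubectl describe pod <pod-name> -n <namespace> | failed_images |
#     while read -r image; do docker pull "$image"; done
```

If the manual docker pull fails the same way, the problem is between the host and the registry rather than in kubernetes itself.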

Also - do you need amsterdam? That hybrid deploy is not really supported anymore. You can switch the readthedocs to amsterdam on the bottom right, but try master/beijing - it has support.

Looking at your pods - your issue is that your kubernetes setup is not registered properly - your system is down - these are all pending.

This may be the IP you are using to register your host. On some network setups for certain openstack environments a non-routable IP is picked by rancher - there is an override when using the GUI to put in your client IP.

There is a patch up to be able to specify this in

https://gerrit.onap.org/r/#/c/54677/

kube-system heapster-4285517626-7qr37 0/1 Pending 0 30m <none> <none>

kube-system monitoring-grafana-2360823841-f6zzq 0/1 Pending 0 45m <none> <none>

kube-system monitoring-influxdb-2323019309-46vtt 0/1 Pending 0 46m <none> <none>

kube-system tiller-deploy-737598192-47276 0/1 Pending 0 46m <none> <none>
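The split between Pending pods and image-pull failures is easier to see with a per-status count. A minimal sketch, assuming the default column layout of `kubectl get pods --all-namespaces` (NAMESPACE, NAME, READY, STATUS, ...); the function name `status_summary` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on stdin
# and print "<count> <status>" for each status, highest count first.
# Assumes STATUS is the 4th whitespace-separated column; the header row is skipped.
status_summary() {
  awk '$1 != "NAMESPACE" && NF >= 4 { count[$4]++ }
       END { for (s in count) print count[s], s }' | sort -k1,1nr -k2,2
}

# Intended use on a live host (not run here):
#   kubectl get pods --all-namespaces -o wide | status_summary
```

A large Pending count with no node assigned suggests a scheduling or host registration problem, while ImagePullBackOff and ErrImagePull point at the registry or network.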

Run oom_rancher_setup.sh separately - the entrypoint is more for running in arm/heat templates

https://git.onap.org/logging-analytics/tree/deploy/rancher/oom_rancher_setup.sh

then run cd.sh

Borislav Glozman

Michael O'Brien, maybe we should retire this page and point it to readthedocs instead?

Michael O'Brien

deprecated - moved

see Cloud Native Deployment for the latest content - and read the docs