...

Perform the operations above on all control nodes and all worker nodes so that kubectl and helm commands can be run from any of them.

Create the "onap" namespace and set it as the default namespace in Kubernetes.

| Code Block |

|---|

ubuntu@onap-control-01:~$ kubectl create namespace onap

ubuntu@onap-control-01:~$ kubectl config set-context --current --namespace=onap |

Verify the kubernetes cluster

...

Perform this on onap-control-1 VM only during the first setup.

Perform this on the other onap-control nodes:

Setting up the NFS share for multinode kubernetes cluster:

Deploying applications to a Kubernetes cluster requires the Kubernetes nodes to share a common, distributed filesystem. In this tutorial, we will set up an NFS Master and configure all worker nodes of the Kubernetes cluster to play the role of NFS slaves.

It is recommended that a separate VM, outside of the Kubernetes cluster, be used. This ensures that the NFS Master does not compete for resources with the Kubernetes control plane or worker nodes.

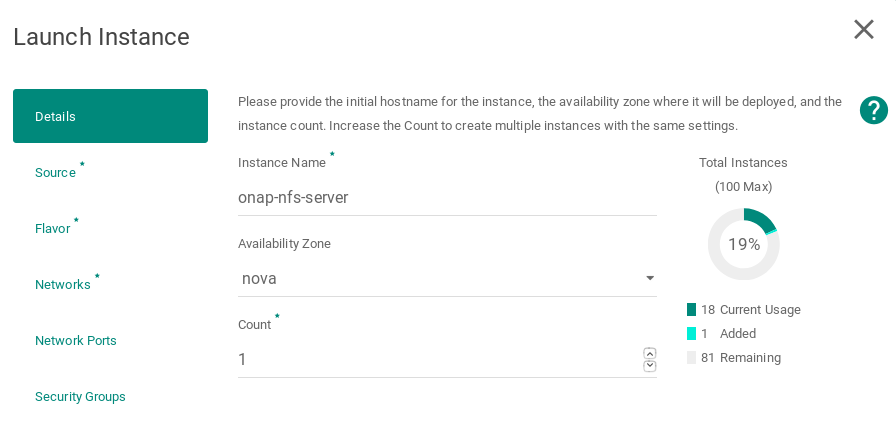

Launch new NFS Server VM instance

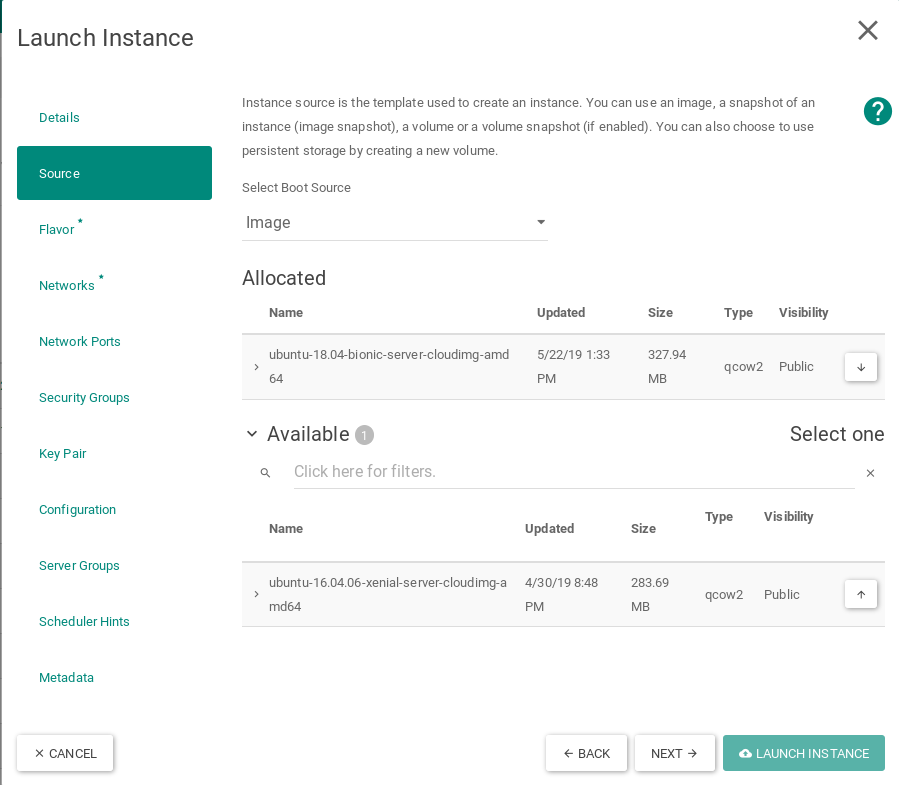

Select Ubuntu 18.04 as base image

Select Flavor

Networking

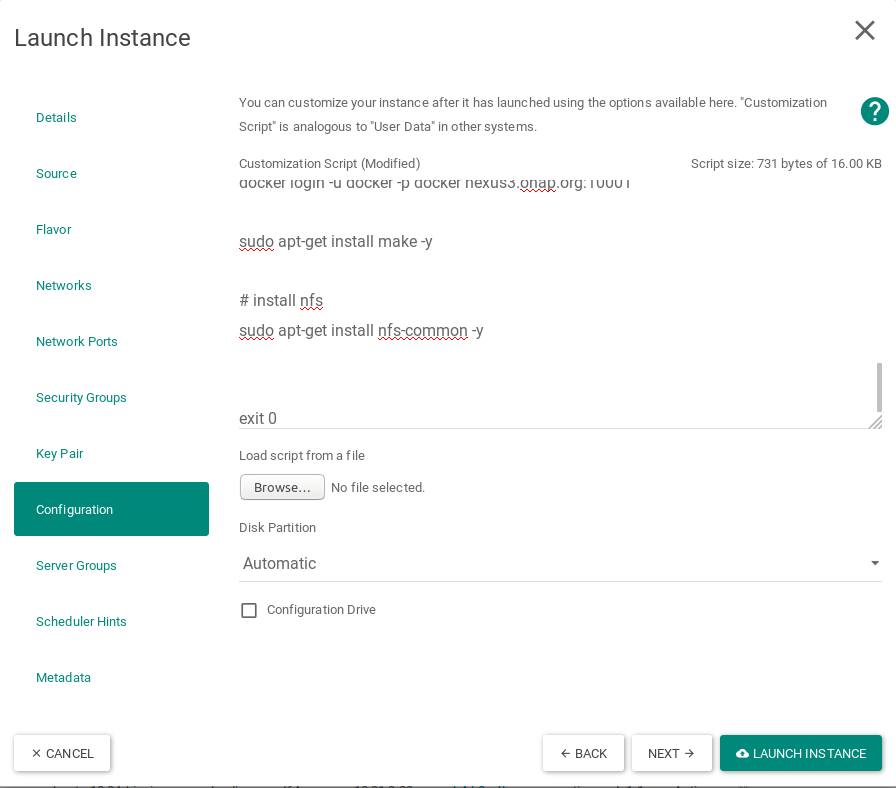

Apply customization script for NFS Server VM

Script to be added:

| Code Block |

|---|

#!/bin/bash

DOCKER_VERSION=18.09.5

export DEBIAN_FRONTEND=noninteractive

apt-get update

curl https://releases.rancher.com/install-docker/$DOCKER_VERSION.sh | sh

cat > /etc/docker/daemon.json << EOF

{

"insecure-registries" : [ "nexus3.onap.org:10001","10.20.6.10:30000" ],

"log-driver": "json-file",

"log-opts": {

"max-size": "1m",

"max-file": "9"

},

"mtu": 1450,

"ipv6": true,

"fixed-cidr-v6": "2001:db8:1::/64",

"registry-mirrors": ["https://nexus3.onap.org:10001"]

}

EOF

sudo usermod -aG docker ubuntu

systemctl daemon-reload

systemctl restart docker

apt-mark hold docker-ce

IP_ADDR=`ip address |grep ens|grep inet|awk '{print $2}'| awk -F / '{print $1}'`

HOSTNAME=`hostname`

# Append through tee so that the write to /etc/hosts itself runs with root privileges

echo "$IP_ADDR $HOSTNAME" | sudo tee -a /etc/hosts

docker login -u docker -p docker nexus3.onap.org:10001

sudo apt-get install make -y

# install nfs

sudo apt-get install nfs-common -y

sudo apt update

exit 0

|

This customization script will:

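- Install Docker and hold the Docker version at 18.09.5

- Insert the hostname and IP address of the onap-nfs-server into the hosts file

- Install the NFS client utilities (nfs-common); the NFS server package itself (nfs-kernel-server) is installed later by master_nfs_node.sh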
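
To illustrate the hosts-file step, here is a minimal sketch of what the script's filter chain extracts from `ip address`. The helper name `extract_ens_ipv4` and the sample output are assumptions made for illustration; the sample is not captured from a real VM, and it assumes the primary interface name starts with `ens`, as the script itself does.

```shell
#!/bin/sh
# Hypothetical helper: the same filter chain as the customization script,
# but reading from stdin so it can be tried without a real interface.
extract_ens_ipv4 () {
  grep ens | grep inet | awk '{print $2}' | awk -F / '{print $1}'
}

# Illustrative sample of `ip address` output (assumed, not from a real VM).
sample_output='1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    inet 127.0.0.1/8 scope host lo
2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc fq_codel state UP
    inet 10.31.3.11/24 brd 10.31.3.255 scope global dynamic ens3
    inet6 fe80::f816:3eff:fe00:1/64 scope link'

# Only the IPv4 line that names an ens* interface survives both greps.
printf '%s\n' "$sample_output" | extract_ens_ipv4
```

If a VM has more than one `ens*` interface with an IPv4 address, the chain returns several addresses, so it may be worth checking the resulting /etc/hosts entry after the first boot.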

Resulting example

Configure NFS Share on Master node

Log in to onap-nfs-server and run the commands below.

Create a master_nfs_node.sh file as below:

| Code Block | ||

|---|---|---|

| ||

#!/bin/bash

usage () {

echo "Usage:"

echo " ./$(basename $0) node1_ip node2_ip ... nodeN_ip"

exit 1

}

if [ "$#" -lt 1 ]; then

echo "Missing NFS slave nodes"

usage

fi

#Install NFS kernel

sudo apt-get update

sudo apt-get install -y nfs-kernel-server

#Create /dockerdata-nfs and set permissions

sudo mkdir -p /dockerdata-nfs

sudo chmod 777 -R /dockerdata-nfs

sudo chown nobody:nogroup /dockerdata-nfs/

#Update the /etc/exports

NFS_EXP=""

for i in "$@"; do

NFS_EXP+="$i(rw,sync,no_root_squash,no_subtree_check) "

done

echo "/dockerdata-nfs $NFS_EXP" | sudo tee -a /etc/exports

#Restart the NFS service

sudo exportfs -a

sudo systemctl restart nfs-kernel-server

|
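
To make the effect of the export loop concrete, the following sketch builds the same kind of /etc/exports line without touching the system. The function name `build_exports_line` is hypothetical and not part of the original script; apart from a trailing space, its output should match what master_nfs_node.sh appends.

```shell
#!/bin/sh
# Hypothetical helper: print the exports line for a share and a list of client IPs.
# The body runs in a subshell, so its variables do not leak into the caller.
build_exports_line () (
  share="$1"
  shift
  line="$share"
  for ip in "$@"; do
    # Same export options as master_nfs_node.sh
    line="$line ${ip}(rw,sync,no_root_squash,no_subtree_check)"
  done
  printf '%s\n' "$line"
)

# Preview the line for two worker nodes from the WinLab example.
build_exports_line /dockerdata-nfs 10.31.3.39 10.31.3.24
```

Previewing the line this way can help catch a mistyped IP address before it is written to /etc/exports.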

Make the file executable and run it on onap-nfs-server, passing the IP addresses of the worker nodes:

| Code Block |

|---|

chmod +x master_nfs_node.sh

sudo ./master_nfs_node.sh {list kubernetes worker nodes ip}

example from the WinLab setup:

sudo ./master_nfs_node.sh 10.31.3.39 10.31.3.24 10.31.3.52 10.31.3.8 10.31.3.34 10.31.3.47 10.31.3.15 10.31.3.9 10.31.3.5 10.31.3.21 10.31.3.1 10.31.3.68 |

Log in to each Kubernetes worker node (the onap-k8s VMs) and run the commands below.

Create a slave_nfs_node.sh file as below:

| Code Block | ||

|---|---|---|

| ||

#!/bin/bash

usage () {

echo "Usage:"

echo " ./$(basename $0) nfs_master_ip"

exit 1

}

if [ "$#" -ne 1 ]; then

echo "Missing NFS master node"

usage

fi

MASTER_IP=$1

#Install NFS common

sudo apt-get update

sudo apt-get install -y nfs-common

#Create NFS directory

sudo mkdir -p /dockerdata-nfs

#Mount the remote NFS directory to the local one

sudo mount $MASTER_IP:/dockerdata-nfs /dockerdata-nfs/

echo "$MASTER_IP:/dockerdata-nfs /dockerdata-nfs nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0" | sudo tee -a /etc/fstab

|
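
Because the script appends to /etc/fstab unconditionally, running it twice leaves a duplicate entry. One possible guard, shown here as a sketch, is to append only when the exact line is missing. The helper name `ensure_line` is hypothetical, and the demonstration writes to a scratch file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file only if it is not already present.
ensure_line () {
  file="$1"
  line="$2"
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration against a scratch file instead of /etc/fstab.
demo_fstab="$(mktemp)"
entry="10.31.3.11:/dockerdata-nfs /dockerdata-nfs nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0"

ensure_line "$demo_fstab" "$entry"
ensure_line "$demo_fstab" "$entry"   # second call finds the line and does nothing

# Count the lines in the scratch file; only one entry should be present.
wc -l < "$demo_fstab"
```

Against the real /etc/fstab the helper would have to run as root. The same pattern could also guard the /etc/exports append on the NFS server.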

Make the file executable and run it on every worker node, passing the IP address of the NFS master node:

| Code Block |

|---|

chmod +x slave_nfs_node.sh

sudo ./slave_nfs_node.sh {master nfs node IP address}

example from the WinLab setup:

sudo ./slave_nfs_node.sh 10.31.3.11 |

ONAP Installation

Perform the following steps on the onap-control-01 VM.

Clone the OOM helm repository

Use the master branch, as the Dublin branch is not available.

Perform these steps in the home directory.

Create folder for local charts repository on onap-control-01.

| Code Block | ||||

|---|---|---|---|---|

| ||||

ubuntu@onap-control-01:~$ mkdir charts; chmod -R 777 charts

|

Run a docker server to serve local charts

| Code Block | ||||

|---|---|---|---|---|

| ||||

ubuntu@onap-control-01:~$ docker run -d -p 8080:8080 -v $(pwd)/charts:/charts -e DEBUG=true -e STORAGE=local -e STORAGE_LOCAL_ROOTDIR=/charts chartmuseum/chartmuseum:latest |

Install helm chartmuseum plugin

| Code Block | ||||

|---|---|---|---|---|

| ||||

ubuntu@onap-control-01:~$ helm plugin install https://github.com/chartmuseum/helm-push.git |



Clone the OOM helm repository

Perform these steps in the home directory:

- Clone the oom repository with the recursive submodules option

- Add the deploy plugin to helm

- Add the undeploy plugin to helm

- Add the locally running chart server to helm as the "local" repository

| Code Block |

|---|

ubuntu@onap-control-01:~$ git clone -b master http://gerrit.onap.org/r/oom --recurse-submodules

ubuntu@onap-control-01:~$ helm plugin install ~/oom/kubernetes/helm/plugins/deploy

ubuntu@onap-control-01:~$ helm plugin install ~/oom/kubernetes/helm/plugins/undeploy

ubuntu@onap-control-01:~$ helm repo add local http://127.0.0.1:8080 |

Make charts from oom repository

| Code Block |

|---|

ubuntu@onap-control-01:~$ cd ~/oom/kubernetes; make all -e SKIP_LINT=TRUE; make onap -e SKIP_LINT=TRUE

Using Helm binary helm which is helm version v3.5.2

[common]

make[1]: Entering directory '/home/ubuntu/oom/kubernetes'

make[2]: Entering directory '/home/ubuntu/oom/kubernetes/common'

[common]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

==> Linting common

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing common-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[repositoryGenerator]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

==> Linting repositoryGenerator

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing repositoryGenerator-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[readinessCheck]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 2 charts

Deleting outdated charts

==> Linting readinessCheck

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing readinessCheck-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[serviceAccount]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Deleting outdated charts

==> Linting serviceAccount

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing serviceAccount-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[certInitializer]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 3 charts

Deleting outdated charts

==> Linting certInitializer

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing certInitializer-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[cassandra]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 2 charts

Deleting outdated charts

==> Linting cassandra

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing cassandra-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[certManagerCertificate]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 2 charts

Deleting outdated charts

==> Linting certManagerCertificate

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing certManagerCertificate-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[cmpv2Config]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Deleting outdated charts

==> Linting cmpv2Config

[INFO] Chart.yaml: icon is recommended

[WARNING] templates/: directory not found

1 chart(s) linted, 0 chart(s) failed

Pushing cmpv2Config-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[dgbuilder]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 4 charts

Downloading certInitializer from repo http://127.0.0.1:8080

Deleting outdated charts

==> Linting dgbuilder

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing dgbuilder-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[dist]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[elasticsearch]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

make[4]: Entering directory '/home/ubuntu/oom/kubernetes/common/elasticsearch'

[components]

make[5]: Entering directory '/home/ubuntu/oom/kubernetes/common/elasticsearch'

make[6]: Entering directory '/home/ubuntu/oom/kubernetes/common/elasticsearch/components'

[soHelpers]

make[7]: Entering directory '/home/ubuntu/oom/kubernetes/common/elasticsearch/components'

make[7]: Leaving directory '/home/ubuntu/oom/kubernetes/common/elasticsearch/components'

==> Linting ejbca

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Successfully packaged chart and saved it to: /home/ubuntu/oom/kubernetes/dist/packages/ejbca-8.0.0.tgz

make[5]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components'

[netbox]

make[5]: Entering directory '/home/ubuntu/oom/kubernetes/contrib/components'

make[6]: Entering directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox'

[components]

make[7]: Entering directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox'

make[8]: Entering directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

[netbox-app]

make[9]: Entering directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 2 charts

Downloading common from repo http://127.0.0.1:8080

Downloading repositoryGenerator from repo http://127.0.0.1:8080

Deleting outdated charts

==> Linting netbox-app

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Successfully packaged chart and saved it to: /home/ubuntu/oom/kubernetes/contrib/components/dist/packages/netbox-app-8.0.0.tgz

make[9]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

[netbox-nginx]

make[9]: Entering directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 2 charts

Downloading common from repo http://127.0.0.1:8080

Downloading repositoryGenerator from repo http://127.0.0.1:8080

Deleting outdated charts

==> Linting netbox-nginx

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Successfully packaged chart and saved it to: /home/ubuntu/oom/kubernetes/contrib/components/dist/packages/netbox-nginx-8.0.0.tgz

make[9]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

[netbox-postgres]

make[9]: Entering directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 2 charts

Downloading common from repo http://127.0.0.1:8080

Downloading repositoryGenerator from repo http://127.0.0.1:8080

Deleting outdated charts

==> Linting netbox-postgres

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Successfully packaged chart and saved it to: /home/ubuntu/oom/kubernetes/contrib/components/dist/packages/netbox-postgres-8.0.0.tgz

make[9]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

make[8]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox/components'

make[7]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox'

make[6]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components/netbox'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 5 charts

Downloading common from repo http://127.0.0.1:8080

Downloading repositoryGenerator from repo http://127.0.0.1:8080

Deleting outdated charts

==> Linting netbox

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Successfully packaged chart and saved it to: /home/ubuntu/oom/kubernetes/dist/packages/netbox-8.0.0.tgz

make[5]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components'

make[4]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib/components'

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib'

make[2]: Leaving directory '/home/ubuntu/oom/kubernetes/contrib'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 5 charts

Downloading common from repo http://127.0.0.1:8080

Downloading repositoryGenerator from repo http://127.0.0.1:8080

Deleting outdated charts

Skipping linting of contrib

Pushing contrib-8.0.0.tgz to local...

Done.

make[1]: Leaving directory '/home/ubuntu/oom/kubernetes'

[cps]

make[1]: Entering directory '/home/ubuntu/oom/kubernetes'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 5 charts

Downloading common from repo http://127.0.0.1:8080

Downloading postgres from repo http://127.0.0.1:8080

Downloading readinessCheck from repo http://127.0.0.1:8080

Downloading repositoryGenerator from repo http://127.0.0.1:8080

Downloading serviceAccount from repo http://127.0.0.1:8080

Deleting outdated charts

Skipping linting of cps

Pushing cps-8.0.0.tgz to local...

Done.

make[1]: Leaving directory '/home/ubuntu/oom/kubernetes'

[dcaegen2]

make[1]: Entering directory '/home/ubuntu/oom/kubernetes'

make[2]: Entering directory '/home/ubuntu/oom/kubernetes/dcaegen2'

[components]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/dcaegen2'

make[4]: Entering directory '/home/ubuntu/oom/kubernetes/dcaegen2/components'

[dcae-bootstrap]

make[5]: Entering directory '/home/ubuntu/oom/kubernetes/dcaegen2/components'

Hang tight while we grab the latest from your chart repositories...

|

| Code Block |

git clone -b <branch> "https://gerrit.onap.org/r/oom" --recurse-submodules

mkdir .helm

cp -R ~/oom/kubernetes/helm/plugins/ ~/.helm

cd oom/kubernetes/sdnc |

Edit the values.yaml file

...

| Code Block |

|---|

# Copyright © 2019 Amdocs, Bell Canada

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

###################################################################

# This override file enables helm charts for all ONAP applications.

###################################################################

cassandra:
  enabled: true

mariadb-galera:
  enabled: true

aaf:
  enabled: true

aai:
  enabled: true

appc:
  enabled: true

cds:
  enabled: true

clamp:
  enabled: true

cli:
  enabled: true

consul:
  enabled: true

contrib:
  enabled: true

dcaegen2:
  enabled: true

dmaap:
  enabled: true

esr:
  enabled: true

log:
  enabled: true

sniro-emulator:
  enabled: true

oof:
  enabled: true

msb:
  enabled: true

multicloud:
  enabled: true

nbi:
  enabled: true

policy:
  enabled: true

pomba:
  enabled: true

portal:
  enabled: true

robot:
  enabled: true

sdc:
  enabled: true

sdnc:
  enabled: true
  config:
    sdnrwt: true

so:
  enabled: true

uui:
  enabled: true

vfc:
  enabled: true

vid:
  enabled: true

vnfsdk:
  enabled: true

modeling:
  enabled: true

|

Save the file.

Start helm server

Go to the home directory and start the helm server and local repository.

| Code Block |

|---|

cd

helm serve & |

Press ENTER to return to the shell prompt if helm serve prints log output.

Add helm repository

Note the port number that is listed and use it in the helm repo add command as follows

...


Verify helm repository

| Code Block |

|---|

helm repo list |

...

| Code Block | ||

|---|---|---|

| ||

PUT _settings

{

"index": {

"blocks": {

"read_only_allow_delete": "false"

}

}

} |

helm repo add local http://127.0.0.1:8080