...

(on each host) Add an entry to /etc/hosts that maps the host's IP address to its hostname (the hostname goes at the end of the line). Add entries for all other hosts in your cluster as well.
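As a minimal sketch of the /etc/hosts step, the helper below appends an "IP hostname" line to a hosts file only if the hostname is not already listed. The function name `add_host_entry` and the sample addresses in the usage note are hypothetical, not part of the original instructions.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the
# hostname already appears as a field on a non-comment line.
# Usage: add_host_entry <hosts_file> <ip> <hostname>
add_host_entry() (
  hosts_file="$1"; ip="$2"; name="$3"
  if awk -v n="$name" '
      !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$hosts_file" 2>/dev/null; then
    exit 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
)
```

On a real host this might be called as `add_host_entry /etc/hosts 10.0.0.11 onap-host-1` (as root), once for the local host and once for each other host in the cluster; the IP and hostname here are placeholders.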

Try to run as root. If you run as the ubuntu user instead, you will need to enable Docker separately for that user.

(to fix a possible "modprobe: FATAL: Module aufs not found in directory /lib/modules/4.4.0-59-generic" error)

(on each host: the server and the client(s), which may be the same machine) Install only the 1.12.x version of Docker (currently 1.12.6); it is the only version that works with Kubernetes in Rancher 1.6.
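To guard against an unsupported Docker version slipping in, a check along these lines could be run on each host. This is only a sketch: the function name `is_supported_docker` is hypothetical, and it simply tests whether a version string starts with "1.12.".

```shell
# Hypothetical check: succeed only for Docker 1.12.x version strings,
# the series this page says works with Kubernetes in Rancher 1.6.
is_supported_docker() {
  case "$1" in
    1.12.*) return 0 ;;
    *)      return 1 ;;
  esac
}
```

One way to feed it the installed version is `is_supported_docker "$(docker version --format '{{.Server.Version}}')" || echo "unsupported Docker version"`.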

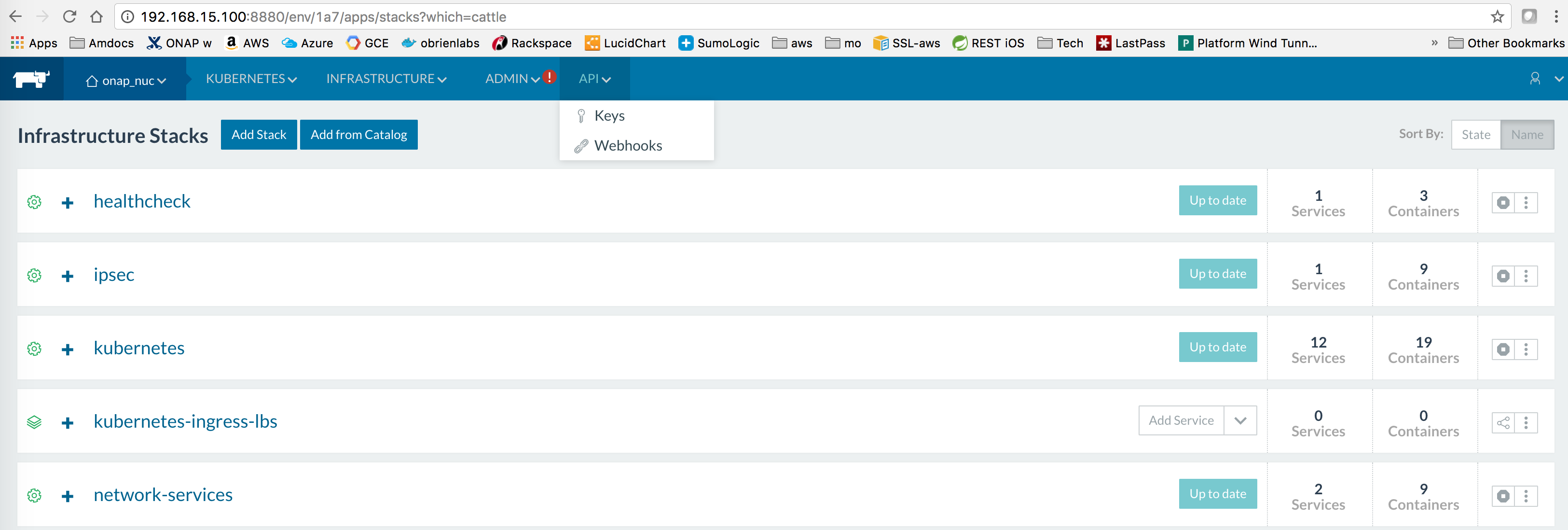

(on the master only) Install Rancher (use port 8880 instead of 8080). Note: there may be issues with the DNS pod in Rancher after a reboot or when running clustered hosts; a clean system will be OK.

In the Rancher UI, don't use http://127.0.0.1:8880; use the real IP address so that the client configs are populated correctly with callbacks.

You must deactivate the default CATTLE environment: add a KUBERNETES environment, then deactivate the older default CATTLE one. Your added hosts will attach to the default environment.

Register your host(s): run the following on each host (including the master if you are collocating the master and host on a single machine/VM). For each host, in Rancher > Infrastructure > Hosts, select "Add Host". Enter the IP of the host only if you launched Rancher with 127.0.0.1/localhost; otherwise leave it empty and the registration will autopopulate with the real IP. Copy the command to register the host with Rancher and execute it on the host, for example:

Wait for the Kubernetes menu to populate with the CLI entry.

Install kubectl on the server and optionally on the other hosts.

Paste the kubectl config from Rancher (you will see the CLI menu under Rancher | Kubernetes after the k8s pods are up on your host). Click "Generate Config" to get the content to add to .kube/config.

Verify that the Kubernetes config is good.

Install Helm (use 2.3.0 not current 2.6.0)

The undercloud is done; move on to ONAP.

Clone oom (scp your onap_rsa private key first, or clone anonymously; ideally, get a full Gerrit account and join the community). See the ssh/http/https access links at https://gerrit.onap.org/r/#/admin/projects/oom

or use https (substitute your user/pass)

Wait until all the hosts show green in Rancher, then run the createConfig/createAll scripts that wrap all the kubectl commands

Run the setenv.bash script in /oom/kubernetes/oneclick/ (new since 20170817)

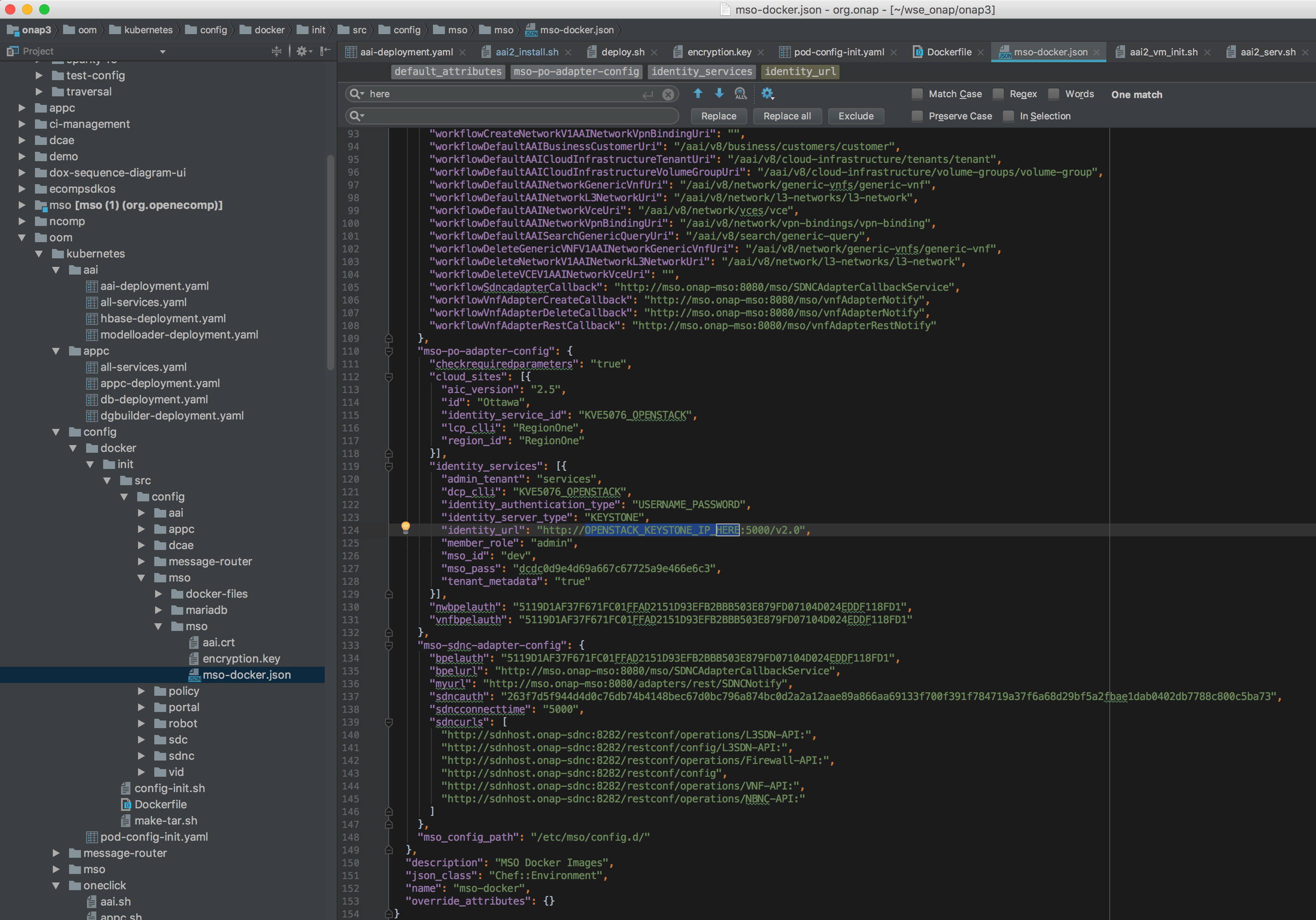

(only if you are planning on closed-loop) Before running createConfig.sh (see below), make sure your OpenStack config is set up correctly so that you can deploy the vFirewall VMs, for example.

vi oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json

Replace, for example, "identity_services": [{

Run the one-time config pod, which mounts the volume /dockerdata/ contained in the pod config-init. This mount is required for all other ONAP pods to function. Note: the pod will stop after NFS creation; this is normal.

**** Creating configuration for ONAP instance: onap

Wait until the config-init pod is gone before trying to bring up a component or all of ONAP (around 15-20 sec); see https://wiki.onap.org/display/DW/ONAP+on+Kubernetes#ONAPonKubernetes-Waitingforconfig-initcontainertofinish-20sec

Note: use only the hardcoded "onap" namespace prefix, as URLs in the config pod are set as follows: "workflowSdncadapterCallback": "http://mso.onap-mso:8080/mso/SDNCAdapterCallbackService"

Don't run all the pods unless you have at least 40G (without DCAE) or 50G allocated. If you have a laptop/VM with 16G, you can only run enough pods to fit in around 11G.

Ignore errors introduced around 20170816; these are non-blocking and will allow the create to proceed.

(to bring up a single service at a time)

Only if you have >50G, run the following (all namespaces)
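The memory guidance above (40G without DCAE, 50G with it) can be checked before starting all the pods. The following is a rough sketch for Linux hosts only; the function names are hypothetical, "G" is assumed to mean GiB, and MemTotal is typically slightly below the installed RAM, so treat the result as approximate.

```shell
# Print total memory in whole GiB from a /proc/meminfo-style file
# (MemTotal is reported in kB).
mem_total_gib() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 / 1024 }' "${1:-/proc/meminfo}"
}

# Succeed if total memory is at least the requested number of GiB.
# Usage: has_enough_memory <required_gib> [meminfo_file]
has_enough_memory() (
  required_gib="$1"
  total=$(mem_total_gib "${2:-/proc/meminfo}")
  [ -n "$total" ] && [ "$total" -ge "$required_gib" ]
)
```

For example, `has_enough_memory 40 || echo "bring up only selected components"` could gate the decision to deploy everything without DCAE.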

ONAP is OK if everything is 1/1 in the following

Run the ONAP portal via the instructions at RunningONAPusingthevnc-portal

Wait until the containers are all up

Run the initial healthcheck directly on the host

cd /dockerdata-nfs/onap/robot

./ete-docker.sh health

Check AAI endpoints

root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# kubectl -n onap-aai exec -it aai-service-3321436576-2snd6 bash

root@aai-service-3321436576-2snd6:/# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-systemd-

root 7 1 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-master

root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# curl https://127.0.0.1:30233/aai/v11/service-design-and-creation/models

curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none

List of Containers

Total pods is 48 (without DCAE)

...

| Namespace (master:20170715) | Name | Image | Log Locations | Public / Debug Ports | Notes |
|---|---|---|---|---|---|
| default | config-init | | | | The mount "config-init-root" is in the following location (user-configurable VF parameter file below): /dockerdata-nfs/onapdemo/mso/mso/mso-docker.json |
| onap-aai | aai-dmaap-522748218-5rw0v | | | | |
| onap-aai | aai-kafka-2485280328-6264m | | | | |
| onap-aai | aai-resources-3302599602-fn4xm | | /opt/aai/logroot/AAI-RES | | |
| onap-aai | aai-service-3321436576-2snd6 | | | | |
| onap-aai | aai-traversal-2747464563-3c8m7 | | /opt/aai/logroot/AAI-GQ | | |
| onap-aai | aai-zookeeper-1010977228-l2h3h | | | | |
| onap-aai | data-router-1397019010-t60wm | | | | |
| onap-aai | elasticsearch-2660384851-k4txd | | | | |
| onap-aai | gremlin-1786175088-m39jb | | | | |
| onap-aai | hbase-3880914143-vp8z | | | | |
| onap-aai | model-loader-service-226363973-wx6s3 | | | | |
| onap-aai | search-data-service-1212351515-q4k6 | | | | |
| onap-aai | sparky-be-2088640323-h2pbx | | | | |
| onap-appc | appc-2044062043-bx6tc | | | | |
| onap-appc | appc-dbhost-2039492951-jslts | | | | |
| onap-appc | appc-dgbuilder-2934720673-mcp7c | | | | |
| onap-appc | sdnctldb01 (internal) | | | | |
| onap-appc | sdnctldb02 (internal) | | | | |
| onap-dcae | dcae-zookeeper | wurstmeister/zookeeper:latest | | | |
| onap-dcae | dcae-kafka | dockerfiles_kafka:latest | | | Note: no DCAE containers are running yet (we are missing 6 yaml files: 1 for the controller and 5 for the collector, staging, and 3 cdap pods); therefore DMaaP, VES collectors and APPC actions triggered by policy actions (closed loop) will not function yet. In review: https://gerrit.onap.org/r/#/c/7287/ |
| onap-dcae | dcae-dmaap | attos/dmaap:latest | | | |
| onap-dcae | pgaas | obrienlabs/pgaas | | | https://hub.docker.com/r/oomk8s/pgaas/tags/ |
| onap-dcae | dcae-collector-common-event | | | | persistent volume: dcae-collector-pvs |
| onap-dcae | dcae-collector-dmaapbc | | | | |
| onap-dcae | dcae-ves-collector | | | | |
| onap-dcae | cdap-0 | | | | |
| onap-dcae | cdap-1 | | | | |
| onap-dcae | cdap-2 | | | | |
| onap-message-router | dmaap-3842712241-gtdkp | | | | |
| onap-message-router | global-kafka-89365896-5fnq9 | | | | |
| onap-message-router | zookeeper-1406540368-jdscq | | | | |
| onap-msb | | | | | Bring onap-msb up before the rest of ONAP; follow ... |
| onap-msb | | | | | |
| onap-msb | | | | | |
| onap-msb | | | | | |
| onap-mso | mariadb-2638235337-758zr | | | | |
| onap-mso | mso-3192832250-fq6pn | | | | |
| onap-policy | brmsgw-568914601-d5z71 | | | | |
| onap-policy | drools-1450928085-099m2 | | | | |
| onap-policy | mariadb-2932363958-0l05g | | | | |
| onap-policy | nexus-871440171-tqq4z | | | | |
| onap-policy | pap-2218784661-xlj0n | | | | |
| onap-policy | pdp-1677094700-75wpj | | | | |
| onap-policy | pypdp-3209460526-bwm6b | | | | 1.0.0 only |
| onap-portal | portalapps-1708810953-trz47 | | | | |
| onap-portal | portaldb-3652211058-vsg8r | | | | |
| onap-portal | portalwidgets-1728801515-r825g | | | | |
| onap-portal | vnc-portal-948446550-76kj7 | | | | |
| onap-robot | robot-964706867-czr05 | | | | |
| onap-sdc | sdc-be-2426613560-jv8sk | | ${log.home}/${OPENECOMP-component-name}/${OPENECOMP-subcomponent-name}/transaction.log.%i ./var/lib/jetty/logs/SDC/SDC-BE/metrics.log ./var/lib/jetty/logs/SDC/SDC-BE/audit.log ./var/lib/jetty/logs/SDC/SDC-BE/debug_by_package.log ./var/lib/jetty/logs/SDC/SDC-BE/debug.log ./var/lib/jetty/logs/SDC/SDC-BE/transaction.log ./var/lib/jetty/logs/SDC/SDC-BE/error.log ./var/lib/jetty/logs/importNormativeAll.log ./var/lib/jetty/logs/ASDC/ASDC-FE/audit.log ./var/lib/jetty/logs/ASDC/ASDC-FE/debug.log ./var/lib/jetty/logs/ASDC/ASDC-FE/transaction.log ./var/lib/jetty/logs/ASDC/ASDC-FE/error.log ./var/lib/jetty/logs/2017_09_06.stderrout.log | | |
| onap-sdc | sdc-cs-2080334320-95dq8 | | | | |
| onap-sdc | sdc-es-3272676451-skf7z | | | | |
| onap-sdc | sdc-fe-931927019-nt94t | | ./var/lib/jetty/logs/SDC/SDC-BE/metrics.log ./var/lib/jetty/logs/SDC/SDC-BE/audit.log ./var/lib/jetty/logs/SDC/SDC-BE/debug_by_package.log ./var/lib/jetty/logs/SDC/SDC-BE/debug.log ./var/lib/jetty/logs/SDC/SDC-BE/transaction.log ./var/lib/jetty/logs/SDC/SDC-BE/error.log ./var/lib/jetty/logs/importNormativeAll.log ./var/lib/jetty/logs/2017_09_07.stderrout.log ./var/lib/jetty/logs/ASDC/ASDC-FE/audit.log ./var/lib/jetty/logs/ASDC/ASDC-FE/debug.log ./var/lib/jetty/logs/ASDC/ASDC-FE/transaction.log ./var/lib/jetty/logs/ASDC/ASDC-FE/error.log ./var/lib/jetty/logs/2017_09_06.stderrout.log | | |
| onap-sdc | sdc-kb-3337231379-8m8wx | | | | |
| onap-sdnc | sdnc-1788655913-vvxlj | | ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/journal/000006.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/cache/1504712225751.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/cache/1504712002358.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/tmp/xql.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/log/karaf.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/taglist.log ./var/log/dpkg.log ./var/log/apt/history.log ./var/log/apt/term.log ./var/log/fontconfig.log ./var/log/alternatives.log ./var/log/bootstrap.log | | |
| onap-sdnc | sdnc-dbhost-240465348-kv8vf | | | | |
| onap-sdnc | sdnc-dgbuilder-4164493163-cp6rx | | | | |
| onap-sdnc | sdnctldb01 (internal) | | | | |
| onap-sdnc | sdnctldb02 (internal) | | | | |
| onap-sdnc | sdnc-portal-2324831407-50811 | | ./opt/openecomp/sdnc/admportal/server/npm-debug.log ./var/log/dpkg.log ./var/log/apt/history.log ./var/log/apt/term.log ./var/log/fontconfig.log ./var/log/alternatives.log ./var/log/bootstrap.log | | |
| onap-vid | vid-mariadb-4268497828-81hm0 | | | | |
| onap-vid | vid-server-2331936551-6gxsp | | | | |

List of Docker Images

root@obriensystemskub0:~/oom/kubernetes/dcae# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nexus3.onap.org:10001/openecomp/dcae-collector-common-event latest 325d5b115fc2 7 days ago 538.8 MB

nexus3.onap.org:10001/openecomp/dcae-dmaapbc latest 46891e265574 7 days ago 328.1 MB

wurstmeister/kafka latest f26f76f2e50d 13 days ago 267.7 MB

nexus3.onap.org:10001/openecomp/model-loader 1.0-STAGING-latest 0c7a3eb0682b 2 weeks ago 758.9 MB

nexus3.onap.org:10001/openecomp/ajsc-aai 1.0-STAGING-latest f5c97da83393 2 weeks ago 1.352 GB

nexus3.onap.org:10001/openecomp/vid 1.0-STAGING-latest b80d37a7ac5e 2 weeks ago 725.9 MB

nexus3.onap.org:10001/openecomp/dgbuilder-sdnc-image 1.0-STAGING-latest cb3912913a43 2 weeks ago 850.9 MB

nexus3.onap.org:10001/openecomp/admportal-sdnc-image 1.0-STAGING-latest 825d60fd3abd 2 weeks ago 780.9 MB

nexus3.onap.org:10001/openecomp/sdnc-image 1.0-STAGING-latest c6eff373cc29 2 weeks ago 1.433 GB

nexus3.onap.org:10001/openecomp/testsuite 1.0-STAGING-latest 1b9a4aaa9649 2 weeks ago 1.097 GB

nexus3.onap.org:10001/openecomp/appc-image 1.0-STAGING-latest c5172ae773c5 2 weeks ago 2.235 GB

mariadb 10 b101c8399ee3 2 weeks ago 386.7 MB

nexus3.onap.org:10001/openecomp/mso 1.0-STAGING-latest b45b72cbc99d 2 weeks ago 1.429 GB

oomk8s/config-init 1.0.0 f19045125a44 5 weeks ago 322 MB

ubuntu 16.04 d355ed3537e9 5 weeks ago 119.2 MB

attos/dmaap latest b0ae220fcf1f 5 weeks ago 747.4 MB

mysql/mysql-server 5.6 05712b2a4b84 7 weeks ago 214.3 MB

oomk8s/ubuntu-init 1.0.0 14bb4db11858 8 weeks ago 207 MB

oomk8s/readiness-check 1.0.0 d3923ba1f99c 8 weeks ago 578.6 MB

oomk8s/mariadb-client-init 1.0.0 a5fa953bd4e0 9 weeks ago 251.1 MB

nexus3.onap.org:10001/openecomp/policy/policy-pe 1.0-STAGING-latest 2ffa01f2a8a2 4 months ago 1.421 GB

nexus3.onap.org:10001/openecomp/policy/policy-drools 1.0-STAGING-latest 389f86326698 4 months ago 1.347 GB

nexus3.onap.org:10001/openecomp/policy/policy-db 1.0-STAGING-latest 4f0ed6e92fed 4 months ago 1.142 GB

nexus3.onap.org:10001/openecomp/policy/policy-nexus 1.0-STAGING-latest 82c38af9208a 4 months ago 981.9 MB

nexus3.onap.org:10001/openecomp/sdc-cassandra 1.0-STAGING-latest 8f7c4a92530a 4 months ago 902 MB

nexus3.onap.org:10001/openecomp/sdc-kibana 1.0-STAGING-latest 287d79893e52 4 months ago 463.7 MB

nexus3.onap.org:10001/openecomp/sdc-elasticsearch 1.0-STAGING-latest 6ddf30823600 4 months ago 517.9 MB

nexus3.onap.org:10001/openecomp/sdc-frontend 1.0-STAGING-latest 1e63e5d9dff7 4 months ago 592.8 MB

nexus3.onap.org:10001/openecomp/sdc-backend 1.0-STAGING-latest 163918b87ae7 4 months ago 941.1 MB

nexus3.onap.org:10001/openecomp/portalapps 1.0-STAGING-latest ee0e08b1704b 4 months ago 1.04 GB

nexus3.onap.org:10001/openecomp/portaldb 1.0-STAGING-latest f2a8dc705ba2 4 months ago 394.5 MB

aaidocker/aai-hbase-1.2.3 latest aba535a6f8b5 7 months ago 1.562 GB

wurstmeister/zookeeper latest 351aa00d2fe9 8 months ago 478.3 MB

nexus3.onap.org:10001/mariadb 10.1.11 d1553bc7007f 17 months ago 346.5 MB

missing:

# can be replaced by public dockerHub link

dockerfiles_kafka:latest

# need DockerFile

dcae/pgaas:latest

dcae-dmaapbc:latest

dcae-collector-common-event:latest

dcae-controller:latest

dcae-ves-collector:release-1.0.0

dcae/cdap:1.0.7

Verifying Container Startup

To check that config-init has mounted properly, run "ls /dockerdata-nfs" on the host.
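The same check can be scripted. This is a small sketch in which the function name `config_mount_ready` is hypothetical; it treats the mount as ready when the directory exists and is not empty, which is an assumption about what a successful config-init run leaves behind.

```shell
# Hypothetical check: succeed if the shared config directory exists and
# contains at least one entry.
# Usage: config_mount_ready [directory]   (defaults to /dockerdata-nfs)
config_mount_ready() (
  dir="${1:-/dockerdata-nfs}"
  [ -d "$dir" ] && [ -n "$(ls -A "$dir" 2>/dev/null)" ]
)
```

A typical use would be `config_mount_ready || echo "config-init has not populated /dockerdata-nfs yet"` before bringing up any other component.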

Cloning details

Install the latest version of the OOM (ONAP Operations Manager) project repo - specifically the ONAP on Kubernetes work just uploaded June 2017

https://gerrit.onap.org/r/gitweb?p=oom.git

...

git clone ssh://yourgerrituserid@gerrit.onap.org:29418/oom

cd oom/kubernetes/oneclick

Versions

oom : master (1.1.0-SNAPSHOT)

onap deployments: 1.0.0

Rancher environment for Kubernetes

Set up a separate ONAP Kubernetes environment and disable the existing default environment.

Adding hosts to the Kubernetes environment will kick in k8s containers

Rancher kubectl config

To be able to run the kubectl scripts - install kubectl

Nexus3 security settings

Fix nexus3 security for each namespace

In createAll.bash, add the following two lines (marked with +) to create_namespace(), right after the kubectl create namespace call, to create a secret and attach it to the namespace (thanks to Jason Hunt of IBM for helping us attach it last Friday, when we were all getting our pods to come up). A better fix for the future will be to pass these in as parameters from a prod/stage/dev ecosystem config.

...

create_namespace() {

kubectl create namespace $1-$2

+ kubectl --namespace $1-$2 create secret docker-registry regsecret --docker-server=nexus3.onap.org:10001 --docker-username=docker --docker-password=docker --docker-email=email@email.com

+ kubectl --namespace $1-$2 patch serviceaccount default -p '{"imagePullSecrets": [{"name": "regsecret"}]}'

}

Fix MSO mso-docker.json

Before running pod-config-init.yaml, make sure your OpenStack config is set up correctly so that you can deploy the vFirewall VMs, for example.

vi oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json

The original block is shown first, followed by the replacement for Rackspace.

...

"mso-po-adapter-config": {

"checkrequiredparameters": "true",

"cloud_sites": [{

"aic_version": "2.5",

"id": "Ottawa",

"identity_service_id": "KVE5076_OPENSTACK",

"lcp_clli": "RegionOne",

"region_id": "RegionOne"

}],

"identity_services": [{

"admin_tenant": "services",

"dcp_clli": "KVE5076_OPENSTACK",

"identity_authentication_type": "USERNAME_PASSWORD",

"identity_server_type": "KEYSTONE",

"identity_url": "http://OPENSTACK_KEYSTONE_IP_HERE:5000/v2.0",

"member_role": "admin",

"mso_id": "dev",

"mso_pass": "dcdc0d9e4d69a667c67725a9e466e6c3",

"tenant_metadata": "true"

}],

...

"mso-po-adapter-config": {

"checkrequiredparameters": "true",

"cloud_sites": [{

"aic_version": "2.5",

"id": "Dallas",

"identity_service_id": "RAX_KEYSTONE",

"lcp_clli": "DFW", # or IAD

"region_id": "DFW"

}],

"identity_services": [{

"admin_tenant": "service",

"dcp_clli": "RAX_KEYSTONE",

"identity_authentication_type": "RACKSPACE_APIKEY",

"identity_server_type": "KEYSTONE",

"identity_url": "https://identity.api.rackspacecloud.com/v2.0",

"member_role": "admin",

"mso_id": "9998888",

"mso_pass": "YOUR_API_KEY",

"tenant_metadata": "true"

}],

Delete/recreate the config pod

root@obriensystemskub0:~/oom/kubernetes/config# kubectl --namespace default delete -f pod-config-init.yaml

pod "config-init" deleted

root@obriensystemskub0:~/oom/kubernetes/config# kubectl create -f pod-config-init.yaml

pod "config-init" created

or copy over your changes directly to the mount

root@obriensystemskub0:~/oom/kubernetes/config# cp docker/init/src/config/mso/mso/mso-docker.json /dockerdata-nfs/onapdemo/mso/mso/mso-docker.json

Use only "onap" namespace

Note: use only the hardcoded "onap" namespace prefix - as URLs in the config pod are set as follows "workflowSdncadapterCallback": "http://mso.onap-mso:8080/mso/SDNCAdapterCallbackService",
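To see why the prefix matters, recall that create_namespace builds each namespace as "prefix-component" ($1-$2), while the config pod hardcodes hosts such as mso.onap-mso. The sketch below (the function name `service_host` is hypothetical) composes the in-cluster host name the same way, so a different prefix visibly stops matching the hardcoded URL.

```shell
# Hypothetical helper: compose "<service>.<prefix>-<component>", the host
# form used in the hardcoded callback URL (for example mso.onap-mso).
# Usage: service_host <service> <prefix> <component>
service_host() {
  printf '%s.%s-%s\n' "$1" "$2" "$3"
}
```

With the prefix "onap", `service_host mso onap mso` yields the host used in the callback URL; with any other prefix the result differs, and the URL baked into the config pod would no longer resolve to the deployed service.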

Monitor Container Deployment

First verify that your Kubernetes system is up

Then wait 29-45 min for all pods to attain 1/1 state
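While waiting, it can help to list only the pods that have not reached the n/n state. The filter below is a sketch that assumes the usual column layout of `kubectl get pods --all-namespaces` (NAMESPACE, NAME, READY, ...); the function name `not_ready_pods` is hypothetical.

```shell
# Hypothetical filter: read `kubectl get pods --all-namespaces` output on
# stdin and print "namespace/name" for every pod whose READY column is
# not n/n (for example 0/1). The header line is skipped.
not_ready_pods() {
  awk 'NR > 1 { split($3, r, "/"); if (r[1] != r[2]) print $1 "/" $2 }'
}
```

Usage would be `kubectl get pods --all-namespaces | not_ready_pods`; an empty result means every listed pod is fully ready. Pods that have finished and stopped may also be reported here, so read the output alongside the STATUS column.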

...

Kubernetes specific config

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Nexus Docker repo Credentials

Checking out use of a kubectl secret in the yaml files via - https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

Running a Kubernetes Cluster

Details on getting a cluster of hosts running OOM instead of a single large colocated master/host.

Deleting All Containers

Delete all the containers (and services)
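One possible approach, sketched here as a dry run, relies on the fact that deleting a Kubernetes namespace also deletes the pods and services inside it. The function name `print_delete_commands` is hypothetical, and the component list is whatever you deployed; this is not necessarily how the OOM scripts do it.

```shell
# Hypothetical dry run: print one `kubectl delete namespace` command per
# component, using the "<prefix>-<component>" namespace naming.
# Usage: print_delete_commands <prefix> <component>...
print_delete_commands() (
  prefix="$1"
  shift
  for component in "$@"; do
    printf 'kubectl delete namespace %s-%s\n' "$prefix" "$component"
  done
)
```

For example, `print_delete_commands onap mso robot` prints the two commands for review; piping the output to `sh` would actually run them.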

...