...

(on each host) Add an entry to /etc/hosts that maps the host's IP address to its hostname (append the hostname to the end of the line). Add entries for all other hosts in your cluster as well.
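As a rough sketch of the /etc/hosts step, the helper below appends an "IP hostname" line only when the hostname is not already mapped. The function name `add_host_entry`, the IP addresses and the hostnames are hypothetical placeholders, and the demo writes to a scratch file rather than the real /etc/hosts.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears as a hostname there.
add_host_entry() {
  if grep -qE "[[:space:]]$3([[:space:]]|\$)" "$1" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$2" "$3" >> "$1"
}

# Demo against a scratch file; use /etc/hosts (as root) on a real host.
HOSTS_FILE=$(mktemp)
add_host_entry "$HOSTS_FILE" 10.0.0.11 onap-master   # hypothetical master
add_host_entry "$HOSTS_FILE" 10.0.0.12 onap-node1    # hypothetical second host
add_host_entry "$HOSTS_FILE" 10.0.0.11 onap-master   # repeated call adds nothing
cat "$HOSTS_FILE"
```

Run the same calls on every host so that each one can resolve all the others.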

Try to run as root. If you run as the ubuntu user instead, you will need to enable Docker separately for that user.

(on each host: the server and the client(s), which may be the same machine) Install only the 1.12.x version of Docker (currently 1.12.6), the only version that works with Kubernetes in Rancher 1.6. This also fixes a possible "modprobe: FATAL: Module aufs not found in directory /lib/modules/4.4.0-59-generic" error.
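A minimal sketch of the Docker step follows. It assumes Rancher's versioned installer script at releases.rancher.com is still available, which may no longer be true. The function names `install_docker_1_12` and `is_docker_1_12` are hypothetical. The installer is only defined, not called, so running the snippet just reports what is installed.

```shell
# Assumption: Rancher's versioned Docker installer. Defined here, not executed.
install_docker_1_12() {
  curl -fsSL https://releases.rancher.com/install-docker/1.12.sh | sh
}

# is_docker_1_12 VERSION: succeeds only for a 1.12.x version string.
is_docker_1_12() {
  case "$1" in
    1.12.*) return 0 ;;
    *)      return 1 ;;
  esac
}

if command -v docker >/dev/null 2>&1; then
  installed=$(docker version --format '{{.Server.Version}}' 2>/dev/null || true)
  if is_docker_1_12 "$installed"; then
    echo "Docker $installed is in the 1.12.x line"
  else
    echo "Docker '${installed:-unknown}' is not 1.12.x"
  fi
else
  echo "docker not found; call install_docker_1_12 to install it"
fi
```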

(on the master only) Install Rancher (use port 8880 instead of 8080). Note: there may be issues with the DNS pod in Rancher after a reboot or when running clustered hosts; a clean system will be OK.
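The Rancher step might look like the sketch below, which only builds and prints the command. It assumes the unpinned `rancher/server` image still resolves to a 1.6 release; pin a tag if it does not. The variable names are illustrative.

```shell
RANCHER_PORT=8880   # host port from the text; 8080 is the port inside the container
rancher_cmd="docker run -d --restart=unless-stopped -p ${RANCHER_PORT}:8080 rancher/server"

# Printed rather than executed, so the snippet is safe to run anywhere.
echo "$rancher_cmd"
```

Run the printed line on the master only, then browse to the host's real IP on port 8880.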

In the Rancher UI, don't use http://127.0.0.1:8880; use the real IP address, so that the client configs are populated correctly with callbacks.

You must deactivate the default CATTLE environment: add a KUBERNETES environment, then deactivate the older default CATTLE one. Your added hosts will attach to the default environment.

Register your host(s): run the following on each host (including the master if you are collocating the master/host on a single machine/VM).

For each host, in Rancher > Infrastructure > Hosts, select "Add Host".

The first time you add a host, you will be presented with a screen containing the routable IP; hit save only on a routable IP.

Enter IP of host: fill this in only if you launched Rancher with 127.0.0.1/localhost; otherwise keep it empty and it will autopopulate the registration with the real IP.

Copy the command to register the host with Rancher, then execute the command on the host, for example:

Wait for the Kubernetes menu to populate with the CLI option.

Install Kubectl

The following will install kubectl on a Linux host. Once configured, this client tool provides management of a Kubernetes cluster.
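One possible shape for the kubectl install is sketched here. It assumes the legacy storage.googleapis.com release bucket that the Kubernetes docs pointed to at the time; the location may have moved since. The function names are hypothetical, and the version `v1.7.7` is only a placeholder, so use the version that matches your cluster. The install function is defined but not called.

```shell
# kubectl_url VERSION: prints the download URL for a Linux amd64 kubectl binary.
kubectl_url() {
  printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/linux/amd64/kubectl\n' "$1"
}

# install_kubectl VERSION: download, make executable, move onto the PATH.
install_kubectl() {
  curl -LO "$(kubectl_url "$1")" &&
    chmod +x ./kubectl &&
    sudo mv ./kubectl /usr/local/bin/kubectl &&
    mkdir -p "$HOME/.kube"     # the Rancher-generated config goes in here
}

kubectl_url v1.7.7   # placeholder version, shown only to illustrate the URL
```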

Paste the kubectl config from Rancher (you will see the CLI menu in Rancher / Kubernetes after the k8s pods are up on your host). Click on "Generate Config" to get the content to add into .kube/config

Verify that the Kubernetes config is good
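A quick way to check the pasted config, as a sketch: confirm the file is non-empty and has a top-level `clusters:` key, then ask the cluster for its endpoints. The helper name `check_kubeconfig` is hypothetical; `kubectl cluster-info` is a standard kubectl command.

```shell
# check_kubeconfig FILE: succeeds if FILE is non-empty and declares clusters.
check_kubeconfig() {
  [ -s "$1" ] && grep -q '^clusters:' "$1"
}

KUBE_CONFIG_FILE="${KUBECONFIG:-$HOME/.kube/config}"
if check_kubeconfig "$KUBE_CONFIG_FILE" && command -v kubectl >/dev/null 2>&1; then
  kubectl cluster-info || echo "config found, but the cluster is not reachable"
else
  echo "no usable kubectl config at $KUBE_CONFIG_FILE (or kubectl is missing)"
fi
```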

Install Helm

The following will install Helm (use 2.3.0, not the current 2.6.0) on a Linux host. Helm is used by OOM for package and configuration management.

Prerequisite: Install Kubectl
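The Helm install could be sketched along the same lines. The download location is an assumption based on where Helm 2 release tarballs were published at the time, and the function names are hypothetical. Only the URL is printed; `install_helm` is defined but not called.

```shell
# helm_url VERSION: prints the tarball URL for a Linux amd64 Helm 2 release.
helm_url() {
  printf 'http://storage.googleapis.com/kubernetes-helm/helm-v%s-linux-amd64.tar.gz\n' "$1"
}

# install_helm VERSION: download, unpack, and move the binary onto the PATH.
install_helm() {
  tarball="helm-v$1-linux-amd64.tar.gz"
  wget "$(helm_url "$1")" &&
    tar -zxvf "$tarball" &&
    sudo mv linux-amd64/helm /usr/local/bin/helm
}

helm_url 2.3.0   # the version the text recommends
```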

Undercloud done; move on to ONAP.

Clone oom (scp your onap_rsa private key first, or clone anonymously; ideally you get a full Gerrit account and join the community). See the ssh/http access links at https://gerrit.onap.org/r/#/admin/projects/oom

or use https (substitute your user/pass)
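For illustration, the clone URLs can be composed as below. This assumes Gerrit's default SSH port 29418 and the anonymous HTTPS path under /r/. The helper `oom_clone_url` and the user name `your_gerrit_user` are hypothetical. The authenticated https form is deliberately left out; take it from the Gerrit project page. The snippet only prints the commands.

```shell
# oom_clone_url ssh USER | anon: prints a clone URL for the oom repository.
oom_clone_url() {
  case "$1" in
    ssh)  printf 'ssh://%s@gerrit.onap.org:29418/oom\n' "$2" ;;
    anon) printf 'https://gerrit.onap.org/r/oom\n' ;;
    *)    echo "usage: oom_clone_url ssh USER | anon" >&2; return 1 ;;
  esac
}

echo "git clone $(oom_clone_url anon)"
echo "git clone $(oom_clone_url ssh your_gerrit_user)"
```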

Wait until all the hosts show green in Rancher, then run the createConfig/createAll scripts that wrap all the kubectl commands

Source the setenv.bash script in /oom/kubernetes/oneclick/; it sets the Helm list of components to start/delete

Run the one-time config pod, which mounts the volume /dockerdata/ contained in the pod config-init. This mount is required for all other ONAP pods to function. Note: the pod will stop after NFS creation; this is normal.
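The two steps above (source setenv.bash, then run the config pod) might be wrapped as in this sketch. It assumes the config script is `createConfig.sh` in oom/kubernetes/config and that it takes `-n` for the instance name; verify both against your checkout. The function name `run_config` and the example path are hypothetical. Nothing is executed unless you call the function.

```shell
# run_config OOM_ROOT NAMESPACE
# Sources setenv.bash in the current shell, then launches the config pod.
run_config() {
  oneclick="$1/kubernetes/oneclick"
  config="$1/kubernetes/config"
  if [ ! -f "$oneclick/setenv.bash" ] || [ ! -d "$config" ]; then
    echo "oom checkout not found under $1" >&2
    return 1
  fi
  prev=$PWD
  cd "$oneclick" && . ./setenv.bash   # keeps the component list in this shell
  cd "$config" && ./createConfig.sh -n "$2"
  status=$?
  cd "$prev"
  return "$status"
}

echo 'example: run_config "$HOME/oom" onap'
```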

**** Creating configuration for ONAP instance: onap

Wait until the config-init pod is gone before trying to bring up a component or all of ONAP: around 60 sec (up to 10 min). See https://wiki.onap.org/display/DW/ONAP+on+Kubernetes#ONAPonKubernetes-Waitingforconfig-initcontainertofinish-20sec

root@ip-172-31-93-122:~/oom_20170908/oom/kubernetes/config# kubectl get pods --all-namespaces -a
onap config 0/1 Completed 0 1m

Note: with the -a option the config container shows up with its status; without the -a flag it will not be listed.

Don't run all the pods unless you have at least 52G allocated. If you have a laptop/VM with 16G, you can only run enough pods to fit in around 11G.
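Waiting for config-init to finish can be automated roughly as follows. The parser matches the `onap config 0/1 Completed` line shown above. The function names are hypothetical. The `-a` flag is taken from the transcript and may not exist in newer kubectl releases. Only the parser runs here, on the sample line; `wait_for_config` needs a live cluster.

```shell
# config_completed NAMESPACE: reads `kubectl get pods --all-namespaces -a`
# output on stdin; succeeds once the "config" pod reports Completed.
config_completed() {
  awk -v ns="$1" '$1 == ns && $2 == "config" && $4 == "Completed" { found = 1 }
                  END { exit !found }'
}

# wait_for_config NAMESPACE: poll every 10 s, up to the 10 minute bound in the text.
wait_for_config() {
  tries=0
  while [ "$tries" -lt 60 ]; do
    if kubectl get pods --all-namespaces -a | config_completed "$1"; then
      return 0
    fi
    tries=$((tries + 1))
    sleep 10
  done
  return 1
}

# Parser check against the sample line from the transcript.
echo "onap config 0/1 Completed 0 1m" | config_completed onap && echo "config-init finished"
```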

(to bring up a single service at a time)

Only if you have >52G, run the following (all namespaces)
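The memory rule can be checked before choosing between the two launch modes, as in this sketch. It assumes the oneclick launcher is `createAll.bash`, with `-n` for the instance and `-a` for a single component; verify this against your checkout. The function name `can_run_all` is hypothetical. The snippet only prints a suggestion.

```shell
REQUIRED_KB=$((52 * 1024 * 1024))   # 52G in kB, the unit /proc/meminfo reports

# can_run_all TOTAL_KB: succeeds when the host has at least 52G of memory.
can_run_all() {
  [ "$1" -ge "$REQUIRED_KB" ]
}

total_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo 2>/dev/null)
if can_run_all "${total_kb:-0}"; then
  echo "enough memory for everything: ./createAll.bash -n onap"
else
  echo "limited memory, start one component at a time: ./createAll.bash -n onap -a robot"
fi
```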

ONAP is OK if everything is 1/1 in the following
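To spot pods that are not yet fully ready, the pod listing can be filtered as sketched below. The function name `not_ready` and the sample pod names are hypothetical. The filter compares the two halves of the READY column, so it also covers pods with more than one container.

```shell
# not_ready: reads `kubectl get pods --all-namespaces` output on stdin and
# prints "namespace/pod READY" for every pod whose READY counts differ.
not_ready() {
  awk 'NR > 1 && $3 != "" {
         split($3, r, "/")
         if (r[1] != r[2]) print $1 "/" $2 " " $3
       }'
}

# Demo on canned output; on a live cluster: kubectl get pods --all-namespaces | not_ready
printf '%s\n' \
  'NAMESPACE NAME READY STATUS RESTARTS AGE' \
  'onap-aai aai-service-example 1/1 Running 0 5m' \
  'onap-sdc sdc-be-example 0/1 Init:0/1 0 5m' | not_ready
```

An empty result means every listed pod is fully ready.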

Run the ONAP portal via the instructions at RunningONAPusingthevnc-portal

Wait until the containers are all up

Run the initial healthcheck directly on the host

cd /dockerdata-nfs/onap/robot
./ete-docker.sh health

Check AAI endpoints

root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# kubectl -n onap-aai exec -it aai-service-3321436576-2snd6 bash
root@aai-service-3321436576-2snd6:/# ps -ef
UID PID PPID C STIME TTY TIME CMD
root 1 0 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-systemd-
root 7 1 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-master
root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# curl https://127.0.0.1:30233/aai/v11/service-design-and-creation/models
curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none

List of Containers

Total pods is 48 (without DCAE)

Docker container list - source of truth: https://git.onap.org/integration/tree/packaging/docker/docker-images.csv

get health via

...

Cluster Configuration

Try to run your host and client on a single VM (a 64G one); if not, you can run Rancher and several clients across several machines/VMs. The /dockerdata-nfs share must be replicated across the cluster, either with a mount or by copying the directory to the other servers from the one where the "config" pod actually runs. A mount is better. To verify this, check your / root fs on each node.

Running ONAP

Don't run all the pods unless you have at least 52G allocated. If you have a laptop/VM with 16G, you can only run enough pods to fit in around 11G.

(to bring up a single service at a time)

Only if you have >52G, run the following (all namespaces)

ONAP is OK if everything is 1/1 in the following

Run the ONAP portal via the instructions at RunningONAPusingthevnc-portal

Wait until the containers are all up

Run the initial healthcheck directly on the host

cd /dockerdata-nfs/onap/robot
./ete-docker.sh health

Check AAI endpoints

root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# kubectl -n onap-aai exec -it aai-service-3321436576-2snd6 bash
root@aai-service-3321436576-2snd6:/# ps -ef
UID PID PPID C STIME TTY TIME CMD
root 1 0 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-systemd-
root 7 1 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-master
root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# curl https://127.0.0.1:30233/aai/v11/service-design-and-creation/models
curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none

List of Containers

Total pods is 57

Docker container list - source of truth: https://git.onap.org/integration/tree/packaging/docker/docker-images.csv

get health via


NAMESPACE master:20170715 | NAME | Image | Log Volume External | Log Locations docker internal | Public / Debug Ports | Notes | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

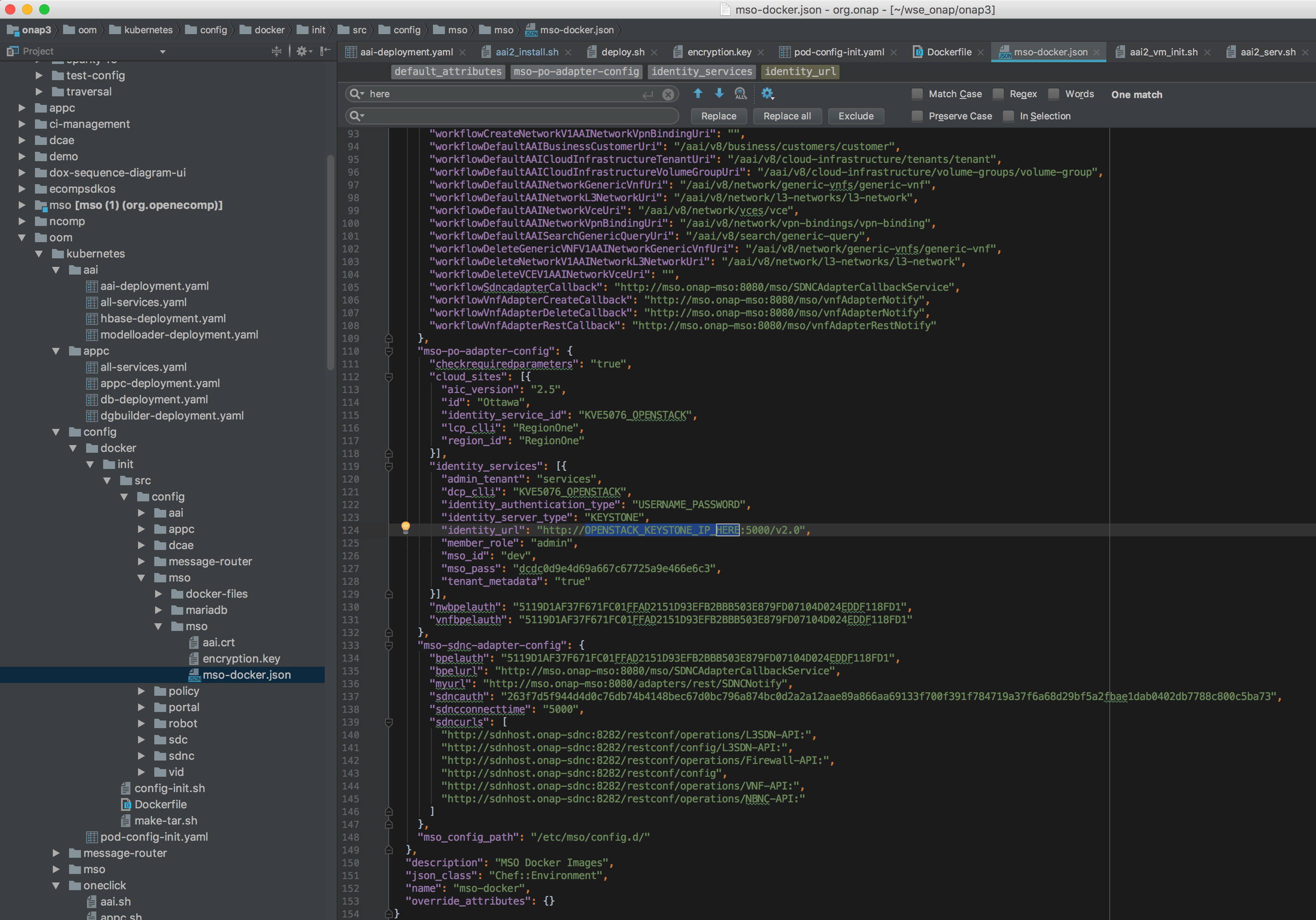

| default | config-init | The mount "config-init-root" is in the following location (user configurable VF parameter file below) /dockerdata-nfs/onapdemo/mso/mso/mso-docker.json | ||||||||||||||||||||

| onap-aai | aai-dmaap-522748218-5rw0v | |||||||||||||||||||||

| onap-aai | aai-kafka-2485280328-6264m | |||||||||||||||||||||

| onap-aai | aai-resources-3302599602-fn4xm | /opt/aai/logroot/AAI-RES | ||||||||||||||||||||

| onap-aai | aai-service-3321436576-2snd6 | |||||||||||||||||||||

| onap-aai | aai-traversal-2747464563-3c8m7 | /opt/aai/logroot/AAI-GQ | ||||||||||||||||||||

| onap-aai | aai-zookeeper-1010977228-l2h3h | |||||||||||||||||||||

| onap-aai | data-router-1397019010-t60wm | |||||||||||||||||||||

| onap-aai | elasticsearch-2660384851-k4txd | |||||||||||||||||||||

| onap-aai | gremlin-1786175088-m39jb | |||||||||||||||||||||

| onap-aai | hbase-3880914143-vp8z | |||||||||||||||||||||

| onap-aai | model-loader-service-226363973-wx6s3 | |||||||||||||||||||||

| onap-aai | search-data-service-1212351515-q4k6 | |||||||||||||||||||||

| onap-aai | sparky-be-2088640323-h2pbx | |||||||||||||||||||||

| onap-appc | appc-2044062043-bx6tc | |||||||||||||||||||||

| onap-appc | appc-dbhost-2039492951-jslts | |||||||||||||||||||||

| onap-appc | appc-dgbuilder-2934720673-mcp7c | |||||||||||||||||||||

| onap-appc | sdntldb01 (internal) | |||||||||||||||||||||

| onap-appc | sdnctldb02 (internal) | |||||||||||||||||||||

| onap-cli | ||||||||||||||||||||||

| onap-dcae | dcae-zookeeper | wurstmeister/zookeeper:latest | ||||||||||||||||||||

| onap-dcae | dcae-kafka | dockerfiles_kafka:latest | Note: currently there are no DCAE containers running yet (we are missing 6 yaml files (1 for the controller and 5 for the collector,staging,3-cdap pods)) - therefore DMaaP, VES collectors and APPC actions as the result of policy actions (closed loop) - will not function yet. In review: https://gerrit.onap.org/r/#/c/7287/

| |||||||||||||||||||

| onap-dcae | dcae-dmaap | attos/dmaap:latest | ||||||||||||||||||||

| onap-dcae | pgaas (PostgreSQL aaS | obrienlabs/pgaas | https://hub.docker.com/r/oomk8s/pgaas/tags/ | |||||||||||||||||||

| onap-dcae | dcae-collector-common-event | persistent volume: dcae-collector-pvs | ||||||||||||||||||||

| onap-dcae | dcae-collector-dmaapbc | |||||||||||||||||||||

| ||||||||||||||||||||||

| onap-dcae | dcae-ves-collector | |||||||||||||||||||||

| onap-dcae | cdap-0 | |||||||||||||||||||||

| onap-dcae | cdap-1 | |||||||||||||||||||||

| onap-dcae | cdap-2 | |||||||||||||||||||||

| onap-message-router | dmaap-3842712241-gtdkp | |||||||||||||||||||||

| onap-message-router | global-kafka-89365896-5fnq9 | |||||||||||||||||||||

| onap-message-router | zookeeper-1406540368-jdscq | |||||||||||||||||||||

| onap-msb | bring onap-msb up before the rest of onap follow

| |||||||||||||||||||||

| onap-msb | ||||||||||||||||||||||

| onap-msb | ||||||||||||||||||||||

| onap-msb | ||||||||||||||||||||||

| onap-mso | mariadb-2638235337-758zr | |||||||||||||||||||||

| onap-mso | mso-3192832250-fq6pn | |||||||||||||||||||||

| onap-multicloud | ||||||||||||||||||||||

| onap-multicloud | ||||||||||||||||||||||

| onap-policy | brmsgw-568914601-d5z71 | |||||||||||||||||||||

| onap-policy | drools-1450928085-099m2 | |||||||||||||||||||||

| onap-policy | mariadb-2932363958-0l05g | |||||||||||||||||||||

| onap-policy | nexus-871440171-tqq4z | |||||||||||||||||||||

| onap-policy | pap-2218784661-xlj0n | |||||||||||||||||||||

| onap-policy | pdp-1677094700-75wpj | |||||||||||||||||||||

| onap-policy | pypdp-3209460526-bwm6b | 1.0.0 only | ||||||||||||||||||||

| onap-portal | portalapps-1708810953-trz47 | |||||||||||||||||||||

| onap-portal | portaldb-3652211058-vsg8r | |||||||||||||||||||||

| onap-portal | portalwidgets-1728801515-r825g | |||||||||||||||||||||

| onap-portal | vnc-portal-948446550-76kj7 | |||||||||||||||||||||

| onap-robot | robot-964706867-czr05 | |||||||||||||||||||||

| onap-sdc | sdc-be-2426613560-jv8sk | /dockerdata-nfs/onap/sdc/logs/SDC/SDC-BE | ${log.home}/${OPENECOMP-component-name}/ ${OPENECOMP-subcomponent-name}/transaction.log.%i./var/lib/jetty/logs/SDC/SDC-BE/metrics.log ./var/lib/jetty/logs/SDC/SDC-BE/audit.log ./var/lib/jetty/logs/SDC/SDC-BE/debug_by_package.log ./var/lib/jetty/logs/SDC/SDC-BE/debug.log ./var/lib/jetty/logs/SDC/SDC-BE/transaction.log ./var/lib/jetty/logs/SDC/SDC-BE/error.log ./var/lib/jetty/logs/importNormativeAll.log ./var/lib/jetty/logs/ASDC/ASDC-FE/audit.log ./var/lib/jetty/logs/ASDC/ASDC-FE/debug.log ./var/lib/jetty/logs/ASDC/ASDC-FE/transaction.log ./var/lib/jetty/logs/ASDC/ASDC-FE/error.log ./var/lib/jetty/logs/2017_09_06.stderrout.log | |||||||||||||||||||

| onap-sdc | sdc-cs-2080334320-95dq8 | |||||||||||||||||||||

| onap-sdc | sdc-es-3272676451-skf7z | |||||||||||||||||||||

| onap-sdc | sdc-fe-931927019-nt94t | ./var/lib/jetty/logs/SDC/SDC-BE/metrics.log ./var/lib/jetty/logs/SDC/SDC-BE/audit.log ./var/lib/jetty/logs/SDC/SDC-BE/debug_by_package.log ./var/lib/jetty/logs/SDC/SDC-BE/debug.log ./var/lib/jetty/logs/SDC/SDC-BE/transaction.log ./var/lib/jetty/logs/SDC/SDC-BE/error.log ./var/lib/jetty/logs/importNormativeAll.log ./var/lib/jetty/logs/2017_09_07.stderrout.log ./var/lib/jetty/logs/ASDC/ASDC-FE/audit.log ./var/lib/jetty/logs/ASDC/ASDC-FE/debug.log ./var/lib/jetty/logs/ASDC/ASDC-FE/transaction.log ./var/lib/jetty/logs/ASDC/ASDC-FE/error.log ./var/lib/jetty/logs/2017_09_06.stderrout.log | ||||||||||||||||||||

| onap-sdc | sdc-kb-3337231379-8m8wx | |||||||||||||||||||||

| onap-sdnc | sdnc-1788655913-vvxlj | ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/journal/000006.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/cache/1504712225751.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/cache/1504712002358.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/tmp/xql.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/log/karaf.log ./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/taglist.log ./var/log/dpkg.log ./var/log/apt/history.log ./var/log/apt/term.log ./var/log/fontconfig.log ./var/log/alternatives.log ./var/log/bootstrap.log | ||||||||||||||||||||

| onap-sdnc | sdnc-dbhost-240465348-kv8vf | |||||||||||||||||||||

| onap-sdnc | sdnc-dgbuilder-4164493163-cp6rx | |||||||||||||||||||||

| onap-sdnc | sdnctlbd01 (internal) | |||||||||||||||||||||

| onap-sdnc | sdnctlb02 (internal) | |||||||||||||||||||||

| onap-sdnc | sdnc-portal-2324831407-50811 | ./opt/openecomp/sdnc/admportal/server/npm-debug.log ./var/log/dpkg.log ./var/log/apt/history.log ./var/log/apt/term.log ./var/log/fontconfig.log ./var/log/alternatives.log ./var/log/bootstrap.log | ||||||||||||||||||||

| onap-vid | vid-mariadb-4268497828-81hm0 | |||||||||||||||||||||

| onap-vid | vid-server-2331936551-6gxsp |

Fix MSO mso-docker.json (deprecated)

Before running pod-config-init.yaml, make sure your OpenStack config is set up correctly, so that you can deploy the vFirewall VMs, for example

...