
Kubernetes-based cloud-region support

Code Name: K8S

This page tracks materials, discussions, and other information related to cloud regions that are controlled by Kubernetes.

As a starting point, this effort began as a small task force within the Multi-Cloud project. As the effort evolves, its logistics will be revised; the task force may eventually be promoted to an independent group or an independent project.

Meetings

- [coe] Team ONAP5: weekly meeting on Tuesdays, UTC 20:00 / ET 16:00 / PT 13:00, starting Jan 16/17, 2018.

Slides/links

- Slides presented to the Architecture Subcommittee to start this project as a PoC:

Attachment: K8S_for_VNFs_And_ONAP_Support.pptx

Project (a sub-project of Multi-Cloud, as decided by the Architecture Subcommittee and the Multi-Cloud team)

Project name: K8S based cloud-region support

code name: K8S

Repository name: multicloud/k8s

Background:

As of ONAP R2, ONAP can orchestrate VNFs only across cloud regions that use OpenStack or one of its variants as the VIM. Supported Multi-Cloud plugins include VMware VIO, Wind River Titanium, OpenStack Newton, and OpenStack Ocata. VMware VIO and Wind River Titanium are vendor-supported OpenStack distributions with additional features.

As of ONAP R2, ONAP can deploy only VM workloads.

The need for containers arises for the following reasons:

- Resource-constrained edges: Power and space constraints limit the number of compute nodes that can be deployed at edge sites, so the lower overhead of containers compared with VMs matters there.

- Ubiquity of container support: Many cloud providers now support containerized workloads using Kubernetes.

- Microservice-based solutions: Containers are considered an ideal way to implement microservices.

Kubernetes is one of the most popular container orchestrators. K8S is supported by GCE, Azure, and AWS, and will be supported by the Akraino Edge Stack, which enables edge clouds.

K8S is also being enhanced to support VM workloads. This helps cloud regions that must keep supporting VMs while moving some workloads to containers. Cloud regions may continue to run some workloads as VMs for security reasons, so there is a need for a VIM that supports both VMs and containers.

Since the same K8S instance can orchestrate both VM and container workloads, the same compute nodes can be used for both VMs and containers.

Telcos and CSPs see a similar need to deploy networking applications as containers.

There are a few differences between containers for enterprise applications and containers for networking applications. Networking applications are of three types: management applications, control-plane applications, and data-plane applications. Management and control-plane applications are similar to enterprise applications, but data-plane applications differ in the following aspects:

- Multiple virtual network interfaces

- Multiple IP addresses

- SR-IOV networking support

- Programmable virtual switch (for service function chaining, tapping traffic for visibility, etc.)

Since both VM and container workloads are used for networking applications, there is a need for:

- Sharing networks across VMs and containers.

- Sharing volumes across VMs and containers.

Proposal 1 and Proposal 2: feedback from the community

Please see the slides attached in the Slides/links section for detailed information on Proposal 1 and Proposal 2.

In summary:

Proposal 1: All orchestration information is represented as TOSCA-based service templates. There are no cloud-technology-specific artifacts. All information about VNFs, VDUs, VLs, and volumes is represented as per the ETSI SOL specifications. In this proposal, ONAP, with the help of Multi-Cloud, translates the TOSCA representation of each VNF/VDU/VL/volume into cloud-technology-specific API information before issuing cloud-technology-specific API calls.

Proposal 2: In this proposal, service orchestration information is represented in TOSCA grammar, but most VNFD/VDU/VL/volume information is represented in cloud-technology-specific artifacts. These artifacts are part of the VNF portion of the CSAR. Since the target cloud region is unknown when the VNF is onboarded, the CSAR must contain multiple cloud-technology-specific artifacts, and ONAP chooses the right one at run time. Cloud-technology-specific artifacts include HOT for OpenStack, ARM for Azure, and K8S deployment information for K8S.
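Under Proposal 2, ONAP has to pick the matching artifact out of the CSAR once the target cloud region is known. The Python sketch below illustrates only that selection step. The cloud-type keys, the artifact-type labels, and the `VnfPackage`/`select_artifact` names are hypothetical and not part of any ONAP API; artifact bodies are kept as opaque strings, matching the idea that ONAP does not interpret them.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from a cloud region's technology to the artifact type
# packaged in the VNF portion of the CSAR (labels are illustrative only).
ARTIFACT_TYPE_BY_CLOUD = {
    "openstack": "HOT",
    "azure": "ARM",
    "k8s": "K8S_DEPLOYMENT",
}


@dataclass
class VnfPackage:
    """Simplified, hypothetical view of the VNF part of a CSAR."""
    name: str
    # artifact type -> opaque artifact content (never parsed here)
    artifacts: dict = field(default_factory=dict)


def select_artifact(package: VnfPackage, cloud_type: str) -> str:
    """Return the artifact matching the cloud region chosen at run time."""
    try:
        artifact_type = ARTIFACT_TYPE_BY_CLOUD[cloud_type]
    except KeyError:
        raise ValueError(f"unsupported cloud technology: {cloud_type}") from None
    if artifact_type not in package.artifacts:
        # The package was onboarded without an artifact for this technology.
        raise LookupError(
            f"{package.name} has no {artifact_type} artifact for {cloud_type}"
        )
    return package.artifacts[artifact_type]
```

For example, a package carrying both a HOT template and K8S deployment YAML returns the K8S artifact for a K8S region and raises `LookupError` for an Azure region, because no ARM template was packaged. Failing loudly instead of falling back to another artifact reflects that the artifacts are not interchangeable.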

Though Proposal 1 is ideal, Proposal 2 is chosen for this PoC for pragmatic reasons. Some of the reasons are:

- Representing Kubernetes deployments in TOSCA is not popular today. Many container vendors are comfortable providing K8S deployment YAML files or Helm charts.

- Current TOSCA standards don't expose all the capabilities of K8S. TOSCA-based container standards may take a long time to be standardized and agreed upon by the community, but there is a need to support K8S-based cloud regions now.

- The K8S community is very active, and the features supported by different K8S providers can differ from one another. VNF vendors are aware of this and create VNF images that take advantage of those features. ONAP should not hinder these deployments.

Project Description:

This effort will investigate and drive a way for ONAP to deploy and manage Network Services/VNFs on cloud regions that use the K8S orchestrator.

This project enhances ONAP to support VNFs as containers in addition to VNFs as VMs.

This project is treated as a PoC project for Casablanca.

Though it may identify changes required in the VNF package, SDC, OOF, SO, and Modeling, it will not try to enhance these projects as part of this effort.

This project is described in terms of its API capabilities and functionality.

- Create a Multi-Cloud plugin service that interacts with Cloud regions supporting K8S

- VNF Bring up:

- API: Exposes an API to the upper layers of ONAP to bring up a VNF.

- Currently, Proposal 2 (see the presentation attached in the Slides/links section) seems to be the choice.

- Information expected by this plugin:

- K8S deployment information (in a form understood by K8S), which is opaque to the rest of ONAP. This information is normally expected to be provided in the CSAR as part of VNF onboarding.

- TBD: Is this artifact passed to Multi-Cloud by reference, or is it passed as immediate data from the upper layers of ONAP?

- Metadata collected by the upper layers of ONAP:

- Cloud region ID

- Set of compute profiles (one for each VDU within the VNF).

- TBD: Is there anything else to be passed?


- Functionality:

- Instantiate VNFs that consist only of VMs.

- Instantiate VNFs that consist only of containers.

- Instantiate multiple VNFs (some realized as VMs and some as containers) that communicate with each other on the same networks (External Connection Points of different VNFs could be on the same network).

- A reference to the newly brought-up VNF is stored in A&AI (it is needed when the VNF must be brought down or its deployment modified).

- TBD: Should it populate A&AI with a reference to each VM and container instance of the VNF, or is one reference to the entire VNF instance good enough? If a reference to each VM/container instance must be stored in A&AI, some exploration is required to see whether this information is made available by the K8S API or whether the plugin should watch for events from K8S.

- TBD: Is there any other information (for example, the IP address of each VM/container instance) that this plugin is expected to populate in the A&AI DB?


- VNF Bring down:

- API: Exposes an API to the upper layers of ONAP to terminate a VNF.

- Functionality: Based on a request from the upper ONAP layers, it terminates the VNF that was created earlier.

- Scaling within VNF:

- It leaves the decision of scaling out and scaling in the services of the VNF to the K8S controller at the cloud region.

- TBD: How will configuration life-cycle management be taken care of?

- Should the plugin watch for new replicas being created by K8S and inform APPC, which in turn sends the configuration?

- Or should the new instance being brought up talk to APPC (or another component) to get the latest configuration?

- Healing & Workload Movement (Not part of Casablanca)

- No API is expected, as it is assumed that the K8S master at the cloud region will take care of this.

- TBD: Is there any information to be populated in A&AI when healing or workload movement occurs at the cloud region?

- VNF scaling: (Not part of Casablanca)

- API: Scaling of the entire VNF

- Similar to VNF bring-up.


- Create Virtual Link:

- API: Exposes an API to create a virtual link.

- Metadata

- Opaque information (since OVN and SR-IOV are chosen, the opaque information passed to the plugin is suitable for creating networks and subnets according to the OVN/SR-IOV controller capabilities)

- A reference to the newly created network is added to A&AI.

- If the network already exists, its use count is expected to be incremented.

- Functionality:

- Creates network if it does not exist.

- Using the OVN/SR-IOV CNI API, it populates remote DHCP/DNS servers.

- TBD: Need to understand the OVN and SR-IOV controller capabilities and determine the functionality of this API in this plugin.


- Delete Virtual Link:

- API: Exposes an API to delete a virtual network.

- Functionality:

- If there are no references to this network (use count is 0), it deletes the virtual network using the OVN/SR-IOV controllers.

- Create persistent volume

- Create a volume that needs to exist across the VNF life cycle.

- Delete persistent volume

- Delete the volume.
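The create/delete virtual link rules above amount to reference counting on shared networks. The Python sketch below models only that bookkeeping, under the assumption that each delete request releases one reference and the network is removed when the count drops to 0. `VirtualLink`, `VirtualLinkManager`, and their methods are hypothetical names; the in-memory dict stands in for both the OVN/SR-IOV controllers and the A&AI records, which a real plugin would call instead.

```python
from dataclasses import dataclass


@dataclass
class VirtualLink:
    """A network shared by one or more VNFs (hypothetical model)."""
    name: str
    opaque_config: dict  # handed to the OVN/SR-IOV controller unparsed
    use_count: int = 0


class VirtualLinkManager:
    """In-memory sketch of the create/delete virtual link rules.

    The dict below stands in for the OVN/SR-IOV controllers and the
    A&AI references; a real plugin would call those systems instead.
    """

    def __init__(self):
        self._links = {}  # name -> VirtualLink

    def create(self, name, opaque_config):
        """Create the network if it does not exist, then count one more user."""
        link = self._links.get(name)
        if link is None:
            # First request for this network: "create" it and record it.
            link = VirtualLink(name, opaque_config)
            self._links[name] = link
        link.use_count += 1
        return link

    def delete(self, name):
        """Release one reference; return True if the network was removed."""
        link = self._links.get(name)
        if link is None:
            raise KeyError(f"unknown virtual link: {name}")
        link.use_count -= 1
        if link.use_count == 0:
            # No VNF references the network any more, so remove it.
            del self._links[name]
            return True
        return False

    def exists(self, name):
        return name in self._links
```

If the vFW and a second VNF both request `protected-net`, the first `delete` returns `False` because the network is still in use, and the second returns `True` once the last reference is released.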


NOTE: The following sections are yet to be updated.

Goal and scope

The first container/COE target is K8S, but other container/COE technologies, e.g. Docker Swarm, are not precluded. If volunteers step up for them, they will also be addressed.

- Have ONAP take advantage of container/COE technology for the cloud-native era

- Utilize industry momentum and direction for container/COE

- Influence and provide feedback to related technologies (e.g. TOSCA, container/COE)

- Teach ONAP container/COE, not only OpenStack, so that VNFs can be deployed and run over container/COE in a cloud-native way

At the same time, it's important to keep ONAP working and not break it.

- Avoid changing the existing components and workflows, aiming for (mostly) zero impact.

- Leverage the existing interfaces and the integration points where possible

Functionality

- Allow ONAP to manage VNFs in containers through container orchestration, in the same way as with a VM-based VIM.

- Allow closed-loop feedback and policy.

- Allow container-based VNFs to be designed.

- Allow container-based VNFs to be configured and monitored.

- Use Kubernetes cloud infrastructure as the initial container orchestration technology under the Multi-Cloud project.

API/Interfaces

Swagger API:


The following table summarizes the impact on other projects:

| Component | Comment |
|---|---|
| Modeling | New data-model names to describe K8S nodes/COE instead of compute/OpenStack. Modeling for K8S is already being discussed. |
| OOF | New policy to use a COE to run VNFs in containers. |
| A&AI/ESR | Schema extensions to represent K8S data (key-value pairs). |
| Multicloud | New plugin for COE/K8S. (Depending on the community discussion, ARIA and Helm support need to be considered, but this is contained within the Multi-Cloud project.) |

First target for first release

The scope for Beijing is:

- A first baby step to support containers in a Kubernetes cluster via a Multi-Cloud SBI/plugin

- A minimal implementation with zero impact on the MVP of the Multi-Cloud Beijing work

Use Cases

Sample VNFs (vFW and vDNS)

Integration scenario:

- Register/unregister a K8S cluster instance that is already deployed (dynamic deployment of K8S is out of scope)

- Onboard VNFD/NSD to use containers

- Instantiate/de-instantiate containerized VNFs through the K8S plugin in a K8S cluster

- VNF configuration with sample VNFs (vFW, vDNS)
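Because dynamic deployment of K8S is out of scope, registration only has to record clusters that already exist. The Python sketch below shows that bookkeeping under stated assumptions: `K8sCloudRegion` and `CloudRegionRegistry` are hypothetical names, keying regions by (cloud owner, region ID) is an assumption loosely modeled on how ONAP identifies cloud regions, and the API endpoint is just a stored string. A real implementation would more likely persist this in A&AI/ESR than in memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class K8sCloudRegion:
    """Hypothetical record for a K8S cluster that is already running."""
    cloud_owner: str
    region_id: str
    api_endpoint: str  # URL of the existing K8S API server


class CloudRegionRegistry:
    """In-memory registry of pre-deployed K8S clusters.

    Clusters are only registered and unregistered, never created,
    so no K8S installation logic lives here.
    """

    def __init__(self):
        self._regions = {}  # (cloud_owner, region_id) -> K8sCloudRegion

    def register(self, region):
        key = (region.cloud_owner, region.region_id)
        if key in self._regions:
            raise ValueError(f"region already registered: {key}")
        self._regions[key] = region

    def unregister(self, cloud_owner, region_id):
        # dict.pop without a default raises KeyError for unknown regions.
        return self._regions.pop((cloud_owner, region_id))

    def lookup(self, cloud_owner, region_id):
        # Returns None when the region is not registered.
        return self._regions.get((cloud_owner, region_id))
```

Rejecting a duplicate registration, rather than silently overwriting it, keeps a second registration from replacing the endpoint of a cluster that VNFs may already be using.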

Targets for later releases

- Installer/test/integration

- More container orchestration technologies

- More than sample VNFs

- Delegating functionalities to COE/K8S

Non-goals/out of scope

The following are non-goals or out of scope for this proposal.

- Installer/deployment: running ONAP itself over containers

- OOM project: ONAP on Kubernetes

- https://wiki.onap.org/pages/viewpage.action?pageId=3247305

- https://wiki.onap.org/display/DW/ONAP+Operations+Manager+Project

- Self-hosting/management might be possible, but it would be a further phase.

- Container/COE deployment

- On-demand installation of container/COE on public clouds/VMs/bare metal as part of cloud deployment

- This is also out of scope for now.

- For ease of use/deployment, this will be a further phase.

Architecture Alignment

How does this project fit into the rest of the ONAP Architecture?

The architecture is (or will be) designed as an enhancement to existing projects.

It doesn't introduce new dependencies.

How does this align with external standards/specifications?

The TOSCA model is converted to each container orchestrator's northbound APIs in some ONAP component. This is to be discussed.

Are there dependencies with other open source projects?

Kubernetes pod API or other container northbound APIs

CNCF (Cloud Native Computing Foundation), OCI (Open Container Initiative), CNI (Container Network Interface)

UseCases

- sample VNF(vFW and vDNS): In Beijing only deploying those VNF over CoE

- other potential usecases(vCPE) are addressed after Beijing release.

the work flow to register k8s instance is depicted as follows

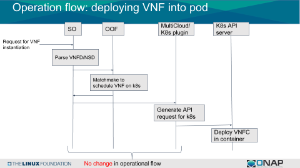

the work flow to deploy VNF into pod is as follows

Other Information:

Link to seed code (if applicable): N/A

Vendor Neutral

If the proposal comes from an existing proprietary codebase, have you ensured that all proprietary trademarks, logos, product names, etc., have been removed?

Meets Board policy (including IPR)

Use the above information to create a key project facts section on your project page

Key Project Facts:

This project will be a sub-project of the Multi-Cloud project. Isaku will lead this effort under the umbrella of the Multi-Cloud project.

NOTE: If this effort is a sub-project of Multi-Cloud, as the Architecture Subcommittee recommended, these facts will be the same as Multi-Cloud's.

| Facts | Info |
|---|---|
| PTL (first and last name) | Same as the Multi-Cloud project |
| Jira Project Name | Same as the Multi-Cloud project |
| Jira Key | Same as the Multi-Cloud project |
| Project ID | Same as the Multi-Cloud project |
| Link to Wiki Space | Support for K8S (Kubernetes) based Cloud regions |

Release Components Name:

Note: Refer to an existing project for details on how to fill out this table.

| Components Name | Components Repository name | Maven Group ID | Components Description |
|---|---|---|---|
| container | multicloud/container | org.onap.multicloud.container | Container orchestration engine cloud infrastructure |

Resources committed to the Release:

Note 1: No more than 5 committers per project. Balance the committers list and avoid members representing only one company.

Note 2: It is critical to complete all the information requested, that will help to fast forward the onboarding process.

| Role | First Name Last Name | Linux Foundation ID | Email Address | Location |
|---|---|---|---|---|
| Committer | Isaku Yamahata | yamahata | isaku.yamahata@gmail.com | PT (Pacific time zone) |
| Contributors | Munish Agarwal | | Munish.Agarwal@ericsson.com | |
| | Bin Hu | bh526r | bh526r@att.com | |
| | Manjeet S. Bhatia | manjeets | | |
| | Phuoc Hoang | hoangphuocbk | phuoc.hc@dcn.ssu.ac.kr | |
| | Mohamed ElSerngawy | melserngawy | mohamed.elserngawy@kontron.com | EST |
| Interested (will attend first meeting on 20180206); part of OOM and logging projects | | michaelobrien | frank.obrien@amdocs.com | EST (GMT-5) |
| | Victor Morales | electrocucaracha | victor.morale@intel.com | PST |