Draft. Withdrawn as a standalone project; it will be handled as a sub-project of OOM.

Project Name:

- Proposed name for the project: ONAP Operations Manager / ONAP on Containers

- Proposed name for the repository: oom/containers

Project description:

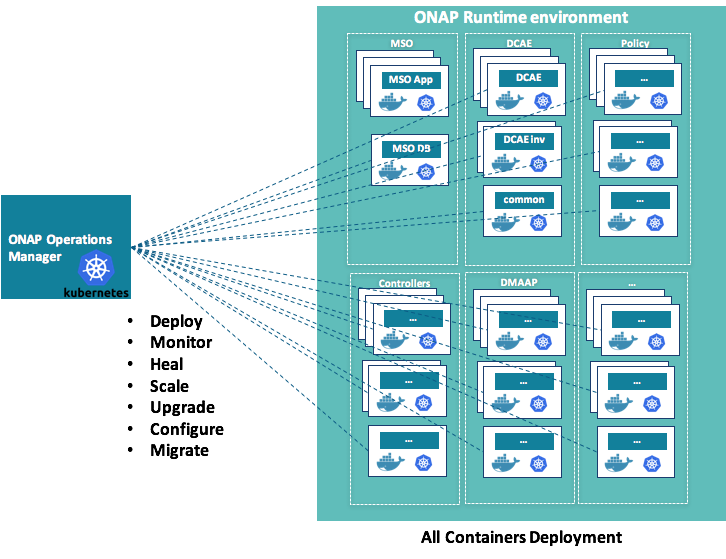

This project describes a deployment and orchestration option for the ONAP platform components (MSO, SDNC, DCAE, etc.) based on Docker containers and the open-source Kubernetes container management system. This solution removes the need for VMs to be deployed on the servers hosting ONAP components and allows Docker containers to directly run on the host operating system. As ONAP uses Docker containers presently, minimal changes to existing ONAP artifacts will be required.

The primary benefits of this approach are as follows:

- Life-cycle Management. Kubernetes is a comprehensive system for managing the life-cycle of containerized applications. Its use as a platform manager will ease the deployment of ONAP, provide fault tolerance and horizontal scalability, and enable seamless upgrades.

- Hardware Efficiency. As opposed to VMs that require a guest operating system be deployed along with the application, containers provide similar application encapsulation with neither the computing, memory and storage overhead nor the associated long term support costs of those guest operating systems. An informal goal of the project is to be able to create a development deployment of ONAP that can be hosted on a laptop.

- Deployment Speed. Eliminating the guest operating system results in containers coming into service much faster than a VM equivalent. This advantage can be particularly useful for ONAP where rapid reaction to inevitable failures will be critical in production environments.

- Cloud Provider Flexibility. A Kubernetes deployment of ONAP enables hosting the platform on multiple hosted cloud solutions like Google Compute Engine, AWS EC2, Microsoft Azure, CenturyLink Cloud, IBM Bluemix and more.

In no way does this project impair or undervalue the VM deployment methodology currently used in ONAP. Selection of an appropriate deployment solution is left to the ONAP user.

The ONAP on Containers project is part of the ONAP Operations Manager project and focuses on (as shown in green):

- Converting ONAP component deployments to Docker containers

- Orchestrating ONAP components life cycle using Kubernetes

As part of the OOM project, it will manage the lifecycle of individual containers on the ONAP runtime environment.

Challenges

Although the current structure of ONAP lends itself to a container-based manager, the following challenges need to be overcome to complete this project:

- Duplicate containers – The VM structure of ONAP hides internal container structure from each of the components, including the existence of duplicate containers such as MariaDB.

- DCAE - The DCAE component is not containerized and also includes its own VM orchestration system. A possible solution is to not use the DCAE Controller but to port this controller’s policies to Kubernetes directly, such as scaling CDAP nodes to match offered capacity.

- Ports - Flattening the containers also exposes port conflicts between the containers, which need to be resolved.

- Persistent Volumes - One or more persistent volumes need to be established to hold non-ephemeral configuration and state data.

- Configuration Parameters - Currently ONAP configuration parameters are stored in multiple files; a solution to coordinate these configuration parameters is required. Kubernetes ConfigMaps may provide a full or at least partial solution to this problem.

- Container Dependencies – ONAP has built-in temporal dependencies between containers on startup. Supporting these dependencies will likely result in multiple Kubernetes deployment specifications.
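Two of the challenges above, port conflicts after flattening and startup dependencies between containers, can be illustrated with a minimal Python sketch. The container names, port numbers, and dependency edges below are hypothetical and are not taken from any ONAP artifact; the sketch only shows how such an inventory could be checked before Kubernetes deployment specifications are written.

```python
from collections import defaultdict
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical inventory: container name -> host ports it expects to bind.
# Names and port numbers are illustrative only.
CONTAINER_PORTS = {
    "mso-mariadb": [3306],
    "sdnc-mariadb": [3306],
    "mso": [8080],
    "sdnc": [8282],
}

# Hypothetical startup dependencies: container -> containers that must start first.
STARTS_AFTER = {
    "mso": {"mso-mariadb"},
    "sdnc": {"sdnc-mariadb"},
    "mso-mariadb": set(),
    "sdnc-mariadb": set(),
}


def find_port_conflicts(container_ports):
    """Return {port: sorted container names} for ports claimed more than once."""
    claimed = defaultdict(list)
    for name, ports in container_ports.items():
        for port in ports:
            claimed[port].append(name)
    return {port: sorted(names) for port, names in claimed.items() if len(names) > 1}


def startup_order(starts_after):
    """Return one valid startup order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(starts_after).static_order())


if __name__ == "__main__":
    print(find_port_conflicts(CONTAINER_PORTS))
    print(startup_order(STARTS_AFTER))
```

In this toy inventory the two database containers both claim port 3306, which is the kind of conflict that stays hidden while each component runs in its own VM. The ordering function only guarantees that every container appears after the containers it depends on; how that order is mapped onto deployment specifications is left open.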

Scope:

- In scope: ONAP Operations Manager implementation using docker containers and kubernetes, i.e.

- Platform Deployment: Automated deployment/un-deployment of ONAP instance(s) / Automated deployment/un-deployment of individual platform components using docker containers & kubernetes

- Platform Monitoring & healing: Monitor platform state, Platform health checks, fault tolerance and self-healing using docker containers & kubernetes

- Platform Scaling: Platform horizontal scalability using docker containers & kubernetes

- Platform Upgrades: Platform upgrades using docker containers & kubernetes

- Platform Configurations: Manage overall platform components configurations using docker containers & kubernetes

- Platform migrations: Manage migration of platform components using docker containers & kubernetes

- Out of scope: support of container networking for VNFs. The project is about containerization of the ONAP platform itself.
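As an illustration of the Platform Configurations item, the following Python sketch merges parameters that today live in several per-component files into a single Kubernetes ConfigMap manifest. The component names, parameter keys, and the ConfigMap name `onap-shared-config` are hypothetical; only the manifest layout (`apiVersion`, `kind`, `metadata`, `data` with string values) follows the Kubernetes v1 ConfigMap resource.

```python
import json

# Hypothetical per-component parameter files, already parsed into dicts.
COMPONENT_PARAMS = {
    "mso": {"db.host": "mariadb", "db.port": "3306"},
    "sdnc": {"db.host": "mariadb", "log.level": "INFO"},
}


def merge_params(component_params):
    """Merge per-component parameters, rejecting keys with conflicting values."""
    merged = {}
    for component, params in component_params.items():
        for key, value in params.items():
            value = str(value)  # ConfigMap data values must be strings
            if key in merged and merged[key] != value:
                raise ValueError(
                    f"conflicting values for {key!r} (seen again in {component})"
                )
            merged[key] = value
    return merged


def config_map_manifest(name, data):
    """Build a Kubernetes v1 ConfigMap manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": data,
    }


if __name__ == "__main__":
    manifest = config_map_manifest("onap-shared-config", merge_params(COMPONENT_PARAMS))
    # Kubernetes accepts manifests in JSON as well as YAML.
    print(json.dumps(manifest, indent=2))
```

Raising an error on conflicting values is one possible policy; a real solution might instead namespace keys per component.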

Architecture Alignment:

- How does this project fit into the rest of the ONAP Architecture?

- Please Include architecture diagram if possible

- What other ONAP projects does this project depend on?

- ONAP Operations Manager (OOM) [Formerly called ONAP Controller]: The ONAP on Containers project is a sub-project of OOM focusing on docker/kubernetes management of the ONAP platform components

- The current proposed "System Integration and Testing" (Integration) Project might have a dependency on this project - use OOM to deploy/undeploy/change the test environments, including creation of the container layer.

This project also has a dependency on the LF infrastructure (seed code from the ci-management project).

- How does this align with external standards/specifications?

- N/A

- Are there dependencies with other open source projects?

- Docker

- Kubernetes

Resources:

- Primary Contact Person: David Sauvageau (Bell Canada)

- Roger Maitland (Amdocs)

- Jérôme Doucerain (Bell Canada)

- Marc-Alexandre Choquette (Bell Canada)

- Alexis De Talhouët (Bell Canada)

- Mike Elliott (Amdocs)

- Mandeep Khinda (Amdocs)

- Catherine Lefevre (AT&T)

- John Ng (AT&T)

- Arthur Berezin (Gigaspaces)

- John Murray (AT&T)

- Christopher Rath (AT&T)

- Éric Debeau (Orange)

- David Blaisonneau (Orange)

- Alon Strikovsky (Amdocs)

- Yury Novitsky (Amdocs)

- Eliyahu Noach (Amdocs)

- Elhay Efrat (Amdocs)

- Xin Miao (Futurewei)

- Josef Reisinger (IBM)

- Jochen Kappel (IBM)

- Jason Hunt (IBM)

- Earle West (AT&T)

- Hong Guan (AT&T)

Other Information:

- link to seed code (if applicable)

Docker/kubernetes seed code available from Bell Canada & AMDOCS - Waiting for repo availability.

aai/aai-data - AAI Chef environment files

aai/logging-service - AAI common logging library

aai/model-loader - Loads SDC Models into A&AI

appc/deployment - APPC docker deployment

ci-management - Management repo for Jenkins Job Builder, builder scripts and management related to the CI configuration.

mso/mso-config - mso-config Chef cookbook

dcae/apod/buildtools - Tools for building and packaging DCAE Analytics applications for deployment

dcae/apod/cdap - DCAE Analytics' CDAP cluster installation

dcae/operation - DCAE Operational Tools

dcae/operation/utils - DCAE Logging Library

dcae/utils - DCAE utilities

dcae/utils/buildtools - DCAE utility: package building tool

mso/chef-repo - Berkshelf environment repo for mso/mso-config

mso/docker-config - MSO Docker composition and lab config template

ncomp/docker - SOMF Docker Adaptor

policy/docker - Contains the Policy Dockerfiles and docker compose script for building Policy Component docker images.

sdnc/oam - SDNC OAM

More seed code on docker deployments and kubernetes configurations to be provided by Bell Canada/Amdocs shortly.

- Vendor Neutral

- if the proposal is coming from an existing proprietary codebase, have you ensured that all proprietary trademarks, logos, product names, etc., have been removed?

- Meets Board policy (including IPR)

Use the above information to create a key project facts section on your project page

Key Project Facts

Project Name:

- JIRA project name: ONAP Operations Manager / ONAP on Containers

- JIRA project prefix: oom/containers

Repo name: oom/containers

Lifecycle State: Incubation

Primary Contact: David Sauvageau

Project Lead: David Sauvageau

Mailing list tag: oom-containers

Committers (Name - Email - IRC):

- Jérôme Doucerain - jerome.doucerain@bell.ca

- Alexis de Talhouët - alexis.de_talhouet@bell.ca - adetalhouet

- Mike Elliott - mike.elliott@amdocs.com

- Mandeep Khinda - mandeep.khinda@amdocs.com

- Éric Debeau - eric.debeau@orange.com

- Xiaolong Kong - xiaolong.kong@orange.com

- John Murray - jfm@research.att.com

- Alon Strikovsky - Alon.Strikovsky@amdocs.com

- Yury Novitsky - Yury.Novitsky@Amdocs.com

- Eliyahu Noach - Eliyahu.Noach@amdocs.com

- Elhay Efrat - Elhay.Efrat1@amdocs.com

- Xin Miao - xin.miao@huawei.com

- Julien Bertozzi - jb379x@att.com

- Christopher Closset - cc697w@intl.att.com

- Earle West - ew8463@att.com (AT&T)

- Hong Guan - hg4105@att.com

Link to TSC approval:

Link to approval of additional submitters: Jochen Kappel

10 Comments

Christopher Price

This is a great idea. I would suggest looking into https://github.com/openstack/loci where OpenStack is working on the same principles, using the build system to auto-generate lightweight OCI container images. These can then be used (both locally and by other consumers) directly as compact container images. Maybe a good process to look at as part of this project.

Christopher Price

Another approach would be to use LXC as it allows you to seamlessly deploy either in containers or to baremetal using the same CI pipeline. This is the approach of the XCi work being used through OPNFV, OpenDaylight and FD.io at the moment primarily due to the flexibility allowed.

Earle West

It seems to me the use of containers (specifically Docker containers), and/or VMs (linux or not), is a question that should be entirely separated from the DEFINITION of the ONAP Operations Manager. One might expect VNF designers and the designers of services using VNFs might indeed want to optimize their own designs to use some combination of these and/or go for a common type of compute vehicle. One can readily imagine VNF designs that --when optimized-- use both (likely DB on VMs and web GUI on Docker). Indeed, a transition plan on the part of VNF vendors might reasonably begin with VM and end with Docker over a product life cycle.

In this context --of having this kind of choice-- one would expect an OOM to handle the both of these, and future evolutionary solutions that stem from advances in virtualization (I'm thinking of AWS Lambda service) as an example of extreme virtualization.

Michael O'Brien

Earle, I agree, the implementation and specification of the orchestrator could be distinct and decoupled so either can vary. Currently, the switch to event-driven Function as a Service as the most cost-effective model for sparse clients (where running even an auto-scaled persistent tiny set of VMs is too much cost overhead) favours AWS Lambda or the ECS container service. We should think about supporting client models where containers are run as "Containers as a Service" and pushed to the provider, like Netflix, where a VM is spun up per client request: if there is no client traffic, only network infrastructure stays up (at almost no cost), and no VMs or event-driven functions run until they are required.

However this would be a future feature because of the large size/scope of the change.

20170526: Jason, Roger, Yes, I agree - the scope for this project/iteration is specifically ONAP.

Jason Hunt

I like the discussion about how VNFs might evolve over time to be run in containers or even serverless/FaaS environments.

For the purposes of this project proposal, though, the scope is only the ONAP components themselves, not the VNFs. As I understand it, this is about running SDC, SO, APPC, and all the other components on a containers-based environment for ease of installation, management, scaling, etc. The VNFs would still run on the VIM, and it would be the modeling, SO, controllers, and multi-VIM projects that would have to deal with VNFs that could run in containers, VMs, or serverless environments.

Someone please correct me if my understanding is not accurate.

Roger Maitland

Jason, you are correct, this project considers only the ONAP components and not the service-providing VNFs, which is a much larger project.

Earle West

I'll capitulate on this round, but my comments on the CCF project above suggest we may have arbitrarily divided the world into "so" orchestration of VNFs, and ONAP-OM orchestration of the ONAP solution. It seems to me that at the center of orchestration is a common set of powerful (CCF) tools that configure things for operational use, whether they are VNFs, or ONAP components. In that context, even VNFs would benefit from serverless/FaaS and/or Dockerized components. Indeed, I'd suggest VNFs (or their APP-Cs) might be among the first to be dockerized.

Jason Hunt

Earle – I agree that ONAP will eventually need to figure out how to address VNFs that are delivered as containers or in serverless environments. Hopefully, when ONAP needs to handle containerized VNFs, we can leverage lessons learned from this project to build that support – I believe that is also your point? My guess is that we'll be able to containerize most of ONAP long before we see many containerized VNFs.

Steven Wright

Jason,

The VNF Requirements project may be a place to start considering future requirements for containerized VNFs.

Giulio Graziani

Intel has contributed the following new functionality to Kubernetes that some of the ONAP apps/components may be able to leverage depending on their needs:

1) Multus Container Networking Interface (CNI) Plugin

2) Single Root I/O virtualization (SR-IOV) CNI Plugin

3) Node Feature Discovery (NFD) (available on GitHub)

4) CPU Core Manager for Kubernetes (Open-sourcing in progress)

More information on these features can be found here: https://builders.intel.com/docs/networkbuilders/enabling_new_features_in_kubernetes_for_NFV.pdf