
Overview

For the ONAP SDN-R load and stress tests and the proof of concept (June 19), a three-node SDN-R cluster is used. The ONAP version is El Alto.

...


OpenStack Preparation

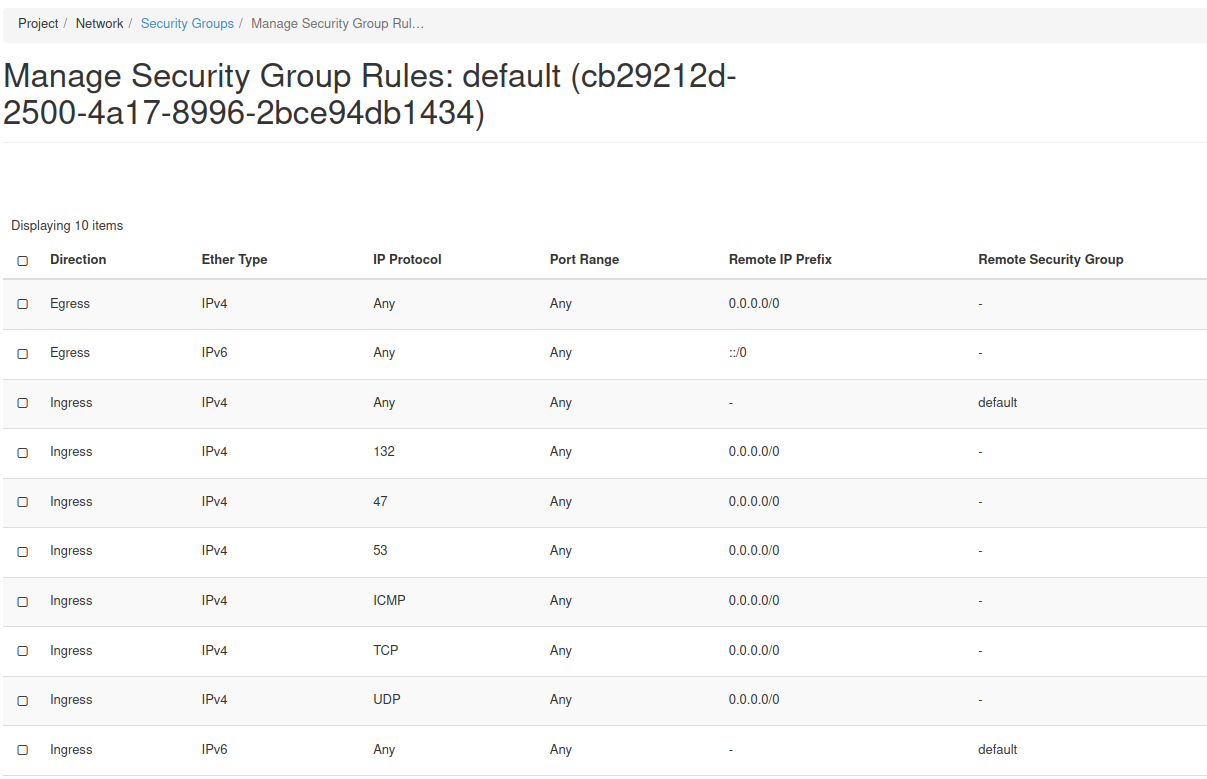

Required Security Group Rules

Installing Kubernetes and Rancher

Create the three-node Rancher control cluster, named onap-control, on OpenStack

The following instructions describe how to create 3 OpenStack VMs to host the Highly-Available Kubernetes Control Plane. ONAP workloads will not be scheduled on these Control Plane nodes.

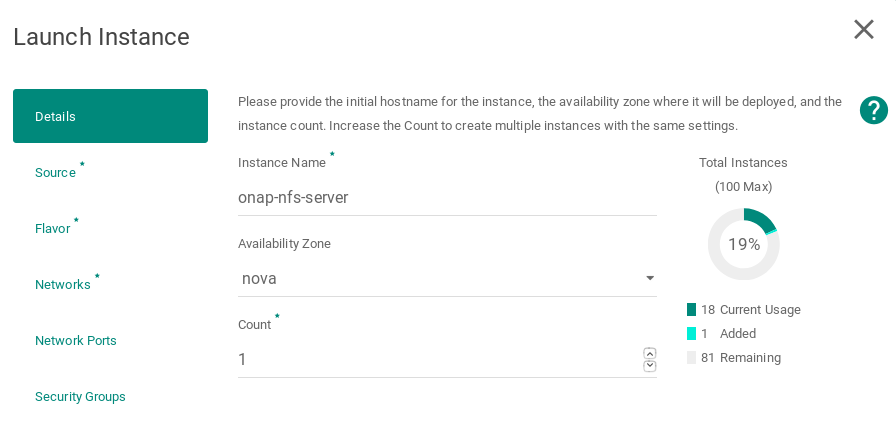

Launch new VMs in OpenStack.

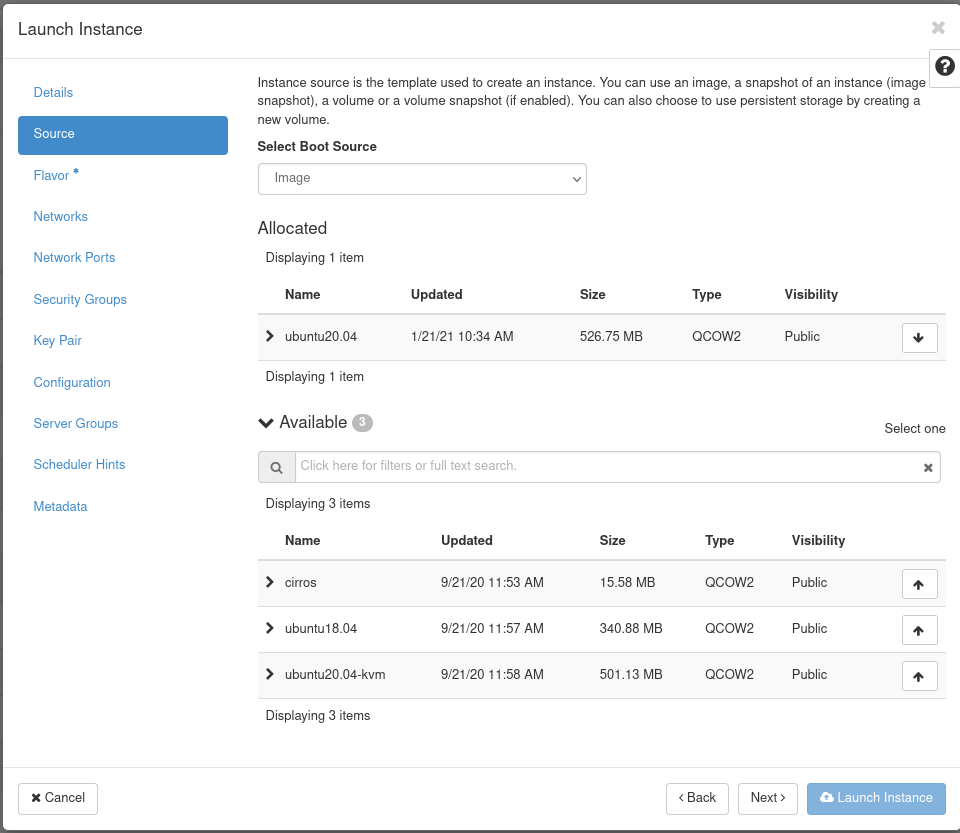

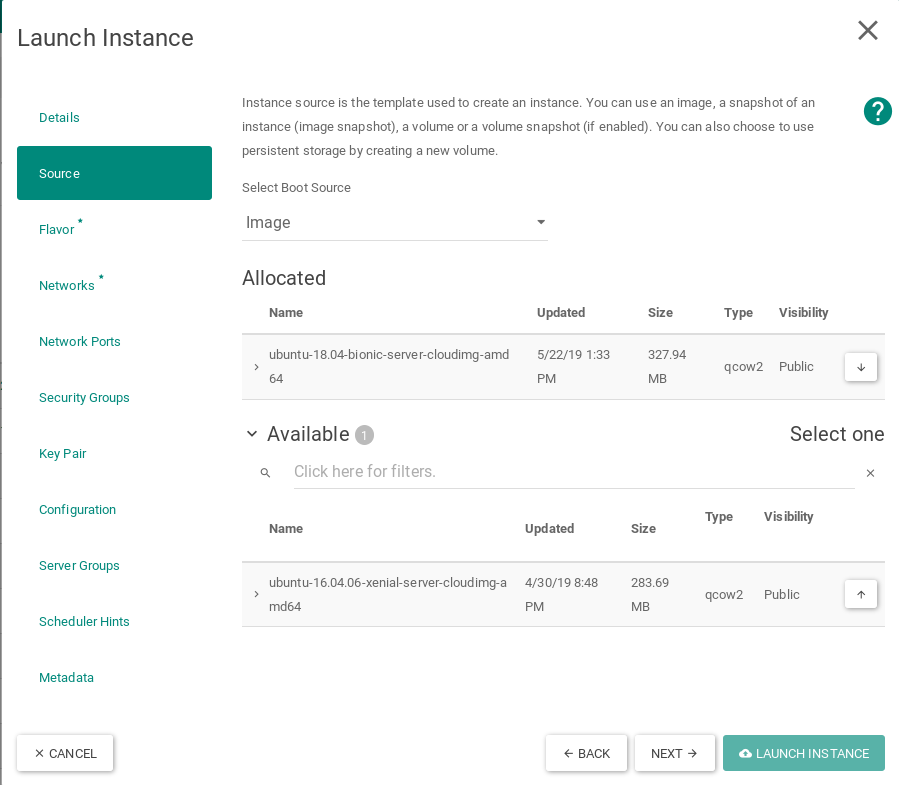

Select Ubuntu 20.04 as the boot image for the virtual machine, without any volume.

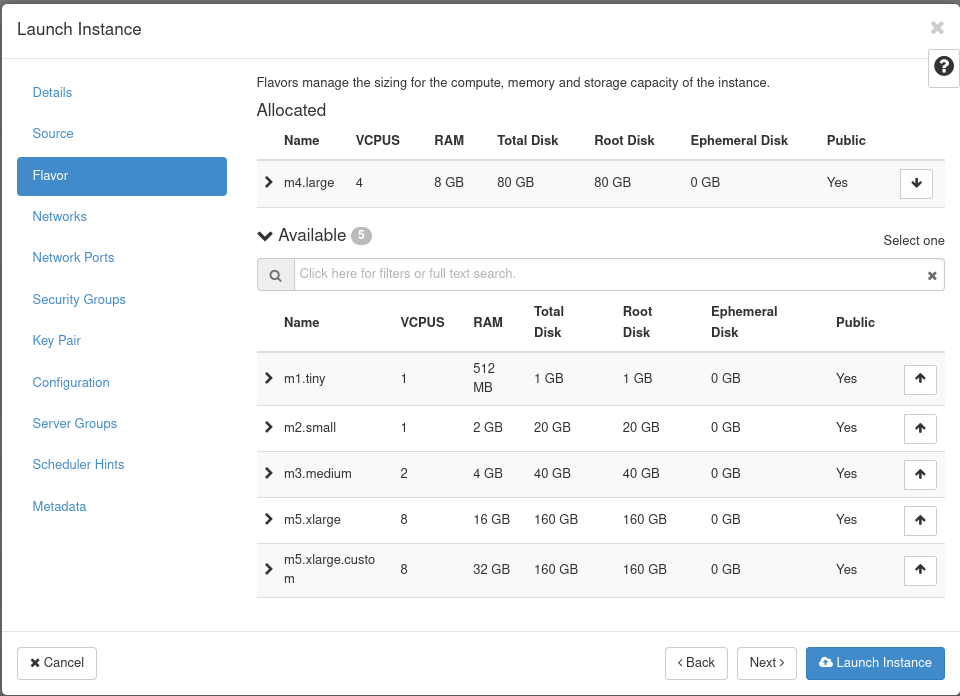

Select the m4.large flavor.

Networking

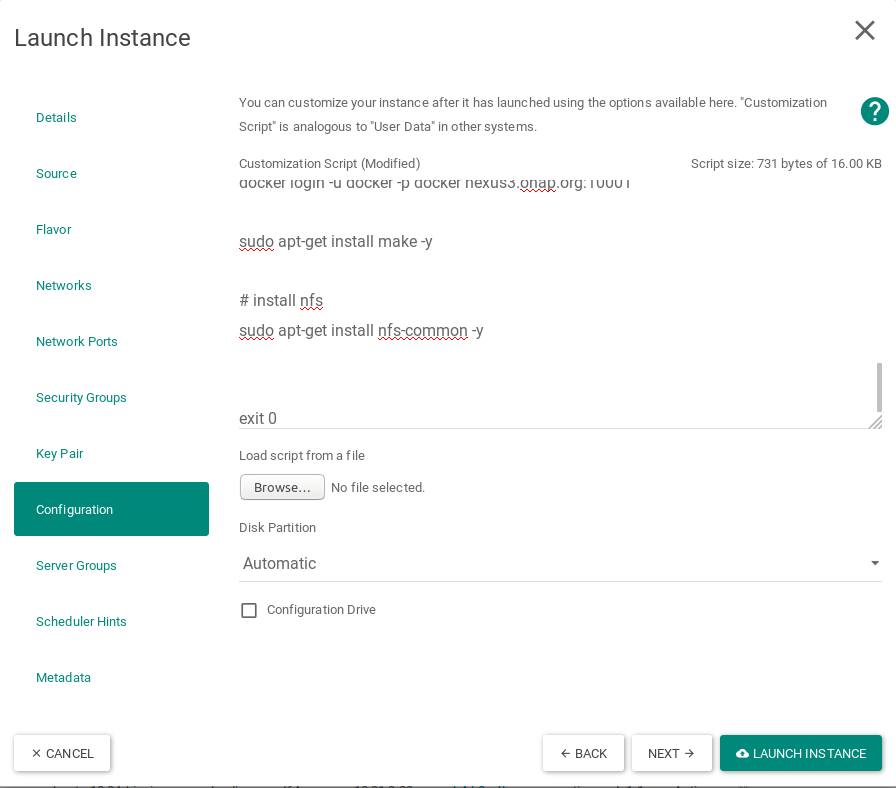

Apply customization script for Control Plane VMs

The script to be copied:

| Code Block |

|---|

#!/bin/bash

DOCKER_VERSION=19.03.15

sudo apt-get update

curl https://releases.rancher.com/install-docker/$DOCKER_VERSION.sh | sh

cat > /etc/docker/daemon.json << EOF

{

"insecure-registries" : [ "nexus3.onap.org:10001" ],

"log-driver": "json-file",

"log-opts": {

"max-size": "1m",

"max-file": "9"

},

"mtu": 1450,

"ipv6": true,

"fixed-cidr-v6": "2001:db8:1::/64",

"registry-mirrors": ["https://nexus3.onap.org:10001"]

}

EOF

sudo usermod -aG docker ubuntu

sudo systemctl daemon-reload

sudo systemctl restart docker

sudo apt-mark hold docker-ce

IP_ADDR=`ip address |grep ens|grep inet|awk '{print $2}'| awk -F / '{print $1}'`

HOSTNAME=`hostname`

echo "$IP_ADDR $HOSTNAME" >> /etc/hosts

docker login -u docker -p docker nexus3.onap.org:10001

sudo apt-get install make -y

#nfs server

sudo apt-get install nfs-kernel-server -y

sudo mkdir -p /dockerdata-nfs

sudo chown nobody:nogroup /dockerdata-nfs/

exit 0 |

...

This customization script will:

- Update Ubuntu

- Install Docker and hold the Docker version at 19.03.15

- Add the VM's IP address and hostname to the hosts file

- Install nfs-kernel-server
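After the first boot, a few quick checks can confirm that the customization script took effect. The following is a minimal sketch, not part of the original procedure: it assumes it is run on the VM itself, and the helper names `has_hosts_entry` and `check_node` are hypothetical.

```shell
#!/bin/bash
# Sketch: post-boot checks for a VM prepared with the customization script.
# Run on the VM itself. has_hosts_entry and check_node are hypothetical helpers.

# has_hosts_entry <hosts_file> <hostname>  succeeds if a non-comment line
# maps some address to exactly that hostname.
has_hosts_entry() {
  awk -v h="$2" '
    $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
    END { exit !found }' "$1"
}

# Prints the state of what the customization script is expected to set up.
check_node() {
  echo "Docker server version: $(docker version --format '{{.Server.Version}}' 2>/dev/null)"
  if apt-mark showhold | grep -qx docker-ce; then
    echo "docker-ce is held"
  else
    echo "WARNING: docker-ce is not held"
  fi
  if has_hosts_entry /etc/hosts "$(hostname)"; then
    echo "/etc/hosts has an entry for $(hostname)"
  else
    echo "WARNING: /etc/hosts has no entry for $(hostname)"
  fi
}
```

Running `check_node` on a control plane VM should report Docker server version 19.03.15. If the ubuntu user has not logged in again since being added to the docker group, the version may come back empty until the next login.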

Launched Instances

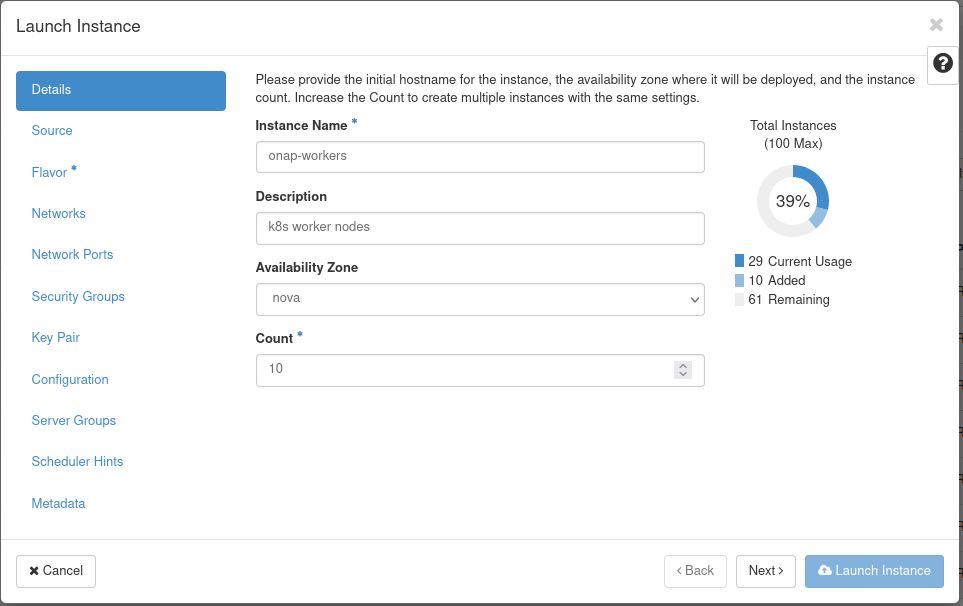

Create the 12-node Kubernetes worker cluster, named onap-workers, on the OpenStack cloud

The following instructions describe how to create OpenStack VMs to host the Highly-Available Kubernetes Workers. ONAP workloads will only be scheduled on these nodes.

Launch new VM instances in OpenStack

Select Ubuntu 20.04 as base image

Select Flavor

The size of the Kubernetes hosts depends on the size of the ONAP deployment being installed.

If only a small subset of ONAP applications is being deployed (e.g. for testing purposes), 16GB or 32GB may be sufficient.

Networking

Apply customization script for Kubernetes VM(s)

The script to be copied:

| Code Block |

|---|

#!/bin/bash

DOCKER_VERSION=19.03.15

sudo apt-get update

curl https://releases.rancher.com/install-docker/$DOCKER_VERSION.sh | sh

cat > /etc/docker/daemon.json << EOF

{

"insecure-registries" : [ "nexus3.onap.org:10001" ],

"log-driver": "json-file",

"log-opts": {

"max-size": "1m",

"max-file": "9"

},

"mtu": 1450,

"ipv6": true,

"fixed-cidr-v6": "2001:db8:1::/64",

"registry-mirrors": ["https://nexus3.onap.org:10001"]

}

EOF

sudo usermod -aG docker ubuntu

sudo systemctl daemon-reload

sudo systemctl restart docker

sudo apt-mark hold docker-ce

IP_ADDR=`ip address |grep ens|grep inet|awk '{print $2}'| awk -F / '{print $1}'`

HOSTNAME=`hostname`

echo "$IP_ADDR $HOSTNAME" >> /etc/hosts

docker login -u docker -p docker nexus3.onap.org:10001

sudo apt-get install make -y

# install nfs

sudo apt-get install nfs-common -y

exit 0 |

...

This customization script will:

- Update Ubuntu

- Install Docker and hold the Docker version at 19.03.15

- Insert the hostname and IP address in the hosts file

- Install nfs-common
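Both customization scripts derive IP_ADDR from the output of `ip address`: they keep lines that mention an `ens*` interface and contain `inet`, take the second field (address/prefix) and strip the prefix length. The sketch below wraps that same pipeline, unchanged, in a hypothetical function that reads from stdin so it can be tried against sample output.

```shell
#!/bin/bash
# Sketch: the IP_ADDR pipeline from the customization scripts, unchanged,
# wrapped in a hypothetical function that reads `ip address` output on stdin.
extract_ens_ipv4() {
  # keep ens* lines with an address, take "addr/prefix", drop "/prefix"
  grep ens | grep inet | awk '{print $2}' | awk -F / '{print $1}'
}
```

Usage: `ip address | extract_ens_ipv4`. If the VM's network interface is not named `ens*` (for example `eth0`), the pipeline prints nothing and the line appended to /etc/hosts contains only the hostname, so it is worth checking the interface name on the chosen image.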

Launched onap-workers instances

Configure Rancher Kubernetes Engine

Install RKE

Download and install RKE on the onap-control-01 VM. Binaries can be found here for Linux and Mac: https://github.com/rancher/rke/releases/download/v1.2.7/
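A minimal sketch for fetching the binary, assuming the Linux asset on the v1.2.7 release page is named `rke_linux-amd64` and that the cluster files are kept in `~/rke`, as in the transcripts on this page. The function names are hypothetical.

```shell
#!/bin/bash
# Sketch: download RKE on onap-control-01.
# Assumption: the Linux asset on the GitHub release page is named rke_linux-amd64
# (rke_darwin-amd64 for Mac). Function names are hypothetical.
RKE_VERSION=v1.2.7

# Prints the download URL for a given RKE version and OS ("linux" or "darwin").
rke_url() {
  echo "https://github.com/rancher/rke/releases/download/$1/rke_$2-amd64"
}

# Downloads the binary into ~/rke, makes it executable and prints its version.
# Note: this leaves the current shell in ~/rke.
install_rke() {
  mkdir -p "$HOME/rke" && cd "$HOME/rke" || return 1
  wget -O rke "$(rke_url "$RKE_VERSION" linux)" || return 1
  chmod +x rke
  ./rke --version
}
```

Call `install_rke`, then place cluster.yml in ~/rke next to the binary.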

...

| Code Block |

|---|

# An example of an HA Kubernetes cluster for ONAP
nodes:
  - address: 10.31.4.11
    port: "22"
    role:
      - controlplane
      - etcd
    hostname_override: "onap-control-01"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.12
    port: "22"
    role:
      - controlplane
      - etcd
    hostname_override: "onap-control-02"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.13
    port: "22"
    role:
      - controlplane
      - etcd
    hostname_override: "onap-control-03"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.21
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-01"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.22
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-02"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.23
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-03"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.24
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-04"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.25
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-05"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.26
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-06"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.27
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-07"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.28
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-08"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.29
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-09"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
  - address: 10.31.4.30
    port: "22"
    role:
      - worker
    hostname_override: "onap-workers-10"
    user: ubuntu
    ssh_key_path: "~/.ssh/id_ecdsa"
services:
  kube-api:
    service_cluster_ip_range: 10.43.0.0/16
    pod_security_policy: false
    always_pull_images: false
  kube-controller:
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  kubelet:
    cluster_domain: cluster.local
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
network:
  plugin: canal
authentication:
  strategy: x509
ssh_key_path: "~/.ssh/id_ecdsa"
ssh_agent_auth: false
authorization:
  mode: rbac
ignore_docker_version: false
kubernetes_version: "v1.19.9-rancher1-1"
private_registries:
  - url: nexus3.onap.org:10001
    user: docker
    password: docker
    is_default: true
cluster_name: "onap"
restore:
  restore: false
  snapshot_name: "" |

Prepare cluster.yml

Before this configuration file can be used, the IP address of each control and worker node must be set in it.
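With 13 nodes, editing the nodes section by hand is error-prone. The sketch below prints that section from lists of IP addresses, using the same field layout, user and key path as the example above; the helpers `node_entry` and `gen_nodes` are hypothetical, and the onap-control-NN / onap-workers-NN naming is assumed from the example.

```shell
#!/bin/bash
# Sketch: generate the "nodes:" section of cluster.yml from IP address lists.
# Field layout, user and key path mirror the example cluster.yml above; the
# helper names and the onap-control-NN / onap-workers-NN naming are assumptions.
SSH_USER=ubuntu
SSH_KEY_PATH='~/.ssh/id_ecdsa'

# node_entry <ip> <hostname> <role>...  prints one list item under "nodes:".
node_entry() {
  local ip="$1" name="$2" role
  shift 2
  printf '  - address: %s\n    port: "22"\n    role:\n' "$ip"
  for role in "$@"; do
    printf '      - %s\n' "$role"
  done
  printf '    hostname_override: "%s"\n    user: %s\n    ssh_key_path: "%s"\n' \
    "$name" "$SSH_USER" "$SSH_KEY_PATH"
}

# gen_nodes "<control plane IPs>" "<worker IPs>"  (whitespace-separated lists)
gen_nodes() {
  local i=1 ip
  echo "nodes:"
  for ip in $1; do
    node_entry "$ip" "$(printf 'onap-control-%02d' "$i")" controlplane etcd
    i=$((i + 1))
  done
  i=1
  for ip in $2; do
    node_entry "$ip" "$(printf 'onap-workers-%02d' "$i")" worker
    i=$((i + 1))
  done
}
```

For the cluster above, `gen_nodes "10.31.4.11 10.31.4.12 10.31.4.13" "$(seq -f '10.31.4.%g' 21 30)"` prints a nodes section that can replace the one in cluster.yml.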

Run RKE

From within the same directory as the cluster.yml file, simply execute:

...

| Code Block |

|---|

ubuntu@onap-control-01:~/rke$ ./rke up
INFO[0000] Running RKE version: v1.2.7
INFO[0000] Initiating Kubernetes cluster
INFO[0000] [dialer] Setup tunnel for host [10.31.4.1126]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.29]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2524]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.1227]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2125]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.1311]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2322]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.30]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2821]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2713]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2628]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2412]
INFO[0000] [dialer] Setup tunnel for host [10.31.4.2223]
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.13]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.12]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.25]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.29]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.21]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.30]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.23]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.27]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.28]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Failed to set up SSH tunneling for host [10.31.4.24]: Can't retrieve Docker Info: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
WARN[0050] Removing host [10.31.4.13] from node lists
WARN[0050] Removing host [10.31.4.12] from node lists
WARN[0050] Removing host [10.31.4.25] from node lists
WARN[0050] Removing host [10.31.4.29] from node lists
WARN[0050] Removing host [10.31.4.21] from node lists
WARN[0050] Removing host [10.31.4.30] from node lists
WARN[0050] Removing host [10.31.4.23] from node lists
WARN[0050] Removing host [10.31.4.27] from node lists
WARN[0050] Removing host [10.31.4.28] from node lists
WARN[0050] Removing host [10.31.4.24] from node lists
INFO[0050] Checking if container [cluster-state-deployer] is running on host [10.31.4.26], try #1
INFO[0051] Pulling image [nexus3.onap.org:10001/rancher/rke-tools:v0.1.72] on host [10.31.4.26], try #1
INFO[0057] Image [nexus3.onap.org:10001/rancher/rke-tools:v0.1.72] exists on host [10.31.4.26]
INFO[0057] Starting container [cluster-state-deployer] on host [10.31.4.26], try #1
INFO[0058] [state] Successfully started [cluster-state-deployer] container on host [10.31.4.26]
INFO[0058] Checking if container [cluster-state-deployer] is running on host [10.31.4.11], try #1
INFO[0059] Pulling image [nexus3.onap.org:10001/rancher/rke-tools:v0.1.72] on host [10.31.4.11], try #1
INFO[0064] Image [nexus3.onap.org:10001/rancher/rke-tools:v0.1.72] exists on host [10.31.4.11]
INFO[0065] Starting container [cluster-state-deployer] on host [10.31.4.11], try #1
INFO[0065] [state] Successfully started [cluster-state-deployer] container on host [10.31.4.11]
INFO[0065] Checking if container [cluster-state-deployer] is running on host [10.31.4.22], try #1
INFO[0066] Pulling image [nexus3.onap.org:10001/rancher/rke-tools:v0.1.72] on host [10.31.4.22], try #1
INFO[0070] Image [nexus3.onap.org:10001/rancher/rke-tools:v0.1.72] exists on host [10.31.4.22]
INFO[0071] Starting container [cluster-state-deployer] on host [10.31.4.22], try #1
INFO[0071] [state] Successfully started [cluster-state-deployer] container on host [10.31.4.22]
INFO[0071] [certificates] Generating CA kubernetes certificates
INFO[0072] [certificates] Generating Kubernetes API server aggregation layer requestheader client CA certificates
INFO[0072] [certificates] GenerateServingCertificate is disabled, checking if there are unused kubelet certificates
INFO[0072] [certificates] Generating Kubernetes API server certificates
INFO[0072] [certificates] Generating Service account token key
INFO[0072] [certificates] Generating Kube Controller certificates
INFO[0072] [certificates] Generating Kube Scheduler certificates
INFO[0072] [certificates] Generating Kube Proxy certificates
INFO[0073] [certificates] Generating Node certificate
INFO[0073] [certificates] Generating admin certificates and kubeconfig
INFO[0073] [certificates] Generating Kubernetes API server proxy client certificates
INFO[0073] [certificates] Generating kube-etcd-10-31-4-11 certificate and key
INFO[0073] Successfully Deployed state file at [./cluster.rkestate]
...
INFO[0168] [sync] Successfully synced nodes Labels and Taints
INFO[0168] [network] Setting up network plugin: canal
INFO[0168] [addons] Saving ConfigMap for addon rke-network-plugin to Kubernetes
INFO[0168] [addons] Successfully saved ConfigMap for addon rke-network-plugin to Kubernetes
INFO[0168] [addons] Executing deploy job rke-network-plugin
INFO[0178] [addons] Setting up coredns
INFO[0178] [addons] Saving ConfigMap for addon rke-coredns-addon to Kubernetes
INFO[0178] [addons] Successfully saved ConfigMap for addon rke-coredns-addon to Kubernetes
INFO[0178] [addons] Executing deploy job rke-coredns-addon
INFO[0183] [addons] CoreDNS deployed successfully
INFO[0183] [dns] DNS provider coredns deployed successfully
INFO[0183] [addons] Setting up Metrics Server
INFO[0183] [addons] Saving ConfigMap for addon rke-metrics-addon to Kubernetes
INFO[0183] [addons] Successfully saved ConfigMap for addon rke-metrics-addon to Kubernetes
INFO[0183] [addons] Executing deploy job rke-metrics-addon
INFO[0188] [addons] Metrics Server deployed successfully
INFO[0188] [ingress] Setting up nginx ingress controller
INFO[0188] [addons] Saving ConfigMap for addon rke-ingress-controller to Kubernetes
INFO[0188] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes
INFO[0188] [addons] Executing deploy job rke-ingress-controller
INFO[0199] [ingress] ingress controller nginx deployed successfully
INFO[0199] [addons] Setting up user addons
INFO[0199] [addons] no user addons defined
INFO[0199] Finished building Kubernetes cluster successfully |
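In the log above, several hosts were dropped from the node lists after RKE could not reach their Docker daemon. A small helper such as the hypothetical `removed_hosts` below lists those hosts from a saved log; it assumes the output of `rke up` was captured to a file, for example with `./rke up 2>&1 | tee rke-up.log`.

```shell
#!/bin/bash
# Sketch: list the hosts that "rke up" removed from its node lists.
# Assumes the RKE output was saved to a file, e.g. ./rke up 2>&1 | tee rke-up.log
# removed_hosts is a hypothetical helper name.
removed_hosts() {
  # Match every "Removing host [x.x.x.x]" fragment, keep the bracketed
  # address, strip the brackets and de-duplicate.
  grep -o 'Removing host \[[^]]*]' "$1" | awk '{print $3}' | sed 's/[][]//g' | sort -u
}
```

Any host it prints was not configured in that run; Docker should be checked on those hosts before running ./rke up again.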

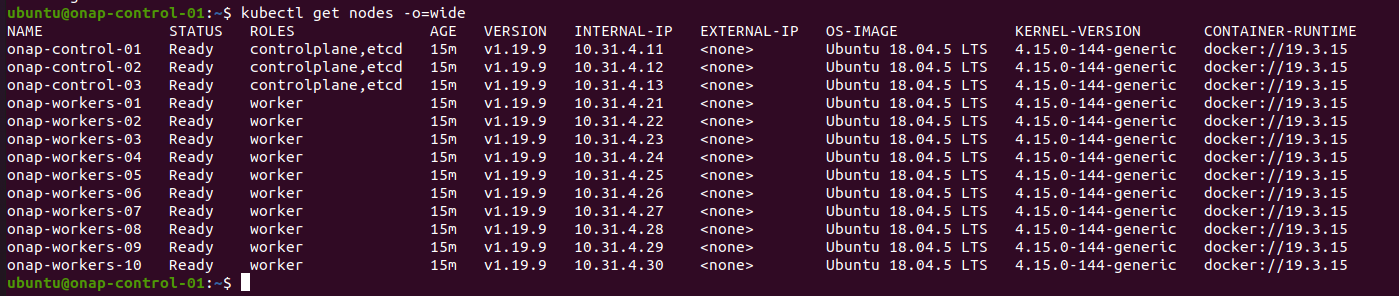

Validate RKE deployment

Copy the file "kube_config_cluster.yml" to the .kube directory in the home directory of the onap-control-01 VM.

| Code Block |

|---|

ubuntu@onap-control-01:~/rke$ cd

ubuntu@onap-control-01:~$ mkdir .kube

ubuntu@onap-control-01:~$ cp rke/kube_config_cluster.yml .kube/

ubuntu@onap-control-01:~$ cd .kube/

ubuntu@onap-control-01:~/.kube$ ll

total 16

drwxrwxr-x 2 ubuntu ubuntu 4096 Jun 14 15:09 ./

drwxr-xr-x 8 ubuntu ubuntu 4096 Jun 14 15:09 ../

-rw-r----- 1 ubuntu ubuntu 5375 Jun 14 15:09 kube_config_cluster.yml

ubuntu@onap-control-01:~/.kube$ mv kube_config_cluster.yml config

ubuntu@onap-control-01:~/.kube$ ll

total 16

drwxrwxr-x 2 ubuntu ubuntu 4096 Jun 14 15:10 ./

drwxr-xr-x 8 ubuntu ubuntu 4096 Jun 14 15:09 ../

-rw-r----- 1 ubuntu ubuntu 5375 Jun 14 15:09 config |
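The same result can be reached in one step. This is a minimal sketch, assuming the kubeconfig is still at ~/rke/kube_config_cluster.yml as in the transcript; `install_kubeconfig` is a hypothetical helper. It uses `install -m 600` so the credentials in the file are readable only by their owner, and `mkdir -p` so it can be re-run safely.

```shell
#!/bin/bash
# Sketch: install the RKE-generated kubeconfig as ~/.kube/config.
# install_kubeconfig is a hypothetical helper; the default source path follows
# the transcript above (~/rke/kube_config_cluster.yml).
install_kubeconfig() {
  local src="${1:-$HOME/rke/kube_config_cluster.yml}"
  mkdir -p "$HOME/.kube"                      # no error if it already exists
  install -m 600 "$src" "$HOME/.kube/config"  # owner read/write only
}
```

Unlike the `mv` in the transcript, this keeps the original file in ~/rke, which RKE needs again for later `rke up` runs.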

On the "onap-control-1" VM:

| Code Block |

|---|

ubuntu@onap-control-1:~$ mkdir .kube

ubuntu@onap-control-1:~$ mv kube_config_cluster.yml .kube/config

ubuntu@onap-control-1:~$ kubectl config set-context --current --namespace=onap |

Perform the above operations on all control and worker nodes as well, so that kubectl and helm commands can be run on them:

| Code Block |

|---|

mkdir .kube

mv kube_config_cluster.yml .kube/config

kubectl config set-context --current --namespace=onap |
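The kubeconfig first has to reach the other nodes. The sketch below copies it from onap-control-01 to the home directory of each listed node as kube_config_cluster.yml, which is where the commands above expect it. It assumes the ubuntu user and the SSH key used in cluster.yml work for every node; `push_kubeconfig` and `run` are hypothetical helpers, and `DRY_RUN=1` prints the scp commands instead of running them.

```shell
#!/bin/bash
# Sketch: copy ~/.kube/config from onap-control-01 to other nodes so that the
# mkdir/mv/set-context commands above can be run there.
# Assumptions: the ubuntu user and the SSH key from cluster.yml work on every
# node; push_kubeconfig and run are hypothetical helpers.
SSH_KEY="${SSH_KEY:-$HOME/.ssh/id_ecdsa}"

# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# push_kubeconfig <ip>...  copies the file as ~/kube_config_cluster.yml on each node.
push_kubeconfig() {
  local ip
  for ip in "$@"; do
    run scp -i "$SSH_KEY" "$HOME/.kube/config" "ubuntu@$ip:kube_config_cluster.yml"
  done
}
```

In bash, `DRY_RUN=1 push_kubeconfig 10.31.4.12 10.31.4.13` previews the copies; running it again without DRY_RUN performs them.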

Verify the Kubernetes cluster

| Code Block |

|---|

ubuntu@onap-control-1:~$ kubectl get nodes -o=wide |

Result:

Initialize Kubernetes Cluster for use by Helm

Perform this on the onap-control-1 VM only, during the first setup.

| Code Block |

|---|

kubectl -n kube-system create serviceaccount tiller

kubectl create clusterrolebinding tiller --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

helm init --stable-repo-url https://charts.helm.sh/stable --service-account tiller

kubectl -n kube-system rollout status deploy/tiller-deploy

helm repo remove stable

|

Perform this on the other onap-control nodes:

| Code Block |

|---|

helm init --stable-repo-url https://charts.helm.sh/stable --client-only

helm repo remove stable |

Setting up the NFS share for a multi-node Kubernetes cluster:

Deploying applications to a Kubernetes cluster requires the Kubernetes nodes to share a common, distributed filesystem. In this tutorial, we will set up an NFS master and configure all worker nodes of the Kubernetes cluster to act as NFS slaves (clients).

It is recommended that a separate VM, outside of the Kubernetes cluster, be used. This ensures that the NFS master does not compete for resources with the Kubernetes control plane or worker nodes.

Launch new NFS Server VM instance

Select Ubuntu 18.04 as base image

Select Flavor

Networking

Apply customization script for NFS Server VM

Script to be added:

| Code Block |

|---|

#!/bin/bash

DOCKER_VERSION=18.09.5

export DEBIAN_FRONTEND=noninteractive

apt-get update

curl https://releases.rancher.com/install-docker/$DOCKER_VERSION.sh | sh

cat > /etc/docker/daemon.json << EOF

{

"insecure-registries" : [ "nexus3.onap.org:10001","10.20.6.10:30000" ],

"log-driver": "json-file",

"log-opts": {

"max-size": "1m",

"max-file": "9"

},

"mtu": 1450,

"ipv6": true,

"fixed-cidr-v6": "2001:db8:1::/64",

"registry-mirrors": ["https://nexus3.onap.org:10001"]

}

EOF

sudo usermod -aG docker ubuntu

systemctl daemon-reload

systemctl restart docker

apt-mark hold docker-ce

IP_ADDR=`ip address |grep ens|grep inet|awk '{print $2}'| awk -F / '{print $1}'`

HOSTNAME=`hostname`

echo "$IP_ADDR $HOSTNAME" | sudo tee -a /etc/hosts > /dev/null

docker login -u docker -p docker nexus3.onap.org:10001

sudo apt-get install make -y

# install nfs

sudo apt-get install nfs-common -y

sudo apt update

exit 0

|

This customization script will:

- Install Docker and hold the Docker version at 18.09.5

- Insert the hostname and IP address of the onap-nfs-server in the hosts file

- Install nfs-common (the NFS server package, nfs-kernel-server, is installed by master_nfs_node.sh below)

Resulting example

Configure NFS Share on Master node

Log in to onap-nfs-server and run the commands below.

Create a master_nfs_node.sh file as below:

| Code Block |

|---|

#!/bin/bash

usage () {

echo "Usage:"

echo " ./$(basename $0) node1_ip node2_ip ... nodeN_ip"

exit 1

}

if [ "$#" -lt 1 ]; then

echo "Missing NFS slave nodes"

usage

fi

#Install NFS kernel

sudo apt-get update

sudo apt-get install -y nfs-kernel-server

#Create /dockerdata-nfs and set permissions

sudo mkdir -p /dockerdata-nfs

sudo chmod 777 -R /dockerdata-nfs

sudo chown nobody:nogroup /dockerdata-nfs/

#Update the /etc/exports

NFS_EXP=""

for i in "$@"; do

NFS_EXP+="$i(rw,sync,no_root_squash,no_subtree_check) "

done

echo "/dockerdata-nfs $NFS_EXP" | sudo tee -a /etc/exports

#Restart the NFS service

sudo exportfs -a

sudo systemctl restart nfs-kernel-server

|
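To review the export entry before the script appends it, the same line can be built without touching the system. The hypothetical `exports_line` below follows the loop in master_nfs_node.sh (without its trailing space).

```shell
#!/bin/bash
# Sketch: print the /etc/exports entry that master_nfs_node.sh would append,
# without modifying the system. exports_line is a hypothetical helper.
# exports_line <worker_ip>...
exports_line() {
  local line="/dockerdata-nfs" ip
  for ip in "$@"; do
    line="$line $ip(rw,sync,no_root_squash,no_subtree_check)"
  done
  echo "$line"
}
```

After the script has run, `sudo exportfs -v` shows the exports that are actually active.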

Make the file created above executable and run it on onap-nfs-server, passing the IP addresses of the worker nodes:

| Code Block |

|---|

chmod +x master_nfs_node.sh

sudo ./master_nfs_node.sh {list kubernetes worker nodes ip}

# Example from the WinLab setup:

sudo ./master_nfs_node.sh 10.31.3.39 10.31.3.24 10.31.3.52 10.31.3.8 10.31.3.34 10.31.3.47 10.31.3.15 10.31.3.9 10.31.3.5 10.31.3.21 10.31.3.1 10.31.3.68 |

Log in to each Kubernetes worker node (i.e. the onap-k8s VMs) and run the commands below.

Create a slave_nfs_node.sh file as below:

| Code Block |

|---|

#!/bin/bash

usage () {

echo "Usage:"

echo " ./$(basename $0) nfs_master_ip"

exit 1

}

if [ "$#" -ne 1 ]; then

echo "Missing NFS master node"

usage

fi

MASTER_IP=$1

#Install NFS common

sudo apt-get update

sudo apt-get install -y nfs-common

#Create NFS directory

sudo mkdir -p /dockerdata-nfs

#Mount the remote NFS directory to the local one

sudo mount $MASTER_IP:/dockerdata-nfs /dockerdata-nfs/

echo "$MASTER_IP:/dockerdata-nfs /dockerdata-nfs nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0" | sudo tee -a /etc/fstab

|

Make the file created above executable and run it on all worker nodes:

| Code Block |

|---|

chmod +x slave_nfs_node.sh

sudo ./slave_nfs_node.sh {master nfs node IP address}

# Example from the WinLab setup:

sudo ./slave_nfs_node.sh 10.31.3.11 |
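Note that slave_nfs_node.sh appends to /etc/fstab on every run, so running it twice leaves a duplicate entry. A minimal sketch of an idempotent alternative, plus a mount check, follows; the helper names are hypothetical, the mount options are copied from the script, and `add_fstab_line` must be run as root when the target file is /etc/fstab.

```shell
#!/bin/bash
# Sketch: idempotent fstab entry and mount check for /dockerdata-nfs.
# Helper names are hypothetical; the mount options are copied from
# slave_nfs_node.sh. Run add_fstab_line as root when the file is /etc/fstab.

# fstab_line <nfs_master_ip>  prints the entry slave_nfs_node.sh writes.
fstab_line() {
  echo "$1:/dockerdata-nfs /dockerdata-nfs nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0"
}

# add_fstab_line <nfs_master_ip> <fstab_file>  appends the entry only if absent.
add_fstab_line() {
  local entry
  entry="$(fstab_line "$1")"
  grep -qxF "$entry" "$2" || echo "$entry" >> "$2"
}

# Reports whether /dockerdata-nfs is currently a mount point.
check_nfs_mount() {
  if mountpoint -q /dockerdata-nfs; then
    echo "/dockerdata-nfs is mounted"
  else
    echo "/dockerdata-nfs is not mounted (try: sudo mount -a)" >&2
    return 1
  fi
}
```

For the WinLab example, a root shell would run `add_fstab_line 10.31.3.11 /etc/fstab` and then `check_nfs_mount`.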

ONAP Installation

Perform the following steps on the onap-control-1 VM.

Clone the OOM helm repository

Use the master branch, as the Dublin branch is not available.

Run these commands from the home directory:

| Code Block |

|---|

git clone -b <branch> "https://gerrit.onap.org/r/oom" --recurse-submodules

mkdir -p ~/.helm

cp -R ~/oom/kubernetes/helm/plugins/ ~/.helm

cd oom/kubernetes/sdnc |

Edit the values.yaml file

| Code Block |

|---|

...
# Add sdnrwt as true at the end of the config
config:
  ...
  sdnrwt: true
|

Add the sdnrwt value under config in the file.

Save the file.

Navigate to the templates folder:

| Code Block |

|---|

cd templates/ |

Edit the statefulset.yaml file

| Code Block |

|---|

...
spec:
  ...
  template:
    ...
    spec:
      ...
      containers:
        - name: {{ include "common.name" . }}
          ...
          # add sdnrwt flag set to true under env
          env:
            ...
            - name: SDNRWT
              value: "{{ .Values.config.sdnrwt}}" |

Add the SDNRWT environment variable and its value reference.

Save the file.

Edit the service.yaml file

| Code Block |

|---|

...
spec:
  type: {{ .Values.service.type }}
  ports:
    {{if eq .Values.service.type "NodePort" -}}
    ...
    - port: {{ .Values.service.externalPort4 }}
      targetPort: {{ .Values.service.internalPort4 }}
      nodePort: {{ .Values.global.nodePortPrefix | default .Values.nodePortPrefix }}{{ .Values.service.nodePort4 }}
      name: "{{ .Values.service.portName }}-8443"
    {{- else -}}
    - port: {{ .Values.service.externalPort }}
      targetPort: {{ .Values.service.internalPort }}
      name: {{ .Values.service.portName }}
    {{- end}}
  selector:
    app: {{ include "common.name" . }}
    release: {{ .Release.Name }}
  type: NodePort
  sessionAffinity: ClientIP
  externalTrafficPolicy: Cluster
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800
status:
  loadBalancer: {}
--- |

Append the type: NodePort, sessionAffinity, sessionAffinityConfig, externalTrafficPolicy and status.loadBalancer parameters to the service.

Copy override files

| Code Block |

|---|

cd

cp -r ~/oom/kubernetes/onap/resources/overrides .

cd overrides/ |

Edit the onap-all.yaml file

| Code Block |

|---|

# Copyright © 2019 Amdocs, Bell Canada
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
###################################################################
# This override file enables helm charts for all ONAP applications.
###################################################################
cassandra:
  enabled: true
mariadb-galera:
  enabled: true
aaf:
  enabled: true
aai:
  enabled: true
appc:
  enabled: true
cds:
  enabled: true
clamp:
  enabled: true
cli:
  enabled: true
consul:
  enabled: true
contrib:
  enabled: true
dcaegen2:
  enabled: true
dmaap:
  enabled: true
esr:
  enabled: true
log:
  enabled: true
sniro-emulator:
  enabled: true
oof:
  enabled: true
msb:
  enabled: true
multicloud:
  enabled: true
nbi:
  enabled: true
policy:
  enabled: true
pomba:
  enabled: true
portal:
  enabled: true
robot:
  enabled: true
sdc:
  enabled: true
sdnc:
  enabled: true
  config:
    sdnrwt: true
so:
  enabled: true
uui:
  enabled: true
vfc:
  enabled: true
vid:
  enabled: true
vnfsdk:
  enabled: true
modeling:
  enabled: true
|

Save the file.

Start helm server

Go to the home directory and start the Helm server and local repository:

| Code Block |

|---|

cd

helm serve & |

If helm serve prints log output, press ENTER to return to the shell prompt.

Add helm repository

Note the port number that is listed and use it in the helm repo add command as follows:

| Code Block |

|---|

helm repo add local http://127.0.0.1:8879 |

Verify helm repository

| Code Block |

|---|

helm repo list |

output:

. . . .

. . . .

. . . .

INFO[0236] [sync] Successfully synced nodes Labels and Taints

INFO[0236] [network] Setting up network plugin: canal

INFO[0236] [addons] Saving ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0236] [addons] Successfully saved ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0236] [addons] Executing deploy job rke-network-plugin

INFO[0241] [addons] Setting up coredns

INFO[0241] [addons] Saving ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0241] [addons] Successfully saved ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0241] [addons] Executing deploy job rke-coredns-addon

INFO[0251] [addons] CoreDNS deployed successfully

INFO[0251] [dns] DNS provider coredns deployed successfully

INFO[0251] [addons] Setting up Metrics Server

INFO[0251] [addons] Saving ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0251] [addons] Successfully saved ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0251] [addons] Executing deploy job rke-metrics-addon

INFO[0256] [addons] Metrics Server deployed successfully

INFO[0256] [ingress] Setting up nginx ingress controller

INFO[0256] [addons] Saving ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0257] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0257] [addons] Executing deploy job rke-ingress-controller

INFO[0262] [ingress] ingress controller nginx deployed successfully

INFO[0262] [addons] Setting up user addons

INFO[0262] [addons] no user addons defined

INFO[0262] Finished building Kubernetes cluster successfully

|


On the "onap-control-01" VM, download kubectl so that kubectl commands can be run against the cluster:

| Code Block |

|---|

ubuntu@onap-control-01:~$ wget https://storage.googleapis.com/kubernetes-release/release/v1.19.9/bin/linux/amd64/kubectl

--2021-06-14 15:27:27-- https://storage.googleapis.com/kubernetes-release/release/v1.19.9/bin/linux/amd64/kubectl

Resolving storage.googleapis.com (storage.googleapis.com)... 142.250.72.112, 142.250.64.112, 142.250.64.80, ...

Connecting to storage.googleapis.com (storage.googleapis.com)|142.250.72.112|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 42987520 (41M) [application/octet-stream]

Saving to: ‘kubectl.1’

kubectl.1 100%[===============================================================================================================>] 41.00M 128MB/s in 0.3s

2021-06-14 15:27:31 (128 MB/s) - ‘kubectl.1’ saved [42987520/42987520]

ubuntu@onap-control-01:~$ chmod +x kubectl

ubuntu@onap-control-01:~$ sudo mv ./kubectl /usr/local/bin/kubectl |
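Optionally, the download can be checked against a published SHA-256 checksum before the binary is moved into place. The helper below is a minimal sketch (`verify_sha256` is hypothetical); it assumes a checksum file is published next to the binary, for kubectl usually under the same URL with a `.sha256` suffix, containing only the hash.

```shell
#!/bin/bash
# Sketch: verify a downloaded file against an expected SHA-256 hash.
# verify_sha256 is a hypothetical helper; it relies on GNU coreutils sha256sum.
# verify_sha256 <file> <expected_hex_hash>  returns 0 only if the hashes match.
verify_sha256() {
  echo "$2  $1" | sha256sum --check --status
}
```

For example, after fetching `kubectl.sha256` from the same release directory, `verify_sha256 kubectl "$(cat kubectl.sha256)"` should succeed before `sudo mv` is run.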

Perform the above operations on all control and worker nodes as well, so that kubectl and helm commands can be run on them.

Create the "onap" namespace and set it as the default namespace in Kubernetes:

| Code Block |

|---|

ubuntu@onap-control-01:~$ kubectl create namespace onap

ubuntu@onap-control-01:~$ kubectl config set-context --current --namespace=onap |

Verify the Kubernetes cluster

| Code Block |

|---|

ubuntu@onap-control-1:~$ kubectl get nodes -o=wide |

Result:

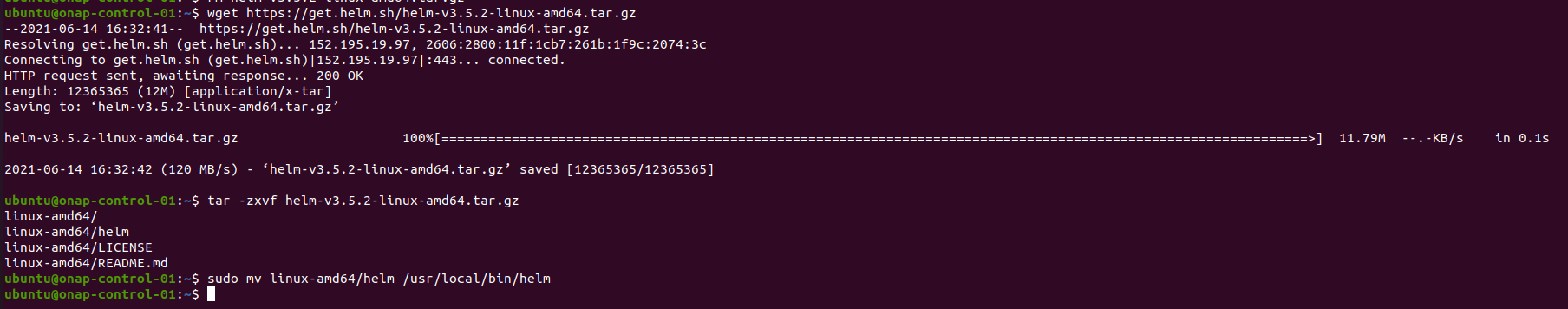
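The node list can also be checked without reading it by eye. The hypothetical `not_ready_nodes` below reads `kubectl get nodes --no-headers` output on stdin and prints every node whose STATUS column is not exactly Ready.

```shell
#!/bin/bash
# Sketch: print the names of nodes whose STATUS is not exactly "Ready".
# Reads "kubectl get nodes --no-headers" output on stdin (NAME is column 1,
# STATUS column 2). not_ready_nodes is a hypothetical helper name.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

Empty output from `kubectl get nodes --no-headers | not_ready_nodes` means all nodes are Ready. A cordoned node (STATUS `Ready,SchedulingDisabled`) is reported as well.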

Install Helm

| Code Block |

|---|

ubuntu@onap-control-01:~$ wget https://get.helm.sh/helm-v3.5.2-linux-amd64.tar.gz

ubuntu@onap-control-01:~$ tar -zxvf helm-v3.5.2-linux-amd64.tar.gz

ubuntu@onap-control-01:~$ sudo mv linux-amd64/helm /usr/local/bin/helm |

Initialize Kubernetes Cluster for use by Helm

Perform this on the onap-control-01 VM only, during the first setup.

Create a folder for the local charts repository on onap-control-01:

| Code Block |

|---|

ubuntu@onap-control-01:~$ mkdir charts; chmod -R 777 charts

|

Run a ChartMuseum server in Docker to serve the local charts:

| Code Block |

|---|

ubuntu@onap-control-01:~$ docker run -d -p 8080:8080 -v $(pwd)/charts:/charts -e DEBUG=true -e STORAGE=local -e STORAGE_LOCAL_ROOTDIR=/charts chartmuseum/chartmuseum:latest |

Install the helm-push plugin for ChartMuseum:

| Code Block |

|---|

ubuntu@onap-control-01:~$ helm plugin install https://github.com/chartmuseum/helm-push.git |

Setting up the NFS share for a multi-node Kubernetes cluster:

Deploying applications to a Kubernetes cluster requires the Kubernetes nodes to share a common, distributed filesystem. In this tutorial, we will set up an NFS master and configure all worker nodes of the Kubernetes cluster to act as NFS slaves (clients).

It is recommended that a separate VM, outside of the Kubernetes cluster, be used. This ensures that the NFS master does not compete for resources with the Kubernetes control plane or worker nodes.

Launch new NFS Server VM instance

Select Ubuntu 18.04 as base image

Select Flavor

Networking

Apply customization script for NFS Server VM

Script to be added:

| Code Block |

|---|

#!/bin/bash

DOCKER_VERSION=18.09.5

export DEBIAN_FRONTEND=noninteractive

apt-get update

curl https://releases.rancher.com/install-docker/$DOCKER_VERSION.sh | sh

cat > /etc/docker/daemon.json << EOF

{

"insecure-registries" : [ "nexus3.onap.org:10001","10.20.6.10:30000" ],

"log-driver": "json-file",

"log-opts": {

"max-size": "1m",

"max-file": "9"

},

"mtu": 1450,

"ipv6": true,

"fixed-cidr-v6": "2001:db8:1::/64",

"registry-mirrors": ["https://nexus3.onap.org:10001"]

}

EOF

sudo usermod -aG docker ubuntu

systemctl daemon-reload

systemctl restart docker

apt-mark hold docker-ce

IP_ADDR=`ip address |grep ens|grep inet|awk '{print $2}'| awk -F / '{print $1}'`

HOSTNAME=`hostname`

echo "$IP_ADDR $HOSTNAME" | sudo tee -a /etc/hosts > /dev/null

docker login -u docker -p docker nexus3.onap.org:10001

sudo apt-get install make -y

# install nfs

sudo apt-get install nfs-common -y

sudo apt update

exit 0

|

This customization script will:

- Install Docker and hold the Docker version at 18.09.5

- Insert the hostname and IP address of the onap-nfs-server in the hosts file

- Install nfs-common (the NFS server package, nfs-kernel-server, is installed by master_nfs_node.sh below)

Resulting example

Configure NFS Share on Master node

Log in to onap-nfs-server and run the commands below.

Create a master_nfs_node.sh file as below:

| Code Block |

|---|

#!/bin/bash

usage () {

echo "Usage:"

echo " ./$(basename $0) node1_ip node2_ip ... nodeN_ip"

exit 1

}

if [ "$#" -lt 1 ]; then

echo "Missing NFS slave nodes"

usage

fi

#Install NFS kernel

sudo apt-get update

sudo apt-get install -y nfs-kernel-server

#Create /dockerdata-nfs and set permissions

sudo mkdir -p /dockerdata-nfs

sudo chmod 777 -R /dockerdata-nfs

sudo chown nobody:nogroup /dockerdata-nfs/

#Update the /etc/exports

NFS_EXP=""

for i in "$@"; do

NFS_EXP+="$i(rw,sync,no_root_squash,no_subtree_check) "

done

echo "/dockerdata-nfs $NFS_EXP" | sudo tee -a /etc/exports

#Restart the NFS service

sudo exportfs -a

sudo systemctl restart nfs-kernel-server

|

Make the file executable and run it on onap-nfs-server, passing the IP addresses of all Kubernetes worker nodes:

| Code Block |

|---|

chmod +x master_nfs_node.sh

sudo ./master_nfs_node.sh {list kubernetes worker nodes ip}

# example from the WinLab setup:

sudo ./master_nfs_node.sh 10.31.4.21 10.31.4.22 10.31.4.23 10.31.4.24 10.31.4.25 10.31.4.26 10.31.4.27 10.31.4.28 10.31.4.29 10.31.4.30 |
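For reference, the loop in master_nfs_node.sh produces a single /etc/exports entry that lists every worker IP with the same export options. The hypothetical helper below builds that entry (without the trailing space the original loop leaves), which is handy for previewing it before running the script; `sudo exportfs -v` on onap-nfs-server then shows what is actually exported.

```shell
#!/bin/bash
# Hypothetical helper mirroring the loop in master_nfs_node.sh: build the
# /etc/exports entry that grants each worker IP read-write access.
build_exports_line() {
  local opts="rw,sync,no_root_squash,no_subtree_check"
  local line="/dockerdata-nfs" ip
  for ip in "$@"; do
    line+=" ${ip}(${opts})"
  done
  printf '%s\n' "$line"
}

# Usage:
#   build_exports_line 10.31.4.21 10.31.4.22   # preview the entry
#   sudo exportfs -v                           # check the active exports afterwards
```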

Log in to each Kubernetes worker node (the onap-k8s VMs) and run the commands below.

Create a file named slave_nfs_node.sh with the following content:

| Code Block | ||

|---|---|---|

| ||

#!/bin/bash

usage () {

echo "Usage:"

echo " ./$(basename $0) nfs_master_ip"

exit 1

}

if [ "$#" -ne 1 ]; then

echo "Missing NFS master node"

usage

fi

MASTER_IP=$1

#Install NFS common

sudo apt-get update

sudo apt-get install -y nfs-common

#Create NFS directory

sudo mkdir -p /dockerdata-nfs

#Mount the remote NFS directory to the local one

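sudo mount "$MASTER_IP:/dockerdata-nfs" /dockerdata-nfs/

#Persist the mount in /etc/fstab (only if no entry exists yet)

grep -q "^$MASTER_IP:/dockerdata-nfs " /etc/fstab || echo "$MASTER_IP:/dockerdata-nfs /dockerdata-nfs nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0" | sudo tee -a /etc/fstab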

|

Make the file executable and run it on every worker node, passing the IP address of the NFS master (onap-nfs-server):

| Code Block |

|---|

chmod +x slave_nfs_node.sh

sudo ./slave_nfs_node.sh {master nfs node IP address}

# example from the WinLab setup:

sudo ./slave_nfs_node.sh 10.31.3.11 |
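To confirm that a worker really has the share mounted, you can look for the NFS entry in /proc/mounts. A minimal sketch; the function name `nfs_mounted` is hypothetical, and it accepts both `nfs` and `nfs4` as the filesystem type because the negotiated NFS version can vary.

```shell
#!/bin/bash
# Hypothetical check: succeed if /dockerdata-nfs is mounted from the given
# NFS master. Reads /proc/mounts by default; a second argument points it
# at another file (useful for testing).
nfs_mounted() {
  local master_ip="$1" mounts="${2:-/proc/mounts}"
  # /proc/mounts columns: source, mount point, fs type, options, ...
  awk -v src="${master_ip}:/dockerdata-nfs" \
      '$1 == src && $2 == "/dockerdata-nfs" && $3 ~ /^nfs/ { found = 1 } END { exit !found }' \
      "$mounts"
}

# Usage on each worker node:
#   nfs_mounted 10.31.3.11 && echo "NFS share mounted" || echo "NFS share NOT mounted"
```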

ONAP Installation

Perform the following steps on the onap-control-01 VM.

Clone the OOM helm repository

Run these commands from the home directory:

- Clone the oom repository, including its submodules (--recurse-submodules)

- Install the deploy plugin into Helm

- Install the undeploy plugin into Helm

- Add the local Helm repository served at http://127.0.0.1:8080

| Code Block |

|---|

ubuntu@onap-control-01:~$ git clone -b master http://gerrit.onap.org/r/oom --recurse-submodules

ubuntu@onap-control-01:~$ helm plugin install ~/oom/kubernetes/helm/plugins/deploy

ubuntu@onap-control-01:~$ helm plugin install ~/oom/kubernetes/helm/plugins/undeploy

ubuntu@onap-control-01:~$ helm repo add local http://127.0.0.1:8080 |
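Before building the charts it can help to confirm that both OOM plugins were registered. This hypothetical check reads `helm plugin list` output and assumes, as Helm 3 prints it, a header row followed by one plugin per line with the plugin name in the first column.

```shell
#!/bin/bash
# Hypothetical check: read `helm plugin list` output on stdin and succeed
# only if both the deploy and undeploy plugins are listed.
oom_plugins_installed() {
  # skip the header row, remember every plugin name seen
  awk 'NR > 1 { seen[$1] = 1 } END { exit !(seen["deploy"] && seen["undeploy"]) }'
}

# Usage:
#   helm plugin list | oom_plugins_installed && echo "deploy/undeploy plugins present"
```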

Make charts from oom repository

| Note |

|---|

Do not use sudo to perform the 'make' operation |

Building all the Helm charts and saving them into the local Helm repository takes roughly 20-30 minutes.

| Code Block |

|---|

ubuntu@onap-control-01:~$ cd ~/oom/kubernetes; make all -e SKIP_LINT=TRUE; make onap -e SKIP_LINT=TRUE

Using Helm binary helm which is helm version v3.5.2

[common]

make[1]: Entering directory '/home/ubuntu/oom/kubernetes'

make[2]: Entering directory '/home/ubuntu/oom/kubernetes/common'

[common]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

==> Linting common

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing common-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[repositoryGenerator]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

==> Linting repositoryGenerator

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

Pushing repositoryGenerator-8.0.0.tgz to local...

Done.

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

[readinessCheck]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 2 charts

Deleting outdated charts

==> Linting readinessCheck

[INFO] Chart.yaml: icon is recommended

..

..

..

..

[onap]

make[1]: Entering directory '/home/ubuntu/oom/kubernetes'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 39 charts

Downloading aaf from repo http://127.0.0.1:8080

Downloading aai from repo http://127.0.0.1:8080

Downloading appc from repo http://127.0.0.1:8080

Downloading cassandra from repo http://127.0.0.1:8080

Downloading cds from repo http://127.0.0.1:8080

Downloading cli from repo http://127.0.0.1:8080

Downloading common from repo http://127.0.0.1:8080

Downloading consul from repo http://127.0.0.1:8080

Downloading contrib from repo http://127.0.0.1:8080

Downloading cps from repo http://127.0.0.1:8080

Downloading dcaegen2 from repo http://127.0.0.1:8080

Downloading dcaegen2-services from repo http://127.0.0.1:8080

Downloading dcaemod from repo http://127.0.0.1:8080

Downloading holmes from repo http://127.0.0.1:8080

Downloading dmaap from repo http://127.0.0.1:8080

Downloading esr from repo http://127.0.0.1:8080

Downloading log from repo http://127.0.0.1:8080

Downloading sniro-emulator from repo http://127.0.0.1:8080

Downloading mariadb-galera from repo http://127.0.0.1:8080

Downloading msb from repo http://127.0.0.1:8080

Downloading multicloud from repo http://127.0.0.1:8080

Downloading nbi from repo http://127.0.0.1:8080

Downloading policy from repo http://127.0.0.1:8080

Downloading portal from repo http://127.0.0.1:8080

Downloading oof from repo http://127.0.0.1:8080

Downloading repository-wrapper from repo http://127.0.0.1:8080

Downloading robot from repo http://127.0.0.1:8080

Downloading sdc from repo http://127.0.0.1:8080

Downloading sdnc from repo http://127.0.0.1:8080

Downloading so from repo http://127.0.0.1:8080

Downloading uui from repo http://127.0.0.1:8080

Downloading vfc from repo http://127.0.0.1:8080

Downloading vid from repo http://127.0.0.1:8080

Downloading vnfsdk from repo http://127.0.0.1:8080

Downloading modeling from repo http://127.0.0.1:8080

Downloading platform from repo http://127.0.0.1:8080

Downloading a1policymanagement from repo http://127.0.0.1:8080

Downloading cert-wrapper from repo http://127.0.0.1:8080

Downloading roles-wrapper from repo http://127.0.0.1:8080

Deleting outdated charts

Skipping linting of onap

Pushing onap-8.0.0.tgz to local...

Done.

make[1]: Leaving directory '/home/ubuntu/oom/kubernetes'

ubuntu@onap-control-01:~/oom/kubernetes$

|

Deploying ONAP

Deploy ONAP with the helm deploy command:

| Code Block | ||||||

|---|---|---|---|---|---|---|

| ||||||

ubuntu@onap-control-01:~/oom/kubernetes$ helm deploy demo local/onap --namespace onap --set global.masterPassword=WinLab_NetworkSlicing -f onap/resources/overrides/onap-all.yaml -f onap/resources/overrides/environment.yaml -f onap/resources/overrides/openstack.yaml --timeout 1200s

Output:

v3.5.2

/home/ubuntu/.local/share/helm/plugins/deploy/deploy.sh: line 92: [ -z: command not found

6

Use cache dir: /home/ubuntu/.local/share/helm/plugins/deploy/cache

0

0

/home/ubuntu/.local/share/helm/plugins/deploy/deploy.sh: line 126: expr--set global.masterPassword=WinLab_NetworkSlicing -f onap/resources/overrides/onap-all.yaml -f onap/resources/overrides/environment.yaml -f onap/resources/overrides/openstack.yaml --timeout 1200s: No such file or directory

0

fetching local/onap

history.go:56: [debug] getting history for release demo

install.go:173: [debug] Original chart version: ""

install.go:190: [debug] CHART PATH: /home/ubuntu/.local/share/helm/plugins/deploy/cache/onap

release "demo" deployed

release "demo-a1policymanagement" deployed

release "demo-aaf" deployed

release "demo-aai" deployed

release "demo-cassandra" deployed

release "demo-cds" deployed

release "demo-cert-wrapper" deployed

release "demo-cli" deployed

release "demo-consul" deployed

release "demo-contrib" deployed

release "demo-cps" deployed

release "demo-dcaegen2" deployed

release "demo-dcaegen2-services" deployed

release "demo-dcaemod" deployed

release "demo-dmaap" deployed

release "demo-esr" deployed

release "demo-holmes" deployed

release "demo-mariadb-galera" deployed

release "demo-modeling" deployed

release "demo-msb" deployed

release "demo-multicloud" deployed

release "demo-nbi" deployed

release "demo-oof" deployed

release "demo-platform" deployed

release "demo-policy" deployed

release "demo-portal" deployed

release "demo-repository-wrapper" deployed

release "demo-robot" deployed

release "demo-roles-wrapper" deployed

release "demo-sdc" deployed

release "demo-sdnc" deployed

release "demo-so" deployed

release "demo-uui" deployed

release "demo-vfc" deployed

release "demo-vid" deployed

release "demo-vnfsdk" deployed

6

ubuntu@onap-control-01:~/oom/kubernetes$ |

Verify the deployment:

| Code Block |

|---|

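ubuntu@onap-control-01:~$ helm ls -n onap

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

demo onap 1 2021-07-13 15:14:35.025694014 +0000 UTC deployed onap-8.0.0 Honolulu

demo-a1policymanagement onap 1 2021-07-13 15:14:38.155669343 +0000 UTC deployed a1policymanagement-8.0.0 1.0.0

demo-aaf onap 1 2021-07-13 15:14:44.083961609 +0000 UTC deployed aaf-8.0.0

demo-aai onap 1 2021-07-13 15:15:43.238758595 +0000 UTC deployed aai-8.0.0

demo-cassandra onap 1 2021-07-13 15:16:32.632257395 +0000 UTC deployed cassandra-8.0.0

demo-cds onap 1 2021-07-13 15:16:42.884519209 +0000 UTC deployed cds-8.0.0

demo-cert-wrapper onap 1 2021-07-13 15:17:08.172161 +0000 UTC deployed cert-wrapper-8.0.0

demo-cli onap 1 2021-07-13 15:17:17.703468641 +0000 UTC deployed cli-8.0.0

demo-consul onap 1 2021-07-13 15:17:23.487068838 +0000 UTC deployed consul-8.0.0

demo-contrib onap 1 2021-07-13 15:17:37.10843864 +0000 UTC deployed contrib-8.0.0

demo-cps onap 1 2021-07-13 15:18:04.024754433 +0000 UTC deployed cps-8.0.0

demo-dcaegen2 onap 1 2021-07-13 15:18:21.92984273 +0000 UTC deployed dcaegen2-8.0.0

demo-dcaegen2-services onap 1 2021-07-13 15:19:27.147459081 +0000 UTC deployed dcaegen2-services-8.0.0 Honolulu

demo-dcaemod onap 1 2021-07-13 15:19:51.020335352 +0000 UTC deployed dcaemod-8.0.0

demo-dmaap onap 1 2021-07-13 15:20:23.115045736 +0000 UTC deployed dmaap-8.0.0

demo-esr onap 1 2021-07-13 15:21:48.99450393 +0000 UTC deployed esr-8.0.0

demo-holmes onap 1 2021-07-13 15:21:55.637545972 +0000 UTC deployed holmes-8.0.0

demo-mariadb-galera onap 1 2021-07-13 15:22:26.899830789 +0000 UTC deployed mariadb-galera-8.0.0

demo-modeling onap 1 2021-07-13 15:22:36.77062758 +0000 UTC deployed modeling-8.0.0

demo-msb onap 1 2021-07-13 15:22:43.955119743 +0000 UTC deployed msb-8.0.0

demo-multicloud onap 1 2021-07-13 15:22:57.122972321 +0000 UTC deployed multicloud-8.0.0

demo-nbi onap 1 2021-07-13 15:23:10.724184832 +0000 UTC deployed nbi-8.0.0

demo-oof onap 1 2021-07-13 15:23:21.867288517 +0000 UTC deployed oof-8.0.0

demo-policy onap 1 2021-07-13 15:26:33.741229968 +0000 UTC deployed policy-8.0.0

demo-portal onap 1 2021-07-13 15:29:42.334773742 +0000 UTC deployed portal-8.0.0

demo-repository-wrapper onap 1 2021-07-13 15:30:24.228522944 +0000 UTC deployed repository-wrapper-8.0.0

demo-robot onap 1 2021-07-13 15:30:36.103266739 +0000 UTC deployed robot-8.0.0

demo-roles-wrapper onap 1 2021-07-13 15:30:43.592035776 +0000 UTC deployed roles-wrapper-8.0.0

demo-sdc onap 1 2021-07-13 15:30:50.662463052 +0000 UTC deployed sdc-8.0.0

demo-so onap 1 2021-07-13 15:34:44.911711191 +0000 UTC deployed so-8.0.0

demo-uui onap 1 2021-07-13 15:55:27.84129364 +0000 UTC deployed uui-8.0.0

demo-vfc onap 1 2021-07-13 15:55:32.516727818 +0000 UTC deployed vfc-8.0.0

demo-vid onap 1 2021-07-13 15:56:02.048766897 +0000 UTC deployed vid-8.0.0

demo-vnfsdk onap 1 2021-07-13 15:56:19.960367033 +0000 UTC deployed vnfsdk-8.0.0

ubuntu@onap-control-01:~/oom/kubernetes$ |

| Code Block |

|---|

ubuntu@onap-control-1:~$ helm repo list

NAME URL

local http://127.0.0.1:8879/charts

ubuntu@onap-control-1:~$ |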

Make onap helm charts available in local helm repository

| Code Block |

|---|

cd ~/oom/kubernetes

make all; make onap |

| Note |

|---|

Do not use sudo to perform the above 'make' operation |

This takes roughly 10-15 minutes to build all the Helm charts and save them into the local Helm repository.

Output:

| Code Block |

|---|

ubuntu@onap-control-1:~$ cd ~/oom/kubernetes/

ubuntu@onap-control-1:~/oom/kubernetes$ make all; make onap

[common]

make[1]: Entering directory '/home/ubuntu/oom/kubernetes'

make[2]: Entering directory '/home/ubuntu/oom/kubernetes/common'

[common]

make[3]: Entering directory '/home/ubuntu/oom/kubernetes/common'

==> Linting common

[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, no failures

Successfully packaged chart and saved it to: /home/ubuntu/oom/kubernetes/dist/packages/common-4.0.0.tgz

make[3]: Leaving directory '/home/ubuntu/oom/kubernetes/common'

...

...

...

[onap]

make[1]: Entering directory '/home/ubuntu/oom/kubernetes'

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 33 charts

Downloading aaf from repo http://127.0.0.1:8879

Downloading aai from repo http://127.0.0.1:8879

Downloading appc from repo http://127.0.0.1:8879

Downloading cassandra from repo http://127.0.0.1:8879

Downloading clamp from repo http://127.0.0.1:8879

Downloading cli from repo http://127.0.0.1:8879

Downloading common from repo http://127.0.0.1:8879

Downloading consul from repo http://127.0.0.1:8879

Downloading contrib from repo http://127.0.0.1:8879

Downloading dcaegen2 from repo http://127.0.0.1:8879

Downloading dmaap from repo http://127.0.0.1:8879

Downloading esr from repo http://127.0.0.1:8879

Downloading log from repo http://127.0.0.1:8879

Downloading sniro-emulator from repo http://127.0.0.1:8879

Downloading mariadb-galera from repo http://127.0.0.1:8879

Downloading msb from repo http://127.0.0.1:8879

Downloading multicloud from repo http://127.0.0.1:8879

Downloading nbi from repo http://127.0.0.1:8879

Downloading nfs-provisioner from repo http://127.0.0.1:8879

Downloading pnda from repo http://127.0.0.1:8879

Downloading policy from repo http://127.0.0.1:8879

Downloading pomba from repo http://127.0.0.1:8879

Downloading portal from repo http://127.0.0.1:8879

Downloading oof from repo http://127.0.0.1:8879

Downloading robot from repo http://127.0.0.1:8879

Downloading sdc from repo http://127.0.0.1:8879

Downloading sdnc from repo http://127.0.0.1:8879

Downloading so from repo http://127.0.0.1:8879

Downloading uui from repo http://127.0.0.1:8879

Downloading vfc from repo http://127.0.0.1:8879

Downloading vid from repo http://127.0.0.1:8879

Downloading vnfsdk from repo http://127.0.0.1:8879

Downloading modeling from repo http://127.0.0.1:8879

Deleting outdated charts

==> Linting onap

Lint OK

1 chart(s) linted, no failures

Successfully packaged chart and saved it to: /home/ubuntu/oom/kubernetes/dist/packages/onap-4.0.0.tgz

make[1]: Leaving directory '/home/ubuntu/oom/kubernetes'

ubuntu@onap-control-1:~/oom/kubernetes$ |

Deploy ONAP

The release name is 'demo' and the namespace is 'onap'. The timeout (900 seconds in the command below) gives charts such as 'dmaap' and 'so' enough time to deploy, since they wait for other components to come up.

Run the command below on onap-control-1, where the oom repository was cloned.

| Code Block |

|---|

helm deploy demo local/onap --namespace onap -f ~/overrides/onap-all.yaml -f ~/overrides/environment.yaml --timeout 900 |

The deployment takes about 60-70 minutes because of the added timeout parameter and the environment file.

The environment file provides the per-module delays required for the deployment.

The output:

| Code Block |

|---|

ubuntu@onap-control-1:~/oom/kubernetes$ helm deploy demo local/onap --namespace onap -f ~/overrides/onap-all.yaml --timeout 900

fetching local/onap

release "demo" deployed

release "demo-aaf" deployed

release "demo-aai" deployed

release "demo-appc" deployed

release "demo-cassandra" deployed

release "demo-cds" deployed

release "demo-clamp" deployed

release "demo-cli" deployed

release "demo-consul" deployed

release "demo-contrib" deployed

release "demo-dcaegen2" deployed

release "demo-dmaap" deployed

release "demo-esr" deployed

release "demo-log" deployed

release "demo-mariadb-galera" deployed

release "demo-modeling" deployed

release "demo-msb" deployed

release "demo-multicloud" deployed

release "demo-nbi" deployed

release "demo-oof" deployed

release "demo-policy" deployed

release "demo-pomba" deployed

release "demo-portal" deployed

release "demo-robot" deployed

release "demo-sdc" deployed

release "demo-sdnc" deployed

release "demo-sniro-emulator" deployed

release "demo-so" deployed

release "demo-uui" deployed

release "demo-vfc" deployed

release "demo-vid" deployed

release "demo-vnfsdk" deployed

ubuntu@onap-control-1:~/oom/kubernetes$ |

Verify the deployment

| Code Block |

|---|

ubuntu@onap-control-1:~/overrides$ helm ls

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

demo 1 Fri Nov 29 16:26:16 2019 DEPLOYED onap-5.0.0 El Alto onap

demo-aaf 1 Fri Nov 29 16:26:17 2019 DEPLOYED aaf-5.0.0 onap

demo-aai 1 Fri Nov 29 16:26:28 2019 DEPLOYED aai-5.0.0 onap

demo-appc 1 Fri Nov 29 16:27:30 2019 DEPLOYED appc-5.0.0 onap

demo-cassandra 1 Fri Nov 29 16:27:41 2019 DEPLOYED cassandra-5.0.0 onap

demo-cds 1 Fri Nov 29 16:27:56 2019 DEPLOYED cds-5.0.0 onap

demo-clamp 1 Fri Nov 29 16:28:58 2019 DEPLOYED clamp-5.0.0 onap

demo-cli 1 Fri Nov 29 16:29:31 2019 DEPLOYED cli-5.0.0 onap

demo-consul 1 Fri Nov 29 16:29:45 2019 DEPLOYED consul-5.0.0 onap

demo-contrib 1 Fri Nov 29 16:30:04 2019 DEPLOYED contrib-5.0.0 onap

demo-dcaegen2 1 Fri Nov 29 16:31:03 2019 DEPLOYED dcaegen2-5.0.0 onap

demo-dmaap 1 Fri Nov 29 16:35:47 2019 DEPLOYED dmaap-5.0.0 onap

demo-esr 1 Fri Nov 29 16:50:24 2019 DEPLOYED esr-5.0.0 onap

demo-log 1 Fri Nov 29 16:50:38 2019 DEPLOYED log-5.0.0 onap

demo-mariadb-galera 1 Fri Nov 29 16:51:08 2019 DEPLOYED mariadb-galera-5.0.0 onap

demo-modeling 1 Fri Nov 29 16:51:29 2019 DEPLOYED modeling-5.0.0 onap

demo-msb 1 Fri Nov 29 16:51:45 2019 DEPLOYED msb-5.0.0 onap

demo-multicloud 1 Fri Nov 29 16:52:23 2019 DEPLOYED multicloud-5.0.0 onap

demo-nbi 1 Fri Nov 29 16:53:26 2019 DEPLOYED nbi-5.0.0 onap

demo-oof 1 Fri Nov 29 16:54:03 2019 DEPLOYED oof-5.0.0 onap

demo-policy 1 Fri Nov 29 16:56:27 2019 DEPLOYED policy-5.0.0 onap

demo-pomba 1 Fri Nov 29 16:59:13 2019 DEPLOYED pomba-5.0.0 onap

demo-portal 1 Fri Nov 29 17:01:44 2019 DEPLOYED portal-5.0.0 onap

demo-robot 1 Fri Nov 29 17:03:10 2019 DEPLOYED robot-5.0.0 onap

demo-sdc 1 Fri Nov 29 17:03:24 2019 DEPLOYED sdc-5.0.0 onap

demo-sdnc 1 Fri Nov 29 17:05:27 2019 DEPLOYED sdnc-5.0.0 onap

demo-sniro-emulator 1 Fri Nov 29 17:08:24 2019 DEPLOYED sniro-emulator-5.0.0 onap

demo-so 1 Fri Nov 29 17:10:33 2019 DEPLOYED so-5.0.0 onap

demo-uui 1 Fri Nov 29 17:24:55 2019 DEPLOYED uui-5.0.0 onap

demo-vfc 1 Fri Nov 29 17:24:59 2019 DEPLOYED vfc-5.0.0 onap

demo-vid 1 Fri Nov 29 17:26:05 2019 DEPLOYED vid-5.0.0 onap

demo-vnfsdk 1 Fri Nov 29 17:26:38 2019 DEPLOYED vnfsdk-5.0.0 onap

ubuntu@onap-control-1:~/oom/kubernetes$ |

In case of missing or failed deployments

If any ONAP module is missing or fails to deploy, follow these steps to redeploy it.

In this example, we demonstrate failure of dmaap, which normally occurs due to timeout issues.

Missing deployment

In the deployment above, demo-sdnc is missing, so it is reinstalled:

| Code Block |

|---|

ubuntu@onap-control-01:~/oom/kubernetes$ helm install --namespace onap demo-sdnc local/sdnc --set global.masterPassword=WinLab_NetworkSlicing -f onap/resources/overrides/environment.yaml -f onap/resources/overrides/onap-all.yaml --timeout 1200s

NAME: demo-sdnc

LAST DEPLOYED: Tue Jul 13 16:11:58 2021

NAMESPACE: onap

STATUS: deployed

REVISION: 1

TEST SUITE: None

ubuntu@onap-control-01:~/oom/kubernetes$ |
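Comparing the installed release names against an expected list makes a gap such as the missing demo-sdnc easier to spot than scanning the full listing. A minimal sketch: `missing_releases` is a hypothetical helper, it reads names from `helm ls -n onap --short` (one name per line), and you supply the expected names yourself, for example from the `release "..." deployed` lines printed by `helm deploy`.

```shell
#!/bin/bash
# Hypothetical helper: print every expected release name (arguments) that
# is absent from the installed names read on stdin, one name per line.
missing_releases() {
  local installed name
  installed=$(cat)
  for name in "$@"; do
    # -x: whole-line match, -F: fixed string, -q: no output
    grep -qxF "$name" <<< "$installed" || printf '%s\n' "$name"
  done
}

# Usage, with an illustrative subset of release names:
#   helm ls -n onap --short | missing_releases demo-aai demo-sdnc demo-so
```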

Check the failed modules

Run 'helm ls' on the control node.

| Code Block |

|---|

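ubuntu@onap-control-01:~$ helm ls -n onap

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

demo onap 1 2021-07-13 15:14:35.025694014 +0000 UTC deployed onap-8.0.0 Honolulu

demo-a1policymanagement onap 1 2021-07-13 15:14:38.155669343 +0000 UTC deployed a1policymanagement-8.0.0 1.0.0

demo-aaf onap 1 2021-07-13 15:14:44.083961609 +0000 UTC deployed aaf-8.0.0

demo-aai onap 1 2021-07-13 15:15:43.238758595 +0000 UTC deployed aai-8.0.0

demo-cassandra onap 1 2021-07-13 15:16:32.632257395 +0000 UTC deployed cassandra-8.0.0

demo-cds onap 1 2021-07-13 15:16:42.884519209 +0000 UTC deployed cds-8.0.0

demo-cert-wrapper onap 1 2021-07-13 15:17:08.172161 +0000 UTC deployed cert-wrapper-8.0.0

demo-cli onap 1 2021-07-13 15:17:17.703468641 +0000 UTC deployed cli-8.0.0

demo-consul onap 1 2021-07-13 15:17:23.487068838 +0000 UTC deployed consul-8.0.0

demo-contrib onap 1 2021-07-13 15:17:37.10843864 +0000 UTC deployed contrib-8.0.0

demo-cps onap 1 2021-07-13 15:18:04.024754433 +0000 UTC deployed cps-8.0.0

demo-dcaegen2 onap 1 2021-07-13 15:18:21.92984273 +0000 UTC deployed dcaegen2-8.0.0

demo-dcaegen2-services onap 1 2021-07-13 15:19:27.147459081 +0000 UTC deployed dcaegen2-services-8.0.0 Honolulu

demo-dcaemod onap 1 2021-07-13 15:19:51.020335352 +0000 UTC deployed dcaemod-8.0.0

demo-dmaap onap 1 2021-07-13 15:20:23.115045736 +0000 UTC deployed dmaap-8.0.0

demo-esr onap 1 2021-07-13 15:21:48.99450393 +0000 UTC deployed esr-8.0.0

demo-holmes onap 1 2021-07-13 15:21:55.637545972 +0000 UTC deployed holmes-8.0.0

demo-mariadb-galera onap 1 2021-07-13 15:22:26.899830789 +0000 UTC deployed mariadb-galera-8.0.0

demo-modeling onap 1 2021-07-13 15:22:36.77062758 +0000 UTC deployed modeling-8.0.0

demo-msb onap 1 2021-07-13 15:22:43.955119743 +0000 UTC deployed msb-8.0.0

demo-multicloud onap 1 2021-07-13 15:22:57.122972321 +0000 UTC deployed multicloud-8.0.0

demo-nbi onap 1 2021-07-13 15:23:10.724184832 +0000 UTC deployed nbi-8.0.0

demo-oof onap 1 2021-07-13 15:23:21.867288517 +0000 UTC deployed oof-8.0.0

demo-policy onap 1 2021-07-13 15:26:33.741229968 +0000 UTC deployed policy-8.0.0

demo-portal onap 1 2021-07-13 15:29:42.334773742 +0000 UTC deployed portal-8.0.0

demo-repository-wrapper onap 1 2021-07-13 15:30:24.228522944 +0000 UTC deployed repository-wrapper-8.0.0

demo-robot onap 1 2021-07-13 15:30:36.103266739 +0000 UTC deployed robot-8.0.0

demo-roles-wrapper onap 1 2021-07-13 15:30:43.592035776 +0000 UTC deployed roles-wrapper-8.0.0

demo-sdc onap 1 2021-07-13 15:30:50.662463052 +0000 UTC deployed sdc-8.0.0

demo-sdnc onap 1 2021-07-13 16:11:58.795467333 +0000 UTC deployed sdnc-8.0.0

demo-so onap 1 2021-07-13 15:34:44.911711191 +0000 UTC deployed so-8.0.0

demo-uui onap 1 2021-07-13 15:55:27.84129364 +0000 UTC deployed uui-8.0.0

demo-vfc onap 1 2021-07-13 15:55:32.516727818 +0000 UTC deployed vfc-8.0.0

demo-vid onap 1 2021-07-13 15:56:02.048766897 +0000 UTC deployed vid-8.0.0

demo-vnfsdk onap 1 2021-07-13 15:56:19.960367033 +0000 UTC deployed vnfsdk-8.0.0 |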

In case of failures in deployment

If the deployment of any ONAP module fails, follow these steps to redeploy it.

In this example, we demonstrate failure of dmaap, which normally occurs due to timeout issues.

Check the failed modules

Run 'helm ls' on the control node.

| Code Block |

|---|

ubuntu@onap-control-1:~/overrides$ helm ls

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

demo 1 Fri Nov 29 16:26:16 2019 DEPLOYED onap-5.0.0 El Alto onap

demo-aaf 1 Fri Nov 29 16:26:17 2019 DEPLOYED aaf-5.0.0 onap

demo-aai 1 Fri Nov 29 16:26:28 2019 DEPLOYED aai-5.0.0 onap

demo-appc 1 Fri Nov 29 16:27:30 2019 DEPLOYED appc-5.0.0 onap

demo-cassandra 1 Fri Nov 29 16:27:41 2019 DEPLOYED cassandra-5.0.0 onap

demo-cds 1 Fri Nov 29 16:27:56 2019 DEPLOYED cds-5.0.0 onap

demo-clamp 1 Fri Nov 29 16:28:58 2019 DEPLOYED clamp-5.0.0 onap

demo-cli 1 Fri Nov 29 16:29:31 2019 DEPLOYED cli-5.0.0 onap

demo-consul 1 Fri Nov 29 16:29:45 2019 DEPLOYED consul-5.0.0 onap

demo-contrib 1 Fri Nov 29 16:30:04 2019 DEPLOYED contrib-5.0.0 onap

demo-dcaegen2 1 Fri Nov 29 16:31:03 2019 DEPLOYED dcaegen2-5.0.0 onap

demo-dmaap 1 Mon Dec 2 05:45:05 2019 FAILED dmaap-5.0.0 onap

demo-esr 1 Fri Nov 29 16:50:24 2019 DEPLOYED esr-5.0.0 onap

demo-log 1 Fri Nov 29 16:50:38 2019 DEPLOYED log-5.0.0 onap

demo-mariadb-galera 1 Fri Nov 29 16:51:08 2019 DEPLOYED mariadb-galera-5.0.0 onap

demo-modeling 1 Fri Nov 29 16:51:29 2019 DEPLOYED modeling-5.0.0 onap

demo-msb 1 Fri Nov 29 16:51:45 2019 DEPLOYED msb-5.0.0 onap

demo-multicloud 1 Fri Nov 29 16:52:23 2019 DEPLOYED multicloud-5.0.0 onap

demo-nbi 1 Fri Nov 29 16:53:26 2019 DEPLOYED nbi-5.0.0 onap

demo-oof 1 Mon Dec 2 15:04:11 2019 DEPLOYED oof-5.0.0 onap

demo-policy 1 Fri Nov 29 16:56:27 2019 DEPLOYED policy-5.0.0 onap

demo-pomba 1 Fri Nov 29 16:59:13 2019 DEPLOYED pomba-5.0.0 onap

demo-portal 1 Fri Nov 29 17:01:44 2019 DEPLOYED portal-5.0.0 onap

demo-robot 1 Fri Nov 29 17:03:10 2019 DEPLOYED robot-5.0.0 onap

demo-sdc 1 Fri Nov 29 17:03:24 2019 DEPLOYED sdc-5.0.0 onap

demo-sdnc 1 Fri Nov 29 17:05:27 2019 DEPLOYED sdnc-5.0.0 onap

demo-sniro-emulator 1 Fri Nov 29 17:08:24 2019 DEPLOYED sniro-emulator-5.0.0 onap

demo-so 1 Mon Dec 2 08:58:33 2019 DEPLOYED so-5.0.0 onap

demo-uui 1 Fri Nov 29 17:24:55 2019 DEPLOYED uui-5.0.0 onap

demo-vfc 1 Fri Nov 29 17:24:59 2019 DEPLOYED vfc-5.0.0 onap

demo-vid 1 Fri Nov 29 17:26:05 2019 DEPLOYED vid-5.0.0 onap

demo-vnfsdk 1 Fri Nov 29 17:26:38 2019 DEPLOYED vnfsdk-5.0.0 onap |
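In a long listing a single FAILED row is easy to miss. The hypothetical filter below prints the release name of every `helm ls` row whose status is failed, matching the word case-insensitively so it covers both the Helm 2 (`FAILED`) and Helm 3 (`failed`) tables. With Helm 3, the built-in `--failed` option of `helm ls` gives the same result without any parsing.

```shell
#!/bin/bash
# Hypothetical filter: read `helm ls` output on stdin and print the NAME
# column of rows that contain "failed" as a whitespace-delimited word.
failed_releases() {
  awk 'NR > 1 && tolower($0) ~ /(^|[ \t])failed([ \t]|$)/ { print $1 }'
}

# Usage:
#   helm ls -n onap | failed_releases
#   helm ls -n onap --failed        # Helm 3 built-in alternative
```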

Delete the failed module

Use the release name exactly as shown in the helm ls output.

...

| Code Block |

|---|

helm uninstall demo-dmaap --namespace onap

kubectl get persistentvolumeclaims -n onap | grep demo-dmaap | sed -r 's/(^[^ ]+).*/kubectl delete persistentvolumeclaims -n onap \1/' | bash

kubectl get persistentvolumes -n onap | grep demo-dmaap | sed -r 's/(^[^ ]+).*/kubectl delete persistentvolumes -n onap \1/' | bash

kubectl get secrets -n onap | grep demo-dmaap | sed -r 's/(^[^ ]+).*/kubectl delete secrets -n onap \1/' | bash

kubectl get clusterrolebindings -n onap | grep demo-dmaap | sed -r 's/(^[^ ]+).*/kubectl delete clusterrolebindings -n onap \1/' | bash

kubectl get jobs -n onap | grep demo-dmaap | sed -r 's/(^[^ ]+).*/kubectl delete jobs -n onap \1/' | bash

kubectl get pods -n onap | grep demo-dmaap | sed -r 's/(^[^ ]+).*/kubectl delete pods -n onap \1/' | bash

|
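Each pipeline above turns grep matches into `kubectl delete` commands through sed. The same cleanup can be written as one loop over the resource kinds using `kubectl get -o name`, which prints `<type>/<name>` and removes the need for the sed rewrite. This is a hypothetical helper, not part of OOM; it only matches object names that start with the release name (slightly stricter than the original `grep`), and `DRY_RUN=1` prints the commands for review instead of running them.

```shell
#!/bin/bash
# Hypothetical helper: delete leftover objects of one Helm release.
# Set DRY_RUN=1 to print the delete commands instead of running them.
cleanup_release() {
  local release="$1" ns="${2:-onap}" kind obj
  if [ -z "$release" ]; then
    echo "usage: cleanup_release RELEASE [NAMESPACE]" >&2
    return 1
  fi
  for kind in persistentvolumeclaims persistentvolumes secrets clusterrolebindings jobs pods; do
    # "-o name" prints "<type>/<name>"; keep names starting with the release
    for obj in $(kubectl get "$kind" -n "$ns" -o name | grep "/${release}"); do
      if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "kubectl delete -n $ns $obj"
      else
        kubectl delete -n "$ns" "$obj"
      fi
    done
  done
}

# Usage:
#   DRY_RUN=1 cleanup_release demo-dmaap   # review first
#   cleanup_release demo-dmaap
```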

...

| Code Block |

|---|

cd /dockerdata-nfs/

sudo rm -r demo-dmaap/ |
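Because this step uses `rm -r`, a mistyped or empty release name can remove far more than intended. A guarded variant is sketched below as a hypothetical helper, meant to be run from a root shell on onap-nfs-server: it rejects empty names, `.`, `..`, and names containing `/`, and only removes a directory that actually exists under the share.

```shell
#!/bin/bash
# Hypothetical helper: remove the NFS data directory of one release,
# refusing names that could escape the share directory.
remove_release_data() {
  local release="$1" base="${2:-/dockerdata-nfs}"
  case "$release" in
    ""|.|..|*/*)
      echo "refusing: invalid release name '$release'" >&2
      return 1 ;;
  esac
  if [ ! -d "$base/$release" ]; then
    echo "nothing to remove: $base/$release" >&2
    return 0
  fi
  rm -r -- "$base/$release"
}

# Usage (from a root shell on onap-nfs-server):
#   remove_release_data demo-dmaap
```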

Reinstall module

Reinstall the deleted module with the same release name as used in the deletion

| Code Block |

|---|

helm install --namespace onap demo-dmaap local/dmaap --set global.masterPassword=WinLab_NetworkSlicing -f onap/resources/overrides/environment.yaml -f onap/resources/overrides/onap-all.yaml --timeout 1200s |

You can verify the deployment in a parallel terminal by checking the pods using the command

...

Once this is deployed, you can verify using the "helm ls" command to check all the required modules are up and running.
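While a module comes up, it can help to see only the pods that still need attention. This hypothetical filter reads the default `kubectl get pods` table (NAME, READY, STATUS, ...) on stdin and prints pods whose READY count is not complete, skipping `Completed` job pods; an optional argument restricts it to names starting with a release prefix.

```shell
#!/bin/bash
# Hypothetical filter: print pods that are not fully ready, e.g. "0/1 Running"
# or "0/1 Init:0/1", ignoring finished jobs ("Completed").
pods_not_ready() {
  local prefix="${1:-}"
  awk -v p="$prefix" 'NR > 1 && (p == "" || index($1, p) == 1) {
    split($2, r, "/")            # READY column, e.g. "1/2"
    if ($3 != "Completed" && r[1] != r[2]) print $1, $2, $3
  }'
}

# Usage, e.g. repeatedly while demo-dmaap comes up:
#   kubectl get pods -n onap | pods_not_ready demo-dmaap
```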

Undeploy ONAP

For the release name 'demo' and namespace 'onap':

...

| Code Block |

|---|

helm undeploy demo --purge

kubectl get persistentvolumeclaims -n onap | grep demo | sed -r 's/(^[^ ]+).*/kubectl delete persistentvolumeclaims -n onap \1/' | bash

kubectl get persistentvolumes -n onap | grep demo | sed -r 's/(^[^ ]+).*/kubectl delete persistentvolumes -n onap \1/' | bash

kubectl get secrets -n onap | grep demo | sed -r 's/(^[^ ]+).*/kubectl delete secrets -n onap \1/' | bash

kubectl get clusterrolebindings -n onap | grep demo | sed -r 's/(^[^ ]+).*/kubectl delete clusterrolebindings -n onap \1/' | bash

kubectl get jobs -n onap | grep demo | sed -r 's/(^[^ ]+).*/kubectl delete jobs -n onap \1/' | bash

kubectl get pods -n onap | grep demo | sed -r 's/(^[^ ]+).*/kubectl delete pods -n onap \1/' | bash

|

...

| Code Block |

|---|

cd /dockerdata-nfs/

sudo rm -r *

# Example session on onap-nfs-server:

ubuntu@onap-nfs-server:~$ cd /dockerdata-nfs/

ubuntu@onap-nfs-server:/dockerdata-nfs$ sudo rm -r * |

Access SDN-R

ODLUX-GUI at winlab

Replace {username} with the user ID you created in ORBIT lab.

| Code Block |

|---|

SSH from your machine to create tunnels with the given ports on console.sb10.orbit-lab.org:

hanif@ubuntu:~$ ssh -A -t -L 30267:localhost:30267 -L 30205:localhost:30205 {username}@console.sb10.orbit-lab.org

SSH from console.sb10.orbit-lab.org to create tunnels with the given ports on node1-4.sb10.orbit-lab.org:

hanif@console:~$ ssh -L 30267:localhost:30267 -L 30205:localhost:30205 native@node1-4

SSH from node1-4.sb10.orbit-lab.org to create tunnels with the given ports on onap-control-01:

native@node1-4:~$ ssh -A -t -L 30267:localhost:30267 -L 30205:localhost:30205 ubuntu@10.31.4.11 |

On your browser:

| Code Block |

|---|

http://localhost:30267/odlux/index.html |
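With OpenSSH 7.3 or later, the three hops can usually be collapsed into one command using ProxyJump (`-J`), which forwards the ports straight to onap-control-01. The sketch below only builds that command string; it assumes the key in your local agent is accepted on every hop and that `node1-4` resolves from the console, and `odlux_tunnel_cmd` is a hypothetical name. The control node address defaults to the one used in the last hop of the tunnel setup.

```shell
#!/bin/bash
# Hypothetical helper: build a single ssh command that jumps through the
# ORBIT console and node1-4 (ProxyJump, -J) and forwards the ODLUX ports.
odlux_tunnel_cmd() {
  local user="$1" control_ip="${2:-10.31.4.11}" port
  local cmd="ssh -J ${user}@console.sb10.orbit-lab.org,native@node1-4"
  for port in 30267 30205; do
    cmd+=" -L ${port}:localhost:${port}"
  done
  printf '%s\n' "$cmd ubuntu@${control_ip}"
}

# Usage: print the command, review it, then run it
#   odlux_tunnel_cmd "$USER"
#   eval "$(odlux_tunnel_cmd "$USER")"
```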

The login credentials are:

...

password: Kp8bJ4SXszM0WXlhak3eHlcse2gAw84vaoGGmJvUy2U

ODLUX-GUI at your labs

Find the NodePort that the sdnc service maps to port 8282:

| Code Block |

|---|

ubuntu@onap-control-1:~$ kubectl get service -n onap | grep sdnc

pomba-sdncctxbuilder ClusterIP 10.43.145.187 <none> 9530/TCP 2d18h

sdnc NodePort 10.43.103.106 <none> 8282:31939/TCP,8202:31510/TCP,8280:30246/TCP,8443:30267/TCP 7m52s

sdnc-ansible-server ClusterIP 10.43.113.22 <none> 8000/TCP 7m52s

sdnc-cluster ClusterIP None <none> 2550/TCP 7m52s

sdnc-dgbuilder NodePort 10.43.62.50 <none> 3000:30203/TCP 7m52s

sdnc-dmaap-listener ClusterIP None <none> <none> 7m52s

sdnc-portal NodePort 10.43.116.73 <none> 8443:30201/TCP 7m52s

sdnc-ueb-listener ClusterIP None <none> <none> 7m52s

so-sdnc-adapter ClusterIP 10.43.27.8 <none> 8086/TCP 142m

vfc-zte-sdnc-driver ClusterIP 10.43.189.170 <none> 8411/TCP 2d17h |

Look only at the sdnc service and find the NodePort mapped to port 8282.

In the above result it is 31939.

| Code Block |

|---|

http://{ip-address}:{sdnc-port-bound-to-8282}/odlux/index.html |

The {ip-address} is the IP address of the onap-control-1 machine.
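Instead of reading the port off the listing, kubectl can return it directly with a JSONPath filter, for example `kubectl get service sdnc -n onap -o jsonpath='{.spec.ports[?(@.port==8282)].nodePort}'`. If you already have the PORT(S) text, the hypothetical helper below extracts the same value.

```shell
#!/bin/bash
# Hypothetical helper: given the PORT(S) column of `kubectl get service`
# (e.g. "8282:31939/TCP,8443:30267/TCP") and a service port, print the
# NodePort bound to it; returns 1 if that port has no NodePort.
nodeport_for() {
  local ports="$1" want="$2" entry
  local IFS=,                       # split the column on commas
  for entry in $ports; do
    entry="${entry%%/*}"            # drop the "/TCP" suffix
    if [ "${entry%%:*}" = "$want" ] && [ "$entry" != "${entry#*:}" ]; then
      printf '%s\n' "${entry#*:}"
      return 0
    fi
  done
  return 1
}

# Usage:
#   nodeport_for '8282:31939/TCP,8202:31510/TCP,8280:30246/TCP,8443:30267/TCP' 8282
```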


Troubleshooting

Documentation for troubleshooting.

ES-DB index read-only – NFS out of disk space

The ONAP log (Elasticsearch DB) consumes approximately 1 GB per day. If disk usage crosses a threshold, the indices of the SDNC Elasticsearch DB are set to read-only.

...

| Code Block | ||

|---|---|---|

| ||

PUT _settings

{

"index": {

"blocks": {

"read_only_allow_delete": "false"

}

}

} |
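The `PUT _settings` request above is written for a Kibana-style console. Where only a shell is available, the same settings update can be sent with curl. In this sketch the Elasticsearch URL is a placeholder assumption (look up the actual service of your deployment with `kubectl get service -n onap`), the function name is hypothetical, and credentials must be added if your instance requires them. Free disk space on the NFS share first; otherwise Elasticsearch may set the block again while usage is still above its flood-stage watermark.

```shell
#!/bin/bash
# Hypothetical helper: send the same "read_only_allow_delete": "false"
# settings update as the console request above, via curl.
# The URL argument is an assumption; DRY_RUN=1 prints the request instead.
es_clear_read_only() {
  local es_url="$1"
  local body='{"index":{"blocks":{"read_only_allow_delete":"false"}}}'
  if [ -z "$es_url" ]; then
    echo "usage: es_clear_read_only ES_URL" >&2
    return 1
  fi
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf 'PUT %s/_settings %s\n' "$es_url" "$body"
    return 0
  fi
  curl -sS -X PUT "${es_url}/_settings" \
    -H 'Content-Type: application/json' \
    -d "$body"
}

# Usage (placeholder address):
#   es_clear_read_only http://<elasticsearch-host>:9200
```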

helm repo add local http://127.0.0.1:8080