...

As a starting point, this effort began as a small task force within the Multicloud project. As the effort evolves, the logistics will be revised; the task force may later be promoted to an independent group or an independent project.

Meetings

- [coe] #coe Team ONAP5ONAP7, (Tue) Friday UTC 20:00 / ET 16:00 / PT 13:003:00pm from Jan 16/17, 2018. weekly meeting

...

- Slides presented to the architecture subcommittee to start this project as a PoC:

View file

Version 2 of the slides is here:

K8S_for_VNFs_And_ONAP_Support_v2.pptx

...

K8s Plugin progress slide:

Project (this is a subproject of Multi-Cloud, as decided by the Architecture subcommittee and the Multi-Cloud team)

...

- Create a Multi-Cloud plugin service that interacts with Cloud regions supporting K8S

- VNF Bring up:

- API: Exposes API to upper layers in ONAP to bring up VNF.

- Currently, Proposal 2 (see the attached presentation referenced in the Slides/Links section) seems to be the choice.

- Information expected by this plugin :

- K8S deployment information (in the form understood by K8S), which is opaque to the rest of ONAP. This information is normally expected to be provided as part of VNF onboarding (in the CSAR), and some information (variable values) is created as part of every instantiation.

- TBD - Is this artifact passed to Multi-Cloud by reference, or is it passed as immediate data from the upper layers of ONAP?

- Note that there could be multiple artifacts for a given deployment. For example, EdgeXFoundry requires multiple deployment yaml files (one for each type of container) and multiple service yaml files. Since there could be many of them, the order in which they are executed is important. Hence, the priority order must be given in a separate yaml artifact, which is interpreted only by the K8S plugin.

- Supported K8S yaml artifacts: Deployment (POD, Daemonset, Stateful set), Service, Persistent volume. Others are for future releases.

- Kubernetes templates (artifacts) and variables:

- Since many instances of a deployment can be instantiated from the same CSAR, they must be brought up in different namespaces. The namespace name is expected to be part of the variables (which differ between instances).

- In addition, any template information starting with $.... is replaced from the variables.

- Metadata information collected by upper layers of ONAP

- Cloud region ID

- Set of compute profiles (One for each VDU within the VNF).

- TBD - Is there anything to be passed

- Functionality:

- Instantiate VNFs that only consist of VMs.

- Instantiate VNFs that only consist of containers

- Instantiate multiple VNFs (some VNFs realized as VMs and some VNFs realized as containers) that communicate with each other on the same networks (External Connection Points of various VNFs could be on the same network)

- A reference to the newly brought up VNF is stored in A&AI (it is needed when the VNF has to be brought down or the deployment modified).

- TBD - Should it populate A&AI with a reference to each VM and container instance of the VNF, or is one reference to the entire VNF instance good enough? Assuming there is a need to store a reference to each VM/container instance in A&AI, some exploration is required to see whether this information is made available by the K8S API or whether the plugin should watch for events from K8S.

- TBD - Is there any other information that this plugin is expected to populate in the A&AI DB (such as the IP address of each VM/container instance)?

- VNF Bring down:

- API: Exposes API function to upper layers in ONAP to terminate VNF

- Functionality: Based on the request coming from the upper ONAP layer, it terminates the VNF that was created earlier.

- Scaling within VNF:

- It leaves the decision of scaling-out and scaling-in of services of the VNF to the K8S controller at the cloud-region.

- (TBD) - How will configuration life cycle management be taken care of?

- Should the plugin watch for new replicas being created by K8S and inform APPC, which in turn sends the configuration?

- Or should we let the new instance that is being brought up talk to APPC (or something else) and let it fetch the latest configuration?

- Healing & Workload Movement (Not part of Casablanca)

- No API is expected as it is assumed that K8S master at the cloud region will take care of this.

- TBD - Is there any information to be populated in A&AI when healing or workload movement occurs at the cloud-region?

- VNF scaling: (Not part of Casablanca)

- API : Scaling of entire VNF

- Similar to VNF bring up.

- Create Virtual Link:

- API : Exposes API to create virtual link

- Metadata

- Opaque information (Since OVN + SRIOV are chosen, opaque information passed to it is amenable to create networks and subnets as per the OVN/SRIOV Controller capabilities)

- Reference to the newly created network is added to the A&AI.

- If network already exists, it is expected that use count is incremented.

- Functionality:

- Creates network if it does not exist.

- Using OVN/SRIOV CNI API, it will populate remote DHCP/DNS Servers.

- TBD : Need to understand OVN controller and SRIOV controller capabilities and figure out the functionality of this API in this plugin.

- Delete Virtual Link:

- API : Exposes API to delete virtual network

- Functionality:

- If there is no reference to this network (if use count is 0), then using OVN/SRIOV controllers, it deletes the virtual network.

- Create persistent volume

- Create volume that needs to exist across VNF life cycle.

- Delete persistent volume

- Delete volume
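The use-count behaviour described above for creating and deleting virtual links can be illustrated with a minimal Python sketch. It assumes an in-memory dictionary in place of the references kept in A&AI, and it only reports when a network would be created or deleted instead of calling the OVN/SRIOV controllers. The class and method names are hypothetical and are not part of the plugin.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualLinkRegistry:
    """Hypothetical in-memory stand-in for the network references kept in A&AI."""

    use_counts: dict = field(default_factory=dict)

    def create_link(self, name: str) -> bool:
        """Add one reference; return True if the network has to be created."""
        created = name not in self.use_counts
        # Assumption: a real plugin would ask the OVN/SRIOV controller to
        # create the network at this point when `created` is True.
        self.use_counts[name] = self.use_counts.get(name, 0) + 1
        return created

    def delete_link(self, name: str) -> bool:
        """Drop one reference; return True if the network should be deleted."""
        if name not in self.use_counts:
            raise KeyError(f"unknown virtual link: {name}")
        self.use_counts[name] -= 1
        if self.use_counts[name] == 0:
            # No reference is left, so the network itself can go away.
            del self.use_counts[name]
            return True
        return False
```

With this shape, a second create request for an existing network only increments the count, and the network is removed only when the last user deletes its link.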

...

Offers Ansible playbooks for installing a Kubernetes deployment with the additional components required for the ONAP MultiCloud plugin. Its temporary repository is https://github.com/electrocucaracha/krd

Activities:

| Activity (Non ONAP related, but necessary to prove K8S plugin) | Owner | Status |
|---|---|---|
| Add K8S installation scripts | Victor Morales | Done |
| Add flannel Networking support | Victor Morales | Done |
| Add OVN ansible playbook | Victor Morales | Done |
| Create functional test to validate OVN operability | | In progress |
| Add Virtlet ansible playbook | Victor Morales | Done |
| Create functional test to validate Virtlet operability | | In progress |
| Prove deployment with EdgeXFoundry containers with flannel network | ramamani yeleswarapu | |
| Prove deployment with one VM and container sharing flannel network | | |
| Prove deployment with one VM and container sharing CNI network | | |
| Add Multus CNI ansible playbook | ramamani yeleswarapu | In progress |
| Create functional test to validate Multus CNI operability | ramamani yeleswarapu | |
| Prove deployment with one VM (firewall VM) and container (simple router container) sharing two networks (both from OVN) | | |
| Prove deployment with one VM and container sharing two networks (one from OVN and another from Flannel) | | |
| Document the usage of the project | Victor Morales | In progress |
| Add Node Feature Discovery for Kubernetes | Victor Morales | |
| Create functional test for NFD | Victor Morales | |

MultiCloud/Kubernetes Plugin

Translates the ONAP runtime instructions into Kubernetes RESTful API calls. Its temporary repository is https://github.com/shank7485/k8-plugin-multicloud
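As a rough illustration of what translating a bring-up or bring-down request into a Kubernetes REST call could look like, the Python sketch below maps a manifest's `kind` to the corresponding collection path of the Kubernetes API and returns the HTTP method and path to use. It does not send anything over the network. The function names and the choice of supported kinds are assumptions made for this example; the actual plugin code is not shown on this page.

```python
# Collection paths in the Kubernetes REST API for some namespaced kinds.
COLLECTION_PATHS = {
    "Pod": "/api/v1/namespaces/{namespace}/pods",
    "Service": "/api/v1/namespaces/{namespace}/services",
    "Deployment": "/apis/apps/v1/namespaces/{namespace}/deployments",
    "DaemonSet": "/apis/apps/v1/namespaces/{namespace}/daemonsets",
    "StatefulSet": "/apis/apps/v1/namespaces/{namespace}/statefulsets",
}


def bring_up_call(manifest: dict, namespace: str) -> tuple:
    """Return (method, path, body) for creating the object in the manifest."""
    kind = manifest.get("kind")
    if kind not in COLLECTION_PATHS:
        raise ValueError(f"unsupported kind: {kind!r}")
    path = COLLECTION_PATHS[kind].format(namespace=namespace)
    # Creation is a POST of the manifest to the collection path.
    return "POST", path, manifest


def bring_down_call(kind: str, name: str, namespace: str) -> tuple:
    """Return (method, path) for deleting one named object."""
    if kind not in COLLECTION_PATHS:
        raise ValueError(f"unsupported kind: {kind!r}")
    path = COLLECTION_PATHS[kind].format(namespace=namespace)
    # Deletion targets the object itself: collection path plus object name.
    return "DELETE", f"{path}/{name}"
```

Keeping the translation separate from the HTTP client makes it easy to test without a cluster; the endpoint and credentials of the target cluster would be looked up separately.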

Activities:

| Activity | Owner | Status |

|---|

K8S plugin for compute

Instantiation time:

- Loading artifact

- Updating loaded artifact based on API information.

- Making calls to K8S (Getting endpoint to talk to from ESR registered repo)

Return values to be put in the A&AI
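The first two instantiation-time steps (loading the artifacts and updating them from the API information) can be sketched in Python as follows. The sketch assumes the artifacts are already available as yaml text keyed by file name, that the priority order has been read from its separate artifact into a plain list, and that `$`-prefixed placeholders are simple identifiers. It uses `string.Template` from the standard library as a stand-in for whatever substitution the plugin ends up using, and the function name is hypothetical.

```python
from string import Template


def render_artifacts(artifacts: dict, order: list, variables: dict) -> list:
    """Return the artifact texts in priority order with variables filled in.

    artifacts: file name -> yaml text containing $placeholders
    order:     file names in the order they must be applied
    variables: per-instance values, e.g. the namespace of this instance
    """
    rendered = []
    for file_name in order:
        template = Template(artifacts[file_name])
        # substitute() raises KeyError when a placeholder has no value,
        # so a missing variable is caught before anything is sent to K8S.
        rendered.append(template.substitute(variables))
    return rendered
```

For example, rendering the same two artifacts with `{"namespace": "vnf-1", "name": "edgex"}` and again with a different namespace yields two independent sets of yaml documents, one per instance.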

Note: Once above list is decided, appropriate JIRA stories will be created.

FOLLOWING SECTIONS are YET TO BE UPDATED

Goal and scope

The first target of container/COE is k8s, but other container/COE technologies, e.g. docker swarm, are not precluded. If volunteers step up for them, they will be addressed as well.

- Have ONAP take advantage of container/COE technology for cloud native era

- Utilize the industry momentum/direction for container/COE

Influence/feed back into the related technologies (e.g. TOSCA, container/COE)

Teach ONAP container/COE instead of openstack so that VNFs can be deployed/run over container/COE in a cloud native way

At the same time it's important to keep ONAP working and not break it.

- Keep the existing components/work flow unchanged, with (mostly) zero impact.

- Leverage the existing interfaces and the integration points where possible

Functionality

...

API/Interfaces

Swagger API:

| View file | ||||

|---|---|---|---|---|

|

The following table summarizes the impact on other projects

...

component

...

comment

...

modelling

...

New names of Data model to describe k8s node/COE instead of compute/openstack.

Already modeling for k8s is being discussed.

...

OOF

...

New policy to use COE, to run VNF in container

...

A&AI/ESR

...

Schema extensions to represent k8s data. (key-value pairs)

...

Multicloud

...

New plugin for COE/k8s.

(depending on the community discussion, ARIA and helm support needs to be considered. But this is contained within multicloud project.)

First target for first release

the scope of Beijing is

Scope for Beijing

First baby step to support containers in a Kubernetes cluster via a Multicloud SBI / Plugin

Minimal implementation with zero impact on MVP of Multicloud Beijing work

Use Cases

Sample VNFs(vFW and vDNS)

integration scenario

Register/unregister k8s cluster instance which is already deployed. (dynamic deployment of k8s is out of scope)

onboard VNFD/NSD to use container

Instantiate / de-instantiate containerized VNFs through K8S Plugin in K8S cluster

VNF configuration with sample VNFs (vFW, vDNS)

Target for later release

- Installer/test/integration

- More container orchestration technology

- More than sample VNFs

- delegating functionalities to CoE/K8S

Non-Goal/out of scope

The following are non-goals or out of scope for this proposal.

- Not installer/deployment. ONAP running over container

- OOM project ONAP on kubernetes

- https://wiki.onap.org/pages/viewpage.action?pageId=3247305

- https://wiki.onap.org/display/DW/ONAP+Operations+Manager+Project

- Self hosting/management might be possible, but that would be a later phase.

- container/COE deployment

- On-demand Installing container/coe on public cloud/VMs/baremetal as cloud deployment

- This is also out of scope for now.

- For ease of use/deployment, this will be addressed in a later phase.

Architecture Alignment.

...

How does this project fit into the rest of the ONAP Architecture?

The architecture is designed as an enhancement to some existing projects.

It doesn’t introduce any new dependency.

...

How does this align with external standards/specifications?

Convert TOSCA model to each container northbound APIs in some ONAP component. To be discussed.

...

Are there dependencies with other open source projects?

| Activity | Owner | Status |
|---|---|---|
| Create a layout for the project | Shashank Kumar Shankar | Done |
| Create a README file with the basic installation instructions | Shashank Kumar Shankar | Done |
| Define the initial swagger API | Shashank Kumar Shankar | Done |
| Implement /vnf_instances POST endpoint | Victor Morales | Done |
| Implement the Create method for VNFInstanceClient struct | Victor Morales | Done |
| Implement /vnf_instances GET endpoint | | Done |
| Implement the List method for VNFInstanceClient struct | Victor Morales | Done |
| Implement /vnf_instances/{name} GET endpoint | Victor Morales | In progress |
| Implement the Get method for VNFInstanceClient struct | | In progress |
| Implement /vnf_instances/{name} PATCH endpoint | | In progress |
| Implement the Get method for VNFInstanceClient struct | Shashank Kumar Shankar | In progress |
| Implement /vnf_instances/{name} DELETE endpoint | Shashank Kumar Shankar | Done |
| Implement the Delete method for VNFInstanceClient struct | | Done |
| Create the struct for the Creation response | | |
| Create the struct for the List response | Victor Morales | |
| Create the struct for the Get response | | |
| K8S Plugin API definition towards rest of ONAP for compute | | |
| K8S Plugin API definition towards rest of ONAP for networking | Shashank Kumar Shankar | |
| K8S plugin API definition towards rest of ONAP for storage (May not be needed) | Shashank Kumar Shankar | |
| Merge KRD and plugin repo and upload into the ONAP official repo | Victor Morales | |
| SO Simulator for compute | Shashank Kumar Shankar | |
| K8S plugin for compute (instantiation time) | | |
| Testing with K8S reference deployment with hardcoded flannel configuration at the site (Using EdgeXFoundry) - Deployment yaml files to be part of K8S plugin (uploaded manually) | ramamani yeleswarapu | |
| K8S Plugin implementation for OVN | Ritu Sood | |
| SO simulator for network | | |
| Testing with K8S reference deployment with OVN networking (using EdgeXFoundry) | | |
| Testing with K8S reference deployment with OVN with VM and containers having multiple interfaces | | |
| K8S plugin - Artifact distribution Client to receive artifacts from SDC (Mandatory - On demand artifact download, pro-active storage is stretch goal) | | |
| Above test scenario without hardcoding yaml files in K8S plugin | | |
| K8s plugin - Download Kube Config file from AAI and use it to authenticate/operate with a Kubernetes cluster | Shashank Kumar Shankar | |
| K8s plugin - Add an endpoint to render Swagger file | Shashank Kumar Shankar | |

Note: Once above list is decided, appropriate JIRA stories will be created.
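To show how the `/vnf_instances` endpoints and the client methods listed in the activities above could pair up, here is a small Python sketch. It is only an illustration: the real plugin defines a `VNFInstanceClient` struct whose fields and signatures are not shown on this page, so the class below, its method names, the request body shape (`{"name": ...}`) and the status codes are all assumptions. The PATCH endpoint is left out and answers 405 in this sketch.

```python
class VNFInstanceStore:
    """Hypothetical in-memory stand-in for the plugin's VNFInstanceClient."""

    def __init__(self):
        self._instances = {}

    def create(self, name, spec):
        if name in self._instances:
            raise ValueError(f"instance already exists: {name}")
        self._instances[name] = dict(spec)
        return name

    def list_names(self):
        return sorted(self._instances)

    def get(self, name):
        return self._instances[name]

    def delete(self, name):
        del self._instances[name]


def dispatch(store, method, path, body=None):
    """Route (method, path) onto the store; returns (status, payload)."""
    parts = [p for p in path.split("/") if p]
    if not parts or parts[0] != "vnf_instances" or len(parts) > 2:
        return 404, None
    if len(parts) == 1:
        # Collection endpoint: /vnf_instances
        if method == "POST":
            return 201, store.create(body["name"], body)
        if method == "GET":
            return 200, store.list_names()
        return 405, None
    # Item endpoint: /vnf_instances/{name}
    name = parts[1]
    if name not in store.list_names():
        return 404, None
    if method == "GET":
        return 200, store.get(name)
    if method == "DELETE":
        store.delete(name)
        return 204, None
    return 405, None
```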

Projects that may be impacted

| Project | Possible impact | Workaround | owner | Status |
|---|---|---|---|---|
| SO | Ability to call generic VNF API | Until SO is enhanced to support this, SO will be simulated to test the K8S plugin and reference deployment | SO simulation owner: ??? | |
| SDC | May not be any impact, but need to check whether there is any impact | | Owner : Libo | |
| A&AI AND ESR | May not be any impact, but need to see whether any schema changes are required. Check whether any existing fields in cloud-region can be used to store this information or introduce new attributes in the schema (under ESR) | | Owner : Shashank and Dileep | |
| MSB/ISTIO | No impact on MSB, but fixes are required for the following: integration with ISTIO CA to have the certificate enrolled for communicating with other ONAP services, and also for communicating with the remote K8S master. | | | |

Activities that are in scope for phase1 (Stretch goals)

| Activity | Owner | Status |
|---|---|---|
| K8S node-feature discovery and population of A&AI DB with the features | | |
| Support for Cloud based CaaS (IBM, GCP to start with) | | |

Scope

- Support for K8S based sites (others such as Docker Swarm and Mesos are not in the scope of Casablanca)

- Support for OVN and flannel based networks in sites

- Support for virtlet to bring up VM based workloads (others such as Kubevirt are for the future)

- Support for bare-metal containers using docker run time (Kata containers support will be taken care later)

- Multiple virtual network support

- Support for multiple interfaces to VMs and containers.

- Proving using vFW VM, simple router container and EdgeXFoundry containers.

- Support for K8S deployment and other yaml files as artifacts (Helm charts and pure TOSCA based container deployment representation is beyond Casablanca)

- Integration with ISTIO CA (for certificate enrolment)

API/Interfaces

Swagger API:

| View file | ||||

|---|---|---|---|---|

|

Key Project Facts:

This project will be a subproject of the Multicloud project.

...

Kubernetes pod API or other container northbound API

...

UseCases

- Sample VNFs (vFW and vDNS): in Beijing, only deploying those VNFs over CoE

- Other potential use cases (vCPE) are to be addressed after the Beijing release.

The work flow to register a k8s instance is depicted as follows

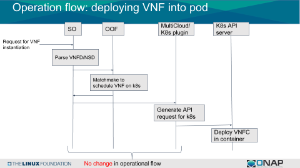

The work flow to deploy a VNF into a pod is as follows

...

link to seed code (if applicable) N/A

Vendor Neutral

If the proposal is coming from an existing proprietary codebase, have you ensured that all proprietary trademarks, logos, product names, etc., have been removed?

Meets Board policy (including IPR)

Use the above information to create a key project facts section on your project page

Key Project Facts:

This project will be a subproject of the Multicloud project. Isaku will lead this effort under the umbrella of the multicloud project.

NOTE: if this effort is a sub-project of multicloud, as the ARC committee recommended, this will be the same as multicloud's.

...

Role | First Name Last Name | Linux Foundation ID | Email Address | Location | Location | |||

|---|---|---|---|---|---|---|---|---|

| committer | electrocucaracha | victor.morales@intel.com | PT(pacific time zone) | |||||

| contributors | munish agarwal | Munish.Agarwal@ericsson.com | ||||||

| Ritu Sood | ritusood | ritu.sood@intel.com | PT(pacific time zone) | |||||

| Shashank Kumar Shankar | shashank.kumar.shankar@intel.com | PT(pacific time zone) | ||||||

| ramamani yeleswarapu | ramamani.yeleswarapu@intel | committer | Isaku Yamahata | yamahata | isaku.yamahata@gmail.com | PT(pacific time zone) | ||

| Kiran Kamineni | kiran.k.kamineni@intel | contributors | Munish Agarwal | Munish.Agarwal@ericsson.com | PT(pacific time zone) | |||

| Bin Hu | bh526r | bh526r@att.com | bh526r@att.com | |||||

| libo zhu | ||||||||

| Manjeet Singh Bhatia | manjeets | manjeet.s.bhatia@intel.com | PT(pacific time zone) | Manjeet S. Bhatia | manjeets||||

| Phuoc Hoang | hoangphuocbk | phuoc.hc@dcn.ssu.ac.kr | ||||||

| Mohamed ElSerngawy | melserngawy | mohamed.elserngawy@kontron.com | EST | |||||

| Komer Poodari | kpoodari | kpoodari@berkeley.edu | PST | |||||

| ramki krishnan | ramkri123 | ramki krishnan | PST | |||||

| Interested (will attend my first on 20180206) - part of oom and logging projects | michaelobrien | frank.obrien@amdocs.com | EST (GMT-5) | Victor Morales | electrocucaracha | victor.morale@intel.com | PST||
