This page explains the manual configuration required to set up the E2E network slicing use case, option 1.


1. SDC

ONAP Portal: https://portal.api.simpledemo.onap.org:30225/ONAPPORTAL/login.htm (Username:cs0008, Password:demo123456!)

...

Refer to Template Design for Option 1 for the respective template creation and distribution.

2. UUI Configuration

Configure the CST template UUID and Invariant UUID in the slicing.properties file of the uui-server microservice.

...

The values of these two parameters come from the CST template, which can be found on the SDC page.

3. MSB Configuration

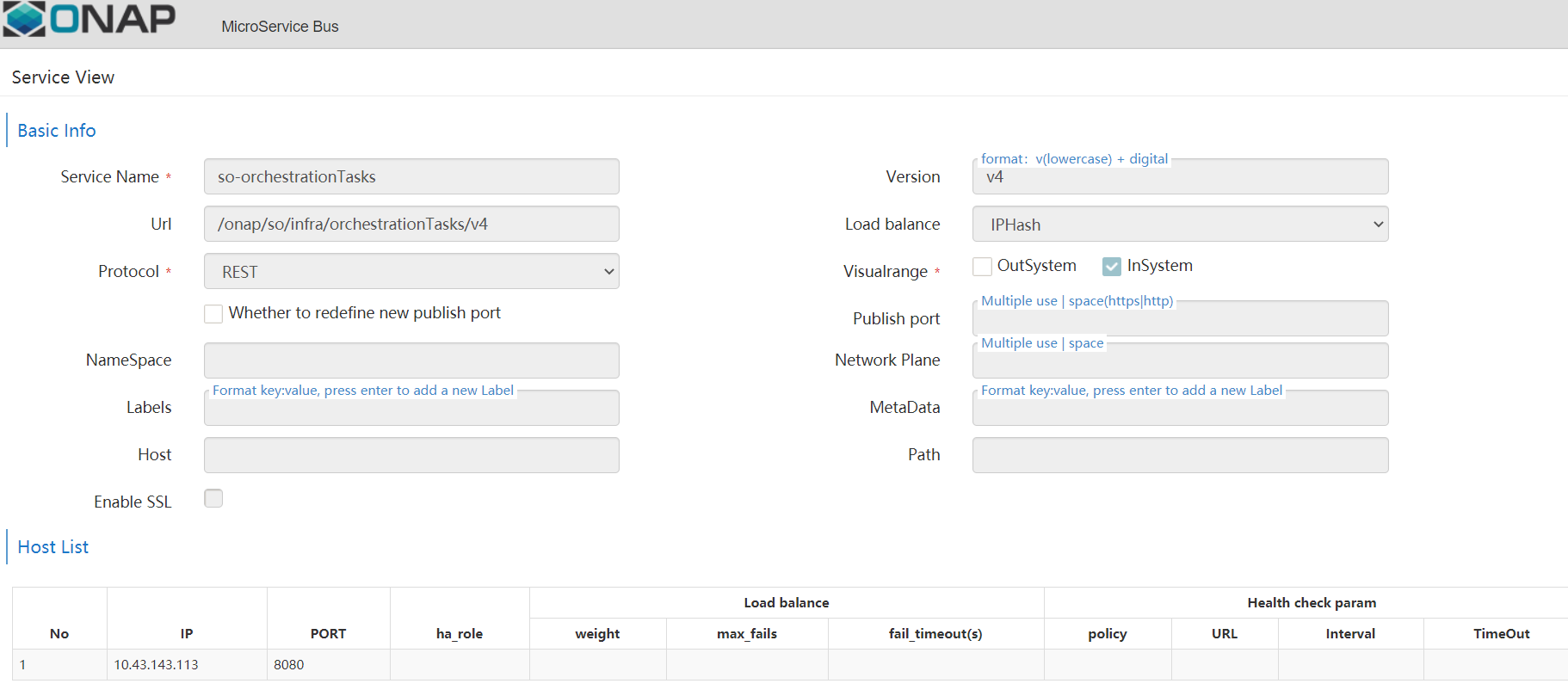

Register the so-orchestrationTasks and so-serviceInstances interfaces with MSB.

...

Enter the IP address and port, then click the 'SAVE' button. (Use the command 'kubectl get svc -n onap so' to confirm the IP and port.)
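To illustrate how the IP and port can be read from the service listing, here is a minimal Python sketch that parses one row of default `kubectl get svc` output. The column layout (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE) is assumed, and the sample row is hypothetical, not taken from a real deployment.

```python
from typing import List, NamedTuple


class ServiceEndpoint(NamedTuple):
    name: str
    cluster_ip: str
    ports: List[int]


def parse_svc_row(row: str) -> ServiceEndpoint:
    """Parse one data row of default `kubectl get svc` output.

    Assumed columns: NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
    """
    name, _type, cluster_ip, _external_ip, port_spec, _age = row.split()
    # PORT(S) looks like "8080/TCP" or "8080:30277/TCP,8443/TCP";
    # keep the service port, i.e. the number before any ":" or "/".
    ports = [int(p.split("/")[0].split(":")[0]) for p in port_spec.split(",")]
    return ServiceEndpoint(name, cluster_ip, ports)


# Hypothetical row, for illustration only.
sample_row = "so   ClusterIP   10.43.10.20   <none>   8080/TCP   46d"
endpoint = parse_svc_row(sample_row)
print(endpoint.cluster_ip, endpoint.ports)
```

The cluster IP and the first port are the values to enter in the MSB form.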

6. You should add an aai-business service to MSB.

Steps:

- Go to the MSB portal: https://{{master server ip}}:30284/iui/microservices/default.html

- Select "Service Discover" from the left panel

- Click the "Service Register" button

- Add the following info:

Service Name: aai-business

Url: /aai/v13/business

Protocol: REST

Enable SSL: True

Version: v13

Load balancer: round-robin

Visual Range: InSystem

- Add host:

AAI service IP and port (8443)

- Save all
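The form values above can also be collected into a single JSON document, which is convenient for review or scripting. The sketch below only builds that document. The field names (`serviceName`, `enable_ssl`, `lb_policy`, `visualRange`, `nodes`) and the encoding of InSystem as "1" are assumptions about MSB's registration format and should be checked against the MSB documentation. The node IP is a placeholder.

```python
import json


def build_msb_registration(node_ip: str, node_port: int = 8443) -> dict:
    """Collect the aai-business registration values into one dictionary.

    Field names are assumed, not confirmed by this page.
    """
    return {
        "serviceName": "aai-business",
        "version": "v13",
        "url": "/aai/v13/business",
        "protocol": "REST",
        "enable_ssl": True,
        "lb_policy": "round-robin",
        "visualRange": "1",  # assumption: "1" stands for InSystem
        "nodes": [{"ip": node_ip, "port": str(node_port)}],
    }


# Placeholder IP; use the AAI service IP of your deployment.
registration = build_msb_registration("10.43.0.15")
print(json.dumps(registration, indent=2))
```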

4. SO

Copy subnetCapability.json to SO-API Handler pod to configure subnet capabilities at run time.

    {
      ...: { "latency": 5, "maxNumberofUEs": "100", "maxThroughput": "150", "terminalDensity": "50" },
      "CN": { "latency": 10, "maxThroughput": 50, "maxNumberofConns": 100 },
      "TN_FH": { "latency": 10, "maxThroughput": 90 },
      "TN_MH": { ...: 10, ...: 100 },
      ...
    }
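Before copying the file, it can help to confirm that it parses as JSON and that every capability value is numeric, since the sample mixes quoted and unquoted numbers. The following is a minimal sketch under that assumption. It is not part of SO, and the sample document uses only the "CN" and "TN_FH" entries shown above.

```python
import json


def load_capabilities(text: str) -> dict:
    """Parse a subnetCapability-style document and normalize values to int."""
    raw = json.loads(text)
    normalized = {}
    for subnet, capabilities in raw.items():
        if not isinstance(capabilities, dict):
            raise ValueError(f"{subnet!r} must map to an object")
        # int() accepts both 50 and "50"; anything else raises ValueError.
        normalized[subnet.strip()] = {
            key: int(value) for key, value in capabilities.items()
        }
    return normalized


sample_document = """
{
  "CN": {"latency": 10, "maxThroughput": 50, "maxNumberofConns": 100},
  "TN_FH": {"latency": 10, "maxThroughput": "90"}
}
"""
capabilities = load_capabilities(sample_document)
print(sorted(capabilities))
```

A `ValueError` here points at a malformed entry that is easier to fix locally than inside the pod.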

You can copy the file to the pod using the following command:

SO Database Update

Insert ORCHESTRATION_URI into service_recipe, with SERVICE_MODEL_UUID replaced by CST.ModelId.

Insert ORCHESTRATION_URI into service_recipe, with SERVICE_MODEL_UUID set to ServiceProfile.ModelId.

5. OOF Configuration

OSDF changes (for NST selection) for the Guilin release

New NST templates can be added to a JSON file in the osdf folder, each with its corresponding ModelId and ModelInvariantUUID.

...

apps/nst/optimizers/conf/configIinputs.json

6. Policy Creation Steps

Refer to Optimization Policy Creation Steps for optimization policy creation and deployment steps.

Refer to Policy Models and Sample policies - NSI selection for sample policies.

NOTE:

...

Restart the OOF docker container after updating slicing_config.yaml, using the following steps:

- Log in to the worker VM where the OOF container is running. You can find the worker node by running: kubectl get pods -n onap -o wide | grep dev-oof

- Find the container using: docker ps | grep optf-osdf

- Restart the container using: docker restart <container id>

7. AAI Configuration

Create customer ID:

curl --user AAI:AAI -X PUT -H "X-FromAppId:AAI" -H "X-TransactionId:get_aai_subscr" -H "Accept:application/json" -H "Content-Type:application/json" -k -d '{

"global-customer-id":"5GCustomer",

"subscriber-name":"5GCustomer",

"subscriber-type":"INFRA"

}' "https://<worker-vm-ip>:30233/aai/v21/business/customers/customer/5GCustomer"

Create service type:

curl --user AAI:AAI -X PUT -H "X-FromAppId:AAI" -H "X-TransactionId:get_aai_subscr" -H "Accept:application/json" -H "Content-Type:application/json" -k https://<worker-vm-ip>:30233/aai/v21/business/customers/customer/5GCustomer/service-subscriptions/service-subscription/5G
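For scripted setups, the two PUT requests above can be expressed with Python's standard library. This is a sketch only: `AAI_BASE` uses a placeholder host, the helper names `build_aai_put` and `send` are made up for illustration, and `send` disables certificate verification to mirror `curl -k`. The requests are built but not sent.

```python
import base64
import json
import ssl
import urllib.request
from typing import Optional

# Placeholder base URL; substitute the real worker VM IP.
AAI_BASE = "https://worker-vm.example:30233/aai/v21"


def build_aai_put(path: str, payload: Optional[dict] = None,
                  user: str = "AAI", password: str = "AAI") -> urllib.request.Request:
    """Build a PUT request carrying the same headers as the curl commands."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {
        "X-FromAppId": "AAI",
        "X-TransactionId": "get_aai_subscr",
        "Accept": "application/json",
        "Content-Type": "application/json",
        "Authorization": f"Basic {token}",
    }
    data = json.dumps(payload).encode() if payload is not None else None
    return urllib.request.Request(AAI_BASE + path, data=data,
                                  headers=headers, method="PUT")


def send(request: urllib.request.Request) -> int:
    """Send the request without certificate verification (like curl -k)."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return response.status


customer_request = build_aai_put(
    "/business/customers/customer/5GCustomer",
    {
        "global-customer-id": "5GCustomer",
        "subscriber-name": "5GCustomer",
        "subscriber-type": "INFRA",
    },
)
subscription_request = build_aai_put(
    "/business/customers/customer/5GCustomer"
    "/service-subscriptions/service-subscription/5G"
)
```

Calling `send(customer_request)` and then `send(subscription_request)` would issue the two requests in the same order as the curl commands.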

Create cloud region:

curl --location -g --request PUT -k 'https://<worker-vm-ip>:30233/aai/v21/cloud-infrastructure/cloud-regions/cloud-region/k8scloudowner4/k8sregionfour' \

--header 'X-TransactionId: 7ee3319f-ce69-4430-8823-5c7e58484086' \

--header 'X-FromAppId: jimmy-postman' \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header 'Authorization: Basic QUFJOkFBSQ==' \

--data-raw '{

"cloud-owner": "k8scloudowner4",

"cloud-region-id": "k8sregionfour",

"cloud-type": "k8s",

"owner-defined-type": "t1",

"cloud-region-version": "1.0",

"complex-name": "clli2",

"cloud-zone": "CloudZone",

"sriov-automation": false

}'

Create tenant:

curl --location -g --request PUT -k 'https://<worker-vm-ip>:30233/aai/v21/cloud-infrastructure/cloud-regions/cloud-region/k8scloudowner4/k8sregionfour/tenants/tenant/b3a2f61e13664e64a7a5f976c24fe63c' \

--header 'X-TransactionId: db1d7259-82c3-48c7-b8f3-18558bbc59dd' \

--header 'X-FromAppId: jimmy-postman' \

--header 'Content-Type: application/json' \

--header 'Accept: application/json' \

--header 'Authorization: Basic QUFJOkFBSQ==' \

--data-raw '{

"tenant-id": "b3a2f61e13664e64a7a5f976c24fe63c",

"tenant-name": "k8stenant",

"relationship-list": {

"relationship": [

{

"related-to": "service-subscription",

"relationship-label": "org.onap.relationships.inventory.Uses",

"related-link": "/aai/v21/business/customers/customer/5GCustomer/service-subscriptions/service-subscription/5G",

"relationship-data": [

{

"relationship-key": "customer.global-customer-id",

"relationship-value": "5GCustomer"

},

{

"relationship-key": "service-subscription.service-type",

"relationship-value": "5G"

}

]

}

]

}

}'
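The related-link and the relationship-data entries in the tenant payload are expected to describe the same service subscription. One way to keep them in sync is to derive the entries from the link. The helper below is a hypothetical sketch, not an AAI API, and assumes the link follows the customer/service-subscription path layout used on this page.

```python
from typing import Dict, List


def relationship_data_from_link(related_link: str) -> List[Dict[str, str]]:
    """Derive relationship-data entries from a service-subscription link."""
    parts = related_link.strip("/").split("/")
    # Layout: .../customers/customer/<id>/service-subscriptions/service-subscription/<type>
    customer_id = parts[parts.index("customer") + 1]
    service_type = parts[parts.index("service-subscription") + 1]
    return [
        {
            "relationship-key": "customer.global-customer-id",
            "relationship-value": customer_id,
        },
        {
            "relationship-key": "service-subscription.service-type",
            "relationship-value": service_type,
        },
    ]


link = ("/aai/v21/business/customers/customer/5GCustomer"
        "/service-subscriptions/service-subscription/5G")
relationship_data = relationship_data_from_link(link)
print(relationship_data)
```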


SDNC Pods

...

kubectl get pods -n onap | grep sdnc

dev-sdnc-0 2/2 Running 0 46d
dev-sdnc-ansible-server-6b449f8d8-7mjld 1/1 Running 0 46d
dev-sdnc-dbinit-job-mwr8s 0/1 Completed 0 46d
dev-sdnc-dgbuilder-86c9cb55bb-svcsh 1/1 Running 0 46d
dev-sdnc-dmaap-listener-6bd7fbc64f-dl4ch 1/1 Running 0 46d
dev-sdnc-sdnrdb-init-job-824vl 0/1 Completed 0 46d
dev-sdnc-ueb-listener-769f74cb4b-wgcw7 1/1 Running 0 46d
dev-sdnc-web-5b75c68fd8-zfsn6 1/1 Running 0 46d
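A listing like this can be checked automatically. The sketch below flags any pod that is neither a completed job nor fully ready and running. It assumes the default column order (NAME, READY, STATUS, RESTARTS, AGE), and the function name `unhealthy_pods` is made up for this example.

```python
from typing import List


def unhealthy_pods(listing: str) -> List[str]:
    """Return names of pods that are not Completed and not fully Running."""
    problems = []
    for line in listing.strip().splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # skip blank or malformed lines
        name, ready, status = fields[0], fields[1], fields[2]
        if status == "Completed":
            continue  # init jobs finish and stay at 0/1
        current, desired = (int(n) for n in ready.split("/"))
        if status != "Running" or current != desired:
            problems.append(name)
    return problems


sample_listing = """
dev-sdnc-0 2/2 Running 0 46d
dev-sdnc-dbinit-job-mwr8s 0/1 Completed 0 46d
dev-sdnc-web-5b75c68fd8-zfsn6 1/1 Running 0 46d
"""
print(unhealthy_pods(sample_listing))
```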


Copy the policy files and unzip them:

unzip policies.zip

Refer to Policy Models and Sample policies - NSI selection for sample policies.

Updated slice/service profile mapping - https://gerrit.onap.org/r/gitweb?p=optf/osdf.git;a=blob;f=config/slicing_config.yaml;h=179f54a6df150a62afdd72938c2f33d9ae1bd202;hb=HEAD

NOTE:

- The service name given when creating the policy must match the service name in the request.

- The scope fields in the policies should match the value of resourceSharingLevel (non-shared/shared). Modify the policy accordingly.

- Compare the case of the attributes in the OOF request with the attribute map (camel to snake and snake to camel) in config/slicing_config.yaml. If any mismatch is found, modify the attribute map accordingly.
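To make the camel/snake check in the last note concrete, here is a small sketch of a generic conversion in both directions. It is not OSDF's implementation; the attribute map in config/slicing_config.yaml remains the source of truth. The example also shows that names containing acronyms do not round-trip, which is one reason an explicit map may need manual entries.

```python
import re


def camel_to_snake(name: str) -> str:
    """Insert "_" before each capital that follows a lowercase letter or digit."""
    return re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name).lower()


def snake_to_camel(name: str) -> str:
    """Capitalize every word after the first and join them."""
    head, *rest = name.split("_")
    return head + "".join(word.capitalize() for word in rest)


print(camel_to_snake("resourceSharingLevel"))
print(snake_to_camel("resource_sharing_level"))
# An acronym is flattened on the way back:
print(snake_to_camel(camel_to_snake("maxNumberofUEs")))
```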


8. ConfigDB

Config DB is a Spring Boot application that works with MariaDB. DB schema details are available at Config DB.

Install the Config DB application in a separate VM. The MariaDB container should be up and running to access the Config DB APIs.

Refer to https://wiki.onap.org/display/DW/Config+DB+setup for Config DB setup. The latest source is available at Image versions, preparation steps and useful info - Config DB Preload Info Section.

The necessary RAN network function data is preloaded in Config DB when the MariaDB container boots.

Note: Refer to the latest templates from gerrit, committed in June 2021: https://gerrit.onap.org/r/gitweb?p=ccsdk/distribution.git;a=commit;h=8b86f34f6ea29728e31c4f6799009e8562ef3b6f

9. SDNC

Install SDNC using OOM charts; the SDNC pods should be running. As ran-slice RPCs are not visible in the latest SDN-C image, use image version 2.1.0 for sdnc-image and the DMaaP listener. Load the RANSlice DGs manually as follows:

- Copy the DG XMLs from /distribution/platform-logic/ran-slice-api/src/main/xml (gerrit repo) to /opt/onap/sdnc/svclogic/graphs/ranSliceapi (SDNC container)

- Install the DGs: (a) Navigate to /opt/onap/sdnc/svclogic/bin (SDNC container) (b) Run ./install.sh


Check the following in the SDNC pod (dev-sdnc-0):

- The latest ran-slice-api-dg.properties (/distribution/odlsli/src/main/properties/ran-slice-api-dg.properties) should be available at /opt/onap/ccsdk/data/properties/

- All ranSlice*.json template files (/distribution/platform-logic/restapi-templates/src/main/json) should be present at /opt/onap/ccsdk/restapi/templates/

- DG XML files from /distribution/platform-logic/ran-slice-api/src/main/xml should be present at /opt/onap/sdnc/svclogic/graphs/ranSliceapi

- Go to /opt/onap/sdnc/svclogic/bin and run ./install.sh (this should re-install and activate all DGs)
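The file checks above can be scripted. The sketch below reports which of the three expected items are missing under a given root directory. It assumes Python is available wherever the check runs, which may not be the case inside the SDNC container, and that the DG files end in `.xml`. The `root` parameter exists so the function can be tried against a copy of the file tree.

```python
import glob
import os
from typing import List

# Paths taken from the checklist, relative to the filesystem root.
EXPECTED = {
    "ran-slice-api-dg.properties":
        "opt/onap/ccsdk/data/properties/ran-slice-api-dg.properties",
    "ranSlice*.json templates":
        "opt/onap/ccsdk/restapi/templates/ranSlice*.json",
    "DG XML files":  # assumption: DG files use the .xml extension
        "opt/onap/sdnc/svclogic/graphs/ranSliceapi/*.xml",
}


def missing_items(root: str = "/") -> List[str]:
    """Return the labels of expected files that cannot be found under root."""
    return [
        label
        for label, pattern in EXPECTED.items()
        if not glob.glob(os.path.join(root, pattern))
    ]
```

An empty list from `missing_items()` means all three items were found.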

Note:

If SDN-C deletion is unsuccessful due to leftover resources, use the commands below to delete it completely.

kubectl get secrets -n onap --no-headers=true | awk '/dev-sdnc/{print $1}' | xargs kubectl delete secrets -n onap


To delete PV:

kubectl get pv -n onap --no-headers=true | tail -n+2 | awk '/dev-sdn/{print $1}' | xargs kubectl patch pv -n onap -p '{"metadata":{"finalizers":null}}'

kubectl delete pv -n onap dev-sdnrdb-master-pv-0 --grace-period=0 --force


DMAAP Messages

Refer to SDN-R_impacts for the DMaaP messages that can be used as SDN-R input for RAN slice instantiation, modification, activation, deactivation and termination.

ACTN Simulator:

The simulator section is bypassed and a workaround is used to continue the flow. The workaround is implemented in the SDNC DG.

ranSliceApi not deployed into SDNC

This issue occurs when the env SDNR_NORTHBOUND is not set to true for the sdnc-image; it is set to false by default. With this flag set, all sdnr-northbound features are installed during startup.

How to fix it?

- Set the env in the SDNC Helm chart

- Go to values.yaml in the SDNC OOM folder: https://gerrit.onap.org/r/gitweb?p=oom.git;a=blob;f=kubernetes/sdnc/values.yaml;h=fc093b8637bd64aaa523b9d0c2295fb6542a2a7d;hb=refs/heads/honolulu#l217

- Add the parameter sdnrenabled: true under the config section, as below:

    config:
      sdnrenabled: true
      odlUid: 100
      odlGid: 101

- Rebuild the SDNC package and redeploy it.