
Note: This wiki is under construction, so content here may not be fully specified or may be missing. TODO:

...

Architectural details of the OOM project are described in the OOM User Guide.

...

Status

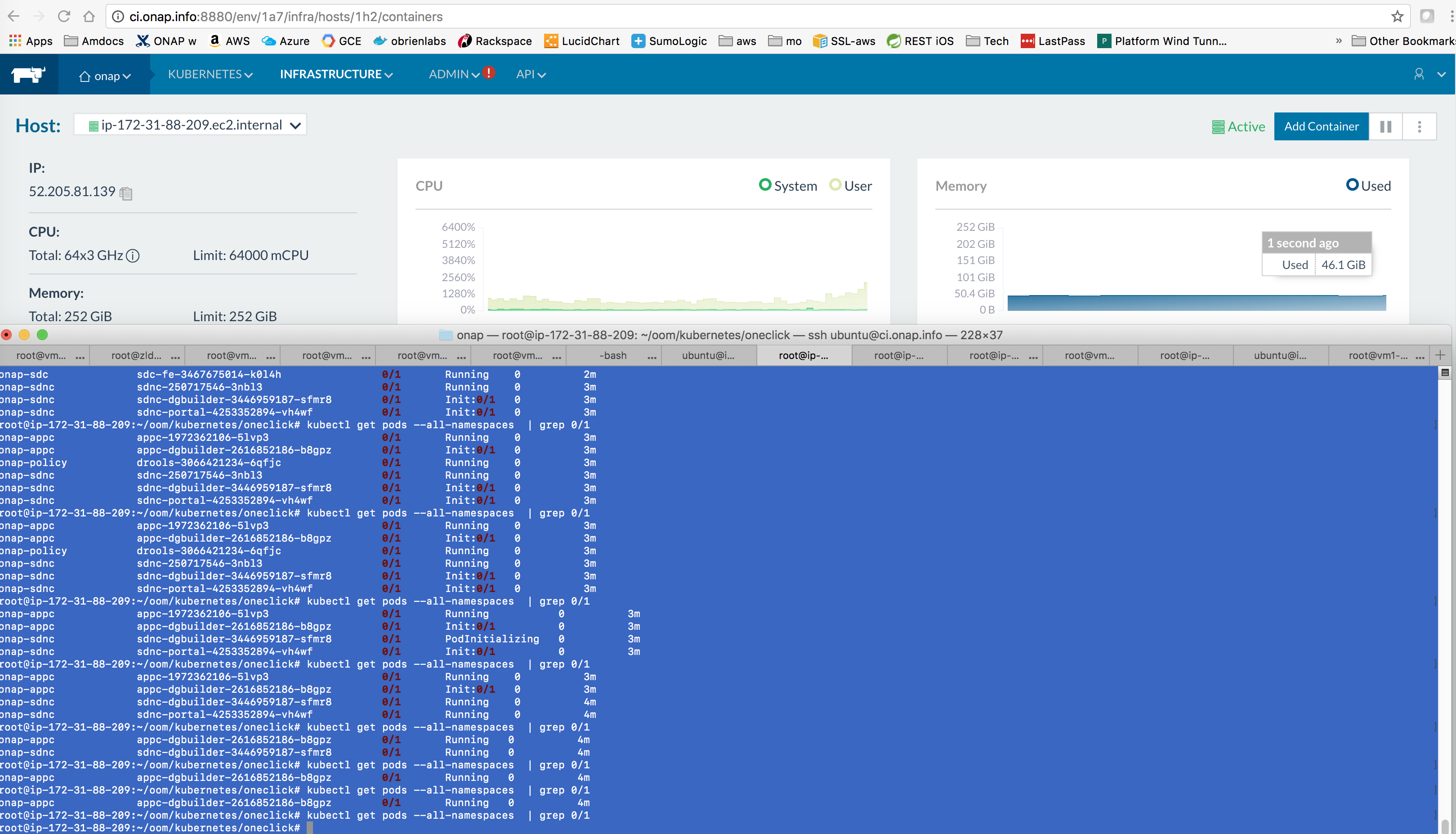

20170902: all containers except DCAE are merged and up. See the reference ONAP 1.1 install: vnc-portal at http://test.onap.info:30211, Rancher UI at http://test.onap.info:8880

Undercloud Installation

Requirements

...

48G w/o DCAE

64G with DCAE

...

Note: you need at least 48G RAM, of which about 3G is for Rancher/Kubernetes itself. This is without DCAE (the VFs exist on a separate OpenStack).

Memory use is around 42G at startup and 48G after the system has been running for a day.

...

100G
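The memory guidance above can be checked on a host before installing anything. A minimal sketch, assuming a Linux host that exposes /proc/meminfo; check_onap_memory is a hypothetical helper, not part of OOM, and it only compares MemTotal with the 48G/64G figures from the requirements:

```shell
# Hypothetical helper: compare this host's RAM with the sizing guidance above
# (48G without DCAE, 64G with DCAE). Reads MemTotal (in kB) from /proc/meminfo;
# the path can be overridden so the check can be tried on sample data.
check_onap_memory() {
    local required_gb=$1 meminfo=${2:-/proc/meminfo} total_kb required_kb
    total_kb=$(awk '/^MemTotal:/ { print $2 }' "$meminfo")
    if [ -z "$total_kb" ]; then
        echo "cannot read MemTotal from $meminfo" >&2
        return 2
    fi
    required_kb=$((required_gb * 1024 * 1024))
    if [ "$total_kb" -ge "$required_kb" ]; then
        echo "OK: $((total_kb / 1048576))G available, ${required_gb}G required"
    else
        echo "LOW: $((total_kb / 1048576))G available, ${required_gb}G required"
        return 1
    fi
}
```

For example, run check_onap_memory 48 on a host without DCAE, or check_onap_memory 64 with DCAE. MemTotal is normally a little below the installed RAM, so a VM sized at exactly 48G may report slightly less and fail this strict comparison.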

You need a Kubernetes installation, either a base installation or one with a thin API wrapper such as Rancher.

There are several options. The current focus is Rancher (a thin wrapper on Kubernetes) with Helm on Ubuntu 16.04; other platforms are covered in the subpage ONAP on Kubernetes (Alternatives).

...

Ubuntu 16.04.2

!Redhat

...

Bare Metal

VMWare

...

Recommended approach

Issue on OSX: Kubernetes support only with Docker 1.12 (obsolete docker-machine)

...

ONAP Installation

Quickstart Installation

1) Install Rancher, clone oom, run the config-init pod, then run one or all ONAP components.

...

*****************

Note: uninstall Docker if it is already installed, as Kubernetes only supports Docker 1.12.x (as of 20170809).

```shell
sudo apt-get remove docker-engine
```

*****************

The official documentation for installation of ONAP with OOM / Kubernetes is located in Read the Docs:

- OOM User Guide — onap master documentation

- OOM Quick Start Guide — onap master documentation

- OOM Cloud Setup Guide — onap master documentation

Install Rancher

ONAP deployment on Kubernetes is modelled in the oom project as a 1:1 set of service:pod pairs (one pod per Docker container). The fastest way to get ONAP on Kubernetes up is via Rancher, on any bare-metal host or VM that supports a clean Ubuntu 16.04 install and has more than 50G of RAM.

(On each host) add a line to /etc/hosts that maps your IP to your hostname (your hostname goes at the end of the line). Add entries for all other hosts in your cluster as well.

```shell
sudo vi /etc/hosts
# add a line of the form:
# <your-ip> <your-hostname>
```

Try to use root; if you use the ubuntu user, you will need to enable Docker separately for that user.

```shell
sudo su -
apt-get update
```

(This is to fix a possible "modprobe: FATAL: Module aufs not found in directory /lib/modules/4.4.0-59-generic" error.)
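The per-host /etc/hosts step earlier in this section can be made repeatable across hosts. A sketch, assuming a POSIX shell with awk; add_hosts_entry is a hypothetical helper that appends an IP/hostname line only when the hostname is not already mapped:

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless that hostname
# is already mapped on a non-comment line. Defaults to /etc/hosts (run as root);
# pass another file to try it safely first.
add_hosts_entry() {
    local ip=$1 name=$2 hosts=${3:-/etc/hosts}
    # Match the hostname as a whole field after the IP, skipping comment lines.
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$hosts"; then
        echo "$name already present in $hosts"
    else
        printf '%s %s\n' "$ip" "$name" >> "$hosts"
        echo "added $ip $name to $hosts"
    fi
}
```

For example, as root: add_hosts_entry 10.0.0.5 kube1 (placeholder values), then repeat for every other host in the cluster.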

(On each host, i.e. the server and client(s), which may be the same machine) install only Docker 1.12.x (currently 1.12.6), the only version that works with Kubernetes in Rancher 1.6:

```shell
curl https://releases.rancher.com/install-docker/1.12.sh | sh
```
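To confirm that the installed Docker is on the 1.12.x line before continuing, a small sketch; is_supported_docker_version is a hypothetical helper, and its only rule is the 1.12.x requirement stated above:

```shell
# Hypothetical helper: succeed only for a 1.12.x Docker version string, the only
# line that works with Kubernetes under Rancher 1.6 per the note above.
is_supported_docker_version() {
    case "$1" in
        1.12.*) return 0 ;;
        *) return 1 ;;
    esac
}

# On a real host, read the daemon version from the Docker CLI, for example:
#   v=$(docker version --format '{{.Server.Version}}')
#   is_supported_docker_version "$v" || echo "unsupported docker $v"
```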

(On the master only) install Rancher, mapping it to host port 8880 instead of 8080. Note: there may be issues with the DNS pod in Rancher after a reboot or when running clustered hosts; a clean system will be OK (see ONAP JIRA OOM-236).

```shell
docker run -d --restart=unless-stopped -p 8880:8080 rancher/server
```

In the Rancher UI, don't use http://127.0.0.1:8880; use the real IP address so that the client configs are populated correctly with callbacks.

You must deactivate the default CATTLE environment by adding a KUBERNETES environment and then deactivating the older default CATTLE one; your added hosts will attach to the default environment.

- Default → Manage Environments

- Select "Add Environment" button

- Give the Environment a name and description, then select Kubernetes as the Environment Template

- Hit the "Create" button. This will create the environment and bring you back to the Manage Environments view

- At the far right of the Default Environment row, left-click the menu (it looks like 3 stacked dots) and select Deactivate. This will make your new Kubernetes environment the new default.

Register your host(s): run the following on each host (including the master if you are colocating the master and host on a single machine/VM).

For each host, go to Rancher > Infrastructure > Hosts and select "Add Host".

The first time you add a host, you will be presented with a screen containing the routable IP; hit Save only on a routable IP.

Enter the IP of the host only if you launched Rancher with 127.0.0.1/localhost; otherwise keep it empty and the registration will be autopopulated with the real IP.

Copy the command to register the host with Rancher, then execute it on the host, for example:

```shell
docker run --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.2 http://192.168.163.131:8880/v1/scripts/BBD465D9B24E94F5FBFD:1483142400000:IDaNFrug38QsjZcu6rXh8TwqA4
```

Wait for the Kubernetes menu to populate with the CLI option.

Install Kubectl

The following will install kubectl on a linux host. Once configured, this client tool will provide management of a Kubernetes cluster.

```shell
curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl
chmod +x ./kubectl
mv ./kubectl /usr/local/bin/kubectl
mkdir ~/.kube
vi ~/.kube/config
```

Paste the kubectl config from Rancher (you will see the CLI menu in Rancher > Kubernetes after the k8s pods are up on your host).

Click "Generate Config" to get the content to add to ~/.kube/config.
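Because the Rancher UI must be opened via the real IP rather than 127.0.0.1, it is worth checking the pasted config before using it. A sketch, assuming the generated config has a server: line per cluster, as kubeconfig files normally do; check_kubeconfig_server is a hypothetical helper:

```shell
# Hypothetical helper: print the API server URL(s) from a kubeconfig and fail if
# any points at 127.0.0.1/localhost, which happens when the Rancher UI was opened
# via the loopback address instead of the host's real IP.
check_kubeconfig_server() {
    local cfg=${1:-$HOME/.kube/config} servers
    servers=$(awk '$1 == "server:" { gsub(/"/, "", $2); print $2 }' "$cfg")
    if [ -z "$servers" ]; then
        echo "no server: entry found in $cfg" >&2
        return 2
    fi
    echo "$servers"
    case "$servers" in
        *127.0.0.1*|*localhost*)
            echo "kubeconfig points at a loopback address" >&2
            return 1 ;;
    esac
}
```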

Verify that the Kubernetes config is good:

```console
root@obrien-kube11-1:~# kubectl cluster-info
Kubernetes master is running at ....
Heapster is running at....
KubeDNS is running at ....
kubernetes-dashboard is running at ...
monitoring-grafana is running at ....
monitoring-influxdb is running at ...
tiller-deploy is running at....
```
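The cluster-info check can be automated by looking for the expected component names. A sketch, assuming the "NAME is running at URL" line format shown above; check_cluster_info is a hypothetical helper, and its default list (Kubernetes master, KubeDNS, tiller-deploy) is taken from that listing:

```shell
# Hypothetical helper: read `kubectl cluster-info` output on stdin and report
# whether each expected component has a "NAME is running at URL" line.
# With no arguments it checks names taken from the listing above.
check_cluster_info() {
    local esc running comp missing=0
    esc=$(printf '\033')
    # Strip ANSI colour codes kubectl may emit, then keep the text before "is running at".
    running=$(sed "s/${esc}\[[0-9;]*m//g" | awk -F' is running at' 'NF > 1 { print $1 }')
    [ "$#" -gt 0 ] || set -- "Kubernetes master" KubeDNS tiller-deploy
    for comp in "$@"; do
        if printf '%s\n' "$running" | grep -qxF "$comp"; then
            echo "ok      $comp"
        else
            echo "missing $comp"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: kubectl cluster-info | check_cluster_info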

Install Helm

The following will install Helm on a Linux host (use 2.3.0, not the current 2.6.0). Helm is used by OOM for package and configuration management.

Prerequisite: Install Kubectl

```shell
wget http://storage.googleapis.com/kubernetes-helm/helm-v2.3.0-linux-amd64.tar.gz
tar -zxvf helm-v2.3.0-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/helm
# test Helm
helm help
```
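To avoid picking up a newer Helm by accident, the download can be pinned to a version. A sketch that wraps the same wget/tar/mv steps shown above; helm_tarball_url and install_helm are hypothetical helper names, and the URL layout is the one used on this page:

```shell
# Hypothetical helpers: install a pinned Helm client (default 2.3.0, as advised
# above) using the same download location and wget/tar/mv steps as above.
helm_tarball_url() {
    local version=${1:-2.3.0}
    echo "http://storage.googleapis.com/kubernetes-helm/helm-v${version}-linux-amd64.tar.gz"
}

install_helm() {
    local version=${1:-2.3.0} tmp
    tmp=$(mktemp -d) || return 1
    wget -q -O "$tmp/helm.tar.gz" "$(helm_tarball_url "$version")" || return 1
    tar -zxf "$tmp/helm.tar.gz" -C "$tmp" || return 1
    mv "$tmp/linux-amd64/helm" /usr/local/bin/helm || return 1
    rm -rf "$tmp"
}
```

Run install_helm as root, then helm help as above.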

Undercloud done - move to ONAP

Clone oom (scp your onap_rsa private key first, or clone anonymously; ideally, get a full Gerrit account and join the community).

See the ssh/http/https access links at:

https://gerrit.onap.org/r/#/admin/projects/oom

```shell
# anonymous http
# 1.0 branch
git clone -b release-1.0.0 http://gerrit.onap.org/r/oom
# or the 1.1/R1 master branch
git clone http://gerrit.onap.org/r/oom
# or using your key
git clone -b release-1.0.0 ssh://michaelobrien@gerrit.onap.org:29418/oom
```

Or use https (substitute your Gerrit user and HTTP password):

```shell
git clone -b release-1.0.0 https://YOUR_USER:YOUR_HTTP_PASSWORD@gerrit.onap.org/r/oom
```

Wait until all the hosts show green in Rancher, then run the createConfig/createAll scripts, which wrap all the kubectl commands.


Source the setenv.bash script in /oom/kubernetes/oneclick/ (new since 20170817):

```shell
source setenv.bash
```

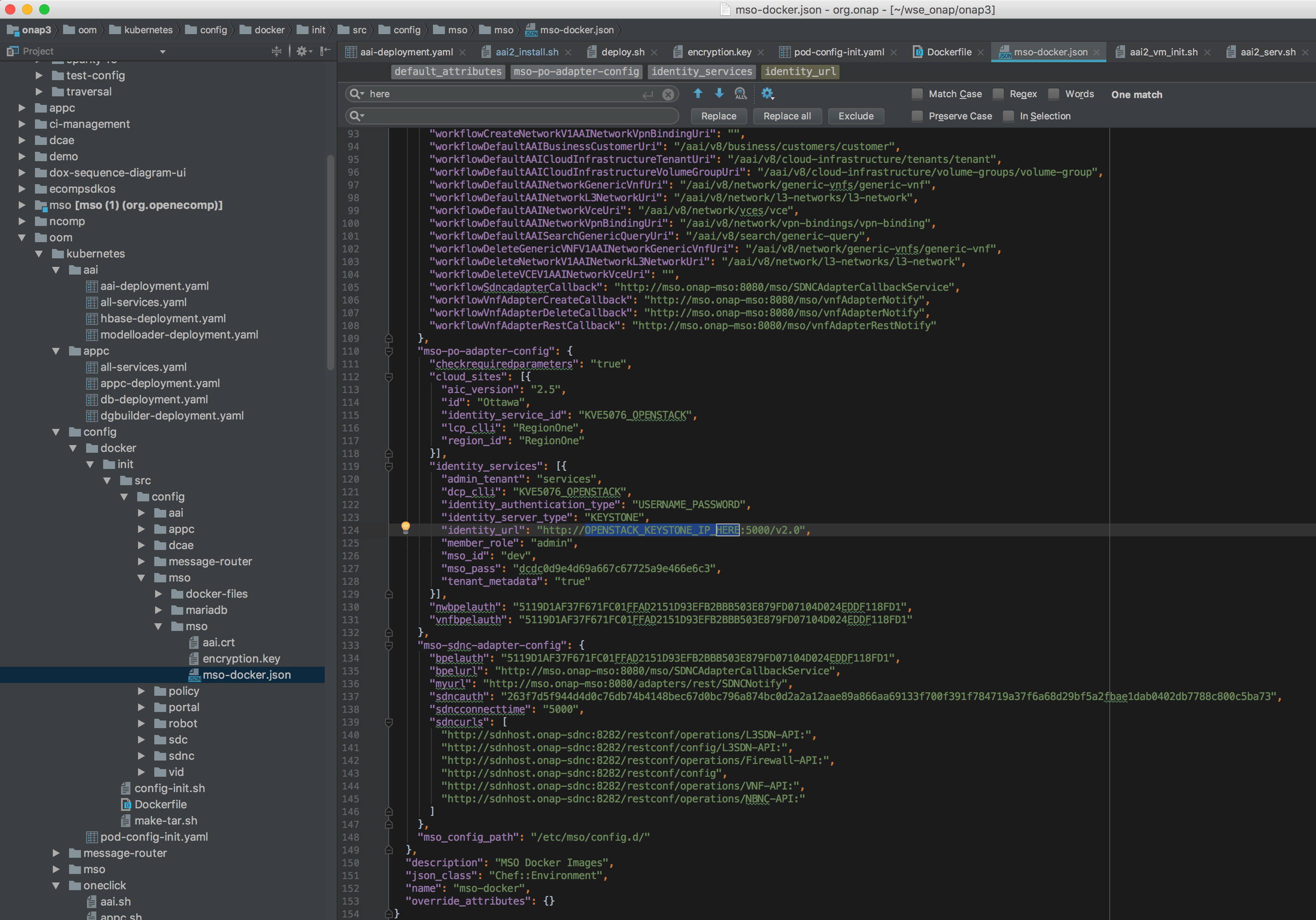

Use the sample config, or populate your OpenStack parameters as described below.

(Only if you are planning on closed-loop) Before running createConfig.sh (see below), make sure your OpenStack config is set up correctly so that you can deploy, for example, the vFirewall VMs:

```shell
vi oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json
```

For example, replace OPENSTACK_KEYSTONE_IP_HERE in:

```json
"identity_services": [{"identity_url": "http://OPENSTACK_KEYSTONE_IP_HERE:5000/v2.0",
```

Run the one-time config pod, which mounts the volume /dockerdata/ contained in the config-init pod. This mount is required for all other ONAP pods to function.

Note: the pod will stop after NFS creation; this is normal.

```shell
cd oom/kubernetes/config
# edit or copy the config for MSO data
vi onap-parameters.yaml
# or
cp onap-parameters-sample.yaml onap-parameters.yaml
./createConfig.sh -n onap
```

```console
**** Creating configuration for ONAP instance: onap
namespace "onap" created
pod "config-init" created
**** Done ****
```

Wait for the config-init pod to be gone (around 60 sec) before trying to bring up a component or all of ONAP; see https://wiki.onap.org/display/DW/ONAP+on+Kubernetes#ONAPonKubernetes-Waitingforconfig-initcontainertofinish-20sec

```console
root@ip-172-31-93-122:~/oom_20170908/oom/kubernetes/config# kubectl get pods --all-namespaces -a
onap config 0/1 Completed 0 1m
```

Note: with the -a option the config container shows up with its status; without the -a flag it is not listed.

Note: use only the hard-coded "onap" namespace prefix, as URLs in the config pod are set as follows: "workflowSdncadapterCallback": "http://mso.onap-mso:8080/mso/SDNCAdapterCallbackService"

Don't run all the pods unless you have at least 40G (without DCAE) or 50G allocated. On a laptop/VM with 16G you can only run enough pods to fit in around 11G.

Ignore errors introduced around 20170816; these are non-blocking and will allow the create to proceed.


```shell
cd ../oneclick
vi createAll.bash
# -a takes one component at a time, e.g. robot, appc or aai
./createAll.bash -n onap -a robot
```

This brings up a single service at a time.
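To bring up several components one after another, the single-component command can be looped. A sketch, assuming it is run from oom/kubernetes/oneclick; create_components and the CREATE_ALL override are hypothetical, not part of OOM:

```shell
# Hypothetical helper: bring ONAP components up one at a time with createAll.bash
# (run from oom/kubernetes/oneclick). CREATE_ALL may name another command, e.g. a
# stub function that only prints its arguments, for a dry run.
# Stops at the first component whose create fails.
create_components() {
    local namespace=$1 app
    shift
    for app in "$@"; do
        # Deliberately unquoted so CREATE_ALL can name a command or a function.
        ${CREATE_ALL:-./createAll.bash} -n "$namespace" -a "$app" || {
            echo "createAll failed for $app" >&2
            return 1
        }
    done
}
```

For example: create_components onap robot appc aai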

Only if you have more than 50G, run the following (all namespaces):

```shell
./createAll.bash -n onap
```

ONAP is OK if every pod shows 1/1 in the following:

```shell
kubectl get pods --all-namespaces
```
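Rather than scanning the listing by eye, the READY column can be checked mechanically. A sketch, assuming the NAMESPACE NAME READY STATUS RESTARTS AGE column layout shown on this page; not_ready_pods is a hypothetical helper:

```shell
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on stdin and
# print every pod whose READY column is not n/n, skipping Completed pods such as
# the one-time config-init. Exit status 1 if any pod is not ready.
not_ready_pods() {
    awk '
        $1 == "NAMESPACE" { next }            # header row
        $4 == "Completed" { next }            # finished one-shot pods
        {
            split($3, ready, "/")             # READY column, e.g. 0/1
            if (ready[1] != ready[2]) { print $1, $2, $3, $4; bad = 1 }
        }
        END { exit bad }'
}
```

Usage: kubectl get pods --all-namespaces -a | not_ready_pods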

Run the ONAP portal via the instructions at Running ONAP using the vnc-portal.

Wait until the containers are all up

Run the initial healthcheck directly on the host:

```shell
cd /dockerdata-nfs/onap/robot
./ete-docker.sh health
```

Check the AAI endpoints:

```console
root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# kubectl -n onap-aai exec -it aai-service-3321436576-2snd6 bash
root@aai-service-3321436576-2snd6:/# ps -ef
UID PID PPID C STIME TTY TIME CMD
root 1 0 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-systemd-
root 7 1 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-master
root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# curl https://127.0.0.1:30233/aai/v11/service-design-and-creation/models
curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none
```

List of Containers

The total number of pods is 48 (without DCAE).

Docker container list - source of truth: https://git.onap.org/integration/tree/packaging/docker/docker-images.csv

Get the health via:

```console
root@ip-172-31-93-160:~# kubectl get pods --all-namespaces -a | grep 1/1
kube-system heapster-4285517626-7wdct 1/1 Running 0 2d
kube-system kubernetes-dashboard-716739405-xxn5k 1/1 Running 0 2d
kube-system monitoring-grafana-3552275057-hvfw8 1/1 Running 0 2d
kube-system monitoring-influxdb-4110454889-7s5fj 1/1 Running 0 2d
kube-system tiller-deploy-737598192-jpggg 1/1 Running 0 2d
onap-aai aai-dmaap-522748218-5rw0v 1/1 Running 0 1d
onap-aai aai-kafka-2485280328-6264m 1/1 Running 0 1d
onap-aai aai-resources-3302599602-fn4xm 1/1 Running 0 1d
onap-aai aai-service-3321436576-2snd6 1/1 Running 0 1d
onap-aai aai-traversal-2747464563-3c8m7 1/1 Running 0 1d
onap-aai aai-zookeeper-1010977228-l2h3h 1/1 Running 0 1d
onap-aai data-router-1397019010-t60wm 1/1 Running 0 1d
onap-aai elasticsearch-2660384851-k4txd 1/1 Running 0 1d
onap-aai gremlin-1786175088-m39jb 1/1 Running 0 1d
onap-aai hbase-3880914143-vp8zk 1/1 Running 0 1d
onap-aai model-loader-service-226363973-wx6s3 1/1 Running 0 1d
onap-aai search-data-service-1212351515-q4k68 1/1 Running 0 1d
onap-aai sparky-be-2088640323-h2pbx 1/1 Running 0 1d
onap-appc appc-1972362106-4zqh8 1/1 Running 0 1d
onap-appc appc-dbhost-2280647936-s041d 1/1 Running 0 1d
onap-appc appc-dgbuilder-2616852186-g9sng 1/1 Running 0 1d
onap-message-router dmaap-3565545912-w5lp4 1/1 Running 0 1d
onap-message-router global-kafka-701218468-091rt 1/1 Running 0 1d
onap-message-router zookeeper-555686225-vdp8w 1/1 Running 0 1d
onap-mso mariadb-2814112212-zs7lk 1/1 Running 0 1d
onap-mso mso-2505152907-xdhmb 1/1 Running 0 1d
onap-policy brmsgw-362208961-ks6jb 1/1 Running 0 1d
onap-policy drools-3066421234-rbpr9 1/1 Running 0 1d
onap-policy mariadb-2520934092-3jcw3 1/1 Running 0 1d
onap-policy nexus-3248078429-4k29f 1/1 Running 0 1d
onap-policy pap-4199568361-p3h0p 1/1 Running 0 1d
onap-policy pdp-785329082-3c8m5 1/1 Running 0 1d
onap-policy pypdp-3381312488-q2z8t 1/1 Running 0 1d
onap-portal portalapps-2799319019-00qhb 1/1 Running 0 1d
onap-portal portaldb-1564561994-50mv0 1/1 Running 0 1d
onap-portal portalwidgets-1728801515-r825g 1/1 Running 0 1d
onap-portal vnc-portal-700404418-r61hm 1/1 Running 0 1d
onap-robot robot-349535534-lqsvp 1/1 Running 0 1d
onap-sdc sdc-be-1839962017-n3hx3 1/1 Running 0 1d
onap-sdc sdc-cs-2640808243-tc9ck 1/1 Running 0 1d
onap-sdc sdc-es-227943957-f6nfv 1/1 Running 0 1d
onap-sdc sdc-fe-3467675014-v8jxm 1/1 Running 0 1d
onap-sdc sdc-kb-1998598941-57nj1 1/1 Running 0 1d
onap-sdnc sdnc-250717546-xmrmw 1/1 Running 0 1d
onap-sdnc sdnc-dbhost-3807967487-tdr91 1/1 Running 0 1d
onap-sdnc sdnc-dgbuilder-3446959187-dn07m 1/1 Running 0 1d
onap-sdnc sdnc-portal-4253352894-hx9v8 1/1 Running 0 1d
onap-vid vid-mariadb-2932072366-n5qw1 1/1 Running 0 1d
onap-vid vid-server-377438368-kn6x4 1/1 Running 0 1d
```

Busted containers (0/1 filter; ignore config-init, it is a one-time container):

```console
kubectl get pods --all-namespaces -a | grep 0/1
onap config-init 0/1 Completed 0 1d
```

...

NAMESPACE

master:20170715

...

The mount "config-init-root" is in the following location

(user configurable VF parameter file below)

/dockerdata-nfs/onapdemo/mso/mso/mso-docker.json

...

aai-dmaap-522748218-5rw0v

...

aai-kafka-2485280328-6264m

...

aai-resources-3302599602-fn4xm

...

/opt/aai/logroot/AAI-RES

...

aai-service-3321436576-2snd6

...

aai-traversal-2747464563-3c8m7

...

/opt/aai/logroot/AAI-GQ

...

aai-zookeeper-1010977228-l2h3h

...

data-router-1397019010-t60wm

...

elasticsearch-2660384851-k4txd

...

gremlin-1786175088-m39jb

...

hbase-3880914143-vp8z

...

model-loader-service-226363973-wx6s3

...

search-data-service-1212351515-q4k6

...

sparky-be-2088640323-h2pbx

...

wurstmeister/zookeeper:latest...

dockerfiles_kafka:latest...

Note: currently there are no DCAE containers running yet; we are missing 6 yaml files (1 for the controller and 5 for the collector, staging and 3 CDAP pods). Therefore DMaaP, the VES collectors and APPC actions resulting from policy actions (closed loop) will not function yet.

In review: https://gerrit.onap.org/r/#/c/7287/


...

attos/dmaap:latest...

not required

dcae-controller

...

Bring onap-msb up before the rest of ONAP.

...

1.0.0 only

...

portalwidgets-1728801515-r825g

...

/dockerdata-nfs/onap/sdc/logs/SDC/SDC-BE

${log.home}/${OPENECOMP-component-name}/${OPENECOMP-subcomponent-name}/transaction.log.%i

./var/lib/jetty/logs/SDC/SDC-BE/metrics.log

./var/lib/jetty/logs/SDC/SDC-BE/audit.log

./var/lib/jetty/logs/SDC/SDC-BE/debug_by_package.log

./var/lib/jetty/logs/SDC/SDC-BE/debug.log

./var/lib/jetty/logs/SDC/SDC-BE/transaction.log

./var/lib/jetty/logs/SDC/SDC-BE/error.log

./var/lib/jetty/logs/importNormativeAll.log

./var/lib/jetty/logs/ASDC/ASDC-FE/audit.log

./var/lib/jetty/logs/ASDC/ASDC-FE/debug.log

./var/lib/jetty/logs/ASDC/ASDC-FE/transaction.log

./var/lib/jetty/logs/ASDC/ASDC-FE/error.log

./var/lib/jetty/logs/2017_09_06.stderrout.log

...

./var/lib/jetty/logs/SDC/SDC-BE/metrics.log

./var/lib/jetty/logs/SDC/SDC-BE/audit.log

./var/lib/jetty/logs/SDC/SDC-BE/debug_by_package.log

./var/lib/jetty/logs/SDC/SDC-BE/debug.log

./var/lib/jetty/logs/SDC/SDC-BE/transaction.log

./var/lib/jetty/logs/SDC/SDC-BE/error.log

./var/lib/jetty/logs/importNormativeAll.log

./var/lib/jetty/logs/2017_09_07.stderrout.log

./var/lib/jetty/logs/ASDC/ASDC-FE/audit.log

./var/lib/jetty/logs/ASDC/ASDC-FE/debug.log

./var/lib/jetty/logs/ASDC/ASDC-FE/transaction.log

./var/lib/jetty/logs/ASDC/ASDC-FE/error.log

./var/lib/jetty/logs/2017_09_06.stderrout.log

...

./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/journal/000006.log

./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/cache/1504712225751.log

./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/cache/1504712002358.log

./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/tmp/xql.log

./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/data/log/karaf.log

./opt/opendaylight/distribution-karaf-0.5.1-Boron-SR1/taglist.log

./var/log/dpkg.log

./var/log/apt/history.log

./var/log/apt/term.log

./var/log/fontconfig.log

./var/log/alternatives.log

./var/log/bootstrap.log

...

./opt/openecomp/sdnc/admportal/server/npm-debug.log

./var/log/dpkg.log

./var/log/apt/history.log

./var/log/apt/term.log

./var/log/fontconfig.log

./var/log/alternatives.log

./var/log/bootstrap.log

...

Fix MSO mso-docker.json

Before running pod-config-init.yaml, make sure your OpenStack config is set up correctly so that you can deploy, for example, the vFirewall VMs:

```shell
vi oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json
```

...

```json
"mso-po-adapter-config": {
  "checkrequiredparameters": "true",
  "cloud_sites": [{
    "aic_version": "2.5",
    "id": "Ottawa",
    "identity_service_id": "KVE5076_OPENSTACK",
    "lcp_clli": "RegionOne",
    "region_id": "RegionOne"
  }],
  "identity_services": [{
    "admin_tenant": "services",
    "dcp_clli": "KVE5076_OPENSTACK",
    "identity_authentication_type": "USERNAME_PASSWORD",
    "identity_server_type": "KEYSTONE",
    "identity_url": "http://OPENSTACK_KEYSTONE_IP_HERE:5000/v2.0",
    "member_role": "admin",
    "mso_id": "dev",
    "mso_pass": "dcdc0d9e4d69a667c67725a9e466e6c3",
    "tenant_metadata": "true"
  }],
```

...

Rackspace example (lcp_clli can be "DFW" or "IAD"):

```json
"mso-po-adapter-config": {
  "checkrequiredparameters": "true",
  "cloud_sites": [{
    "aic_version": "2.5",
    "id": "Dallas",
    "identity_service_id": "RAX_KEYSTONE",
    "lcp_clli": "DFW",
    "region_id": "DFW"
  }],
  "identity_services": [{
    "admin_tenant": "service",
    "dcp_clli": "RAX_KEYSTONE",
    "identity_authentication_type": "RACKSPACE_APIKEY",
    "identity_server_type": "KEYSTONE",
    "identity_url": "https://identity.api.rackspacecloud.com/v2.0",
    "member_role": "admin",
    "mso_id": "9998888",
    "mso_pass": "YOUR_API_KEY",
    "tenant_metadata": "true"
  }],
```
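The placeholder edits above can be scripted and checked before running the config pod. A sketch, assuming GNU/POSIX sed and grep; set_keystone_ip and check_mso_placeholders are hypothetical helpers, and they only look for the two placeholders shown in these samples (they do not validate the JSON itself):

```shell
# Hypothetical helper: replace the OPENSTACK_KEYSTONE_IP_HERE placeholder with
# your Keystone address, keeping a .bak copy of the original file.
set_keystone_ip() {
    local file=$1 ip=$2
    cp "$file" "$file.bak" || return 1
    sed "s|OPENSTACK_KEYSTONE_IP_HERE|$ip|g" "$file.bak" > "$file"
}

# Hypothetical helper: fail (and list the offending lines) if any of the sample
# placeholders shown above are still present.
check_mso_placeholders() {
    local file=$1
    if grep -nE 'OPENSTACK_KEYSTONE_IP_HERE|YOUR_API_KEY' "$file"; then
        echo "placeholders remain in $file" >&2
        return 1
    fi
    echo "no placeholders left in $file"
}
```

For example, set_keystone_ip oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json 10.0.0.20 (placeholder IP), then run check_mso_placeholders on the same file.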

Kubernetes DevOps

...

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Deleting All Containers

Delete all the containers (and services)

```shell
./deleteAll.bash -n onap
```

Delete/Rerun config-init container for /dockerdata-nfs refresh

Delete the config-init container and its generated /dockerdata-nfs share

There may be cases where new configuration content needs to be deployed after a pull of a new version of ONAP.

For example, after a pull brings in files like the following (20170902):

...

```console
root@ip-172-31-93-160:~/oom/kubernetes/oneclick# git pull
Resolving deltas: 100% (135/135), completed with 24 local objects.
From http://gerrit.onap.org/r/oom
bf928c5..da59ee4 master -> origin/master
Updating bf928c5..da59ee4
kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/metadata.rb | 7 +
kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/recipes/aai-resources-aai-keystore.rb | 8 +
kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/CHANGELOG.md | 2 +-
kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/README.md | 4 +-
```

The following steps were worked out with Zoran:

```shell
# check for the pod
kubectl get pods --all-namespaces -a
# delete all the pods/services (run from oom/kubernetes/oneclick)
./deleteAll.bash -n onap
# delete the fs
rm -rf /dockerdata-nfs
# at this point the environment is empty
# pull the repo
git pull
# rerun the config (createConfig.sh lives in oom/kubernetes/config)
cd ../config
./createConfig.sh -n onap
```

If you get an error saying that release onap-config already exists, run: helm del --purge onap-config

Example (20170907):

```console
root@kube0:~/oom/kubernetes/oneclick# rm -rf /dockerdata-nfs/
root@kube0:~/oom/kubernetes/oneclick# cd ../config/
root@kube0:~/oom/kubernetes/config# ./createConfig.sh -n onap
**** Creating configuration for ONAP instance: onap
Error from server (AlreadyExists): namespaces "onap" already exists
Error: a release named "onap-config" already exists.
Please run: helm ls --all "onap-config"; helm del --help
**** Done ****
root@kube0:~/oom/kubernetes/config# helm del --purge onap-config
release "onap-config" deleted
```

Then rerun createAll.bash -n onap.
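The "release already exists" case can be handled before it occurs. A sketch, assuming Helm 2 (where helm ls --all prints a header row followed by one release per line, name first, and helm del --purge is available); purge_release_if_present and the HELM override are hypothetical:

```shell
# Hypothetical helper: delete a leftover Helm release (default onap-config) before
# rerunning createConfig.sh, to avoid the "already exists" error shown above.
# HELM may point at another command (e.g. a stub) for testing.
purge_release_if_present() {
    local release=${1:-onap-config} helm_cmd=${HELM:-helm}
    # Skip the header row, then match the release name in the first column.
    if $helm_cmd ls --all | awk -v r="$release" 'NR > 1 && $1 == r { found = 1 } END { exit !found }'; then
        $helm_cmd del --purge "$release"
    else
        echo "release $release not found"
    fi
}
```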

Waiting for config-init container to finish - 20sec

...

```console
root@ip-172-31-93-160:~/oom/kubernetes/config# kubectl get pods --all-namespaces -a
NAMESPACE NAME READY STATUS RESTARTS AGE
onap config-init 0/1 ContainerCreating 0 6s
root@ip-172-31-93-160:~/oom/kubernetes/config# kubectl get pods --all-namespaces -a
NAMESPACE NAME READY STATUS RESTARTS AGE
onap config-init 1/1 Running 0 9s
root@ip-172-31-93-160:~/oom/kubernetes/config# kubectl get pods --all-namespaces -a
NAMESPACE NAME READY STATUS RESTARTS AGE
onap config-init 0/1 Completed 0 14s
```
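The polling shown above can be scripted. A sketch, assuming a kubectl old enough to accept get pods -a, as used throughout this page; wait_for_config_init and the KUBECTL override are hypothetical, and the 120 s timeout and 5 s interval are illustrative defaults:

```shell
# Hypothetical helper: poll `kubectl get pods --all-namespaces -a` until the
# config-init pod reports Completed, or give up after a timeout (seconds).
# KUBECTL may point at another command (e.g. a stub) for testing.
wait_for_config_init() {
    local timeout=${1:-120} interval=${2:-5} waited=0 status
    while [ "$waited" -lt "$timeout" ]; do
        status=$(${KUBECTL:-kubectl} get pods --all-namespaces -a |
            awk '$2 == "config-init" { print $4 }')
        echo "config-init status: ${status:-not found}"
        if [ "$status" = "Completed" ]; then
            return 0
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    echo "config-init did not complete within ${timeout}s" >&2
    return 1
}
```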

Container Endpoint access

Check the services view in the Kubernetes API under robot:

robot.onap-robot:88 TCP

robot.onap-robot:30209 TCP

...

```console
kubectl get services --all-namespaces -o wide
onap-vid vid-mariadb None <none> 3306/TCP 1h app=vid-mariadb
onap-vid vid-server 10.43.14.244 <nodes> 8080:30200/TCP 1h app=vid-server
```

...
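The external port of a service can be read from the PORT(S) column above, where "8080:30200/TCP" maps container port 8080 to NodePort 30200. A sketch, assuming the column layout shown on this page; node_port is a hypothetical helper:

```shell
# Hypothetical helper: read `kubectl get services --all-namespaces -o wide` on
# stdin and print the NodePort mapped to a service's port, e.g. 30200 for
# vid-server port 8080. Prints nothing if the port has no NodePort.
node_port() {
    local service=$1 port=$2
    awk -v s="$service" -v p="$port" '
        $2 == s {
            n = split($5, maps, ",")            # PORT(S) column may list several ports
            for (i = 1; i <= n; i++) {
                split(maps[i], parts, "[:/]")   # 8080:30200/TCP -> 8080, 30200, TCP
                if (parts[1] == p && parts[3] != "") print parts[2]
            }
        }'
}
```

For example, kubectl get services --all-namespaces -o wide | node_port vnc-portal 6080 should print 30211 on a setup like the one shown on this page.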

```console
kubectl --namespace onap-vid logs -f vid-server-248645937-8tt6p
16-Jul-2017 02:46:48.707 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 22520 ms
kubectl --namespace onap-portal logs portalapps-2799319019-22mzl -f
root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE
onap-robot robot-44708506-dgv8j 1/1 Running 0 36m 10.42.240.80 obriensystemskub0
root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-robot logs -f robot-44708506-dgv8j
2017-07-16 01:55:54: (log.c.164) server started
```

Robot Logs

Yogini and I needed the robot logs in OOM Kubernetes; they were already there, behind robot:robot auth:

http://test.onap.info:30209/logs/demo/InitDistribution/report.html

For example, after running:

```console
root@ip-172-31-57-55:/dockerdata-nfs/onap/robot# ./demo-k8s.sh distribute
```

find the path to the logs, for example by using:

```console
root@ip-172-31-57-55:/dockerdata-nfs/onap/robot# kubectl --namespace onap-robot exec -it robot-4251390084-lmdbb bash
root@robot-4251390084-lmdbb:/# ls /var/opt/OpenECOMP_ETE/html/logs/demo/InitD
InitDemo/ InitDistribution/
```

The path is then:

http://test.onap.info:30209/logs/demo/InitDemo/log.html#s1-s1-s1-s1-t1

SSH into ONAP containers

Normally I would do this via https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

...

Get the pod name via:

```shell
kubectl get pods --all-namespaces -o wide
```

Bash into the pod via:

```shell
kubectl -n onap-mso exec -it mso-1648770403-8hwcf /bin/bash
```

...
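Pod names carry generated suffixes that change on every redeploy, so hard-coding mso-1648770403-8hwcf only works once. A sketch that looks the current name up by namespace and deployment name, assuming the DEPLOYMENT-HASH-SUFFIX naming seen in the listings on this page; pod_name is a hypothetical helper:

```shell
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on stdin and
# print the first pod in the namespace whose name is DEPLOYMENT-HASH-SUFFIX.
pod_name() {
    local namespace=$1 deployment=$2
    awk -v ns="$namespace" -v dep="$deployment" '
        # Require exactly two generated segments after the deployment name, so
        # that "sdnc" does not also match "sdnc-dbhost-..."
        $1 == ns && $2 ~ ("^" dep "-[a-z0-9]+-[a-z0-9]+$") { print $2; exit }'
}
```

For example: kubectl -n onap-mso exec -it "$(kubectl get pods --all-namespaces | pod_name onap-mso mso)" /bin/bash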

Trying to get an authorization file into the robot pod

...

```console
root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/home/ubuntu
```

Does the above work? Copying directly over /etc/lighttpd/authorization fails:

```console
root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/etc/lighttpd/authorization
tar: authorization: Cannot open: File exists
tar: Exiting with failure status due to previous errors
```

...

Running ONAP using the vnc-portal

See Installing and Running the ONAP Demos, or run the vnc-portal container to access ONAP using the traditional port mappings. See the following recorded video by Mike Elliot of the OOM team for an audio-visual reference.

Check the vnc-portal port (it is always 30211) via:

```console
obrienbiometrics:onap michaelobrien$ ssh ubuntu@dev.onap.info
ubuntu@ip-172-31-93-122:~$ sudo su -
root@ip-172-31-93-122:~# kubectl get services --all-namespaces -o wide
NAMESPACE NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
onap-portal vnc-portal 10.43.78.204 <nodes> 6080:30211/TCP,5900:30212/TCP 4d app=vnc-portal
```

Launch the vnc-portal in a browser.

The password is "password".

Open Firefox inside the VNC VM and launch the portal normally:

http://portal.api.simpledemo.openecomp.org:8989/ECOMPPORTAL/login.htm

(20170906) Before running SDC, fix /etc/hosts (thanks to Yogini for catching this): edit your /etc/hosts and change sdc.ui to sdc.api.
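The sdc.ui to sdc.api change can be scripted. A sketch, assuming it is run as root against the /etc/hosts being fixed; fix_sdc_hosts is a hypothetical helper, and because the exact host entries are not listed here it simply rewrites every sdc.ui occurrence, so review the file afterwards:

```shell
# Hypothetical helper: rewrite "sdc.ui" to "sdc.api" in a hosts file as described
# above, keeping a .bak copy. Defaults to /etc/hosts.
fix_sdc_hosts() {
    local hosts=${1:-/etc/hosts}
    cp "$hosts" "$hosts.bak" || return 1
    sed 's/sdc\.ui/sdc.api/g' "$hosts.bak" > "$hosts"
}
```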


...

...

...

Continue with the normal ONAP demo flow at (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

Troubleshooting

Rancher fails to restart on server reboot

Having issues after a reboot of a colocated server/agent

Installing Clean Ubuntu

...

```shell
apt-get install ssh
apt-get install ubuntu-desktop
```

DNS resolution

ignore - not relevant

Search Line limits were exceeded, some dns names have been omitted, the applied search line is: default.svc.cluster.local svc.cluster.local cluster.local kubelet.kubernetes.rancher.internal kubernetes.rancher.internal rancher.internal

https://github.com/rancher/rancher/issues/9303

Questions

https://lists.onap.org/pipermail/onap-discuss/2017-July/002084.html

Links

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Please help out our OPNFV friends

https://wiki.opnfv.org/pages/viewpage.action?pageId=12389095

Of interest

https://github.com/cncf/cross-cloud/

Reference Reviews

https://gerrit.onap.org/r/#/c/6179/

https://gerrit.onap.org/r/#/c/9849/

...