
The official documentation for installing ONAP with OOM / Kubernetes is located in Read the Docs here (TBD). The supported versions of Kubernetes are as follows:

...

If you are looking for instructions on how to create a Kubernetes environment (a one-time activity), follow one of these guides:

- Setting up Kubernetes with Rancher

- Setting up Kubernetes with Kubeadm

- Setting up Kubernetes with Cloudify

There are many public cloud systems with native Kubernetes support such as AWS, Azure, and Google.

The specific versions of the components are as follows:

...

Quickstart Guide

Install Kubernetes

Advanced Kubernetes Installations


...

Warning: Draft Content

This wiki is under construction: content here may be incompletely specified or missing.

TODO: get DCAE yamls working, fix health tracking issues for healing

There are multiple ways to provision or connect to a Kubernetes cluster - Kubeadm, Cloudify and Rancher - please help us document others in the sub-pages of this wiki space.

Official Documentation: https://onap.readthedocs.io/en/latest/submodules/oom.git/docs/OOM%20User%20Guide/oom_user_guide.html?highlight=oom

Integration: https://wiki.opnfv.org/pages/viewpage.action?pageId=12389095

The OOM (ONAP Operations Manager) project has pushed Kubernetes-based deployment code to the oom repository, based on ONAP 1.1. This page details getting ONAP running (specifically the vFirewall demo) on Kubernetes for various virtual and native environments. It assumes you have access to any type of bare metal or VM running a clean Ubuntu 16.04 image, whether on Rackspace, OpenStack, your laptop or an AWS spot EC2 instance.

Architectural details of the OOM project are described in the OOM User Guide.

See also Alexis' page, ONAP on Kubernetes on Rancher in OpenStack,

and the SDNC clustering work, SDN-C Clustering on Kubernetes.

...

Status

| Server | URL | Notes |
|---|---|---|
| Live Amsterdam server | http://amsterdam.onap.info:8880 | Login to Rancher/Kubernetes only in the last 45 min of the hour. Use the system only in the last 10 min of the hour. |
| Jenkins server | http://jenkins.onap.info/job/oom-cd/ | View deployment status, deployment (pod up status) |
| Kibana server | | Query "message" logs or view the dashboard |

Undercloud Installation

Requirements

Metric

Min

Full System

Notes

vCPU

8

64 recommended

(16/32 ok)

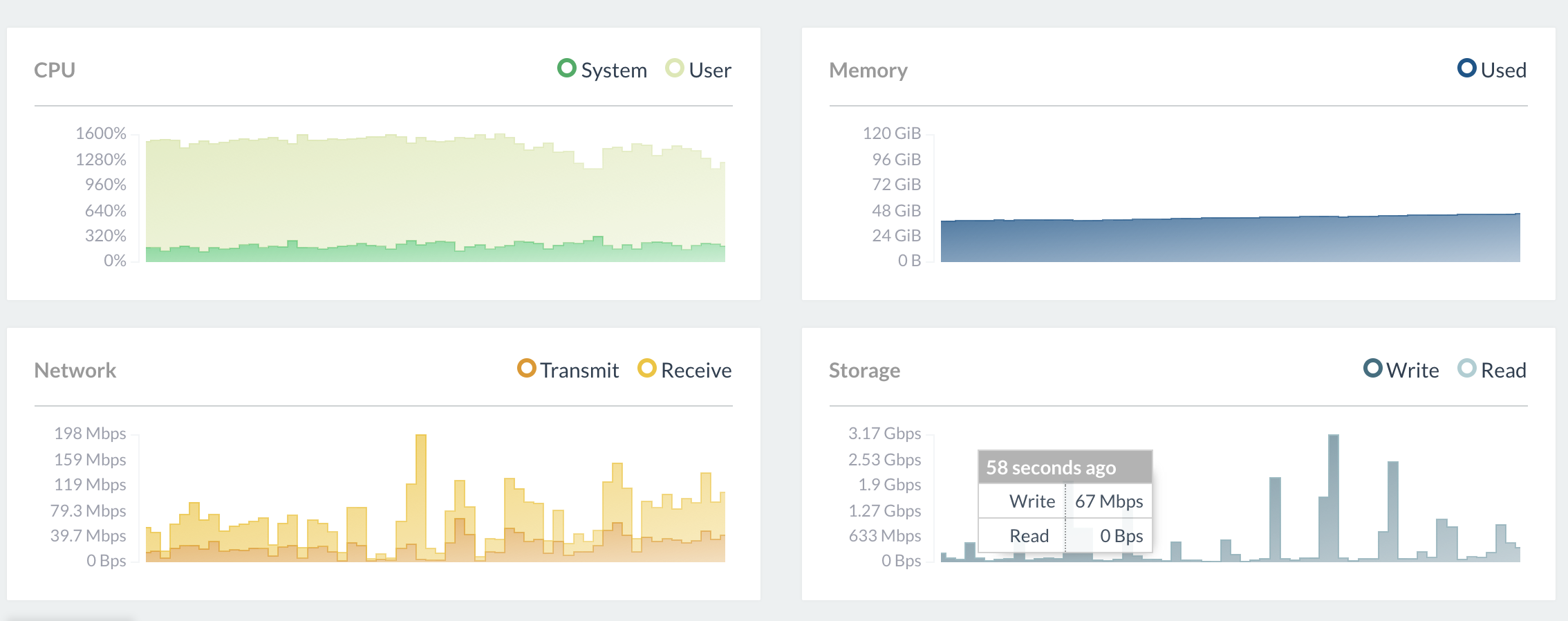

The full ONAP system of 85+ containers is CPU and Network bound on startup - therefore if you pre pull the docker images to remove the network slowdown - vCPU utilization will peak at 52 cores on a 64 core system and bring the system up in under 4 min. On a system with 8/16 cores you will see the normal 13/7 min startup time as we throttle 52 to 8/16.

RAM

7g (a couple components)

55g (75 containers)

Note: you need at least 53g RAM (3g is for Rancher/Kubernetes itself (the VFs exist on a separate openstack).

53 to start and 57 after running the system for a day

HD

60g

120g+
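As a rough pre-flight check against the RAM figures above, the following sketch compares a host's total memory with a minimum. The function name `has_min_ram` is hypothetical, and reading the page's "g" as GiB is an assumption; the 53 in the usage comment is the minimum quoted in the Notes column.

```bash
# Sketch: succeed (exit 0) when a MemTotal value in kB, as reported by
# /proc/meminfo, is at least the given minimum in GiB.
# has_min_ram is a hypothetical helper name, not part of OOM.
has_min_ram() {
    mem_kb=$1
    min_gib=$2
    [ "$mem_kb" -ge $((min_gib * 1024 * 1024)) ]
}

# Usage on a Linux host (not executed here):
#   mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
#   has_min_ram "$mem_kb" 53 || echo "less than 53G RAM: run a reduced app set"
```

A host that fails the check can still run a reduced set of components, as described in the minimum installation section further down.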

Software

Beijing/Master

Rancher v1.6.14

Kubernetes server 1.8.5, Kubectl client 1.9.2

Docker 17.03.2-ce

HELM 2.8.0 on openlab, Rackspace and AWS - as of master 20180124

Jira: OOM-535, OOM-406, OOM-457, OOM-530, OOM-330

Jira: OOM-441

Amsterdam

Rancher 1.6.10 (kubectl 1.7.7), Helm 2.3, Docker 1.12, Kubectl 1.7.7 (test downgrade from 1.8.6)

We need a Kubernetes installation, either a base installation or one with a thin API wrapper like Rancher.

There are several options. The current focus is Rancher with Helm on Ubuntu 16.04, as a thin wrapper on Kubernetes. Alternative platforms are covered in the subpage ONAP on Kubernetes (Alternatives).

OS

VIM

Description

Status

Nodes

Links

Ubuntu 16.04.2

!Redhat

Bare Metal

VMWare

Recommended approach

1-n

http://rancher.com/docs/rancher/v1.6/en/quick-start-guide/

ONAP Installation

Automated Installation

OOM Automated Installation Videos


Latest 20171206 AWS install from a clean Ubuntu 16.04 VM, using the rancher setup script below and the cd.sh script to bring up OOM. After the 20 min prepull of dockers, OOM comes up fully with only the known aaf issue (84 of 85 containers). All healthchecks pass except DCAE at 29/30; portal tested and an AAI cloud-region put.


Prerequisites

Register your domain, get an EIP, and create a security group with ports 0-65535 open to the 0.0.0.0/0 CIDR; associate both with your VM

Install Ubuntu 16.04 on a clean 64G VM

Run as root or ubuntu (adjust sudo where appropriate, and log out and back in so the ubuntu user picks up docker)

See the AWS video on the page ONAP on Kubernetes on Amazon EC2, section AWS CLI EC2 Creation and Deployment

SCP required files

...

obrienbiometrics:_artifacts michaelobrien$ scp * ubuntu@cd2.onap.info:~/

aai-cloud-region-put.json 100% 285 5.8KB/s 00:00

aaiapisimpledemoopenecomporg.cer 100% 1662 37.1KB/s 00:00

cd.sh 100% 9316 201.2KB/s 00:00

onap-parameters.yaml 100% 643 14.0KB/s 00:00

oom_rancher_setup_1.sh 100% 1234 26.9KB/s 00:00

Install Docker/Rancher/Helm/Kubectl

This step assumes you are running as root, not ubuntu.

If you run the attached rancher setup script as ubuntu rather than root, log out and back in so docker picks up the user

Install Rancher Client (manual for now)

Open http://<your_dns_name>:8880/ and follow the instructions in the manual quickstart section below (create k8s env, register host, run rancher client, copy token to ~/.kube/config)

Prepull docker images

The curl of the prepull script is incorporated in the cd.sh script below until the related Jira issue is resolved.

Install/Refresh OOM

You can run this step repeatedly.

Run the following (requires onap-parameters.yaml and the JSON body for the AAI cloud-region PUT, so robot init can run, on the file system beside the cd.sh script)

The ./deleteAll.bash inside cd.sh currently runs with the -y override for the related Jira issue.


...

./cd.sh -b <master|amsterdam>

https://github.com/obrienlabs/onap-root/blob/master/cd.sh

This is the same script that runs on our jenkins CD hourly job for master

http://jenkins.onap.info/job/oom-cd/

Amsterdam OOM + DCAE Heat installation

WIP as of 20180204; details are tracked in the related Jira issue.

Follow the page ONAP on Kubernetes on Rancher in OpenStack

The onap-parameters.yaml is significantly larger due to the incorporation of the DCAE controller variables that enable the bridge between ONAP in Kubernetes and DCAE in HEAT (cdap,...)

https://git.onap.org/oom/tree/kubernetes/config/onap-parameters-sample.yaml?h=amsterdam

Currently using this onap-parameters.yaml (keys/passwords obfuscated)

And the heat template (with an OOM VM added to the network). Everything except the DCAE controller and the OOM VM will be taken out, as all the other AAI, sdc, so... VMs are no longer required in HEAT; the REST calls get routed back to Kubernetes. The templates are only for reference against onap-parameters.yaml


...

All of DCAE is up via OOM (including the 7 CDAP nodes).

Issue: each tenant hits its floating IP allocation limit after 2.5 DCAE installs; we run out of IPs because they are not deleted.

Fix: delete all unassociated IPs before bringing up OOM/DCAE. We cannot mix cloudify blueprint orchestration with manual openstack deletion: once in a blueprint, we need to remove everything orchestrated on top of HEAT using the cloudify manager, or do as the integration team does and clean the tenant before a deployment.

After deleting all floating IPs and rerunning the OOM deployment:

Time: 35 min from heat side dcae-boot install - 55 min total from one-click OOM install

obrienbiometrics:lab_logging michaelobrien$ ssh ubuntu@10.12.6.124

Last login: Fri Feb 9 16:50:48 2018 from 10.12.25.197

ubuntu@onap-oom-obrien:~$ kubectl -n onap-dcaegen2 exec -it heat-bootstrap-4010086101-fd5p2 bash

root@heat-bootstrap:/# cd /opt/heat

root@heat-bootstrap:/opt/heat# source DCAE-openrc-v3.sh

root@heat-bootstrap:/opt/heat# openstack server list

| ID | Name | Status | Networks | Image | Flavor |
|---|---|---|---|---|---|
| 29990fcb-881f-457c-a386-aa32691d3beb | dcaepgvm00 | ACTIVE | oam_onap_3QKg=10.99.0.13, 10.12.6.144 | ubuntu-16-04-cloud-amd64 | m1.medium |
| 7b4b63f3-c436-41a8-96dd-665baa94a698 | dcaecdap01 | ACTIVE | oam_onap_3QKg=10.99.0.19, 10.12.5.219 | ubuntu-16-04-cloud-amd64 | m1.large |
| f4e6c499-8938-4e04-ab78-f0e753fe3cbb | dcaecdap00 | ACTIVE | oam_onap_3QKg=10.99.0.9, 10.12.6.69 | ubuntu-16-04-cloud-amd64 | m1.large |
| 60ccff1f-e7c3-4ab4-b749-96aef7ee0b8c | dcaecdap04 | ACTIVE | oam_onap_3QKg=10.99.0.16, 10.12.5.106 | ubuntu-16-04-cloud-amd64 | m1.large |
| df56d059-dc91-4122-a8de-d59ea14c5062 | dcaecdap05 | ACTIVE | oam_onap_3QKg=10.99.0.15, 10.12.6.131 | ubuntu-16-04-cloud-amd64 | m1.large |
| 648ea7d3-c92f-4cd8-870f-31cb80eb7057 | dcaecdap02 | ACTIVE | oam_onap_3QKg=10.99.0.20, 10.12.6.128 | ubuntu-16-04-cloud-amd64 | m1.large |
| c13fb83f-1011-44bb-bc6c-36627845a468 | dcaecdap06 | ACTIVE | oam_onap_3QKg=10.99.0.18, 10.12.6.134 | ubuntu-16-04-cloud-amd64 | m1.large |
| 5ed7b172-1203-45a3-91e1-c97447ef201e | dcaecdap03 | ACTIVE | oam_onap_3QKg=10.99.0.6, 10.12.6.123 | ubuntu-16-04-cloud-amd64 | m1.large |
| 80ada3ca-745e-42db-b67c-cdd83140e68e | dcaedoks00 | ACTIVE | oam_onap_3QKg=10.99.0.12, 10.12.6.173 | ubuntu-16-04-cloud-amd64 | m1.medium |
| 5e9ef7af-abb3-4311-ae96-a2d27713f4c5 | dcaedokp00 | ACTIVE | oam_onap_3QKg=10.99.0.17, 10.12.6.168 | ubuntu-16-04-cloud-amd64 | m1.medium |
| d84bbb08-f496-4762-8399-0aef2bb773c2 | dcaecnsl00 | ACTIVE | oam_onap_3QKg=10.99.0.7, 10.12.6.184 | ubuntu-16-04-cloud-amd64 | m1.medium |
| 53f41bfc-9512-4a0f-b431-4461cd42839e | dcaecnsl01 | ACTIVE | oam_onap_3QKg=10.99.0.11, 10.12.6.188 | ubuntu-16-04-cloud-amd64 | m1.medium |
| b6177cb2-5920-40b8-8f14-0c41b73b9f1b | dcaecnsl02 | ACTIVE | oam_onap_3QKg=10.99.0.4, 10.12.6.178 | ubuntu-16-04-cloud-amd64 | m1.medium |
| 5e6fd14b-e75b-41f2-ad61-b690834df458 | dcaeorcl00 | ACTIVE | oam_onap_3QKg=10.99.0.8, 10.12.6.185 | CentOS-7 | m1.medium |
| 5217dabb-abd7-4e57-972a-86efdd5252f5 | dcae-dcae-bootstrap | ACTIVE | oam_onap_3QKg=10.99.0.3, 10.12.6.183 | ubuntu-16-04-cloud-amd64 | m1.small |
| 87569b68-cd4c-4a1f-9c6c-96ea7ce3d9b9 | onap-oom-obrien | ACTIVE | oam_onap_w37L=10.0.16.1, 10.12.6.124 | ubuntu-16-04-cloud-amd64 | m1.xxlarge |
| d80f35ac-1257-47fc-828e-dddc3604d3c1 | oom-jenkins | ACTIVE | appc-multicloud-integration=10.10.5.14, 10.12.6.49 | | v1.xlarge |

root@heat-bootstrap:/opt/heat#
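Deleting unassociated floating IPs before a redeploy, as described in this section, might be scripted along these lines. This is only a sketch: the helper name `unassociated_floating_ips` is hypothetical, and it assumes the openstack client prints the literal `None` in the Fixed IP Address column for an unassociated address when run with `-f value`. Check the list output on your own tenant before deleting anything.

```bash
# Sketch: read "<floating-ip-id> <fixed-ip-or-None>" lines on stdin and
# print only the IDs that have no fixed IP attached.
# Assumption: unassociated entries show the literal string "None".
unassociated_floating_ips() {
    awk '$2 == "None" { print $1 }'
}

# Possible use inside the sourced tenant environment (not executed here):
#   openstack floating ip list -f value -c ID -c "Fixed IP Address" \
#     | unassociated_floating_ips \
#     | while read -r id; do openstack floating ip delete "$id"; done
```

Keeping the filter separate from the delete loop makes it possible to review the list of IDs first.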

...

(Manual instructions)

ONAP Minimum R1 Installation Helm Apps

oom/kubernetes/oneclick/setenv.bash may be updated to the following reduced app set.

...

HELM_APPS=('mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal' 'policy' 'appc' 'aai' 'sdc' 'log')

#HELM_APPS=('consul' 'msb' 'mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal' 'policy' 'appc' 'aai' 'sdc' 'dcaegen2' 'log' 'cli' 'multicloud' 'clamp' 'vnfsdk' 'uui' 'aaf' 'vfc' 'kube2msb' 'esr')

1) install rancher, clone oom, run config-init pod, run one or all onap components

*****************

Note: uninstall docker if it is already installed, as Kubernetes 1.8 under Rancher supports Docker 17.03.2 as of 20180124

...

% sudo apt-get remove docker-engine

*****************

Install Rancher

ONAP deployment in kubernetes is modelled in the oom project as a 1:1 set of service:pod sets (1 pod per docker container). The fastest way to get ONAP Kubernetes up is via Rancher on any bare metal or VM that supports a clean Ubuntu 16.04 install and more than 55G ram.


(on each host) Add an entry to /etc/hosts that maps your IP to your hostname (the hostname goes at the end of the line). Add entries for all other hosts in your cluster.

For example, on openlab you will need to add the name of your host before you install docker, to avoid the error below

sudo: unable to resolve host onap-oom

...

sudo vi /etc/hosts

<your-ip> <your-hostname>

Open Ports

On most hosts, such as OpenStack or EC2, you can open all the ports or they are open by default; on some environments, such as Rackspace VMs, you need to open them explicitly

...

sudo iptables -I INPUT 1 -p tcp --dport 8880 -j ACCEPT

sudo sh -c 'iptables-save > /etc/iptables.rules'

Fix virtual memory allocation (to allow onap-log:elasticsearch to come up under Rancher 1.6.11)

...

sudo sysctl -w vm.max_map_count=262144
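A value set with `sysctl -w` does not survive a reboot. One way to make it persistent is to add it to /etc/sysctl.conf, sketched below. The helper name `persist_sysctl` is hypothetical, and the file path is a parameter so the function can be tried on a scratch file first.

```bash
# Sketch: append "key=value" to a sysctl configuration file unless the key
# is already set there. persist_sysctl is a hypothetical helper name.
# An existing entry is left untouched, even if its value differs.
persist_sysctl() {
    key=$1
    value=$2
    conf=${3:-/etc/sysctl.conf}
    if grep -q "^${key}[[:space:]]*=" "$conf" 2>/dev/null; then
        return 0
    fi
    printf '%s=%s\n' "$key" "$value" >> "$conf"
}

# Possible use as root (not executed here):
#   persist_sysctl vm.max_map_count 262144
#   sysctl -p    # reload /etc/sysctl.conf
```

Because the function checks for an existing entry first, it can be called on every run of a setup script without duplicating the line.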

Clone oom (scp your onap_rsa private key first, or clone anonymously; ideally you get a full gerrit account and join the community)

See the ssh/http/https access links below

https://gerrit.onap.org/r/#/admin/projects/oom

...

git clone http://gerrit.onap.org/r/oom

(on each host (server and client(s) which may be the same machine)) Install only the 17.03.2 version of Docker (the only version that works with Kubernetes in Rancher 1.6.13+)

Install Docker

...

# for root just run the following line and skip to next section

curl https://releases.rancher.com/install-docker/17.03.sh | sh

# when running as non-root (ubuntu) run the following and logout/log back in

sudo usermod -aG docker ubuntu

Pre pull docker images the first time you install onap. Currently the pre-pull will take 16-180 min depending on your network. Pre pulling the images will allow the entire ONAP to start in 3-8 min instead of up to 3 hours.

Use the prepull script in oom/kubernetes/config once it is merged (see the related Jira issue).

https://git.onap.org/oom/tree/kubernetes/config/prepull_docker.sh

...

cp oom/kubernetes/config/prepull_docker.sh .

chmod 777 prepull_docker.sh

nohup ./prepull_docker.sh > prepull.log &

To monitor when the prepull is finished, see the section Prepull docker images. It is advised to wait until the prepull has finished before continuing.

(on the master only) Install Rancher (optional: use 8880 instead of 8080 if there is a conflict). Note that there may be issues with the DNS pod in Rancher after a reboot or when running clustered hosts; a clean system will be OK. See Jira OOM-236.

...

docker run -d --restart=unless-stopped -p 8880:8080 --name rancher-server rancher/server:v1.6.14

In the Rancher UI, do not use http://127.0.0.1:8880; use the real IP address so the client configs are populated correctly with callbacks

You must deactivate the default CATTLE environment: add a KUBERNETES environment, then deactivate the older default CATTLE one. Your added hosts will then attach to the new default

Default → Manage Environments

Select "Add Environment" button

Give the Environment a name and description, then select Kubernetes as the Environment Template

Hit the "Create" button. This will create the environment and bring you back to the Manage Environments view

At the far right column of the Default Environment row, left-click the menu ( looks like 3 stacked dots ), and select Deactivate. This will make your new Kubernetes environment the new default.

Register your host

Register your host(s): run the following on each host (including the master if you are collocating the master/host on a single machine/VM)

For each host, in Rancher > Infrastructure > Hosts, select "Add Host"

The first time you add a host, you will be presented with a screen containing the routable IP. Hit save only on a routable IP.

Enter IP of host: (only if you launched Rancher with 127.0.0.1/localhost; otherwise keep it empty and it will autopopulate the registration with the real IP)

Copy the command to register the host with Rancher,

Execute the command on each host, for example:

...

sudo docker run --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.9 http://rackspace.onap.info:8880/v1/scripts/CDE31E5CDE3217328B2D:1514678400000:xLr2ySIppAaEZYWtTVa5V9ZGc

wait for kubernetes menu to populate with the CLI

Install Kubectl

The following will install kubectl (for Kubernetes 1.9.2; see https://github.com/kubernetes/kubernetes/issues/57528) on a Linux host. Once configured, this client tool provides management of a Kubernetes cluster.

...

curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.9.2/bin/linux/amd64/kubectl

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

mkdir ~/.kube

vi ~/.kube/config

Paste kubectl config from Rancher (you will see the CLI menu in Rancher / Kubernetes after the k8s pods are up on your host)

Click on "Generate Config" to get your content to add into .kube/config

Verify that Kubernetes config is good

...

root@obrien-kube11-1:~# kubectl cluster-info

Kubernetes master is running at ....

Heapster is running at....

KubeDNS is running at ....

kubernetes-dashboard is running at ...

monitoring-grafana is running at ....

monitoring-influxdb is running at ...

tiller-deploy is running at....

Install Helm

The following will install Helm - currently 2.8.0 on a linux host. Helm is used by OOM for package and configuration management.

https://lists.onap.org/pipermail/onap-discuss/2018-January/007674.html

Prerequisite: Install Kubectl

...

wget http://storage.googleapis.com/kubernetes-helm/helm-v2.8.0-linux-amd64.tar.gz

tar -zxvf helm-v2.8.0-linux-amd64.tar.gz

sudo mv linux-amd64/helm /usr/local/bin/helm

# verify version

helm version

# Rancher 1.6.14 installs 2.6.2 - upgrade to 2.8.0 - you will need to upgrade helm on the server to the level of the client

helm init --upgrade

NOTE: If helm version takes a long time and eventually errors out, this is most likely because incoming access to port 10250 (exposed by kubelet) is blocked by a firewall. Make sure to configure the firewall accordingly.

Undercloud done - move to ONAP Installation

You can install OOM manually as described below, or run the cd.sh script linked below and attached at the top of this page (see the section Install/Refresh OOM)

https://github.com/obrienlabs/onap-root/blob/master/cd.sh

manually.....

Wait until all the hosts show green in rancher,

Then we are ready to configure and deploy onap environment in kubernetes. These scripts are found in the folders:

oom/kubernetes/oneclick

oom/kubernetes/config

First source oom/kubernetes/oneclick/setenv.bash. This will set your helm list of components to start/delete

...

cd ~/oom/kubernetes/oneclick/

source setenv.bash

Second, we need to configure ONAP before deployment. This is a one-time operation that spawns a temporary config pod. This mounts the volume /dockerdata/ contained in the pod config-init and also creates the directory “/dockerdata-nfs” on the kubernetes node. This mount is required for all other ONAP pods to function.

Note: the pod will stop after NFS creation - this is normal.

https://git.onap.org/oom/tree/kubernetes/config/onap-parameters-sample.yaml

...

cd ~/oom/kubernetes/config

# edit or copy the config for MSO data

vi onap-parameters.yaml

# or

cp onap-parameters-sample.yaml onap-parameters.yaml

# run the config pod creation

% ./createConfig.sh -n onap

**** Creating configuration for ONAP instance: onap

namespace "onap" created

pod "config-init" created

**** Done ****

Wait until the config-init pod is gone before trying to bring up a component or all of ONAP - around 60 sec (up to 10 min) - see https://wiki.onap.org/display/DW/ONAP+on+Kubernetes#ONAPonKubernetes-Waitingforconfig-initcontainertofinish-20sec

root@ip-172-31-93-122:~/oom_20170908/oom/kubernetes/config# kubectl get pods --all-namespaces -a

onap config 0/1 Completed 0 1m

Note: When using the -a option the config container will show up with the status, however when not used with the -a flag, it will not be present
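The wait for the config pod can be scripted by filtering the pod listing shown above. The sketch below assumes the column layout of that listing (namespace, name, ready, status); the function name `config_pod_done` is hypothetical, and the pod name `config` is taken from the sample output line.

```bash
# Sketch: read "kubectl get pods --all-namespaces -a" output on stdin and
# succeed when the given pod in the given namespace has status Completed.
# config_pod_done is a hypothetical helper name.
config_pod_done() {
    awk -v ns="$1" -v pod="$2" '
        $1 == ns && $2 == pod && $4 == "Completed" { found = 1 }
        END { if (found) exit 0; exit 1 }
    '
}

# Possible polling loop on a live cluster (not executed here):
#   until kubectl get pods --all-namespaces -a | config_pod_done onap config; do
#       sleep 10
#   done
```

The `-a` flag matters here: without it a completed pod is not listed, so the loop would never see the Completed status.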

Cluster Configuration (optional - do not use if your server/client are co-located)

3. Share the /dockerdata-nfs Folder between Kubernetes Nodes

...

Don't run all the pods unless you have at least 52G allocated - if you have a laptop/VM with 16G - then you can only run enough pods to fit in around 11G

...

% cd ../oneclick

% vi createAll.bash

% ./createAll.bash -n onap -a <robot|appc|aai>

(to bring up a single service at a time)

Use the default "onap" namespace if you want to run robot tests out of the box - as in "onap-robot"

Bring up core components

...

root@kos1001:~/oom1004/oom/kubernetes/oneclick# cat setenv.bash

#HELM_APPS=('consul' 'msb' 'mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal' 'policy' 'appc' 'aai' 'sdc' 'dcaegen2' 'log' 'cli' 'multicloud' 'clamp' 'vnfsdk' 'kube2msb' 'aaf' 'vfc')

HELM_APPS=('consul' 'msb' 'mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal' 'policy' 'appc' 'aai' 'sdc' 'log' 'kube2msb')

# pods with the ELK filebeat container for capturing logs

root@kos1001:~/oom1004/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -a | grep 2/2

onap-aai aai-resources-338473047-8k6vr 2/2 Running 0 1h

onap-aai aai-traversal-2033243133-6cr9v 2/2 Running 0 1h

onap-aai model-loader-service-3356570452-25fjp 2/2 Running 0 1h

onap-aai search-data-service-2366687049-jt0nb 2/2 Running 0 1h

onap-aai sparky-be-3141964573-f2mhr 2/2 Running 0 1h

onap-appc appc-1335254431-v1pcs 2/2 Running 0 1h

onap-mso mso-3911927766-bmww7 2/2 Running 0 1h

onap-policy drools-2302173499-t0zmt 2/2 Running 0 1h

onap-policy pap-1954142582-vsrld 2/2 Running 0 1h

onap-policy pdp-4137191120-qgqnj 2/2 Running 0 1h

onap-portal portalapps-4168271938-4kp32 2/2 Running 0 1h

onap-portal portaldb-2821262885-0t32z 2/2 Running 0 1h

onap-sdc sdc-be-2986438255-sdqj6 2/2 Running 0 1h

onap-sdc sdc-fe-1573125197-7j3gp 2/2 Running 0 1h

onap-sdnc sdnc-3858151307-w9h7j 2/2 Running 0 1h

onap-vid vid-server-1837290631-x4ttc 2/2 Running 0 1h

Only if you have >52G run the following (all namespaces)

...

% ./createAll.bash -n onap

ONAP is OK if everything is 1/1 or 2/2 in the following

...

% kubectl get pods --all-namespaces
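Scanning a long listing by eye for anything that is not 1/1 or 2/2 is error prone, so the check can be reduced to a small filter. This is a sketch that assumes the READY value is the third column, as in `kubectl get pods --all-namespaces` output; the function name `not_ready_pods` is hypothetical.

```bash
# Sketch: read "kubectl get pods --all-namespaces" output on stdin and print
# "namespace/pod" for every pod whose READY column is not n/n.
# not_ready_pods is a hypothetical helper name. Lines whose third column is
# not of the form digits/digits (such as the header) are ignored.
not_ready_pods() {
    awk '$3 ~ /^[0-9]+\/[0-9]+$/ {
        split($3, ready, "/")
        if (ready[1] != ready[2]) print $1 "/" $2
    }'
}

# Possible use on a live cluster (not executed here):
#   kubectl get pods --all-namespaces | not_ready_pods
```

An empty result means every listed pod is fully ready. If the listing is produced with `-a`, completed pods such as the config pod (0/1) will also be reported.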

Run the ONAP portal via the instructions in the section Running ONAP using the vnc-portal

Wait until the containers are all up

check AAI endpoints

root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# kubectl -n onap-aai exec -it aai-service-3321436576-2snd6 bash

root@aai-service-3321436576-2snd6:/# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-systemd-

root 7 1 0 15:50 ? 00:00:00 /usr/local/sbin/haproxy-master

root@ip-172-31-93-160:/dockerdata-nfs/onap/robot# curl https://127.0.0.1:30233/aai/v11/service-design-and-creation/models

curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none

Run Health Check

...

Run Initial healthcheck directly on the host

Initialize robot

cd ~/oom/kubernetes/robot

root@ip-172-31-83-168:~/oom/kubernetes/robot# ./demo-k8s.sh init_robot

# password for test:test

then health

root@ip-172-31-83-168:~/oom/kubernetes/robot# ./ete-k8s.sh health

...

When running the non-root ubuntu and jenkins users - the NFS share needs its permissions upgraded in order for a delete to occur on VM reset

...

ubuntu@ip-172-31-85-6:~$ sudo chmod 777 -R /dockerdata-nfs/

Ports

List of Containers

Total: 84 pods and 16 filebeat containers

Docker container list - may not be fully up to date: https://git.onap.org/integration/tree/packaging/docker/docker-images.csv

OOM Pod Init Dependencies

...

The diagram above describes the init dependencies for the ONAP pods when first deploying OOM through Kubernetes.

Configure Openstack settings in onap-parameters.yaml

https://git.onap.org/oom/tree/kubernetes/config/onap-parameters-sample.yaml

Before running pod-config-init.yaml - make sure your config for openstack is setup correctly - so you can deploy the vFirewall VMs for example and run the demo robot scripts.

vi oom/kubernetes/config/config/onap-parameters.yaml

Rackspace example

defaults OK

OPENSTACK_UBUNTU_14_IMAGE: "Ubuntu_14.04.5_LTS"

OPENSTACK_PUBLIC_NET_ID: "e8f51956-00dd-4425-af36-045716781ffc"

OPENSTACK_OAM_NETWORK_ID: "d4769dfb-c9e4-4f72-b3d6-1d18f4ac4ee6"

OPENSTACK_OAM_SUBNET_ID: "191f7580-acf6-4c2b-8ec0-ba7d99b3bc4e"

OPENSTACK_OAM_NETWORK_CIDR: "192.168.30.0/24"

OPENSTACK_FLAVOUR_MEDIUM: "m1.medium"

OPENSTACK_SERVICE_TENANT_NAME: "service"

DMAAP_TOPIC: "AUTO"

DEMO_ARTIFACTS_VERSION: "1.1.0-SNAPSHOT"

Modify for your OS/RS account

OPENSTACK_USERNAME: "yourlogin"

use password below

...

Kubernetes DevOps

...

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Deleting All Containers

Delete all the containers (and services)

...

./deleteAll.bash -n onap -y

# in amsterdam only

./deleteAll.bash -n onap

Delete/Rerun config-init container for /dockerdata-nfs refresh

refer to the procedure as part of https://github.com/obrienlabs/onap-root/blob/master/cd.sh

Delete the config-init container and its generated /dockerdata-nfs share

There may be cases where new configuration content needs to be deployed after a pull of a new version of ONAP.

for example after pull brings in files like the following (20170902)

root@ip-172-31-93-160:~/oom/kubernetes/oneclick# git pull

Resolving deltas: 100% (135/135), completed with 24 local objects.

From http://gerrit.onap.org/r/oom

bf928c5..da59ee4 master -> origin/master

Updating bf928c5..da59ee4

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/metadata.rb | 7 +

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/aai-resources/aai-resources-auth/recipes/aai-resources-aai-keystore.rb | 8 +

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/CHANGELOG.md | 2 +-

kubernetes/config/docker/init/src/config/aai/aai-config/cookbooks/{ajsc-aai-config => aai-resources/aai-resources-config}/README.md | 4 +-

See the related Jira issue (worked with Zoran)

...

# check for the pod

kubectl get pods --all-namespaces -a

# delete all the pod/services

# master

./deleteAll.bash -n onap -y

# amsterdam

./deleteAll.bash -n onap

# delete the fs

rm -rf /dockerdata-nfs/onap

# at this moment, the environment is empty

# pull the repo

git pull

# rerun the config

cd ../config

./createConfig.sh -n onap

# if you get an error saying release onap-config already exists, then run:

helm del --purge onap-config

# example 20170907

root@kube0:~/oom/kubernetes/oneclick# rm -rf /dockerdata-nfs/

root@kube0:~/oom/kubernetes/oneclick# cd ../config/

root@kube0:~/oom/kubernetes/config# ./createConfig.sh -n onap

**** Creating configuration for ONAP instance: onap

Error from server (AlreadyExists): namespaces "onap" already exists

Error: a release named "onap-config" already exists.

Please run: helm ls --all "onap-config"; helm del --help

**** Done ****

root@kube0:~/oom/kubernetes/config# helm del --purge onap-config

release "onap-config" deleted

# rerun createAll.bash -n onap

Container Endpoint access

Check the services view in the Kubernetes API under robot

robot.onap-robot:88 TCP

robot.onap-robot:30209 TCP

kubectl get services --all-namespaces -o wide

onap-vid vid-mariadb None <none> 3306/TCP 1h app=vid-mariadb

onap-vid vid-server 10.43.14.244 <nodes> 8080:30200/TCP 1h app=vid-server

...

kubectl --namespace onap-vid logs -f vid-server-248645937-8tt6p

16-Jul-2017 02:46:48.707 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 22520 ms

kubectl --namespace onap-portal logs portalapps-2799319019-22mzl -f

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

onap-robot robot-44708506-dgv8j 1/1 Running 0 36m 10.42.240.80 obriensystemskub0

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-robot logs -f robot-44708506-dgv8j

2017-07-16 01:55:54: (log.c.164) server started

A pod may be set up to log to a volume which can be inspected outside of a container. If you cannot connect to the container, you could inspect the backing volume instead. This is how you find the backing directory for a pod that uses a volume of the emptyDir type; the log files can be found on the Kubernetes node hosting the pod. More details can be found here https://kubernetes.io/docs/concepts/storage/volumes/#emptydir

Here is an example of finding SDNC logs on a VM hosting a Kubernetes node.

...

#find the sdnc pod name and which kubernetes node it is running on.

kubectl -n onap-sdnc get all -o wide

#describe the pod to see the empty dir volume names and the pod uid

kubectl -n onap-sdnc describe po/sdnc-5b5b7bf89c-97qkx

#ssh to the VM hosting the kubernetes node if you are not already on the vm

ssh root@vm-host

#search the /var/lib/kubelet/pods/ directory for the log file

sudo find /var/lib/kubelet/pods/ | grep sdnc-logs

#The result is a path that has the format /var/lib/kubelet/pods/<pod-uid>/volumes/kubernetes.io~empty-dir/<volume-name>

/var/lib/kubelet/pods/d6041229-d614-11e7-9516-fa163e6ff8e8/volumes/kubernetes.io~empty-dir/sdnc-logs

/var/lib/kubelet/pods/d6041229-d614-11e7-9516-fa163e6ff8e8/volumes/kubernetes.io~empty-dir/sdnc-logs/sdnc

/var/lib/kubelet/pods/d6041229-d614-11e7-9516-fa163e6ff8e8/volumes/kubernetes.io~empty-dir/sdnc-logs/sdnc/karaf.log

/var/lib/kubelet/pods/d6041229-d614-11e7-9516-fa163e6ff8e8/plugins/kubernetes.io~empty-dir/sdnc-logs

/var/lib/kubelet/pods/d6041229-d614-11e7-9516-fa163e6ff8e8/plugins/kubernetes.io~empty-dir/sdnc-logs/ready
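When the pod uid and volume name are already known, the backing directory can be built directly from the path format given above instead of searching with find. A minimal sketch follows; the function name `emptydir_path` is hypothetical.

```bash
# Sketch: build the node-side directory of an emptyDir volume from a pod uid
# and a volume name, using the path format quoted in this section.
# emptydir_path is a hypothetical helper name.
emptydir_path() {
    printf '/var/lib/kubelet/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' "$1" "$2"
}

# Possible use on the node hosting the pod (not executed here):
#   uid=$(kubectl -n onap-sdnc get pod sdnc-5b5b7bf89c-97qkx -o jsonpath='{.metadata.uid}')
#   sudo ls "$(emptydir_path "$uid" sdnc-logs)"
```

The uid must belong to the pod instance that is currently scheduled; a recreated pod gets a new uid and therefore a new directory.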

Robot Logs

Yogini and I needed the robot logs in OOM Kubernetes. They were already there, behind robot:robot auth

http://<your_dns_name>:30209/logs/demo/InitDistribution/report.html

for example after a

oom/kubernetes/robot$./demo-k8s.sh distribute

find your path to the logs by using for example

root@ip-172-31-57-55:/dockerdata-nfs/onap/robot# kubectl --namespace onap-robot exec -it robot-4251390084-lmdbb bash

root@robot-4251390084-lmdbb:/# ls /var/opt/OpenECOMP_ETE/html/logs/demo/InitD

InitDemo/ InitDistribution/

path is

http://<your_dns_name>:30209/logs/demo/InitDemo/log.html#s1-s1-s1-s1-t1

...

Normally I would get a shell in the running container via https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

Get the pod name via

kubectl get pods --all-namespaces -o wide

bash into the pod via

kubectl -n onap-mso exec -it mso-1648770403-8hwcf /bin/bash

...

Trying to get an authorization file into the robot pod

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/home/ubuntu

Does the above work?

root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/etc/lighttpd/authorization

tar: authorization: Cannot open: File exists

tar: Exiting with failure status due to previous errors

Redeploying Code war/jar in a docker container

see building the docker image - use your own local repo or a repo on dockerhub - modify the values.yaml and delete/create your pod to switch images

example in

Turn on Debugging

via URL

http://cd.onap.info:30223/mso/logging/debug

via logback.xml

Attaching a debugger to a docker container

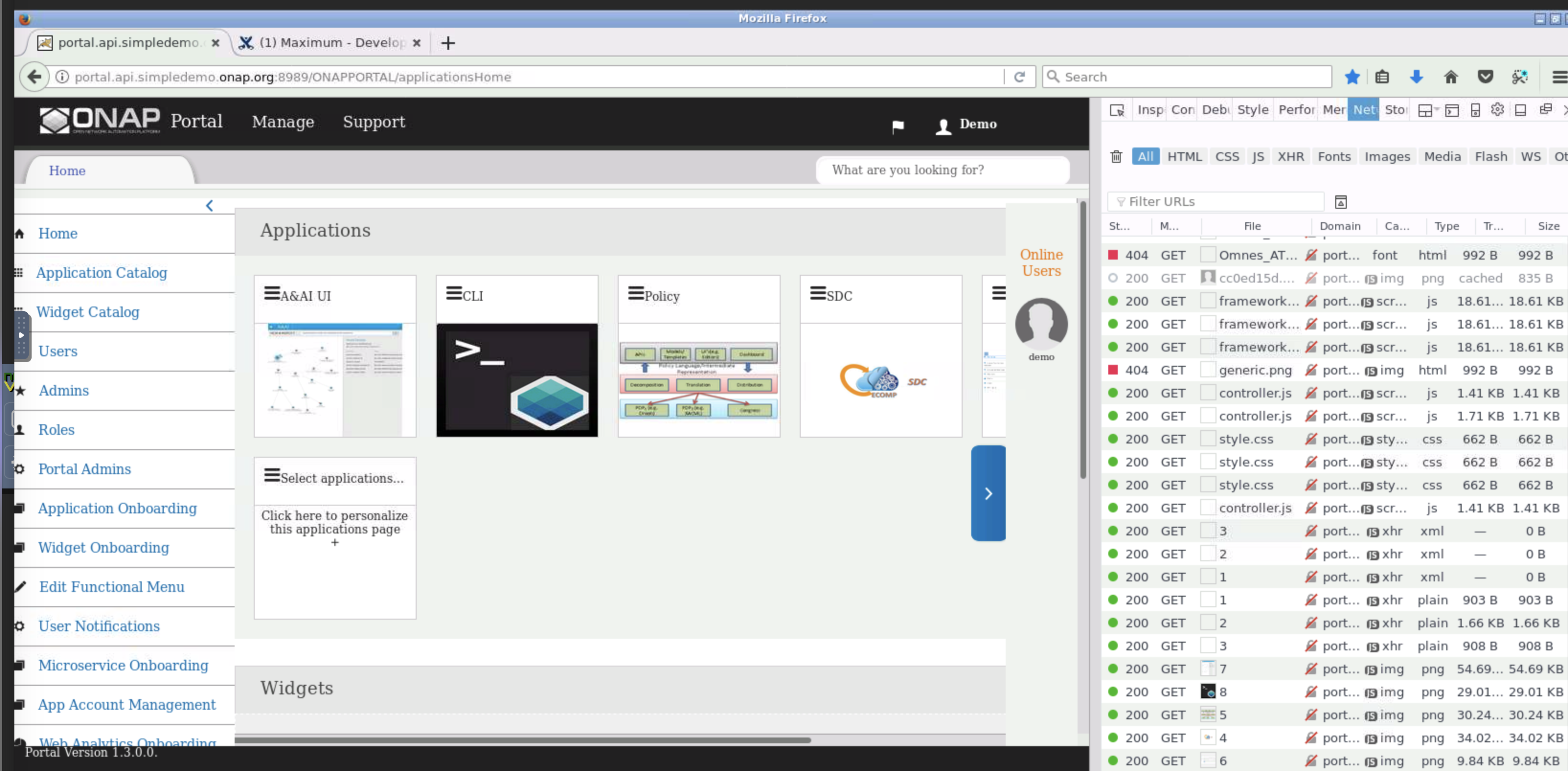

Running ONAP Portal UI Operations

Running ONAP using the vnc-portal

see (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

or run the vnc-portal container to access ONAP using the traditional port mappings. See the following recorded video by Mike Elliot of the OOM team for an audio-visual reference

Check for the vnc-portal port via (it is always 30211)

...

obrienbiometrics:onap michaelobrien$ ssh ubuntu@dev.onap.info
ubuntu@ip-172-31-93-122:~$ sudo su -
root@ip-172-31-93-122:~# kubectl get services --all-namespaces -o wide
NAMESPACE NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
onap-portal vnc-portal 10.43.78.204 <nodes> 6080:30211/TCP,5900:30212/TCP 4d app=vnc-portal
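The PORT(S) column reads servicePort:nodePort/protocol, so the browser port for the vnc-portal is the number after 6080. A small sketch for pulling it out of that column; nodeport_for is a made-up helper name, and the input is the string exactly as printed by kubectl above.

```shell
# Hypothetical helper: given the PORT(S) column and a service port,
# print the node port it is mapped to.
nodeport_for() {
  local ports="$1" target="$2"
  printf '%s\n' "$ports" | tr ',' '\n' |
    awk -F'[:/]' -v p="$target" '$1 == p { print $2 }'
}

# Usage:
#   nodeport_for "6080:30211/TCP,5900:30212/TCP" 6080    # prints 30211
```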

launch the vnc-portal in a browser

password is "password"

Open firefox inside the VNC vm - launch portal normally

http://portal.api.simpledemo.onap.org:8989/ONAPPORTAL/login.htm

(20170906) Before running SDC - fix the /etc/hosts (thanks Yogini for catching this) - edit your /etc/hosts as follows

(change sdc.ui to sdc.api)

before

after

notes

...

Continue with the normal ONAP demo flow at (Optional) Tutorial: Onboarding and Distributing a Vendor Software Product (VSP)

Running Multiple ONAP namespaces

Run multiple environments on the same machine - TODO

...

is located in Read the Docs:

- OOM User Guide — onap master documentation

- OOM Quick Start Guide — onap master documentation

- OOM Cloud Setup Guide — onap master documentation

...

For example we can see when the AAI model-loader container was created

http://127.0.0.1:8880/v1/containerevents

{

"id": "1ce88",

"type": "containerEvent",

"links": {

"self": "…/v1/containerevents/1ce88",

"account": "…/v1/containerevents/1ce88/account",

"host": "…/v1/containerevents/1ce88/host"

},

"actions": {

"remove": "…/v1/containerevents/1ce88/?action=remove"

},

"baseType": "containerEvent",

"state": "created",

"accountId": "1a7",

"created": "2017-09-17T20:07:37Z",

"createdTS": 1505678857000,

"data": {

"fields": {

"dockerInspect": {

"Id": "59ec11e257fb9061b250fe7ce6a7c86ffd10a82a2f26776c0adc9ac0eb3c6e54",

"Created": "2017-09-17T20:07:37.750772403Z",

"Path": "/pause",

"Args": [ ],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 25115,

"ExitCode": 0,

"Error": "",

"StartedAt": "2017-09-17T20:07:37.92889179Z",

"FinishedAt": "0001-01-01T00:00:00Z"

},

"Image": "sha256:99e59f495ffaa222bfeb67580213e8c28c1e885f1d245ab2bbe3b1b1ec3bd0b2",

"ResolvConfPath": "/var/lib/docker/containers/59ec11e257fb9061b250fe7ce6a7c86ffd10a82a2f26776c0adc9ac0eb3c6e54/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/59ec11e257fb9061b250fe7ce6a7c86ffd10a82a2f26776c0adc9ac0eb3c6e54/hostname",

"HostsPath": "/var/lib/docker/containers/59ec11e257fb9061b250fe7ce6a7c86ffd10a82a2f26776c0adc9ac0eb3c6e54/hosts",

"LogPath": "/var/lib/docker/containers/59ec11e257fb9061b250fe7ce6a7c86ffd10a82a2f26776c0adc9ac0eb3c6e54/59ec11e257fb9061b250fe7ce6a7c86ffd10a82a2f26776c0adc9ac0eb3c6e54-json.log",

"Name": "/k8s_POD_model-loader-service-849987455-532vd_onap-aai_d9034afb-9be3-11e7-ac87-024d93e255bc_0",

"RestartCount": 0,

"Driver": "aufs",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": null,

"HostConfig": {

"Binds": null,

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": { }

},

"NetworkMode": "none",

"PortBindings": { },

"RestartPolicy": {

"Name": "",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"Dns": null,

"DnsOptions": null,

"DnsSearch": null,

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "",

"Cgroup": "",

"Links": null,

"OomScoreAdj": -998,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": [

"seccomp=unconfined"

],

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 2,

"Memory": 0,

"CgroupParent": "/kubepods/besteffort/podd9034afb-9be3-11e7-ac87-024d93e255bc",

"BlkioWeight": 0,

"BlkioWeightDevice": null,

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": null,

"DiskQuota": 0,

"KernelMemory": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": -1,

"OomKillDisable": false,

"PidsLimit": 0,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0

},

"GraphDriver": {

"Name": "aufs",

"Data": null

},

"Mounts": [ ],

"Config": {

"Hostname": "model-loader-service-849987455-532vd",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": null,

"Cmd": null,

"Image": "gcr.io/google_containers/pause-amd64:3.0",

"Volumes": null,

"WorkingDir": "",

"Entrypoint": [

"/pause"

],

"OnBuild": null,

"Labels": {

"annotation.kubernetes.io/config.seen": "2017-09-17T20:07:35.940708461Z",

"annotation.kubernetes.io/config.source": "api",

"annotation.kubernetes.io/created-by": "{\"kind\":\"SerializedReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"ReplicaSet\",\"namespace\":\"onap-aai\",\"name\":\"model-loader-service-849987455\",\"uid\":\"d9000736-9be3-11e7-ac87-024d93e255bc\",\"apiVersion\":\"extensions\",\"resourceVersion\":\"1306\"}}\n",

"app": "model-loader-service",

"io.kubernetes.container.name": "POD",

"io.kubernetes.docker.type": "podsandbox",

"io.kubernetes.pod.name": "model-loader-service-849987455-532vd",

"io.kubernetes.pod.namespace": "onap-aai",

"io.kubernetes.pod.uid": "d9034afb-9be3-11e7-ac87-024d93e255bc",

"pod-template-hash": "849987455"

}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "6ebd4ae330d1fded82301d121e604a1e7193f20c538a9ff1179e98b9e36ffa5f",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": { },

"SandboxKey": "/var/run/docker/netns/6ebd4ae330d1",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "",

"Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"MacAddress": "",

"Networks": {

"none": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "9812f79a4ddb086db1b60cd10292d729842b2b42e674b400ac09101541e2b845",

"EndpointID": "d4cb711ea75ed4d27b9d4b3a71d1b3dd5dfa9f4ebe277ab4280d98011a35b463",

"Gateway": "",

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": ""

}

}

}

}

}

},

Troubleshooting

Rancher fails to restart on server reboot

Having issues after a reboot of a colocated server/agent

Installing Clean Ubuntu

apt-get install ssh

apt-get install ubuntu-desktop

DNS resolution

ignore - not relevant

Search Line limits were exceeded, some dns names have been omitted, the applied search line is: default.svc.cluster.local svc.cluster.local cluster.local kubelet.kubernetes.rancher.internal kubernetes.rancher.internal rancher.internal

https://github.com/rancher/rancher/issues/9303

Config Pod fails to start with Error

If the config pod fails on startup with the following error, make sure your OpenStack parameters are set in onap-parameters.yaml

...

root@obriensystemsu0:~# kubectl get pods --all-namespaces -a
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system heapster-4285517626-l9wjp 1/1 Running 4 22d
kube-system kube-dns-2514474280-4411x 3/3 Running 9 22d
kube-system kubernetes-dashboard-716739405-fq507 1/1 Running 4 22d
kube-system monitoring-grafana-3552275057-w3xml 1/1 Running 4 22d
kube-system monitoring-influxdb-4110454889-bwqgm 1/1 Running 4 22d
kube-system tiller-deploy-737598192-841l1 1/1 Running 4 22d
onap config 0/1 Error 0 1d
root@obriensystemsu0:~# vi /etc/hosts
root@obriensystemsu0:~# kubectl logs -n onap config
Validating onap-parameters.yaml has been populated
Error: OPENSTACK_UBUNTU_14_IMAGE must be set in onap-parameters.yaml
+ echo 'Validating onap-parameters.yaml has been populated'
+ [[ -z '' ]]
+ echo 'Error: OPENSTACK_UBUNTU_14_IMAGE must be set in onap-parameters.yaml'
+ exit 1

fix

root@obriensystemsu0:~/onap_1007/oom/kubernetes/config# helm delete --purge onap-config
release "onap-config" deleted
root@obriensystemsu0:~/onap_1007/oom/kubernetes/config# ./createConfig.sh -n onap
**** Creating configuration for ONAP instance: onap
Error from server (AlreadyExists): namespaces "onap" already exists
NAME: onap-config
LAST DEPLOYED: Mon Oct 9 21:35:27 2017
NAMESPACE: onap
STATUS: DEPLOYED
RESOURCES:
==> v1/ConfigMap
NAME DATA AGE
global-onap-configmap 15 0s
==> v1/Pod
NAME READY STATUS RESTARTS AGE
config 0/1 ContainerCreating 0 0s
**** Done ****

root@obriensystemsu0:~/onap_1007/oom/kubernetes/config# kubectl get pods --all-namespaces -a
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system heapster-4285517626-l9wjp 1/1 Running 4 22d
kube-system kube-dns-2514474280-4411x 3/3 Running 9 22d
kube-system kubernetes-dashboard-716739405-fq507 1/1 Running 4 22d
kube-system monitoring-grafana-3552275057-w3xml 1/1 Running 4 22d
kube-system monitoring-influxdb-4110454889-bwqgm 1/1 Running 4 22d
kube-system tiller-deploy-737598192-841l1 1/1 Running 4 22d
onap config 1/1 Running 0 25s

root@obriensystemsu0:~/onap_1007/oom/kubernetes/config# kubectl get pods --all-namespaces -a
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system heapster-4285517626-l9wjp 1/1 Running 4 22d
kube-system kube-dns-2514474280-4411x 3/3 Running 9 22d
kube-system kubernetes-dashboard-716739405-fq507 1/1 Running 4 22d
kube-system monitoring-grafana-3552275057-w3xml 1/1 Running 4 22d
kube-system monitoring-influxdb-4110454889-bwqgm 1/1 Running 4 22d
kube-system tiller-deploy-737598192-841l1 1/1 Running 4 22d
onap config 0/1 Completed 0 1m
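The log shows the config pod exiting because OPENSTACK_UBUNTU_14_IMAGE was empty. You can catch that before running createConfig.sh with a quick check of the file. This is a sketch under an assumption: it expects one "KEY: value" entry per line in onap-parameters.yaml, which may not match your copy exactly, and param_is_set is a made-up helper name.

```shell
# Hypothetical helper: succeed only if KEY has a non-empty value in the given file.
# Assumes simple "KEY: value" lines; quotes and whitespace are ignored.
param_is_set() {
  local file="$1" key="$2" value
  value=$(sed -n "s/^${key}:[[:space:]]*//p" "$file" | head -n 1 | tr -d "\"'[:space:]")
  [ -n "$value" ]
}

# Usage:
#   param_is_set onap-parameters.yaml OPENSTACK_UBUNTU_14_IMAGE \
#     || echo "OPENSTACK_UBUNTU_14_IMAGE must be set in onap-parameters.yaml"
```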

Prepull docker images

The script curl is incorporated in the cd.sh script below until

Watch the parallel set of pulls complete by re-running the following command - the count should drop to 0 after 15-40 min

ps -ef | grep docker | grep pull | wc -l
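Instead of re-running the count by hand, it can be wrapped in a loop that returns once nothing is left. A minimal sketch: count_pulls and wait_for_pulls are made-up names and the 30 second interval is arbitrary; the filter is the same grep chain as above, so the prepull script's own process line is counted as well.

```shell
# Hypothetical helper: count lines of "ps -ef" output (on stdin) that match the grep chain above.
count_pulls() {
  grep docker | grep -c pull || true
}

# Hypothetical helper: poll until no pull processes remain.
wait_for_pulls() {
  local n
  while n=$(ps -ef | count_pulls); [ "$n" -gt 0 ]; do
    echo "$n pull processes still running"
    sleep 30
  done
  echo "prepull finished"
}

# Usage, in a second terminal while prepull_docker.sh is running:
#   wait_for_pulls
```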

...

20171231 master (beijing)
ubuntu@ip-172-31-73-50:~$ ps -ef | grep docker | grep pull
root 18285 14573 8 02:13 pts/0 00:00:00 /bin/bash ./prepull_docker.sh
root 18335 18285 3 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 18350 18285 3 02:13 pts/0 00:00:00 docker pull mysql:5.7
root 18365 18285 0 02:13 pts/0 00:00:00 docker pull gcr.io/google-samples/xtrabackup:1.0
root 18380 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/ccsdk-dgbuilder-image:v0.1.0
root 18395 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/sdnc-image:v1.2.1
root 18412 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/admportal-sdnc-image:v1.2.1
root 18475 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/msb/msb_discovery:1.0.0
root 18535 18285 3 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 18560 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/refrepo/postgres:latest
root 18587 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/refrepo:1.0-STAGING-latest
root 18657 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/aai/esr-server:v1.0.0
root 18701 18285 3 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 18716 18285 0 02:13 pts/0 00:00:00 docker pull docker.elastic.co/logstash/logstash:5.4.3
root 18731 18285 0 02:13 pts/0 00:00:00 docker pull docker.elastic.co/kibana/kibana:5.5.0
root 18746 18285 0 02:13 pts/0 00:00:00 docker pull docker.elastic.co/elasticsearch/elasticsearch:5.5.0
root 18786 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/sniroemulator
root 18824 18285 3 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 18849 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/policy/policy-pe:v1.1.1
root 18875 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/policy/policy-drools:v1.1.1
root 18901 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/policy/policy-db:v1.1.1
root 18927 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/policy/policy-nexus:v1.1.1
root 18953 18285 0 02:13 pts/0 00:00:00 docker pull ubuntu:16.04
root 18990 18285 3 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 19005 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/aaf/authz-service:latest
root 19031 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/library/cassandra:2.1.17
root 19092 18285 3 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 19107 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/sdc-kibana:v1.1.0
root 19124 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/sdc-frontend:v1.1.0
root 19139 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/sdc-elasticsearch:v1.1.0
root 19155 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/sdc-cassandra:v1.1.0
root 19170 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/sdc-backend:v1.1.0
root 19209 18285 3 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 19224 18285 0 02:13 pts/0 00:00:00 docker pull aaionap/haproxy:1.1.0
root 19249 18285 1 02:13 pts/0 00:00:00 docker pull aaionap/hbase:1.2.0
root 19274 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/model-loader:v1.1.0
root 19300 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/aai-resources:v1.1.0
root 19327 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/aai-traversal:v1.1.0
root 19353 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/data-router:v1.1.0
root 19378 18285 0 02:13 pts/0 00:00:00 docker pull elasticsearch:2.4.1
root 19404 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/search-data-service:v1.1.0
root 19432 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/sparky-be:v1.1.0
root 19459 18285 0 02:13 pts/0 00:00:00 docker pull aaionap/gremlin-server
root 19496 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 19511 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/multicloud/framework:v1.0.0
root 19526 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/multicloud/vio:v1.0.0
root 19543 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/multicloud/openstack-ocata:v1.0.0
root 19586 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 19601 18285 1 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/library/mariadb:10
root 19619 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/vid:v1.1.1
root 19657 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 19673 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/portal-apps:v1.3.0
root 19687 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/portal-db:v1.3.0
root 19711 18285 2 02:13 pts/0 00:00:00 docker pull oomk8s/mariadb-client-init:1.0.0
root 19728 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/portal-wms:v1.3.0
root 19743 18285 2 02:13 pts/0 00:00:00 docker pull oomk8s/ubuntu-init:1.0.0
root 19762 18285 0 02:13 pts/0 00:00:00 docker pull dorowu/ubuntu-desktop-lxde-vnc
root 19830 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 19845 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/mso:v1.1.1
root 19860 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/mariadb:10.1.11
root 19906 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/nslcm:v1.0.2
root 19927 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/resmanagement:v1.0.0
root 19951 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/gvnfmdriver:v1.0.1
root 19972 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/ztevmanagerdriver:v1.0.2
root 19994 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/nfvo/svnfm/huawei:v1.0.2
root 20026 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/ztesdncdriver:v1.0.0
root 20048 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/nfvo/svnfm/nokia:v1.0.2
root 20075 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/jujudriver:v1.0.0
root 20099 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/vnflcm:v1.0.1
root 20121 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/vnfres:v1.0.1
root 20141 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/vnfmgr:v1.0.1
root 20165 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/emsdriver:v1.0.1
root 20187 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/wfengine-mgrservice:v1.0.0
root 20210 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/vfc/wfengine-activiti:v1.0.0
root 20265 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 20281 18285 0 02:13 pts/0 00:00:00 docker pull attos/dmaap:latest
root 20295 18285 0 02:13 pts/0 00:00:00 docker pull wurstmeister/kafka:latest
root 20338 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/usecase-ui:v1.0.1
root 20478 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 20494 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/clamp:v1.1.0
root 20522 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/mariadb:10.1.11
root 20560 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 20575 18285 1 02:13 pts/0 00:00:00 docker pull mysql:5.7
root 20590 18285 0 02:13 pts/0 00:00:00 docker pull gcr.io/google-samples/xtrabackup:1.0
root 20605 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/ccsdk-dgbuilder-image:v0.1.0
root 20621 18285 1 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/sdnc-image:v1.2.1
root 20638 18285 1 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/admportal-sdnc-image:v1.2.1
root 20688 18285 5 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 20703 18285 0 02:13 pts/0 00:00:00 docker pull oomk8s/pgaas:1
root 20717 18285 0 02:13 pts/0 00:00:00 docker pull oomk8s/cdap-fs:1.0.0
root 20734 18285 0 02:13 pts/0 00:00:00 docker pull oomk8s/cdap:1.0.7
root 20749 18285 0 02:13 pts/0 00:00:00 docker pull attos/dmaap:latest
root 20764 18285 0 02:13 pts/0 00:00:00 docker pull wurstmeister/kafka:latest
root 20781 18285 0 02:13 pts/0 00:00:00 docker pull wurstmeister/zookeeper:latest
root 20797 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/dcae-dmaapbc:1.1-STAGING-latest
root 20812 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/dcae-collector-common-event:1.1-STAGING-latest
root 20902 18285 4 02:13 pts/0 00:00:00 docker pull oomk8s/readiness-check:1.0.0
root 20917 18285 0 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/openecomp/appc-image:v1.2.0
root 20932 18285 0 02:13 pts/0 00:00:00 docker pull mysql/mysql-server:5.6
root 20949 18285 1 02:13 pts/0 00:00:00 docker pull nexus3.onap.org:10001/onap/ccsdk-dgbuilder-image:v0.1.0

...

https://lists.onap.org/pipermail/onap-discuss/2017-July/002084.html

Links

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Please help out our OPNFV friends

https://wiki.opnfv.org/pages/viewpage.action?pageId=12389095

Of interest

https://github.com/cncf/cross-cloud/

https://en.wikipedia.org/wiki/Fiddler_(software) - thanks Rahul

http://www.opencontrail.org/opencontrail-quick-start-guide/

https://github.com/prometheus/prometheus

http://zipkin.io/pages/quickstart

http://cloudify.co/2017/09/27/model-driven-onap-operations-manager-oom-boarding-tosca-cloudify/

Reference Reviews

https://gerrit.onap.org/r/#/c/6179/

https://gerrit.onap.org/r/#/c/9849/

...