
Creating a Service Instance

In this tutorial we show how to take a service design that has been distributed and create a running instance of the service.

...

TODO:

20171120: Brian Freeman has commented on R1 changes - need to verify these in a live system before posting here

VNF preload is now part of VID in a checkbox - so we don't require the sdnc rest call as part of demo.sh preload

Robot in OOM is run in oom/kubernetes/robot now


| SDNC preload fragment |

|---|

"service-type": "11819dd6-6332-42bc-952c-1a19f8246663", above is the vf-module (3 of 3 in the diagram below); above is the vnf (2 of 3) |


To simplify this, we are going to use scripts (along with some Selenium Robot scripts) to create the design, preload customer and network information, and orchestrate parts of the virtual firewall closed-loop example. The following steps assume that you have completed the tutorials on setting up the platform and using the portal, and that you understand their basic concepts.

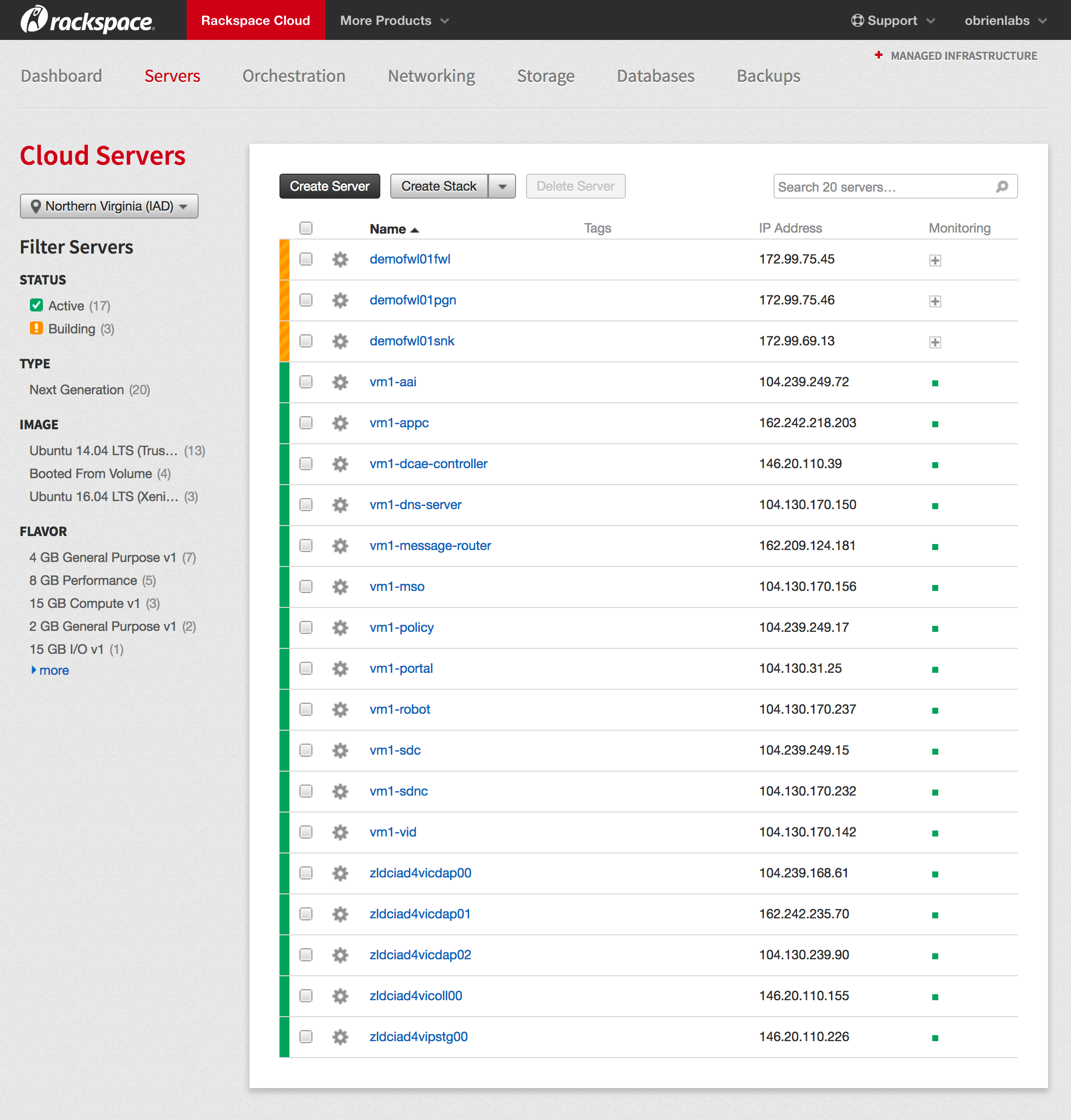

Let's start by finding the IP address of vm1-robot in the Rackspace list of servers. Use this vm1-robot IP address, your Rackspace private key, and the PuTTY client to log in to vm1-robot as root.

Note: The current default LCP Region is IAD. To use DFW, switch the example zip in the last section. Currently, though, some values are hardcoded and must be fixed first (tracked in Jira).

osx$ ssh-add onap_rsa

osx$ ssh root@104.130.170.232

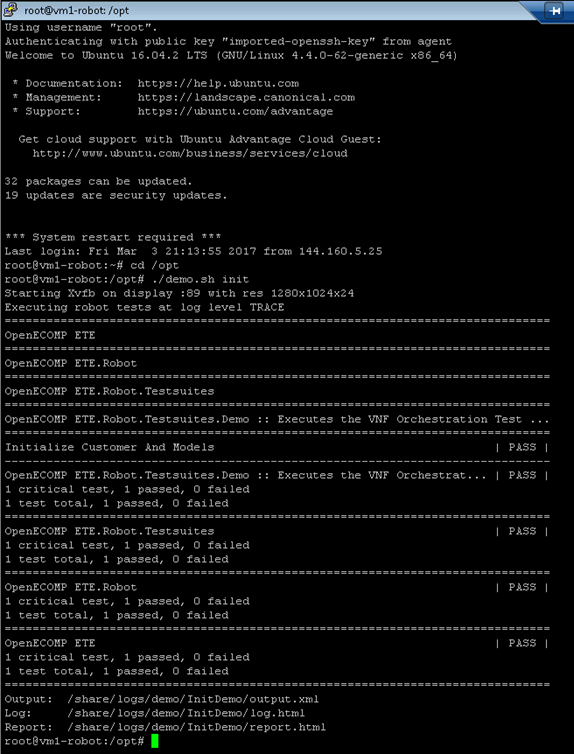

Run Robot demo.sh init

At the command prompt, type:

root@vm1-robot:~# cd /opt

root@vm1-robot:/opt# ./demo.sh init

Wait for all steps to complete (this takes 60-120 seconds), as shown below.

If you want to see the details of what ran, open report.html in a browser. The file is located inside the openecompete_container Docker container.


root@vm1-robot:/opt# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f99954f00ab2 nexus3.onap.org:10001/openecomp/testsuite:1.0-STAGING-latest "lighttpd -D -f /e..." 19 hours ago Up 19 hours 0.0.0.0:88->88/tcp openecompete_container

root@vm1-robot:/opt# docker exec -it openecompete_container bash

root@f99954f00ab2:/# cat /share/logs/demo/InitDemo/

log.html output.xml report.html

...

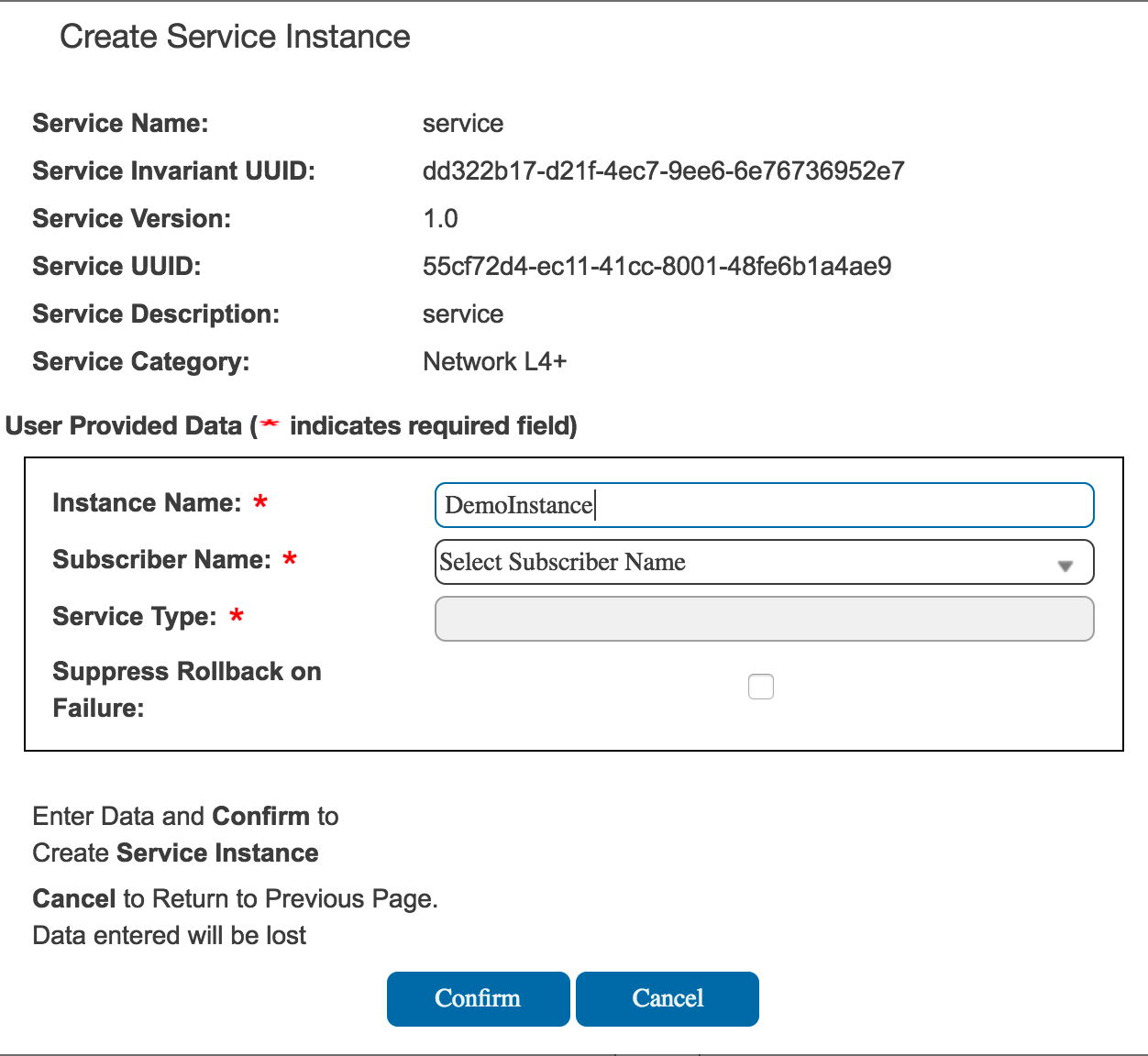

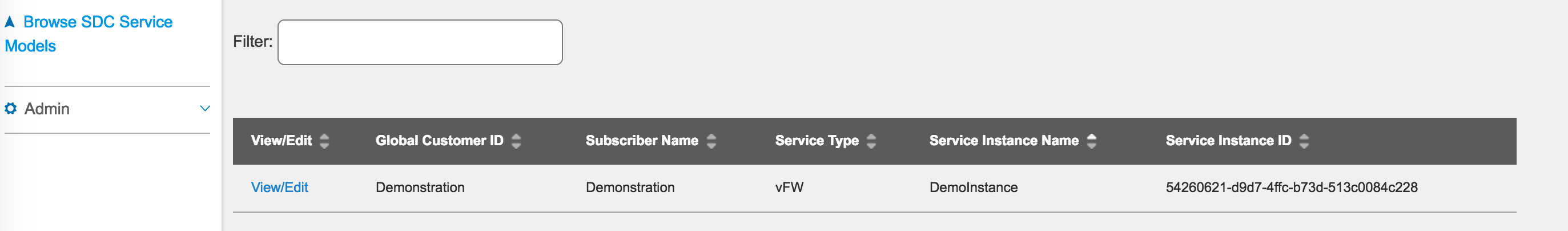

From the ONAP portal, log in to the VID application as the demo user, browse to locate the demo SDC Service Models, and deploy an instance of the service you created, not the pre-populated demoVFW.

(Note: deploy your "service" above, not demoVFW or demoVLB. These two are leftover pre-population artifacts of the init script and will be removed.)

Use the generated demoVFW above (you don't need to onboard/distribute your own).

Fill in the information (Instance Name=DemoInstance, Demonstration, vFW) for a Service Instance as shown below and press Confirm.

Adjust the values above for project, owning entity, and vFWCL/vSNK.

Wait for a response and close the window.

...

/sdnc-oam/admportal/mobility.js

router.post('/addVnfNetwork', csp.checkAuth, function(req,res){

...

)";

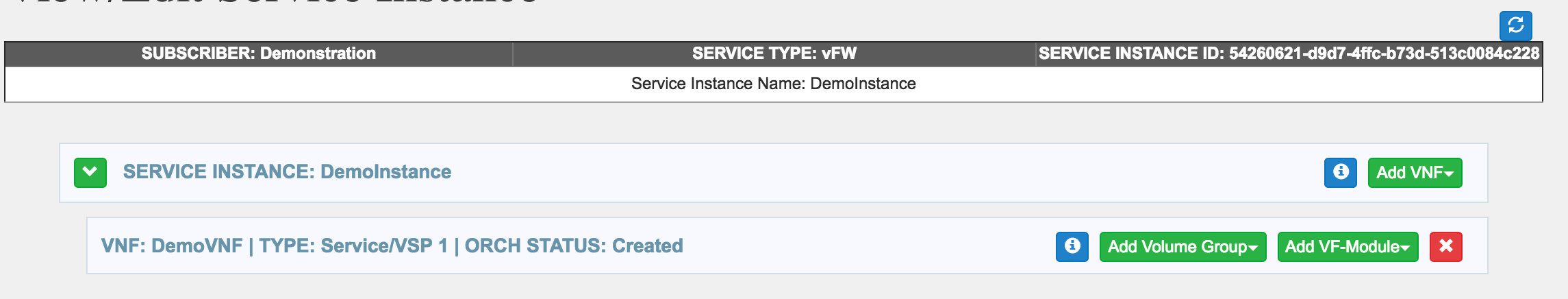

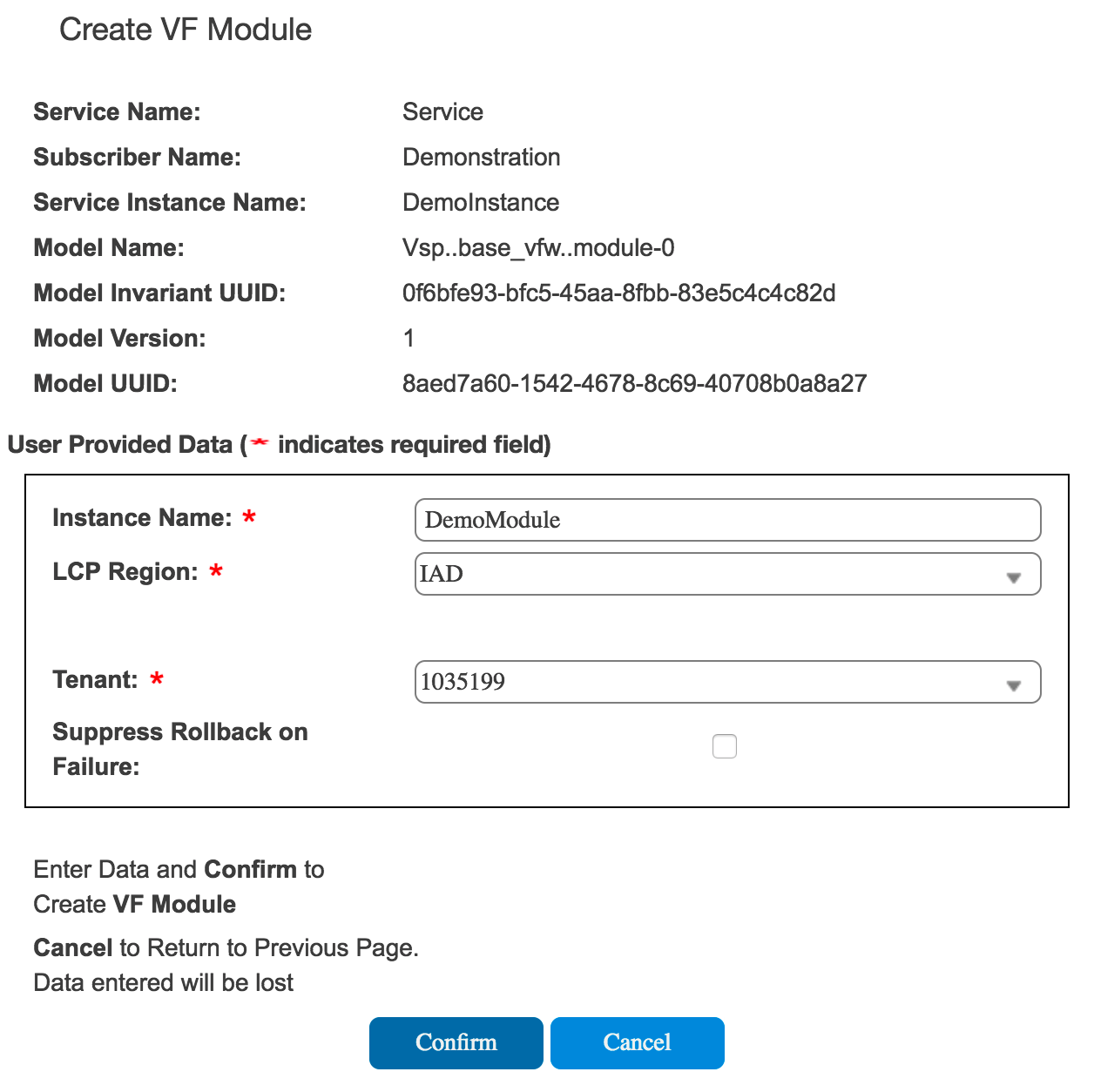

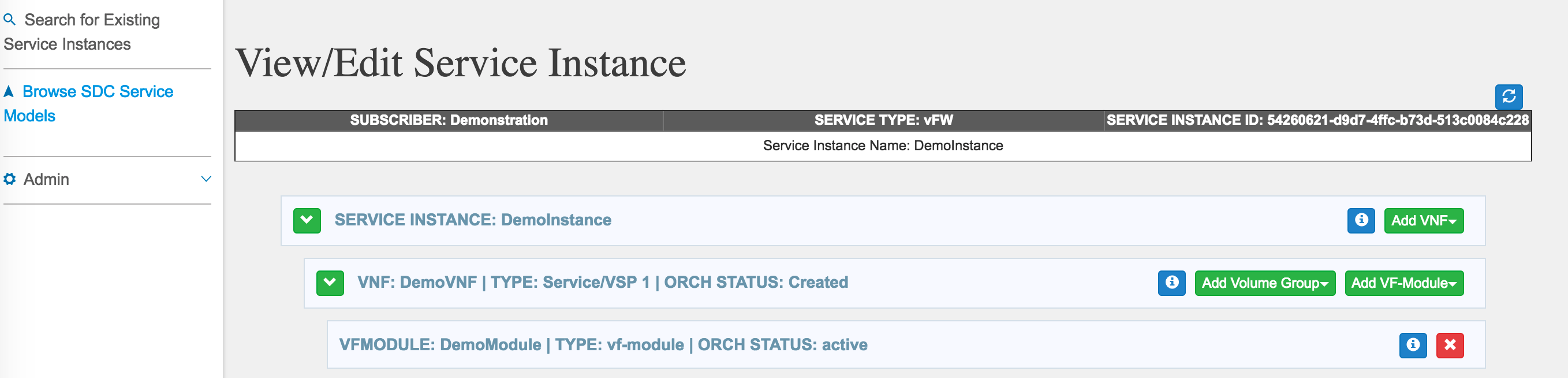

Add a VF Module in VID

Option 1: REST call to MSO

POST to http://{{mso_ip}}:8080/ecomp/mso/infra/serviceInstances/v2/<id>/vnfs/<id>/vfModules - see UCA-20 OSS JAX-RS 2 Client

Option 2: VID GUI

Add a VF Module using the drop down button.

Fill in information for the VF module (service name = Service) and confirm.
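For Option 1 above, the endpoint can be assembled from the MSO IP, the service instance ID, and the VNF ID. The following is only a sketch of the URL construction: the function name `vf_module_url` and the placeholder values are assumptions for illustration, and the request body is not shown because this page does not document it.

```python
from urllib.parse import quote


def vf_module_url(mso_ip: str, service_instance_id: str, vnf_id: str,
                  port: int = 8080) -> str:
    """Build the MSO vfModules endpoint using the path given in Option 1."""
    # Percent-encode the IDs so stray characters cannot alter the path.
    service = quote(service_instance_id, safe="")
    vnf = quote(vnf_id, safe="")
    return (
        f"http://{mso_ip}:{port}/ecomp/mso/infra/serviceInstances/v2/"
        f"{service}/vnfs/{vnf}/vfModules"
    )


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(vf_module_url("10.0.0.1", "service-instance-id", "vnf-id"))
```

The two `<id>` placeholders in the path are, in order, the service instance ID and the VNF ID.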

Watch VF VM stack creation

Watch as the 3 VMs for the VF start to come up on Rackspace (dialog is still up)

Note: OpenStack users with RegionOne may see failures here. Look into the referenced ticket to update the /shared/mso-docker.json file in the MSO Docker container with RegionOne settings. For logs, use:

docker logs -f testlab_mso_1

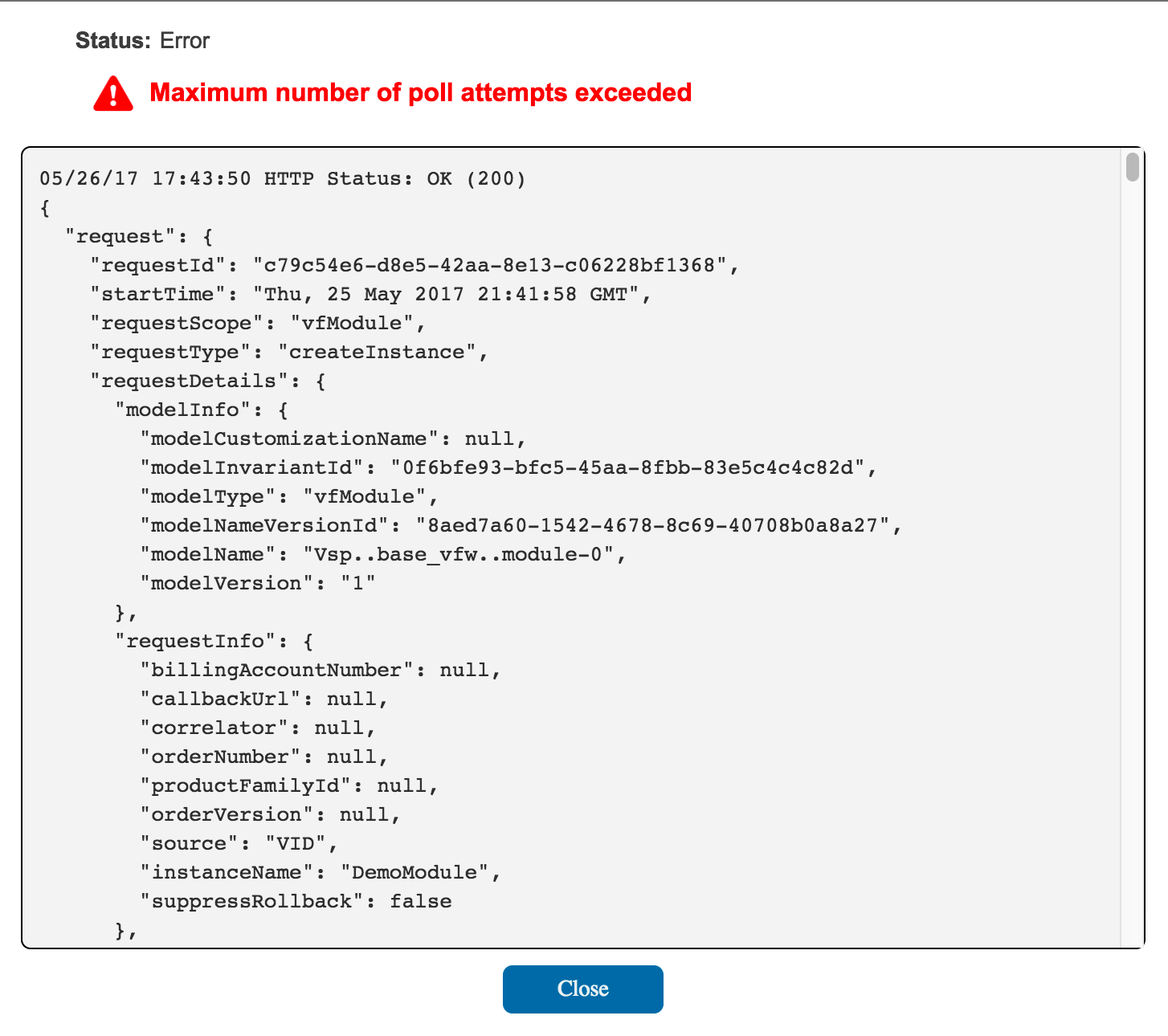

Create VF Module - polling hangs - vFW VMs are created though

Eventually you will see a (red-herring) poll timeout. The wait time and number of retries need to be adjusted here. In any case, the 3 VMs are up (they respond to pings, but their processes do not necessarily return 200 on health checks).
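To illustrate what "wait time and number of retries" means for a poll like this, here is a minimal generic sketch. It is not the VID implementation; `wait_until`, its parameters, and the default values are hypothetical and chosen only for the example.

```python
import time
from typing import Callable


def wait_until(check: Callable[[], bool], retries: int = 30,
               wait_seconds: float = 10.0,
               sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call check() up to `retries` times, pausing between attempts.

    Returns True as soon as check() succeeds, or False once every
    attempt has been used (the "poll timeout" case).
    """
    for attempt in range(retries):
        if check():
            return True
        # No need to wait after the final attempt.
        if attempt < retries - 1:
            sleep(wait_seconds)
    return False
```

The total time budget is roughly `retries * wait_seconds`, so a stack that takes longer than that to come up will be reported as a timeout even though creation eventually succeeds.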

...

Select Close, and then cancel the Create VF Module dialog (for now), because the VMs were actually created.


...

Browse our new vFW service

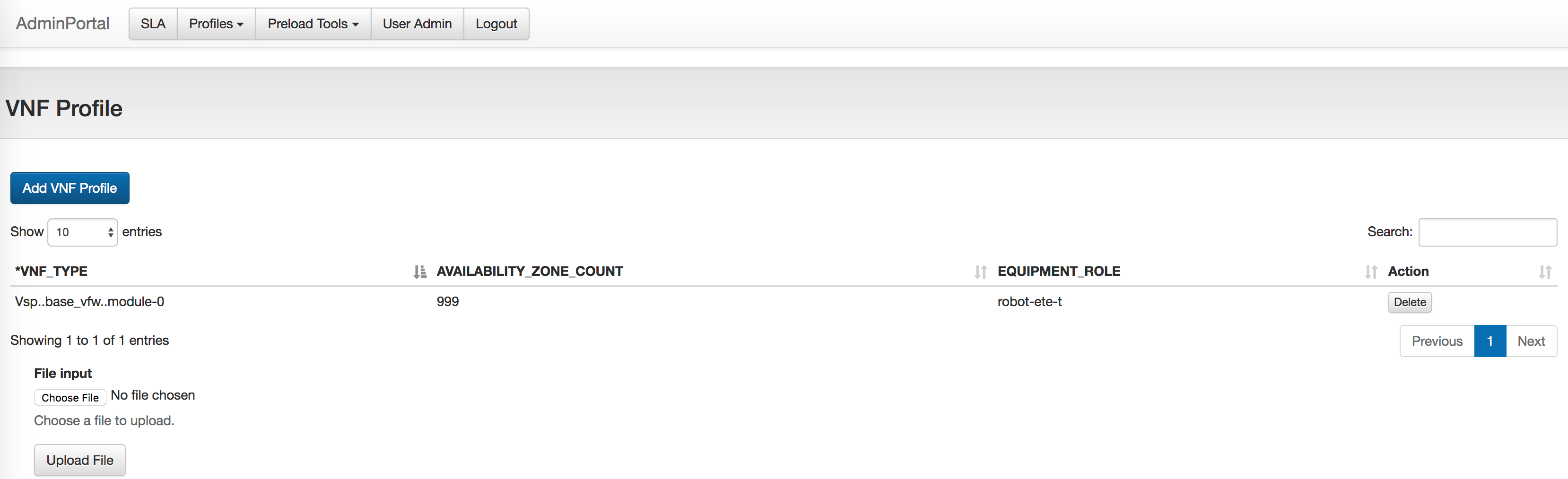

Verify VNF Profile

Create an account on SDNC at http://sdnc-ip:8843/signup

Log in at http://sdnc-ip:8843/login

Check the VNF Profile in the Profile menu.

Wait for the response and close the window, as was done in prior steps. The VF Module creation can also be viewed as a stack in Rackspace, as shown below.

...

onap@server-01:~/onap$ openstack port list | grep ip_address=.10.1.0.

| 6d4c9ef9-ceec-4c62-85b1-fa6f2de34256 | FirewallSvcModule-vfw_private_2_port-ewvqxhjdm2tv | BC:76:4E:20:57:DB | ip_address='10.1.0.11', subnet_id='5a4808b2-2fca-40ab-ba43-10d21a9e5b64' | ACTIVE |

| 7861e542-600f-4bfa-96d0-47e1be19331d | FirewallSvcModule-vpg_private_1_port-ctu2jymvh2yr | BC:76:4E:20:3B:75 | ip_address='10.1.0.12', subnet_id='5a4808b2-2fca-40ab-ba43-10d21a9e5b64' | ACTIVE |

| b22e7d79-58e6-4c16-8acc-f1a4c358c8c9 | FirewallSvcModule-vsn_private_1_port-xit2fdnpz2yd | BC:76:4E:20:3B:63 | ip_address='10.1.0.13', subnet_id='5a4808b2-2fca-40ab-ba43-10d21a9e5b64' | ACTIVE |
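When checking which addresses are already taken before another instantiation, the port listing above can be scanned for its `ip_address='...'` fields. This is a small sketch that works on the text output only; the function name `ips_in_use` is hypothetical, and the sample rows are shortened versions of the listing above.

```python
import re
from typing import List

# Matches the ip_address='...' field as printed by `openstack port list`.
IP_PATTERN = re.compile(r"ip_address='([^']+)'")


def ips_in_use(port_list_output: str) -> List[str]:
    """Return every fixed IP found in the port list text, in order of appearance."""
    return IP_PATTERN.findall(port_list_output)


if __name__ == "__main__":
    sample = (
        "| 6d4c9ef9 | vfw_private_2_port | ip_address='10.1.0.11', subnet_id='5a48' | ACTIVE |\n"
        "| b22e7d79 | vsn_private_1_port | ip_address='10.1.0.13', subnet_id='5a48' | ACTIVE |\n"
    )
    print(ips_in_use(sample))
```

An address that appears in this list is held by an existing port, so a new stack that requests the same fixed IP cannot be created until that port is released.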

...

05/18/17 15:05:57 HTTP Status: OK (200)

{

"request": {

"requestId": "6c0afeaf-42a4-4628-9312-2305e533f673",

"startTime": "Wed, 17 May 2017 19:04:56 GMT",

"requestScope": "vfModule",

"requestType": "createInstance",

...

"requestStatus": {

"requestState": "FAILED",

"statusMessage": "Received vfModuleException from VnfAdapter: category='INTERNAL' message='Exception during create VF 0 : Stack error (CREATE_FAILED): Resource CREATE failed: IpAddressInUseClient: resources.vsn_private_1_port: Unable to complete operation for network 6dfab28d-183e-4ffd-8747-b360aa41b078. The IP address 10.1.0.13 is in use. - stack successfully deleted' rolledBack='true'",

"percentProgress": 100,

"finishTime": "Wed, 17 May 2017 19:05:48 GMT"

}

}

}
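The response above reports a FAILED request because the IP address 10.1.0.13 was already in use. As a rough sketch, the state and the conflicting address can be pulled out of such a response as follows. The function name `failure_summary` is hypothetical, and the regular expression assumes the message wording shown above.

```python
import json
import re
from typing import Optional, Tuple


def failure_summary(response_text: str) -> Tuple[str, Optional[str]]:
    """Return (requestState, conflicting IP or None) from an MSO request response."""
    request_status = json.loads(response_text)["request"]["requestStatus"]
    state = request_status["requestState"]
    # Look for the "IP address ... is in use" wording from the Heat error.
    match = re.search(r"The IP address (\S+) is in use",
                      request_status.get("statusMessage", ""))
    return state, (match.group(1) if match else None)


if __name__ == "__main__":
    # Trimmed-down sample with the same field names as the response above.
    sample = json.dumps({
        "request": {
            "requestStatus": {
                "requestState": "FAILED",
                "statusMessage": "The IP address 10.1.0.13 is in use. - stack successfully deleted",
            }
        }
    })
    print(failure_summary(sample))
```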


Handle outdated vFW (201702xx) zip causing Traffic Generation not to start

Fix: Use the 1.0.0 template in Nexus - or the updated one on this wiki

1) The vFW zip attached to the onap.org wiki that we were using will not work with 1.0.0-SNAPSHOT or 1.0.0 (it is disabled and being replaced). We now use the official YAML from 1.0.0, which fixes the userdata bootstrap script on the PGN instance, where the Nexus pulls of the TG scripts were failing (this is why the demo did not work in the past). We now use the following, with a modified SSH key, IPs, and networks:

Heat template:

https://nexus.onap.org/content/sites/raw/org.openecomp.demo/heat/vFW/1.0.0/

Scripts to verify on the pgn VM:

https://nexus.onap.org/content/sites/raw/org.openecomp.demo/vnfs/vfw/1.0.0/

After this we were able to run ./demo.sh appc to start the TG.

Issue:

We are currently having issues with the traffic generator: both starting the stream and SSHing to the VM fail (it looks like the SSH key in the env is not picked up).

Fix: the repo URL in the vFW zip has changed to

#repo_url: https://ecomp-nexus:8443/repository/raw/org.openecomp.simpledemo

repo_url: https://nexus.onap.org/content/sites/raw/org.openecomp.demo/vnfs/vfw/1.0.0-SNAPSHOT

Check your TG VM and look for scripts in /config like the following, which should have been copied over:

wget --user=$REPO_USER --password=$REPO_PASSWD $REPO_URL/v_firewall_init.sh

Also, the private key for the 3 vFW VMs is in /testsuite/robot/assets/keys/robot_ssh_private_key.pvt

TODO: 20181023 during the Academic Conference: the SDNC preload checkbox does not actually run the preload Robot script; it just tells SO to pull in data from SDNC. A manual preload via the REST call is still needed, as described in Vetted vFirewall Demo - Full draft how-to for F2F and ReadTheDocs.

Install the vFWCL first because it has the network.

To do repeated instantiations, adjust the network values in the preload-vnf-topology-operation REST call (being automated in Casablanca) - 92,96 - and put in the right service-type (the Service Instance ID, top right in the GUI).