...

```shell
# set up the master
git clone https://gerrit.onap.org/r/logging-analytics
sudo logging-analytics/deploy/rancher/oom_rancher_setup.sh -b master -s <your domain/ip> -e onap

# for now, manually delete the host that was registered on the master, in the Rancher GUI

# run the host install on the master without the rancher agent (-n false only mounts the EFS share)
sudo logging-analytics/deploy/aws/oom_cluster_host_install.sh -n false -s <your domain/ip> -e fs-nnnnnn1b -r us-west-1 -t 371AEDC88zYAZdBXPM -c true -v true
ls /dockerdata-nfs/
onap test.sh

# run the script from git on each cluster node
git clone https://gerrit.onap.org/r/logging-analytics
sudo logging-analytics/deploy/aws/oom_cluster_host_install.sh -n true -s <your domain/ip> -e fs-nnnnnn1b -r us-west-1 -t 371AEDC88zYAZdBXPM -c true -v true

# check a node
ls /dockerdata-nfs/
onap test.sh
sudo docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
6e4a57e19c39 rancher/healthcheck:v0.3.3 "/.r/r /rancher-en..." 1 second ago Up Less than a second r-healthcheck-healthcheck-5-f0a8f5e8
f9bffc6d9b3e rancher/network-manager:v0.7.19 "/rancher-entrypoi..." 1 second ago Up 1 second r-network-services-network-manager-5-103f6104
460f31281e98 rancher/net:holder "/.r/r /rancher-en..." 4 seconds ago Up 4 seconds r-ipsec-ipsec-5-2e22f370
3e30b0cf91bb rancher/agent:v1.2.9 "/run.sh run" 17 seconds ago Up 16 seconds rancher-agent

# on the master: fix helm after adding the nodes (helm init --upgrade upgrades tiller)
sudo helm init --upgrade
$HELM_HOME has been configured at /home/ubuntu/.helm.
Tiller (the Helm server-side component) has been upgraded to the current version.
sudo helm repo add local http://127.0.0.1:8879

# check the cluster on the master
kubectl top nodes
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
ip-172-31-16-85.us-west-1.compute.internal 129m 3% 1805Mi 5%
ip-172-31-25-15.us-west-1.compute.internal 43m 1% 1065Mi 3%
ip-172-31-28-145.us-west-1.compute.internal 40m 1% 1049Mi 3%
ip-172-31-21-240.us-west-1.compute.internal 30m 0% 965Mi 3%

# important: secure your Rancher cluster by enabling GitHub OAuth access control, to keep out crypto miners
http://cluster.onap.info:8880/admin/access/github

# back on the master, install ONAP
sudo cp logging-analytics/deploy/cd.sh .
sudo ./cd.sh -b master -e onap -c true -d true -w false -r false
136 pending > 0 at the 1th 15 sec interval
ubuntu@ip-172-31-28-152:~$ kubectl get pods -n onap | grep -E '1/1|2/2' | wc -l
20
120 pending > 0 at the 39th 15 sec interval
ubuntu@ip-172-31-28-152:~$ kubectl get pods -n onap | grep -E '1/1|2/2' | wc -l
47
99 pending > 0 at the 93th 15 sec interval

# after an hour, most of the 136 pods should be up
kubectl get pods --all-namespaces | grep -E '0/|1/2'
onap onap-aaf-cs-59954bd86f-vdvhx 0/1 CrashLoopBackOff 7 37m
onap onap-aaf-oauth-57474c586c-f9tzc 0/1 Init:1/2 2 37m
onap onap-aai-champ-7d55cbb956-j5zvn 0/1 Running 0 37m
onap onap-drools-0 0/1 Init:0/1 0 1h
onap onap-nexus-54ddfc9497-h74m2 0/1 CrashLoopBackOff 17 1h
onap onap-sdc-be-777759bcb9-ng7zw 1/2 Running 0 1h
onap onap-sdc-es-66ffbcd8fd-v8j7g 0/1 Running 0 1h
onap onap-sdc-fe-75fb4965bd-bfb4l 0/2 Init:1/2 6 1h

# CPU bound: this small cluster has 4 nodes x 4 cores; try 4 nodes x 16 cores
ubuntu@ip-172-31-28-152:~$ kubectl top nodes
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
ip-172-31-28-145.us-west-1.compute.internal 3699m 92% 26034Mi 85%
ip-172-31-21-240.us-west-1.compute.internal 3741m 93% 3872Mi 12%
ip-172-31-16-85.us-west-1.compute.internal 3997m 99% 23160Mi 75%
ip-172-31-25-15.us-west-1.compute.internal 3998m 99% 27076Mi 88%

# for reference: register_node and the option parsing from oom_cluster_host_install.sh
register_node() {
  # constants
  DOCKERDATA_NFS=dockerdata-nfs
  DOCKER_VER=17.03
  USERNAME=ubuntu

  if [[ "$IS_NODE" != false ]]; then
    # cluster nodes only: install Rancher's Docker build and let $USERNAME run docker
    sudo curl https://releases.rancher.com/install-docker/$DOCKER_VER.sh | sh
    sudo usermod -aG docker $USERNAME
  fi

  # every host, master included: mount the shared EFS volume at /dockerdata-nfs
  sudo apt-get install nfs-common -y
  sudo mkdir /$DOCKERDATA_NFS
  sudo mount -t nfs4 -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2 $AWS_EFS.efs.$AWS_REGION.amazonaws.com:/ /$DOCKERDATA_NFS

  if [[ "$IS_NODE" != false ]]; then
    # register this host with the Rancher server on $MASTER using the registration token
    echo "Running agent docker..."
    if [[ "$COMPUTEADDRESS" != false ]]; then
      # let the agent detect the host IP itself
      echo "sudo docker run --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.9 http://$MASTER:8880/v1/scripts/$TOKEN"
      sudo docker run --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.9 http://$MASTER:8880/v1/scripts/$TOKEN
    else
      # advertise the address passed with -a
      echo "sudo docker run -e CATTLE_AGENT_IP=\"$ADDRESS\" --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.9 http://$MASTER:8880/v1/scripts/$TOKEN"
      sudo docker run -e CATTLE_AGENT_IP="$ADDRESS" --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.9 http://$MASTER:8880/v1/scripts/$TOKEN
    fi
  fi
}

IS_NODE=false
MASTER=
TOKEN=
AWS_REGION=
AWS_EFS=
COMPUTEADDRESS=true
ADDRESS=
VALIDATE=

# usage() prints the help text (not shown in this excerpt)
# -v takes a value (-v true), so it needs a ':' like the other value flags
while getopts ":u:n:s:e:r:t:c:a:v:" PARAM; do
  case $PARAM in
    u)
      usage
      exit 1
      ;;
    n) IS_NODE=${OPTARG} ;;
    s) MASTER=${OPTARG} ;;
    e) AWS_EFS=${OPTARG} ;;
    r) AWS_REGION=${OPTARG} ;;
    t) TOKEN=${OPTARG} ;;
    c) COMPUTEADDRESS=${OPTARG} ;;
    a) ADDRESS=${OPTARG} ;;
    v) VALIDATE=${OPTARG} ;;
    ?)
      usage
      exit 1
      ;;
  esac
done

# -s <master> is mandatory
if [ -z "$MASTER" ]; then
  usage
  exit 1
fi

# register_node reads the globals set above; the arguments are not used inside it
register_node $IS_NODE $MASTER $AWS_EFS $AWS_REGION $TOKEN $COMPUTEADDRESS $ADDRESS $VALIDATE
```
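The host install script's `-v` option takes a value, and the commands above pass `-v true`. For `getopts` to hand that value to `VALIDATE`, `v` needs a trailing colon in the option string, like the other value-taking flags. The sketch below tests that parsing in isolation. It also builds the EFS DNS name that `register_node` mounts. `parse_host_args` and `efs_endpoint` are hypothetical helpers written for illustration, not functions in logging-analytics, and `-u` is left out.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; not part of logging-analytics.

# Build the EFS DNS name the way register_node does:
#   <efs id>.efs.<region>.amazonaws.com
efs_endpoint() {
  printf '%s.efs.%s.amazonaws.com\n' "$1" "$2"
}

# Parse the same flags as oom_cluster_host_install.sh (minus -u).
# Every flag that takes a value, including -v, has a ':' after it.
parse_host_args() {
  IS_NODE=false MASTER= AWS_EFS= AWS_REGION= TOKEN=
  COMPUTEADDRESS=true ADDRESS= VALIDATE=
  OPTIND=1   # reset so the function can be called more than once
  while getopts ":n:s:e:r:t:c:a:v:" PARAM; do
    case $PARAM in
      n) IS_NODE=$OPTARG ;;
      s) MASTER=$OPTARG ;;
      e) AWS_EFS=$OPTARG ;;
      r) AWS_REGION=$OPTARG ;;
      t) TOKEN=$OPTARG ;;
      c) COMPUTEADDRESS=$OPTARG ;;
      a) ADDRESS=$OPTARG ;;
      v) VALIDATE=$OPTARG ;;
      :) echo "option -$OPTARG needs a value" >&2; return 1 ;;
      \?) echo "unknown option -$OPTARG" >&2; return 1 ;;
    esac
  done
  # the master host (-s) is mandatory, as in the original script
  if [ -z "$MASTER" ]; then
    echo "missing -s <master>" >&2
    return 1
  fi
}

# Example: the arguments used on each cluster node
parse_host_args -n true -s cluster.onap.info -e fs-nnnnnn1b -r us-west-1 \
  -t "<token>" -c true -v true
echo "validate=$VALIDATE"                  # validate=true
efs_endpoint "$AWS_EFS" "$AWS_REGION"      # fs-nnnnnn1b.efs.us-west-1.amazonaws.com
```

Returning non-zero instead of exiting keeps the helper usable from an interactive shell or a test. The original script exits directly because it runs once per host.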

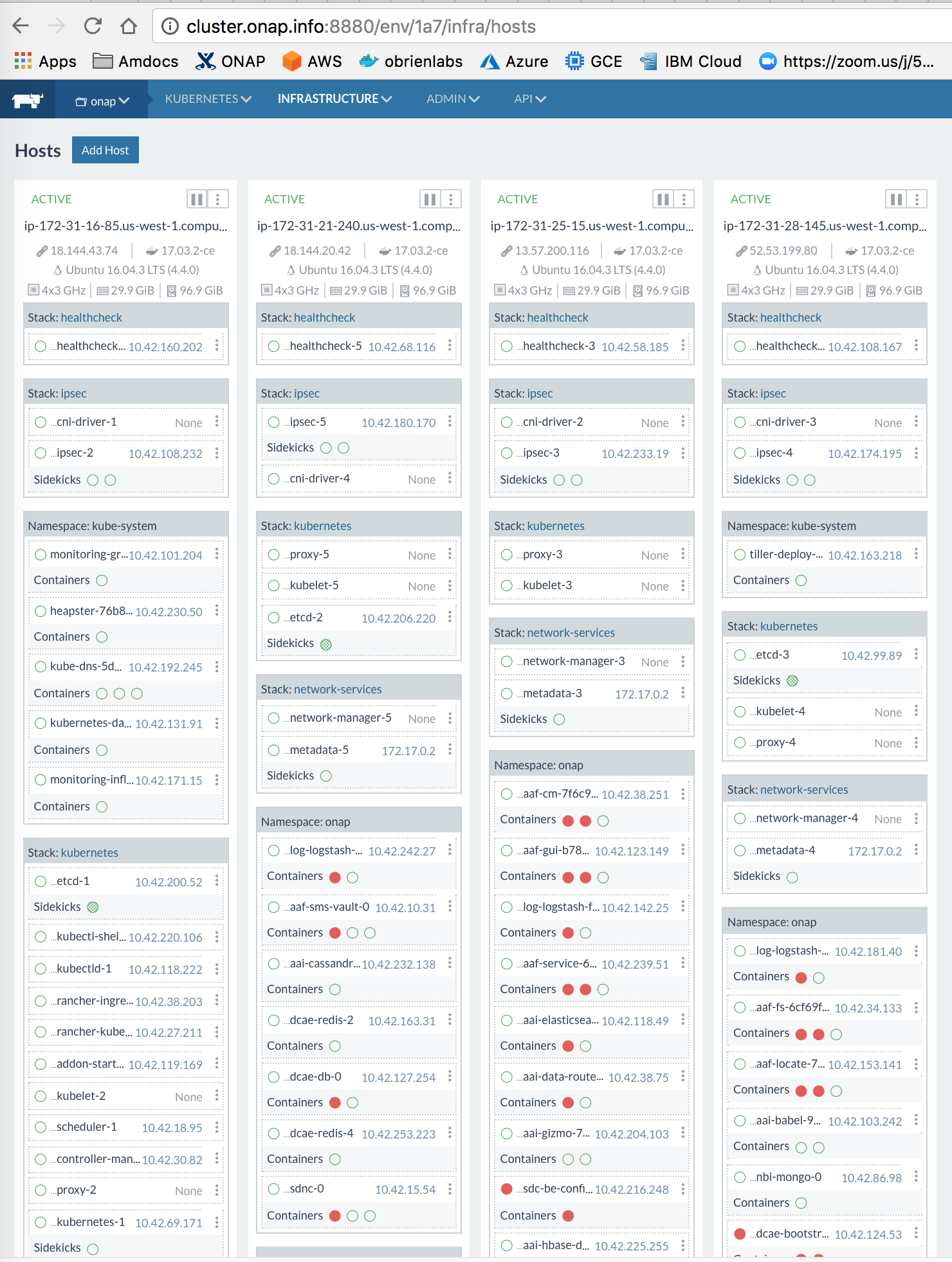

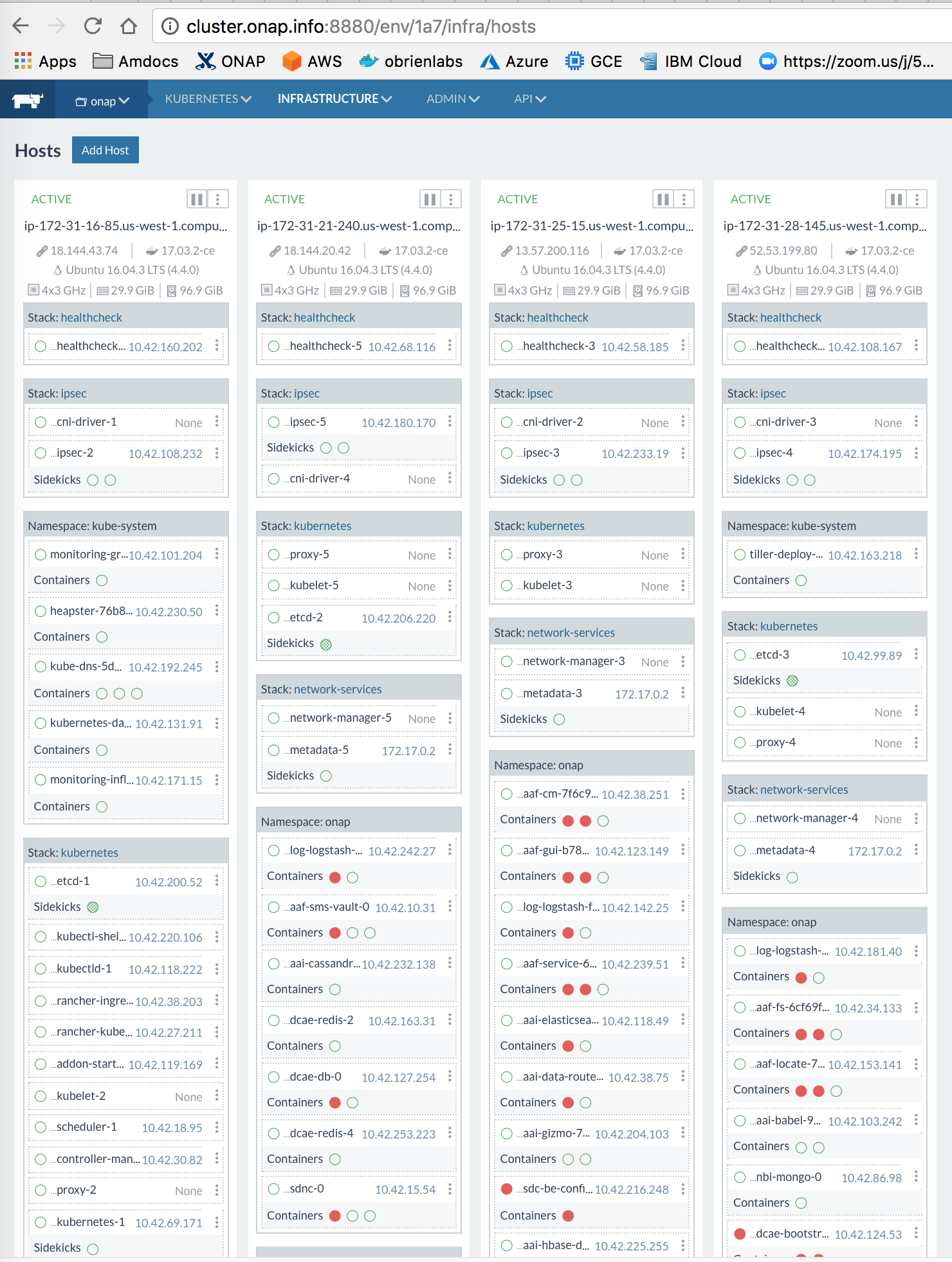

13 Node Kubernetes Cluster on AWS

Notice that we are vCore bound. Ideally, a minimal production system needs 64 vCores; this cluster runs with 12 x 4 vCores = 48.
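The sizing arithmetic above can be written as a quick shell check. It multiplies hosts by vCores per host, compares the total with the 64-vCore target, and rounds any shortfall up to whole hosts. `sum_vcores` and `vcore_gap` are hypothetical helpers. The 64 is the figure quoted above, not a documented ONAP minimum.

```shell
#!/bin/sh
# Hypothetical helpers: compare cluster vCores with a target.

# Sum CPU capacities given as one integer per line on stdin.
sum_vcores() {
  awk '{ total += $1 } END { print total + 0 }'
}

# vcore_gap TOTAL TARGET CORES_PER_HOST
# Prints the shortfall and how many more hosts of that size would cover it.
vcore_gap() {
  total=$1 target=$2 per_host=$3
  if [ "$total" -ge "$target" ]; then
    echo "ok: $total >= $target vCores"
    return 0
  fi
  short=$((target - total))
  # round up: a partial host still means one more host
  hosts=$(( (short + per_host - 1) / per_host ))
  echo "short by $short vCores: add $hosts more ${per_host}-vCore hosts"
}

vcore_gap $((12 * 4)) 64 4    # short by 16 vCores: add 4 more 4-vCore hosts
vcore_gap $((4 * 16)) 64 16   # ok: 64 >= 64 vCores
```

On a running cluster, the per-node capacities could be piped in with `kubectl get nodes -o jsonpath='{range .items[*]}{.status.capacity.cpu}{"\n"}{end}' | sum_vcores`. This counts every registered node, so the result only matches 12 x 4 if the master is not registered as a host.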

Client Install

```shell
ssh ubuntu@ld.onap.info

# set up the master
git clone https://gerrit.onap.org/r/logging-analytics
sudo logging-analytics/deploy/rancher/oom_rancher_setup.sh -b master -s <your domain/ip> -e onap

# for now, manually delete the host that was registered on the master, in the Rancher GUI

# run the host install on the master without the rancher agent (-n false only mounts the EFS share)
ls /dockerdata-nfs/
onap test.sh

# run the script from git on each cluster node
git clone https://gerrit.onap.org/r/logging-analytics
sudo logging-analytics/deploy/aws/oom_cluster_host_install.sh -n true -s <your domain/ip> -e fs-nnnnnn1b -r us-west-1 -t 371AEDC88zYAZdBXPM -c true -v true

# check a node
ls /dockerdata-nfs/
onap test.sh

# important: secure your Rancher cluster by enabling GitHub OAuth access control, to keep out crypto miners
http://cluster.onap.info:8880/admin/access/github

# back on the master, install ONAP
sudo cp logging-analytics/deploy/cd.sh .
sudo ./cd.sh -b master -e onap -c true -d true -w false -r false

# after an hour, most of the 136 pods should be up
kubectl get pods --all-namespaces | grep -E '0/|1/2'

# CPU bound: this small cluster has 4 nodes x 4 cores; try 4 nodes x 16 cores
ubuntu@ip-172-31-28-152:~$ kubectl top nodes
```
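The `grep -E '0/|1/2'` filter above matches substrings anywhere in the line. As a result, a pod at `2/3` is missed, and a fully ready `10/10` pod is reported. The sketch below compares the two halves of the READY column instead. It also flags nodes at or above a CPU% threshold in `kubectl top nodes` output. `unready_pods` and `busy_nodes` are hypothetical helpers. They assume the default column layout shown above: READY is column 3 with `--all-namespaces`, and CPU% is column 3 of `kubectl top nodes`.

```shell
#!/bin/sh
# Hypothetical helpers; both read default kubectl table output on stdin.

# Print pods whose ready count is below their container count.
# Input: `kubectl get pods --all-namespaces` (READY is column 3).
unready_pods() {
  awk '$3 ~ /^[0-9]+\/[0-9]+$/ {
    split($3, ready, "/")
    if (ready[1] + 0 < ready[2] + 0) print $1, $2, $3, $4
  }'
}

# Print nodes whose CPU% is at or above a threshold (default 90).
# Input: `kubectl top nodes` (CPU% is column 3).
busy_nodes() {
  awk -v limit="${1:-90}" '$3 ~ /^[0-9]+%$/ {
    pct = $3
    sub(/%$/, "", pct)
    if (pct + 0 >= limit + 0) print $1, $3
  }'
}

# On the master, for example:
#   kubectl get pods --all-namespaces | unready_pods
#   kubectl top nodes | busy_nodes 90
```

The header rows drop out naturally because `READY` and `CPU%` do not match the numeric patterns. This means the helpers also work on output filtered with `--no-headers`.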

Kubernetes Installation via CloudFormation

...