Overview: ONAP Next Generation Security & Logging Architecture, Design and Roadmap

- Provided Rationale for Service-Mesh Security: uniform, open-source- and standard-based security at the platform-level

- Istio was chosen for ONAP Service-Mesh Security to fulfill ONAP security requirements

- Defined ONAP Security Architecture, leveraging Istio, Keycloak, Cert-Mgr, Ingress, Egress

- Multi-Tenancy architecture and plan were addressed

- OOM team defined the service-mesh implementation priority for Istanbul: service-to-service mTLS first, then AAA (AuthN, AuthZ, Audit)

- Currently, collecting feedback from NSA

- Implementation Plan for Istanbul:

- Phase 1: OOM will contribute this mTLS with Service-Mesh (contribution target: SDC, DCAE, SO, AAI, DMaaP, SDNC)

- Make selected components Service-Mesh compatible

- Configure service-to-service mTLS communication

- Configure peer authentication policies

- Configure authorization policy for coarse-grained authorization

- Phase 2: OOM plans to contribute on AAA, Multi-Tenancy and logging (stretch goals)

- Deploy/Configure Keycloak as the reference IdAM

- Configure Istiod for public key and JWT handling

- Configure request authentication policies

- Configure additional authorization policies

Migration from AAF to Service-Mesh Security

Why not ONAP AAF (Application AuthZ Framework)

- It is a solution for ONAP, which is maintained by ONAP only

- AAF CADI plugin supports Java only, where some ONAP components are written in other languages (Python, etc.)

- Clients in various languages could have language specific restrictions

- AAF needs to keep up with the latest security technology evolution, but it has no active support in ONAP

- AAF is not widely used by ONAP projects due to:

- Integration/enforcement of AuthN and AuthZ are done by each project

- Mutual-TLS enablement & Secrets/certificate management are done by each project

- For encryption, each project needs to understand AAF certificate management mechanism

- Compatibility with a variety of external authentication systems is a challenge

- Third-party microservices require modification to work with AAF

- SSO and Multi-factor authentication support is questionable

We want to achieve simpler, uniform, open source- and standard-based Security (Service Mesh-pattern Security):

- Eliminate or reduce microservice infrastructure level security tasks in services

- Security is handled at the platform level

- Enabling uniform security across applications

- Minimize code changes and increase stability

- Platform-agnostic abstraction reduces risks on integration, extensibility and customization

- Use of Sidecar, so security layer is transparent for the application

- External Interfaces secured at Ingress Gateway

- Internal Interfaces secured at Service Mesh Sidecar

- User Management and RBAC is managed by open-source technologies

Benefits to ONAP, O-RAN and External Components

- The uniform, open-source-based and standard-based security would be a foundation for secure integration between ONAP / O-RAN and external components

- Product impacts for security would be minimum

- Ensure ONAP services have only the business logic of that service

- Third-party microservices can participate in ONAP security by leveraging the platform-level security (sidecar)

- Vendors can take advantage of open-source-based security, instead of having and maintaining their own security mechanism: cost effective

Service Mesh Security with Istio

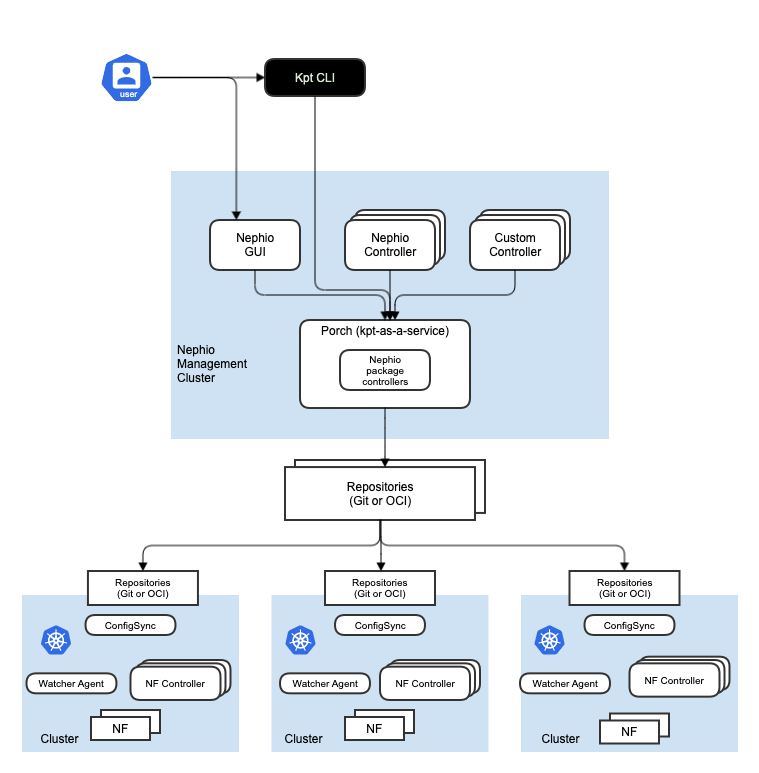

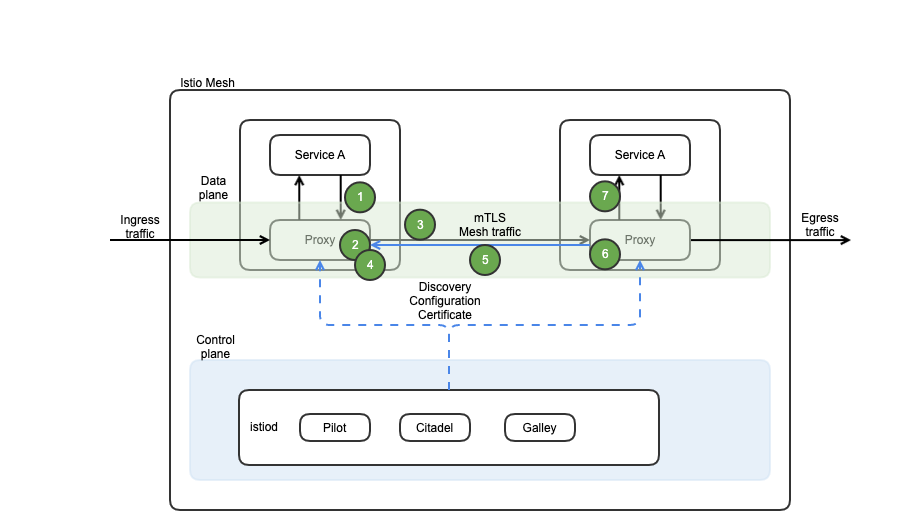

The following diagram depicts the Istio mesh architecture overview:

Why Istio?

Istio was chosen because it fulfills the current ONAP security requirements/needs with the following capabilities and also provides a future-proof mechanism.

However, please note that a new platform could be considered in the future as needed.

Istio authentication and authorization:

- Provides each service with a strong identity representing its role to enable interoperability across clusters and clouds.

- Secures service-to-service communication with authentication and authorization.

- Provides a key management system to automate key and certificate generation, distribution, and rotation.

Microservices have security needs:

- To defend against man-in-the-middle attacks, they need traffic encryption.

- To provide flexible service access control, they need mTLS (mutual TLS) and fine-grained access policies.

- To determine who did what at what time, they need auditing tools.

With its control and data planes, mTLS, Ingress, Proxy and Egress, Istio provides security features for:

- Strong identity

- Powerful policy

- Transparent TLS encryption

- Authentication, Authorization and Audit (AAA) tools to protect your services and data

Goals of Istio-based security are:

- Security by default: no changes needed to application code and infrastructure

- Defense in depth: integrate with existing security systems to provide multiple layers of defense

- Zero-trust network: build security solutions on distrusted networks

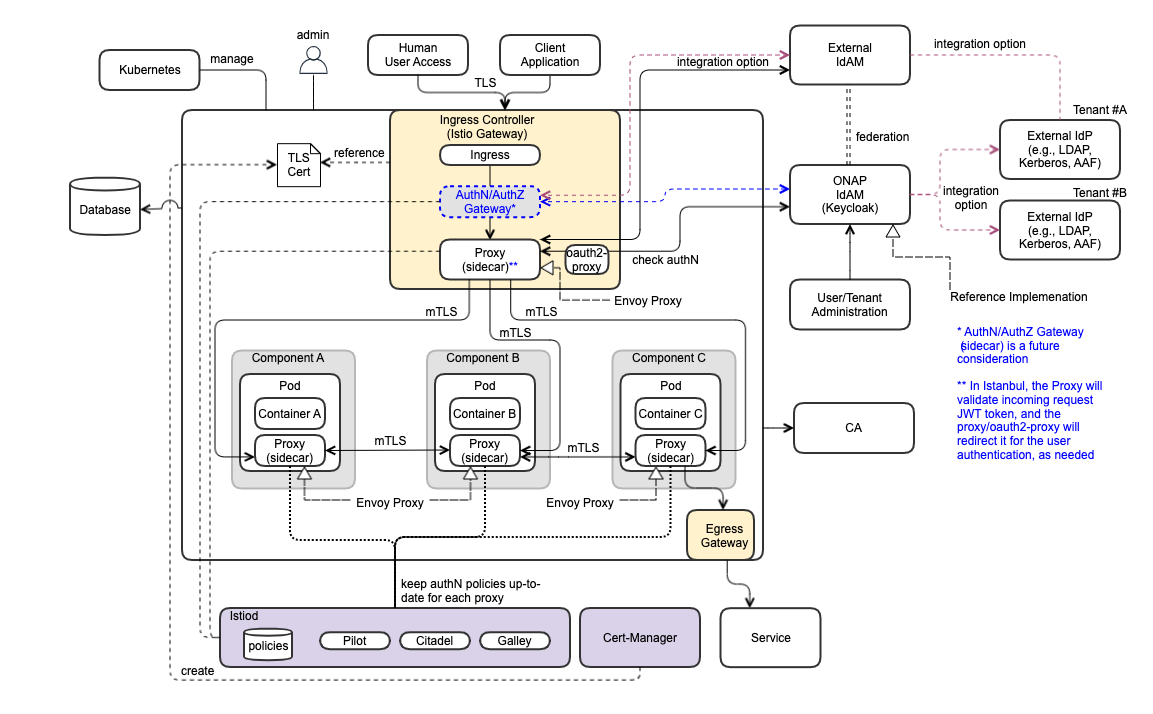

ONAP Security Architecture - Leveraging Istio, Keycloak, Cert-Mgr, Ingress, Egress

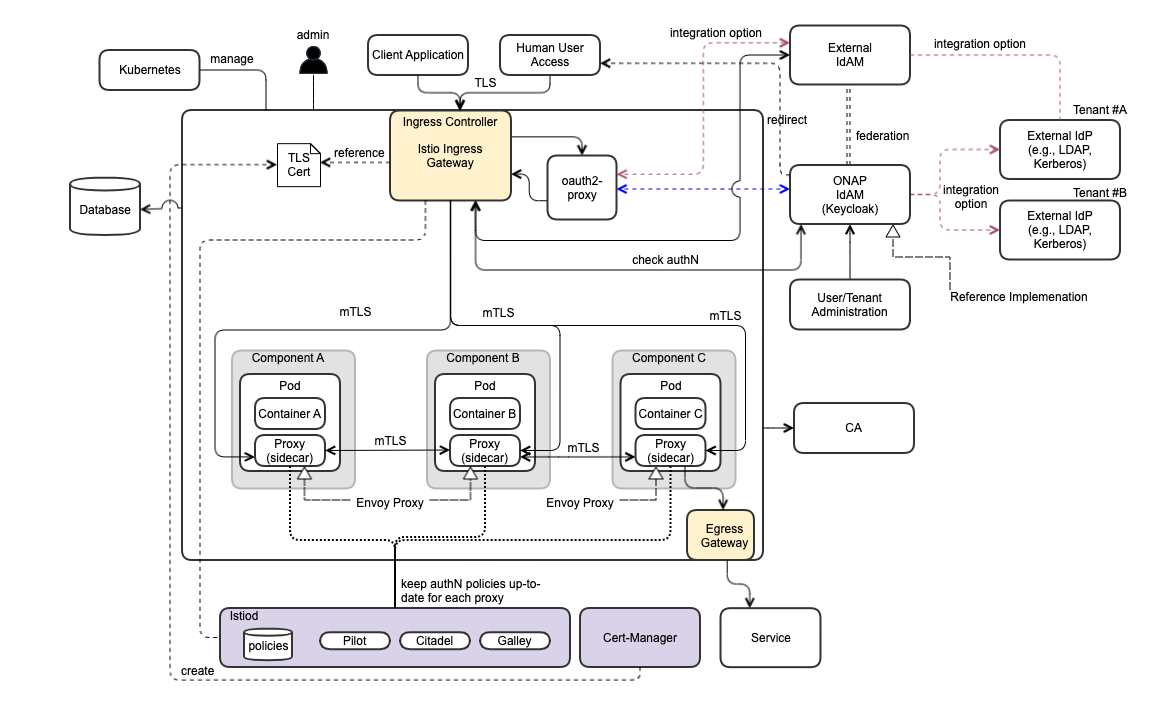

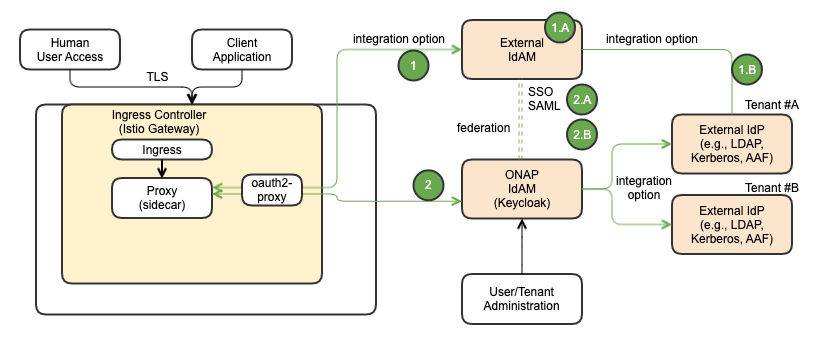

The following diagram depicts ONAP Security Architecture.

Security Functional Blocks

- Ingress Controller (realized by istio-ingress gateway)

- AuthN/AuthZ Gateway (sidecar) – future consideration

- ONAP IdAM (realized by Keycloak)

- User Administration

- Tenant Administration

- Proxy (sidecar) – for data plane

- oauth2-proxy

- External IdP (vendor-provided component)

- External IdAM

- Egress

- Istiod – control plane (pilot, citadel, galley, cert-mgr)

- Certificate Authority (CA)

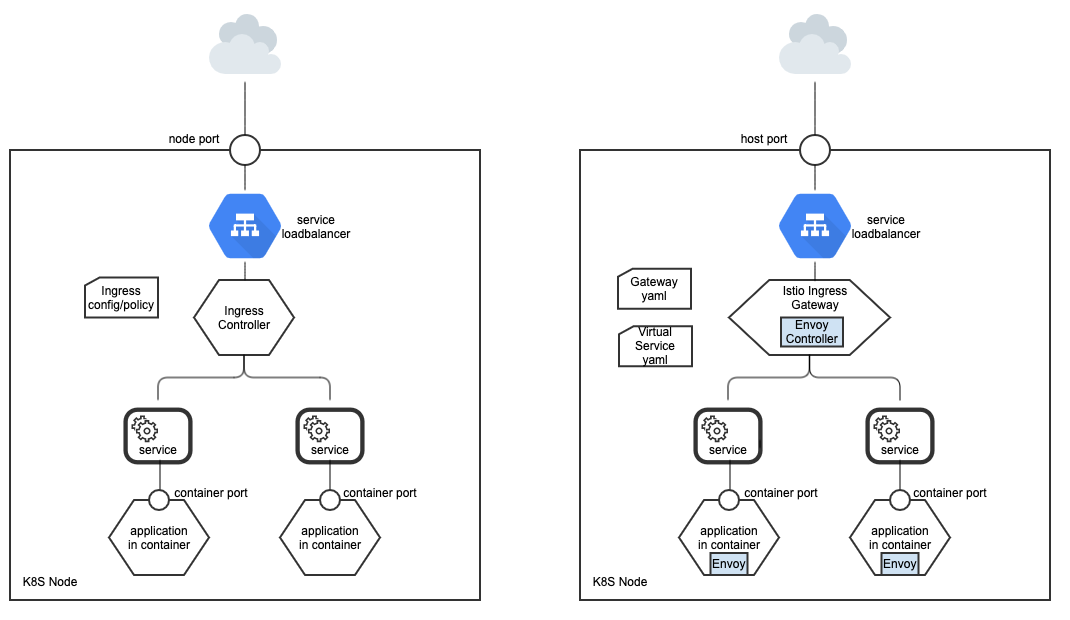

Kubernetes Ingress vs. Istio Ingress Gateway

Kubernetes Ingress

- One NodePort per service

- It is a reverse proxy and Nginx is recommended

- Different ports for different applications

- It has limited traffic control/routing rules and capabilities

Istio Ingress Gateway

- It is a POD with Envoy that does the routing

- It is configured by Gateway and Virtual Service (which configures routing and enables intelligent routing)

- It can be configured for routing rules, traffic rate limiting, policy-based checking, metrics collections
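As an illustration of how an Istio Ingress Gateway is configured by a Gateway and a Virtual Service, the following is a minimal sketch, not the ONAP configuration. The host name, secret name, namespace, service name and port are all hypothetical placeholders.

```yaml
# Gateway: binds the Istio ingress gateway pod to an HTTPS listener
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: onap-gateway                      # hypothetical name
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway                 # label of the default Istio ingress gateway pod
  servers:
    - port:
        number: 443
        name: https
        protocol: HTTPS
      tls:
        mode: SIMPLE                      # TLS is terminated at the gateway
        credentialName: onap-ingress-cert # hypothetical Kubernetes TLS secret
      hosts:
        - "sdc.onap.example.com"          # hypothetical external host name
---
# VirtualService: routes traffic arriving at the gateway to a service in the mesh
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: sdc-ui                            # hypothetical name
  namespace: onap
spec:
  hosts:
    - "sdc.onap.example.com"
  gateways:
    - istio-system/onap-gateway
  http:
    - match:
        - uri:
            prefix: /
      route:
        - destination:
            host: sdc-fe.onap.svc.cluster.local   # hypothetical target service
            port:
              number: 8181                        # hypothetical service port
```

With sidecars injected and mTLS enabled, traffic from the gateway to the destination service is re-encrypted by the mesh, so no per-application TLS setup is needed in this sketch.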

Security Architecture Highlights

- Use of control plane and pluggable sidecar (platform container) proxies to protect application service

- Intercept traffic and check AuthN/AuthZ with IdAM and IdP

- Support SSO for Human user access request

- Compatibility with a variety of external authN/authZ systems (e.g., external IdP, IdAM)

- Allowing external IdP(s) and IdAM(s)

- Enabling a foundation for authN federation

- RBAC support, based on user role, group & permission

- Multi-Tenancy support

ONAP Component Security Requirements

The following are the ONAP component security requirements.

| # | Requirement |
|---|---|
| 1 | No implicit dependencies between common components and ONAP |
| 3 | istio-ingress is used as ingress controller |
| 4 | Separate Northbound (for user) and Southbound (xNF) interfaces |
| 5 | Every ingress gateway terminates the TLS and re-encrypts the payload before sending to the destination component using mTLS |
| 6 | Istio network policy must be configured to allow only authorized service communications |
| 7 | AuthN between services is done using certs via mTLS |
| 8 | OpenID Connect is used to authenticate users |
| 9 | ONAP IdAM is realized by Keycloak, but it can be replaced with an external IdAM that is compatible with OIDC; authentication federation is out of scope for Istanbul |
| 10 | Cert-manager and Citadel are used to manage (CRUDQ) certificates |
| 11 | Kubernetes is configured to use encryption at rest |
| 12 | Istio automated sidecar injection is configured in the underlying Kubernetes |
| 13 | No root pods are used |
| 14 | All databases are considered external |
| 16 | Support possible options to integrate with LDAP, Kerberos, AAF as IdP |
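Requirement 12 (automated sidecar injection) is typically enabled per namespace through a label. The following is a minimal sketch; the namespace name `onap` is an assumption for illustration.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: onap                    # assumed namespace name
  labels:
    istio-injection: enabled    # Istio injects the sidecar proxy into new pods in this namespace
```

Pods that already exist in the namespace pick up the sidecar only after they are recreated.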

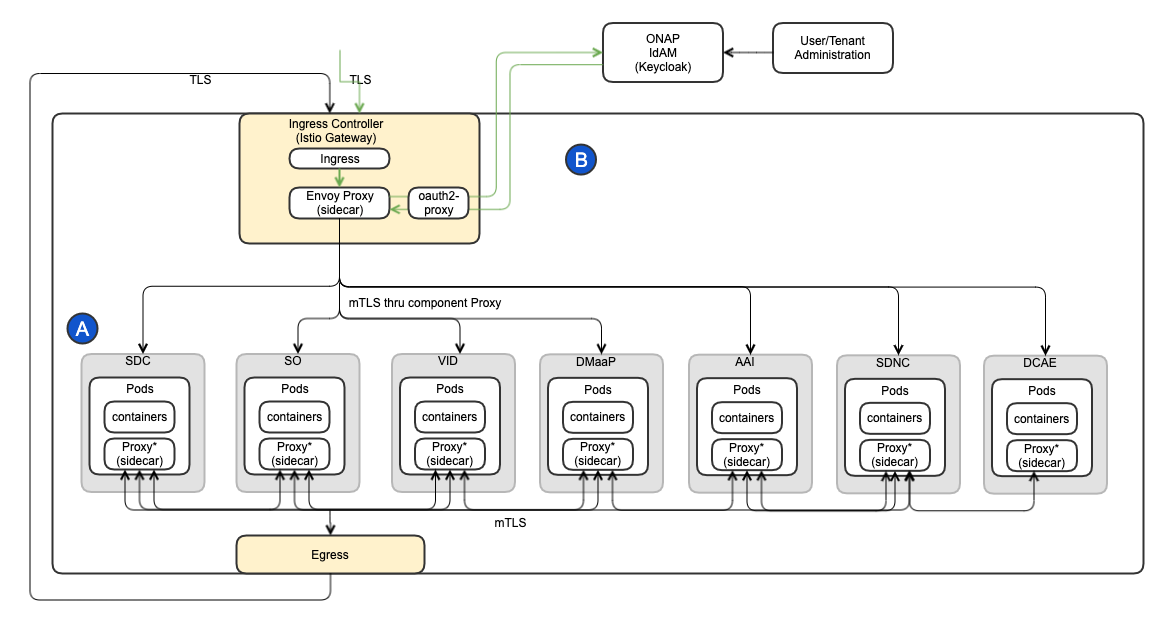

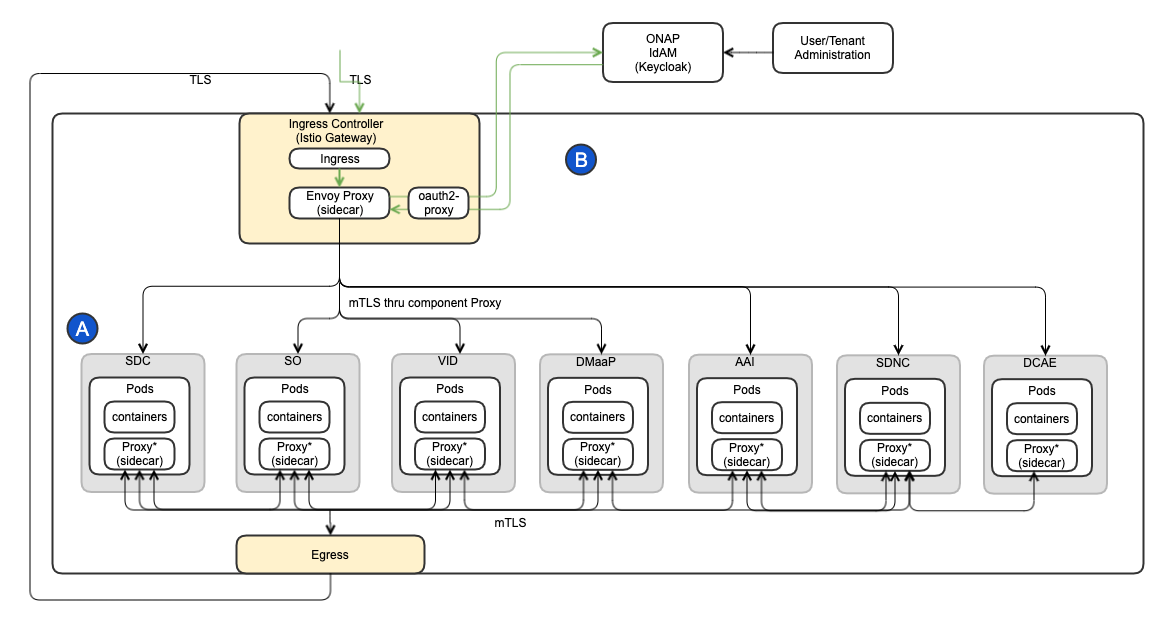

ONAP OOM Plan - Istanbul Priorities

The following diagram depicts ONAP OOM plan and Istanbul priorities:

note:

- Istio 1.10 is used

- for Istanbul, the ONAP default IdAM (realized by Keycloak) will be used as the reference implementation/configuration.

- Keycloak internal IdP will be used

- Multi-tenancy support of external IdAM and IdP will not be configured

ONAP Component mTLS (A): make core ONAP deployable on Service Mesh (mTLS first without AAA): basic_onboarding, basic_vm automated integration tests

- Service Mesh compatibility:

- DMaaP Compatibility on service mesh

- Cassandra (and Istio 1.10?)

- AAI is ongoing

- issue with jobs; trying the "quitquitquit" solution here (cleanly exit the server)

- issue on sparky be: there's an aaf leftover part

- cannot test part needing cassandra for now

- SDC is ongoing

- SO is planned

- DCAE is ongoing

- SDNC is planned

- Better use of Service Mesh within components:

- SO: patch ongoing: https://gerrit.onap.org/r/c/so/+/122263

- Plan to check if we can run without authentication on these components; also check if we enlarge # of components

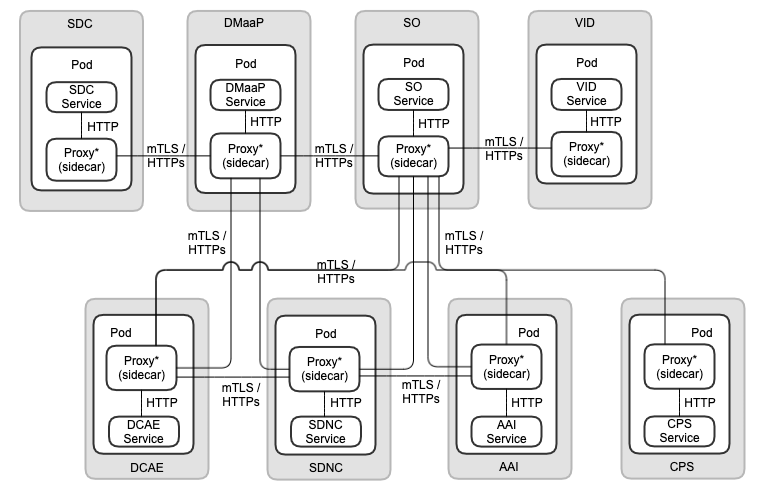

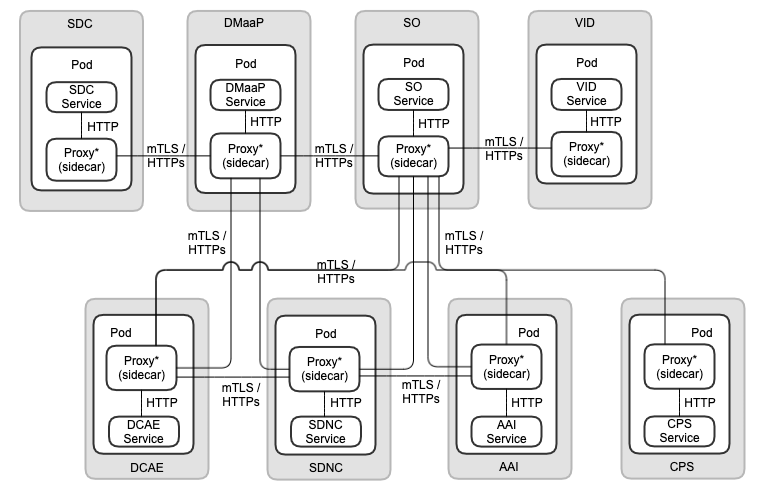

Communication Protocol between Service and Proxy and between Proxies

- The communication protocol between Service and Proxy is "HTTP".

- Service-Mesh Security architecture supports security at the platform level

- Application does not handle security directly

- For that, Service and the corresponding Proxy will sit on the same POD to make their communication internal

- The communication protocol between Proxies is mTLS via "HTTPS".

ONAP Component AAA (B) – stretch goal for Istanbul

- onboard roles and realm on Keycloak for tests / reference implementation (use of OIDC / JWT)

- add oauth2-proxy in the solution to redirect unauthenticated traffic to SSO Portal (keycloak as example)

- add some rules to enforce (AuthorizationPolicy)

- add some service accounts (work ongoing)

OAuth2-based Access Token AuthN/AuthZ

The following diagram depicts OAuth2-based Access Token AuthN/AuthZ.

- If an access token is used (unless it is a self-contained access token), the Resource Server needs to ask the Authorization Server to validate the access token.

- This can cause chatty communication between the Resource Server and the Authorization Server.

- By leveraging the JWT (JSON Web Token), the above chatty communication can be reduced.

- In ONAP, the JWT is used for the browser client and application client request authN and authZ - see the following

OAuth2-based JWT AuthN/AuthZ

The following diagram depicts OAuth2-based JWT AuthN/AuthZ.

- By leveraging the JWT (JSON Web Token), the chatty communication between the Resource Server and the Authorization Server can be removed.

- In ONAP, the JWT is used for the browser client and application client request authN and authZ - see the following

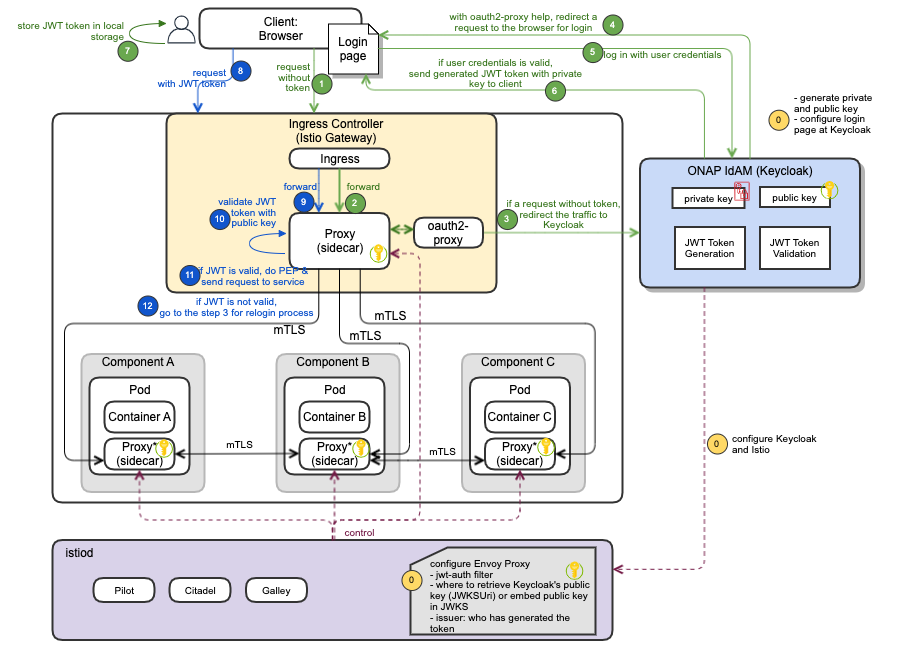

ONAP Browser Client Request Authentication and Authorization Use Case

The following diagram depicts the browser client request authentication and authorization process.

| # | Sequence |
|---|---|
| 0 | Configuration steps: |
| 1 | Client sends a request without a token |
| 2 | Ingress intercepts and forwards the request to the Envoy proxy |
| 3 | If the request has no token, oauth2-proxy redirects the traffic to Keycloak (reference configuration; could be configured to use an external IdAM) for the login process |
| 4 | Keycloak (with oauth2-proxy help) redirects the request to the login page. Note: this login page is configured at Keycloak, and is provided to the user. This redirection is important because users are completely isolated from applications and applications never see a user's credentials. Applications instead are given an identity token or assertion that is cryptographically signed. |
| 5 | Client logs in with user credentials |
| 6 | If the user credentials are valid, Keycloak generates a JWT token, signed with the private key of its private/public key pair, and sends the generated token to the client |
| 7 | Client stores the JWT token in local storage |
| 8 | Client sends a request with the JWT token |
| 9 | Ingress intercepts and forwards the request to the Envoy proxy |
| 10 | Envoy proxy validates the JWT token with the public key |
| 11 | If the JWT is valid, it enforces the security policy (e.g., authN, authZ). Once all checks pass, it sends the request to the service |
| 12 | If the JWT is not valid, go to step 3 for the re-login process. If the maximum number of tries is exceeded, error handling will be processed (details are TBD) |

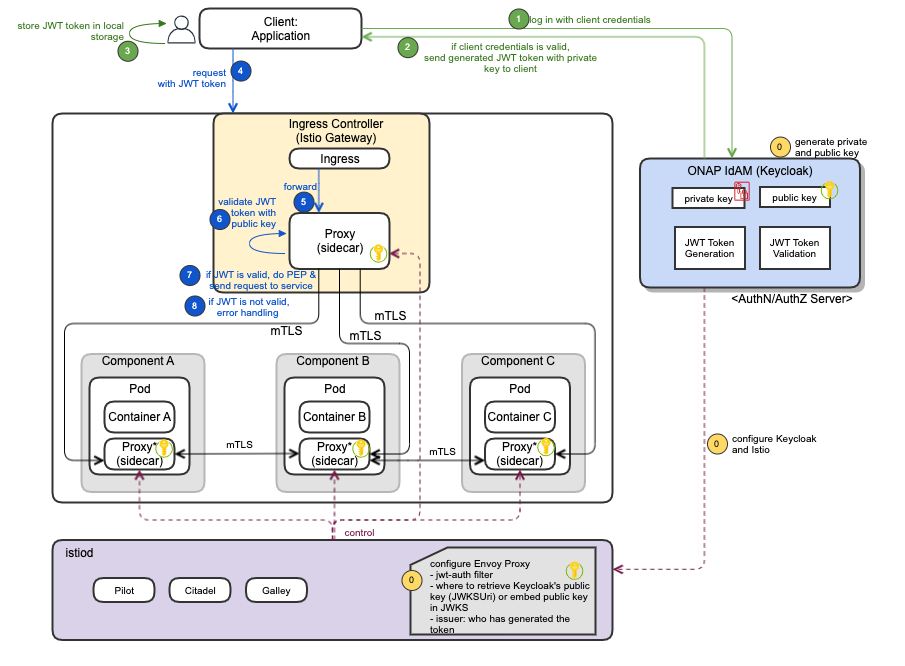

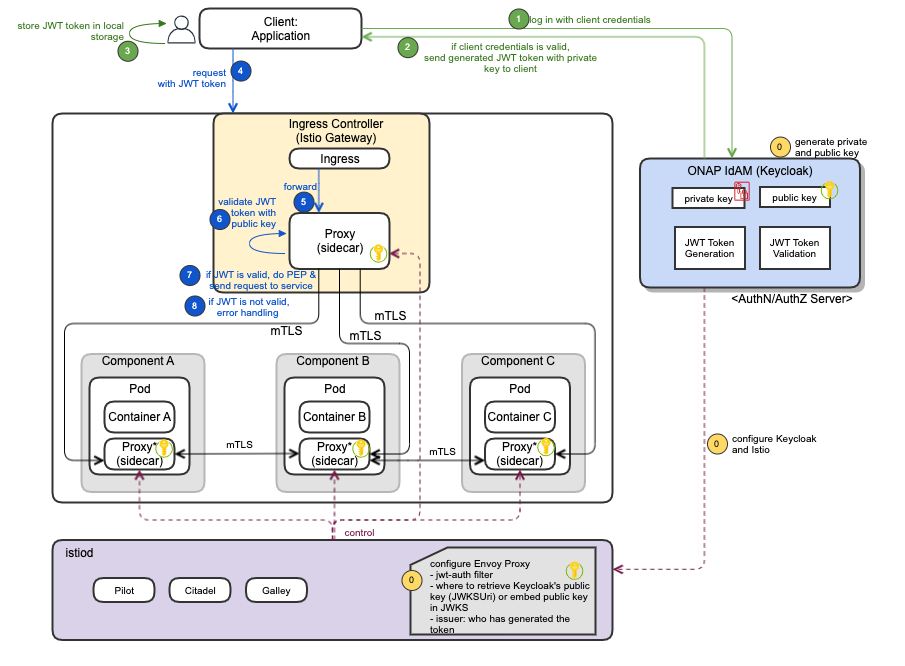

Machine Client Request Authentication and Authorization

The following diagram depicts the machine client request authentication and authorization process, by leveraging IdAM and Istio which support JWT.

| # | Sequence |
|---|---|
| 0 | Configuration steps: |
| 1 | Client logs in with client credentials towards Keycloak |
| 2 | If the client credentials are valid, Keycloak generates a JWT token, signed with the private key of its private/public key pair, and sends the generated token to the client |
| 3 | Client stores the JWT token in local storage |
| 4 | Client sends a request with the JWT token |
| 5 | Ingress intercepts and forwards the request to the Envoy proxy |
| 6 | Envoy proxy validates the JWT token with the public key |
| 7 | If the JWT is valid, it enforces the security policy (e.g., authN, authZ). Once all checks pass, it sends the request to the service |
| 8 | If the JWT is not valid, error handling will be processed (details are TBD) |

Request Authentication Configuration

Request authentication policies specify the values needed to validate a JWT. Istio checks the presented token.

- If a request presents a token that violates the rules in the request authentication policy, Istio rejects the request as carrying an invalid token

- Requests that carry no token are accepted by default; to reject them, authorization policies must be configured to require a valid token

- If more than one policy matches a workload, Istio combines all rules

See the authentication policy section for details.
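The behaviour described above can be sketched with a request authentication policy plus an authorization policy that rejects requests without a token. This is a minimal illustration under stated assumptions: the Keycloak host name, the realm name `onap`, the URL paths, the namespace and the workload label are all hypothetical.

```yaml
# RequestAuthentication: tells the sidecar how to validate a presented JWT
apiVersion: security.istio.io/v1beta1
kind: RequestAuthentication
metadata:
  name: onap-jwt                 # hypothetical name
  namespace: onap
spec:
  selector:
    matchLabels:
      app: onap-a                # hypothetical workload label
  jwtRules:
    # assumed Keycloak realm URLs; adjust to the actual deployment
    - issuer: "https://keycloak.example.com/auth/realms/onap"
      jwksUri: "https://keycloak.example.com/auth/realms/onap/protocol/openid-connect/certs"
---
# AuthorizationPolicy: only requests with a valid JWT (any request principal) are allowed,
# so requests that carry no token are rejected for this workload
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: onap-require-jwt         # hypothetical name
  namespace: onap
spec:
  selector:
    matchLabels:
      app: onap-a
  action: ALLOW
  rules:
    - from:
        - source:
            requestPrincipals: ["*"]
```

The first resource alone rejects only invalid tokens; the second is what closes the gap for requests with no token at all.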

Service-2-Service (Peer) mTLS Communication With Istio

- The Service-to-Service authN is supported by leveraging mTLS certificates, instead of username/password

- Also, authorization will be supported for mTLS by Istio authorization configuration

- Two services will communicate using a direct connection (e.g., via REST APIs) without passing through the Ingress Controller or MSB

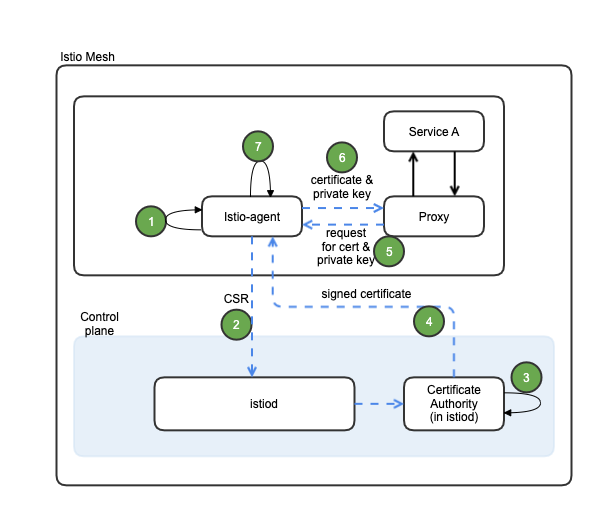

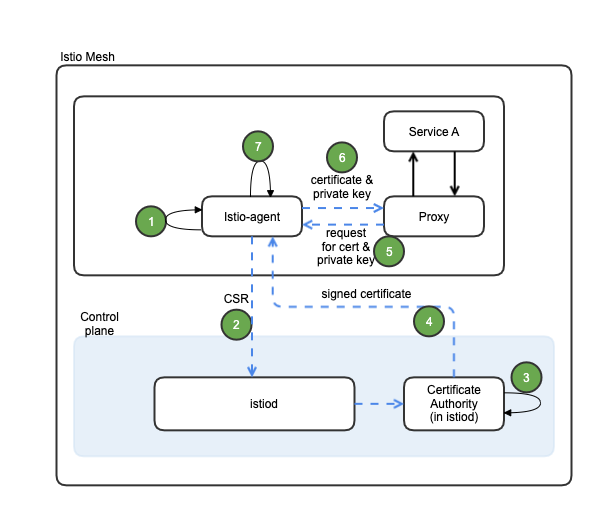

mTLS Certification Management

The following diagram depicts the Istio certificate management and interaction with the Proxy (istio-agent and Envoy proxy work together with istiod to automate key and certificate rotation):

| # | Sequence (mTLS Certification Management) |
|---|---|
| 1 | When started, the Istio agent creates a private key and a CSR (certificate signing request) |
| 2 | The Istio agent sends the CSR with its credentials to istiod for signing |
| 3 | The CA in istiod validates the credentials in the CSR and, upon successful validation, signs the CSR to generate the certificate |
| 4 | The CA in istiod sends the signed certificate to the Istio agent |
| 5 | When a workload is started, the Envoy proxy requests the certificate and key from the Istio agent via the SDS (secret discovery service) API |
| 6 | The Istio agent sends the certificate and private key to the Envoy proxy |
| 7 | The Istio agent monitors the expiration of the workload certificate. The above process repeats periodically for certificate and key rotation |

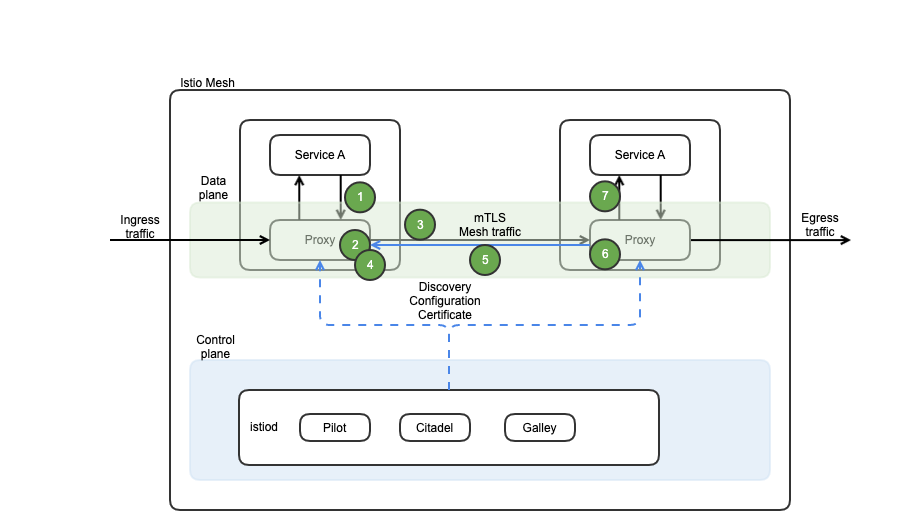

mTLS Service-2-Service Secure communication with Authentication and Authorization

The following diagram depicts the Service-to-Service mTLS secure communication with Authentication and Authorization:

| # | Sequence (mTLS Authentication Flow) - TLSv1_2 |
|---|---|
| 1 | Client application in a container sends a plain-text HTTP request towards the server |
| 2 | Client proxy container intercepts the outbound request |
| 3 | Client proxy performs a TLS handshake with the server-side proxy |
|  | A. This handshake exchanges client and server certificates |
|  | B. Istio preloads the certificates into the proxy containers (see diagram A) |
| 4 | Client proxy checks for ‘secure naming’ on the server’s certificate, and verifies that the server identity (service account presented in the server certificate) is authorized to run the target service |
| 5 | Client and server establish an mTLS connection |
| 6 | Istio forwards the traffic from the client-side proxy to the server-side proxy |
| 7 | The server-side proxy authorizes the request |
|  | A. If authorized, Istio forwards the traffic to the server application service through local TCP |
|  | B. If not authorized, an error condition arises |

Authentication Mode

Peer authentication policies specify the mTLS mode that Istio enforces on target workloads:

- PERMISSIVE: workloads accept both mTLS and plain text traffic

- STRICT: workloads accept only mTLS traffic

- DISABLE: mTLS is disabled

Permissive mode allows a service to accept both plaintext traffic and mutual TLS traffic at the same time. During the transition period, permissive mode would be allowed so that clients and servers that are not yet Service-Mesh enabled (non-Istio) can continue to communicate. Once ONAP components are Service-Mesh enabled, permissive mode could be disabled.
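For the transition period, a namespace-wide permissive policy could look like the following minimal sketch. The namespace name `onap` is an assumption; a policy without a `selector` applies to all workloads in its namespace.

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default            # conventional name for a namespace-wide policy
  namespace: onap          # assumed namespace
spec:
  mtls:
    mode: PERMISSIVE       # accept both mTLS and plain text during migration
```

Once all components in the namespace are Service-Mesh enabled, changing `mode` to `STRICT` turns off plain-text acceptance.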

Authentication Policy Configuration

Note: during Istanbul, peer authentication will be configured first (high priority). OOM will then address request authentication.

Authentication Policies are configured in .yaml files, and are saved in the Istio configuration storage once deployed. The Istio controller watches the configuration storage. Upon any policy changes, Istio sends configurations to the targeted endpoints (e.g., Envoy Proxy). Once the proxy receives the configuration, the new authentication requirement takes effect immediately on that pod.

- For request authentication, the client application is responsible for acquiring and attaching the JWT credential to the request.

- For peer authentication, Istio automatically upgrades all traffic between two PEPs to mTLS.

- If authentication policies disable mTLS mode, Istio continues to use plain text between PEPs. In ONAP Istanbul, mTLS mode will be enabled; any use case that requires mTLS to be disabled will be reviewed as an exception request.

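Example:

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: "onap-peer-policy"
  # placeholder namespace; Kubernetes namespace names cannot contain underscores
  namespace: "onap-x"
spec:
  # apply the policy only to workloads labelled app=onap_A
  selector:
    matchLabels:
      app: onap_A
  mtls:
    # accept only mutual TLS traffic
    mode: STRICT
```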

Authorization Policy Configuration

Note: during Istanbul, service-to-service (workload-to-workload) authorization will be configured first (high priority). OOM will then address end-user-to-service (workload) authorization.

- The authorization policy enforces access control to the inbound traffic in the server side Envoy proxy. Each Envoy proxy runs an authorization engine that authorizes requests at runtime.

- When a request comes to the proxy, the authorization engine evaluates the request context against the current authorization policies, and returns the authorization result, either ALLOW or DENY.

- Istio authorization policies are configured using .yaml files.

<source: https://istio.io/latest/docs/concepts/security/#authentication-policies>

Authorization policies support ALLOW, DENY and CUSTOM actions. The following diagram depicts the policy precedence.

- CUSTOM → DENY → ALLOW

- In ONAP Istanbul, DENY and ALLOW will be configured first, as coarse-grained authorization. The CUSTOM action would then be considered for fine-grained authorization in the future (as time allows).

<source: https://istio.io/latest/docs/concepts/security/#authentication-policies>

Example,

<source: https://istio.io/latest/docs/concepts/security/#authentication-policies>
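As an illustration of coarse-grained service-to-service authorization with ALLOW and DENY actions, the following is a minimal sketch rather than an agreed ONAP policy. The workload label, service account, namespace, paths and methods are hypothetical. Matching on `principals` relies on mTLS being enabled between the workloads.

```yaml
# ALLOW: only the (hypothetical) "so" service account may call the labelled workload
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: aai-allow-so                  # hypothetical name
  namespace: onap
spec:
  selector:
    matchLabels:
      app: aai                        # hypothetical workload label
  action: ALLOW
  rules:
    - from:
        - source:
            principals: ["cluster.local/ns/onap/sa/so"]   # hypothetical peer identity
      to:
        - operation:
            methods: ["GET", "PUT"]
            paths: ["/aai/*"]         # hypothetical path prefix
---
# DENY: evaluated before ALLOW; blocks any caller from outside the onap namespace
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: aai-deny-other-namespaces     # hypothetical name
  namespace: onap
spec:
  selector:
    matchLabels:
      app: aai
  action: DENY
  rules:
    - from:
        - source:
            notNamespaces: ["onap"]
```

Because DENY is evaluated before ALLOW, a request from another namespace is rejected even if a later ALLOW rule would have matched it.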

Role-Based Access Control

ONAP Istanbul release supports Role-Based Access Control (RBAC). For that, roles (e.g., Admin, user, manager, etc.) will be defined, and mapping between users/groups and roles (m:n) will be set by leveraging Keycloak and Istiod.

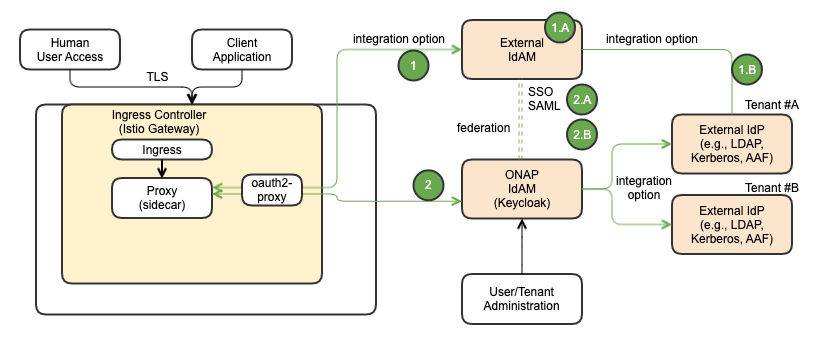

ONAP IdAM and External IdAM Interaction

The following diagram depicts the ONAP IdAM and External IdAM Interaction.

| # | Choice |
|---|---|
| 1 | If the vendor chooses their own IdAM (external IdAM), the AuthN/AuthZ GW should be able to use the external IdAM, instead of the ONAP IdAM – full integration choice |
|  | A. The external IdAM will handle AuthN/AuthZ for ONAP incoming traffic; session cookie handling, session handling |
|  | B. The external IdAM will typically use their own IdP (external IdP) to verify their own user identities |
| 2 | If the ONAP IdAM is used, based on business use cases, the ONAP IdAM could interface with the external IdAM, based on the IdAM-to-IdAM interface configuration |
|  | A. SSO |
|  | B. Authentication federation (SAML) – not part of Istanbul |

ONAP Security Components, IdAM External IdP(s), and Multi-Tenancy Support

Each service provider will likely have an existing centralized IdP that ONAP should integrate with. This architecture supports that integration.

- ONAP IdAM proxies via plugin to one or more designated external IdP servers

- ONAP IdAM must support fallback mode, where it can act as IdP for cases where the external IdP is unavailable

- Multi-Tenancy allows the same username inside different tenants; there is no SSO between different tenants

- Realms will be defined to support multi-tenancy, by leveraging Keycloak.

- Realms are isolated from one another and can only manage and authenticate the users that they control.

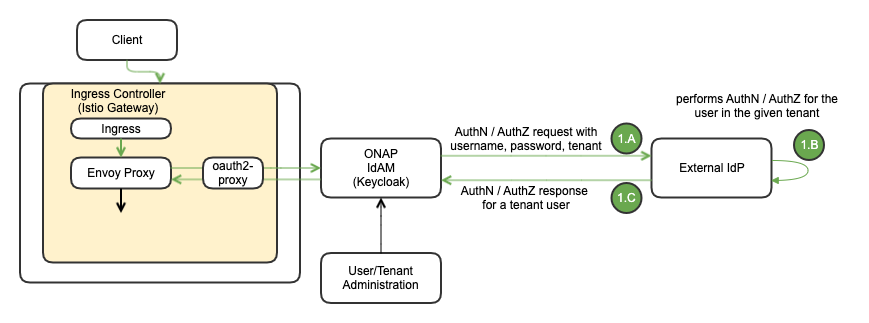

Single External IdP Use Case

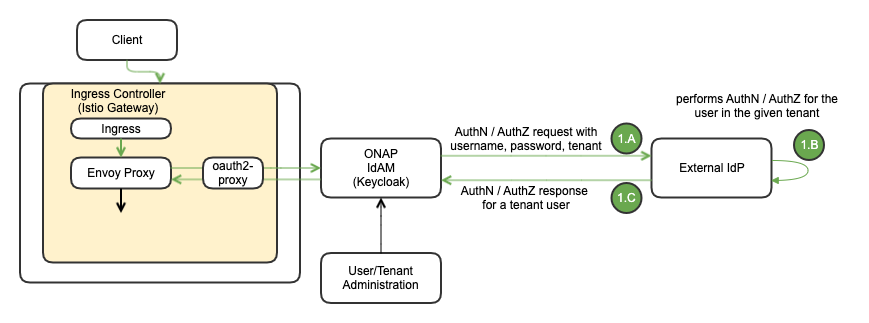

The following diagram depicts the Multi-Tenancy Support with single External IdP.

| # | Sequence (Single External IdP) - A |
|---|---|
| 1 | If ONAP IdAM is connected to a single external IdP, the IdP contains the credentials of all the users from all the tenants |
|  | A. AuthN/AuthZ requests including username, password and tenant will be forwarded from ONAP IdAM to the external IdP |
|  | B. The external IdP performs the authN/authZ for the user in the tenant realm |
|  | C. The external IdP returns with AuthN/AuthZ response |

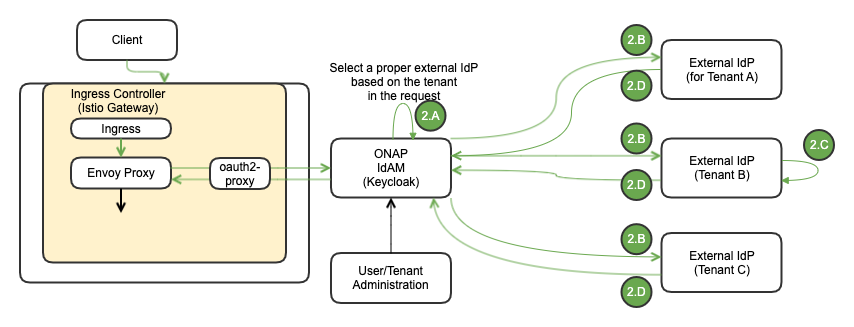

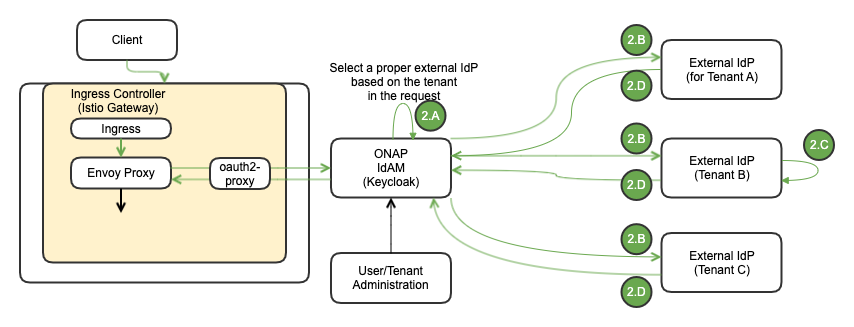

Multiple IdPs Use Case

The following diagram depicts the Multi-Tenancy Support with multiple External IdPs. Each tenant has its own external IdP:

| # | Sequence (Multiple IdPs (each tenant has its own external IdP)) - B |
|---|---|
| 2 | If each tenant has its own external IdP, the IdP contains the credentials for its tenant users |
|  | A. Based on the tenant info in the request, ONAP IdAM selects the correct external IdP |
|  | B. ONAP IdAM forwards the request to the selected external IdP |
|  | C. The selected external IdP performs AuthN/AuthZ |
|  | D. The selected external IdP returns with AuthN/AuthZ response |

ONAP Multi-Tenancy Support with Istio

Objective: ONAP components implement the right access controls for different end users/tenants to:

- Build & distribute SDC artifacts

- Build & distribute CDS blueprints

- Build & distribute custom operational SO workflows

- Build & distribute Policies

- Build & distribute Control loops

- Access / manage the AAI inventory data for objects they own

- Publish & consume messages on DMaaP / Kafka topics they have access to

- Build, deploy & manage DCAE collectors & DCAE analytics applications

- Build, deploy & manage CDS executors

Approach & State:

- Single logical instance, with Multi-Tenancy built-in:

- SDC: partial implementation through user roles; RBAC for viewing, authoring, updating and distributing

- A&AI: access control is not extensive enough to allow any fine-grained control; permissions need to be set per owning group / user, not only per role

- SO: different groups of people are allowed to orchestrate/view/change different instances

- DMaaP: separate message bus topics based on consumers/producers

- Several logical instances, where deployment/configuration for those instances can be handled independently:

- Controllers (SDNC):

- Collectors (DCAE): it can be deployed and controlled by the tenants, based on the roles

- Policy executors (Policy): centralize PAP, Policy API; distribute PDPs by namespace

Istio Tenancy Model

- Namespace tenancy: a cluster can be shared across multiple teams, each using a different namespace

- Cluster tenancy: using clusters as a unit of tenancy

- Mesh tenancy: each mesh can be used as the unit of isolation

<source: https://istio.io/latest/docs/ops/deployment/deployment-models/>

An ONAP option: Istio supports soft multi-tenancy with multiple Istio control planes

- One control plane and one mesh per tenant

- The cluster admin gets control and visibility across all the Istio control planes

- The tenant admin gets control of a specific Istio instance

- Separation between the tenants is provided by Kubernetes namespaces and RBAC (define Role and RoleBinding)
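The namespace and RBAC separation in the last bullet can be sketched with a Role and RoleBinding that give a tenant admin control over Istio resources in the tenant's own namespace only. This is an illustration under assumptions: the namespace `tenant-a`, the user name and the resource names are hypothetical.

```yaml
# Role: permissions on Istio security and networking resources, scoped to one namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: tenant-mesh-admin            # hypothetical name
  namespace: tenant-a                # hypothetical tenant namespace
rules:
  - apiGroups: ["security.istio.io", "networking.istio.io"]
    resources: ["*"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# RoleBinding: grants the Role to the tenant admin user in that namespace only
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tenant-mesh-admin-binding    # hypothetical name
  namespace: tenant-a
subjects:
  - kind: User
    name: tenant-a-admin             # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: tenant-mesh-admin
  apiGroup: rbac.authorization.k8s.io
```

A cluster admin would instead hold a ClusterRole binding, which gives visibility across all tenant namespaces and control planes.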

ONAP Logging

Logging Propositions

- Treat logs as event streams

- An app should not concern itself with routing or storage of its output stream

- Each running process writes its event stream, unbuffered, to stdout or stderr

- Each process stream is:

- captured by execution environment,

- collated together with other streams from the app

- routed to one or more final destinations for viewing and long-term archival

- Archival destinations should not be visible to or configurable by the app (separation of concerns, security reasons)

Container logging is different

- When pods are evicted, crash, are deleted or are scheduled on a different node, the logs from the containers are gone.

- Transferring transient local log data in the containers to the centralized (or even distributed) long-term log storage is a must.

Logging Types in Kubernetes

- Kubernetes Container Logging: container logs are generated by containerized applications. The typical way to capture container logs is to use STDOUT and STDERR.

- Kubernetes Node Logging: container logs are streamed by the container engine to a logging driver, which can be realized by an open-source tool such as Fluent Bit or Fluentd. Node-level logs could also be kernel logs or systemd logs.

- Kubernetes Cluster Logging: it refers to Kubernetes itself and all of its system component logs. Kubernetes does not provide a native solution for cluster-level logging, but node-level logging agents or sidecar containers can be used, as defined in the following ONAP logging functional architecture.

- Kubernetes Events Logging: events hold information about resource state changes or errors, such as pod evictions or decisions made by the scheduler.

- Kubernetes Audit Logging: detailed descriptions of each call made to the kube-apiserver. It shows chronological activity event sequence.

Logging Scope

The ONAP application logging will be supported. Other logging scopes are under discussion.

- ONAP Application Logging

- Security Component Logging (e.g., Keycloak, IdP, external IdAM, external IdP)

- Infrastructure Logging (e.g., Kubernetes logging)

- xNF Logging (e.g., vFW, VNF, CNF)

Collating across logging scopes is under discussion, as e2e tracing, but it is out of scope for Istanbul. It is also uncertain whether this can be achieved in a near-future release.

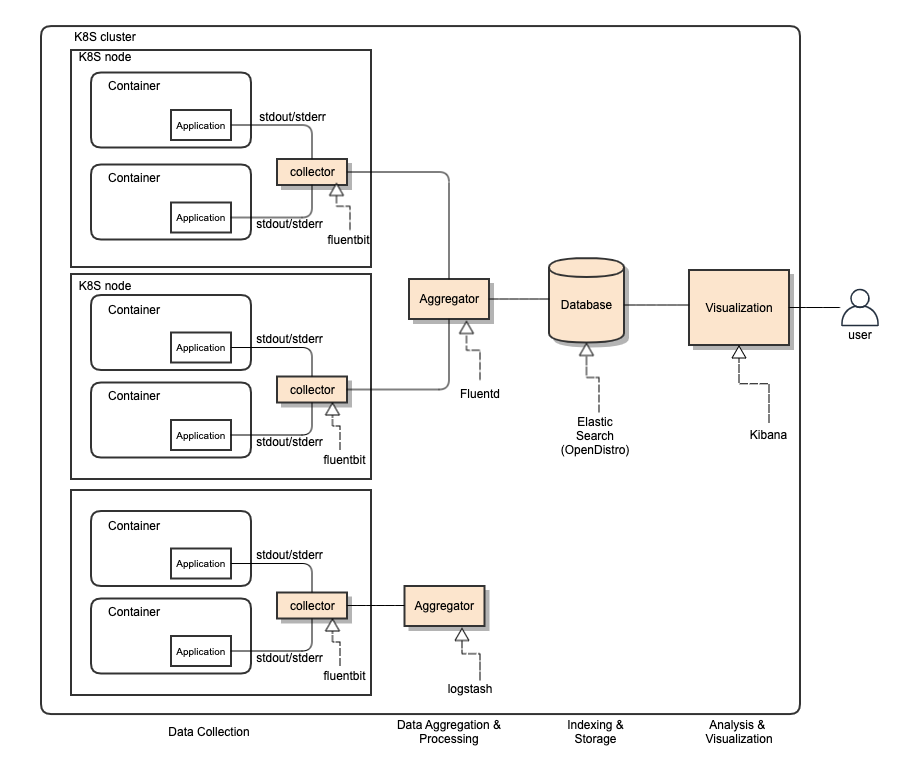

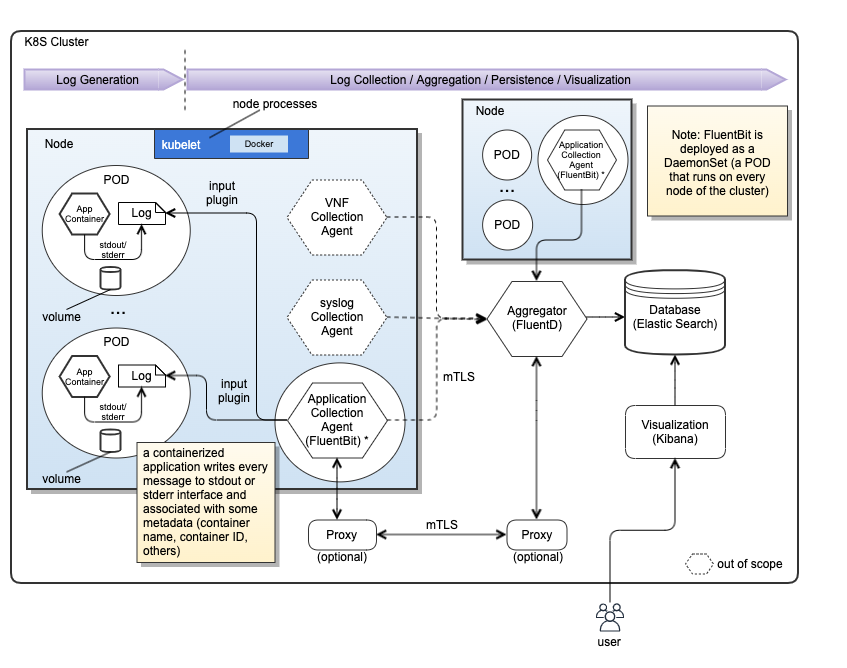

ONAP Logging Functional Architecture

The following diagram depicts ONAP logging functional architecture:

- ONAP supports open-source- and standard-based Logging architecture.

- Once all ONAP components push their logs into STDOUT/STDERR, any standard log pipe can work.

- The logging component stack can be realized by the vendor's choice of components

- ONAP provides a reference implementation/choice

- Note: e.g., for apps that use a log4j/slf4j properties file, the stdout and stderr redirection can be configured as follows

```properties
log4j.appender.stdout = org.apache.log4j.ConsoleAppender
log4j.appender.stdout.Threshold = TRACE
log4j.appender.stdout.Target = System.out
log4j.appender.stderr = org.apache.log4j.ConsoleAppender
log4j.appender.stderr.Threshold = WARN
log4j.appender.stderr.Target = System.err
```

```xml
<log4j:configuration>
  <appender name="stderr" class="org.apache.log4j.ConsoleAppender">
    <param name="threshold" value="warn" />
    <param name="target" value="System.err"/>
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%-5p %d [%t][%F:%L] : %m%n" />
    </layout>
  </appender>
  <appender name="stdout" class="org.apache.log4j.ConsoleAppender">
    <param name="threshold" value="debug" />
    <param name="target" value="System.out"/>
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%-5p %d [%t][%F:%L] : %m%n" />
    </layout>
    <filter class="org.apache.log4j.varia.LevelRangeFilter">
      <param name="LevelMin" value="debug" />
      <param name="LevelMax" value="info" />
    </filter>
  </appender>
  <root>
    <priority value="debug"></priority>
    <appender-ref ref="stderr" />
    <appender-ref ref="stdout" />
  </root>
</log4j:configuration>
```

- The stdout or stderr streams will be picked up by the kubelet service running on that node and end up in the PV (PVC).

- ONAP logs will be exported to a different, centralized location for security, persistence and aggregation reasons

- Log collector sends logs to the aggregator in a different container

- Aggregator sends logs to the centralized database in a different container

- Logging Functional Blocks:

- Collector (one per K8S node)

- Aggregator (few per K8S cluster)

- Database (one per K8S cluster)

- Visualization (one per K8S cluster)

- ONAP reference implementation choice:

- EFK: Elastic Search, Fluentd, Fluentbit, Kibana

- LFG: Loki, Grafana, Fluentd / Fluent Bit

- ONAP logging conforms to SECCOM Container Logging requirements

- Event Types

- Log Data

- Log Management

ONAP Reference Implementation

The above diagram also indicates reference realization, such as:

- As a log driver, Fluent Bit is deployed per node as a collector and forwarder of log data to the Fluentd instance

- Instead of doing heavy log transformations, just forward logs securely to the Fluentd Aggregator cluster

- FluentBit (node-level logging agent) needs to be run on every node to collect logs from every POD, so FluentBit is deployed as a DaemonSet (a POD that runs on every node of the cluster)

- When FluentBit runs, it will read, parse and filter the logs of every POD and could enrich each entry with the following information (metadata):

- POD Name

- POD ID

- Container Name

- Container ID

- Labels

- Annotations

- To obtain this information, a built-in filter plugin called kubernetes talks to the Kubernetes API server to retrieve relevant information such as the pod_id, labels and annotations. Other fields such as pod_name, container_id and container_name are retrieved locally from the log file names. All of this is handled automatically; no intervention is required from a configuration aspect.

- A Fluentd instance (or a few Fluentd instances) is deployed per cluster as an aggregator - processing the data and routing it to a different destination (e.g., Elastic Search)

- It can handle heavy throughput - aggregating from multiple inputs, processing data and routing to different outputs. Also, it can handle a much larger amount of input and output sources

- centralized way to transform data; normalize log data and collect additional info from containers

- e.g., filter_record_transformer built-in filter plugin

```
# Remove keys from each record
<filter foo.bar>
  @type record_transformer
  remove_keys hostname,$.kubernetes.pod_id
</filter>

# Add fields to each record
<filter foo.bar>
  @type record_transformer
  # enable_ruby is needed for the Ruby expression in the container field
  enable_ruby true
  <record>
    container ${id = tag.split('.')[5]; JSON.parse(IO.read("/var/lib/docker/containers/#{id}/config.v2.json"))["Name"][1..-1]}
    hostname "#{Socket.gethostname}"
  </record>
</filter>
```

- or other custom plugins as needed

- routing logs to ElasticSearch or equivalent component(s)

- Note: for IoT (edge host/equipment), FluentBit could be installed per device, sending data to a Fluentd instance

- Elastic Search provides centralized log data indexing and storage

- Kibana / Grafana could be used for log data visualization.
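The node-level collector described above (Fluent Bit deployed as a DaemonSet) can be sketched as follows. This is a minimal illustration, not the ONAP reference chart: the namespace, service account, ConfigMap name and image tag are assumptions, and the Fluent Bit pipeline configuration itself (inputs, kubernetes filter, forward output to Fluentd) is expected to live in the referenced ConfigMap.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit
  namespace: logging                       # assumed namespace
spec:
  selector:
    matchLabels:
      app: fluent-bit
  template:
    metadata:
      labels:
        app: fluent-bit
    spec:
      # assumed service account; it needs read access to pods so that the
      # kubernetes filter can query the API server for labels and annotations
      serviceAccountName: fluent-bit
      containers:
        - name: fluent-bit
          image: fluent/fluent-bit:1.8     # image tag is a placeholder
          volumeMounts:
            - name: varlog
              mountPath: /var/log
              readOnly: true
            - name: containers
              mountPath: /var/lib/docker/containers   # only relevant for the Docker runtime
              readOnly: true
            - name: config
              mountPath: /fluent-bit/etc/
      volumes:
        - name: varlog
          hostPath:
            path: /var/log                 # node directory holding the container log files
        - name: containers
          hostPath:
            path: /var/lib/docker/containers
        - name: config
          configMap:
            name: fluent-bit-config        # assumed ConfigMap with the Fluent Bit pipeline
```

Running the collector as a DaemonSet means one pod per node, which matches the "Collector (one per K8S node)" functional block.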

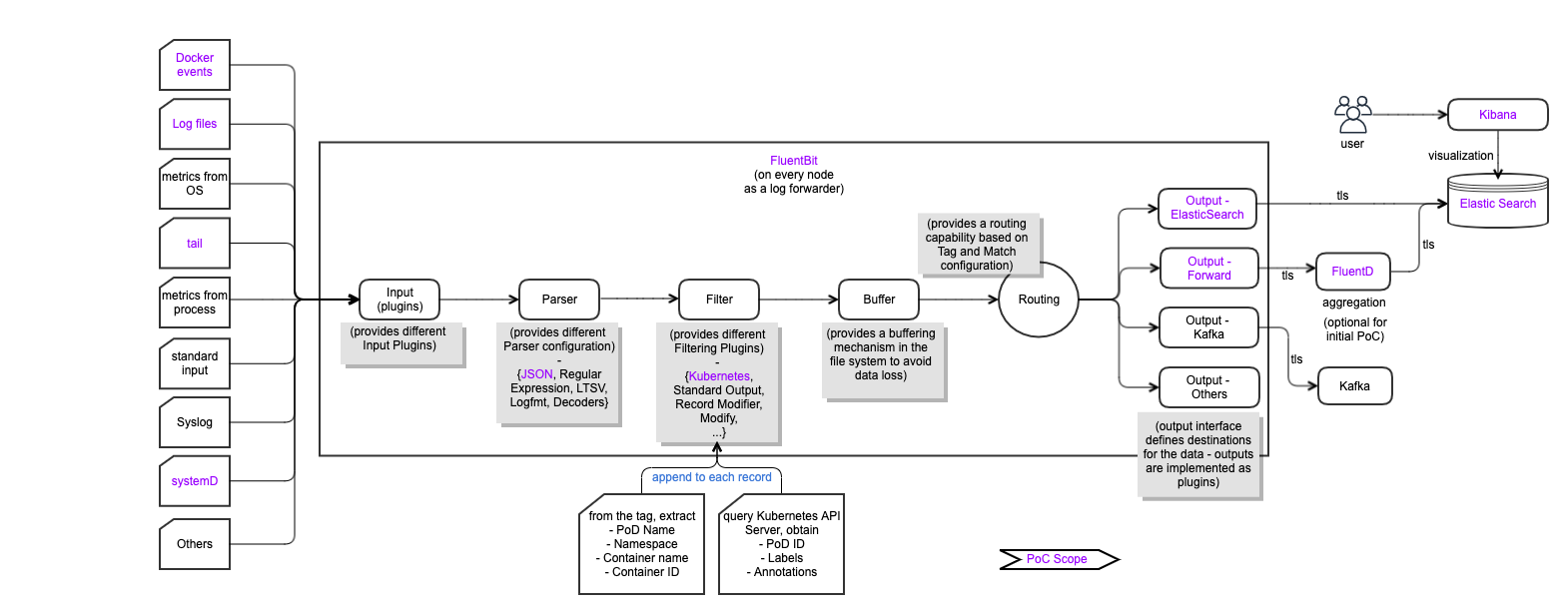

FluentBit Internal Pipeline
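The node-level collection described above can be sketched as a Fluent Bit pipeline carried in a ConfigMap. This is a minimal sketch, not the ONAP reference configuration: the ConfigMap name, the `logging` namespace and the aggregator host `fluentd.logging.svc` are assumptions made for illustration, and the `docker` parser is assumed to be defined in a `parsers.conf` that sits next to this file.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config        # hypothetical name
  namespace: logging             # hypothetical namespace
data:
  fluent-bit.conf: |
    [SERVICE]
        Flush         5
        # Assumption: parsers.conf defines the "docker" parser used below
        Parsers_File  parsers.conf

    [INPUT]
        # Read the per-container log files written on the node
        Name    tail
        Path    /var/log/containers/*.log
        Tag     kube.*
        Parser  docker

    [FILTER]
        # Enrich each record with pod metadata (name, ID, labels, annotations)
        Name         kubernetes
        Match        kube.*
        Labels       On
        Annotations  On

    [OUTPUT]
        # Forward to the Fluentd aggregator without heavy transformation
        # Assumption: the aggregator is reachable under this Service name
        Name   forward
        Match  *
        Host   fluentd.logging.svc
        Port   24224
```

Keeping the node-level agent limited to tail, enrich and forward matches the split of responsibilities described above, where heavier processing is left to the Fluentd aggregator.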

Logging Generation and Collection Architecture

The following diagram depicts various ways of collecting logs.

By leveraging various collection agents and configurations, log data of various types is collected, aggregated and stored in centralized persistent storage. However, it is not yet clear how heterogeneous log data will be collated. In many cases, ONAP cannot control the content or format of external log data, so it cannot rely on extracting hints/attributes from it for collation.

- xNF logs will be collected via the VNF collection agent (collector), and will be sent to the Aggregator.

- Application (ONAP component) logs will be collected via the application collection agent (collector), and will be sent to the Aggregator.

- Infrastructure logs will be collected via syslog collection agent (collector), and will be sent to the Aggregator.

- The aggregator processes/normalizes log data and sends it to the centralized database. Note that there could be one or multiple databases, depending on the log data types; this is the system provider's deployment choice.

- Visualization can be centralized or distributed based on log data types.

System Component Logs

Similar to the container logs, system component logs in the /var/log directory should be rotated once the size exceeds the maximum.

Cluster-Level Logging Requirements and Options (preferred option)

ONAP needs to support Cluster-Level Logging:

- Allow users to access application logs even if a container crashes, a pod gets evicted or a node dies

- Require a separate backend to store, analyze and query logs

- Write to standard output and standard error streams

- E.g., a container engine handles and redirects any output that a containerized application writes to its STDOUT and STDERR streams (e.g., /dev/stdout, /dev/stderr)

- Support multi-tenancy logging

Cluster-level Logging Architecture (A) - ONAP Reference implementation uses this option

- Use a node-level logging agent (e.g., DaemonSet) that runs on every node

- the logging agent has access to a directory with log files from all of the application containers on that node

- one agent per node is used

- Include a dedicated sidecar container for logging in an application pod

- A container engine handles and redirects any output generated to a containerized application's stdout and stderr.

- Push logs directly to a backend from within an application

- Note:

- node-level logging creates only one agent per node and does not require any changes to the applications running on the node

- Prerequisite: Application containers write STDOUT and STDERR

- A node-level agent collects these logs and forwards them for aggregation

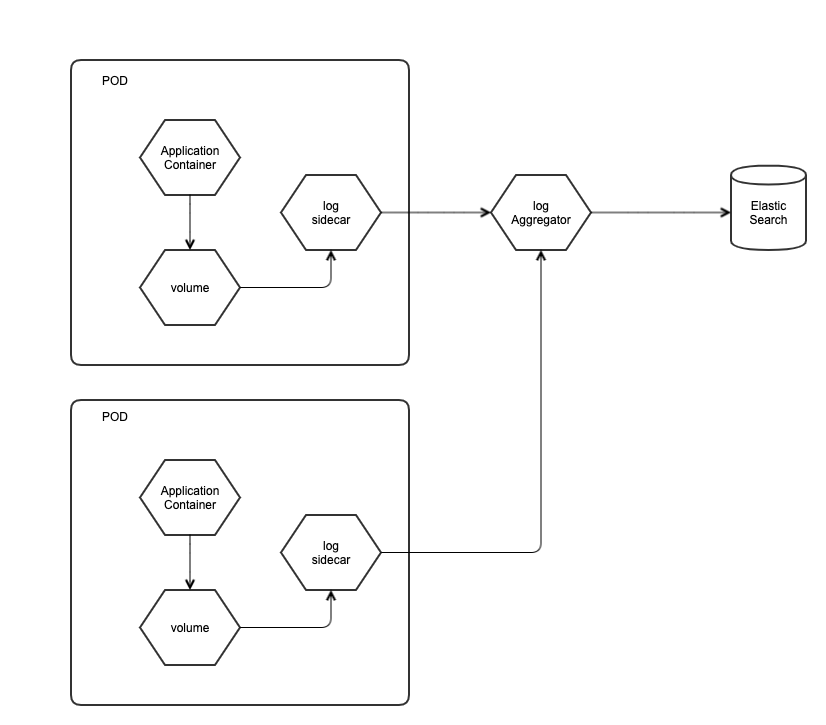
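A node-level agent of the kind used in option (A) is typically deployed as a DaemonSet that mounts the node's log directories read-only. The manifest below is a minimal sketch under stated assumptions: the resource names, the `logging` namespace, the ServiceAccount and the image tag are illustrative, and mounting of the agent's own configuration is omitted for brevity.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit               # hypothetical name
  namespace: logging             # hypothetical namespace
  labels:
    app: fluent-bit
spec:
  selector:
    matchLabels:
      app: fluent-bit
  template:
    metadata:
      labels:
        app: fluent-bit
    spec:
      # Assumption: this ServiceAccount may read pod metadata from the API server
      serviceAccountName: fluent-bit
      containers:
        - name: fluent-bit
          image: fluent/fluent-bit:1.8   # assumed image tag
          volumeMounts:
            # Log files of all application containers on this node
            - name: varlog
              mountPath: /var/log
              readOnly: true
            - name: dockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: dockercontainers
          hostPath:
            path: /var/lib/docker/containers
```

Because the DaemonSet controller schedules one pod per node, applications need no changes as long as they write to STDOUT and STDERR.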

Streaming Sidecar Container with the Logging Agent Architecture (B)

- Allows users to separate log streams (sidecar) from applications, which can lack support for writing to STDOUT or STDERR

- The sidecar container runs a logging agent, which is configured to pick up logs from an application container

- It allows several log streams from different parts of an application to be separated
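The streaming-sidecar pattern can be sketched with two containers that share a volume: the application writes to a file, and the sidecar streams that file to its own STDOUT, where the node-level agent can pick it up. The pod name, file path and busybox image below are assumptions for illustration only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-with-log-sidecar     # hypothetical name
spec:
  containers:
    # Stand-in for an application that can only log to a file
    - name: app
      image: busybox:1.36
      command: ["/bin/sh", "-c"]
      args:
        - |
          while true; do
            echo "$(date) INFO app event" >> /var/log/app/app.log
            sleep 5
          done
      volumeMounts:
        - name: applog
          mountPath: /var/log/app
    # Sidecar: re-emits the file content on its own STDOUT
    - name: log-streamer
      image: busybox:1.36
      command: ["/bin/sh", "-c", "tail -n+1 -F /var/log/app/app.log"]
      volumeMounts:
        - name: applog
          mountPath: /var/log/app
  volumes:
    - name: applog
      emptyDir: {}
```

Adding one sidecar per log file keeps each stream separate, at the cost of one extra container per stream.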

Pod-Level Logging Agent Architecture (C)

- If the node-level logging agent is not flexible, write an application-specific sidecar

POD Sidecar Architecture

Why Sidecar Logging, instead of K8S Cluster Level Logging

Not every container in K8S writes its logs to stdout, although doing so is recommended. Placing a log sidecar next to the application container facilitates uniform logging and distribution.

Note: In ONAP, the POD sidecar is not used because each ONAP application generates app logs to STDOUT or STDERR; i.e., no need to use the POD sidecar.

For the Log sidecar, define three major tags:

- Source - defines which files to monitor and the format to expect

- Filter - customizes the collected events by overwriting or adding fields

- write custom scripts to transform log formats for uniform log contents or additional fields from containers

- Match - defines what to do with matching log events and where to send them
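The three tags map directly onto Fluentd's `<source>`, `<filter>` and `<match>` directives. The ConfigMap below is a minimal sketch assuming a Fluentd-based sidecar; the ConfigMap name, file path, tag, added field and aggregator host are all hypothetical.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: log-sidecar-fluentd      # hypothetical name
data:
  fluent.conf: |
    # Source: which file to watch and how to parse it
    <source>
      @type tail
      path /var/log/app/app.log
      pos_file /var/log/app/app.log.pos
      tag app.log
      <parse>
        @type none
      </parse>
    </source>

    # Filter: add or overwrite fields on each collected event
    <filter app.log>
      @type record_transformer
      <record>
        component "example-component"
      </record>
    </filter>

    # Match: where matching events are sent
    <match app.log>
      @type forward
      <server>
        host fluentd.logging.svc
        port 24224
      </server>
    </match>
```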

Logging Type Support

- Application logging

- Platform-level logging, including Kubernetes

- Need to define traceability support scope (e.g., service, environment)

- Can ONAP (or does it want to) trace external components and infrastructure logs?

- Security component logging, such as IdAM, IdP

- Transaction logging for tracing by a specified collation id/request id (e.g., MDC-based logging)

Analysis of ONAP Application Logging Specification

Existing ONAP Application Logging Specification

LFN DDF June 2021 Presentation

The ONAP Next Generation Security and Logging Architecture, Design and Roadmap was presented at the LFN DDF in June 2021.

Please visit https://wiki.lfnetworking.org/display/LN/2021-06-08+-+ONAP%3A+Next+Generation+Security+and+Logging+Architecture%2C+Design+and+Roadmap for the presentation slide deck and recordings.

Note: this wiki page contains several updates made after the presentation, so it is more current than the presented materials.

References

- ONAP Next Generation Security Architecture, https://wiki.onap.org/pages/viewpage.action?pageId=103417456

- Istio Architecture, https://istio.io/latest/docs/ops/deployment/architecture/

- Istio Security, https://istio.io/latest/docs/concepts/security/#authentication-policies

- Service Mesh Risk Analysis, https://wiki.onap.org/display/DW/Service+Mesh+Risk+Analysis

- Service Mesh Impact on Project, https://wiki.onap.org/display/DW/Service+Mesh+Impact+on+Projects

- ONAP Security Model, https://wiki.onap.org/display/DW/ONAP+Security+Model

- Logging Architecture Proposal, https://wiki.onap.org/display/DW/Logging+Architecture+Proposal

- Logging Enhancements Project proposal, https://wiki.onap.org/display/DW/Logging+Enhancements+Project+Proposal

- SECCOM Container Logging Requirements, https://wiki.onap.org/display/DW/CNF+2021+Meeting+Minutes?focusedTaskId=239&preview=/93004634/100896899/2021-02-22_LoggingRequirementEvents_v8.pptx

Overview: ONAP Next Generation Security & Logging Architecture, Design and Roadmap

- Provided Rationale for Service-Mesh Security: uniform, open-source- and standard-based security at the platform-level

- Istio was chosen for ONAP Service-Mesh Security to fulfill ONAP security requirements

- Defined ONAP Security Architecture, leveraging Istio, Keycloak, Cert-Mgr, Ingress, Egress

- Multi-Tenancy architecture and plan were addressed

- OOM team defined the service-mesh implementation priority for Istanbul: service-to-service mTLS first, and then AAA (AuthN, AuthZ, Audit)

- Currently, collecting feedback from NSA

- Implementation Plan for Istanbul:

- Phase 1: OOM will contribute mTLS with Service-Mesh (contribution target: SDC, DCAE, SO, AAI, DMaaP, SDNC)

- Make selected components Service-Mesh compatible

- Configure service-to-service mTLS communication

- Configure peer authentication policies

- Configure authorization policy for coarse-grained authorization

- Phase 2: OOM plans to contribute on AAA, Multi-Tenancy and logging (stretch goals)

- Deploy/Configure Keycloak as the reference IdAM

- Configure Istiod for public key and JWT handling

- Configure request authentication policies

- Configure additional authorization policies

Migration from AAF to Service-Mesh Security

Why not ONAP AAF (Application AuthZ Framework)

- It is an ONAP-specific solution that is maintained by ONAP only

- The AAF CADI plugin supports Java only, whereas some ONAP components are written in other languages (Python, etc.)

- Clients in various languages could have language-specific restrictions

- AAF needs to keep up with the latest security technology evolution, but it has no active support in ONAP

- AAF is not widely used by ONAP projects due to:

- Integration/enforcement of AuthN and AuthZ are done by each project

- Mutual-TLS enablement & Secrets/certificate management are done by each project

- For encryption, each project needs to understand AAF certificate management mechanism

- Compatibility with a variety of external authentication systems is a challenge

- Third-party microservices require modification to work with AAF

- SSO and Multi-factor authentication support is questionable

We want to achieve simpler, uniform, open source- and standard-based Security (Service Mesh-pattern Security):

- Eliminate or reduce microservice infrastructure level security tasks in services

- Security is handled at the platform level

- Enabling uniform security across applications

- Minimize code changes and increase stability

- Platform-agnostic abstraction reduces risks on integration, extensibility and customization

- Use of Sidecar, so security layer is transparent for the application

- External Interfaces secured at Ingress Gateway

- Internal Interfaces secured at Service Mesh Sidecar

- User Management and RBAC are managed by open-source technologies

Benefits to ONAP, O-RAN and External Components

- The uniform, open-source-based and standard-based security would be a foundation for secure integration between ONAP / O-RAN and external components

- Product impacts for security would be minimal

- Ensure ONAP services have only the business logic of that service

- Third-party microservices can participate in ONAP security by leveraging the platform-level security (sidecar)

- Vendors can take advantage of open-source-based security, instead of having and maintaining their own security mechanism: cost effective

Service Mesh Security with Istio

The following diagram depicts the Istio mesh architecture overview:

Why Istio?

Istio was chosen because it fulfills the current ONAP security requirements/needs with the following capabilities while also providing a future-proof mechanism.

However, please note that a new platform could be considered in the future as needed.

Istio authentication and authorization:

- Provides each service with a strong identity representing its role to enable interoperability across clusters and clouds.

- Secures service-to-service communication with authentication and authorization.

- Provides a key management system to automate key and certificate generation, distribution, and rotation.

Microservices have security needs:

- To defend against man-in-the-middle attacks, they need traffic encryption.

- To provide flexible service access control, they need mTLS (mutual TLS) and fine-grained access policies.

- To determine who did what at what time, they need auditing tools.

With Control and Data planes, mTLS, Ingress, Proxy and Egress, Istio provides security features for:

- Strong identity

- Powerful policy

- Transparent TLS encryption

- Authentication, Authorization and Audit (AAA) tools to protect your services and data

Goals of Istio-based security are:

- Security by default: no changes needed to application code and infrastructure

- Defense in depth: integrate with existing security systems to provide multiple layers of defense

- Zero-trust network: build security solutions on distrusted networks

ONAP Security Architecture - Leveraging Istio, Keycloak, Cert-Mgr, Ingress, Egress

The following diagram depicts ONAP Security Architecture.

Security Functional Blocks

- Ingress Controller (realized by istio-ingress)

- AuthN/AuthZ Gateway (sidecar) – future consideration

- ONAP IdAM (realized by Keycloak)

- User Administration

- Tenant Administration

- Proxy (sidecar) – for data plane

- oauth2-proxy

- External IdP (vendor-provided component)

- External IdAM

- Egress

- Istiod – control plane (pilot, citadel, galley, cert-mgr)

- Certificate Authority (CA)

Security Architecture Highlights

- Use of control plane and pluggable sidecar (platform container) proxies to protect application service

- Intercept traffic and check AuthN/AuthZ with IdAM and IdP

- Support SSO for Human user access request

- Compatibility with variety of external authN/authZ systems (e.g., external IdP, IdAM)

- Allowing external IdP(s) and IdAM(s)

- Enabling a foundation for authN federation

- RBAC support, based on user role, group & permission

- Multi-Tenancy support

ONAP Component Security Requirements

The following are the ONAP component security requirements.

| # | Requirement |
|---|---|
| 1 | No implicit dependencies between common components and ONAP |
| 3 | istio-ingress is used as ingress controller |
| 4 | Separate Northbound (for user) and Southbound (xNF) interfaces |
| 5 | Every ingress gateway terminates the TLS and re-encrypts the payload before sending to the destination component using mTLS |
| 6 | ISTIO network policy must be configured for only authorized services communications |
| 7 | AuthN between services is done using certs via mTLS |
| 8 | OpenID Connect is used to authenticate user |
| 9 | ONAP IdAM is realized by Keycloak, but it can be replaced with an external IdAM that is compatible with OIDC; authentication federation is out-of-scope from Istanbul |
| 10 | Cert-manager and Citadel are used to manage (CRUDQ) certificates |
| 11 | Kubernetes is configured to use encryption at rest |
| 12 | ISTIO automated sidecar injection is configured in underlying Kubernetes |
| 13 | No root pods are used |
| 14 | All DB is considered external |
| 16 | Support possible options to integrate with LDAP, Kerberos, AAF as IdP |
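Requirements 5 and 12 translate fairly directly into Kubernetes and Istio resources. The following is a minimal sketch, not the OOM configuration: the namespace name `onap`, the Gateway name, the host name and the TLS secret name are assumptions for illustration.

```yaml
# Requirement 12: enable automatic sidecar injection for a namespace
apiVersion: v1
kind: Namespace
metadata:
  name: onap                     # assumed namespace name
  labels:
    istio-injection: enabled
---
# Requirement 5: the ingress gateway terminates TLS for incoming traffic
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: onap-northbound-gateway  # hypothetical name
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway        # default label of the Istio ingress gateway pods
  servers:
    - port:
        number: 443
        name: https
        protocol: HTTPS
      tls:
        mode: SIMPLE             # standard (one-way) TLS termination at the gateway
        credentialName: onap-ingress-cert   # hypothetical TLS secret
      hosts:
        - "onap.example.com"     # hypothetical host
```

Traffic from the gateway to the destination workload is then protected by mesh mTLS, provided the destination has a sidecar and mTLS is enabled for it.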

ONAP OOM Plan - Istanbul Priorities

The following diagram depicts ONAP OOM plan and Istanbul priorities:

Note:

- Istio 1.10 is used

- For Istanbul, the ONAP default IdAM (realized by Keycloak) will be used as the reference implementation/configuration.

- Keycloak internal IdP will be used

- Multi-tenancy support of external IdAM and IdP will not be configured

ONAP Component mTLS (A): make core ONAP deployable on Service Mesh (mTLS first without AAA): basic_onboarding, basic_vm automated integration tests

- Service Mesh compatibility:

- DMaaP Compatibility on service mesh

- Cassandra (and Istio 1.10?)

- AAI is ongoing

- issue with jobs; trying the "quitquitquit" solution here (cleanly exit the server)

- issue with sparky-be: there is an AAF leftover part

- cannot test the parts needing Cassandra for now

- SDC is ongoing

- SO is planned

- DCAE is ongoing

- SDNC is planned

- Better use of Service Mesh within components:

- SO: patch ongoing: https://gerrit.onap.org/r/c/so/+/122263

- Plan to check whether we can run without authentication on these components; also check whether we can enlarge the number of components

Communication Protocol between Service and Proxy and between Proxies

- The communication protocol between Service and Proxy is "HTTP".

- Service-Mesh Security architecture supports security at the platform level

- Application does not handle security directly

- For that, Service and the corresponding Proxy will sit on the same POD to make their communication internal

- The communication protocol between Proxies is mTLS via "HTTPS".

ONAP Component AAA (B) – stretch goal for Istanbul

- onboard roles and realm on Keycloak for tests / reference implementation (use of OIDC / JWT)

- add oauth2-proxy in the solution to redirect unauthenticated traffic to SSO Portal (keycloak as example)

- add some rules to enforce (AuthorizationPolicy)

- add some service accounts (work ongoing)

OAuth2-based Access Token AuthN/AuthZ

The following diagram depicts OAuth2-based Access Token AuthN/AuthZ.

- If an access token is used (unless it is a self-contained access token), the Resource Server needs to ask the Authorization Server to validate the access token.

- This can cause chatty communication between the Resource Server and the Authorization Server.

- By leveraging the JWT (JSON Web Token), the above chatty communication can be reduced.

- In ONAP, the JWT is used for the browser client and application client request authN and authZ - see the following

OAuth2-based JWT AuthN/AuthZ

The following diagram depicts OAuth2-based JWT AuthN/AuthZ.

- By leveraging the JWT (JSON Web Token), the chatty communication between the Resource Server and the Authorization Server can be removed.

- In ONAP, the JWT is used for the browser client and application client request authN and authZ - see the following

ONAP Browser Client Request Authentication and Authorization Use Case

The following diagram depicts the browser client request authentication and authorization process.

| # | Sequence |
|---|---|
| 0 | Configuration steps: |
| 1 | Client sends a request without token |
| 2 | Ingress intercepts and forwards the request to Envoy proxy |
| 3 | If the request has no token, oauth2-proxy redirects the traffic to Keycloak (reference configuration; could be configured to use an external IdAM) for the login process |
| 4 | Keycloak (with oauth2-proxy help) redirects the request to the login page. Note: this login page is configured at Keycloak, and is provided to the user. This redirection is important because users are completely isolated from applications and applications never see a user's credentials. Applications instead are given an identity token or assertion that is cryptographically signed. |
| 5 | Client logs in with user credentials |
| 6 | If the user credentials are valid, Keycloak generates a JWT token with a private/public key pair and sends the generated token to the client |
| 7 | Client stores JWT token in local storage |
| 8 | Client sends a request with JWT token |
| 9 | Ingress intercepts and forwards the request to Envoy proxy |
| 10 | Envoy proxy validates JWT token with a public key |
| 11 | If JWT is valid, it enforces the security policy (e.g., authN, authZ). Once all is passed, it sends the request to service |
| 12 | If JWT is not valid, go to step 3 for the re-login process. If the maximum number of tries is exceeded, error handling will be processed (details are TBD) |

Machine Client Request Authentication and Authorization

The following diagram depicts the machine client request authentication and authorization process, by leveraging IdAM and Istio which support JWT.

| # | Sequence |
|---|---|
| 0 | Configuration steps: |
| 1 | Client logs in with client credentials towards Keycloak |
| 2 | If the client credentials are valid, Keycloak generates a JWT token with a private/public key pair and sends the generated token to the client |
| 3 | Client stores JWT token in local storage |
| 4 | Client sends a request with JWT token |
| 5 | Ingress intercepts and forwards the request to Envoy proxy |
| 6 | Envoy proxy validates JWT token with a public key |
| 7 | If JWT is valid, it enforces the security policy (e.g., authN, authZ). Once all is passed, it sends the request to service |
| 8 | If JWT is not valid, error handling will be processed (details are TBD) |

Request Authentication Configuration

Request authentication policies specify the values needed to validate a JWT. Istio checks the presented token.

- If a presented token violates the rules in the request authentication policy, Istio rejects the request

- When requests carry no token, they are accepted by default; to reject them, authorization policies must be configured to validate requests

- If more than one policy matches a workload, Istio combines all rules

See the authentication policy section for details.
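The two behaviours above (reject invalid tokens, but accept token-less requests unless an authorization policy says otherwise) can be sketched with a pair of Istio resources. This is an illustrative sketch only: the resource names, the `onap` namespace, the `app: onap-a` label and the Keycloak host and realm in the issuer and JWKS URLs are assumptions, and the exact Keycloak URL layout depends on the Keycloak version deployed.

```yaml
# Validates any JWT that is presented; requests with an invalid token are rejected
apiVersion: security.istio.io/v1beta1
kind: RequestAuthentication
metadata:
  name: onap-jwt                 # hypothetical name
  namespace: onap                # assumed namespace
spec:
  selector:
    matchLabels:
      app: onap-a                # hypothetical workload label
  jwtRules:
    # Assumed Keycloak host and realm
    - issuer: "https://keycloak.example.com/auth/realms/onap"
      jwksUri: "https://keycloak.example.com/auth/realms/onap/protocol/openid-connect/certs"
---
# Rejects requests that carry no token by allowing only authenticated principals
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: onap-require-jwt         # hypothetical name
  namespace: onap
spec:
  selector:
    matchLabels:
      app: onap-a
  action: ALLOW
  rules:
    - from:
        - source:
            requestPrincipals: ["*"]
```

The `jwksUri` is where the proxy obtains the public keys used to validate the token signature, so the identity provider is not contacted on every request.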

Service-2-Service (Peer) mTLS Communication With Istio

- The Service-to-Service authN is supported by leveraging mTLS certificates, instead of username/password

- Also, authorization will be supported for mTLS by Istio authorization configuration

- Two services will communicate using a direct connection (e.g., via REST APIs) without passing through the Ingress Controller or MSB

mTLS Certification Management

The following diagram depicts the Istio Certificate management and interaction with the Proxy (istio-agent and envoy proxy work together with istiod to automate key and certificate rotation):

| # | Sequence (mTLS Certificate Management) |
|---|---|
| 1 | When started, Istio agent creates private key & CSR (certificate signing request) |
| 2 | Istio agent sends CSR with its credentials to istiod for signing |
| 3 | CA in istiod validates credentials in the CSR, signs CSR to generate certificate upon successful validation |
| 4 | CA in istiod sends the signed certificate to istio-agent |
| 5 | When a workload is started, Envoy proxy requests certificate and key from istio-agent via SDS (secret discovery service) API |
| 6 | Istio-agent sends certificates and private key to Envoy Proxy |
| 7 | Istio-agent monitors the expiration of the workload certificate. The above process repeats periodically for certificate and key rotation |

mTLS Service-2-Service Secure communication with Authentication and Authorization

The following diagram depicts the Service-to-Service mTLS secure communication with Authentication and Authorization:

| # | Sequence (mTLS Authentication Flow) - TLSv1_2 |
|---|---|
| 1 | Client application in a container sends a plain-text HTTP request towards the server |
| 2 | Client proxy container intercepts the outbound request |
| 3 | Client proxy performs a TLS handshake with the server-side proxy |
| | A. This handshake exchanges client and server certificates |
| | B. Istio has preloaded the certificates into the proxy containers (see diagram A) |
| 4 | Client proxy checks for ‘secure naming’ on the server’s certificate, and verifies that the server identity (service account presented in the server certificate) is authorized to run the target service |
| 5 | Client and server establish an mTLS connection |
| 6 | Istio forwards the traffic from the client-side proxy to the server-side proxy |
| 7 | The server-side proxy authorizes the request |
| | A. If authorized, Istio forwards the traffic to the server application service through local TCP |
| | B. If not authorized, an error condition arises |

Authentication Mode

Peer authentication policies specify the mTLS mode that Istio enforces on target workloads:

- PERMISSIVE: workloads accept both mTLS and plain text traffic

- STRICT: workloads accept only mTLS traffic

- DISABLE: mTLS is disabled

Permissive mode allows a service to accept both plaintext traffic and mutual TLS traffic at the same time. During the transition period, permissive mode would be allowed so that non-Istio clients can keep communicating with servers that are being migrated to Istio. Once ONAP components are Service-Mesh enabled, permissive mode could be disabled.
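One way to stage this transition is a mesh-wide STRICT policy combined with a temporary namespace-level PERMISSIVE override. The sketch below assumes that `istio-system` is the Istio root namespace and that ONAP components run in a namespace called `onap`; both are assumptions for illustration.

```yaml
# Mesh-wide default: a policy without a selector in the root namespace
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: istio-system        # assumed Istio root namespace
spec:
  mtls:
    mode: STRICT
---
# Temporary override while ONAP components are still being migrated
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: onap                # assumed namespace
spec:
  mtls:
    mode: PERMISSIVE
```

A namespace-wide policy takes precedence over the mesh-wide one, so deleting the override (or switching it to STRICT) completes the migration for that namespace.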

Authentication Policy Configuration

Note: during Istanbul, peer authentication will be configured first (high priority). Then, OOM will address request authentication.

Authentication Policies are configured in .yaml files, and are saved in the Istio configuration storage once deployed. The Istio controller watches the configuration storage. Upon any policy changes, Istio sends configurations to the targeted endpoints (e.g., Envoy Proxy). Once the proxy receives the configuration, the new authentication requirement takes effect immediately on that pod.

- for request authentication, the client application is responsible for acquiring and attaching the JWT credential to the request.

- for peer authentication, Istio automatically upgrades all traffic between two PEPs to mTLS.

- If authentication policies disable mTLS mode, Istio continues to use plain text between PEPs. In ONAP Istanbul, mTLS mode will be enabled; any use case that requires mTLS to be disabled will be reviewed as an exception request.

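Example:

    apiVersion: security.istio.io/v1beta1
    kind: PeerAuthentication
    metadata:
      name: "onap-peer-policy"
      # Namespace names must be valid DNS labels, so no underscore
      namespace: "onap-x"
    spec:
      # Applies only to workloads carrying this label
      selector:
        matchLabels:
          app: onap_A
      mtls:
        mode: STRICT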

Authorization Policy Configuration

Note: during Istanbul, service-to-service (workload-to-workload) authorization will be configured first (high priority). Then, OOM will address end-user-to-service (workload) authorization.

- The authorization policy enforces access control on inbound traffic in the server-side Envoy proxy. Each Envoy proxy runs an authorization engine that authorizes requests at runtime.

- When a request comes to the proxy, the authorization engine evaluates the request context against the current authorization policies, and returns the authorization result, either ALLOW or DENY.

- Istio authorization policies are configured using .yaml files.

<source: https://istio.io/latest/docs/concepts/security/#authentication-policies>

Authorization policies support ALLOW, DENY and CUSTOM actions. The following diagram depicts the policy precedence.

- CUSTOM → DENY → ALLOW

- In ONAP Istanbul, DENY and ALLOW will be configured first, as coarse-grained authorization. The CUSTOM action would then be considered for fine-grained authorization in the future (as time allows).

<source: https://istio.io/latest/docs/concepts/security/#authentication-policies>

Example,

<source: https://istio.io/latest/docs/concepts/security/#authentication-policies>
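As an illustration of coarse-grained service-to-service authorization and of DENY being evaluated before ALLOW, consider the following pair of policies. This is a sketch under stated assumptions: the policy names, the `onap` namespace, the `app: aai` label and the `so` service account are hypothetical, and matching on `principals` presumes that mTLS is enabled between the workloads.

```yaml
# Allow only the SO service account to call the selected workload
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: allow-so-to-aai          # hypothetical name
  namespace: onap                # assumed namespace
spec:
  selector:
    matchLabels:
      app: aai                   # hypothetical workload label
  action: ALLOW
  rules:
    - from:
        - source:
            # Identity taken from the client's mTLS certificate (hypothetical account)
            principals: ["cluster.local/ns/onap/sa/so"]
      to:
        - operation:
            methods: ["GET", "PUT", "DELETE"]
---
# DENY is checked before ALLOW, so DELETE is refused even for the SO identity
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: deny-delete              # hypothetical name
  namespace: onap
spec:
  selector:
    matchLabels:
      app: aai
  action: DENY
  rules:
    - to:
        - operation:
            methods: ["DELETE"]
```

With both policies in place, a DELETE from SO is rejected by the DENY policy, while GET and PUT from SO are allowed and every other caller is refused because no ALLOW rule matches.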

Role-Based Access Control

The ONAP Istanbul release supports Role-Based Access Control (RBAC). For that, roles (e.g., admin, user, manager) will be defined, and the mapping between users/groups and roles (m:n) will be set by leveraging Keycloak and Istiod.
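One possible way to enforce such roles at the proxy is to match on a claim in the validated JWT. The sketch below assumes that Keycloak is configured to emit the user's roles in a top-level `groups` claim and that a request authentication policy already validates the token; the claim name, policy name, namespace and workload label are all assumptions for illustration.

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: role-based-access        # hypothetical name
  namespace: onap                # assumed namespace
spec:
  selector:
    matchLabels:
      app: onap-a                # hypothetical workload label
  action: ALLOW
  rules:
    # Only admins may modify resources
    - to:
        - operation:
            methods: ["POST", "PUT", "DELETE"]
      when:
        - key: request.auth.claims[groups]   # assumed claim name
          values: ["admin"]
    # Admins, users and managers may read
    - to:
        - operation:
            methods: ["GET"]
      when:
        - key: request.auth.claims[groups]
          values: ["admin", "user", "manager"]
```

The user-to-role mapping itself stays in Keycloak; the policy only checks what the token asserts.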

ONAP IdAM and External IdAM Interaction

The following diagram depicts the ONAP IdAM and External IdAM Interaction.

| # | Choice |
|---|---|
| 1 | If the vendor chooses their own IdAM (external IdAM), the AuthN/AuthZ GW should be able to use the external IdAM, instead of ONAP IdAM – full integration choice |
| | A. The external IdAM will handle AuthN/AuthZ for ONAP incoming traffic; session cookie handling, session handling |
| | B. The external IdAM will typically use their own IdP (external IdP) to verify their own user identities |
| 2 | If ONAP IdAM is used, based on business use cases, the ONAP IdAM could interface with the external IdAM, based on IdAM-to-IdAM interface configuration |
| | A. SSO |
| | B. Authentication federation (SAML) – not part of Istanbul |

ONAP Security Components, IdAM External IdP(s), and Multi-Tenancy Support

Each service provider will likely have an existing centralized IdP that ONAP should integrate with. This architecture supports that integration.

- ONAP IdAM proxies via plugin to designated external IdP server(s)

- ONAP IdAM must support fallback mode, where it can act as IdP for cases where external IdP is unavailable

- Multi-Tenancy allows the same username inside different tenants; there is no SSO between different tenants

- Realms will be defined to support multi-tenancy, by leveraging Keycloak.

- Realms are isolated from one another and can only manage and authenticate the users that they control.
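Because each Keycloak realm issues tokens under its own issuer URL and signing keys, per-tenant realms can be reflected on the mesh side as one JWT rule per realm. The following is a sketch only: the realm names `tenant-a` and `tenant-b`, the Keycloak host and the namespace are hypothetical.

```yaml
apiVersion: security.istio.io/v1beta1
kind: RequestAuthentication
metadata:
  name: tenant-realms            # hypothetical name
  namespace: onap                # assumed namespace
spec:
  # No selector: applies to all workloads in the namespace
  jwtRules:
    # One rule per tenant realm (hypothetical realms and host)
    - issuer: "https://keycloak.example.com/auth/realms/tenant-a"
      jwksUri: "https://keycloak.example.com/auth/realms/tenant-a/protocol/openid-connect/certs"
    - issuer: "https://keycloak.example.com/auth/realms/tenant-b"
      jwksUri: "https://keycloak.example.com/auth/realms/tenant-b/protocol/openid-connect/certs"
```

A token from one realm does not validate against another realm's keys, which mirrors the isolation between realms described above.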

Single External IdP Use Case

The following diagram depicts the Multi-Tenancy Support with single External IdP.

| # | Sequence (Single External IdP) - A |
|---|---|
| 1 | If ONAP IdAM is connected to a single external IdP, the IdP contains the credentials of all the users from all the tenants |
| | A. AuthN/AuthZ requests including username, password and tenant will be forwarded from ONAP IdAM to the external IdP |
| | B. The external IdP performs the authN/authZ for the user in the tenant realm |
| | C. The external IdP returns with AuthN/AuthZ response |

Multiple IdPs Use Case

The following diagram depicts the Multi-Tenancy Support with multiple External IdPs. Each tenant has its own external IdP:

| # | Sequence (Multiple IdPs (each tenant has its own external IdP)) - B |
|---|---|
| 2 | If each tenant has its own external IdP, the IdP contains the credentials for its tenant users |
| | A. Based on the tenant info in the request, ONAP IdAM selects the correct external IdP |
| | B. ONAP IdAM forwards the request to the selected external IdP |
| | C. The selected external IdP performs AuthN/AuthZ |
| | D. The selected external IdP returns with AuthN/AuthZ response |

ONAP Multi-Tenancy Support with Istio

Objective: ONAP components implement the right access controls for different end users/tenants to:

- Build & distribute the SDC artifacts

- Build & distribute CDS blueprints

- Build & distribute custom operational SO workflows

- Build & distribute Policies

- Build & distribute Control loops

- Access / manage the AAI inventory data for objects they own

- Publish & consume messages on DMaaP / Kafka topics they have access to

- Build, deploy & manage DCAE collectors & DCAE analytics applications

- Build, deploy & manage CDS executors

Approach & State:

- Single logical instance, with Multi-Tenancy built-in:

- SDC: partial implementation through user roles; RBAC for viewing, authoring, updating and distributing

- A&AI: is not extensive enough to allow any fine-grained control; set permission per owning group / user, not only per role

- SO: different groups of people are allowed to orchestrate/view/change different instances

- DMaaP: separate message bus topics based on consumers/producers

- Several logical instances, where deployment/configuration for those instances can be handled independently:

- Controllers (SDNC):

- Collectors (DCAE): it can be deployed and controlled by the tenants, based on the roles

- Policy executors (Policy): centralize PAP, Policy API; distribute PDPs by namespace

Istio Tenancy Model

- Namespace tenancy: a cluster can be shared across multiple teams, each using a different namespace

- Cluster tenancy: using clusters as a unit of tenancy

- Mesh tenancy: each mesh can be used as the unit of isolation

<source: https://istio.io/latest/docs/ops/deployment/deployment-models/>

An ONAP option: Istio supports soft multi-tenancy with multiple Istio control planes

- One control plane and one mesh per tenant

- The cluster admin gets control and visibility across all the Istio control planes

- The tenant admin gets control of a specific Istio instance

- Separation between the tenants is provided by Kubernetes namespaces and RBAC (define Role and RoleBinding)
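The namespace-plus-RBAC separation can be sketched with a Role that lets a tenant admin manage Istio security resources only inside the tenant's own namespace. The namespace `tenant-a`, the role names and the user name below are hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: tenant-mesh-admin        # hypothetical name
  namespace: tenant-a            # hypothetical tenant namespace
rules:
  # Istio security policies owned by the tenant
  - apiGroups: ["security.istio.io"]
    resources: ["peerauthentications", "requestauthentications", "authorizationpolicies"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tenant-mesh-admin-binding   # hypothetical name
  namespace: tenant-a
subjects:
  - kind: User
    name: tenant-a-admin         # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: tenant-mesh-admin
  apiGroup: rbac.authorization.k8s.io
```

Because Role and RoleBinding are namespaced, the tenant admin gains no access to other tenants' namespaces, while a cluster admin keeps visibility across all of them.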

ONAP Logging

Logging Propositions

- Treat logs as event streams

- An App should not concern itself with routing or storage of its output stream

- Each running process writes its event stream, unbuffered, to stdout or stderr

- Each process stream is:

- captured by execution environment,

- collated together with other streams from the app

- routed to one or more final destinations for viewing and long-term archival

- Archival destinations should not be visible to or configurable by the app (separation of concerns, security reasons)

Container logging is different

- When pods are evicted, crash, are deleted or are scheduled on a different node, the logs from the containers are gone.

- Transferring transient local log data in the containers to the centralized (or even distributed) long-term log storage is a must.

Logging Types in Kubernetes

- Kubernetes Container Logging: container logs are generated by containerized applications. The typical way to capture container logs is to use STDOUT and STDERR.

- Kubernetes Node Logging: container logs are streamed by the container engine to a logging driver, which can be realized by an open-source tool such as Fluent Bit or Fluentd. Node-level logs could also be kernel logs or systemd logs

- Kubernetes Cluster Logging: it refers to Kubernetes itself and all of its system component logs. Kubernetes does not provide a native solution for cluster-level logging, but various approaches can be used, such as a node-level logging agent or a sidecar container, as defined in the following ONAP logging functional architecture.

- Kubernetes Events Logging: events hold information about resource state changes, errors such as pod evictions, and decisions made by the scheduler.

- Kubernetes Audit Logging: detailed descriptions of each call made to the kube-apiserver. It shows the chronological sequence of activity events.
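For audit logging, what the kube-apiserver records is governed by an audit policy file. The following is a minimal sketch of such a policy; the choice of resources and levels is an assumption for illustration, not an ONAP requirement.

```yaml
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  # Record full request and response bodies for changes to pods
  - level: RequestResponse
    resources:
      - group: ""
        resources: ["pods"]
    verbs: ["create", "update", "patch", "delete"]
  # Record only metadata for Secrets and ConfigMaps, never their contents
  - level: Metadata
    resources:
      - group: ""
        resources: ["secrets", "configmaps"]
  # Catch-all: metadata for every other request
  - level: Metadata
```

Rules are evaluated in order and the first match decides the level, so more specific rules are listed before the catch-all.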

Logging Scope

The ONAP application logging will be supported. Other logging scopes are under discussion.

- ONAP Application Logging

- Security Component Logging (e.g., Keycloak, IdP, external IdAM, external IdP)

- Infrastructure Logging (e.g., Kubernetes logging)

- xNF Logging (e.g., vFW, VNF, CNF)

Collating across logging scopes, as e2e tracing, is under discussion, but it is out of scope for Istanbul. It is also uncertain whether this can be achieved in a near-future release.

ONAP Logging Functional Architecture

The following diagram depicts ONAP logging functional architecture:

- ONAP supports open-source- and standard-based Logging architecture.

- Once all ONAP components push their logs into STDOUT/STDERR, any standard log pipe can work.

- The logging component stack can be realized with the vendor's choice of components

- ONAP provides a reference implementation/choice

- ONAP logs will be exported to a separate, centralized location for security, persistence and aggregation reasons

- Log collector sends logs to the aggregator in a different container

- Aggregator sends logs to the centralized database in a different container

- Logging Functional Blocks:

- Collector (one per K8S node)

- Aggregator (few per K8S cluster)

- Database (one per K8S cluster)

- Visualization (one per K8S cluster)

- ONAP reference implementation choice:

- EFK: Elasticsearch, Fluentd / Fluent Bit, Kibana

- LFG: Loki, Fluentd / Fluent Bit, Grafana

- ONAP logging conforms to SECCOM Container Logging requirements

- Event Types

- Log Data

- Log Management

ONAP Reference Implementation

The above diagram also indicates reference realization, such as:

- As a log driver, Fluent Bit is deployed per node as a collector and forwarder of log data to the Fluentd instance

- Instead of doing heavy log transformations on the node, Fluent Bit just forwards logs securely to the Fluentd aggregator cluster

- FluentBit (node-level logging agent) needs to be run on every node to collect logs from every POD, so FluentBit is deployed as a DaemonSet (a POD that runs on every node of the cluster)

- When FluentBit runs, it will read, parse and filter the logs of every POD and could enrich each entry with the following information (metadata):

- POD Name

- POD ID

- Container Name

- Container ID

- Labels

- Annotations

- To obtain this information, a built-in filter plugin called kubernetes talks to the Kubernetes API server to retrieve relevant information such as the pod_id, labels and annotations. Other fields, such as pod_name, container_id and container_name, are retrieved locally from the log file names. All of this is handled automatically; no extra configuration is required.

- A Fluentd instance (or a few Fluentd instances) is deployed per cluster as an aggregator, processing the data and routing it to a different destination (e.g., Elasticsearch)

- It can handle heavy throughput: aggregating from multiple inputs, processing data and routing to different outputs. It can also handle a much larger number of input and output sources

- centralized way to transform data; normalize log data and collect additional info from containers

- e.g., filter_record_transformer built-in filter plugin

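    # Remove fields that are not needed downstream.
    <filter foo.bar>
      @type record_transformer
      remove_keys hostname,$.kubernetes.pod_id
    </filter>

    # Add the container name and the aggregator host name to each record.
    # enable_ruby is required to evaluate the Ruby expression inside ${...}.
    <filter foo.bar>
      @type record_transformer
      enable_ruby true
      <record>
        container ${id = tag.split('.')[5]; JSON.parse(IO.read("/var/lib/docker/containers/#{id}/config.v2.json"))["Name"][1..-1]}
        hostname "#{Socket.gethostname}"
      </record>
    </filter>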

- or other custom plugins as needed

- routing logs to Elasticsearch or equivalent component(s)

- Note: for IoT (edge host/equipment), FluentBit could be installed per device, sending data to a Fluentd instance

- Elasticsearch provides centralized log data indexing and storage

- Kibana / Grafana could be used for log data visualization.
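The local part of the metadata enrichment described above can be illustrated with a small sketch. It assumes the common Kubernetes naming convention for files under /var/log/containers/ (`<pod_name>_<namespace>_<container_name>-<container_id>.log`); the function names, the sample pod name and the `api_metadata` argument are hypothetical and only stand in for what the kubernetes filter plugin does internally.

```python
import re

# Assumed file-name convention under /var/log/containers/:
#   <pod_name>_<namespace>_<container_name>-<container_id>.log
LOG_NAME = re.compile(
    r"^(?P<pod_name>[^_]+)_(?P<namespace>[^_]+)_"
    r"(?P<container_name>.+)-(?P<container_id>[0-9a-f]{64})\.log$"
)


def parse_log_filename(filename):
    """Return metadata derivable from the file name, or None if it does not match."""
    match = LOG_NAME.match(filename)
    return match.groupdict() if match else None


def enrich(record, filename, api_metadata=None):
    """Merge file-name metadata and (hypothetical) API-server metadata into a record."""
    local = parse_log_filename(filename) or {}
    enriched = dict(record)
    enriched["kubernetes"] = {**local, **(api_metadata or {})}
    return enriched


# Hypothetical file name for illustration only.
example_name = "so-bpmn-infra-7d9f_onap_so-bpmn-infra-" + "a" * 64 + ".log"
example_record = enrich(
    {"log": "request completed"},
    example_name,
    api_metadata={"labels": {"app": "so"}, "annotations": {}},
)
```

In this sketch, labels and annotations are passed in by the caller because they cannot be derived from the file name; in the real plugin they come from the Kubernetes API server.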

FluentBit Internal Pipeline

Logging Generation and Collection Architecture

The following diagram depicts various ways of collecting logs.

By leveraging various collection agents and configurations, log data of various types will be collected, aggregated and stored in centralized persistent storage. However, it is not yet clear how heterogeneous log data can be collated. In many cases, ONAP cannot control the contents or formats of external log data, so it cannot rely on them to extract hints/attributes for collation.

- xNF logs will be collected via the VNF collection agent (collector), and will be sent to the Aggregator.

- Application (ONAP component) logs will be collected via the application collection agent (collector), and will be sent to the Aggregator.

- Infrastructure logs will be collected via syslog collection agent (collector), and will be sent to the Aggregator.

- The aggregator processes/normalizes log data and sends it to the centralized database. Note that there could be one database or several, depending on the log data types. This would be the system provider's deployment choice.

- Visualization can be centralized or distributed based on log data types.
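As a rough illustration of the aggregator step above, the following sketch normalizes records from different collectors into one shape and routes them to a store per log data type. The field names (`time`, `ts`, `log`, `msg`) and the destination names are assumptions made for the example, not part of any ONAP specification.

```python
# Hypothetical mapping from log source type to destination store.
DESTINATIONS = {
    "xnf": "xnf-logs",
    "application": "onap-app-logs",
    "infrastructure": "infra-logs",
}

TIME_KEYS = ("timestamp", "time", "ts")
MESSAGE_KEYS = ("message", "log", "msg")


def first_present(raw, keys, default=None):
    """Return the value of the first key that exists in raw."""
    for key in keys:
        if key in raw:
            return raw[key]
    return default


def normalize(source_type, raw):
    """Map a collector-specific record onto one common shape."""
    known = TIME_KEYS + MESSAGE_KEYS
    return {
        "source_type": source_type,
        "timestamp": first_present(raw, TIME_KEYS),
        "message": first_present(raw, MESSAGE_KEYS, ""),
        "attributes": {k: v for k, v in raw.items() if k not in known},
    }


def aggregate(batches):
    """Normalize (source_type, raw_record) pairs and group them by destination."""
    stores = {}
    for source_type, raw in batches:
        record = normalize(source_type, raw)
        destination = DESTINATIONS.get(source_type, "unclassified-logs")
        stores.setdefault(destination, []).append(record)
    return stores


stores = aggregate([
    ("application", {"time": "2021-06-08T10:00:00Z", "log": "started"}),
    ("infrastructure", {"ts": "2021-06-08T10:00:01Z", "msg": "kernel: oom", "host": "node-1"}),
    ("unknown-source", {"message": "unexpected"}),
])
```

Normalization of this kind only aligns field names; it does not by itself solve the collation problem noted above, since external log data may carry no shared attribute to join on.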

System Component Logs

Similar to the container logs, system component logs in the /var/log directory should be rotated once the size exceeds the maximum.
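Size-based rotation can be sketched with Python's standard `logging.handlers.RotatingFileHandler`. This is only an illustration of the rotation behavior; on a real node, rotation of files under /var/log is typically handled by a system tool, and the file name, size limit and backup count below are assumptions chosen for the example.

```python
import logging
import logging.handlers
import os
import tempfile


def make_rotating_logger(path, max_bytes=1024, backup_count=3):
    """Create a logger that rotates its file before it would exceed max_bytes."""
    logger = logging.getLogger("rotation-demo")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=max_bytes, backupCount=backup_count
    )
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger, handler


# Write to a temporary directory rather than /var/log for the demonstration.
log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "component.log")
logger, handler = make_rotating_logger(log_path)
for i in range(200):
    logger.info("system component message %d", i)
handler.close()
logger.removeHandler(handler)

# The active file plus at most backup_count rotated files remain.
rotated_files = sorted(os.listdir(log_dir))
```

Older data beyond the backup count is discarded, which is one reason the logs need to be shipped to long-term storage before rotation removes them.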

Cluster-Level Logging Requirements and Options (preferred option)

ONAP needs to support Cluster-Level Logging:

- Allow users to access application logs even if a container crashes, a pod gets evicted or a node dies

- Require a separate backend to store, analyze and query logs

- Write to standard output and standard error streams

- E.g., a container engine handles and redirects any output that a containerized application writes to its STDOUT and STDERR streams (e.g., /dev/stdout, /dev/stderr)

- Support multi-tenancy logging
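The "write to standard output and standard error" requirement could look like the following on the application side. This is a minimal sketch, assuming a component that emits one JSON object per line; the field names and the split of levels between STDOUT and STDERR are illustrative choices, not an ONAP logging specification.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line that a log collector can parse."""

    def format(self, record):
        return json.dumps({
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


class MaxLevelFilter(logging.Filter):
    """Let through only records at or below the given level."""

    def __init__(self, max_level):
        super().__init__()
        self.max_level = max_level

    def filter(self, record):
        return record.levelno <= self.max_level


def build_stream_logger(name, out=None, err=None):
    """INFO and WARNING go to STDOUT; ERROR and above go to STDERR."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()

    stdout_handler = logging.StreamHandler(sys.stdout if out is None else out)
    stdout_handler.addFilter(MaxLevelFilter(logging.WARNING))
    stderr_handler = logging.StreamHandler(sys.stderr if err is None else err)
    stderr_handler.setLevel(logging.ERROR)

    for handler in (stdout_handler, stderr_handler):
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_stream_logger("demo-component")  # hypothetical component name
    log.info("component started")
    log.error("downstream call failed")
```

Because the application writes nothing to local files, the container engine and the node-level agent can pick up the streams without any application-specific configuration.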

Cluster-level Logging Architecture (A)

- Use a node-level logging agent (e.g., DaemonSet) that runs on every node

- the logging agent has access to a directory with log files from all of the application containers on that node

- one agent per node is used

- Include a dedicated sidecar container for logging in an application pod

- Push logs directly to a backend from within an application

- Note:

- node-level logging creates only one agent per node and does not require any changes to the applications running on the node

- Prerequisite: Application containers write to STDOUT and STDERR

- A node-level agent collects these logs and forwards them for aggregation

Streaming Sidecar Container with the Logging Agent Architecture (B)

- Allows users to separate log streams from applications by using a sidecar, which helps when an application lacks support for writing to STDOUT or STDERR

- The sidecar container runs a logging agent, which is configured to pick up logs from an application container

- It allows several log streams from different parts of an application to be kept separate

Pod-Level Logging Agent Architecture (C)

- If the node-level logging agent is not flexible, write an application-specific sidecar

POD Sidecar Architecture

Why Sidecar Logging, instead of K8S Cluster Level Logging

Not all containers in K8S write their logs to stdout, though it is recommended. Placing a log sidecar next to the application container facilitates uniform logging and distribution.

For the Log sidecar, define three major tags:

- Source - define which files to monitor and the log format to expect

- Filter - customize the collected events by overwriting or adding fields

- write custom scripts to transform log formats for uniform log contents or additional fields from containers

- Match - define what to do with the matching data/log events and where to send them
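The three sidecar stages can be pictured as a small pipeline. The sketch below mirrors the Source, Filter and Match roles with plain Python generators; the line format, the added `component` field and the destination names are hypothetical, and a real sidecar would express the same steps in the logging agent's configuration rather than in code.

```python
import re

# Assumed log line format: "<time> <LEVEL> <message>"
LINE_FORMAT = r"(?P<time>\S+) (?P<level>[A-Z]+) (?P<message>.*)"


def source(lines, pattern):
    """Source: parse raw lines from the monitored file, skipping lines that do not fit."""
    regex = re.compile(pattern)
    for line in lines:
        match = regex.match(line)
        if match:
            yield match.groupdict()


def filter_stage(events, extra_fields):
    """Filter: add or overwrite fields on every collected event."""
    for event in events:
        yield {**event, **extra_fields}


def match_stage(events, routes, default="discard"):
    """Match: decide where each event should be sent, based on its level."""
    for event in events:
        yield routes.get(event["level"], default), event


raw_lines = [
    "2021-06-08T10:00:00Z INFO started",
    "malformed-line",
    "2021-06-08T10:00:01Z ERROR failed",
    "2021-06-08T10:00:02Z DEBUG verbose detail",
]
routed = list(
    match_stage(
        filter_stage(source(raw_lines, LINE_FORMAT), {"component": "demo-app"}),
        {"INFO": "aggregator", "ERROR": "aggregator"},
    )
)
```

Keeping the stages separate makes it possible to change the expected format or the destination without touching the other two.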

Logging Type Support

- Application logging

- Platform-level logging, including Kubernetes

- Need to define traceability support scope (e.g., service, environment)

- Can ONAP (or does ONAP want to) trace external components and infrastructure logs?

- Security component logging, such as IdAM, IdP

- Transaction logging for tracing by a specified collation ID/request ID (e.g., MDC-based logging)
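MDC-based logging attaches a request ID to every log line emitted while a request is being handled. The sketch below shows an analogous pattern using Python's `contextvars` and a `logging.Filter`; it is not the Java MDC API, and the logger name, field name `request_id` and ID values are assumptions for illustration.

```python
import contextvars
import logging
import sys

# Holds the request ID for the current execution context; "-" means "no request".
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Copy the current request ID onto every record so the formatter can print it."""

    def filter(self, record):
        record.request_id = request_id_var.get()
        return True


def build_traced_logger(name, stream):
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.addFilter(RequestIdFilter())
    handler.setFormatter(
        logging.Formatter("%(levelname)s request_id=%(request_id)s %(message)s")
    )
    logger.addHandler(handler)
    return logger


def handle_request(logger, request_id):
    """Set the request ID for the duration of one request, then restore the old value."""
    token = request_id_var.set(request_id)
    try:
        logger.info("request received")
        logger.info("request completed")
    finally:
        request_id_var.reset(token)


if __name__ == "__main__":
    demo_logger = build_traced_logger("traced-demo", sys.stdout)
    handle_request(demo_logger, "req-42")  # hypothetical request ID
```

If each component propagates the same ID on outgoing calls, the aggregated logs can later be filtered by that ID to reconstruct one transaction.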

Analysis of ONAP Application Logging Specification

Existing ONAP Application Logging Specification

LFN DDF June 2021 Presentation

The ONAP Next Generation Security and Logging Architecture, Design and Roadmap was presented at the LFN DDF in June 2021.

Please visit https://wiki.lfnetworking.org/display/LN/2021-06-08+-+ONAP%3A+Next+Generation+Security+and+Logging+Architecture%2C+Design+and+Roadmap for the presentation slide deck and recordings.

Note: this wiki page contains several updates made after the presentation, so it is more current than the presented materials.

References

- ONAP Next Generation Security Architecture, https://wiki.onap.org/pages/viewpage.action?pageId=103417456

- Istio Architecture, https://istio.io/latest/docs/ops/deployment/architecture/

- Istio Security, https://istio.io/latest/docs/concepts/security/#authentication-policies

- Service Mesh Risk Analysis, https://wiki.onap.org/display/DW/Service+Mesh+Risk+Analysis

- Service Mesh Impact on Project, https://wiki.onap.org/display/DW/Service+Mesh+Impact+on+Projects

- ONAP Security Model, https://wiki.onap.org/display/DW/ONAP+Security+Model

- Logging Architecture Proposal, https://wiki.onap.org/display/DW/Logging+Architecture+Proposal

- Logging Enhancements Project proposal, https://wiki.onap.org/display/DW/Logging+Enhancements+Project+Proposal

- SECCOM Container Logging Requirements, https://wiki.onap.org/display/DW/CNF+2021+Meeting+Minutes?focusedTaskId=239&preview=/93004634/100896899/2021-02-22_LoggingRequirementEvents_v8.pptx