OOM restarts ONAP components after a failure

OOM uses a Kubernetes controller to continuously monitor all of the deployed containers (pods) in an OOM deployment of ONAP, automatically restarting any container that fails. To try this out for yourself, stop one of the containers with the following command:

kubectl delete pod <pod name> -n <pod namespace>

You’ll see the pod go down and immediately be restarted.

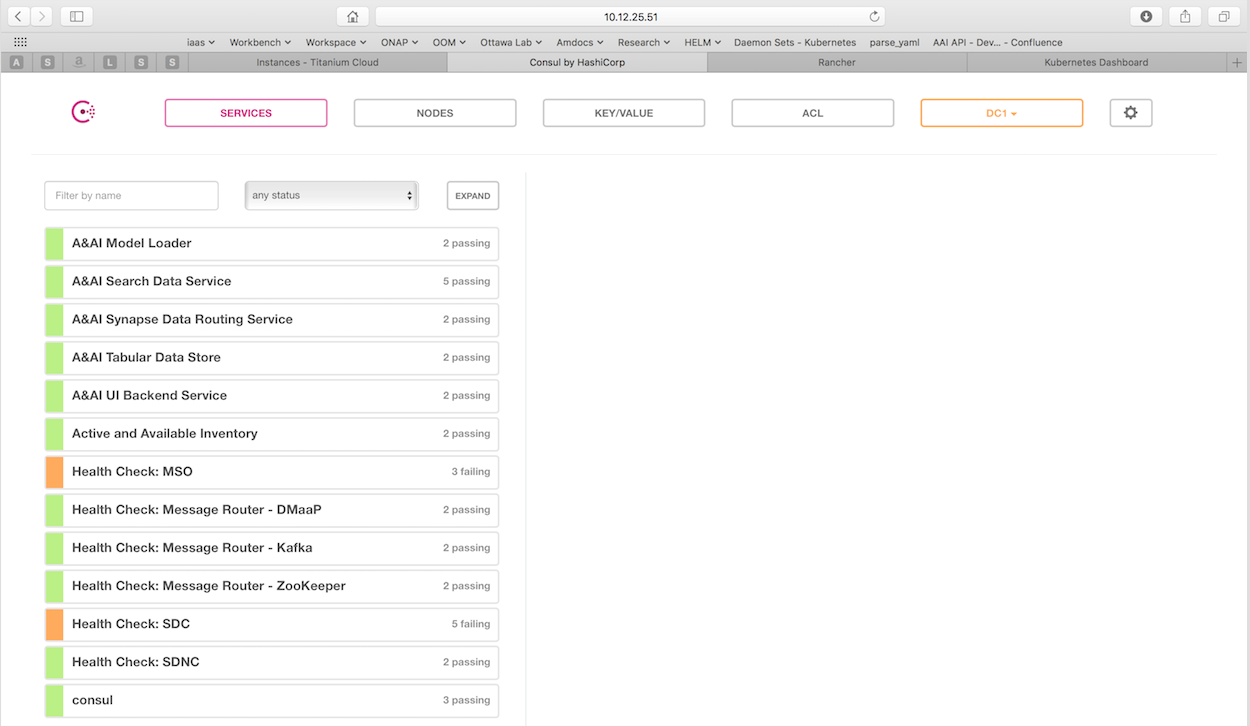

OOM provides a real-time view of ONAP component health

OOM deploys a Consul cluster that actively monitors the health of all ONAP components in a deployment. To see the health of your deployment, enter the following URL in your browser:

http://<kubernetes IP>:30270/ui/

More information can be found on our wiki at Health Monitoring.

OOM supports deploying subsets of the ONAP components

ONAP has a lot of components! If you would like to focus on a select few, you can do so today by editing the list of components in the environment file setenv.bash.

For example, in Beijing we have:

HELM_APPS=('consul' 'msb' 'mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal' 'policy' 'appc' 'aai' 'sdc' 'dcaegen2' 'log' 'cli' 'multicloud' 'clamp' 'vnfsdk' 'uui' 'aaf' 'vfc' 'kube2msb' 'esr')

Simply modify the array to something like this:

HELM_APPS=('mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal' 'policy' 'appc' 'aai' 'sdc')

Launch the “oneclick” installer and wait for your pods to start up.

# createAll.bash -n onap
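Before launching, it can help to confirm that every name in a trimmed list is a known component. The following is a minimal sketch, assuming the Beijing list shown above; `ALL_APPS` and `check_subset` are hypothetical names used for illustration and are not part of setenv.bash.

```bash
#!/usr/bin/env bash
# Full Beijing component list, copied from the example above.
# ALL_APPS is a hypothetical name; setenv.bash only defines HELM_APPS.
ALL_APPS=('consul' 'msb' 'mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal'
          'policy' 'appc' 'aai' 'sdc' 'dcaegen2' 'log' 'cli' 'multicloud'
          'clamp' 'vnfsdk' 'uui' 'aaf' 'vfc' 'kube2msb' 'esr')

# The trimmed list you intend to deploy.
HELM_APPS=('mso' 'message-router' 'sdnc' 'vid' 'robot' 'portal' 'policy' 'appc' 'aai' 'sdc')

# check_subset (hypothetical helper): report every entry of HELM_APPS that is
# not in ALL_APPS and return non-zero if any such entry exists.
check_subset() {
  local app known found status=0
  for app in "${HELM_APPS[@]}"; do
    found=0
    for known in "${ALL_APPS[@]}"; do
      if [[ "$app" == "$known" ]]; then
        found=1
        break
      fi
    done
    if [[ "$found" -eq 0 ]]; then
      echo "unknown component: $app" >&2
      status=1
    fi
  done
  return "$status"
}

check_subset && echo "all ${#HELM_APPS[@]} components are known"
```

A misspelled name is then caught before the installer runs, rather than surfacing later as a missing pod.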

OOM makes services available on any Node in a K8S cluster using a NodePort

This allows a service to be reachable on any node in the cluster, using a statically assigned NodePort (default range: 30000-32767). NodePorts are automatically mapped to the internal port of a service.

Some examples of services available today using NodePorts:

30023 --> 8080 (m)so

All service mappings are listed in the OOM wiki.
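To make the mapping concrete, the sketch below builds the URL for a NodePort service and checks that the port falls in the default range. `nodeport_url` is a hypothetical helper, 10.0.0.5 is a placeholder node address, and the sketch assumes the service speaks plain HTTP.

```bash
# nodeport_url (hypothetical helper): print http://<node>:<port>/ when the port
# lies in the default NodePort range 30000-32767; otherwise fail.
nodeport_url() {
  node=$1
  port=$2
  if [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]; then
    printf 'http://%s:%s/\n' "$node" "$port"
  else
    echo "port $port is outside the default NodePort range" >&2
    return 1
  fi
}

# 30023 is the mso NodePort from the example above; 10.0.0.5 is a placeholder
# for the address of any node in your cluster.
nodeport_url 10.0.0.5 30023
```

Because a NodePort is opened on every node, any node address in the cluster can be substituted for the placeholder.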

OOM manages dependencies between ONAP components

OOM uses deployment descriptor files to define which components are deployed in ONAP. Inside the descriptor files, initContainers are used to ensure that the components a given component depends on are running and healthy (i.e. ready) before that component's container is started.
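The gating behavior described here amounts to a polling loop: keep checking the dependency and only proceed once the check passes. The sketch below illustrates that idea in plain shell. It is not OOM's actual init container logic; `wait_until` is a hypothetical helper, and `true` stands in for a real readiness probe.

```bash
# wait_until (hypothetical helper): run a check command repeatedly until it
# succeeds, or give up after the given number of attempts.
#   $1 = maximum attempts, $2 = seconds between attempts, rest = check command
wait_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts: dependency not ready" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Stand-in check that succeeds immediately; a real check would probe the
# dependency (for example, the database the component relies on).
wait_until 5 1 true && echo "dependency ready, starting component"
```

In Kubernetes the same effect comes from the init container exiting successfully only once the dependency is ready, which then allows the main container to start.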

Example mso deployment descriptor file shows mso's dependency on its mariadb component:

OOM supports scaling stateful applications

OOM leverages the Kubernetes capability to scale stateful applications, without the need to change any application code.

You can try these examples today to scale the SDNC database out and back in.

Scale out sdnc database to 5 instances:

kubectl scale statefulset sdnc-dbhost -n onap-sdnc --replicas=5

Scale in sdnc database to 2 instances:

kubectl scale statefulset sdnc-dbhost -n onap-sdnc --replicas=2

OOM is deployed and validated hourly as a Continuous Deployment of ONAP master on AWS

Currently, merges in ONAP get compiled/validated by the JobBuilder – this is good CI. Additional validation via CD on top of CI tag sets allows for full CI/CD per commit as part of the JobBuilder CI chain.

OOM Kubernetes deployment + Jenkins + ELK gives us historical healthcheck and live build validation.

Examples: The day before the F2F in December, the only AAI healthcheck failure in the last 3 months (a Chef issue) appeared and was fixed by the A&AI team in 3 hours.

Changes to Kube2msb were validated and are visible in the last 7 days view below. Click the top-right “last 7 days” to expand the search, or click the red pie to sort on failures and the inner pie segment in the larger pie to sort by component.

The last 60 minutes of merges to ONAP component configuration and OOM Kubernetes infrastructure, as well as the daily Linux Foundation docker images, are tested in each slice in the 7d view above. Results from the robot healthcheck and partial vFW init/distribute are streamed from the VM under test, via Jenkins, into the ELK stack, tracking hourly ONAP deployment health.

Jenkins:

Kibana:

Master: (ONAP pods up at the 22m mark of each hour) – security temporarily off for a couple days

- http://amsterdam.onap.info:8880/env/1a7/infra/hosts/1h1/containers

- http://amsterdam.onap.info:8880/r/projects/1a7/kubernetes-dashboard:9090/#!/pod?namespace=_all

OOM enhances ONAP by deploying a Logging framework

OOM implements the Logging Enhancement Project by deploying the Elastic Stack and defining a consistent logging configuration.

OOM deploys the Elastic Stack (Elasticsearch, Logstash, Kibana) together with Filebeat to collect logs from the different ONAP components. It also provides those components with a canonical logging configuration.

You can access ONAP Logs Kibana at:

http://<any_of_your_kubernetes_nodes>:30253/app/kibana

Components that have logs available in OOM Elastic Stack: A&AI, APPC, MSO, Policy, Portal, SDC, SDNC, VID.

If you would like ONAP to collect your component's logs in a consistent manner, please contact the OOM team.