In the Beijing release, the following OOM capabilities were added or enhanced relative to those available in the Amsterdam release:

- Deployment - with built-in component dependency management (including multiple clusters, federated deployments across sites, and anti-affinity rules)

- Configuration - unified configuration across all ONAP components

- Monitoring - real-time health monitoring feeding a Consul UI and Kubernetes

- Heal - failed ONAP containers are recreated automatically

- Clustering and Scaling - cluster ONAP services to enable seamless scaling

- Upgrade - swap out containers or configuration with little or no service impact

- Deletion - clean up individual containers or entire deployments

The following sections describe these areas in more detail.

Deploy

The OOM team, with assistance from the ONAP project teams, has built a comprehensive set of Kubernetes deployment specifications (YAML files very similar to TOSCA files) that describe the composition of each ONAP component and the relationships within and between components. These deployment specifications describe the desired state of an ONAP deployment and instruct the Kubernetes manager how to maintain the deployment in that state.

When an initial deployment of ONAP is requested, the current state of the system is NULL, so the Kubernetes manager deploys ONAP as a set of Docker containers on one or more predetermined hosts. The hosts can be physical or virtual machines. When deploying on virtual machines, the resulting system is very similar to a “Heat” based deployment, i.e. Docker containers running within a set of VMs. The primary difference is that OOM allocates containers to VMs dynamically, whereas “Heat” allocates them statically.

The intra- and inter-component dependencies described by the deployment descriptors control the order in which the components are brought up. If component B depends on A, A is started first, and B is started only after A's “readiness” probe succeeds. OOM has created readiness probes that look for an indication that a container is ready for use by other containers.
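The start-up ordering rule can be modelled as a topological sort over the dependency graph. The following sketch requires Python 3.9+ for `graphlib`. It groups components into waves, and a component starts only after everything it depends on has started and reported ready. The A/B/C/D graph and the `is_ready` callback are hypothetical stand-ins for the deployment descriptors and the Kubernetes readiness probes.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: component -> components it depends on.
# In OOM this information comes from the deployment descriptors.
DEPENDENCIES = {
    "A": set(),
    "B": {"A"},
    "C": {"A"},
    "D": {"B", "C"},
}


def startup_waves(deps, is_ready):
    """Group components into start-up waves.

    A component is released only after every component it depends on has
    been started and `is_ready` has returned True for it.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        # All components whose dependencies are already up and ready.
        wave = sorted(sorter.get_ready())
        waves.append(wave)
        for component in wave:
            if not is_ready(component):
                # Kubernetes would keep the dependents waiting; the sketch
                # fails fast instead so the problem is visible.
                raise RuntimeError(f"{component} never became ready")
            sorter.done(component)  # unblocks components that depend on it
    return waves


print(startup_waves(DEPENDENCIES, lambda component: True))
# [['A'], ['B', 'C'], ['D']]
```

Components in the same wave have no ordering constraint between them, which mirrors Kubernetes starting independent components in parallel.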

The mso deployment descriptor file, for example, declares mso's dependency on its mariadb component.

In the Beijing release, the 'initContainers' constructs were updated from the previous beta implementation to the current released syntax under JIRA story OOM-406.
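The following is a minimal, hypothetical sketch of how an init container can hold back mso until mariadb is ready. It builds a Kubernetes Deployment as a Python dict and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The image names and the `wait-for-ready` command are placeholders, not OOM's actual artifacts.

```python
import json

# Hypothetical placeholders; OOM's real charts define their own images and wait logic.
WAIT_IMAGE = "example.org/readiness-check:latest"
WAIT_COMMAND = ["wait-for-ready", "--container-name", "mariadb"]


def mso_deployment(app_image, wait_image=WAIT_IMAGE, wait_command=WAIT_COMMAND):
    """Build a Deployment whose init container blocks until mariadb is ready."""
    labels = {"app": "mso"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "mso"},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Init containers run to completion, one after another,
                    # before any of the regular containers are started.
                    "initContainers": [
                        {
                            "name": "mso-wait-for-mariadb",
                            "image": wait_image,
                            "command": list(wait_command),
                        }
                    ],
                    "containers": [{"name": "mso", "image": app_image}],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(mso_deployment("example.org/mso:latest"), indent=2))
```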

Configuration

Each project within ONAP has its own configuration data, generally consisting of environment variables, configuration files, and initial database values. The projects use many different technologies, which results in significant operational complexity and makes it impossible to apply global parameters across the entire ONAP deployment. OOM solves this problem by introducing a common configuration technology, Helm charts. Helm charts provide hierarchical configuration, and values can be overridden by higher-level charts or command-line options. For example, to change the OpenStack instance oam_network_cidr and ensure that all ONAP components reflect the change, one could change the vnfDeployment/openstack/oam_network_cidr value in the global configuration file.
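The override behaviour can be modelled as a recursive merge in which each higher layer wins. This is a simplified sketch, not Helm's implementation. The layers and CIDR values are hypothetical, and the slash-separated path mirrors the notation used in the text. Helm's own `--set` flag uses dot-separated keys.

```python
import copy


def deep_merge(base, override):
    """Return a new dict in which values from `override` win over `base`,
    recursing into nested dicts instead of replacing them wholesale."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def from_path(path, value):
    """Turn 'a/b/c' and a value into {'a': {'b': {'c': value}}}."""
    for key in reversed(path.split("/")):
        value = {key: value}
    return value


def resolve(*layers):
    """Merge configuration layers, lowest precedence first."""
    result = {}
    for layer in layers:
        result = deep_merge(result, layer)
    return result


# Hypothetical layers, lowest precedence first.
chart_defaults = {
    "vnfDeployment": {"openstack": {"oam_network_cidr": "10.0.0.0/16", "region": "RegionOne"}}
}
global_config = from_path("vnfDeployment/openstack/oam_network_cidr", "10.10.0.0/16")
command_line = {}  # values given on the command line would be merged last

values = resolve(chart_defaults, global_config, command_line)
print(values["vnfDeployment"]["openstack"])
# {'oam_network_cidr': '10.10.0.0/16', 'region': 'RegionOne'}
```

The recursive merge is the key design point. A single overridden leaf replaces only that leaf, so sibling settings such as `region` survive from the lower layer.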

The migration from the disparate configuration methodologies to Helm charts is tracked under JIRA story OOM-460. Within each project, a new configuration repository contains all of the project-specific configuration artifacts. As changes are made within a project, the project team is responsible for making the corresponding changes to the configuration data.

Monitoring

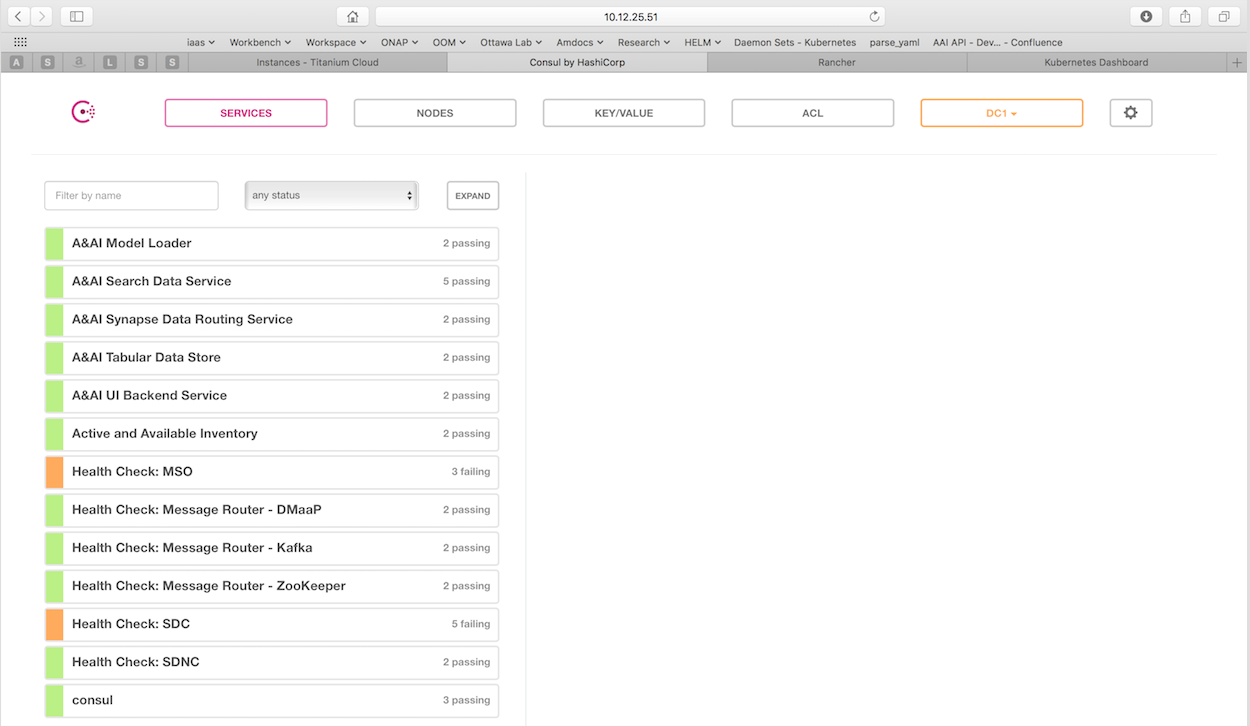

OOM provides two mechanisms to monitor the real-time health of an ONAP deployment. The first is a Consul GUI for a human operator or downstream monitoring systems. The second is Kubernetes liveness probes, which enable automatic healing of failed containers.
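A Kubernetes liveness probe heals a container by having the kubelet restart it once the probe has failed `failureThreshold` consecutive times (the default is 3). The function below is a simplified, hypothetical model of that counting rule only. It ignores `initialDelaySeconds`, restart back-off, and the other probe settings the kubelet honours, and it resets the failure count after each success or restart.

```python
def restart_points(probe_results, failure_threshold=3):
    """Return the probe indices at which a restart would be triggered.

    probe_results: one boolean per probe period (True = probe passed).
    """
    restarts = []
    consecutive_failures = 0
    for index, passed in enumerate(probe_results):
        if passed:
            consecutive_failures = 0  # any success clears the streak
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restarts.append(index)    # container would be killed and recreated
            consecutive_failures = 0  # the fresh container starts a new count
    return restarts


print(restart_points([True, False, False, False, True, False, False]))
# [3]
```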

OOM deploys a three-instance Consul server cluster that provides real-time health monitoring for all of the ONAP components. A Consul health check has been created for each ONAP component, including the AAI model loader.
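In Consul, a health check is declared alongside a service definition. The sketch below builds such a definition for a model-loader-style service as a Python dict and serializes it to JSON. The service name, check ID, and script path are hypothetical, not the ones OOM ships. A script check like this runs its `args` command every `interval`. It treats exit code 0 as passing, 1 as warning, and any other code as critical. It only runs if the Consul agent has script checks enabled.

```python
import json


def consul_service_definition(service_name, check_id, script_path,
                              interval="15s", timeout="1s"):
    """Build a Consul service definition with one script-based health check."""
    return {
        "service": {
            "name": service_name,
            "checks": [
                {
                    "id": check_id,
                    "name": f"{service_name} health",
                    # Command Consul runs every `interval`; its exit code sets the status.
                    "args": [script_path],
                    "interval": interval,
                    "timeout": timeout,
                }
            ],
        }
    }


# Hypothetical names and path, for illustration only.
definition = consul_service_definition(
    "aai-model-loader", "model-loader-process", "/consul/scripts/model-loader-check.sh"
)
print(json.dumps(definition, indent=2))
```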

To see the real-time health of a deployment go to:

http://<kubernetes IP>:30270/ui/

where a GUI much like the following will be found: