Warning: Draft Content

This wiki is under construction as of 20171227. Content here may be incomplete or missing while we bring up the system and investigate deployments. This notice will be removed in the near future.

slack | cloudify-developers list | github | cloudify-source JIRA | JIRA Board |

See OOM with TOSCA and Cloudify and OOM-579.

Note: Cloudify Manager is used in 3 places: as an alternative wrapper on OOM/Kubernetes, as a pending multi-VIM southbound plugin manager between SO and the Python-based Multi-VIM containers, and as the orchestrator in DCAEGEN2 during DCAE bootstrap of the HEAT version of DCAE.

+ cfy local init --install-plugins -p ./blueprints/centos_vm.yaml -i /tmp/local_inputs -i datacenter=0LrL

Purpose

Investigate the use of Cloudify Community edition (specifically http://cloudify.co/community/) in bringing up ONAP on Kubernetes using TOSCA blueprints on top of Helm. The white paper and blog article describe running hybrid Kubernetes and non-Kubernetes deployments together: http://cloudify.co/cloudify.co/2017/09/27/model-driven-onap-operations-manager-oom-boarding-tosca-cloudify/

TOSCA: https://www.oasis-open.org/committees/tc_home.php?wg_abbrev=tosca

One use case where Cloudify would be used as a layer above OOM and Kubernetes is running one of your databases, for example the MariaDB instance, outside of the managed Kubernetes environment (possibly as part of a phased migration into Kubernetes). In this case you would need an external cloud controller to manage the hybrid environment. We will attempt to simulate this use case.

Quickstart

Here are the steps to deploy ONAP on Kubernetes using TOSCA and Cloudify:

- Install Cloudify Manager. The fastest way is to use an existing image for your environment (OpenStack, AWS, etc.)

- http://cloudify.co/download/

- Detailed instructions per environment (choose the non-bootstrap option): https://github.com/cloudify-examples/cloudify-environment-setup

- Provision a Kubernetes Cluster

- Log in to the Cloudify Manager UI

- Upload Kubernetes Blueprint zip file

- Create a deployment

- Execute the installation workflow

- After the Kubernetes cluster is up, prepare OOM environment

- Install Helm on Kubernetes master

- Pull docker images on all cluster working nodes

- After completion of pulling docker images, provision ONAP using Helm TOSCA blueprint (Link to be provided soon)

Quickstart Validation Examples

OpenStack

Install Cloudify manager

Note: You don't need to install Cloudify Manager every time. Once it is set up, you can reuse it.

- Upload the Cloudify manager image to your OpenStack environment.

The image file can be found here (https://cloudify.co/download/#)

This image ID will be used in the openstack.yaml file.

- Create a VM in your OpenStack environment. This VM will be used for launching the Cloudify Manager. Attach a floating IP to this VM to access it.

Prepare the virtual environment

sudo apt-get update

sudo apt-get install python-dev

sudo apt-get install virtualenv

sudo apt-get install python-pip

virtualenv env

. env/bin/activate

Install Cloudify CLI

wget http://repository.cloudifysource.org/cloudify/4.2.0/ga-release/cloudify-cli-4.2ga.deb

sudo dpkg -i cloudify-cli-4.2ga.deb

Run cfy to check that the CLI installed successfully.

ubuntu@cloudify-launching:~$ cfy Usage: cfy [OPTIONS] COMMAND [ARGS]... Cloudify's Command Line Interface Note that some commands are only available if you're using a manager. You can use a manager by running the `cfy profiles use` command and providing it with the IP of your manager (and ssh credentials if applicable). To activate bash-completion. Run: `eval "$(_CFY_COMPLETE=source cfy)"` Cloudify's working directory resides in ~/.cloudify. To change it, set the variable `CFY_WORKDIR` to something else (e.g. /tmp/). Options: -v, --verbose Show verbose output. You can supply this up to three times (i.e. -vvv) --version Display the version and exit (if a manager is used, its version will also show) -h, --help Show this message and exit. Commands: agents Handle a deployment's agents blueprints Handle blueprints on the manager bootstrap Bootstrap a manager cluster Handle the Cloudify Manager cluster deployments Handle deployments on the Manager dev Run fabric tasks [manager only] events Show events from workflow executions executions Handle workflow executions groups Handle deployment groups init Initialize a working env install Install an application blueprint [locally] ldap Set LDAP authenticator. logs Handle manager service logs maintenance-mode Handle the manager's maintenance-mode node-instances Show node-instance information [locally] nodes Handle a deployment's nodes plugins Handle plugins on the manager profiles Handle Cloudify CLI profiles Each profile can... 
rollback Rollback a manager to a previous version secrets Handle Cloudify secrets (key-value pairs) snapshots Handle manager snapshots ssh Connect using SSH [manager only] ssl Handle the manager's external ssl status Show manager status [manager only] teardown Teardown a manager [manager only] tenants Handle Cloudify tenants (Premium feature) uninstall Uninstall an application blueprint user-groups Handle Cloudify user groups (Premium feature) users Handle Cloudify users workflows Handle deployment workflows

Download and edit the cloudify-environment-setup blueprint

Run the following commands to get the cloudify-environment-setup blueprint

wget https://github.com/cloudify-examples/cloudify-environment-setup/archive/4.1.1.zip

sudo apt-get install unzip

unzip 4.1.1.zip

vi cloudify-environment-setup-4.1.1/inputs/openstack.yaml

Fill in the OpenStack information.

username: -cut-

password: -cut-

tenant_name: -cut-

auth_url: https://-cut-:5000/v2.0

region: RegionOne

external_network_name: GATEWAY_NET

cloudify_image:

centos_core_image:

ubuntu_trusty_image:

small_image_flavor: 2

large_image_flavor: 4

bootstrap: True
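Before running the install, it can help to confirm that no input was left blank (the image IDs in particular). The following is a minimal sketch, assuming the inputs file is a flat list of `key: value` lines as shown above; `empty_inputs` is a hypothetical helper name, not part of the Cloudify CLI, and it would misreport nested YAML mappings as empty.

```shell
# Hypothetical helper (not part of cfy): read a flat "key: value" inputs file
# on stdin and print every key whose value is empty.
empty_inputs() {
  awk '
    /^[ \t]*#/ { next }                      # skip comment lines
    index($0, ":") > 0 {
      key = substr($0, 1, index($0, ":") - 1)
      val = substr($0, index($0, ":") + 1)   # everything after the first colon
      gsub(/^[ \t]+|[ \t]+$/, "", key)       # trim surrounding whitespace
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      if (key != "" && val == "") print key
    }
  '
}
```

Usage, from the directory where the archive was unzipped: `empty_inputs < cloudify-environment-setup-4.1.1/inputs/openstack.yaml`. Any key it prints still needs a value.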

Install the Cloudify Manager. The installation takes about 10 minutes.

sudo cfy install cloudify-environment-setup-4.1.1/openstack-blueprint.yaml -i cloudify-environment-setup-4.1.1/inputs/openstack.yaml --install-plugins

Find the floating IP of cloudify manager

You can then access the Cloudify Manager GUI at that IP address. The default username and password are admin:admin.

Provision a Kubernetes Cluster

- Log in to the Cloudify Manager UI

- Upload Kubernetes Blueprint zip file

You can get the blueprint files from the OOM repo.

https://gerrit.onap.org/r/gitweb?p=oom.git;a=tree;f=TOSCA/kubernetes-cluster-TOSCA

- Zip the directory kubernetes-cluster-TOSCA

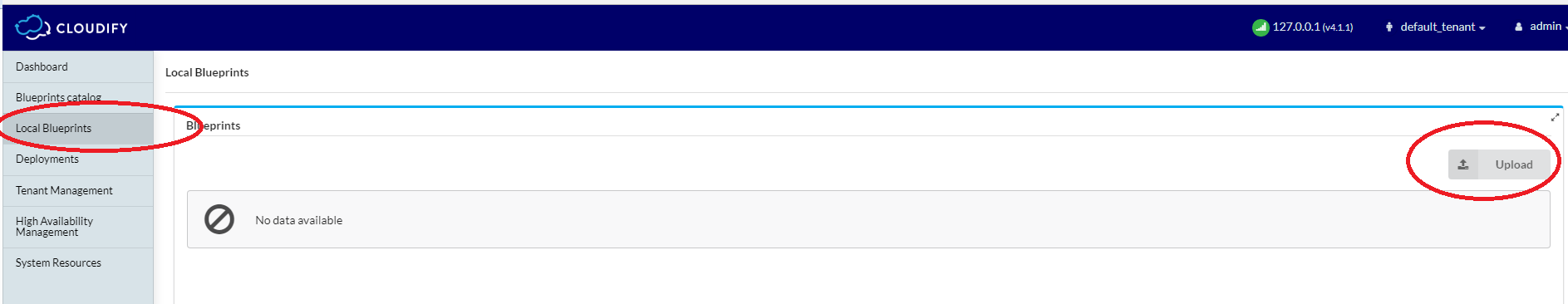

- In the Cloudify Manager, first click the Local Blueprints button on the left side, then click the upload button

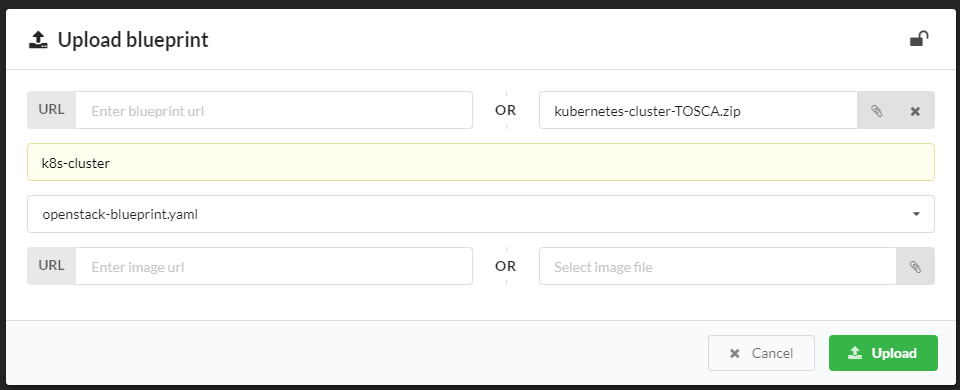

- Click Select blueprint file to upload the blueprint from local.

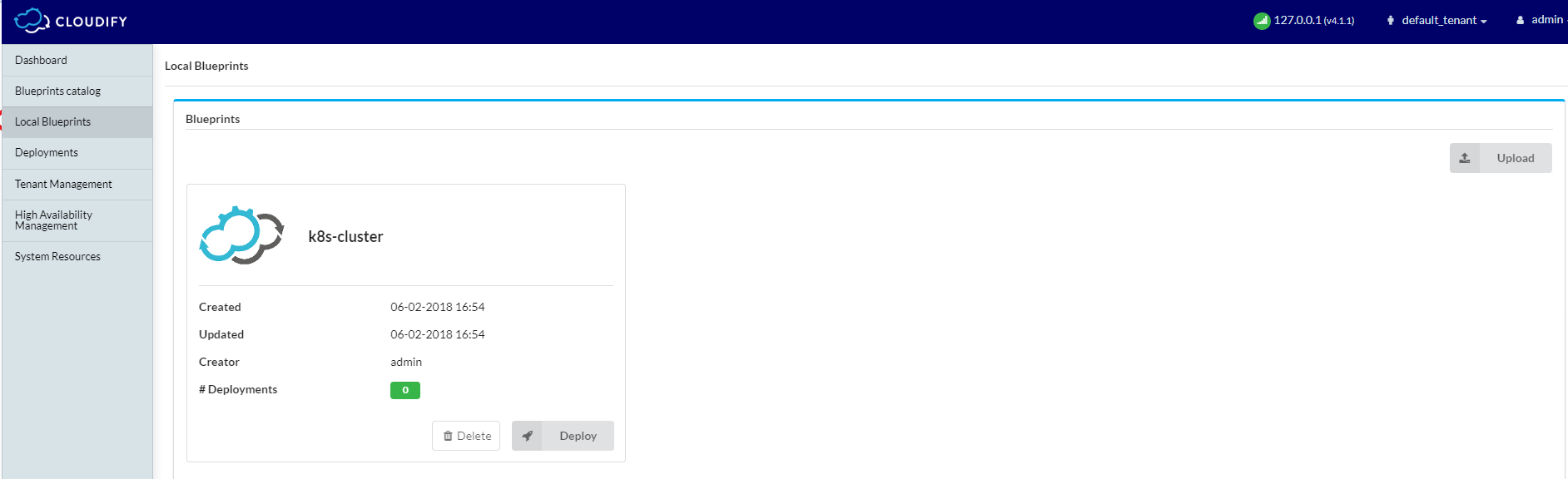

- Click Upload. Your blueprint then appears in the list.


3. Create a deployment

- Click the deploy button

- You only need to give a deployment name, then click the deploy button.

4. Execute the installation workflow

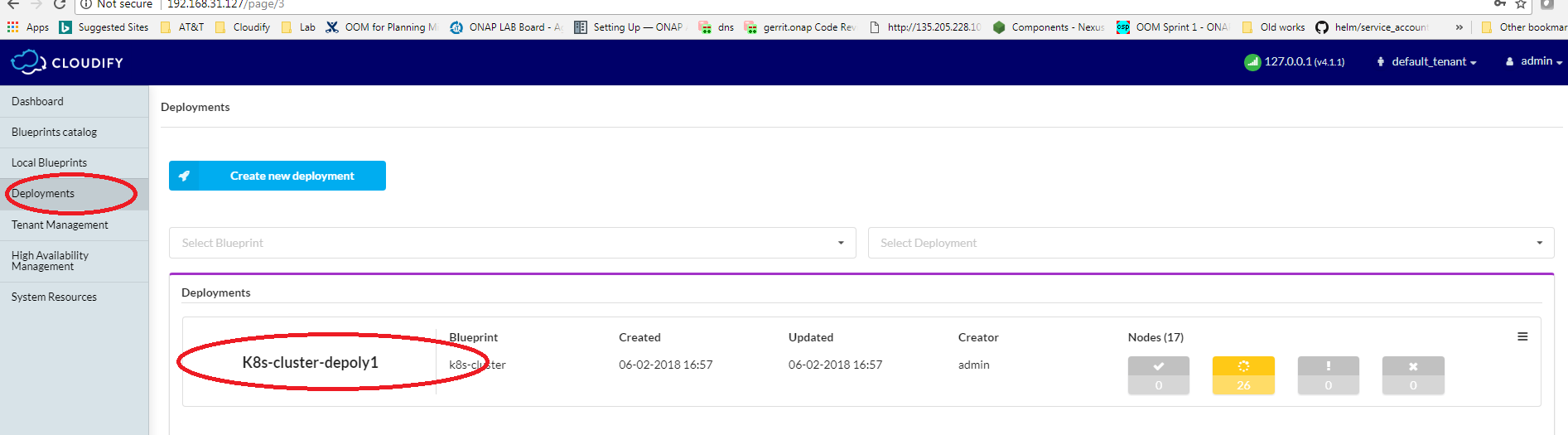

Deployments → K8s-cluster-deploy1 → Execute workflow → Install

Scroll down to see that the install workflow has started.

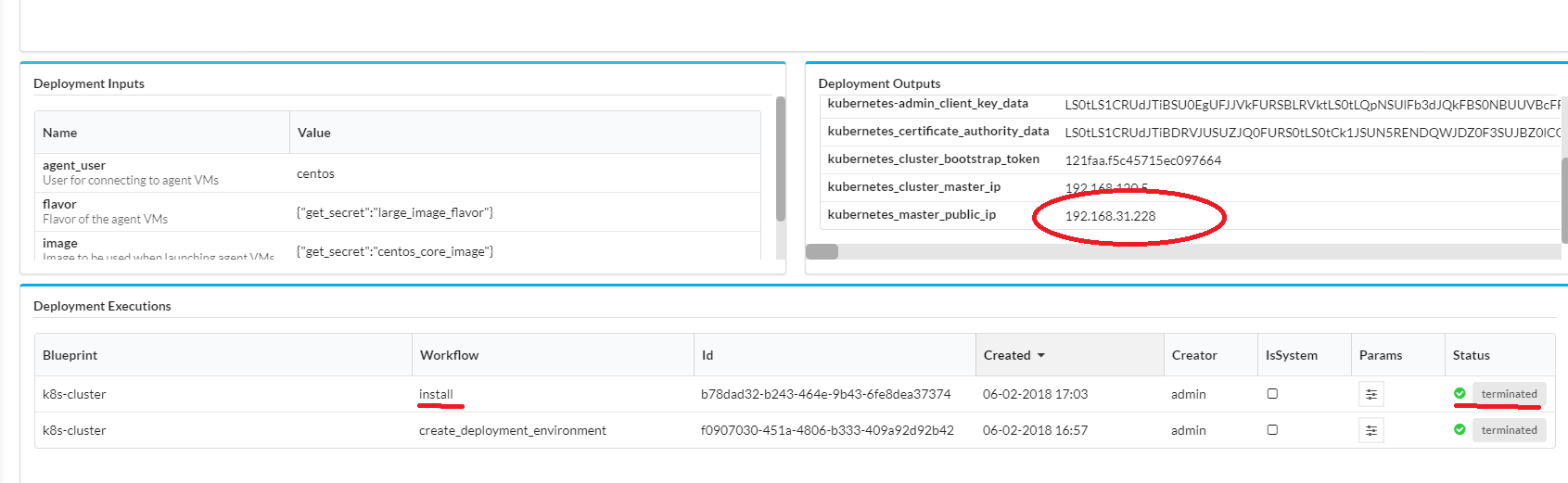

5. When the install workflow finishes, its status shows terminated.

You can now find the public IP of the master node. The private key for accessing those VMs is on the cloudify-launching VM.

(env) ubuntu@cloudify-launching:~$ cd .ssh

(env) ubuntu@cloudify-launching:~/.ssh$ ls

authorized_keys cfy-agent-key-os cfy-agent-key-os.pub cfy-manager-key-os cfy-manager-key-os.pub

ubuntu@cloudify-launching:~/.ssh$ sudo ssh -i cfy-agent-key-os centos@192.168.31.228

sudo: unable to resolve host cloudify-launching

The authenticity of host '192.168.31.228 (192.168.31.228)' can't be established.

ECDSA key fingerprint is SHA256:ZMHvC2MrgNNqpRaO96AxTaVjdEMcwXcXY8eNwzrhoNA.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.31.228' (ECDSA) to the list of known hosts.

Last login: Tue Feb 6 22:05:03 2018 from 192.168.120.3

[centos@server-k8s-cluster-depoly1-kubernetes-master-host-7g4o4w ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

server-k8s-cluster-depoly1-kubernetes-master-host-7g4o4w Ready master 11m v1.8.6

server-k8s-cluster-depoly1-kubernetes-node-host-1r81t1 Ready <none> 10m v1.8.6

server-k8s-cluster-depoly1-kubernetes-node-host-js0gj6 Ready <none> 10m v1.8.6

server-k8s-cluster-depoly1-kubernetes-node-host-o73jcr Ready <none> 10m v1.8.6

server-k8s-cluster-depoly1-kubernetes-node-host-zhstql Ready <none> 10m v1.8.6

[centos@server-k8s-cluster-depoly1-kubernetes-master-host-7g4o4w ~]$ kubectl version

Client Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.6", GitCommit:"6260bb08c46c31eea6cb538b34a9ceb3e406689c", GitTreeState:"clean", BuildDate:"2017-12-21T06:34:11Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.7", GitCommit:"b30876a5539f09684ff9fde266fda10b37738c9c", GitTreeState:"clean", BuildDate:"2018-01-16T21:52:38Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

[centos@server-k8s-cluster-depoly1-kubernetes-master-host-7g4o4w ~]$ docker version

Client:

Version: 1.12.6

API version: 1.24

Package version: docker-1.12.6-71.git3e8e77d.el7.centos.1.x86_64

Go version: go1.8.3

Git commit: 3e8e77d/1.12.6

Built: Tue Jan 30 09:17:00 2018

OS/Arch: linux/amd64

Server:

Version: 1.12.6

API version: 1.24

Package version: docker-1.12.6-71.git3e8e77d.el7.centos.1.x86_64

Go version: go1.8.3

Git commit: 3e8e77d/1.12.6

Built: Tue Jan 30 09:17:00 2018

OS/Arch: linux/amd64

Prepare OOM environment

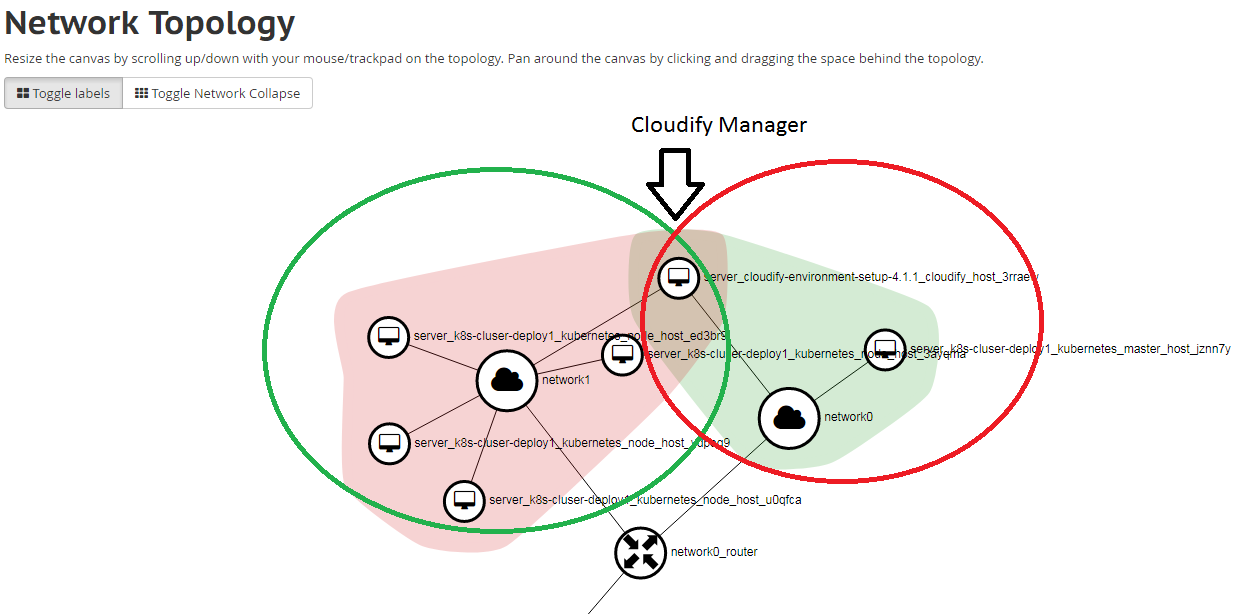

Let's look into this Cluster

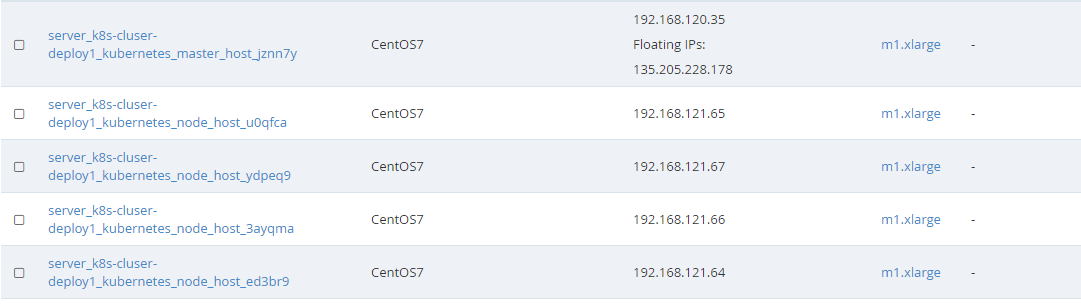

In this Kubernetes cluster, there is one master node on the public network (network0) and 4 work nodes on the private network.

- Install Helm on Kubernetes master

Log in to the Kubernetes master and run the following commands to install Helm

sudo yum install git wget -y

# install helm

wget http://storage.googleapis.com/kubernetes-helm/helm-v2.7.0-linux-amd64.tar.gz

tar -zxvf helm-v2.7.0-linux-amd64.tar.gz

sudo mv linux-amd64/helm /usr/bin/helm

RBAC is enabled in this cluster, so you need to create a service account for Tiller.

kubectl -n kube-system create sa tiller

kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller

helm init --service-account tiller

Result:

[centos@server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y ~]$ kubectl -n kube-system create sa tiller helm init --service-account tiller serviceaccount "tiller" created [centos@server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y ~]$ kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller clusterrolebinding "tiller" created [centos@server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y ~]$ helm init --service-account tiller Creating /home/centos/.helm Creating /home/centos/.helm/repository Creating /home/centos/.helm/repository/cache Creating /home/centos/.helm/repository/local Creating /home/centos/.helm/plugins Creating /home/centos/.helm/starters Creating /home/centos/.helm/cache/archive Creating /home/centos/.helm/repository/repositories.yaml Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com Adding local repo with URL: http://127.0.0.1:8879/charts $HELM_HOME has been configured at /home/centos/.helm. Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster. Happy Helming! [centos@server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y ~]$ helm version Client: &version.Version{SemVer:"v2.7.0", GitCommit:"08c1144f5eb3e3b636d9775617287cc26e53dba4", GitTreeState:"clean"} Server: &version.Version{SemVer:"v2.7.0", GitCommit:"08c1144f5eb3e3b636d9775617287cc26e53dba4", GitTreeState:"clean"}

2. Pull docker images on all cluster working nodes

No floating IP is attached to the working nodes. To access them, follow these steps.

a. Log in to the Cloudify launching VM and find cfy-manager-key-os. This is the ssh key for the Cloudify Manager.

ubuntu@ubuntu-cloudify411-env-set:~/.ssh$ pwd /home/ubuntu/.ssh ubuntu@ubuntu-cloudify411-env-set:~/.ssh$ ls authorized_keys cfy-agent-key-os cfy-agent-key-os.pub cfy-manager-key-os cfy-manager-key-os.pub known_hosts

b. ssh into the Cloudify Manager, because it sits on the same network as the work nodes and has a floating IP.

ubuntu@ubuntu-cloudify411-env-set:~/.ssh$ ssh -i cfy-manager-key-os centos@135.205.228.200 Last login: Tue Feb 6 15:37:32 2018 from 135.205.228.197 [centos@cloudify ~]$

c. Get the agent private key and save it to a file in your file system.

[centos@cloudify ~]$ cfy secrets get agent_key_private Getting info for secret `agent_key_private`... Requested secret info: private_resource: False created_by: admin key: agent_key_private tenant_name: default_tenant created_at: 2017-12-06 19:04:33.208 updated_at: 2017-12-06 19:04:33.208 value: -----BEGIN RSA PRIVATE KEY----- MIIEpAIBAAKCAQEAkzWvhUAuAQuwNVOwZYtb/qMG+FuOPcP2R/I/D96CQmFMC3O+ *************************************************************** hide my private key *************************************************************** sUyvHj1250wOWN0aO7PmVoaEH0WgjmD0tcZrxzEpoPtp8XtiCxtAaA== -----END RSA PRIVATE KEY----- [centos@cloudify ~]$ cd .ssh [centos@cloudify .ssh]$ nano agentkey #copy & paste the value into the agentkey file [centos@cloudify .ssh]$ ls agentkey authorized_keys key_pub known_hosts # agentkey shows in the file system, you can use this key to ssh the worknodes

d. ssh into the work nodes

[centos@cloudify .ssh]$ ssh -i agentkey centos@192.168.121.64 The authenticity of host '192.168.121.64 (192.168.121.64)' can't be established. ECDSA key fingerprint is b8:0d:01:5d:58:db:f3:d7:3d:ee:7b:dd:19:88:59:bf. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '192.168.121.64' (ECDSA) to the list of known hosts. Last login: Wed Feb 7 15:21:28 2018 [centos@server-k8s-cluser-deploy1-kubernetes-node-host-ed3br9 ~]$

e. Run the following commands to pull the docker images

sudo yum install git -y

git clone -b master http://gerrit.onap.org/r/oom

curl https://jira.onap.org/secure/attachment/10750/prepull_docker.sh > prepull_docker.sh

chmod 777 prepull_docker.sh

nohup ./prepull_docker.sh &

f. Repeat steps d and e for all work nodes.

Note: The steps above are for the first time you create the environment. If the ssh key is already set up on the Cloudify Manager VM, you only need to log in to the Cloudify Manager VM and run steps d and e for each working node.

The prepull_docker.sh script runs in parallel. It will take several hours to finish, depending on network speed.

To check whether the image pull has finished, run the following command on a working node.

docker images | wc -l

If the count is 80 or more, you are good to go.
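Note that `docker images | wc -l` also counts the header row, so the number it prints is one higher than the number of images. A minimal sketch of a stricter check follows; the helper names `count_images` and `images_ready` are illustrative, and the default threshold of 80 is simply the figure quoted above.

```shell
# Count image rows in `docker images` output read from stdin,
# skipping the header line and any blank lines.
count_images() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }'
}

# Succeed when the image count reaches the threshold (default: 80).
images_ready() {
  threshold="${1:-80}"
  [ "$(count_images)" -ge "$threshold" ]
}
```

On a working node this could be used as `docker images | images_ready && echo "pre-pull looks complete"`.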

Provision ONAP using Helm TOSCA blueprint

Download the OOM project to the kubernetes master and run config

git clone -b master https://gerrit.onap.org/r/p/oom.git

cd oom/kubernetes/config/

cp onap-parameters-sample.yaml onap-parameters.yaml

./createConfig.sh -n onap

Make sure the onap config pod completed

[centos@server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y config]$ kubectl get pod --all-namespaces -a NAMESPACE NAME READY STATUS RESTARTS AGE kube-system etcd-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 3h kube-system kube-apiserver-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 3h kube-system kube-controller-manager-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 3h kube-system kube-dns-545bc4bfd4-9gm6s 3/3 Running 0 3h kube-system kube-proxy-46jfc 1/1 Running 0 3h kube-system kube-proxy-cmslq 1/1 Running 0 3h kube-system kube-proxy-pglbl 1/1 Running 0 3h kube-system kube-proxy-sj6qj 1/1 Running 0 3h kube-system kube-proxy-zzkjh 1/1 Running 0 3h kube-system kube-scheduler-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 3h kube-system tiller-deploy-5458cb4cc-ck5km 1/1 Running 0 3h kube-system weave-net-7z6zr 2/2 Running 0 3h kube-system weave-net-bgs46 2/2 Running 0 3h kube-system weave-net-g78lp 2/2 Running 1 3h kube-system weave-net-h5ct5 2/2 Running 0 3h kube-system weave-net-ww89n 2/2 Running 1 3h onap config 0/1 Completed 0 1m
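Rather than scanning the full pod list by eye, the status of the config pod can be extracted from the same output. This is a small sketch, assuming the column layout shown above (NAMESPACE, NAME, READY, STATUS); `pod_status` is a hypothetical helper, not a kubectl subcommand.

```shell
# Print the STATUS column for one pod, given its namespace ($1) and name ($2),
# from `kubectl get pod --all-namespaces -a` output read on stdin.
pod_status() {
  awk -v ns="$1" -v pod="$2" '$1 == ns && $2 == pod { print $4 }'
}
```

For example, `kubectl get pod --all-namespaces -a | pod_status onap config` should print `Completed` once the config pod has finished.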

Note: OOM development is currently based on a Rancher environment, so some config settings do not suit this K8s cluster.

In [oom.git] / kubernetes / aai / values.yaml and [oom.git] / kubernetes / policy / values.yaml there is a hard-coded cluster IP:

aaiServiceClusterIp: 10.43.255.254

However, this cluster IP is not in the CIDR range for this cluster.

Change it to 10.100.255.254
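To see why one address is rejected and the other accepted, the membership test can be written out in a few lines of shell. This is only a sketch: the page does not state the cluster's service CIDR, so the 10.96.0.0/12 used in the usage example is an assumption (it is the kubeadm default), and `ip_to_int` and `ip_in_cidr` are illustrative helper names.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# Runs in a subshell so the IFS change does not leak to the caller.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if the address in $1 lies inside the CIDR block in $2.
ip_in_cidr() (
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")     # network part, before the slash
  bits=${2#*/}                   # prefix length, after the slash
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
)
```

Under that assumed range, `ip_in_cidr 10.43.255.254 10.96.0.0/12` fails while `ip_in_cidr 10.100.255.254 10.96.0.0/12` succeeds. Substitute the service CIDR your cluster was actually created with.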

In [oom.git] / kubernetes / sdnc / templates / nfs-provisoner-deployment.yaml

The nfs-provisioner creates a volume mount at /dockerdata-nfs/{{ .Values.nsPrefix }}/sdnc/data

volumes:

- name: export-volume

hostPath:

path: /dockerdata-nfs/{{ .Values.nsPrefix }}/sdnc/data

In this K8s cluster, /dockerdata-nfs is already mounted to sync the config data between working nodes.

That causes an error in the nfs-provisioner container.

Change the code as follows:

- path: /dockerdata-nfs/{{ .Values.nsPrefix }}/sdnc/data

+ path: /nfs-provisioner/{{ .Values.nsPrefix }}/sdnc/data

+ type: DirectoryOrCreate

- Upload the ONAP-helm TOSCA to Cloudify Manager GUI

Get the helm Blueprint

https://gerrit.onap.org/r/gitweb?p=oom.git;a=tree;f=TOSCA/Helm

Zip the Helm directory and upload it to the Cloudify Manager GUI

- Create deployment

Fill in the deployment name and the Kubernetes master floating IP.

- Execute Install workflow

Deployments → ONAP-tets → Execute workflow → Install

Results: kubectl get pod --all-namespaces

[centos@server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y oneclick]$ kubectl get pod --all-namespaces NAMESPACE NAME READY STATUS RESTARTS AGE kube-system etcd-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 5h kube-system kube-apiserver-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 5h kube-system kube-controller-manager-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 5h kube-system kube-dns-545bc4bfd4-9gm6s 3/3 Running 0 5h kube-system kube-proxy-46jfc 1/1 Running 0 5h kube-system kube-proxy-cmslq 1/1 Running 0 5h kube-system kube-proxy-pglbl 1/1 Running 0 5h kube-system kube-proxy-sj6qj 1/1 Running 0 5h kube-system kube-proxy-zzkjh 1/1 Running 0 5h kube-system kube-scheduler-server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y 1/1 Running 0 5h kube-system tiller-deploy-5458cb4cc-ck5km 1/1 Running 0 4h kube-system weave-net-7z6zr 2/2 Running 2 5h kube-system weave-net-bgs46 2/2 Running 2 5h kube-system weave-net-g78lp 2/2 Running 8 5h kube-system weave-net-h5ct5 2/2 Running 2 5h kube-system weave-net-ww89n 2/2 Running 3 5h onap-aaf aaf-c68d8877c-sl6nd 0/1 Running 0 42m onap-aaf aaf-cs-75464db899-jftzn 1/1 Running 0 42m onap-aai aai-resources-7bc8fd9cd9-wvtpv 2/2 Running 0 7m onap-aai aai-service-9d59ff9b-69pm6 1/1 Running 0 7m onap-aai aai-traversal-7dc64b448b-pn42g 2/2 Running 0 7m onap-aai data-router-78d9668bd8-wx6hb 1/1 Running 0 7m onap-aai elasticsearch-6c4cdf776f-xl4p2 1/1 Running 0 7m onap-aai hbase-794b5b644d-qpjtx 1/1 Running 0 7m onap-aai model-loader-service-77b87997fc-5dqpg 2/2 Running 0 7m onap-aai search-data-service-bcd6bb4b8-w4dck 2/2 Running 0 7m onap-aai sparky-be-69855bc4c4-k54cc 2/2 Running 0 7m onap-appc appc-775c4db7db-6w92c 2/2 Running 0 42m onap-appc appc-dbhost-7bd58565d9-jhjwd 1/1 Running 0 42m onap-appc appc-dgbuilder-5dc9cff85-bbg2p 1/1 Running 0 42m onap-clamp clamp-7cd5579f97-45j45 1/1 Running 0 42m onap-clamp clamp-mariadb-78c46967b8-xl2z2 1/1 Running 0 42m 
onap-cli cli-6885486887-gh4fc 1/1 Running 0 42m onap-consul consul-agent-5c744c8758-s5zqq 1/1 Running 5 42m onap-consul consul-server-687f6f6556-gh6t9 1/1 Running 1 42m onap-consul consul-server-687f6f6556-mztgf 1/1 Running 0 42m onap-consul consul-server-687f6f6556-n86vh 1/1 Running 0 42m onap-kube2msb kube2msb-registrator-58957dc65-75rlq 1/1 Running 2 42m onap-log elasticsearch-7689bd6fbd-lk87q 1/1 Running 0 42m onap-log kibana-5dfc4d4f86-4z7rv 1/1 Running 0 42m onap-log logstash-c65f668f5-cj64f 1/1 Running 0 42m onap-message-router dmaap-84bd655dd6-jnq4x 1/1 Running 0 43m onap-message-router global-kafka-54d5cf7b5b-g6rkx 1/1 Running 0 43m onap-message-router zookeeper-7df6479654-xz2jw 1/1 Running 0 43m onap-msb msb-consul-6c79b86c79-b9q8p 1/1 Running 0 42m onap-msb msb-discovery-845db56dc5-zthwm 1/1 Running 0 42m onap-msb msb-eag-65bd96b98-84vxz 1/1 Running 0 42m onap-msb msb-iag-7bb5b74cd9-6nf96 1/1 Running 0 42m onap-mso mariadb-6487b74997-vkrrt 1/1 Running 0 42m onap-mso mso-6d6f86958b-r9c8q 2/2 Running 0 42m onap-multicloud framework-77f46548ff-dkkn8 1/1 Running 0 42m onap-multicloud multicloud-ocata-766474f955-7b8j2 1/1 Running 0 42m onap-multicloud multicloud-vio-64598b4c84-pmc9z 1/1 Running 0 42m onap-multicloud multicloud-windriver-68765f8898-7cgfw 1/1 Running 2 42m onap-policy brmsgw-785897ff54-kzzhq 1/1 Running 0 42m onap-policy drools-7889c5d865-f4mlb 2/2 Running 0 42m onap-policy mariadb-7c66956bf-z8rsk 1/1 Running 0 42m onap-policy nexus-77487c4c5d-gwbfg 1/1 Running 0 42m onap-policy pap-796d8d946f-thqn6 2/2 Running 0 42m onap-policy pdp-8775c6cc8-zkbll 2/2 Running 0 42m onap-portal portalapps-dd4f99c9b-b4q6b 2/2 Running 0 43m onap-portal portaldb-7f8547d599-wwhzj 1/1 Running 0 43m onap-portal portalwidgets-6f884fd4b4-pxkx9 1/1 Running 0 43m onap-portal vnc-portal-687cdf7845-4s7qm 1/1 Running 0 43m onap-robot robot-7747c7c475-r5lg4 1/1 Running 0 42m onap-sdc sdc-be-7f6bb5884f-5hns2 2/2 Running 0 42m onap-sdc sdc-cs-7bd5fb9dbc-xg8dg 1/1 Running 0 42m 
onap-sdc sdc-es-69f77b4778-jkxvl 1/1 Running 0 42m onap-sdc sdc-fe-7c7b64c6c-djr5p 2/2 Running 0 42m onap-sdc sdc-kb-c4dc46d47-6jdsv 1/1 Running 0 42m onap-sdnc dmaap-listener-78f4cdb695-k4ffk 1/1 Running 0 43m onap-sdnc nfs-provisioner-845ccc8495-sl5dl 1/1 Running 0 43m onap-sdnc sdnc-0 2/2 Running 0 43m onap-sdnc sdnc-dbhost-0 2/2 Running 0 43m onap-sdnc sdnc-dgbuilder-8667587c65-s4jss 1/1 Running 0 43m onap-sdnc sdnc-portal-6587c6dbdf-qjqwf 1/1 Running 0 43m onap-sdnc ueb-listener-65d67d8557-87mjc 1/1 Running 0 43m onap-uui uui-578cd988b6-n5g98 1/1 Running 0 42m onap-uui uui-server-576998685c-cwcbw 1/1 Running 0 42m onap-vfc vfc-catalog-6ff7b74b68-z6ckl 1/1 Running 0 42m onap-vfc vfc-emsdriver-7845c8f9f-frv5w 1/1 Running 0 42m onap-vfc vfc-gvnfmdriver-56cf469b46-qmtxs 1/1 Running 0 42m onap-vfc vfc-hwvnfmdriver-588d5b679f-tb7bh 1/1 Running 0 42m onap-vfc vfc-jujudriver-6db77bfdd5-hwnnw 1/1 Running 0 42m onap-vfc vfc-nokiavnfmdriver-6c78675f8d-6sw9m 1/1 Running 0 42m onap-vfc vfc-nslcm-796b678d-9fc5s 1/1 Running 0 42m onap-vfc vfc-resmgr-74f858b688-p5ft5 1/1 Running 0 42m onap-vfc vfc-vnflcm-5849759444-bg288 1/1 Running 0 42m onap-vfc vfc-vnfmgr-77df547c78-22t7v 1/1 Running 0 42m onap-vfc vfc-vnfres-5bddd7fc68-xkg8b 1/1 Running 0 42m onap-vfc vfc-workflow-5849854569-k8ltg 1/1 Running 0 42m onap-vfc vfc-workflowengineactiviti-699f669db9-2snjj 1/1 Running 0 42m onap-vfc vfc-ztesdncdriver-5dcf694c4-sg2q4 1/1 Running 0 42m onap-vfc vfc-ztevnfmdriver-585d8db4f7-x6kp6 0/1 ErrImagePull 0 42m onap-vid vid-mariadb-6788c598fb-2d4j9 1/1 Running 0 43m onap-vid vid-server-795bdcb4c8-8pkn2 2/2 Running 0 43m onap-vnfsdk postgres-5679d856cf-n6l94 1/1 Running 0 42m onap-vnfsdk refrepo-594969bf89-kzr5r 1/1 Running 0 42m
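With this many pods, the ones that need attention are easy to miss. The sketch below filters the listing down to pods that are not fully ready; it assumes the same column layout as the output above, and `unready_pods` is a hypothetical helper name.

```shell
# From `kubectl get pod --all-namespaces` output on stdin, print pods whose
# READY count is short of the total or whose STATUS is not Running.
# Pods in Completed state (such as the config pod) are ignored.
unready_pods() {
  awk 'NR > 1 && NF >= 4 {
    if ($4 == "Completed") next
    split($3, r, "/")            # READY column, e.g. "0/1"
    if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2 " " $3 " " $4
  }'
}
```

Run against the listing above (`kubectl get pod --all-namespaces | unready_pods`), it would report the onap-aaf aaf pod (0/1 Running) and the onap-vfc vfc-ztevnfmdriver pod (0/1 ErrImagePull).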

./ete-k8s.sh health

[centos@server-k8s-cluser-deploy1-kubernetes-master-host-jznn7y robot]$ ./ete-k8s.sh health Starting Xvfb on display :88 with res 1280x1024x24 Executing robot tests at log level TRACE ============================================================================== OpenECOMP ETE ============================================================================== OpenECOMP ETE.Robot ============================================================================== OpenECOMP ETE.Robot.Testsuites ============================================================================== OpenECOMP ETE.Robot.Testsuites.Health-Check :: Testing ecomp components are... ============================================================================== Basic DCAE Health Check [ WARN ] Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f5841c12850>: Failed to establish a new connection: [Errno -2] Name or service not known',)': /healthcheck [ WARN ] Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f5841d18410>: Failed to establish a new connection: [Errno -2] Name or service not known',)': /healthcheck [ WARN ] Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f583fed7810>: Failed to establish a new connection: [Errno -2] Name or service not known',)': /healthcheck | FAIL | ConnectionError: HTTPConnectionPool(host='dcae-controller.onap-dcae', port=8080): Max retries exceeded with url: /healthcheck (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f583fed7c10>: Failed to establish a new connection: [Errno -2] Name or service not known',)) 
------------------------------------------------------------------------------ Basic SDNGC Health Check | PASS | ------------------------------------------------------------------------------ Basic A&AI Health Check | PASS | ------------------------------------------------------------------------------ Basic Policy Health Check | FAIL | 'False' should be true. ------------------------------------------------------------------------------ Basic MSO Health Check | PASS | ------------------------------------------------------------------------------ Basic ASDC Health Check | PASS | ------------------------------------------------------------------------------ Basic APPC Health Check | PASS | ------------------------------------------------------------------------------ Basic Portal Health Check | PASS | ------------------------------------------------------------------------------ Basic Message Router Health Check | PASS | ------------------------------------------------------------------------------ Basic VID Health Check | PASS | ------------------------------------------------------------------------------ Basic Microservice Bus Health Check | PASS | ------------------------------------------------------------------------------ Basic CLAMP Health Check | PASS | ------------------------------------------------------------------------------ catalog API Health Check | PASS | ------------------------------------------------------------------------------ emsdriver API Health Check | PASS | ------------------------------------------------------------------------------ gvnfmdriver API Health Check | PASS | ------------------------------------------------------------------------------ huaweivnfmdriver API Health Check | PASS | ------------------------------------------------------------------------------ multicloud API Health Check | PASS | ------------------------------------------------------------------------------ multicloud-ocata API Health Check | PASS | 
------------------------------------------------------------------------------ multicloud-titanium_cloud API Health Check | PASS | ------------------------------------------------------------------------------ multicloud-vio API Health Check | PASS | ------------------------------------------------------------------------------ nokiavnfmdriver API Health Check | PASS | ------------------------------------------------------------------------------ nslcm API Health Check | PASS | ------------------------------------------------------------------------------ resmgr API Health Check | PASS | ------------------------------------------------------------------------------ usecaseui-gui API Health Check | FAIL | 502 != 200 ------------------------------------------------------------------------------ vnflcm API Health Check | PASS | ------------------------------------------------------------------------------ vnfmgr API Health Check | PASS | ------------------------------------------------------------------------------ vnfres API Health Check | PASS | ------------------------------------------------------------------------------ workflow API Health Check | FAIL | 404 != 200 ------------------------------------------------------------------------------ ztesdncdriver API Health Check | PASS | ------------------------------------------------------------------------------ ztevmanagerdriver API Health Check | FAIL | 502 != 200 ------------------------------------------------------------------------------ OpenECOMP ETE.Robot.Testsuites.Health-Check :: Testing ecomp compo... 
| FAIL | 30 critical tests, 25 passed, 5 failed 30 tests total, 25 passed, 5 failed ============================================================================== OpenECOMP ETE.Robot.Testsuites | FAIL | 30 critical tests, 25 passed, 5 failed 30 tests total, 25 passed, 5 failed ============================================================================== OpenECOMP ETE.Robot | FAIL | 30 critical tests, 25 passed, 5 failed 30 tests total, 25 passed, 5 failed ============================================================================== OpenECOMP ETE | FAIL | 30 critical tests, 25 passed, 5 failed 30 tests total, 25 passed, 5 failed ============================================================================== Output: /share/logs/ETE_14594/output.xml Log: /share/logs/ETE_14594/log.html Report: /share/logs/ETE_14594/report.html command terminated with exit code 5

We got a similar result to the OOM Jenkins job, except for policy: http://jenkins.onap.info/job/oom-cd/1728/console

A JIRA ticket has already been created: OOM-667

Single VIM: Amazon AWS EC2

Following https://github.com/cloudify-examples/cloudify-environment-setup

Navigate to your AWS console https://signin.aws.amazon.com/oauth

Create a 64G VM on EBS, using Ubuntu 16.04 for now and the cost-effective spot price for R4.2xLarge. This is enough to deploy all of ONAP in 55G plus the undercloud, the same as the OOM RI.

Install Cloudify CLI

Bootstrap (not the pre-baked AMI). I am doing this as root (to avoid any permissions issues) and on Ubuntu 16.04 (our current recommended OS for Rancher).

https://github.com/cloudify-examples/cloudify-environment-setup

"Install Cloudify CLI. Make sure that your CLI is using a local profile. (You must have executed cfy profiles use local in your shell.)"

links to http://docs.getcloudify.org/4.1.0/installation/from-packages/

switch to community tab

click DEB - verify you are human - fill out your name, email and company - get cloudify-cli-community-17.12.28.deb

scp the file up to your vm

obrienbiometrics:_deployment michaelobrien$ scp ~/Downloads/cloudify-cli-community-17.12.28.deb ubuntu@cloudify.onap.info:~/ cloudify-cli-community-17.12.28.deb 39% 17MB 2.6MB/s 00:09 ETA obrienbiometrics:_deployment michaelobrien$ ssh ubuntu@cloudify.onap.info ubuntu@ip-172-31-19-14:~$ sudo su - root@ip-172-31-19-14:~# cp /home/ubuntu/cloudify-cli-community-17.12.28.deb . root@ip-172-31-19-14:~# sudo dpkg -i cloudify-cli-community-17.12.28.deb Selecting previously unselected package cloudify. (Reading database ... 51107 files and directories currently installed.) Preparing to unpack cloudify-cli-community-17.12.28.deb ... You're about to install Cloudify! Unpacking cloudify (17.12.28~community-1) ... Setting up cloudify (17.12.28~community-1) ... Thank you for installing Cloudify!

Configure the CLI

root@ip-172-31-19-14:~# cfy profiles use local
Initializing local profile ...
Initialization completed successfully
Using local environment...
Initializing local profile ...
Initialization completed successfully

Download the archive

wget https://github.com/cloudify-examples/cloudify-environment-setup/archive/latest.zip
root@ip-172-31-19-14:~# apt install unzip
root@ip-172-31-19-14:~# unzip latest.zip
   creating: cloudify-environment-setup-latest/
  inflating: cloudify-environment-setup-latest/README.md
  inflating: cloudify-environment-setup-latest/aws-blueprint.yaml
  inflating: cloudify-environment-setup-latest/azure-blueprint.yaml
  inflating: cloudify-environment-setup-latest/circle.yml
  inflating: cloudify-environment-setup-latest/gcp-blueprint.yaml
   creating: cloudify-environment-setup-latest/imports/
  inflating: cloudify-environment-setup-latest/imports/manager-configuration.yaml
   creating: cloudify-environment-setup-latest/inputs/
  inflating: cloudify-environment-setup-latest/inputs/aws.yaml
  inflating: cloudify-environment-setup-latest/inputs/azure.yaml
  inflating: cloudify-environment-setup-latest/inputs/gcp.yaml
  inflating: cloudify-environment-setup-latest/inputs/openstack.yaml
  inflating: cloudify-environment-setup-latest/openstack-blueprint.yaml
   creating: cloudify-environment-setup-latest/scripts/
   creating: cloudify-environment-setup-latest/scripts/manager/
  inflating: cloudify-environment-setup-latest/scripts/manager/configure.py
  inflating: cloudify-environment-setup-latest/scripts/manager/create.py
  inflating: cloudify-environment-setup-latest/scripts/manager/delete.py
  inflating: cloudify-environment-setup-latest/scripts/manager/start.py

Configure the archive with your AWS credentials

- vpc_id: This is the ID of the vpc. The same vpc that your manager is attached to.

- private_subnet_id: This is the ID of a subnet that does not have inbound internet access on the vpc. Outbound internet access is required to download the requirements. It must be on the same vpc designated by VPC_ID.

- public_subnet_id: This is the ID of a subnet that does have internet access (inbound and outbound). It must be on the same vpc designated by VPC_ID.

- availability_zone: The availability zone that you want your instances created in. This must be the same as your public_subnet_id and private_subnet_id.

- ec2_region_endpoint: The AWS region endpoint, such as ec2.us-east-1.amazonaws.com.

- ec2_region_name: The AWS region name, such as us-east-1.

- aws_secret_access_key: Your AWS Secret Access Key. See here for more info. This may not be provided as an environment variable. The string must be set as a secret.

- aws_access_key_id: Your AWS Access Key ID. See here for more info. This may not be provided as an environment variable. The string must be set as a secret.
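Since a missing value in this list only surfaces once the install workflow is already running, a quick pre-flight check can save a retry. The following is a minimal sketch, assuming the values are supplied as flat `key: value` lines in `inputs/aws.yaml` (the source does not show the file's contents); `check_aws_inputs` is a hypothetical helper and not part of the Cloudify CLI.

```shell
#!/bin/sh
# Sketch: report which of the inputs listed above are missing or empty in a
# flat "key: value" YAML inputs file (one input per line, no nesting).
# check_aws_inputs is a hypothetical helper, not a Cloudify command.
check_aws_inputs() {
  inputs_file=$1
  missing=0
  for key in vpc_id private_subnet_id public_subnet_id availability_zone \
             ec2_region_endpoint ec2_region_name \
             aws_secret_access_key aws_access_key_id; do
    # Take the text after "key:", then drop quotes and whitespace so that
    # '' and "" count as empty.
    value=$(sed -n "s/^${key}:[[:space:]]*//p" "$inputs_file" \
              | head -n 1 | tr -d "\"'[:space:]")
    case "$value" in
      ''|'#'*)
        # Nothing left, or only a trailing comment: treat as unset.
        echo "missing or empty: ${key}"
        missing=1
        ;;
    esac
  done
  return "$missing"
}
```

A possible use is to run `check_aws_inputs cloudify-environment-setup-latest/inputs/aws.yaml` before the install step and only continue when it prints nothing and returns 0.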

Install the archive

# I am on AWS EC2
root@ip-172-31-19-14:~# cfy install cloudify-environment-setup-latest/aws-blueprint.yaml -i cloudify-environment-setup-latest/inputs/aws.yaml --install-plugins --task-retries=30 --task-retry-interval=5
Initializing local profile ...
Initialization completed successfully
Initializing blueprint...
#30 sec
Collecting https://github.com/cloudify-incubator/cloudify-utilities-plugin/archive/1.4.2.1.zip (from -r /tmp/requirements_whmckn.txt (line 1))
2018-01-13 15:28:40.563 CFY <cloudify-environment-setup-latest> [cloudify_manager_ami_i29qun.create] Task started 'cloudify_awssdk.ec2.resources.image.prepare'
2018-01-13 15:28:40.639 CFY <cloudify-environment-setup-latest> [vpc_w1tgjn.create] Task failed 'cloudify_aws.vpc.vpc.create_vpc' -> EC2ResponseError: 401 Unauthorized
<?xml version="1.0" encoding="UTF-8"?>
<Response><Errors><Error><Code>AuthFailure</Code><Message>AWS was not able to validate the provided access credentials</Message></Error></Errors><RequestID>d8e7ff46-81ec-4a8a-8451-13feef29737e</RequestID></Response>
2018-01-13 15:28:40.643 CFY <cloudify-environment-setup-latest> 'install' workflow execution failed: Workflow failed: Task failed 'cloudify_aws.vpc.vpc.create_vpc' -> EC2ResponseError: 401 Unauthorized
<?xml version="1.0" encoding="UTF-8"?>
<Response><Errors><Error><Code>AuthFailure</Code><Message>AWS was not able to validate the provided access credentials</Message></Error></Errors><RequestID>d8e7ff46-81ec-4a8a-8451-13feef29737e</RequestID></Response>
Workflow failed: Task failed 'cloudify_aws.vpc.vpc.create_vpc' -> EC2ResponseError: 401 Unauthorized
<?xml version="1.0" encoding="UTF-8"?>
<Response><Errors><Error><Code>AuthFailure</Code><Message>AWS was not able to validate the provided access credentials</Message></Error></Errors><RequestID>d8e7ff46-81ec-4a8a-8451-13feef29737e</RequestID></Response>

The AuthFailure above occurred because I forgot to add my AWS auth tokens - editing the inputs and rerunning.
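When the install output is long, it helps to pull out just the failed task names and check for the AuthFailure code before rerunning. The following is a minimal sketch based only on the log format shown in the failed run above; `cfy_failed_tasks` and `cfy_has_auth_failure` are hypothetical helpers, not Cloudify commands, and other Cloudify versions may format these lines differently.

```shell
#!/bin/sh
# Sketch: summarize failures from saved "cfy install" output read on stdin.
# Both helpers are hypothetical and rely on the log format shown above.

# Print each distinct task name that appears in a "Task failed '...'" entry.
cfy_failed_tasks() {
  grep -oE "Task failed '[^']+'" \
    | sed -E "s/^Task failed '(.*)'$/\1/" \
    | sort -u
}

# Return 0 when the output contains the AWS AuthFailure error code, which
# in the run above meant the credentials had not been supplied.
cfy_has_auth_failure() {
  grep -q '<Code>AuthFailure</Code>'
}
```

For example, after saving the install output to a file (the name `install.log` is an assumption), `cfy_failed_tasks < install.log` lists the failing operations and `cfy_has_auth_failure < install.log` indicates whether the credentials should be checked first.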

Multi VIM: Amazon AWS EC2 + Microsoft Azure VM

Investigation

Starting with the AWS EC2 example TOSCA blueprint at https://github.com/cloudify-cosmo/cloudify-hello-world-example/blob/master/ec2-blueprint.yaml and the intro page at http://docs.getcloudify.org/4.2.0/intro/what-is-cloudify/

Git/Gerrit/JIRA Artifacts

OOM-569 as part of bringing DCAE into K8S OOM-565

OOM-46

OOM-105

OOM-106

OOM-450

OOM-63

INT-371

Github Artifacts

https://github.com/cloudify-cosmo/cloudify-hello-world-example/issues/58

https://github.com/cloudify-cosmo/cloudify-hello-world-example/issues/59

Notes

Download Cloudify - select Community tab on the right

17.12 community looks to align with 4.2 http://cloudify.co/releasenotes/community-edition-release-notes

ARIA is the reference implementation (RI) for TOSCA http://ariatosca.org/project-aria-announcement/

https://github.com/opnfv/models/tree/master/tests/blueprints

discuss

- OASIS TOSCA Simple YAML Profile v1.2

- OASIS TOSCA NFV Profile (WD5)