Warning: Draft Content

This wiki page is under construction: content here may be incomplete or missing.

TODO: determine/fix containers not ready, get DCAE yamls working, fix health tracking issues for healing

Overview

The OOM (ONAP Operations Manager) project has pushed Kubernetes-based deployment code to the oom repository. This page details getting ONAP running (specifically the vFirewall demo) on Kubernetes in various virtual and native environments.

Kubernetes Setup

We need a Kubernetes installation, either a base installation or one with a thin API wrapper like Rancher or Redhat. There are several options; Rancher, as a thin wrapper on Kubernetes, is the current focus. For other installation options, please refer to Deprecated Kubernetes Installation Options.

Install only the 1.12.x (currently 1.12.6) version of Docker (the only version that works with Kubernetes in Rancher 1.6).

Install Rancher.

Verify that your Rancher admin console is up on the external port you configured (8880 here).

Wait for the docker container to finish DB startup

In the Rancher UI (http://127.0.0.1:8880), set the IP or name of the master node in the config, create a new onap environment of type Kubernetes (this will set up the kube containers), and stop the default environment.

To add hosts to the environment, install Docker 1.12 on each machine if it is not already installed, then click the "Add Host" button in the Rancher GUI. It will show the command to run on the machine to add it as a host.

Adding hosts to the Kubernetes environment kicks off the k8s containers on them.
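The wait for the Rancher console described above can be scripted rather than checked by hand. The following is a minimal sketch, not something from the oom repository: `wait_for_console` is a hypothetical helper name, the retry count and delay are arbitrary defaults, and it assumes the console answers unauthenticated HTTP requests once it is up.

```shell
#!/bin/sh
# Hypothetical helper (not from the oom repo): poll a URL until it responds.
# Usage: wait_for_console URL [MAX_TRIES] [DELAY_SECONDS]
wait_for_console() {
  url=$1
  max_tries=${2:-30}
  delay=${3:-10}
  try=1
  while [ "$try" -le "$max_tries" ]; do
    # -s: quiet, -f: treat HTTP errors (>= 400) as failure, -o: discard body
    if curl -sf -o /dev/null "$url"; then
      echo "console is up after $try attempt(s)"
      return 0
    fi
    try=$((try + 1))
    sleep "$delay"
  done
  echo "console did not respond after $max_tries attempts" >&2
  return 1
}

# Example, using the port from this page:
# wait_for_console http://127.0.0.1:8880 30 10
```

A successful HTTP response only shows that the console is serving requests; whether the environment and hosts are healthy still has to be checked in the Rancher UI.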

Setup Kubectl

    # install kubectl
    curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl
    chmod +x ./kubectl
    sudo mv ./kubectl /usr/local/bin/kubectl
    # paste the kubectl config from the Rancher GUI into ~/.kube/config
    mkdir ~/.kube

Monitor Container Deployment

First, verify that your Kubernetes system is up.
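One way to make this check concrete is to look at the STATUS column of `kubectl get nodes`. The sketch below is an illustration only: `not_ready_nodes` and `cluster_is_up` are hypothetical helper names, and it assumes the node status is the second column of the output, as in the Kubernetes versions used on this page.

```shell
#!/bin/sh
# Hypothetical helper: print the names of nodes whose STATUS is not Ready.
# Reads `kubectl get nodes --no-headers` output on stdin.
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Succeeds only when at least one node exists and every node reports Ready.
cluster_is_up() {
  nodes=$(kubectl get nodes --no-headers) || return 1
  [ -n "$nodes" ] || return 1
  bad=$(printf '%s\n' "$nodes" | not_ready_nodes)
  if [ -n "$bad" ]; then
    echo "nodes not ready: $bad" >&2
    return 1
  fi
  echo "all nodes ready"
}

# Example:
# cluster_is_up && kubectl cluster-info
```

A node listed as `Ready,SchedulingDisabled` still counts as ready here, because the check only requires the status to start with `Ready`.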

Troubleshooting

Rancher fails to restart on server reboot

Having issues after a reboot of a colocated server/agent

ONAP Installation

ONAP deployment in kubernetes is modeled in the oom project as a 1:1 set of service:pod sets (1 pod per docker container). The fastest way to get ONAP Kubernetes up is via Rancher.

The primary platform is virtual Ubuntu 16.04 VMs on VMWare Workstation 12.5, on up to two separate 64 GB, 6-core 5820K Windows 10 systems.

The secondary platform is four bare-metal NUCs (i7/i5/i3 with 16G each).

Cloning details

Install the latest version of the OOM (ONAP Operations Manager) project repo - specifically the ONAP on Kubernetes work just uploaded June 2017

https://gerrit.onap.org/r/gitweb?p=oom.git

    git clone ssh://yourgerrituserid@gerrit.onap.org:29418/oom
    cd oom/kubernetes/oneclick

Versions

oom: master (1.1.0-SNAPSHOT)

onap deployments: 1.0.0

Nexus3 security settings

Fix nexus3 security for each namespace

In createAll.bash, add the following two lines (marked with +) to the create_namespace function, right after the namespace is created. They create a secret and attach it to the namespace (thanks to Jason Hunt of IBM for helping us attach it last Friday, when we were all getting our pods to come up). A better fix for the future will be to pass these values in as parameters from a prod/stage/dev ecosystem config.

    create_namespace() {
      kubectl create namespace $1-$2
    + kubectl --namespace $1-$2 create secret docker-registry regsecret --docker-server=nexus3.onap.org:10001 --docker-username=docker --docker-password=docker --docker-email=email@email.com
    + kubectl --namespace $1-$2 patch serviceaccount default -p '{"imagePullSecrets": [{"name": "regsecret"}]}'
    }
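The "pass these in as parameters" idea could look roughly like the sketch below. It is not the oom implementation: the `REGISTRY_*` variable names are assumptions made up for this example, and the defaults simply mirror the hardcoded values shown above.

```shell
#!/bin/sh
# Sketch only: registry settings come from the environment instead of being
# hardcoded. The REGISTRY_* names are assumptions, not oom settings.
: "${REGISTRY_SERVER:=nexus3.onap.org:10001}"
: "${REGISTRY_USER:=docker}"
: "${REGISTRY_PASSWORD:=docker}"
: "${REGISTRY_EMAIL:=email@email.com}"

create_namespace() {
  ns="$1-$2"
  kubectl create namespace "$ns" || return 1
  # Create the pull secret in the new namespace...
  kubectl --namespace "$ns" create secret docker-registry regsecret \
    --docker-server="$REGISTRY_SERVER" \
    --docker-username="$REGISTRY_USER" \
    --docker-password="$REGISTRY_PASSWORD" \
    --docker-email="$REGISTRY_EMAIL" || return 1
  # ...and attach it to the default service account so pods can pull images.
  kubectl --namespace "$ns" patch serviceaccount default \
    -p '{"imagePullSecrets": [{"name": "regsecret"}]}'
}
```

With this shape, a prod, stage or dev setup would only need to export different `REGISTRY_*` values before the script runs; the function body stays the same.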

Instantiate the container

Wait until all the hosts show green in rancher, then run the script that wraps all the kubectl commands

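    cd oom/kubernetes/oneclick
    # apply the nexus3 secret edit described above
    vi createAll.bash
    # run the script (same invocation as in the CoreOS section below)
    ./createAll.bash -n onap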

Wait until the containers are all up - you should see...

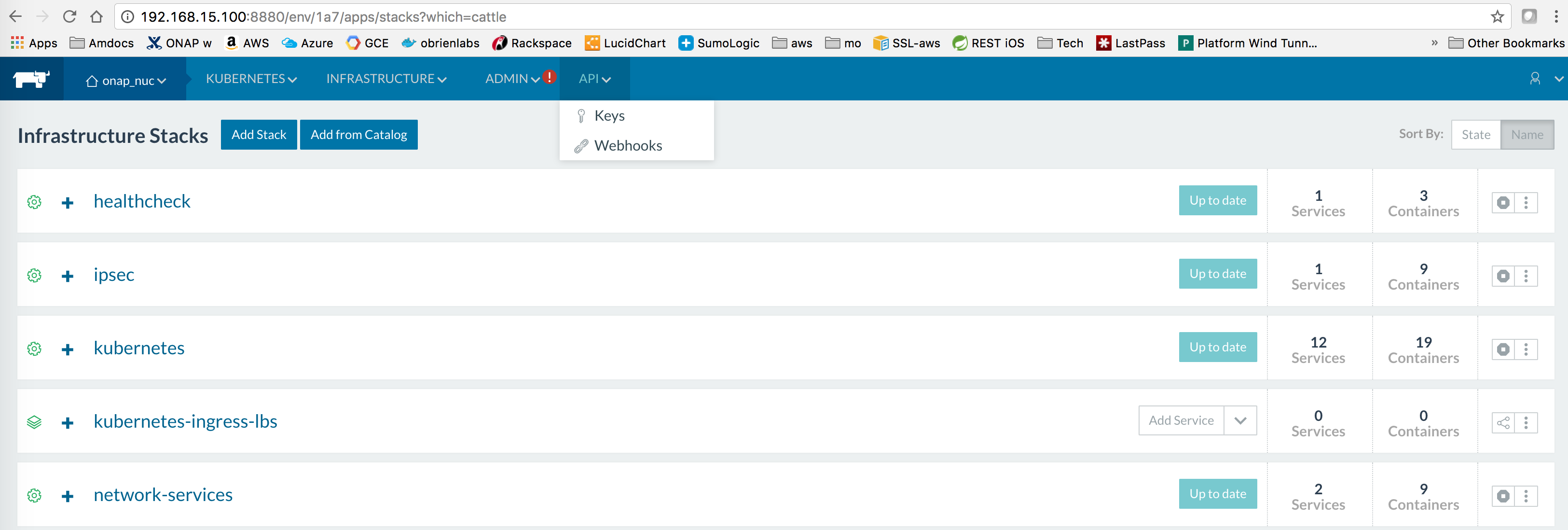

Four host Kubernetes cluster in Rancher

In this case 4 Intel NUCs running Ubuntu 16.04.2 natively

Target Deployment State

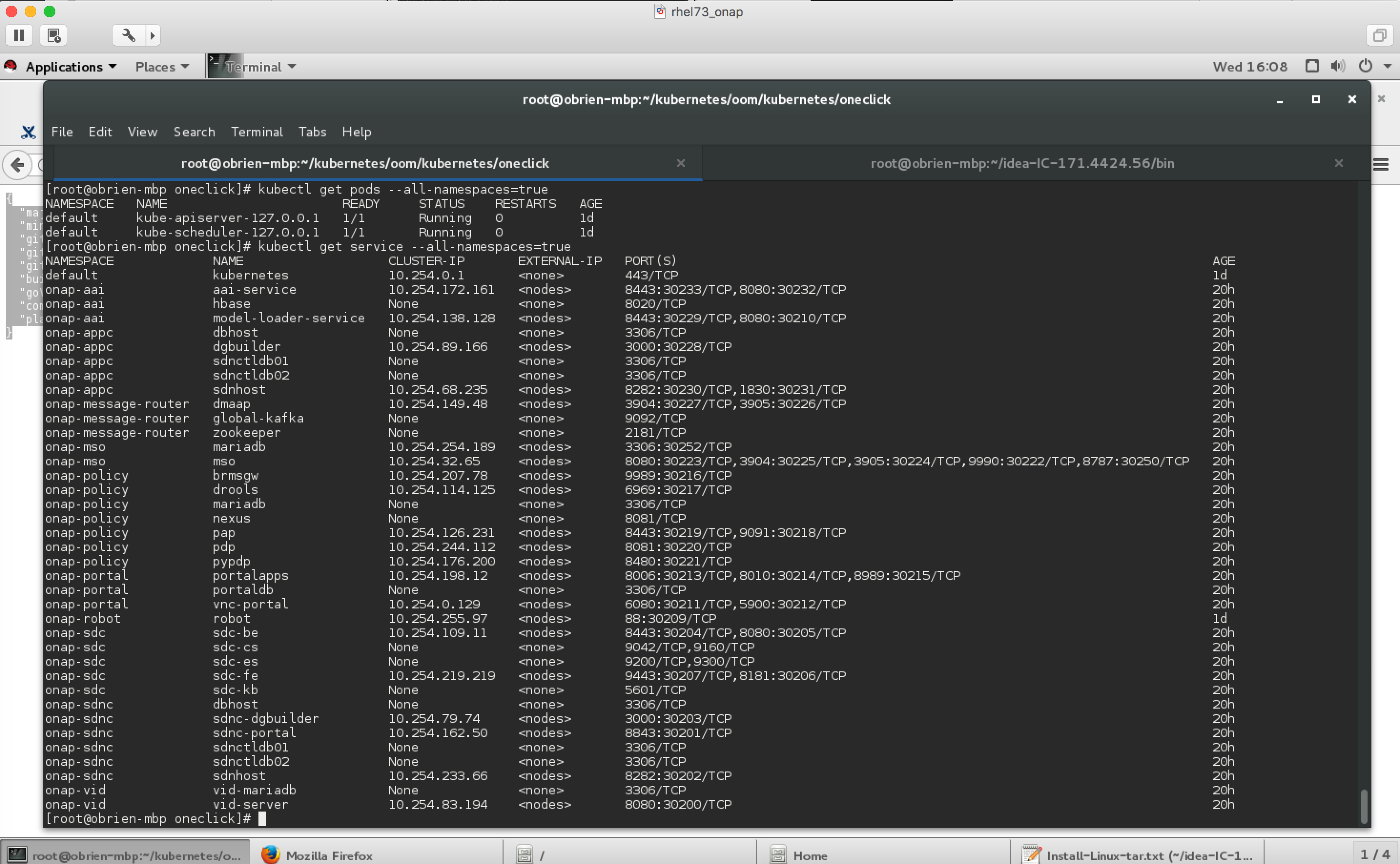

root@obriensystemsucont0:~/onap/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide

The colored containers have issues getting to the running state.

| NAMESPACE (master:20170705) | NAME | READY | STATUS | RESTARTS (in 14h) | Host | Notes |
|---|---|---|---|---|---|---|
| onap-aai | aai-service-346921785-624ss | 1/1 | Running | 0 | 1 | |
| onap-aai | hbase-139474849-7fg0s | 1/1 | Running | 0 | 2 | |
| onap-aai | model-loader-service-1795708961-wg19w | 0/1 | Init:1/2 | 82 | 2 | |
| onap-appc | appc-2044062043-bx6tc | 1/1 | Running | 0 | 1 | |
| onap-appc | appc-dbhost-2039492951-jslts | 1/1 | Running | 0 | 2 | |
| onap-appc | appc-dgbuilder-2934720673-mcp7c | 1/1 | Running | 0 | 2 | |
| onap-dcae | not yet pushed | | | | | Note: currently there are no DCAE containers running yet (we are missing 6 yaml files: 1 for the controller and 5 for the collector, staging and 3 cdap pods). Therefore DMaaP, VES collectors and APPC actions as the result of policy actions (closed loop) will not function yet. |
| onap-dcae-cdap | not yet pushed | | | | | |
| onap-dcae-stg | not yet pushed | | | | | |
| onap-dcae-coll | not yet pushed | | | | | |
| onap-message-router | dmaap-3842712241-gtdkp | 0/1 | CrashLoopBackOff | 164 | 1 | |
| onap-message-router | global-kafka-89365896-5fnq9 | 1/1 | Running | 0 | 2 | |
| onap-message-router | zookeeper-1406540368-jdscq | 1/1 | Running | 0 | 1 | |
| onap-mso | mariadb-2638235337-758zr | 1/1 | Running | 0 | 1 | |
| onap-mso | mso-3192832250-fq6pn | 0/1 | CrashLoopBackOff | 167 | 2 | |
| onap-policy | brmsgw-568914601-d5z71 | 0/1 | Init:0/1 | 82 | 1 | |
| onap-policy | drools-1450928085-099m2 | 0/1 | Init:0/1 | 82 | 1 | |
| onap-policy | mariadb-2932363958-0l05g | 1/1 | Running | 0 | 0 | |
| onap-policy | nexus-871440171-tqq4z | 0/1 | Running | 0 | 2 | |
| onap-policy | pap-2218784661-xlj0n | 1/1 | Running | 0 | 1 | |
| onap-policy | pdp-1677094700-75wpj | 0/1 | Init:0/1 | 82 | 2 | |
| onap-policy | pypdp-3209460526-bwm6b | 0/1 | Init:0/1 | 82 | 2 | |
| onap-portal | portalapps-1708810953-trz47 | 0/1 | Init:CrashLoopBackOff | 163 | 2 | Initial dockerhub mariadb download issue - fixed |
| onap-portal | portaldb-3652211058-vsg8r | 1/1 | Running | 0 | 0 | |
| onap-portal | vnc-portal-948446550-76kj7 | 0/1 | Init:0/5 | 82 | 1 | |
| onap-robot | robot-964706867-czr05 | 1/1 | Running | 0 | 2 | |
| onap-sdc | sdc-be-2426613560-jv8sk | 0/1 | Init:0/2 | 82 | 2 | |
| onap-sdc | sdc-cs-2080334320-95dq8 | 0/1 | CrashLoopBackOff | 163 | 2 | |
| onap-sdc | sdc-es-3272676451-skf7z | 1/1 | Running | 0 | 1 | |
| onap-sdc | sdc-fe-931927019-nt94t | 0/1 | Init:0/1 | 82 | 1 | |
| onap-sdc | sdc-kb-3337231379-8m8wx | 0/1 | Init:0/1 | 82 | 1 | |
| onap-sdnc | sdnc-1788655913-vvxlj | 1/1 | Running | 0 | 0 | |
| onap-sdnc | sdnc-dbhost-240465348-kv8vf | 1/1 | Running | 0 | 0 | |
| onap-sdnc | sdnc-dgbuilder-4164493163-cp6rx | 1/1 | Running | 0 | 0 | |
| onap-sdnc | sdnc-portal-2324831407-50811 | 0/1 | Running | 25=vm 0=nuc | 1 | |
| onap-vid | vid-mariadb-4268497828-81hm0 | 0/1 | CrashLoopBackOff | 169 | 2 | |
| onap-vid | vid-server-2331936551-6gxsp | 0/1 | Init:0/1 | 82 | 1 | |

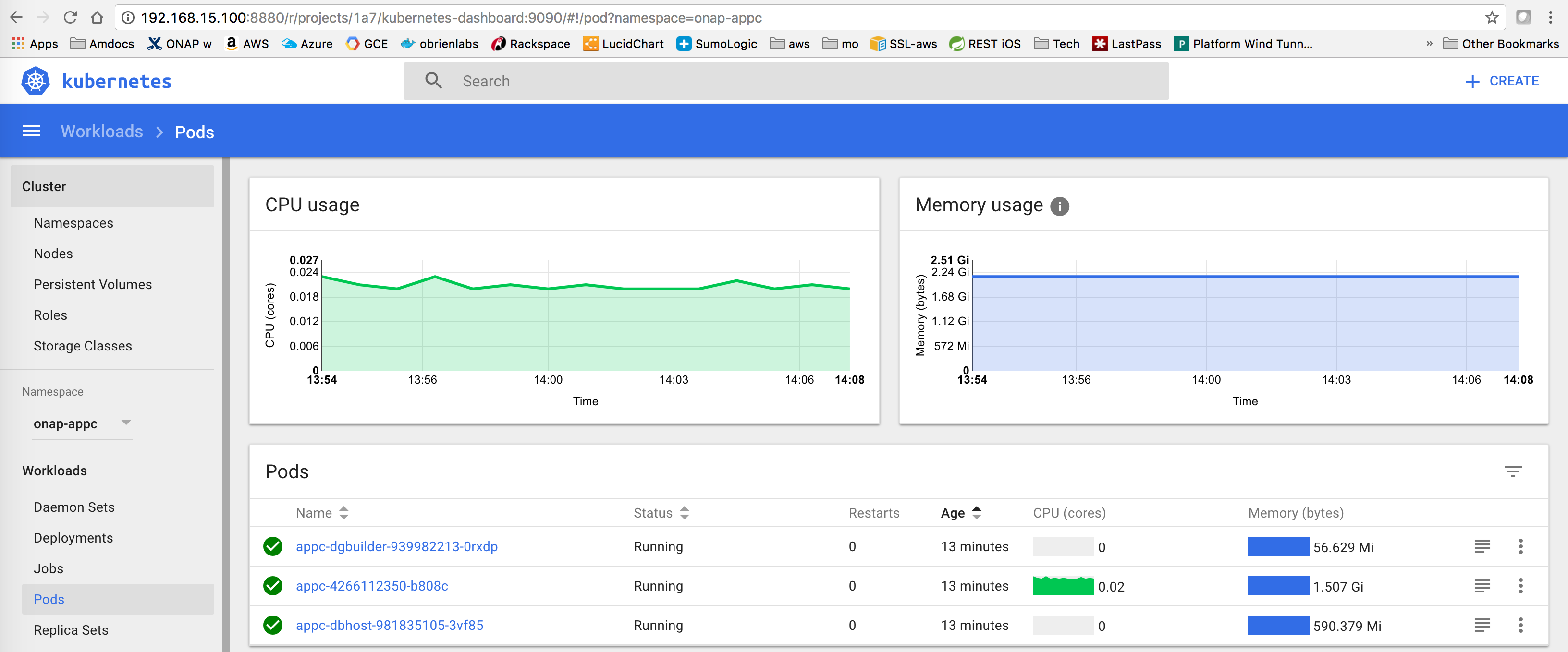

I get the same container issues on 3 different deployments (virtual Ubuntu on 2 separate VMWare based machines, and a 3 node NUC cluster). For example the APPC 3-pod service is running fine.

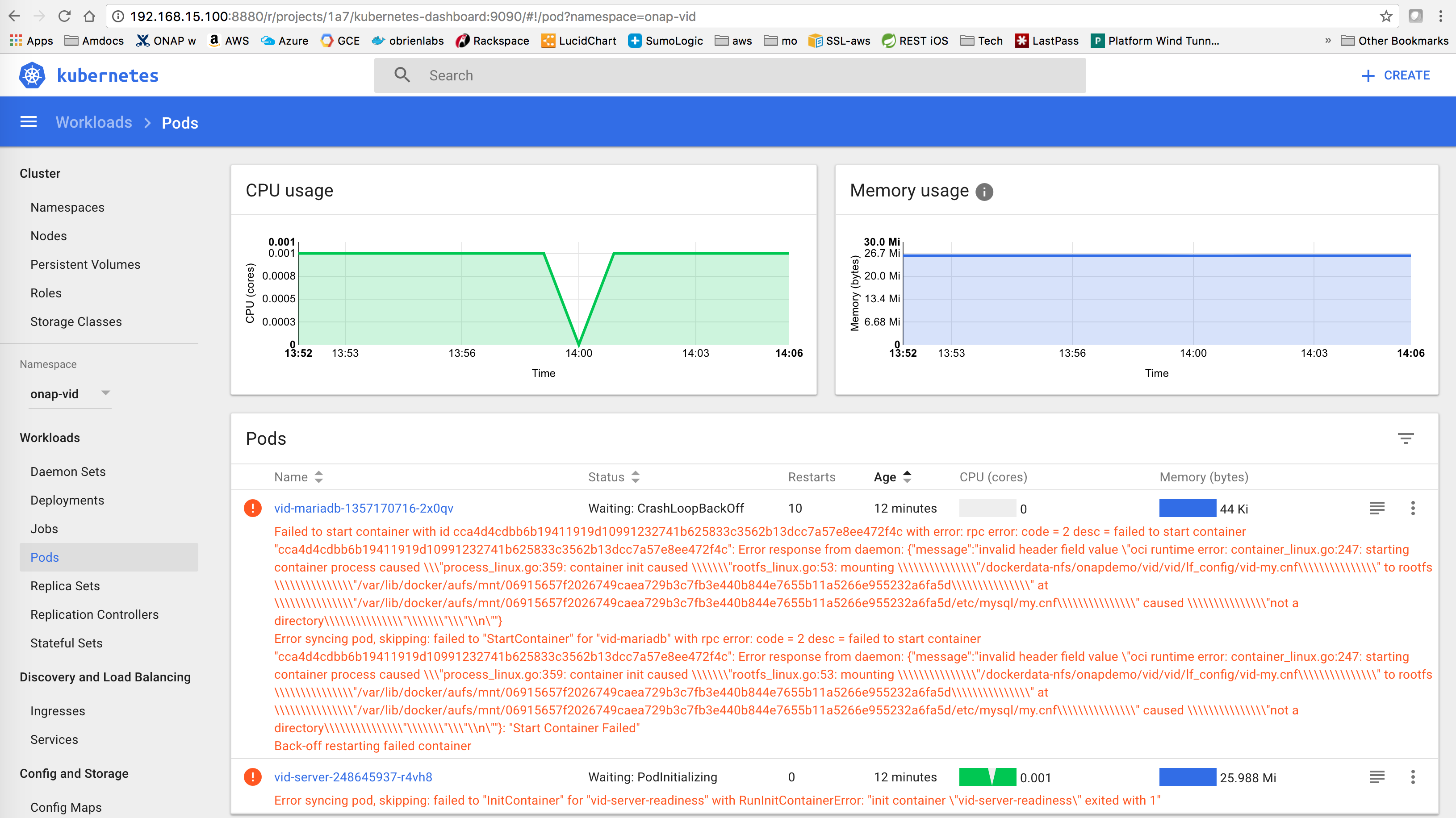

But the 2-pod VID service has failed startup.
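Picking the failing pods out of a long listing like the table above is easy to script. The following is a small sketch under stated assumptions: `pods_not_ready` is a hypothetical helper name, and it expects the default column order of `kubectl get pods --all-namespaces` (NAMESPACE, NAME, READY, STATUS, ...).

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on
# stdin and print "namespace/pod status" for every pod that is not fully ready.
pods_not_ready() {
  awk '$1 != "NAMESPACE" {
    split($3, ready, "/")            # READY column, e.g. "0/1"
    if (ready[1] != ready[2] || $4 != "Running")
      print $1 "/" $2, $4
  }'
}

# Example:
# kubectl get pods --all-namespaces | pods_not_ready
```

A pod such as nexus in the table (STATUS Running but READY 0/1) is reported as well, since the filter checks both columns.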

Dashboard

Start the dashboard with the following command, then browse to http://localhost:8001/ui:

    kubectl proxy &

SSH into ONAP containers

Normally I would get a shell as described in https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

    kubectl exec -it robot -- /bin/bash

The pod id should be sufficient

    root@obriensystemsucont0:~/onap/oom/kubernetes/oneclick# kubectl describe node obriensystemsucont0 | grep robot
    Namespace  Name  CPU Requests  CPU Limits  Memory Requests  Memory Limits
    ---------  ----  ------------  ----------  ---------------  -------------
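`kubectl exec` needs the exact pod name (for example robot-964706867-czr05 from the table above) and, for pods outside the default namespace, a `--namespace` argument. The sketch below shows one possible way to resolve both from a short prefix; `find_pod` and `pod_shell` are hypothetical helper names, and it assumes the container image ships /bin/bash.

```shell
#!/bin/sh
# Hypothetical helper: resolve the full pod name from a prefix, e.g.
# "robot" -> "robot-964706867-czr05", within one namespace.
# Usage: find_pod NAMESPACE PREFIX
find_pod() {
  kubectl --namespace "$1" get pods -o name |
    sed 's|^.*/||' |
    grep "^$2" |
    head -n 1
}

# Open a shell in the first matching pod.
# Usage: pod_shell NAMESPACE PREFIX
pod_shell() {
  pod=$(find_pod "$1" "$2")
  if [ -z "$pod" ]; then
    echo "no pod starting with '$2' in namespace '$1'" >&2
    return 1
  fi
  kubectl --namespace "$1" exec -it "$pod" -- /bin/bash
}

# Example:
# pod_shell onap-robot robot
```

The `sed` step strips the resource prefix that `-o name` puts in front of each pod name, so the result can be passed straight to `kubectl exec`.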

https://jira.onap.org/browse/OOM-47

in queue....

OOM Repo changes

20170629: fix on 20170626 on a hardcoded proxy - (for those who run outside the firewall) - https://gerrit.onap.org/r/gitweb?p=oom.git;a=commitdiff;h=131c2a42541fb807f395fe1f39a8482a53f92c60

Running ONAP Portal UI Operations

see Installing and Running the ONAP Demos

In queue.....

Deprecated Kubernetes Installation Options

| OS | VIM | Description | Status | Nodes | Links |
|---|---|---|---|---|---|
| Ubuntu 16.04.2 | Bare Metal, VMWare | Rancher | Recommended approach. Issue with kubernetes support only in 1.12 (obsolete docker-machine) on OSX | 1-4 | http://rancher.com/docs/rancher/v1.6/en/quick-start-guide/ |
| | AWS EC2 | EC2 VMs (not ECS or EBS PaaS) | Interesting - in the queue... | x | |
| Linux | Bare Metal or VM | Kubernetes directly on Ubuntu 16 (no Rancher) | In progress | 1 | https://kubernetes.io/docs/setup/independent/install-kubeadm/ ; https://lukemarsden.github.io/docs/getting-started-guides/kubeadm/ |
| OSX, Linux | CoreOS | On Vagrant (thanks Yves) | Issue: the coreos VM 19G size is insufficient | 1 | https://coreos.com/kubernetes/docs/latest/kubernetes-on-vagrant-single.html ; Implement OSX fix for Vagrant 1.9.6: https://github.com/mitchellh/vagrant/issues/7747 ; Avoid the kubectl lock: https://github.com/coreos/coreos-kubernetes/issues/886 |
| OSX | Minikube on VMWare Fusion VM | | minikube VM not restartable | 1 | https://github.com/kubernetes/minikube |
| RHEL 7.3 | VMWare VM | Redhat Kubernetes | services deploy, fix kubectl exec | 1 | https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux_atomic_host/7/html-single/getting_started_with_kubernetes/ |

Bare RHEL 7.3 VM - Multi Node Cluster

In progress as of 20170701

https://kubernetes.io/docs/getting-started-guides/scratch/

https://github.com/kubernetes/kubernetes/releases/latest

https://github.com/kubernetes/kubernetes/releases/tag/v1.7.0

https://github.com/kubernetes/kubernetes/releases/download/v1.7.0/kubernetes.tar.gz

    tar -xvf kubernetes.tar.gz
    # optional build from source
    vi Vagrantfile
    # go directly to binaries
    # /run/media/root/sec/onap_kub/kubernetes/cluster
    ./get-kube-binaries.sh
    export PATH=/run/media/root/sec/onap_kub/kubernetes/client/bin:$PATH
    [root@obrien-b2 server]# pwd
    /run/media/root/sec/onap_kub/kubernetes/server
    kubernetes-manifests.tar.gz kubernetes-salt.tar.gz kubernetes-server-linux-amd64.tar.gz README
    tar -xvf kubernetes-server-linux-amd64.tar.gz
    # /run/media/root/sec/onap_kub/kubernetes/server/kubernetes/server/bin
    # build images
    [root@obrien-b2 etcd]# make
    # (go lang required - adjust google docs)
    # https://golang.org/doc/install?download=go1.8.3.linux-amd64.tar.gz

CoreOS on Vagrant on RHEL/OSX

(Yves alerted me to this) - currently blocked by the 19g VM size (changing the HD of the VM is unsupported in the VirtualBox driver)

https://coreos.com/kubernetes/docs/latest/kubernetes-on-vagrant-single.html

Implement OSX fix for Vagrant 1.9.6 https://github.com/mitchellh/vagrant/issues/7747

Adjust the Vagrantfile for your system. Change

    NODE_VCPUS = 1
    NODE_MEMORY_SIZE = 2048

to (for a 5820K on 64G, for example)

    NODE_VCPUS = 8
    NODE_MEMORY_SIZE = 32768

    curl -O https://storage.googleapis.com/kubernetes-release/release/v1.6.1/bin/darwin/amd64/kubectl
    chmod +x kubectl
    # skipped (mv kubectl /usr/local/bin/kubectl) - already there
    ls /usr/local/bin/kubectl
    git clone https://github.com/coreos/coreos-kubernetes.git
    cd coreos-kubernetes/single-node/
    vagrant box update
    sudo ln -sf /usr/local/bin/openssl /opt/vagrant/embedded/bin/openssl
    vagrant up
    # Wait at least 5 min (Yves is good)
    # (rerun from here)
    export KUBECONFIG="${KUBECONFIG}:$(pwd)/kubeconfig"
    kubectl config use-context vagrant-single
    obrienbiometrics:single-node michaelobrien$ export KUBECONFIG="${KUBECONFIG}:$(pwd)/kubeconfig"
    obrienbiometrics:single-node michaelobrien$ kubectl config use-context vagrant-single
    Switched to context "vagrant-single".
    obrienbiometrics:single-node michaelobrien$ kubectl proxy &
    [1] 4079
    obrienbiometrics:single-node michaelobrien$ Starting to serve on 127.0.0.1:8001
    goto
    $ kubectl get nodes
    $ kubectl get service --all-namespaces
    $ kubectl cluster-info
    git clone ssh://michaelobrien@gerrit.onap.org:29418/oom
    cd oom/kubernetes/oneclick/
    obrienbiometrics:oneclick michaelobrien$ ./createAll.bash -n onap
    **** Done ****
    obrienbiometrics:oneclick michaelobrien$ kubectl get service --all-namespaces
    ...
    onap-vid      vid-server   10.3.0.31   <nodes>   8080:30200/TCP   32s
    obrienbiometrics:oneclick michaelobrien$ kubectl get pods --all-namespaces
    NAMESPACE     NAME                                    READY   STATUS     RESTARTS   AGE
    kube-system   heapster-v1.2.0-4088228293-3k7j1        2/2     Running    2          4h
    kube-system   kube-apiserver-172.17.4.99              1/1     Running    1          4h
    kube-system   kube-controller-manager-172.17.4.99     1/1     Running    1          4h
    kube-system   kube-dns-782804071-jg3nl                4/4     Running    4          4h
    kube-system   kube-dns-autoscaler-2715466192-k45qg    1/1     Running    1          4h
    kube-system   kube-proxy-172.17.4.99                  1/1     Running    1          4h
    kube-system   kube-scheduler-172.17.4.99              1/1     Running    1          4h
    kube-system   kubernetes-dashboard-3543765157-qtnnj   1/1     Running    1          4h
    onap-aai      aai-service-346921785-w3r22             0/1     Init:0/1   0          1m
    ...
    # reset
    obrienbiometrics:single-node michaelobrien$ rm -rf ~/.vagrant.d/boxes/coreos-alpha/

OSX Minikube

    curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/darwin/amd64/kubectl
    chmod +x ./kubectl
    sudo mv ./kubectl /usr/local/bin/kubectl
    kubectl cluster-info
    kubectl completion -h
    brew install bash-completion
    curl -Lo minikube https://storage.googleapis.com/minikube/releases/v0.19.0/minikube-darwin-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/
    minikube start --vm-driver=vmwarefusion
    kubectl run hello-minikube --image=gcr.io/google_containers/echoserver:1.4 --port=8080
    kubectl expose deployment hello-minikube --type=NodePort
    kubectl get pod
    curl $(minikube service hello-minikube --url)
    minikube stop

When upgrading from 0.19 to 0.20 - do a minikube delete

RHEL Kubernetes - Redhat 7.3 Enterprise Linux Host

Running onap kubernetes services in a single VM using Redhat Kubernetes for 7.3

Redhat provides 2 docker containers for the scheduler and nbi components and spins up 2 (# is scalable) pod containers for use by onap.

    [root@obrien-mbp oneclick]# docker ps

Kubernetes setup

    # Uninstall docker-se (we installed earlier)
    subscription-manager repos --enable=rhel-7-server-optional-rpms
    [root@obrien-mbp opt]# ./kubestart.sh
    [root@obrien-mbp opt]# ss -tulnp | grep -E "(kube)|(etcd)"

References

OOM-1

Links

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/