Background

The main purpose of this feature is to place VNFCs in sites with SRIOV-NIC enabled compute nodes.

If no site has available SRIOV-NIC compute nodes, OOF can choose the next-best compute node flavors, which may not have SRIOV-NICs. This assumes that vendors have tested the VNFCs both with and without SRIOV-NIC.

The parameters used to instantiate VNFs differ depending on whether the NFVI uses SRIOV-NIC based switching or normal vSwitch based switching.

In Openstack based cloud-regions, the port is expected to be created explicitly with binding:vnic_type set to direct. With vSwitch based switching there is no need to create the port explicitly, but a port can be created with binding:vnic_type set to normal. So, based on the flavor, the appropriate value is expected to be passed when talking to Openstack.

Some additional requirements must also be considered.

- A given VNFC might require multiple SRIOV VFs assigned to it from different PCIe NIC cards.

- A given VNFC might require VFs from different types of PCIe NIC cards (for example Intel, Mellanox, etc.).

- A given VNFC might require these VFs coming from different provider networks.

Those requirements can be satisfied only if there are compute nodes with that kind of NIC hardware and only if ONAP can discover that hardware from the various cloud sites.

Overall design

1. Scenario

Let us say a site has three kinds of compute nodes with respect to SRIOV-NIC:

1st set contains, say, SRIOV-NIC cards of type XYZ (PCIe vendor ID: 1234, Device ID: 5678) and YUI (PCIe vendor ID: 2345, Device ID: 6789).

2nd set contains, say, SRIOV-NIC cards of type ABC (PCIe vendor ID: 4321, Device ID: 8765).

3rd set does not contain any SRIOV NIC cards.

1.1 Openstack Config SRIOV

Openstack configuration:

1. For NIC configuration, refer to https://docs.openstack.org/neutron/pike/admin/config-sriov.html

2. An example of a site with three types of compute nodes: the 1st set of compute nodes has two SRIOV-NIC cards, one with vendor/device ID 1234/5678 and one with vendor/device ID 2345/6789. The 2nd set of compute nodes has two SRIOV-NICs of the same type, 4321/8765. The 3rd set of compute nodes has no SRIOV-NIC cards. Hence the Openstack administrator at the site creates three flavors to reflect the hardware the site has. As this example shows, the host aggregate format is expected to be followed. The value is supposed to be of the form "NIC-sriov-<vendor ID>-<device ID>-<Provider network>".

Flavor1

$ openstack aggregate create --property aggregate_instance_extra_specs:sriov_nic=sriov-nic-intel-1234-5678-physnet1:1 aggr11

$ openstack aggregate create --property aggregate_instance_extra_specs:sriov_nic=sriov-nic-intel-2345-6789-physnet2:1 aggr12

$ openstack flavor create flavor1 --id auto --ram 512 --disk 40 --vcpus 4

$ openstack flavor set flavor1 --property aggregate_instance_extra_specs:sriov_nic=sriov-nic-intel-1234-5678-physnet1:1

$ openstack flavor set flavor1 --property aggregate_instance_extra_specs:sriov_nic=sriov-nic-intel-2345-6789-physnet2:1

Flavor2

$ openstack aggregate create --property aggregate_instance_extra_specs:sriov_nic=sriov-nic-intel-4321-8765-physnet3:1 aggr21

$ openstack flavor create flavor2 --id auto --ram 512 --disk 40 --vcpus 4

$ openstack flavor set flavor2 --property aggregate_instance_extra_specs:sriov_nic=sriov-nic-intel-4321-8765-physnet3:1

Flavor3

$ openstack flavor create flavor3 --id auto --ram 512 --disk 40 --vcpus 4

1.2 Multi-cloud discovery

When Multi-cloud reads the flavor information from an Openstack site, if the pci_passthrough alias starts with NIC-sriov, it assumes that the flavor is of SRIOV NIC type.

The next two integers are the vendor ID and the device ID.

If a value is present after the device ID, it is assumed to be the provider network.

As part of discovery, Multi-cloud populates A&AI with two PCIe features for Flavor1.
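The parsing rule described above can be sketched as follows. This is only an illustration, not the Multi-cloud implementation: the function name `parse_sriov_value`, the optional vendor-name token (such as `intel`) and the optional `:<count>` suffix are assumptions taken from the example commands in section 1.1.

```python
import re

# Hypothetical pattern for the naming convention described above. The optional
# vendor-name token and the ":<count>" suffix are assumptions based on the
# example commands, not a confirmed Multi-cloud format.
_SRIOV_RE = re.compile(
    r"^(?:nic-sriov|sriov-nic)"      # prefix marking an SRIOV NIC
    r"(?:-[a-z]+)?"                  # optional vendor name, e.g. "intel"
    r"-([0-9a-f]{4})-([0-9a-f]{4})"  # PCIe vendor ID and device ID
    r"(?:-([^:]+))?"                 # optional provider network
    r"(?::(\d+))?$",                 # optional VF count
    re.IGNORECASE,
)


def parse_sriov_value(value):
    """Return the discovered SRIOV-NIC fields, or None if the value is not SRIOV."""
    match = _SRIOV_RE.match(value)
    if match is None:
        return None
    vendor_id, device_id, physnet, count = match.groups()
    return {
        "pciVendorId": vendor_id,
        "pciDeviceId": device_id,
        "pciCount": int(count) if count else 1,  # default of 1 is an assumption
        "physical-network": physnet,
    }
```

For example, `parse_sriov_value("sriov-nic-intel-1234-5678-physnet1:1")` yields the vendor ID, device ID, count and provider network that appear in the first A&AI feature of Flavor1.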

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| Hpa-attribute-key | Hpa-attribute-value |
|---|---|
| pciVendorId | 1234 |
| pciDeviceId | 5678 |
| pciCount | 1 |
| directive | [ {"attribute_name": "vnic-type", "attribute_value": "direct"}, {"attribute_name": "physical-network", "attribute_value": "physnet1"} ] |

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| Hpa-attribute-key | Hpa-attribute-value |
|---|---|
| pciVendorId | 2345 |
| pciDeviceId | 6789 |
| pciCount | 1 |
| directive | [ {"attribute_name": "vnic-type", "attribute_value": "direct"}, {"attribute_name": "physical-network", "attribute_value": "physnet2"} ] |

1.3 SO calls OOF

SO gets pciVendorId, pciDeviceId and interfaceType from the CSAR file, then calls OOF. OOF responds to SO with homing information; SO does not interpret it and passes it through to Multi-cloud.

HOT template that uses parameter to be filled up based on OOF output (Example)


1.4 VF-C calls OOF

VF-C gets pciVendorId, pciDeviceId and interfaceType from the CSAR file, then calls OOF. OOF responds to VF-C with homing information.

When VF-C gets the OOF response, it creates the SRIOV-NIC network using the physical network.

Then it creates a port on that network using the interface type.

1.5 OOF Response

OOF just checks /cloud-infrastructure/cloud-regions/cloud-region/{cloud-owner}/{cloud-region-id}/flavors/flavor/{flavor-id}/hpa-capabilities

OOF will match the SRIOV information against the constraints provided by Policy and add extra attributes inside the assignmentInfo data block when returning the response to SO and VF-C.

A sample is shown below.

"assignmentInfo": [ { "key":"locationType", { "key":"vimId", { "key":"oofDirectives","directives":[ { "vnfc_directives":[} ] |

For the newly added oofDirectives, we only return the vnfc part. For example:

"vnfc_directives": [ { "vnfc_id":"","directives":[ { "directive_name": "flavor_directive","attributes": [ ] }, { "directive_name": "vnic-info2",] |

It is worth noting that OOF derives the vnic-type from interfaceType.

If interfaceType is SRIOV-NIC, OOF returns 'vnic-type' as 'direct'. If interfaceType is not SRIOV-NIC, OOF returns 'vnic-type' as 'normal'.
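The conversion rule above is small enough to state as code. The sketch below is illustrative only; the function name `to_vnic_type` is hypothetical, and accepting the "SR-IOV" spelling from the data model table in addition to "SRIOV-NIC" is an assumption.

```python
def to_vnic_type(interface_type):
    """Map a TOSCA interfaceType to the binding:vnic_type value OOF returns."""
    # Normalise spelling so "SRIOV-NIC" and "SR-IOV" are treated alike
    # (treating both as SRIOV is an assumption, not confirmed OOF behaviour).
    normalized = interface_type.replace("-", "").upper()
    return "direct" if normalized in {"SRIOV", "SRIOVNIC"} else "normal"
```

Any other interface type, such as Virtio or E1000, falls through to 'normal', which matches the vSwitch case described in the Background section.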

1.6 Policy Data

#

#Example 1: vFW, Pcie Passthrough

#one VNFC(VFC) with two Pcie Passthrough requirements

#

{

"service": "hpaPolicy",

"policyName": "oofCasablanca.hpaPolicy_vFW",

"description": "HPA policy for vFW",

"templateVersion": "0.0.1",

"version": "1.0",

"priority": "3",

"riskType": "test",

"riskLevel": "2",

"guard": "False",

"content": {

"resources": "vFW",

"identity": "hpaPolicy_vFW",

"policyScope": ["vFW", "US", "INTERNATIONAL", "ip", "vFW"],

"policyType": "hpaPolicy",

"flavorFeatures": [

{

"id" : "<vdu.Name>",

"type":"vnfc/tosca.nodes.nfv.Vdu.Compute",

"directives":[

{

"directive_name":"flavor",

"attributes":[

{

"attribute_name":"oof_returned_flavor_for_firewall", //Admin needs to ensure that this value is the same as the flavor parameter in HOT

"attribute_value": "<Blank>"

}

]

}

],

"flavorProperties": [

{

"hpa-feature": "pciePassthrough",

"mandatory": "True",

"architecture": "generic",

"directives" : [

{

"directive_name": "pciePassthrough_directive",

"attributes": [

{ "attribute_name": "oof_returned_vnic_type_for_firewall_protected",

"attribute_value": "direct"

},

{ "attribute_name": "oof_returned_provider_network_for_firewall_protected",

"attribute_value": "physnet1"

}

]

}

],

"hpa-feature-attributes": [

{ "hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": "" },

{ "hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "5678", "operator": "=", "unit": "" },

{ "hpa-attribute-key": "pciCount", "hpa-attribute-value": "1", "operator": ">=", "unit": "" }

]

},

{

"hpa-feature": "pciePassthrough",

"mandatory": "True",

"architecture": "generic",

"directives" : [

{

"directive_name": "pciePassthrough_directive",

"attributes": [

{ "attribute_name": "oof_returned_vnic_type_for_firewall_unprotected",

"attribute_value": "direct"

},

{ "attribute_name": "oof_returned_provider_for_firewall_unprotected",

"attribute_value": "physnet2"

}

]

}

],

"hpa-feature-attributes": [

{ "hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "3333", "operator": "=", "unit": "" },

{ "hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "7777", "operator": "=", "unit": "" },

{ "hpa-attribute-key": "pciCount", "hpa-attribute-value": "1", "operator": ">=", "unit": "" }

]

}

]

}

]

}

}
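How one flavorProperties entry from a policy like the one above might be compared with a flavor's discovered capability can be sketched as follows. This is a minimal illustration, not OOF's HPA filter: the function name `feature_matches` and the shape of the `offered` dictionary are hypothetical, and only the two operators that appear in the example ("=" and ">=") are handled.

```python
import operator

# Operators that appear in the policy example; anything else is out of scope here.
_OPS = {"=": operator.eq, ">=": operator.ge}


def feature_matches(required, offered):
    """Check one policy flavorProperties entry against one flavor capability.

    `required` follows the policy layout above. `offered` is a hypothetical
    dict such as {"hpa-feature": "pciePassthrough",
                  "attributes": {"pciVendorId": "1234", "pciCount": "1"}}.
    """
    if required["hpa-feature"] != offered["hpa-feature"]:
        return False
    for attr in required["hpa-feature-attributes"]:
        have = offered["attributes"].get(attr["hpa-attribute-key"])
        if have is None:
            return False
        want = attr["hpa-attribute-value"]
        # Compare numerically when both sides are plain integers (e.g. pciCount).
        if str(have).isdigit() and want.isdigit():
            have, want = int(have), int(want)
        if not _OPS[attr["operator"]](have, want):
            return False
    return True
```

With this sketch, the first pciePassthrough requirement (vendor 1234, device 5678, count >= 1) would match a flavor advertising those IDs with a count of 1 or more, and would be rejected for a flavor advertising a different vendor ID.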

2. ONAP Module Modifications

| Module Name | Modification | Status | Owner | Comments |
|---|---|---|---|---|
| SDC | Add SR-IOV NIC attributes. | Completed | Alex Lianhao | |
| Policy | Add SR-IOV NIC attributes. | In Progress | Libo | |
| VF-C | Add create port process. | In Progress | Haibin | |
| SO | Add create port process. | In Progress | Marcus | |
| OOF | Add the process for cloud region HPA capabilities. | In Progress | Ruoyu | |
| AAI | Nothing, we just add one hpa-attribute-key and hpa-attribute-value. | Completed | - | |
| ESR | Add SR-IOV NIC info to cloud extra info. | In Progress | Haibin | |
| Multi-cloud | Register SR-IOV info to AAI. | In Progress | Haibin | |
| VIM | Config SR-IOV NIC and create network with SR-IOV NIC. | In Progress | Haibin | |

3. SR-IOV NIC related Capability in Data model

This section refers to Supported HPA Capability Requirements(DRAFT)#LogicalNodei/ORequirements

Logical Node I/O Requirements

| Capability Name | Capability Value | Description |
|---|---|---|
| pciVendorId | PCI-SIG vendor ID for the device | |
| pciDeviceId | PCI-SIG device ID for the device | |
| pciNumDevices | Number of PCI devices required. | |
| pciAddress | Geographic location of the PCI device via the standard PCI-SIG addressing model of Domain:Bus:device:function | |
| pciDeviceLocalToNumaNode | required, notRequired | Determines if I/O device affinity is required. |

Network Interface Requirements

| Capability Name | Capability Value | Description |
|---|---|---|
| nicFeature | LSO, LRO, RSS, RDMA | Long list of NIC related items such as LSO, LRO, RSS, RDMA, etc. |
| dataProcessingAccelerationLibrary | Dpdk_Version | Name and version of the data processing acceleration library required. Orchestration can match any NIC that is known to be compatible with the specified library. |
| interfaceType | Virtio, PCI-Passthrough, SR-IOV, E1000, RTL8139, PCNET | Network interface type |
| vendorSpecificNicFeature | TBA | List of vendor specific NIC related items. |

4. Reference

https://docs.openstack.org/neutron/pike/admin/config-sriov.html

https://builders.intel.com/docs/networkbuilders/EPA_Enablement_Guide_V2.pdf, section "2.3 Support for I/O Passthrough via SR-IOV"

Supported HPA Capability Requirements(DRAFT)#LogicalNodei/ORequirements

41 Comments

Alexander Vul

Anatoly Andrianov - will this work for the Nokia SO contribution?

Bin Yang

Some critical details need to be clarified:

1. What kind of SR-IOV information is passed via the CSAR? PCI vendor/device ID?

2. If yes to the first question, then you may need to figure out how these SR-IOV devices correlate to the physnet name according to https://builders.intel.com/docs/networkbuilders/EPA_Enablement_Guide_V2.pdf

3. Is there any change needed in SO to perform 5.3? If yes, who is the owner from the SO team?

Thanks

Huang Haibin

Thank you for your comments.

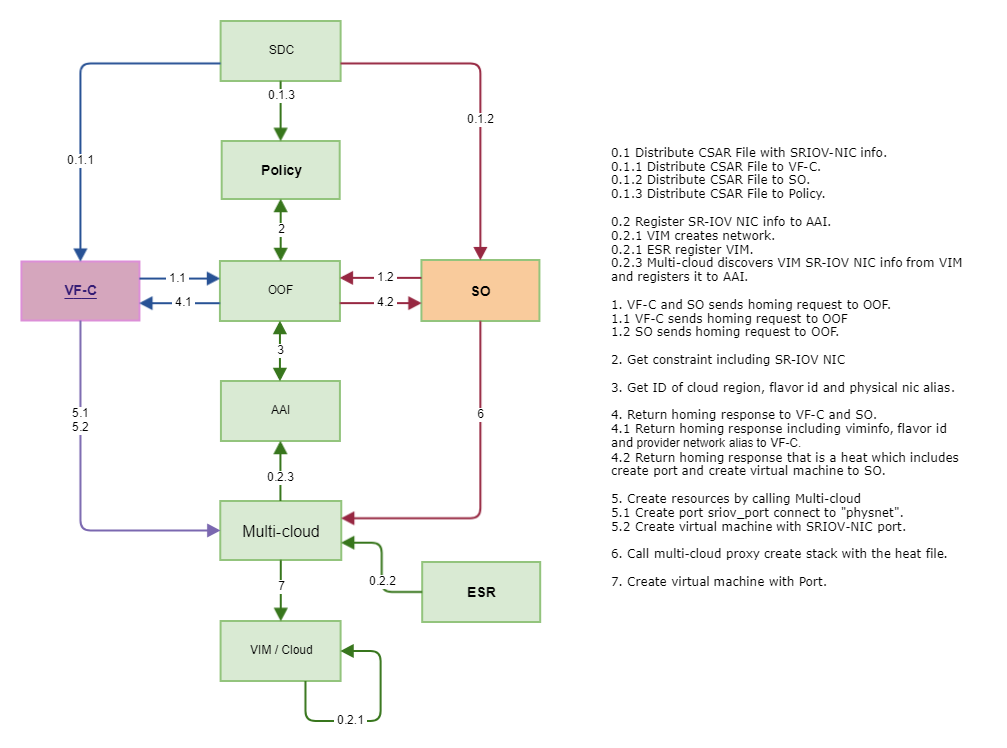

Please see new flow.

Srinivasa Addepalli

Hi Haibin,

My understanding is that the HEAT template has support for SRIOV-NIC already. So, the HEAT service at the Cloud-region will take care of creating the SRIOV port and associating it with the server VM. But what we need to do as part of this effort is to support Cloud-regions that don't have SRIOV-NIC available. Note that OOF selects the best possible cloud-region based on the constraint policies. That is, OOF may select a non SRIOV-NIC cloud region if SRIOV-NIC is not made mandatory in the OOF HPA policy. Since the HEAT template is prepopulated with SRIOV-NIC support, there is no choice other than to make the SRIOV-NIC feature mandatory in the HPA policy in R2.

As part of this feature, we want to give flexibility of making SRIOV-NIC feature either mandatory or optional in the HPA policy. There could be cases where a given VNFC might have been tested with both SRIOV NIC and with normal virtio-net drivers. In this case, VNF onboarding might involve two HOT template fragments - One with SRIOV-NIC and another without it. At run time, based on the OOF output, SO+Multi-Cloud is expected to choose the right template fragment and combine that with rest of HOT while instantiating VNF.

Srini

Srinivasa Addepalli

Hi Huang Haibin,

As part of HOT templates, I believe the following is one method to dynamically switch between SRIOV and normal. Here, I am taking the example of two provider networks, each realized using a different physical Ethernet port with VLANs. The assumption is that the virtual firewall appliance works with both SRIOV NICs and normal virtio vNICs, and that SRIOV-NIC is optional in the HPA policies. In this case, based on the cloud-region and flavor selected by OOF, "binding:vnic_type" needs to be modified dynamically. Hence, it is made a parameter.

    parameters:
      oof_returned_vnic_type_for_firewall:
        type: string
        description: This parameter value is determined by OOF. If OOF selects the region and flavor that support SRIOV-NICs, then OOF returns 'direct'. If not, it returns 'normal'
    resources:
      network_protected:
        type: OS::Neutron::Net
        properties:
          name: mynetwork_protected
          network_type: vlan
          physical_network: physnet1
          segmentation_id: 100
      network_unprotected:
        type: OS::Neutron::Net
        properties:
          name: mynetwork_unprotected
          network_type: vlan
          physical_network: physnet2
          segmentation_id: 200
      # Subnet details are not provided for brevity
      protected_interface:
        type: OS::Neutron::Port
        properties:
          name: protected_port
          network_id: { get_resource: network_protected }
          binding:vnic_type: { get_param: oof_returned_vnic_type_for_firewall }
      unprotected_interface:
        type: OS::Neutron::Port
        properties:
          name: unprotected_port
          network_id: { get_resource: network_unprotected }
          binding:vnic_type: { get_param: oof_returned_vnic_type_for_firewall }
      virtual_firewall_appliance:
        type: OS::Nova::Server
        properties:
          image: ....
          networks:
            - port: { get_resource: protected_interface }
            - port: { get_resource: unprotected_interface }

OOF role: OOF is expected to return to SO whether the Cloud-region & flavor selected support SRIOV-NICs or not.

SO: Expected to pass the information returned by OOF to Multi-Cloud.

Multi-Cloud openstack plugins: Create the HEAT parameter before calling the Create Stack API of Openstack. binding:vnic_type is to be 'direct' if the SRIOV-NIC feature is supported, or 'normal' if SRIOV-NIC is not supported.
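The Multi-Cloud step can be illustrated with a short sketch that turns OOF output into a HEAT parameters dictionary. It assumes the OOF output reaches Multi-Cloud as a list of per-VNFC directives, each carrying attribute_name/attribute_value pairs as in the policy example of section 1.6; that input shape and the function name `heat_parameters` are hypothetical.

```python
def heat_parameters(vnfc_directives):
    """Flatten OOF directives into a dict usable as HEAT stack parameters.

    Assumed (hypothetical) input shape:
    [{"vnfc_id": ..., "directives": [{"directive_name": ...,
       "attributes": [{"attribute_name": ..., "attribute_value": ...}]}]}]
    """
    params = {}
    for vnfc in vnfc_directives:
        for directive in vnfc.get("directives", []):
            for attr in directive.get("attributes", []):
                # attribute_name is expected to equal a parameter name in the HOT.
                params[attr["attribute_name"]] = attr["attribute_value"]
    return params
```

Because the attribute names are simply copied through, neither SO nor Multi-Cloud needs to know about individual parameters such as oof_returned_vnic_type_for_firewall.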

Ruoyu Ying

Hi Srini,

One question, so for the OOF part, do you mean our current response is sufficient enough to support SRIOV-NIC or we need to add one more parameter to specify the interface type?

Thanks.

Ruoyu.

Srinivasa Addepalli

Ruoyu Ying

The OOF to SO response needs to be extensible, if it is not already. Please work with Marcus Williams on this API definition to ensure that the response data is KV pair based, so it can pass current information, SRIOV-NIC support information and any future information. The SO team wants to make sure that there are no code changes whenever new information is passed by OOF for Multi-Cloud consumption.

Now the question is how OOF knows that the flavor supports the SRIOV-NIC feature. In the Beijing timeframe, there was a thought to use some naming convention to represent the SRIOV feature. Huang Haibin, can you help explain how the SRIOV feature is discovered by Multi-Cloud plugins?

Ruoyu Ying

Yes. I think it is extensible now, as one part inside the response of OOF is a KV pair. You may add more information based on your need.

Bin Yang

I am supportive of this approach. Thanks for your great effort.

Huang Haibin

Hi Srini,

I think multi-cloud plugins can't automatically discover the SR-IOV NIC feature; the VIM administrator needs to register SR-IOV NIC information to AAI using ESR.

Then, AAI will have the information below.

hpa-capability-id="q236fd3d-0b15-11w4-81b2-6210efc6dff9",

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| pciCount | {value: 1} |
| pciVendorId | {value: "15B3"} |

If we want to automatically discover the SR-IOV NIC feature, we need to create an aggregate and create an association between flavor and aggregate, just like you said.

SRINI> But I was talking about guidelines for the Openstack admin to create a host aggregate metadata variable called sriov-nic, and to add sriov-nic=true for flavors that represent SRIOV-NIC nodes and sriov-nic=false for flavors for other nodes. The Multi-Cloud plugin, as part of reading the flavors, would look at the sriov-nic metadata and populate an HPA feature SRIOV-NIC with an attribute such as sriov-present set to true or false.

[Haibin] I have considered this method. If we put SRIOV-NIC info into the flavor, we need to create many flavors because there are many combinations, like below.

Flavor1: CPU 2, Mem 4096M, CPU Pinning

Flavor2: CPU 2, Mem 4096M, SRIOV-NIC

Flavor3: CPU 4, Mem 8192M, SRIOV-NIC

Flavor4: CPU 4, Mem 8192M, CPU Pinning

We have two problems:

First, a user may want one combination including CPU Pinning, SRIOV-NIC and Huge Pages, but we can't find it unless we register all combinations of HPA features (this is very large, 10!).

Second, if we want to create a new flavor, we can't create the association between host aggregate and flavor.

Maybe we can do it like below, if we assume that some compute nodes may have Intel SRIOV-NIC, some may have Mellanox SRIOV-NIC and some may not have any SRIOV-NIC cards.

We may consider using an availability zone like below:

$ nova aggregate-create sriov-aggregate sriov-az

$ nova aggregate-add-host $sriov-aggregate-id compute-1

$ nova boot --image cirros --flavor 1 --nic net-name=net1 --availability-zone sriov-az vm6

Then we can register SRIOV info to AAI like below.

hpa-capability-id="q236fd3d-0b15-11w4-81b2-6210efc6dff9",

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| pciCount | {value: 1} |
| pciVendorId | {value: "15B3"} |

Srinivasa Addepalli

Huang Haibin, Ruoyu Ying and Dileep Ranganathan,

It is possible to avoid storing physical interface aliases anywhere in HPA. See if this works (it was discussed during the Beijing time frame).

Scenario

Let us say, a site has three kinds of compute nodes with respect to SRIOV-NIC

1st set contains, say SRIOV-NIC cards of type XYZ (PCIe vendor ID: 1234 Device ID: 5678)

2nd set contains say SRIOV-NIC cards of type ABC (PCIe vendor ID: 4321 Device ID: 8765)

3rd set does not contain any SRIOV NIC cards.

Openstack site administrator is expected to do following:

1) create the sriov-nic variable. I am taking example of using aggregate metadata variable, but one might use traits for openstack releases pike and beyond.

In nova.conf, PCI aliases are expected to be created in the manner where alias itself has PCIe vendor ID and device ID as ONAP has no way to read the nova.conf file.

As per the example, there would be two entries in nova.conf

pci_alias={"vendor_id":"1234", "product_id":"5678", "name":"XYZNIC-1234-5678", "device_type":"NIC"}

pci_alias={"vendor_id":"4321", "product_id":"8765", "name":"ABCNIC-4321-8765", "device_type":"NIC"}

Associate NICs and SRIOV capability to flavors.

The above is not unusual, as this is something normally done.

ONAP Enhancements

Multi-Cloud Discovery:

As part of discovery of capabilities from Openstack region, it is expected to populate the flavors and HPA features in the A&AI.

Multi-Cloud discovery of PCIe devices reads pci_passthrough property and extracts vendorID and device ID from the value string and populate A&AI PCIe feature. I guess this is already done in Beijing for any PCIe support.

Multi-Cloud needs to be enhanced to read the aggregate instance extra specs variable sriov-nic. If it is true, then it should populate a new HPA feature called SRIOV-NIC.
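A rough sketch of that enhancement is shown here. It is not Multi-Cloud code: the function name, the exact extra-spec key, and the output dictionary layout are assumptions chosen to mirror the 'nic' feature with a nic-type attribute used in the flavor examples of this comment.

```python
def nic_feature_from_extra_specs(extra_specs):
    """Build the proposed 'nic' HPA feature from a flavor's extra specs."""
    # The key name follows the aggregate metadata variable proposed above;
    # the exact key Multi-Cloud would read is an assumption.
    flag = extra_specs.get("aggregate_instance_extra_specs:sriov-nic", "false")
    nic_type = "sriov" if str(flag).lower() == "true" else "normal"
    return {
        "hpa-feature": "nic",
        "version": "v1",
        "hpa-feature-attributes": [
            {"hpa-attribute-key": "nic-type", "hpa-attribute-value": nic_type}
        ],
    }
```

A flavor without the variable is reported as nic-type normal, which corresponds to the third set of compute nodes in the scenario.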

For the example, as part of Multi-Cloud discovery, it creates three flavors in A&AI

In flavor1, following HPA features are created:

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| pciCount | {value: 2} |
| pciVendorId | {value: "1234"} |

hpa-feature="nic",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| nic-type | {value: sriov} |

In flavor2, the following are created:

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| pciCount | {value: 2} |
| pciVendorId | {value: "4321"} |

hpa-feature="nic",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| nic-type | {value: sriov} |

In flavor3:

hpa-feature="nic",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| nic-type | {value: normal} |

OOF Enhancements

There may not be any changes in the HPA filter in my view. But, it needs to pass sriov nic related information back to the SO as part of homing request from SO (Let us say there are two VMs in the VNF). Some changes are required to take care of that in OOF. For example, policy should have information about parameter names in the value to be used to send the information back to SO as follows.

{ "key": "SRIOV-NIC",

"value": {

"oof_returned_vnic_type_for_cde": "direct",

"oof_returned_vnic_type_for_ghk": "normal"

}

}

Multi Cloud runtime enhancement

If the request to create stack in MC is issued by SO, it needs to be enhanced to have following logic

Use the value section of SRIOV-NIC and pass them as parameters when calling HEAT API.

Example Policy

Say a VNF has firewall VM, Packet generator VM and Sink VM.

Let us say that firewall VM requires 2 SRIOV-NIC VFs of ABC NIC and packet generator requires 1 SRIOV-NIC VF and Sink VM requires no SRIOV-NIC VF.

Let us assume that parameter names used for vnic-type in HEAT template is as follows:

For firewall VM, the parameter name is oof_returned_vnic_type_for_firewall

For packet generator VM, the parameter name is oof_returned_vnic_type_for_generator

For sink VM, the parameter name is oof_returned_vnic_type_for_sink

Policy would look like this:

    "policyType": "hpaPolicy",
    "flavorFeatures": [
      {
        "flavorLabel": "flavor_label_vm_firewall",
        "sriovNICLabel": "oof_returned_vnic_type_for_firewall",
        "flavorProperties": [
          {
            "hpa-feature": "pciePassthrough",
            "mandatory": "True",
            "architecture": "generic",
            "hpa-feature-attributes": [
              {"hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": ""},
              {"hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "5678", "operator": "=", "unit": ""},
              {"hpa-attribute-key": "pciCount", "hpa-attribute-value": "2", "operator": "=", "unit": ""}
            ]
          },
          {
            "hpa-feature": "nic",
            "mandatory": "True",
            "architecture": "generic",
            "hpa-feature-attributes": [
              {"hpa-attribute-key": "nic-type", "hpa-attribute-value": "sriov", "operator": "=", "unit": ""}
            ]
          }
        ]
      },
      {
        "flavorLabel": "flavor_label_vm_generator",
        "sriovNICLabel": "oof_returned_vnic_type_for_generator",
        "flavorProperties": [
          {
            "hpa-feature": "pciePassthrough",
            "mandatory": "True",
            "architecture": "generic",
            "hpa-feature-attributes": [
              {"hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": ""},
              {"hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "5678", "operator": "=", "unit": ""},
              {"hpa-attribute-key": "pciCount", "hpa-attribute-value": "1", "operator": "=", "unit": ""}
            ]
          },
          {
            "hpa-feature": "nic",
            "mandatory": "True",
            "architecture": "generic",
            "hpa-feature-attributes": [
              {"hpa-attribute-key": "nic-type", "hpa-attribute-value": "sriov", "operator": "=", "unit": ""}
            ]
          }
        ]
      },
      {
        "flavorLabel": "flavor_label_vm_sink",
        "sriovNICLabel": "oof_returned_vnic_type_for_sink",
        "flavorProperties": [
          {
            "hpa-feature": "nic",
            "mandatory": "False",
            "architecture": "generic",
            "hpa-feature-attributes": [
              {"hpa-attribute-key": "nic-type", "hpa-attribute-value": "normal", "operator": "=", "unit": ""}
            ]
          }
        ]
      }
    ]

OOF Enhancements

In Casablanca, we may need to go for the label approach, but it is good to make that extensible as well. In R2, every time we add a new label, code may have to be changed. It is better if labels are also map based. That is, if it is possible to do this, it would be great.

Instead of this

If it is represented as this, I feel it is extensible.

    "Labels": [
      { "label-key": "flavorLabel", "label-value": "flavor_label_vm_firewall" },
      { "label-key": "sriovNicLabel", "label-value": "oof_returned_vnic_type_for_firewall" }
    ]

This way, we don't need to change the OOF common code; only the HPA filter needs to be enhanced every time a new label is needed.

Of course, a one-time change to the OOF common code that interprets the labels array is still needed.
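The one-time change could be as small as reading the Labels array into a map. The sketch below is only an illustration of that idea; the function name `labels_to_map` is hypothetical and OOF's actual code structure is not shown on this page.

```python
def labels_to_map(labels):
    """Turn the proposed Labels array into a dict keyed by label-key."""
    return {item["label-key"]: item["label-value"] for item in labels}


labels = [
    {"label-key": "flavorLabel", "label-value": "flavor_label_vm_firewall"},
    {"label-key": "sriovNicLabel", "label-value": "oof_returned_vnic_type_for_firewall"},
]
label_map = labels_to_map(labels)
```

A new label then becomes a new entry in `label_map` without any change to the code that parses the policy.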


Srinivasa Addepalli

Huang Haibin, Ruoyu Ying and Dileep Ranganathan, please let me know if you see any issues with above approach.

Ruoyu Ying

Hi Srini,

One thing here, for the newly added hpa-feature 'nic', in your sample AAI flavors, the hpa-attribute-key is 'nic-type'. However, in your sample policy, it is shown as 'sriov'. I think they have to be the same so that OOF can match and compare their values.

Next question is that if the hpa-attribute-key is set as 'nic-type' or 'sriov', is it a new attribute that will be added inside TOSCA or does it refer to the 'interfaceType' inside TOSCA?

And also is the value 'direct' or 'normal' generated internally by OOF, according to whether there's a mandatory requirement on SRIOV?

Thanks.

Ruoyu

Srinivasa Addepalli

One thing here, for the newly added hpa-feature 'nic', in your sample AAI flavors, the hpa-attribute-key is 'nic-type'. However, in your sample policy, it is shown as 'sriov'. I think they have to be the same so that OOF can match and compare their values.

SRINI> Good catch. I vacillated between these two. And hence the mismatch. Thanks for pointing that out. I corrected it now.

Next question is that if the hpa-attribute-key is set as 'nic-type' or 'sriov', is it a new attribute that will be added inside TOSCA or does it refer to the 'interfaceType' inside TOSCA?

SRINI> That is something we need to think about as part of TOSCA definition. Need to work with @Alex on this. But for now, let us assume that TOSCA DM to HPA policy conversion takes care of this.

And also is the value 'direct' or 'normal' generated internally by OOF, according to whether there's a mandatory requirement on SRIOV?

SRINI> Now that we are going with nic-type, value of this can be used by OOF to pass the information back to SO.

Huang Haibin

To summarize, we mainly differ in the following places:

1. We may encounter three situations in one region.

First, all compute nodes have the same SRIOV-NIC.

Second, some compute nodes have the same SRIOV-NIC and some compute nodes have no SRIOV-NIC.

Third, some compute nodes have the same INTEL SRIOV-NIC and some compute nodes have the same ABC SRIOV-NIC.

I think we don't need to create host aggregates and put them into flavors in the first and second situations.

We only need to create host aggregates and put them into flavors in the third situation.

2. AAI Presentation

I think it is like below:

hpa-capability-id="q236fd3d-0b15-11w4-81b2-6210efc6dff9",

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| pciCount | {value: 1} |
| pciVendorId | {value: "15B3"} |

We refer to Supported HPA Capability Requirements(DRAFT)#LogicalNodei/ORequirements, which was created by Alexander Vul.

If you want to change {interfaceType: {value: "SR-IOV"}} to {nic-type: {value: "sriov"}}, you need to discuss it with Alex or let Policy convert it.

About providerPhyNetwork, we have discussed it with Bin Yang. I think we need it.

About vnicType, why do you think we don't need it?

3. If we use flavor + aggregate, we need register many HPA flavors to AAI.

Assume that we have five kinds of basic flavors with different basic capabilities (such as vcpu, mem and storage), along with about 10 kinds of HPA features (each of which may be present or absent). To cover all these possible situations, we would have to create about 5*(2^10) kinds of flavors, which is a huge number we cannot afford. It would also be hard for OOF to produce homing solutions by matching against all these flavors.

We would need to register all the combinations because we don't know what resources the VNF needs. If we don't enumerate all the combinations, there may be no matching combination for the VNF, and VF-C must then create a flavor. When creating a flavor, it can't be associated with a host aggregate, because VF-C can't know what the host aggregate is.
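The estimate above works out as follows; the variable names are illustrative only.

```python
basic_flavors = 5    # vCPU / memory / storage variants
hpa_features = 10    # each HPA feature is either present or absent

# Every basic flavor must be combined with every on/off pattern of features.
total_flavors = basic_flavors * 2 ** hpa_features
print(total_flavors)  # 5120
```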

Srinivasa Addepalli

Hi Haibin,

We need to explore using traits instead of host aggregates, as host aggregate is not a preferred mechanism.

In your table, why do you require physnet to be mentioned? On top of that, how does Multi-Cloud know how to populate this information on a per-PCIe-device basis?

Dileep Ranganathan

Srinivasa Addepalli Huang Haibin Ruoyu Ying

May I suggest using directives as an hpa-attribute itself? This is applicable to all hpa attributes in general if we want future hpa attributes to support directives, and they would simply be passed through OOF to SO and ultimately to Multi-Cloud.

Since the attribute match is made from the capability requirements (from policy) to the flavor capabilities (discovered by Multi-Cloud), adding a new "metadata" attribute like directive won't do any harm and will make it more generic.

Example:

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

| hpa-attribute-key | hpa-attribute-value |
|---|---|
| pciCount | {value: 1} |
| pciVendorId | {value: "15B3"} |

Huang Haibin

A good method.

Srinivasa Addepalli

Dileep Ranganathan, Huang Haibin, Shankaranarayanan Puzhavakath Narayanan and Ruoyu Ying,

Normally, labels are defined by the person whose role is to create HEAT templates. They can give any label name they want.

Moreover, if a VNF contains multiple VNFCs, the label name needs to be different for each NOVA::Server of the HEAT template.

So, Multi-Cloud can't assume the label names.

How does the OOF HPA filter logic work? Once it reads the directives from the flavors, what does it do?

Srini

Dileep Ranganathan

Srini Each VNF demand will have a flavorLabel passed from SO. When the HPA constraint finishes execution, it will find and associate the best flavor in the cloud-region with the flavorLabel and pass it back in candidate_list.

Srinivasa Addepalli

Dileep Ranganathan, I still don't understand. Multiple VNFs can match the same flavor. Each VNFC of VNF might have its own label. If we put a label in the directive, how does it work for multiple VNFs?

Ruoyu Ying

Hi Srinivasa Addepalli, according to my understanding this label is not passed from SO, but from Policy. In R2, we had one flavor_label and used this flavor_label to identify each vdu. So once OOF reads the data from AAI and finds the best flavor for that vdu, it constructs a KV pair in which the flavor_label is the key and the flavor name is the value, and then passes it back inside the solution. But the precise value that maps to that flavor_label inside the DM still needs to be confirmed with Alexander Vul.

Also, for our current design, I have one thought: we may replace the 'flavor_label' by the 'vnfc_id' (which should have a similar meaning to vdu_id, I think), since one vdu should have only one flavor. This means that inside that data block there won't be a 'flavor_label_1' any more; it may be substituted with just a string 'flavor_name'. It would also be better to work as a pair of 'key' and 'value', I think.

{ "vnfc_id":"<vdu_Id from DM>",{ "directive_name": "flavor_directive",

For the 'sriov_nic_label', I agree with you that Multi-Cloud can't get that. But I'm a little confused about the value that should be put inside 'sriov-nic-label'. It should be used to map to a specific NIC, right? Policy gets most of its information from the DM, but in your example this value comes from the HEAT template. How can Policy get that value?

Thanks.

Huang Haibin

We discussed this with the VF-C team; they also advise replacing the 'flavor_label' with the 'vnfc_id' (which we think has a similar meaning to vdu_id). If you know, can you help point to it in https://github.com/lianhao/vCPE_tosca/blob/master/vgw/Definitions/MainServiceTemplate.yaml?

Dileep Ranganathan

My bad. Yes, Ruoyu is correct: it is passed from the policy. If the confusion is due to the name sriov-nic-label: I used a random word at the time; what I really meant was to use whatever Multicloud needs. Multicloud can decide which key and value to pass as suited. We match per VDU, so we associate the directives, if present, with that label in the code, not in the schema. If this is not clear, we can have a discussion. My only concern is to avoid if/else conditions for a specific HPA feature, as OOF wants to keep the HPA and all other constraints generic.

Srinivasa Addepalli

Hi Dileep and Ruoyu, (Dileep Ranganathan and Ruoyu Ying)

Maybe I did not explain the scenario properly.

Say that there are two VNFs: vCPE VNF and vFW VNF.

Say that vCPE VNF has two VNFCs: vGW and XYZ VMs.

Say that vFW VNF has three VNFCs: vFirewall, vGenerator, vSink.

Say that vFirewall has a requirement for two SRIOV VF ports.

Say for now that all other VNFCs, including those of vCPE, need only one SRIOV VF.

Say that the vCPE VNF HEAT template author uses the following labels for SRIOV VF.

Say that the vFW VNF HEAT template author uses the following labels for SRIOV VFs.

Let us say for discussion sake, all VNFCs match the same flavor.

Now the question is:

A detailed answer (with a policy example and an HPA feature directive example for the above scenario) would help me ensure that we are on the same page on the problem statement and that I understand the solution.

Srini

Srinivasa Addepalli

Hi Dileep (Attention Dileep Ranganathan and Ruoyu Ying), are you saying that the directive would be authored by the policy? If so, that is what I was thinking too. In that case, it would be good if you gave an example of how the policy would look with respect to the flavor directive and the SRIOV-NIC related directive. I would assume that the flavor directive would be part of the flavor section and the SRIOV_NIC directive would be part of the HPA feature in the policy.

Dileep Ranganathan

Srini , Ruoyu Ying

This is what we also propose. But we need to know how the mapping between the label and the port, and the requirement (whether SRIOV is needed or not), is decided. Also, according to Ruoyu's analysis, the OOF HPA constraint filter code needs to handle multiple HPA features with the same name.

AAI Representation WIP DRAFT

hpa-feature="pciePassthrough",

architecture="{hw_arch}",

version="v1",

hpa-attribute-key | hpa-attribute-value

pciCount | {value: 1}

pciVendorId | {value: "15B3"}

Policy Representation WIP DRAFT

{
  "service": "hpaPolicy",
  "policyName": "oofCasablanca.hpaPolicy_vFW",
  "description": "HPA policy for vFW",
  "templateVersion": "0.0.1",
  "version": "1.0",
  "priority": "3",
  "riskType": "test",
  "riskLevel": "2",
  "guard": "False",
  "content": {
    "resources": "vFW",
    "identity": "hpaPolicy_vFW",
    "policyScope": [ "vFW", "US", "INTERNATIONAL", "ip", "vFW" ],
    "policyType": "hpaPolicy",
    "flavorFeatures": [
      {
        "flavorLabel": "flavor_label_vm_firewall",
        "flavorProperties": [
          {
            "hpa-feature": "pciePassthrough",
            "mandatory": "True",
            "architecture": "generic",
            "directive" : {
              "NICDirective" : {
                "oof_returned_vnic_type_for_firewall_protected" : "vnic-type",
                // "{External_Label_Association}" : "{directive_type}"
                // Use the directive_type to find the value from directive hpa-attribute-KV pair and replace with appropriate value.
                /* Another approach.. To search the directive easier, We can reverse the mapping here and when the values are found we can create a key with the External_Label and value from directive
                   For Eg: "vnic_type": "oof_returned_vnic_type_for_firewall_protected",
                   "{directive_type}" : "{External_Label_Association}" */
              }
            },
            "hpa-feature-attributes": [
              { "hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": "" },
              { "hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "5678", "operator": "=", "unit": "" },
              { "hpa-attribute-key": "pciCount", "hpa-attribute-value": "1", "operator": ">=", "unit": "" }
            ]
          },
          {
            "hpa-feature": "pciePassthrough",
            "mandatory": "True",
            "architecture": "generic",
            "directive" : {
              "NICDirective" : {
                "oof_returned_vnic_type_for_firewall_unprotected" : "vnic_type",
              }
            },
            "hpa-feature-attributes": [
              { "hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "3333", "operator": "=", "unit": "" },
              { "hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "7777", "operator": "=", "unit": "" },
              { "hpa-attribute-key": "pciCount", "hpa-attribute-value": "1", "operator": ">=", "unit": "" }
            ]
          }
        ]
      },
      {
        "flavorLabel": "flavor_label_vm_generator",
        "flavorProperties": [
          {
            "hpa-feature": "pciePassthrough",
            "mandatory": "True",
            "architecture": "generic",
            "directive" : {
              "NICDirective" : {
                "oof_returned_vnic_type_for_generator" : "vnic_type"
              }
            },
            "hpa-feature-attributes": [
              { "hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": "" },
              { "hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "5678", "operator": "=", "unit": "" },
              { "hpa-attribute-key": "pciCount", "hpa-attribute-value": "1", "operator": "=", "unit": "" }
            ]
          }
        ]
      },
      {
        "flavorLabel": "flavor_label_vm_sink",
        "sriovNICLabel": "oof_returned_vnic_type_for_sink",
        "flavorProperties": [
          {
            "hpa-feature": "nic",
            "mandatory": "False",
            "architecture": "generic",
            "directive" : {
              "NICDirective" : {
                "oof_returned_vnic_type_for_generator" : "vnic_type"
              }
            },
            "hpa-feature-attributes": [
              { "hpa-attribute-key": "nic-type", "hpa-attribute-value": "normal", "operator": "=", "unit": "" }
            ]
          }
        ]
      }
    ]
  }
}

Srinivasa Addepalli

I like this, but it requires slight modifications.

If that is the case, why do you need the full 'directive' in the HPA feature in A&AI? I feel that it should not be there; that was my original concern, and hence it needs to be removed. We just need the information that is expected to be filled in by the OOF.

Now to the suggestions for the policy:

Keeping the above comments in mind, I feel the policy should look like this:

"policyType":"hpaPolicy",
"flavorFeatures": [{
  "directives":[
    {"directive_name":"flavor",
     "attributes":[
       {
        "attribute_name":"oof_returned_flavor_for_firewall", //Admin needs to ensure that this value is same as flavor parameter in HOT
        "attribute_value": "<Blank - filled by OOF>"
       }
     ]
    }
  ],
  "flavorProperties": [
    {"hpa-feature":"pciePassthrough","mandatory":"True","architecture":"generic",
     "directives":[
       {"directive_name":"SRIOV-NIC",
        "attributes":[
          {"attribute_name":"oof_returned_vnic_type_for_firewall_protected_interface",
           "attribute_value": "direct"},
          {"attribute_name":"oof_returned_provider_network_for_firewall_protected_interface",
           "attribute_value": "physnet1"}
        ]
       }
     ],
     "hpa-feature-attributes": [
       {"hpa-attribute-key":"pciVendorId","hpa-attribute-value":"1234","operator":"=","unit":""},
       {"hpa-attribute-key":"pciDeviceId","hpa-attribute-value":"5678","operator":"=","unit":""},
       {"hpa-attribute-key":"pciCount","hpa-attribute-value":"1","operator":">=","unit":""},
       {"hpa-attribute-key":"cardType","hpa-attribute-value":"sriov-nic","operator":"=","unit":""},
       {"hpa-attribute-key":"providerNetwork","hpa-attribute-value":"physnet1","operator":"=","unit":""}
     ]
    },
    {"hpa-feature":"pciePassthrough","mandatory":"True","architecture":"generic",
     "directives":[
       {"directive_name":"SRIOV-NIC",
        "attributes":[
          {"attribute_name":"oof_returned_vnic_type_for_firewall_unprotected_interface",
           "attribute_value": "direct"},
          {"attribute_name":"oof_returned_provider_network_for_firewall_unprotected_interface",
           "attribute_value": "physnet2"}
        ]
       }
     ],
     "hpa-feature-attributes": [
       {"hpa-attribute-key":"pciVendorId","hpa-attribute-value":"1234","operator":"=","unit":""},
       {"hpa-attribute-key":"pciDeviceId","hpa-attribute-value":"5678","operator":"=","unit":""},
       {"hpa-attribute-key":"pciCount","hpa-attribute-value":"1","operator":">=","unit":""},
       {"hpa-attribute-key":"cardType","hpa-attribute-value":"sriov-nic","operator":"=","unit":""},
       {"hpa-attribute-key":"providerNetwork","hpa-attribute-value":"physnet2","operator":"=","unit":""}
     ]
    },

Dileep Ranganathan

Srini, for the sake of discussion, let's keep the flavor mapping as it is now.

If the cardType is passed from the policy, then the vnic-type is already known at that time (provided there is a matching capability). So OOF only needs to check whether there is a match and return the label and value. AAI also doesn't need the directive then.

If the admin creates the policy saying "I need a sriov-nic cardType with the corresponding pciVendorId, pciDeviceId, etc.", then the directive depends solely on its availability. No extra information is required to find the value of vnic-type. Can the value direct/normal be assigned in the policy itself? OOF then passes this back to SO once the match is found.

So the flow becomes this: if there is an HPA capability match, pass the directives from Policy to SO through OOF.
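The flow proposed here can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the capability is flattened to a plain key/value dict, and only the "=" and ">=" operators seen in the policy examples are handled.

```python
from typing import Dict, List, Optional

Attribute = Dict[str, str]


def capability_matches(required: List[Attribute], available: Dict[str, str]) -> bool:
    """Check policy hpa-feature-attributes against one capability's key/value pairs."""
    for attr in required:
        have = available.get(attr["hpa-attribute-key"])
        want = attr["hpa-attribute-value"]
        if have is None:
            return False
        if attr["operator"] == "=":
            if have != want:
                return False
        elif attr["operator"] == ">=":
            if int(have) < int(want):
                return False
        else:
            raise ValueError(f"unsupported operator: {attr['operator']}")
    return True


def directives_on_match(policy_feature: dict, capability: Dict[str, str]) -> Optional[list]:
    """Return the policy's directives unchanged if the capability matches, else None."""
    if capability_matches(policy_feature["hpa-feature-attributes"], capability):
        return policy_feature.get("directives", [])
    return None


feature = {
    "hpa-feature": "pciePassthrough",
    "directives": [{"directive_name": "SRIOV-NIC", "attributes": [
        {"attribute_name": "oof_returned_vnic_type_for_firewall_protected_interface",
         "attribute_value": "direct"}]}],
    "hpa-feature-attributes": [
        {"hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": ""},
        {"hpa-attribute-key": "pciCount", "hpa-attribute-value": "1", "operator": ">=", "unit": ""},
    ],
}
matched = directives_on_match(feature, {"pciVendorId": "1234", "pciCount": "2"})
unmatched = directives_on_match(feature, {"pciVendorId": "9999", "pciCount": "2"})
```

Nothing in the matching code refers to SRIOV specifically, which is the property the thread asks OOF to preserve.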

Srinivasa Addepalli

The flavor part of the directive is unknown to the policy author. Hence, it needs to be filled in by the OOF.

Srinivasa Addepalli

What kind of changes are required to do this, as we are introducing new policy properties?

Also, we are changing the API response from OSDF to SO. What changes are required?

Thanks

Srini

Dileep Ranganathan

In OSDF the change will be minimal if we keep the flavor intact. My understanding is that a small change is needed to pass the new parameter from OSDF to HAS, and no change is needed for the response, as OSDF will wrap whatever HAS creates and send it back to SO.

Can we define directives as basically passdowns from components? Flavor is something that needs to be solved (so technically can be considered outside of a directive).

Srinivasa Addepalli

Yes. Each filter component is expected to send a set of directives (if there are many directives, it needs to combine them properly). In the case of the HPA filter, based on what we know, on a per-VNFC basis there is one directive for the flavor and possibly multiple directives for SRIOV. It needs to combine all of them for each VNFC and pass them on to something that combines the directives coming from the various filters. What is that something? Is it OSDF or something in HAS itself?

Srini
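The per-VNFC combining step mentioned above might look like the following sketch. Where this logic would live (OSDF or HAS) is left open in the discussion; the function name and the `(vnfc_name, directive)` input shape are assumptions made only for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Directive = Dict[str, object]


def combine_directives(emitted: List[Tuple[str, Directive]]) -> Dict[str, List[Directive]]:
    """Group directives emitted by one or more filters under the VNFC they apply to."""
    combined: Dict[str, List[Directive]] = defaultdict(list)
    for vnfc_name, directive in emitted:
        combined[vnfc_name].append(directive)
    return dict(combined)


# One flavor directive per VNFC, plus two SRIOV directives for vFirewall.
emitted = [
    ("vFirewall", {"directive_name": "flavor", "attributes": []}),
    ("vFirewall", {"directive_name": "SRIOV-NIC", "attributes": []}),
    ("vFirewall", {"directive_name": "SRIOV-NIC", "attributes": []}),
    ("vSink", {"directive_name": "flavor", "attributes": []}),
]
per_vnfc = combine_directives(emitted)
```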

Ruoyu Ying

Hi Srini(Srinivasa Addepalli), Dileep(Dileep Ranganathan) and Haibin(Huang Haibin)

Because we also need to take care of VF-C in this design, adding 'direct' and 'physnet' inside directives seems hard for them, as there are no such values in the Tosca model.

So I'd like to suggest we still put these two pieces of information inside AAI (where they will not be used for matching).

"policyType":"hpaPolicy",
"flavorFeatures": [{
  "directives":[
    {"directive_name":"flavor",
     "attributes":[
       {"attribute_name":"flavor_label",
        "attribute_value": "oof_returned_flavor_for_firewall"}, //Admin needs to ensure that this value is same as flavor parameter in HOT
       {"attribute_name":"vduName",
        "attribute_value": "firewall_vdu_name"} //Admin needs to ensure that this value is same as the vduName in Tosca
     ]
    }
  ],
  "flavorProperties": [
    {"hpa-feature":"pciePassthrough","mandatory":"True","architecture":"generic",
     "directives":[
       {"directive_name":"SRIOV-NIC",
        "attributes":[
          {"attribute_name":"NIC_label",
           "attribute_value": "oof_returned_vnic_type_for_firewall_protected_interface"}
        ]
       }
     ],
     "hpa-feature-attributes": [
       {"hpa-attribute-key":"pciVendorId","hpa-attribute-value":"1234","operator":"=","unit":""},
       {"hpa-attribute-key":"pciDeviceId","hpa-attribute-value":"5678","operator":"=","unit":""},
       {"hpa-attribute-key":"pciCount","hpa-attribute-value":"1","operator":">=","unit":""}
     ]
    },
    {"hpa-feature":"pciePassthrough","mandatory":"True","architecture":"generic",
     "directives":[
       {"directive_name":"SRIOV-NIC",
        "attributes":[
          {"attribute_name":"NIC_label",
           "attribute_value": "oof_returned_vnic_type_for_firewall_unprotected_interface"}
        ]
       }
     ],
     "hpa-feature-attributes": [
       {"hpa-attribute-key":"pciVendorId","hpa-attribute-value":"1234","operator":"=","unit":""},
       {"hpa-attribute-key":"pciDeviceId","hpa-attribute-value":"5678","operator":"=","unit":""},
       {"hpa-attribute-key":"pciCount","hpa-attribute-value":"1","operator":">=","unit":""}
     ]
    },
    ...

Please check if it is ok with both sides, Srinivasa Addepalli and Huang Haibin. Any suggestions will be appreciated. Thanks.

Best Regards,

Ruoyu

Huang Haibin

Ruoyu Ying and Srinivasa Addepalli and Alexander Vul

Two questions:

Policy is dynamically generated from tosca for VF-C. If you do it like this, VF-C will not work.

Srinivasa Addepalli

Ruoyu Ying, Huang Haibin and Dileep Ranganathan,

1. On having the full directive in the HPA properties: Dileep and Shankar do not want to see HPA feature/capability-specific logic in the HPA matching logic. This is in line with our goal of no changes to the HPA code in OOF every time a new HPA feature is added to ONAP. Based on what I understand, if something is stored in A&AI, there may be specific logic for processing the SRIOV-NIC PCIe feature. In any case, you need to ensure that there is no HPA feature specific logic in OOF.

2. vdu_name is not part of the directive. I thought it is global to all individual directives.

3. In your examples, I don't see the logic that checks for physnet and cardtype. Note that some VMs may need to be brought up only with NICs that are connected to certain physical networks.

Srini

Srinivasa Addepalli

As for figuring out the logic from TOSCA to Policy to have cardType and physical network, it needs to be derived from "tosca.datatypes.nfv.VirtualNetworkInterfaceRequirements" or the internal type "tosca.datatypes.nfv.LogicalNodeData". We may have to influence the modeling team to add the type of card (SRIOV NIC VF) and the physical network it belongs to in one of those types. We should not limit the features of policy based HPA because of challenges in the translation. If the above can't be added, then the DM translation logic needs to assume default values.

Srini

Ruoyu Ying

Hi Srini(Srinivasa Addepalli)

First, I agree that there would be minimal changes in the HAS matching logic if we put all this information inside directives, and it works perfectly for SO, where policies are created manually. But when we try to apply the same logic to VF-C, a problem arises. The policy used by VF-C is going to be generated dynamically from TOSCA (CSAR), which means every piece of information inside the policy will come from TOSCA. However, information such as providerNetwork or vnic_type doesn't exist there. So for now I follow your suggestion and put that structure there. But I also need to put this information inside AAI, so that OOF can get it when requested by VF-C. To keep the logic generic, I think the solution today is to put this information under the key 'directive', so that every time OOF finishes the matching, it checks whether there is a directive key inside that hpa feature attributes block. If there is, it responds with it. Can you help check whether this is feasible? Dileep Ranganathan
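A rough sketch of that generic post-match check, assuming the A&AI capability is available as a list of hpa-attribute key/value entries and that a directive value stored as a string is JSON-encoded. The helper name and the sample payload are hypothetical.

```python
import json
from typing import Any, Dict, List, Optional


def directive_from_capability(attributes: List[Dict[str, Any]],
                              key: str = "directive") -> Optional[Any]:
    """After a successful match, return the value stored under `key`, if any.

    The lookup is by key name only, so no HPA-feature-specific branching is needed.
    """
    for attr in attributes:
        if attr.get("hpa-attribute-key") == key:
            value = attr["hpa-attribute-value"]
            # Assumption: string values hold JSON; decode them before returning.
            return json.loads(value) if isinstance(value, str) else value
    return None


aai_attributes = [
    {"hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234"},
    {"hpa-attribute-key": "directive",
     "hpa-attribute-value": '{"vnic_type": "direct", "providerNetwork": "physnet1"}'},
]
found = directive_from_capability(aai_attributes)
missing = directive_from_capability(aai_attributes[:1])
```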

On the second point, it was my mistake to put it inside directives. I'll change that, and also add "Type" at the same level.

The third point is closely related to the first: both OOF and Policy need to support the two different models, whether the policies are generated dynamically or manually. So the best solution here is to align the two schemas, so that OOF is able to get this information in the same format and do the homing allocation. But currently we are not able to get cardType and providerNetwork from Tosca, so I just removed the two attributes from the policy.

According to our recent discussion, we made the structure look like this: sample_policy.txt

We added the vduName and Type, and removed the card type and physical network from hpa-feature-attributes, as that needs to be confirmed by Alex.

Thanks.

Best Regards,

Ruoyu

Ruoyu Ying

Hi Srini,

If we are able to add cardType and physical network inside Tosca, that will be the best. Hi Alex (Alexander Vul), can you help check whether we are able to implement this?

Srinivasa Addepalli

@Ruoyu and @Dileep,

In my view, cardType and physical network need to be part of TOSCA. Until then, those values can be hard coded (as sriov and physnet1 or from some configuration file) in the DM translation logic as policy expects them.
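As an illustration of the interim approach, the sketch below fills in default values for attributes that TOSCA cannot yet supply. The defaults mirror the values named above; the function name and the idea of passing defaults as a dict (which could equally be loaded from a configuration file) are assumptions.

```python
from typing import Dict, List

Attribute = Dict[str, str]

# Defaults named in the discussion; they could also come from a configuration file.
DEFAULT_ATTRIBUTES = {"cardType": "sriov", "providerNetwork": "physnet1"}


def with_default_attributes(attributes: List[Attribute],
                            defaults: Dict[str, str] = DEFAULT_ATTRIBUTES) -> List[Attribute]:
    """Return a copy of hpa-feature-attributes with any missing defaults appended."""
    present = {attr["hpa-attribute-key"] for attr in attributes}
    result = list(attributes)
    for key, value in defaults.items():
        if key not in present:
            result.append({"hpa-attribute-key": key, "hpa-attribute-value": value,
                           "operator": "=", "unit": ""})
    return result


translated = with_default_attributes([
    {"hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": ""},
    {"hpa-attribute-key": "providerNetwork", "hpa-attribute-value": "physnet2", "operator": "=", "unit": ""},
])
```

Values already present in the translated policy are left untouched, so the defaults disappear naturally once TOSCA carries the real values.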

On the changes to OOF: we can add generic logic, but not on a per-HPA-feature basis. Even for the capacity check, we are taking pains to make OOF as generic as possible.

Srini

Bin Yang

Hi all, after several rounds of offline discussion, I would like to share my thinking on how this SR-IOV NIC support can be realized. Note that this is just for your reference, and there are many open questions yet to be figured out.

SR-IOV NIC discovery and consumption with ONAP

Assumption:

0, We are discussing VNF(s) orchestrated to a single VIM/Cloud instance, not the case of spanning multiple VIM/Cloud instances.

1, OpenStack will create multiple provider networks (a special kind of tenant network) backed by SRIOV NICs: neutron resource with type: OS::Neutron::ProviderNet

2, VNF templates (heat or tosca based templates) will define the parameters referring to those provider networks. ONAP is not supposed to create a provider network during orchestration. (The reasons include, but are not limited to, that ONAP might not be provisioned with VIM/Cloud admin privilege.)

3, Unlike the flavor, the provider networks will be a consumable resource, which means that, for an internal VL (between VDUs of the same VNF), once a (provider) network name/id is allocated, it is depleted.

4, The intention to isolate from or connect to each other can be determined as follows: within the scope of a VNF template, vports created with different network names should be isolated from each other; on the other hand, vports created with the same network name should connect to each other via the same provider network.

5, What about the extVL between different VNFs? They should be allocated/associated with the same (provider) network.

The association to the same (provider) network by different VNFs cannot be resolved by HPA capability matchmaking (which just consumes one unused network resource), but by resolving the VL reference between VNFs (which refers to the consumed network resource). So must there be a standalone VL associated with an unused network resource? How can the heat based templates realize/map this standalone VL concept? Should this extVL be addressed by SDNC instead of OOF?

Directive normalization and mapping:

*** agreement for policy, oof, multicloud:

1, type: specify a directive type

The mapping should be defined to abstract the resources or parameters of VNF.

e.g. The mapping for heat => policy directive type:

"OS::Neutron::Net" => "type_vnet"

"OS::Neutron::Port" => "type_vport"

"OS::Nova::Server.flavor" => "type_flavor"

...
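The heat mapping listed above can be held in a simple lookup table, as in the sketch below. Only the three entries given in the list are included; the constant and function names are hypothetical.

```python
from typing import Optional

# Heat resource type (or resource property) => policy directive type.
HEAT_TO_DIRECTIVE_TYPE = {
    "OS::Neutron::Net": "type_vnet",
    "OS::Neutron::Port": "type_vport",
    "OS::Nova::Server.flavor": "type_flavor",
}


def directive_type_for(heat_key: str) -> Optional[str]:
    """Return the directive type for a heat resource key, or None if unmapped."""
    return HEAT_TO_DIRECTIVE_TYPE.get(heat_key)


port_type = directive_type_for("OS::Neutron::Port")
unknown_type = directive_type_for("OS::Cinder::Volume")
```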

e.g. The mapping for TOSCA VNFD => policy directive type:

"CP?" => "type_vport"

"VL?" => "type_vnet"

"virtualCompute?" => "type_flavor"

2, directives' attributes matchmaking and population by OOF:

Policy | AAI | OOF_RESPONSE | OOF action

aai_attribute_name | attribute_name | - | matchmaking between policy and AAI

oof_attribute_name | - | attribute_name | populate oof_attribute_name into attribute_name of OOF_RESPONSE

- | attribute-value | attribute-value | populate attribute-value from AAI to attribute-value of OOF_RESPONSE

3, heat parameter updating

1, compare the parameter name to attribute_name of OOF_RESPONSE

2, update the parameter value with attribute-value once matched as above
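The two parameter-updating steps above can be sketched as follows. The function name is hypothetical, and the sample directives are modeled on the attribute names used elsewhere in this proposal.

```python
from typing import Dict, List


def update_heat_parameters(parameters: Dict[str, str], directives: List[dict]) -> Dict[str, str]:
    """Return a copy of the heat parameters with values overridden by matching directives."""
    updated = dict(parameters)
    for directive in directives:
        for attr in directive["attributes"]:
            # Step 1: compare the parameter name to attribute_name.
            if attr["attribute_name"] in updated:
                # Step 2: replace the parameter value with attribute_value.
                updated[attr["attribute_name"]] = attr["attribute_value"]
    return updated


params = {"vfw_private_port1_binding": "normal", "vfw_private_net1": "vnet1"}
oof_directives = [
    {"type": "type_vport",
     "attributes": [{"attribute_name": "vfw_private_port1_binding", "attribute_value": "direct"}]},
    {"type": "type_vnet",
     "attributes": [{"attribute_name": "vfw_private_net1", "attribute_value": "sriov-net1"}]},
]
new_params = update_heat_parameters(params, oof_directives)
```

Attributes whose names do not appear among the template parameters are ignored rather than added, so the template's parameter set stays unchanged.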

====================================================================================

OpenStack ProviderNets example:

sriov-net1: {type: vlan, segmentation-id=1001, physical-network: physnet1, pciVendorId=1234, pciDeviceId=5678}

sriov-net2: {type: vlan, segmentation-id=1002, physical-network: physnet2, pciVendorId=1234, pciDeviceId=5678}

Host Aggregates ?

flavors ?

--------------------------------------------------------------------------------

AAI "hpa-capability" object (populated by MC)

Q: should these SRIOV hpa-capabilities be child object of cloud region instead of flavors ?

Example:

{

hpa-feature="SriovNICNetwork",

architecture="generic",

version="v1",

[

{"Hpa-attribute-key":"pciVendorId", "Hpa-attribute-value":"1234"},

{"Hpa-attribute-key":"pciDeviceId", "Hpa-attribute-value":"5678"},

{"Hpa-attribute-key":"directives", "Hpa-attribute-value":

[{

"type":"type_vport",

"attributes": [{ "attribute_name": "vnic_type", "attribute_value": "direct" }],

},

{

"type":"type_vnet",

"attributes": [{ "attribute_name": "provider_network", "attribute_value": "sriov-net1" }],

}]

},

{"Hpa-attribute-key":"directives", "Hpa-attribute-value":

[{

"type":"type_vport",

"attributes": [{ "attribute_name": "vnic_type", "attribute_value": "direct" }],

},

{

"type":"type_vnet",

"attributes": [{ "attribute_name": "provider_network", "attribute_value": "sriov-net2" }],

}]

}

]

}

Note:

1, the content of "Hpa-attribute-value" should always be a string, so the directives content can be a json array

2, the entries of the attributes that qualify under the following criteria will be populated into oof_response's directives with the following update:

Criteria: the "attribute_name" will be matched against policy's "aai_attribute_name",

Update: the value for "attribute_name" will be replaced by policy's "oof_attribute_name"

3, Q: How to mark the allocation status of each SRIOV resource so that the same provider_network will not be associated with different network names in a single heat template (e.g. the allocation status of sriov-net1, sriov-net2)?
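The criteria/update rule in note 2 can be sketched for a single AAI directive set as shown below. The function name is hypothetical, and the sketch deliberately does not address the open allocation question in note 3: it assumes one set of AAI directives has already been chosen.

```python
from typing import Dict, List, Tuple


def populate_response(policy_directives: List[dict], aai_directives: List[dict]) -> List[dict]:
    """Build oof_response directives from policy name mappings and AAI values."""
    # Index AAI values by (directive type, attribute_name).
    aai_values: Dict[Tuple[str, str], str] = {}
    for directive in aai_directives:
        for attr in directive["attributes"]:
            aai_values[(directive["type"], attr["attribute_name"])] = attr["attribute_value"]

    response = []
    for directive in policy_directives:
        attrs = []
        for attr in directive["attributes"]:
            key = (directive["type"], attr["aai_attribute_name"])
            if key in aai_values:  # Criteria: AAI name matches policy's aai_attribute_name
                attrs.append({"attribute_name": attr["oof_attribute_name"],  # Update: rename
                              "attribute_value": aai_values[key]})
        if attrs:
            response.append({"type": directive["type"], "attributes": attrs})
    return response


policy = [
    {"type": "type_vport", "attributes": [
        {"aai_attribute_name": "vnic_type", "oof_attribute_name": "vfw_private_port1_binding"}]},
    {"type": "type_vnet", "attributes": [
        {"aai_attribute_name": "provider_network", "oof_attribute_name": "vfw_private_net1"}]},
]
aai = [
    {"type": "type_vport", "attributes": [{"attribute_name": "vnic_type", "attribute_value": "direct"}]},
    {"type": "type_vnet", "attributes": [{"attribute_name": "provider_network", "attribute_value": "sriov-net1"}]},
]
oof_response = populate_response(policy, aai)
```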

--------------------------------------------------------------------------------

HEAT template based VNF example:

{

"files": {},

"disable_rollback": true,

"parameters": {

"vfw_vm_flavor": "m1.heat",

"vfw_private_port1_binding": "normal",

"vfw_private_net1": "vnet1",

"vfw_private_port2_binding": "normal",

"vfw_private_net2": "vnet2"

},

"stack_name": "vfw_stack",

"template": {

"heat_template_version": "2013-05-23",

"description": "Simple template to test heat commands",

"parameters": {

"vfw_vm_flavor": {

"default": "m1.tiny",

"type": "string"

},

"vfw_private_port1_binding": {

"default": "normal",

"type": "string"

},

"vfw_private_port2_binding": {

"default": "normal",

"type": "string"

},

"vfw_private_net1": {

"default": "vfw_net1",

"type": "string"

},

"vfw_private_net2": {

"default": "vfw_net1",

"type": "string"

}

},

"resources": {

vfw_private_port1:

type: OS::Neutron::Port

properties:

binding:vnic_type: { get_param: vfw_private_port1_binding}

network: { get_param: vfw_private_net1}

vfw_private_port2:

type: OS::Neutron::Port

properties:

binding:vnic_type: { get_param: vfw_private_port2_binding}

network: { get_param: vfw_private_net2}

vfw_vm:

type: OS::Nova::Server

properties:

image: { get_param: ubuntu_1604_image }

flavor: { get_param: vfw_vm_flavor }

name: vfw_vm

key_name: { get_resource: vm_key }

networks:

- port: { get_resource: vfw_private_port1 }

- port: { get_resource: vfw_private_port2 }

}

},

"timeout_mins": 60

}

--------------------------------------------------------------------------------

Policy example: The policy should be created (manually or automatically)

Q: how to reflect/enforce the network isolation in policy?

SRIOV NIC policy1 (VNF/template scope):

{

"hpa-feature": "SriovNICNetwork",

"mandatory": "True",

"architecture": "generic",

"hpa-feature-attributes": [

{ "hpa-attribute-key": "pciVendorId", "hpa-attribute-value": "1234", "operator": "=", "unit": "" },

{ "hpa-attribute-key": "pciDeviceId", "hpa-attribute-value": "5678", "operator": "=", "unit": "" },

{ "hpa-attribute-key": "directives", "hpa-attribute-value": "<directives content>", "operator": "=", "unit": "" },

],

},

example of directives content:

[

{

"type":"type_vport",

"attributes": [{ "aai_attribute_name": "vnic_type", "oof_attribute_name": "vfw_private_port1_binding" }],

},

{

"type":"type_vnet",

"attributes": [{ "aai_attribute_name": "provider_network", "oof_attribute_name": "vfw_private_net1" }],

},

{

"type":"type_vport",

"attributes": [{ "aai_attribute_name": "vnic_type", "oof_attribute_name": "vfw_private_port2_binding" }],

},

{

"type":"type_vnet",

"attributes": [{ "aai_attribute_name": "provider_network", "oof_attribute_name": "vfw_private_net2" }],

}

],

--------------------------------------------------------------------------------

directives of OOF_RESPONSE to MC/VFC:

"directives": [{

"type":"type_vport",

"attributes": [{ "attribute_name": "vfw_private_port1_binding", "attribute_value": "direct" }],

},

{

"type":"type_vnet",

"attributes": [{ "attribute_name": "vfw_private_net1", "attribute_value": "sriov-net1" }],

},

{

"type":"type_vport",

"attributes": [{ "attribute_name": "vfw_private_port2_binding", "attribute_value": "direct" }],

},

{

"type":"type_vnet",

"attributes": [{ "attribute_name": "vfw_private_net2", "attribute_value": "sriov-net2" }],

}]

--------------------------------------------------------------------------------

Updated heat parameters by MC:

{

"files": {},

"disable_rollback": true,

"parameters": {

"vfw_vm_flavor": "f.hpa.1",

"vfw_private_port1_binding": "direct",

"vfw_private_net1": "sriov-net1",

"vfw_private_port2_binding": "direct",

"vfw_private_net2": "sriov-net2"

},

"stack_name": "vfw_stack",

"template": {

"heat_template_version": "2013-05-23",

"description": "Simple template to test heat commands",

"parameters": {

"vfw_vm_flavor": {

"default": "m1.tiny",

"type": "string"

},

"vfw_private_port1_binding": {

"default": "normal",

"type": "string"

},

"vfw_private_port2_binding": {

"default": "normal",

"type": "string"

},

"vfw_private_net1": {

"default": "vfw_net1",

"type": "string"

},

"vfw_private_net2": {

"default": "vfw_net1",

"type": "string"

}

},

"resources": {

vfw_private_port1:

type: OS::Neutron::Port

properties:

binding:vnic_type: { get_param: vfw_private_port1_binding}

network: { get_param: vfw_private_net1}

vfw_private_port2:

type: OS::Neutron::Port

properties:

binding:vnic_type: { get_param: vfw_private_port2_binding}

network: { get_param: vfw_private_net2}

vfw_vm:

type: OS::Nova::Server

properties:

image: { get_param: ubuntu_1604_image }

flavor: { get_param: vfw_vm_flavor }

name: vfw_vm

key_name: { get_resource: vm_key }

networks:

- port: { get_resource: vfw_private_port1 }

- port: { get_resource: vfw_private_port2 }

}

},

"timeout_mins": 60

}

==============================================================================================================

Workflow:

Prerequisites:

1, OpenStack admin: create multiple ProviderNets (heat resource with type: OS::Neutron::ProviderNet) which binds to SR-IOV NICs

e.g. pnet1

2, OpenStack admin: create host aggregate conforming to ONAP naming convention: sriovnic-{network-name}-{vendor-id}-{device-id}

e.g. ha-sriovnic1: {metadata: "sriovnic-pnet1-1234-4568"}

3, associate the compute hosts to this host aggregate.

4, add the metadata into some flavors
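A small sketch of how the host aggregate naming convention in prerequisite 2 could be parsed during discovery. The function name and the returned field names are hypothetical. Splitting from the right is a design choice made here so that network names containing hyphens (such as sriov-net1) still parse, on the assumption that vendor and device IDs never contain hyphens.

```python
from typing import Dict

PREFIX = "sriovnic-"


def parse_sriov_metadata(metadata: str) -> Dict[str, str]:
    """Parse 'sriovnic-{network-name}-{vendor-id}-{device-id}' into its fields."""
    if not metadata.startswith(PREFIX):
        raise ValueError(f"not an SR-IOV NIC metadata string: {metadata}")
    # Split from the right: the last two fields are the vendor and device IDs.
    parts = metadata[len(PREFIX):].rsplit("-", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"malformed SR-IOV NIC metadata string: {metadata}")
    network_name, vendor_id, device_id = parts
    return {"network_name": network_name, "vendor_id": vendor_id, "device_id": device_id}


simple = parse_sriov_metadata("sriovnic-pnet1-1234-4568")
hyphenated = parse_sriov_metadata("sriovnic-sriov-net1-1234-5678")
```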

1, VIM/Cloud instance onboarding to ONAP: MC will discover and represent SRIOV NIC resources via AAI hpa-capability objects

2, VNF templates are ready: parameterize the vnic_type and network name for vports

3, Create VNF policies: specify the requirements of vports (SR-IOV hpa-capability), with parameters in directives

4, VFC/SO -> OOF: OOF matches the requirements of vports to AAI hpa-capability objects

5.1, OOF -> SO: OOF returns oof_response with directives (updated with AAI data) to SO

5.2, SO -> MC: SO passes the oof_response to MC

5.3, MC -> VIM/Cloud instance: MC updates the heat templates/parameters by looking up the directives from oof_response

6.1, OOF -> VFC: OOF returns oof_response with directives (updated with AAI data) to VFC

6.2, VFC(G-VNFM/S-VNFM) -> MC: VFC creates the vport via MC NBI, then creates the VM with the vport via MC NBI