Precondition

- Server flavor: 8 vCPU / 30 GB RAM / 100 GB HDD

- Server OS: Ubuntu 16.04

Planned

- Heat template: DONE

- Heat template committed to the Gerrit repo: DONE

- HV-VES xNF simulator integration with ONAP procedure: DONE

HV-VES simulator standalone mode installation

- Deploy the HV-VES simulator in standalone mode using the Heat template: https://gerrit.onap.org/r/gitweb?p=integration.git;a=blob_plain;f=test/mocks/hvvessimulator/hvves_sim.yaml;hb=HEAD

- Log in to the deployed server using the root/onap credentials.

- Verify that all Docker containers are up:

docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5a4c5011bfeb nexus3.onap.org:10003/onap/ves-hv-collector-xnf-simulator "./run-java.sh run..." 27 seconds ago Up 27 seconds 0.0.0.0:6062->6062/tcp hv-ves_sim_xnf-simulator_1

ad910797eb72 nexus3.onap.org:10003/onap/ves-hv-collector:latest "./run-java.sh run..." 28 seconds ago Up 27 seconds (healthy) 0.0.0.0:6060-6061->6060-6061/tcp hv-ves_sim_ves-hv-collector_1

db196f77fd8e wurstmeister/kafka "start-kafka.sh" 28 seconds ago Up 28 seconds 0.0.0.0:9092->9092/tcp hv-ves_sim_kafka_1

e8713cceb027 progrium/consul "/bin/start -serve..." 30 seconds ago Up 28 seconds 53/tcp, 53/udp, 8300-8302/tcp, 8400/tcp, 8301-8302/udp, 0.0.0.0:8500->8500/tcp hv-ves_sim_consul_1

5440e045d0a3 wurstmeister/zookeeper "/bin/sh -c '/usr/..." 30 seconds ago Up 30 seconds 22/tcp, 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp hv-ves_sim_zookeeper_1

- Verify the HV-VES configuration from inside the HV-VES Docker container:

docker exec -ti ad910797eb72 bash

root@ad910797eb72:/opt/ves-hv-collector# curl http://consul:8500/v1/kv/veshv-config

[{"CreateIndex":5,"ModifyIndex":5,"LockIndex":0,"Key":"veshv-config","Flags":0,"Value":"eyJrYWZrYUJvb3RzdHJhcFNlcnZlcnMiOiAia2Fma2E6OTA5MiIsInJvdXRpbmciOlt7ImZyb21Eb21haW4iOjExLCJ0b1RvcGljIjoidmVzX2h2UmFuTWVhcyJ9XX0="}]
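Consul returns the stored configuration base64-encoded in the Value field. The Python sketch below decodes it; the response string is copied from the curl output above, and decode_consul_kv is a hypothetical helper name, not part of HV-VES.

```python
import base64
import json

# Response body of: curl http://consul:8500/v1/kv/veshv-config (copied from above)
CONSUL_RESPONSE = (
    '[{"CreateIndex":5,"ModifyIndex":5,"LockIndex":0,"Key":"veshv-config","Flags":0,'
    '"Value":"eyJrYWZrYUJvb3RzdHJhcFNlcnZlcnMiOiAia2Fma2E6OTA5MiIsInJvdXRpbmciOlt7ImZy'
    'b21Eb21haW4iOjExLCJ0b1RvcGljIjoidmVzX2h2UmFuTWVhcyJ9XX0="}]'
)


def decode_consul_kv(response_text: str) -> dict:
    """Map each Consul KV key to its base64-decoded, JSON-parsed value (hypothetical helper)."""
    entries = json.loads(response_text)
    return {entry["Key"]: json.loads(base64.b64decode(entry["Value"])) for entry in entries}


if __name__ == "__main__":
    config = decode_consul_kv(CONSUL_RESPONSE)["veshv-config"]
    print(config["kafkaBootstrapServers"])  # Kafka address used by the collector
    for route in config["routing"]:
        print(f'domain {route["fromDomain"]} -> topic {route["toTopic"]}')
```

For this sample the decoded configuration points the collector at kafka:9092 and routes domain 11 to the ves_hvRanMeas topic, which is the topic consumed in the next section.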

HV-VES simulator standalone mode usage

- Follow the HV-VES container log:

docker logs --tail 0 -f ad910797eb72

- Start a Kafka console consumer:

docker exec -ti db196f77fd8e sh

/ # kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic ves_hvRanMeas

- Send a message using the simulator.sh script:

hv-ves_sim/simulator.sh send hv-ves_sim/samples/xnf-valid-messages-request.json

{"response":"Request accepted"}

- Check the HV-VES log:

root@hv-ves-sim:~# docker logs --tail 0 -f ad910797eb72

2018-09-05T13:38:32.668Z INFO [o.o.d.c.v.i.s.NettyTcpServer ] - Handling connection from /172.18.0.6:51530

2018-09-05T13:38:32.669Z TRACE [o.o.d.c.v.i.w.WireChunkDecoder ] - Got message with total size of 335 B

2018-09-05T13:38:32.669Z TRACE [o.o.d.c.v.i.w.WireChunkDecoder ] - Wire payload size: 327 B

2018-09-05T13:38:32.669Z TRACE [o.o.d.c.v.i.w.WireChunkDecoder ] - Received end-of-transmission message

2018-09-05T13:38:32.670Z INFO [o.o.d.c.v.i.VesHvCollector ] - Completing stream because of receiving EOT message

2018-09-05T13:38:32.672Z TRACE [o.o.d.c.v.i.a.k.KafkaSink ] - Message #10001 has been sent to ves_hvRanMeas:0

2018-09-05T13:38:32.672Z INFO [o.o.d.c.v.i.s.NettyTcpServer ] - Connection from /172.18.0.6:51530 has been closed

- Check the Kafka consumer output:

docker exec -ti db196f77fd8e sh

/ # kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic ves_hvRanMeas

(The consumer prints the raw protobuf-encoded event. Most bytes are non-printable and shown as ?; only string fields such as sample-version, sample-nf-naming-code, sample-reporting-entity-id, sample-source-name and sample/uri are readable.)

HV-VES xNF simulator integration with ONAP

- Deploy the HV-VES simulator in onap mode using the Heat template: https://gerrit.onap.org/r/gitweb?p=integration.git;a=blob_plain;f=test/mocks/hvvessimulator/hvves_sim.yaml;hb=HEAD

- Check that the HV-VES pod is running:

kubectl -n onap get pods | grep hv-ves

dep-dcae-hv-ves-collector-6ddbb546c8-v5gv4 2/2 Running 0 1d

- Check that the xNF simulator is up:

docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

eb946d45cc01 nexus3.onap.org:10001/onap/org.onap.dcaegen2.collectors.hv-ves.hv-collector-xnf-simulator:latest "./run-java.sh run..." 24 hours ago Up 24 hours 0.0.0.0:6062->6062/tcp brave_bartik

- Forward the Kafka pod port to the Kubernetes node:

kubectl -n onap port-forward dev-message-router-kafka-7d75bf94bc-77jbf 9092:9092

Forwarding from 127.0.0.1:9092 -> 9092

- On the HV-VES simulator host, forward local port 9092 to the node from the previous step:

ssh -L 9092:localhost:9092 10.183.34.151

- Send an event from the xNF simulator:

cd hv-ves_sim ; ./simulator.sh send ./samples/single_xnf-simulator-smaller-valid-request.json 6447df9c-b044-47bb-a1c2-77b6d21b9c9d

- Check the HV-VES log:

kubectl -n onap logs dep-dcae-hv-ves-collector-6ddbb546c8-v5gv4 dcae-hv-ves-collector -f --tail=4

p.dcae.collectors.veshv.impl.socket.NettyTcpServer | 2018-09-27T10:00:39.020Z | INFO | Handling connection from /10.42.0.1:56454 | | reactor-tcp-server-epoll-14

p.dcae.collectors.veshv.impl.socket.NettyTcpServer | 2018-09-27T10:01:39.028Z | INFO | Idle timeout of 60 s reached. Closing connection from /10.42.0.1:56454... | | reactor-tcp-server-epoll-14

p.dcae.collectors.veshv.impl.socket.NettyTcpServer | 2018-09-27T10:01:39.029Z | INFO | Connection from /10.42.0.1:56454 has been closed | | reactor-tcp-server-epoll-14

p.dcae.collectors.veshv.impl.socket.NettyTcpServer | 2018-09-27T10:01:39.029Z | DEBUG | Channel (/10.42.0.1:56454) closed successfully. | | reactor-tcp-server-epoll-14

- Check the Kafka topic content:

Casablanca

kafkacat -C -b localhost:9092 -t HV_VES_PERF3GPP -D "" -o -1 -c 1

(Raw protobuf output. Non-printable bytes are shown as ?; readable strings include sample-version, perf3gpp, perf3GPP22, sample-event-name, sample-event-type, the other sample-* header fields, UTC+02:00, 7.0.2 and test test test.)

Dublin onward

kafkacat -C -b message-router-kafka:9092 -t HV_VES_PERF3GPP -X security.protocol=SASL_PLAINTEXT -X sasl.mechanisms=PLAIN -X sasl.username=admin -X sasl.password=admin_secret -D "" -o -1 -c 1

(Same raw protobuf output as for Casablanca.)

- Decode the message from the Kafka topic:

Casablanca

kafkacat -C -b localhost:9092 -t HV_VES_PERF3GPP -D "" -o -1 -c 1 | protoc --decode_raw

1 {

1: "sample-version"

2: "perf3gpp"

3: 1

4: 1

5: "perf3GPP22"

6: "sample-event-name"

7: "sample-event-type"

8: 1539263857

9: 1539263857

10: "sample-nf-naming-code"

11: "sample-nfc-naming-code"

12: "sample-nf-vendor-name"

13: "sample-reporting-entity-id"

14: "sample-reporting-entity-name"

15: "sample-source-id"

16: "sample-xnf-name"

17: "UTC+02:00"

18: "7.0.2"

}

2: "test test test"

Dublin onward

kafkacat -C -b message-router-kafka:9092 -t HV_VES_PERF3GPP -X security.protocol=SASL_PLAINTEXT -X sasl.mechanisms=PLAIN -X sasl.username=admin -X sasl.password=admin_secret -D "" -o -1 -c 1 | protoc --decode_raw

1 {

1: "sample-version"

2: "perf3gpp"

3: 1

4: 1

5: "perf3GPP22"

6: "sample-event-name"

7: "sample-event-type"

8: 1539263857

9: 1539263857

10: "sample-nf-naming-code"

11: "sample-nfc-naming-code"

12: "sample-nf-vendor-name"

13: "sample-reporting-entity-id"

14: "sample-reporting-entity-name"

15: "sample-source-id"

16: "sample-xnf-name"

17: "UTC+02:00"

18: "7.0.2"

}

2: "test test test"
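protoc --decode_raw can print the message without the .proto file because every protobuf field starts with a varint key that holds the field number and the wire type. The Python sketch below shows the same idea for the two wire types that appear in VesEvent, varint (0) and length-delimited (2). It is a simplified illustration, not a replacement for protoc: it does not guess whether a length-delimited value is a string or a nested message, so the caller decodes field 1 (commonEventHeader) a second time. The function names and the short sample message are hypothetical.

```python
from typing import List, Tuple, Union

Field = Tuple[int, Union[int, bytes]]


def read_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, next position)."""
    result = shift = 0
    while True:
        if pos >= len(buf):
            raise ValueError("truncated varint")
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: this was the last byte of the varint
            return result, pos
        shift += 7


def decode_raw(buf: bytes) -> List[Field]:
    """Split a protobuf message into (field number, value) pairs.

    Only wire types 0 (varint) and 2 (length-delimited) are supported; a
    length-delimited value is returned as bytes and may itself be a message.
    """
    fields: List[Field] = []
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:
            value, pos = read_varint(buf, pos)
            fields.append((number, value))
        elif wire_type == 2:
            length, pos = read_varint(buf, pos)
            if pos + length > len(buf):
                raise ValueError(f"field {number} is truncated")
            fields.append((number, buf[pos:pos + length]))
            pos += length
        else:
            raise ValueError(f"unsupported wire type {wire_type} in field {number}")
    return fields


if __name__ == "__main__":
    # Hypothetical short sample using the same encoding as the HV-VES event:
    # header field 1 = "sample-version", field 8 = 1539263857; event field 2 = "test test test"
    header = b"\x0a\x0esample-version\x40\xf1\x9a\xfd\xdd\x05"
    message = b"\x0a" + bytes([len(header)]) + header + b"\x12\x0etest test test"
    for number, value in decode_raw(message):
        print(number, decode_raw(value) if number == 1 else value)
```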

HV-VES xNF message simulation from shell

- Prepare the HV-VES VesEvent message in hex dump format. The event, written in protobuf text format in the hvves_event file:

cd ~/hv-ves_sim/proto ; cat hvves_event

commonEventHeader: {

version: "sample-version"

eventName: "sample-event-name"

domain: "perf3gpp"

eventId: "perf3GPP22"

eventType: "sample-event-type"

nfcNamingCode: "sample-nfc-naming-code"

nfNamingCode: "sample-nf-naming-code"

nfVendorName: "sample-nf-vendor-name"

sourceId: "sample-source-id"

sourceName: "sample-xnf-name"

reportingEntityId: "sample-reporting-entity-id"

reportingEntityName: "sample-reporting-entity-name"

priority: 1

startEpochMicrosec: 1539263857

lastEpochMicrosec: 1539263857

timeZoneOffset: "UTC+02:00"

sequence: 1

vesEventListenerVersion: "7.0.2"

}

eventFields: "test test test"

Encode the event with protoc and convert the binary result into \x-escaped hex bytes:

cd ~/hv-ves_sim/proto ; echo -n "\x`cat hvves_event | protoc -I=/root/hv-ves_sim/proto VesEvent.proto --encode=VesEvent | xxd -p -c 1000 | grep -o .. | xargs echo -n | sed 's/ /\\\x/g'`"

\x0a\x94\x02\x0a\x0e\x73\x61\x6d\x70\x6c\x65\x2d\x76\x65\x72\x73\x69\x6f\x6e\x12\x08\x70\x65\x72\x66\x33\x67\x70\x70\x18\x01\x20\x01\x2a\x0a\x70\x65\x72\x66\x33\x47\x50\x50\x32\x32\x32\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x6e\x61\x6d\x65\x3a\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x74\x79\x70\x65\x40\xf1\x9a\xfd\xdd\x05\x48\xf1\x9a\xfd\xdd\x05\x52\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x5a\x16\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x63\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x62\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x76\x65\x6e\x64\x6f\x72\x2d\x6e\x61\x6d\x65\x6a\x1a\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x69\x64\x72\x1c\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x6e\x61\x6d\x65\x7a\x10\x73\x61\x6d\x70\x6c\x65\x2d\x73\x6f\x75\x72\x63\x65\x2d\x69\x64\x82\x01\x0f\x73\x61\x6d\x70\x6c\x65\x2d\x78\x6e\x66\x2d\x6e\x61\x6d\x65\x8a\x01\x09\x55\x54\x43\x2b\x30\x32\x3a\x30\x30\x92\x01\x05\x37\x2e\x30\x2e\x32\x12\x0e\x74\x65\x73\x74\x20\x74\x65\x73\x74\x20\x74\x65\x73\x74
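To see where these bytes come from, the Python sketch below re-creates the same payload by hand using the protobuf wire format: each field is a varint key (field_number << 3 | wire_type) followed either by a varint value or by a length-prefixed byte string, and commonEventHeader is itself a length-prefixed nested message. The field numbers are taken from the protoc --decode_raw output shown earlier; which of fields 3 and 4 is priority and which is sequence is not confirmed here (both are 1 in this sample, so the bytes are the same either way). The helper names are hypothetical and this is not the HV-VES or protobuf library API; in practice protoc or generated classes are the safer choice.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint (low 7 bits first)."""
    if value < 0:
        raise ValueError("only non-negative values are supported")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def varint_field(number: int, value: int) -> bytes:
    """Wire type 0: key followed by the varint value."""
    return encode_varint(number << 3 | 0) + encode_varint(value)


def bytes_field(number: int, data: bytes) -> bytes:
    """Wire type 2: key, varint length, then the raw bytes (strings, nested messages)."""
    return encode_varint(number << 3 | 2) + encode_varint(len(data)) + data


def string_field(number: int, text: str) -> bytes:
    return bytes_field(number, text.encode("utf-8"))


def encode_sample_ves_event() -> bytes:
    """Hand-encode the hvves_event sample; field numbers follow the decode_raw output."""
    header = b"".join([
        string_field(1, "sample-version"),                 # version
        string_field(2, "perf3gpp"),                       # domain
        varint_field(3, 1),                                # priority / sequence (both 1 here)
        varint_field(4, 1),                                # priority / sequence (both 1 here)
        string_field(5, "perf3GPP22"),                     # eventId
        string_field(6, "sample-event-name"),              # eventName
        string_field(7, "sample-event-type"),              # eventType
        varint_field(8, 1539263857),                       # startEpochMicrosec
        varint_field(9, 1539263857),                       # lastEpochMicrosec
        string_field(10, "sample-nf-naming-code"),         # nfNamingCode
        string_field(11, "sample-nfc-naming-code"),        # nfcNamingCode
        string_field(12, "sample-nf-vendor-name"),         # nfVendorName
        string_field(13, "sample-reporting-entity-id"),    # reportingEntityId
        string_field(14, "sample-reporting-entity-name"),  # reportingEntityName
        string_field(15, "sample-source-id"),              # sourceId
        string_field(16, "sample-xnf-name"),               # sourceName
        string_field(17, "UTC+02:00"),                     # timeZoneOffset
        string_field(18, "7.0.2"),                         # vesEventListenerVersion
    ])
    # VesEvent: field 1 = commonEventHeader (nested message), field 2 = eventFields
    return bytes_field(1, header) + bytes_field(2, b"test test test")


if __name__ == "__main__":
    payload = encode_sample_ves_event()
    # Same \x-escaped form as produced by the shell pipeline
    print("".join(f"\\x{b:02x}" for b in payload))
    print(len(payload), "bytes")
```

The leading \x0a\x94\x02 is field 1 with wire type 2 followed by the varint 276 (0x14 + 2 * 128), the length of the nested header; 1539263857 becomes the five bytes \xf1\x9a\xfd\xdd\x05.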

- Wrap the above VesEvent message in the Wire Frame Protocol (WFP) structure. The format is documented in the KotlinDoc of wire_frame.kt: https://gerrit.onap.org/r/gitweb?p=dcaegen2/collectors/hv-ves.git;a=blob_plain;f=sources/hv-collector-domain/src/main/kotlin/org/onap/dcae/collectors/veshv/domain/wire_frame.kt;hb=refs/heads/master

\xaa\x01\x00\x00\x00\x00\x00\x01\x00\x00\x01\x27\x0a\x94\x02\x0a\x0e\x73\x61\x6d\x70\x6c\x65\x2d\x76\x65\x72\x73\x69\x6f\x6e\x12\x08\x70\x65\x72\x66\x33\x67\x70\x70\x18\x01\x20\x01\x2a\x0a\x70\x65\x72\x66\x33\x47\x50\x50\x32\x32\x32\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x6e\x61\x6d\x65\x3a\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x74\x79\x70\x65\x40\xf1\x9a\xfd\xdd\x05\x48\xf1\x9a\xfd\xdd\x05\x52\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x5a\x16\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x63\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x62\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x76\x65\x6e\x64\x6f\x72\x2d\x6e\x61\x6d\x65\x6a\x1a\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x69\x64\x72\x1c\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x6e\x61\x6d\x65\x7a\x10\x73\x61\x6d\x70\x6c\x65\x2d\x73\x6f\x75\x72\x63\x65\x2d\x69\x64\x82\x01\x0f\x73\x61\x6d\x70\x6c\x65\x2d\x78\x6e\x66\x2d\x6e\x61\x6d\x65\x8a\x01\x09\x55\x54\x43\x2b\x30\x32\x3a\x30\x30\x92\x01\x05\x37\x2e\x30\x2e\x32\x12\x0e\x74\x65\x73\x74\x20\x74\x65\x73\x74\x20\x74\x65\x73\x74
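The 12 bytes in front of the VesEvent payload form the WFP header. Read against the wire_frame.kt documentation linked above, they break down as: magic byte 0xAA, major version 1, minor version 0, three reserved zero bytes, a 2-byte payload type (0x0001, which we assume denotes a protobuf payload) and a 4-byte big-endian payload length (0x00000127 = 295, the size of the VesEvent bytes). The Python sketch below builds that header with struct; the constant and function names are hypothetical, and the layout should be checked against the KotlinDoc of the HV-VES version in use.

```python
import struct

WFP_MAGIC = 0xAA
WFP_VERSION_MAJOR = 1
WFP_VERSION_MINOR = 0
PAYLOAD_TYPE_PROTOBUF = 0x0001  # assumption: payload type value used by the sample frame

# Big-endian, no padding: magic, major, minor (1 byte each), 3 reserved zero bytes,
# payload type (2 bytes), payload length (4 bytes) -> 12 bytes in total
WFP_HEADER = struct.Struct(">BBB3xHI")


def wrap_in_wire_frame(payload: bytes, payload_type: int = PAYLOAD_TYPE_PROTOBUF) -> bytes:
    """Prefix a serialized VesEvent with a WFP header (hypothetical helper)."""
    header = WFP_HEADER.pack(WFP_MAGIC, WFP_VERSION_MAJOR, WFP_VERSION_MINOR,
                             payload_type, len(payload))
    return header + payload


def to_shell_escapes(data: bytes) -> str:
    """Render bytes in the \\xNN form accepted by echo -ne."""
    return "".join(f"\\x{byte:02x}" for byte in data)


if __name__ == "__main__":
    dummy_payload = bytes(295)  # placeholder with the same length as the sample VesEvent
    frame = wrap_in_wire_frame(dummy_payload)
    print(to_shell_escapes(frame[:WFP_HEADER.size]))
```

With a 295-byte payload the printed header matches the first 12 bytes of the frame above.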

- Send the WFP message to HV-VES:

echo -ne "\xaa\x01\x00\x00\x00\x00\x00\x01\x00\x00\x01\x27\x0a\x94\x02\x0a\x0e\x73\x61\x6d\x70\x6c\x65\x2d\x76\x65\x72\x73\x69\x6f\x6e\x12\x08\x70\x65\x72\x66\x33\x67\x70\x70\x18\x01\x20\x01\x2a\x0a\x70\x65\x72\x66\x33\x47\x50\x50\x32\x32\x32\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x6e\x61\x6d\x65\x3a\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x74\x79\x70\x65\x40\xf1\x9a\xfd\xdd\x05\x48\xf1\x9a\xfd\xdd\x05\x52\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x5a\x16\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x63\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x62\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x76\x65\x6e\x64\x6f\x72\x2d\x6e\x61\x6d\x65\x6a\x1a\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x69\x64\x72\x1c\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x6e\x61\x6d\x65\x7a\x10\x73\x61\x6d\x70\x6c\x65\x2d\x73\x6f\x75\x72\x63\x65\x2d\x69\x64\x82\x01\x0f\x73\x61\x6d\x70\x6c\x65\x2d\x78\x6e\x66\x2d\x6e\x61\x6d\x65\x8a\x01\x09\x55\x54\x43\x2b\x30\x32\x3a\x30\x30\x92\x01\x05\x37\x2e\x30\x2e\x32\x12\x0e\x74\x65\x73\x74\x20\x74\x65\x73\x74\x20\x74\x65\x73\x74" | netcat k8s_node_ip 30222
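The echo -ne | netcat pipeline only writes the raw frame bytes to the collector's TCP port. A rough Python equivalent is sketched below, assuming the same Kubernetes node IP and 30222 port as in the command; escaped_to_bytes and send_frame are hypothetical helper names.

```python
import socket


def escaped_to_bytes(escaped: str) -> bytes:
    """Turn a '\\xaa\\x01...' string (as passed to echo -ne) into raw bytes."""
    return bytes.fromhex(escaped.replace("\\x", ""))


def send_frame(host: str, port: int, frame: bytes, timeout: float = 10.0) -> None:
    """Open a TCP connection, write the whole frame, then half-close to signal end of data."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(frame)
        sock.shutdown(socket.SHUT_WR)
```

For example, send_frame(node_ip, 30222, escaped_to_bytes(r"\xaa\x01...")) with the full escaped frame from above, where node_ip stands for the same placeholder as k8s_node_ip in the netcat command.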

- Decode the message from the Kafka topic:

Casablanca

kafkacat -C -b localhost:9092 -t HV_VES_PERF3GPP -D "" -o -1 -c 1 | protoc --decode_raw

1 {

1: "sample-version"

2: "perf3gpp"

3: 1

4: 1

5: "perf3GPP22"

6: "sample-event-name"

7: "sample-event-type"

8: 1539263857

9: 1539263857

10: "sample-nf-naming-code"

11: "sample-nfc-naming-code"

12: "sample-nf-vendor-name"

13: "sample-reporting-entity-id"

14: "sample-reporting-entity-name"

15: "sample-source-id"

16: "sample-xnf-name"

17: "UTC+02:00"

18: "7.0.2"

}

2: "test test test"

Dublin onward

kafkacat -C -b message-router-kafka:9092 -t HV_VES_PERF3GPP -X security.protocol=SASL_PLAINTEXT -X sasl.mechanisms=PLAIN -X sasl.username=admin -X sasl.password=admin_secret -D "" -o -1 -c 1 | protoc --decode_raw

1 {

1: "sample-version"

2: "perf3gpp"

3: 1

4: 1

5: "perf3GPP22"

6: "sample-event-name"

7: "sample-event-type"

8: 1539263857

9: 1539263857

10: "sample-nf-naming-code"

11: "sample-nfc-naming-code"

12: "sample-nf-vendor-name"

13: "sample-reporting-entity-id"

14: "sample-reporting-entity-name"

15: "sample-source-id"

16: "sample-xnf-name"

17: "UTC+02:00"

18: "7.0.2"

}

2: "test test test"

HV-VES with SSL enabled

Casablanca

Generate test PKCS #12 files using the gen-certs.sh script (https://gerrit.onap.org/r/gitweb?p=dcaegen2/collectors/hv-ves.git;a=blob_plain;f=tools/ssl/gen-certs.sh;hb=refs/heads/master) and store them in the Kubernetes NFS directory /dockerdata-nfs/ssl.

Edit the HV-VES deployment (kubectl -n onap edit deployment/dep-dcae-hv-ves-collector): remove the VESHV_SSL_DISABLE flag and add the VESHV_TRUST_STORE, VESHV_KEY_STORE, VESHV_TRUST_STORE_PASSWORD and VESHV_KEY_STORE_PASSWORD variables.

Add an entry that mounts node:/dockerdata-nfs/ssl at container:/etc/ves-hv. The edited deployment looks like this:

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "4"
  creationTimestamp: 2018-10-04T15:15:21Z
  generation: 4
  labels:
    app: dcae-hv-ves-collector
    cfydeployment: hv-ves
    cfynode: hv-ves
    cfynodeinstance: hv-ves_eipq6a
    k8sdeployment: dep-dcae-hv-ves-collector
  name: dep-dcae-hv-ves-collector
  namespace: onap
  resourceVersion: "1452331"
  selfLink: /apis/extensions/v1beta1/namespaces/onap/deployments/dep-dcae-hv-ves-collector
  uid: 4f6c9488-c7e8-11e8-b920-026901117392
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: dcae-hv-ves-collector
      cfydeployment: hv-ves
      cfynode: hv-ves
      cfynodeinstance: hv-ves_eipq6a
      k8sdeployment: dep-dcae-hv-ves-collector
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: dcae-hv-ves-collector
        cfydeployment: hv-ves
        cfynode: hv-ves
        cfynodeinstance: hv-ves_eipq6a
        k8sdeployment: dep-dcae-hv-ves-collector
    spec:
      containers:
      - env:
        - name: CONSUL_HOST
          value: consul-server.onap
        - name: VESHV_KEY_STORE_PASSWORD
          value: onaponap
        - name: VESHV_TRUST_STORE_PASSWORD
          value: onaponap
        - name: VESHV_KEY_STORE
          value: /etc/ves-hv/server.p12
        - name: VESHV_TRUST_STORE
          value: /etc/ves-hv/trust.p12
        - name: VESHV_CONFIG_URL
          value: http://consul-server.onap:8500/v1/kv/dcae-hv-ves-collector
        - name: VESHV_LISTEN_PORT
          value: "6061"
        - name: CONFIG_BINDING_SERVICE
          value: config-binding-service
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        image: nexus3.onap.org:10001/onap/org.onap.dcaegen2.collectors.hv-ves.hv-collector-main:1.0.0-SNAPSHOT
        imagePullPolicy: IfNotPresent
        name: dcae-hv-ves-collector
        ports:
        - containerPort: 6061
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /opt/app/HvVesCollector/logs
          name: component-log
        - mountPath: /etc/ves-hv
          name: ssldir
      - env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        image: docker.elastic.co/beats/filebeat:5.5.0
        imagePullPolicy: IfNotPresent
        name: filebeat
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /var/log/onap/dcae-hv-ves-collector
          name: component-log
        - mountPath: /usr/share/filebeat/data
          name: filebeat-data
        - mountPath: /usr/share/filebeat/filebeat.yml
          name: filebeat-conf
          subPath: filebeat.yml
      dnsPolicy: ClusterFirst
      hostname: dcae-hv-ves-collector
      imagePullSecrets:
      - name: onap-docker-registry-key
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - emptyDir: {}
        name: component-log
      - emptyDir: {}
        name: filebeat-data
      - configMap:
          defaultMode: 420
          name: dcae-filebeat-configmap
        name: filebeat-conf
      - hostPath:
          path: /dockerdata-nfs/ssl
          type: ""
        name: ssldir
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: 2018-10-04T15:15:21Z
    lastUpdateTime: 2018-10-04T15:15:21Z
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: 2018-10-04T15:15:21Z
    lastUpdateTime: 2018-10-05T14:10:15Z
    message: ReplicaSet "dep-dcae-hv-ves-collector-7986d777dc" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 4
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1

Deploy the HV-VES simulator in onap mode with TLS enabled using the Heat template: https://gerrit.onap.org/r/gitweb?p=integration.git;a=blob_plain;f=test/mocks/hvvessimulator/hvves_sim.yaml;hb=HEAD

Dublin onward

Prepare the CA, server and client private keys and certificate signing requests (CSRs):

openssl genrsa -out ca.key 2048

openssl req -new -x509 -days 36500 -key ca.key -out ca.pem -subj /CN=dcae-hv-ves-ca

openssl genrsa -out server.key 2048

openssl req -new -key server.key -out server.csr -subj /CN=dcae-hv-ves-collector

openssl genrsa -out client.key 2048

openssl req -new -key client.key -out client.csr -subj /CN=dcae-hv-ves-client

Sign the server and client certificates with the CA. Certificates issued by the same CA should carry distinct serial numbers:

openssl x509 -req -days 36500 -in server.csr -CA ca.pem -CAkey ca.key -out server.pem -set_serial 00

openssl x509 -req -days 36500 -in client.csr -CA ca.pem -CAkey ca.key -out client.pem -set_serial 01

Create passwordless PKCS #12 files for the CA and server certificates:

openssl pkcs12 -export -out ca.p12 -inkey ca.key -in ca.pem -passout pass:

openssl pkcs12 -export -out server.p12 -inkey server.key -in server.pem -passout pass:

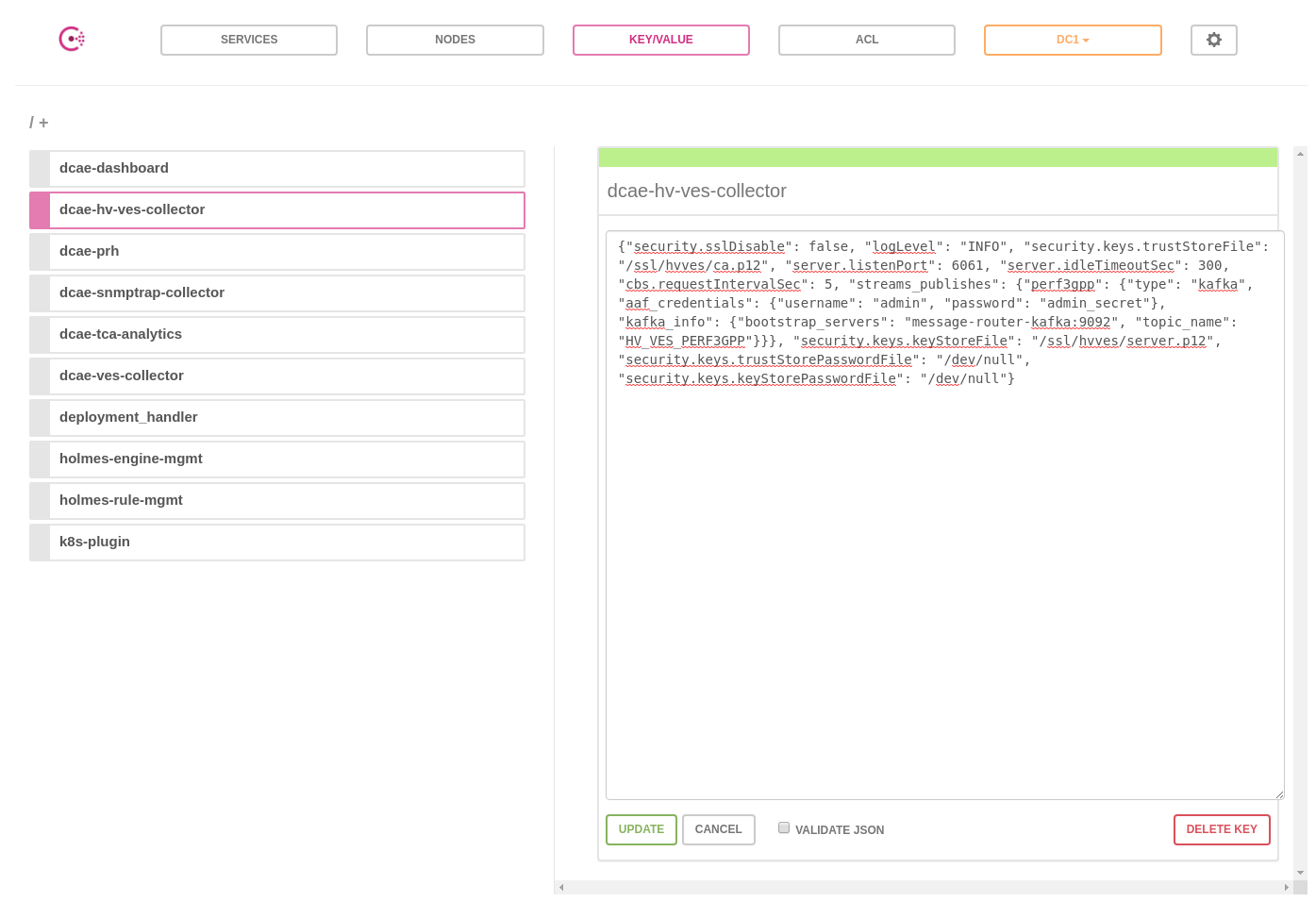

Enable the SSL feature of the HV-VES collector via the Consul UI:

http://<node_ip>:30270/ui/#/dc1/kv/dcae-hv-ves-collector/edit

Combine the client private key and public certificate into a single PEM file:

cat client.key client.pem > client-all.pem

Send a message to the HV-VES collector using the openssl s_client command:

echo -ne "\xaa\x01\x00\x00\x00\x00\x00\x01\x00\x00\x01\x27\x0a\x94\x02\x0a\x0e\x73\x61\x6d\x70\x6c\x65\x2d\x76\x65\x72\x73\x69\x6f\x6e\x12\x08\x70\x65\x72\x66\x33\x67\x70\x70\x18\x01\x20\x01\x2a\x0a\x70\x65\x72\x66\x33\x47\x50\x50\x32\x32\x32\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x6e\x61\x6d\x65\x3a\x11\x73\x61\x6d\x70\x6c\x65\x2d\x65\x76\x65\x6e\x74\x2d\x74\x79\x70\x65\x40\xf1\x9a\xfd\xdd\x05\x48\xf1\x9a\xfd\xdd\x05\x52\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x5a\x16\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x63\x2d\x6e\x61\x6d\x69\x6e\x67\x2d\x63\x6f\x64\x65\x62\x15\x73\x61\x6d\x70\x6c\x65\x2d\x6e\x66\x2d\x76\x65\x6e\x64\x6f\x72\x2d\x6e\x61\x6d\x65\x6a\x1a\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x69\x64\x72\x1c\x73\x61\x6d\x70\x6c\x65\x2d\x72\x65\x70\x6f\x72\x74\x69\x6e\x67\x2d\x65\x6e\x74\x69\x74\x79\x2d\x6e\x61\x6d\x65\x7a\x10\x73\x61\x6d\x70\x6c\x65\x2d\x73\x6f\x75\x72\x63\x65\x2d\x69\x64\x82\x01\x0f\x73\x61\x6d\x70\x6c\x65\x2d\x78\x6e\x66\x2d\x6e\x61\x6d\x65\x8a\x01\x09\x55\x54\x43\x2b\x30\x32\x3a\x30\x30\x92\x01\x05\x37\x2e\x30\x2e\x32\x12\x0e\x74\x65\x73\x74\x20\x74\x65\x73\x74\x20\x74\x65\x73\x74" | openssl s_client -connect dcae-hv-ves-collector:30222 -CAfile ca.pem -msg -state -cert client-all.pem
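A rough Python equivalent of the s_client call is sketched below, assuming the ca.pem and client-all.pem files created above and the same host and port; make_client_context and send_frame_tls are hypothetical helper names. openssl s_client does not check the server hostname unless -verify_hostname is given, and the test certificates carry only a CN, so this sketch verifies the server certificate chain against ca.pem but also skips the hostname check. That is acceptable for a lab setup, not for production.

```python
import socket
import ssl


def make_client_context(ca_file: str, client_pem: str) -> ssl.SSLContext:
    """TLS client context that trusts ca_file and presents the client certificate."""
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Mirror openssl s_client: verify the certificate chain but do not match the hostname
    context.check_hostname = False
    # client-all.pem contains both the private key and the certificate
    context.load_cert_chain(certfile=client_pem)
    return context


def send_frame_tls(host: str, port: int, frame: bytes, context: ssl.SSLContext,
                   timeout: float = 10.0) -> None:
    """Send a WFP frame over a mutually authenticated TLS connection."""
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            tls_sock.sendall(frame)
```

For example, send_frame_tls("dcae-hv-ves-collector", 30222, frame, make_client_context("ca.pem", "client-all.pem")), where frame holds the WFP bytes shown above.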