If you already have a full ONAP (Frankfurt release) environment, you can use it to try out 5G network slicing.

To reduce ONAP's resource requirements, you can instead install a minimum-scope ONAP to test 5G network slicing.

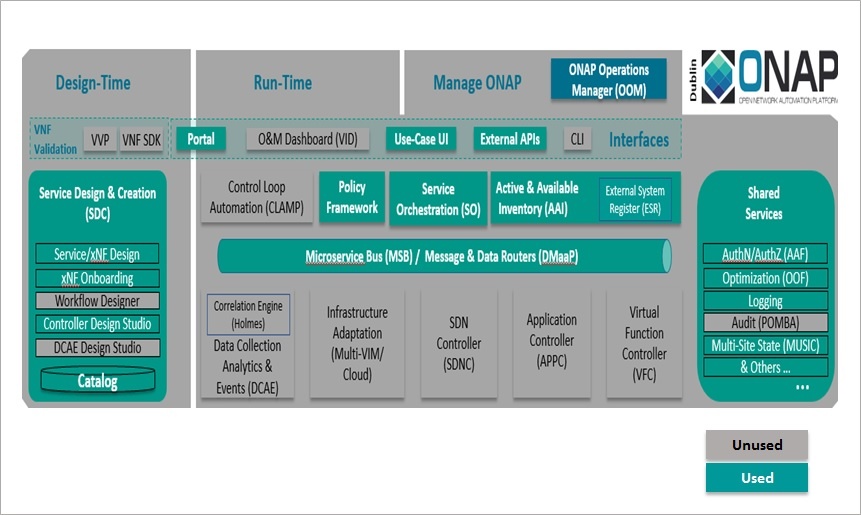

The 5G network slicing use case uses 17 of the 32 modules (refer to the oom repo: kubernetes/onap/resources/overrides/onap-5g-network-slicing.yaml), and within some of those modules certain charts are not used.

Please find the details:

| Module | Included in the customized version (Yes/No) | Removed charts (not used within the module) | Description |
|---|---|---|---|
| aaf | Yes | | |
| aai | Yes | | Required image versions: aai-traversal:1.6.3, aai-schema-service:1.6.8, aai-graphadmin:1.6.3 |
| appc | No | | |
| cassandra | Yes | | |
| cds | Yes | cds-command-executor | |
| clamp | No | | |
| cli | No | | |
| consul | No | | |
| contrib | No | | |
| dmaap | Yes | | |
| dcaegen2 | No | | |
| pnda | No | | |
| esr | Yes | | |
| log | Yes | | |
| sniro-emulator | No | | |
| oof | Yes | | Required image versions: optf-osdf:2.0.4, optf-has:2.0.4 |
| mariadb-galera | Yes | | |
| msb | Yes | | |
| multicloud | No | | |
| nbi | Yes | | Required image version: 6.0.3 |
| policy | Yes | | Required image version: policy-pe:1.6.4 |
| pomba | No | | |
| portal | Yes | | |
| robot | Yes | | |
| sdc | Yes | sdc-dcae-be; sdc-dcae-fe; sdc-dcae-dt; sdc-dcae-tosca-lab; sdc-wfd-be; sdc-wfd-fe | |
| sdnc | No | | |
| so | Yes | so-openstack-adapter; so-sdnc-adapter; so-vfc-adapter; so-vnfm-adapter; so-ve-vnfm-adapter | Required image version: 1.6.3 |
| uui | Yes | | Required image version: 3.0.4 |
| vfc | No | | |
| vid | No | | |
| vnfsdk | No | | |
| modeling | No | | |
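To cross-check the table against the override file, the enabled modules can be listed with a small script. This is only a sketch: it assumes each module is a top-level key with a two-space-indented "enabled: true" line beneath it, and the function name list_enabled_modules is made up for illustration. It is not a general YAML parser.

```shell
#!/bin/sh
# list_enabled_modules FILE
# Prints every top-level key of a Helm override file whose block contains
# a two-space-indented "enabled: true" line.
# Assumption: the override file follows that simple layout; deeper-nested
# "enabled:" lines (sub-charts) are deliberately ignored.
list_enabled_modules() {
  awk '
    # A top-level key starts in column 1, e.g. "aaf:".
    /^[A-Za-z0-9_-]+:/ { module = $1; sub(/:.*/, "", module); next }
    # Exactly two spaces of indentation means the flag belongs to that key.
    /^  enabled:[ \t]*true[ \t]*$/ && module != "" { print module; module = "" }
  ' "$1"
}
```

From the kubernetes directory, running `list_enabled_modules onap/resources/overrides/onap-5g-network-slicing.yaml | wc -l` should then report the number of modules the override file enables.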

Resource requirements:

| Option | CPU | Memory |
|---|---|---|
| Full ONAP | 112 | 224G |
| Customization Version | 64 | 128G |

The recommended component versions are as follows:

| Software | Version |
|---|---|
| Kubernetes | 1.13.5 |
| Helm | 2.12.3 |
| kubectl | 1.13.5 |
| Docker | 18.09.5 |
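Before installing, it may help to compare the locally installed tools against these recommended versions. The following is a minimal sketch, not part of the official procedure: the helper name check_version is hypothetical, and the version commands in the usage comments are assumed to print a string that contains an X.Y.Z version number.

```shell
#!/bin/sh
# Recommended versions, copied from the table above.
KUBECTL_RECOMMENDED="1.13.5"
HELM_RECOMMENDED="2.12.3"
DOCKER_RECOMMENDED="18.09.5"

# check_version NAME RECOMMENDED ACTUAL
# Extracts the first X.Y.Z number from ACTUAL (so "v2.12.3+geecf22f" or
# "Client: v2.12.3" both become "2.12.3") and compares it to RECOMMENDED.
check_version() {
  actual=$(printf '%s\n' "$3" | grep -Eo '[0-9]+\.[0-9]+\.[0-9]+' | head -n 1)
  if [ "$actual" = "$2" ]; then
    echo "$1 $actual OK"
  else
    echo "$1 $actual differs from recommended $2"
    return 1
  fi
}

# Example usage (the exact output format of each tool is an assumption):
#   check_version docker  "$DOCKER_RECOMMENDED"  "$(docker version --format '{{.Client.Version}}')"
#   check_version helm    "$HELM_RECOMMENDED"    "$(helm version --client --short)"
#   check_version kubectl "$KUBECTL_RECOMMENDED" "$(kubectl version --client --short)"
```

A mismatch is reported rather than treated as fatal, since other versions may also work.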

Installation steps:

For steps 1-3, please refer to the following link:

https://docs.onap.org/en/elalto/submodules/oom.git/docs/oom_setup_kubernetes_rancher.html

1. Install kubectl

2. Install helm

3. Set up NFS

4. Clone the OOM repository from ONAP Gerrit:

> git clone http://gerrit.onap.org/r/oom --recurse-submodules

5. Install the Helm plugins required to deploy ONAP:

> cd oom/kubernetes

> sudo cp -R ./helm/plugins/ ~/.helm

6. OOM does not currently support enabling or disabling individual charts through configuration, so remove the unused charts manually. We will work with the OOM team to make this configurable at the chart level.

Customize the Helm charts to suit the use case (from the kubernetes directory):

> rm -rf cds/charts/cds-command-executor/

> rm -rf sdc/charts/sdc-dcae-be/

> rm -rf sdc/charts/sdc-dcae-dt/

> rm -rf sdc/charts/sdc-dcae-fe/

> rm -rf sdc/charts/sdc-dcae-tosca-lab/

> rm -rf sdc/charts/sdc-wfd-be/

> rm -rf sdc/charts/sdc-wfd-fe/

> rm -rf so/charts/so-openstack-adapter/

> rm -rf so/charts/so-sdnc-adapter/

> rm -rf so/charts/so-vfc-adapter/

> rm -rf so/charts/so-vnfm-adapter/

> rm -rf so/charts/so-ve-vnfm-adapter/
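The removals in step 6 can also be scripted so that a chart that is already missing is reported instead of being silently ignored. This is a sketch only; the function name remove_unused_charts is made up for illustration, and the chart list is copied from the commands above.

```shell
#!/bin/sh
# remove_unused_charts KUBERNETES_DIR
# Deletes the sub-charts listed in step 6. Paths are relative to the
# oom/kubernetes directory, which is passed as the first argument.
remove_unused_charts() {
  base=$1
  for chart in \
    cds/charts/cds-command-executor \
    sdc/charts/sdc-dcae-be \
    sdc/charts/sdc-dcae-dt \
    sdc/charts/sdc-dcae-fe \
    sdc/charts/sdc-dcae-tosca-lab \
    sdc/charts/sdc-wfd-be \
    sdc/charts/sdc-wfd-fe \
    so/charts/so-openstack-adapter \
    so/charts/so-sdnc-adapter \
    so/charts/so-vfc-adapter \
    so/charts/so-vnfm-adapter \
    so/charts/so-ve-vnfm-adapter
  do
    if [ -d "$base/$chart" ]; then
      # ${base:?} aborts if base is empty, so this can never expand to "/...".
      rm -rf "${base:?}/$chart"
      echo "removed $chart"
    else
      echo "skipped $chart (not found)"
    fi
  done
}

# Example usage, from the kubernetes directory:
#   remove_unused_charts .
```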

7. Set up a local Helm server to serve the ONAP charts:

> helm serve &

> helm repo add local http://127.0.0.1:8879

8. Build a local Helm repository (from the kubernetes directory):

> make all &

9. Deploy the ONAP applications with this command:

> helm deploy dev local/onap --namespace onap -f onap/resources/overrides/onap-5g-network-slicing.yaml -f onap/resources/environments/public-cloud.yaml --set global.masterPassword=onap --verbose --timeout 2000 &

10. Check the pod status after installation:

> kubectl get pods -n onap
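With this many pods, it can be easier to print only the ones that still need attention. The filter below is a rough sketch that assumes the default column layout of the command above (NAME, READY, STATUS, RESTARTS, AGE); the function name not_ready_pods is hypothetical.

```shell
#!/bin/sh
# not_ready_pods
# Reads `kubectl get pods` output on stdin and prints NAME, READY and STATUS
# for every pod that is not Completed and is either not Running or has fewer
# ready containers than expected (READY column "x/y" with x != y).
not_ready_pods() {
  awk 'NR > 1 && $3 != "Completed" {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
       }'
}

# Example usage:
#   kubectl get pods -n onap | not_ready_pods
```

An empty result suggests that all pods are either running with every container ready or have completed.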

11. Run the health check:

> bash oom/kubernetes/robot/ete-k8s.sh onap health

15 Comments

yan yan

Where can I download the code for the F (Frankfurt) release? I would like to test it. Please advise.

QingJie Zhang

The F version has not been released yet. You can use the code from the master branch like this:

> git clone http://gerrit.onap.org/r/oom --recurse-submodules

yan yan

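So what this gives me is effectively a pre-release F version? When I checked, it still looked like the E (El Alto) release, hence my question.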

LIN MENG

Hi, may I ask which company you are from?

yan yan

chinasofti

cai pan

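Is there a recommended, detailed installation guide for Frankfurt release 6.0.0? The official installation procedure is almost impossible to get through. I have spent more than half a month on it and hit far too many pitfalls. The network environment in China is poor, so I rented an overseas VPS to install online, but many pods still fail to start. What should I do?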

Goutam Roy

Hi, I executed the following command to run ONAP 5G slicing:

>sudo helm deploy dev local/onap --namespace onap -f onap/resources/overrides/onap-5g-network-slicing.yaml -f onap/resources/overrides/environment.yaml -f onap/resources/overrides/openstack.yaml --timeout 1200 --verbose

I downloaded this code from the master branch.

But I am getting the following exception. Please help me solve it.

Release "dev-so" does not exist. Installing it now.

Error: render error in "so/templates/secret.yaml": template: so/templates/secret.yaml:16:3: executing "so/templates/secret.yaml" at <include "common.secret" .>: error calling include: template: so/charts/common/templates/_secret.tpl:376:15: executing "common.secret" at <include "common.secret._value" $valueDesc>: error calling include: template: so/charts/common/templates/_secret.tpl:75:8: executing "common.secret._value" at <include "common.createPassword" (dict "dot" $global "uid" $name)>: error calling include: template: so/charts/common/templates/_createPassword.tpl:63:13: executing "common.createPassword" at <include "common.masterPassword" $dot>: error calling include: template: so/charts/common/templates/_createPassword.tpl:36:7: executing "common.masterPassword" at <fail "masterPassword not provided">: error calling fail: masterPassword not provided

Release "dev-uui" does not exist. Installing it now.

NAME: dev-uui

LAST DEPLOYED: Wed Apr 29 20:37:18 2020

NAMESPACE: onap

STATUS: DEPLOYED

RESOURCES:

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

dev-uui-server-864f8fcc8-6l6dj 0/1 ContainerCreating 0 0s

dev-uui-server-864f8fcc8-6l6dj 0/1 ContainerCreating 0 0s

With Regards,

Goutam Roy.

QingJie Zhang

Hi Goutam Roy,

You should add the option "--set global.masterPassword=xxxx" to your command, where "xxxx" is the password you want to set.

Please refer to step 9.
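To catch this before a long deployment starts, the deploy arguments can be checked up front. This is only a sketch with a hypothetical helper name (has_master_password); it inspects --set arguments only, so a password supplied through a -f values file would not be detected.

```shell
#!/bin/sh
# has_master_password ARGS...
# Succeeds (exit status 0) if the argument list sets a non-empty
# global.masterPassword through "--set key=value" or "--set=key=value".
has_master_password() {
  prev=""
  for arg in "$@"; do
    case "$arg" in
      --set=*global.masterPassword=?*) return 0 ;;
    esac
    if [ "$prev" = "--set" ]; then
      case "$arg" in
        *global.masterPassword=?*) return 0 ;;
      esac
    fi
    prev=$arg
  done
  return 1
}

# Example usage: refuse to deploy when the option is missing.
#   deploy_onap() {
#     has_master_password "$@" || { echo "add --set global.masterPassword=..." >&2; return 1; }
#     helm deploy "$@"
#   }
```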

Goutam Roy

QingJie Zhang , Thank you.

Goutam Roy

Hi QingJie Zhang ,

Thank you for your help. Your suggestion solved the password issue, but after that I ran into one more problem.

I am getting the following exception:

[2020-04-30 13:50:30,817] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2020-04-30 13:50:47,692] ERROR Disk error while locking directory /var/lib/kafka/data (kafka.server.LogDirFailureChannel)

java.io.IOException: No locks available

at sun.nio.ch.FileDispatcherImpl.lock0(Native Method)

at sun.nio.ch.FileDispatcherImpl.lock(FileDispatcherImpl.java:94)

at sun.nio.ch.FileChannelImpl.tryLock(FileChannelImpl.java:1114)

at java.nio.channels.FileChannel.tryLock(FileChannel.java:1155)

at kafka.utils.FileLock.tryLock(FileLock.scala:54)

at kafka.log.LogManager.$anonfun$lockLogDirs$1(LogManager.scala:239)

at scala.collection.TraversableLike.$anonfun$flatMap$1(TraversableLike.scala:244)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at scala.collection.TraversableLike.flatMap(TraversableLike.scala:244)

at scala.collection.TraversableLike.flatMap$(TraversableLike.scala:241)

at scala.collection.AbstractTraversable.flatMap(Traversable.scala:108)

at kafka.log.LogManager.lockLogDirs(LogManager.scala:236)

at kafka.log.LogManager.<init>(LogManager.scala:97)

at kafka.log.LogManager$.apply(LogManager.scala:1022)

at kafka.server.KafkaServer.startup(KafkaServer.scala:242)

at io.confluent.support.metrics.SupportedServerStartable.startup(SupportedServerStartable.java:114)

at io.confluent.support.metrics.SupportedKafka.main(SupportedKafka.java:66)

[2020-04-30 13:50:47,699] INFO Loading logs. (kafka.log.LogManager)

[2020-04-30 13:50:47,708] INFO Logs loading complete in 9 ms. (kafka.log.LogManager)

[2020-04-30 13:50:47,723] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2020-04-30 13:50:47,726] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2020-04-30 13:50:47,728] INFO Starting the log cleaner (kafka.log.LogCleaner)

[2020-04-30 13:50:47,800] INFO [kafka-log-cleaner-thread-0]: Starting (kafka.log.LogCleaner)

[2020-04-30 13:50:48,050] INFO Awaiting socket connections on 0.0.0.0:9091. (kafka.network.Acceptor)

[2020-04-30 13:50:48,085] INFO Successfully logged in. (org.apache.kafka.common.security.authenticator.AbstractLogin)

[2020-04-30 13:50:48,120] INFO [SocketServer brokerId=0] Created data-plane acceptor and processors for endpoint : EndPoint(0.0.0.0,9091,ListenerName(EXTERNAL_SASL_PLAINTEXT),SASL_PLAINTEXT) (kafka.network.SocketServer)

[2020-04-30 13:50:48,120] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.Acceptor)

[2020-04-30 13:50:48,128] INFO [SocketServer brokerId=0] Created data-plane acceptor and processors for endpoint : EndPoint(0.0.0.0,9092,ListenerName(INTERNAL_SASL_PLAINTEXT),SASL_PLAINTEXT) (kafka.network.SocketServer)

[2020-04-30 13:50:48,130] INFO [SocketServer brokerId=0] Started 2 acceptor threads for data-plane (kafka.network.SocketServer)

[2020-04-30 13:50:48,170] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2020-04-30 13:50:48,170] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2020-04-30 13:50:48,171] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2020-04-30 13:50:48,172] INFO [ExpirationReaper-0-ElectPreferredLeader]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2020-04-30 13:50:48,191] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2020-04-30 13:50:48,192] INFO [ReplicaManager broker=0] Stopping serving replicas in dir /var/lib/kafka/data (kafka.server.ReplicaManager)

[2020-04-30 13:50:48,206] INFO [ReplicaManager broker=0] Broker 0 stopped fetcher for partitions and stopped moving logs for partitions because they are in the failed log directory /var/lib/kafka/data. (kafka.server.ReplicaManager)

[2020-04-30 13:50:48,206] INFO Stopping serving logs in dir /var/lib/kafka/data (kafka.log.LogManager)

[2020-04-30 13:50:48,208] ERROR Shutdown broker because all log dirs in /var/lib/kafka/data have failed (kafka.log.LogManager)

I have provided sufficient space for onap installation.

Please help me to solve this problem

With Regards,

Goutam Roy.

QingJie Zhang

Goutam Roy

Sorry for the late reply. I don't know how to fix it; maybe you should ask the community.

Goutam Roy

Hi,

I took the El Alto OOM code and tried to deploy ONAP for slicing.

I am facing the following dmaap problem:

pod: dev-dmaap-dmaap-dr-node-0 and dev-dmaap-message-router-0

dev-dmaap-dmaap-dr-node- : Liveness probe failed: dial tcp 192.168.135.49:8080: connect: connection refused

Kubernetes version: v1.15.2

Helm version: v2.16.6

Code : elalto

Git: https://gerrit.onap.org/r/oom

OS: 18.04.1-Ubuntu

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m45s default-scheduler Successfully assigned onap/dev-dmaap-dmaap-dr-node-0 to node3

Normal Pulling 2m43s kubelet, node3 Pulling image "oomk8s/readiness-check:2.0.0"

Normal Pulled 2m40s kubelet, node3 Successfully pulled image "oomk8s/readiness-check:2.0.0"

Normal Created 2m40s kubelet, node3 Created container dmaap-dr-node-readiness

Normal Started 2m40s kubelet, node3 Started container dmaap-dr-node-readiness

Normal Pulling 2m38s kubelet, node3 Pulling image "docker.io/busybox:1.30"

Normal Pulled 2m34s kubelet, node3 Successfully pulled image "docker.io/busybox:1.30"

Normal Created 2m34s kubelet, node3 Created container dmaap-dr-node-permission-fixer

Normal Started 2m34s kubelet, node3 Started container dmaap-dr-node-permission-fixer

Normal Pulling 2m33s kubelet, node3 Pulling image "nexus3.onap.org:10001/onap/dmaap/datarouter-node:2.1.2"

Normal Created 2m30s kubelet, node3 Created container dmaap-dr-node

Normal Pulled 2m30s kubelet, node3 Successfully pulled image "nexus3.onap.org:10001/onap/dmaap/datarouter-node:2.1.2"

Normal Started 2m29s kubelet, node3 Started container dmaap-dr-node

Normal Pulling 2m29s kubelet, node3 Pulling image "docker.elastic.co/beats/filebeat:5.5.0"

Normal Pulled 2m26s kubelet, node3 Successfully pulled image "docker.elastic.co/beats/filebeat:5.5.0"

Normal Created 2m26s kubelet, node3 Created container dmaap-dr-node-filebeat-onap

Normal Started 2m26s kubelet, node3 Started container dmaap-dr-node-filebeat-onap

Warning Unhealthy 8s (x3 over 28s) kubelet, node3 Readiness probe failed: dial tcp 192.168.135.49:8080: connect: connection refused

Warning Unhealthy 5s (x3 over 25s) kubelet, node3 Liveness probe failed: dial tcp 192.168.135.49:8080: connect: connection refused

Normal Killing 5s kubelet, node3 Container dmaap-dr-node failed liveness probe, will be restarted

# kubectl logs dev-dmaap-dmaap-dr-node-0 -n onap -c dmaap-dr-node-readiness

2020-07-07 08:20:40,319 - INFO - Checking if dmaap-dr-prov is ready

2020-07-07 08:20:40,829 - INFO - dmaap-dr-prov is ready!

# kubectl logs dev-dmaap-dmaap-dr-node-0 -n onap -c dmaap-dr-node-permission-fixer

With Regards

Goutam Roy.

Goutam Roy

I have some more log information.

at sun.security.x509.CertificateValidity.valid(CertificateValidity.java:274)

at sun.security.x509.X509CertImpl.checkValidity(X509CertImpl.java:629)

at sun.security.provider.certpath.BasicChecker.verifyValidity(BasicChecker.java:190)

at sun.security.provider.certpath.BasicChecker.check(BasicChecker.java:144)

at sun.security.provider.certpath.PKIXMasterCertPathValidator.validate(PKIXMasterCertPathValidator.java:125)

... 32 common frames omitted

07/07-11:52:48.700|org.onap.dmaap.datarouter.node.NodeConfigManager||||Node Configuration Timer|fetchconfigs||ERROR||192.168.3.78|dev-dmaap-dmaap-dr-node-0.dmaap-dr-node.onap.svc.cluster.local||

|EELF0004E Configuration failed. javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path validation failed: java.security.cert.CertPathValidatorException: validity check failed - try again later.

07/07-11:52:48.701|org.onap.dmaap.datarouter.node.NodeConfigManager||||Node Configuration Timer|fetchconfigs||ERROR||192.168.3.78|dev-dmaap-dmaap-dr-node-0.dmaap-dr-node.onap.svc.cluster.local||

|NODE0306 Configuration failed javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path validation failed: java.security.cert.CertPathValidatorException: validity check failed - try again later

javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path validation failed: java.security.cert.CertPathValidatorException: validity check failed

Let me know what I should do.

With Regards

Goutam Roy.

Kamel Idir

Please use Frankfurt branch to deploy ONAP for 5G Network Slicing use-case.

cai pan

Hello, everyone!

I recently tried to install ONAP on Ubuntu, but make only works on the Casablanca branch; on Frankfurt it always reports errors. As a result, I cannot install ONAP by following the official installation procedure. Is there any good advice?

This is the installation guide I followed: https://docs.onap.org/projects/onap-oom/en/latest/oom_user_guide.html

> make all &

This command is interrupted immediately.

I am from Wuhan, Hubei, China. I hope to meet friends who study ONAP technology in China.