Members

NOTE - To make fast progress, we are looking to keep the group small and composed, as much as possible, of TOSCA SMEs.

Kickoff Materials

Meeting Information

Weekly meetings, on Thursdays...

Join Zoom Meeting

https://zoom.us/j/943224851

One tap mobile

+16699006833,,943224851# US (San Jose)

+19294362866,,943224851# US

Dial by your location

+1 669 900 6833 US (San Jose)

+1 929 436 2866 US

Meeting ID: 943 224 851

Find your local number: https://zoom.us/u/acMf3zvkUP

Assumptions

Objectives

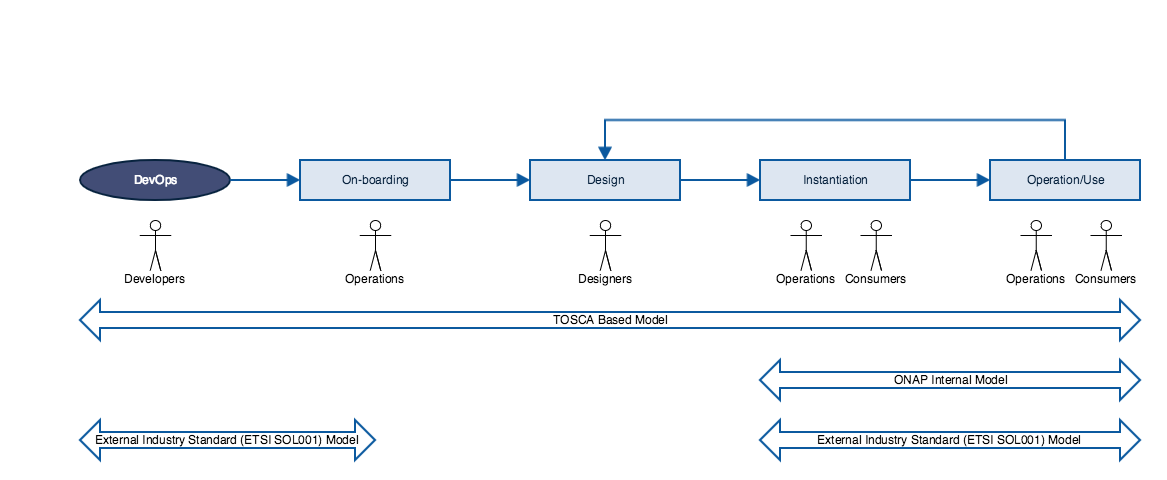

- Establish TOSCA as the "normative", supplier/operator neutral way to package and describe (model) network service and functions in ONAP.

- Enable template reuse and orchestration outcome consistency across ONAP related on-boarding, design, instantiation and operation activities.

- Identify TOSCA adoption barriers/gaps and recommend closure actions.

Tasks

- Template use...

- Define when and how TOSCA templates are used across on-boarding, design, instantiation and operation time

- Define template versioning strategy.

- New versions of VNFDs inside VNF packages to be on-boarded

- Support for multiple VNF template versions (e.g. SOL001) inside ONAP (transcoding)

- Identify template gaps and recommend closure actions.

- Network service and function lifecycle orchestration...

- Define end-to-end component interactions and dependencies required for TOSCA based orchestration activities across on-boarding, design, instantiation and operation time.

- Identify interaction gaps and recommend closure actions.

- Define how TOSCA templates are used in conjunction with emerging encoding and packaging alternatives.

- Recommendations...

- Architecture Subcommittee

- Modeling Subcommittee

- Projects

TOSCA Task Force - Dublin Recommendations

Personas & NS/NF Template Lifecycle (Draft)

To Do's

- Initial list of gaps - Fred (Verizon)

- Initial persona definitions - Michela (Ericsson)

- Stage definitions - Alex

- Gaps with respect to VNF requirements - Thinh (Nokia)

- Versions of TOSCA grammar to be supported by ONAP for on-boarding and/or internal use

Initial Gaps

Model for describing VNF Application Configuration Data

SOL001 has VNF configurable_properties data type but no prescriptive definition of use

Currently being used for setting VNF Day 0/1 configuration, e.g. self IP, NTP server, DNS server, EMS IP, …

VNF KPI/Indicators are not well described

Need description of KPIs/indicators exposed by the VNF and access method for each

VES may be a possible model

VNF Rules (Policies)

Need default policies based on above KPIs that can be used to drive various Life Cycle operations

Persona Definition

Service Provider: The entity providing a service

Consumers: The entity requiring and consuming a service

Different actors can be involved in providing a service:

Developers: The developer may create additional artifacts/templates/blueprints to be used during the lifecycle of the service, in addition to the ones provided by the vendors (e.g. create a new SO flow).

Operations: The operations team is responsible for the status changes of a service. It operates the service and monitors its status.

According to ETSI SOL004, a VNF package shall support a method for authenticity and integrity assurance. The VNF provider creates a zip file consisting of the CSAR file, signature and certificate files. The manifest file provides the integrity assurance of the VNF package. The VNF provider may optionally digitally sign some or all artifacts individually, in particular software images. The security aspects of the package and its contents will be covered during the onboarding phase by the operations team. Some vendor VNF Managers use the signatures to verify that the package artifacts haven't been modified, so the original package artifacts need to be passed to the VNF Manager.

Designers: The designer is responsible for designing new artifacts to be used at run time (e.g. a microservice blueprint, a service template, a CLAMP blueprint).

The role of the actors can be more specific per use case/stage.
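The manifest-based integrity check mentioned under Operations can be sketched roughly as follows. This is only an illustration under stated assumptions: the `expected_digests` mapping and the `verify_artifacts` helper are hypothetical, the sketch does not parse a real SOL004 manifest, and it compares SHA-256 digests only (no signature or certificate verification).

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(package_root: Path, expected_digests: dict[str, str]) -> list[str]:
    """Return the relative paths that are missing or whose digest differs.

    `expected_digests` is a hypothetical stand-in for the digests that a
    package manifest would list; parsing a real manifest is out of scope.
    """
    mismatched = []
    for relative_path, expected in expected_digests.items():
        artifact = package_root / relative_path
        if not artifact.is_file() or sha256_of(artifact) != expected.lower():
            mismatched.append(relative_path)
    return mismatched
```

An empty result means every listed artifact is present and unmodified, which is the property a VNF Manager relies on when the original package artifacts are passed through to it.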

Pre-onboarding

A pre-onboarding phase is not included in the NS/NF template lifecycle above.

During the pre-onboarding/validation phase, the TOSCA descriptor is validated according to the Validation Program, based on VNF SDK tools. Today this is applicable to NFs only; it could be applicable to NSs too in the future. ONAP Reference: VNF Test Platform (VTP)

Use Case: VNF package validation

Actors: Vendor or Operator

Role: Both can run the test cases/test flows. Different validation tool options are provided, so that a vendor or an operator, as well as a third-party testing lab, can run the test cases.

Onboarding

Design

Instantiation

Operation/Use

Contributions...

80 Comments

Steve Smokowski

Please add me if there are still slots

Alexander Vul

Done.

Alexander Vul

Kickoff slides have been published on the Task Force page...

Srinivasa Addepalli

Hi,

I know that I am getting ahead on this, but I want to give my 2 cents.

In one of the architecture meetings (maybe 2 to 3 weeks back) when I was presenting Kubernetes based Edge/site support, orchestration models were discussed.

In the last architecture meeting, Randy from Nokia shared that their experience showed that a cloud-specific VNF description is needed in many cases. I have heard the same thing from a few other people too. That is, VNF descriptions in HOT, K8S YAML, Azure ARM, CloudFormation, etc. are needed. If some cloud region supports TOSCA based VNFs (say, a cloud region that has OpenStack Tacker), then VNFs can also be described in TOSCA.

That said, a TOSCA-like standard language is required for service definition. Inside these service definitions, there need to be pointers to the VNF descriptions relevant for that service.

In my view, a kind of hybrid approach needs to be supported: TOSCA representation for services, and VNF description using cloud-specific technologies.

Srini

Fernando Oliveira

I respectfully disagree. I think that the VNF can be well described with a TOSCA based representation which can be converted to appropriate cloud based implementations. The VNF descriptor can certainly reference external artifacts (VM images, Docker containers, HOT templates, Helm charts) that can be used by the NFVO/VNFM to LCM the VNF onto an appropriate "cloud".

We expect that most of the VNFs that we deploy will have TOSCA based VNF Descriptors.

Srinivasa Addepalli

I don't mean to rule out VNF description in TOSCA. I am only saying that it should be possible for VNF vendors to describe in cloud-technology specific ways too.

On "TOSCA based representation which can be converted to appropriate cloud based implementations": one question that comes to mind is whether to do this conversion at design time or at run time. By design time, I mean the conversion happening when the TOSCA VNFD (in a TOSCA CSAR) is uploaded to SDC: the Multi-Cloud OpenStack/K8S/Azure/AWS plugins can register for notifications, convert the VNFD to a cloud-technology-specific format and keep the result locally. At run time, Multi-Cloud services could use these templates without incurring any conversion delays. Or do you see any advantages of doing this conversion at run time?

I guess these are a few of the questions that need to be answered, with recommendations, by this TOSCA task force.

Love to hear your views.

ramki krishnan

Thanks Fred - I am merging the two wiki threads for simplicity. I am on the same page as Srini in not ruling out TOSCA based VNFD. But I do want to make sure that the target is something realistic.

Cloud Native Technologies (K8S etc.) are much more advanced in infrastructure management capabilities as compared to OpenStack etc. The question to answer is whether the TOSCA VNFD aims to create a generic model across all VIMs or is more of a simple wrapper around cloud-specific technologies such as K8S Helm/YAML, OpenStack Heat etc. To me the simple wrapper model seems more realistic.

On the other hand, NSD is an area where TOSCA can bring value right away where none of the aforementioned cloud technology specific issues exist.

Love to hear your thoughts.

Fernando Oliveira

Srini,

I would expect that the conversion from TOSCA to cloud implementation would happen at runtime, since a designed service (NS-D) could be deployed on different cloud types at each instantiation, i.e. one service deployment might have one of the VNFs on an OpenStack cloud whereas another deployment of that service might have that VNF on an AWS or Azure cloud.

Ramki,

As far as I understand, OpenStack is similarly "Cloud Native" depending on how you define CN. It seems to me that the Heat/OpenStack infrastructure capabilities are similar to Helm/K8S, if not better in network management. To me it seems that having a generic model which can be converted at runtime would allow a much more flexible service design, independent of the execution environment in which the VNF is actually deployed.

In the current ONAP environment the NSD is not exposed outside of ONAP, so a "proprietary" NSD might actually be OK. However, in the scenario where ONAP would interact with other orchestration environments, I expect that a TOSCA based NSD would be very desirable.

ramki krishnan

Hi Fred,

K8S is substantially advanced in terms of capabilities as compared to open source OpenStack. One good example is "scale out" which is a built-in feature in K8S. With open source OpenStack, since the "scale out" feature is absent, we have to do a lot of heavy lifting in ONAP. Besides "scale out", K8S offers other features such as service discovery etc.

With this context, we have to examine the feasibility of a generic model for VNFD.

Thanks,

Ramki

Fernando Oliveira

It all depends on what you include in the term OpenStack. Heat is very capable of providing "scale out and scale in" as well as "heal" and even upgrade for OpenStack. Why doesn't ONAP just use the Heat capabilities? I think that ONAP will have more issues defining the policies that can be delegated to K8S than it does with OpenStack.

Both Heat and K8S require a configuration descriptor with references to the resources, images, scale in/out parameters, ... I am interested to understand what you think would not be common between the 2 execution models.

Regards,

Fred

ramki krishnan

While OpenStack Heat does provide scale out functionality, the K8S solution is comprehensive and easy to use with custom metric support on application-provided metrics besides infrastructure metrics. Please take a look at – https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/. The advanced functionality is where a common model gets harder.

Thanks,

Ramki
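For readers following the scale-out discussion, the core replica calculation described on the linked Kubernetes Horizontal Pod Autoscaler page can be sketched as below. This is a simplified illustration, not the controller's implementation: the function name and the `min_replicas`/`max_replicas` defaults are assumptions, and the real autoscaler additionally applies a tolerance band, readiness handling and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA rule: ceil(current_replicas * current / target), clamped.

    The metric can be an infrastructure metric (e.g. CPU utilization) or an
    application-provided custom metric; the rule is the same in both cases.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp to the configured bounds (bounds here are illustrative defaults).
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas running at twice the target metric yield a desired count of eight, while four replicas at half the target yield two.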

Fernando Oliveira

I would rather not get into a debate about which tool is easier to use or has the most capabilities. I still think that most if not all of the functionality is available in K8S, OpenStack, AWS, VMware, ... and that this functionality can be described by an abstract representation which can be "compiled" into the appropriate implementation language. One of the challenges that I see in leveraging either K8S or OpenStack advanced functionality is how ONAP delegates some of the policies to the underlying VIM while maintaining higher order policies for the service in general. This seems like a subset of the issues that will come up when ONAP is delegating policies in an "Edge" scenario which has a "sub" ONAP managing a portion of the service.

It would be useful to discuss why you think the advanced functionality is unique and what about it would be hard to describe in abstract terms. Perhaps Alex can arrange for a F2F workshop where we can discuss in detail.

Srinivasa Addepalli

Hi Fred,

I think you are not ruling out ONAP supporting VNF vendors that provide cloud-specific formats, but you would also like to see ONAP supporting VNF vendors that provide a TOSCA VNFD. If that is the case, we are all in agreement. But one thing you mentioned is not clear to me. You said "I expect that the VNF will be delivered in a CSAR package with a TOSCA VNF-D. That CSAR package might also include HOT, Helm, etc templates that could be used to construct appropriate deployment artifacts at runtime." What does that mean? Do you mean that anything that is not supported by the TOSCA VNFD would be represented by VNF vendors using Helm/HOT etc.? In my view, that kind of hybrid VNFD is not preferred. It is better if VNF vendors, for a given VNF, provide the VNFD either in TOSCA or in a set of cloud-specific formats.

Just brainstorming on the following.

My second question is related to your preference for run-time conversion of the TOSCA VNFD to cloud-specific formats. What are the advantages of run-time conversion over on-boarding-time conversion? My thinking is that during on-boarding of a TOSCA VNFD, all Multi-Cloud plugins would get notified and each Multi-Cloud plugin would convert the TOSCA VNFD to its own format. At run time, when SO calls Multi-Cloud to instantiate the VNF, control goes to the right plugin based on the cloud technology. Since the conversion already happened earlier, the plugin would just use that cloud-specific template and the corresponding environment to talk to the cloud.

My third question is related to values for parameters in templates. For each service, there would be multiple consumers (e.g. customers). For each consumer, there could be a set of values that differ from those of other consumers. In the case of HOT, there is an environment where values for a set of parameters are defined. In the case of K8S Helm charts, values files are used to define environment-specific values. Both HOT and Helm also offer the flexibility of overriding the environment/values files with parameter=value at run time. In the case of HOT, these overriding parameters can be sent to the Heat service as additional sets of parameters and values. In the case of Helm, helm install and helm template can take parameters and values as additional arguments. I always wonder what the equivalent concepts are in TOSCA: how do people represent the environment? Is there any standard defined?

Srini
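The layering described in the third question (template defaults, then a per-consumer environment or values file, then run-time parameter=value overrides) can be illustrated with a small sketch. The function and parameter names are hypothetical, and the merge is deliberately shallow; Helm, for instance, merges nested values, which this sketch does not attempt.

```python
from typing import Any, Mapping


def resolve_parameters(defaults: Mapping[str, Any],
                       environment: Mapping[str, Any],
                       overrides: Mapping[str, Any]) -> dict:
    """Return the effective parameter values for one consumer.

    Precedence, lowest to highest: template defaults, the consumer's
    environment/values file, then run-time overrides.
    """
    resolved = dict(defaults)
    resolved.update(environment)  # per-consumer values replace defaults
    resolved.update(overrides)    # run-time parameter=value wins over both
    return resolved
```

Any TOSCA equivalent would need to answer the same question: which layer supplies each input value, and in what order the layers win.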

Fernando Oliveira

Srini,

I wouldn't rule out VNFs that are delivered to only support a specific cloud environment, but I would like to see them all use a common VNF Descriptor which can describe the resources required to run, the images for the elements that would be deployed, the topology of the internal connectivity between the elements and the external connectivity points. In the ETSI SOL001 (VNF-D in TOSCA) there is the notion of "scripts" which can be used at various VNF Life Cycle operations. One of these scripts could be a HOT or Helm template that could be used at instantiation time. I agree that VNF vendors should provide a VNF-D in TOSCA format.

2nd question: It seems like your approach (early, but for all formats) would work best if the VNF was going to be launched many times on many different execution environments, whereas the JIT approach would work best in cases where the VNF would be launched less often or only on a particular type of execution environment. I'm not sure which is right, but my intuition is towards the latter (JIT).

3rd question, ETSI SOL001 (TOSCA VNF-D) and SOL003 (or-Vnfm interface) have the concept of "configuration parameters" and "modifiable parameters" which can be used for this kind of variable substitution. The "configurable parameters" can also be used to describe the "application" configuration that could be used to control day 1-N operation and the SOL002/3 interface has a mechanism to communicate with the VNF to pass those parameters.
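The trade-off between converting at on-boarding time for all formats and converting just in time (JIT) can be made concrete with a small sketch. Everything here is hypothetical: the `TemplateConverter` class, its method names and the per-platform converter callables are illustrative stand-ins, not ONAP or Multi-Cloud APIs.

```python
from typing import Callable, Dict, Tuple

Converter = Callable[[str], str]  # takes a TOSCA VNFD, returns a platform template


class TemplateConverter:
    """Caches converted templates per (VNFD id, platform)."""

    def __init__(self, converters: Dict[str, Converter]):
        self._converters = converters
        self._cache: Dict[Tuple[str, str], str] = {}
        self.conversions = 0  # counts actual conversion work performed

    def _convert(self, vnfd_id: str, vnfd: str, platform: str) -> str:
        key = (vnfd_id, platform)
        if key not in self._cache:
            self._cache[key] = self._converters[platform](vnfd)
            self.conversions += 1
        return self._cache[key]

    def on_onboard(self, vnfd_id: str, vnfd: str) -> None:
        """Early approach: convert for every registered platform up front."""
        for platform in self._converters:
            self._convert(vnfd_id, vnfd, platform)

    def on_instantiate(self, vnfd_id: str, vnfd: str, platform: str) -> str:
        """JIT approach: convert on first use for this platform, then reuse."""
        return self._convert(vnfd_id, vnfd, platform)
```

Calling `on_onboard` pays the conversion cost once per platform regardless of use, while relying on `on_instantiate` alone pays only for platforms that are actually targeted, at the price of a delay on the first instantiation.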

Chesla Wechsler

Fernando (or ANYONE with an example),

I've recently been educated by Thinh on the differences between the configurable properties and modifiable properties but it would still help me immensely to see a fleshed out example of a VNF-D that has configurable properties at the VNF level and VNFC level so it can become more "real" to me. Do you or ANYONE have an example you could provide (or create)? Thanks.

Fernando Oliveira

Hi Chesla,

Unfortunately, the examples that I have are vendor copyrighted so I can't share. Thinh Nguyenphu might be able to share an example from a Nokia VNF.

Srinivasa Addepalli

These are old ones (based on TOSCA NFV profile 1.0). It might provide some insight.

https://github.com/openstack/tacker/tree/master/samples/tosca-templates/nsd

In this example, there is one NSD with two VNFs (vnfd1, vnfd2). Both VNFDs are imported in the NSD (sample-tosca-nsd.yaml). VNFD1 has two VDUs and VNFD2 also has two VDUs. There are two virtual links that are shared between the two VNFDs. Since those are shared, they are created in the NSD.

I see a parameter file called ns_param.yaml that has values for some parameters defined in the NSD.

Hope it helps.

Chesla Wechsler

Thanks, Srini. I just looked at them and they are too old and "sample" for my purposes. I'd love to see something real that includes the configurable properties according to the new data type. Something like the firewall (packet gen, firewall, sink) would do.

Srinivasa Addepalli

Hi Fred,

In regards to TOSCA using Helm templates: based on your response, it seems that we may be talking about the same thing. I was saying that a given VNF in a service can be represented in Helm and referred to from the TOSCA service (NSD). You seem to be saying the same, but instead of referring to Helm charts and scripts from the TOSCA service (NSD), you seem to say that Helm charts are referred to from the TOSCA VNFD. Essentially, the TOSCA VNFD will have artifacts which refer to Helm charts. The VNF LCM (interfaces:standard:create) portion of the node_template would have a reference to a script, which executes Helm to create K8S YAML files and invokes the Multi-Cloud service to deploy containers. This way, the VNF vendor needs to provide a skeleton VNFD in TOSCA, with all the intelligence in Helm charts. Is that a correct understanding of your response?

I have also gone through the artifact processor section in TOSCA 1.2. It seems that VNF LCM operations can be executed in orchestration systems also. So, the above should work fine.

Srini

Fernando Oliveira

Srini,

Yes, I agree that the vendor would provide a TOSCA VNF-D which could have references to artifacts which could be skeleton Helm charts or HOT templates. However, I am thinking that the Helm chart or HOT template would be a simple template and that the TOSCA VNF-D would have most of the intelligence. ONAP can choose to delegate some of the policies (scale in/out) in the TOSCA template to Helm/K8S or Heat/OpenStack but might retain other policies (heal?) at the higher level.

I think the challenge will be in figuring out which policies should be delegated to the VIM and which need to be processed in ONAP.

Best,

Fred

Srinivasa Addepalli

Yes. I agree that would be a big challenge. In the case of K8S, many of the LCM operations are taken care of by K8S and associated CRDs. For example, K8S can take care of scale-out, scale-in, affinity, anti-affinity, auto-healing (restart policy), auto-updating the load balancers, etc. Many Helm charts take advantage of these already. So, it is interesting to understand the breakdown between the TOSCA VNFD and Helm charts. I hope this task force will discuss and provide a recommendation on that.

One topic of interest to me is the sharing of resources across VNFDs, where some portions of one VNFD are Helm based and some portions of another VNFD are HOT based. For example, if an ONAP user needs a virtual network (Virtual Link is the term used by TOSCA NFV) or storage shared across multiple VNFDs of different types, how does this get represented in the various VNFDs? Who will make changes to the VNFDs and in what phase? I hope the TOSCA task force will get a chance to discuss this and provide recommendations.

Srini

Fernando Oliveira

The sharing of resources among different VNFs is one of the main reasons that ONAP will need to be careful about what it delegates to the VIMs. Heat/OpenStack and Helm/K8S seem to have approximately the same capabilities so perhaps a common way of deciding which policies and capabilities get delegated can be designed.

I have the same hope for the TOSCA task force.

Fred

Tal Liron

This wiki conversation has become complex, but I will add a "middle" perspective here --

Like Fred, I believe TOSCA can be used to powerfully describe any cloud technology, both legacy and cloud-native, though I would add -- as long as that technology is properly modeled in TOSCA. In my view this means ignoring the Simple Profile normative types (both the general and NFV ones) and instead creating domain-specific profiles, e.g. special models for Kubernetes, special models for OpenStack (similar to Heat), special models for Azure, etc. Even bare metal and other physical resources can be represented as TOSCA types, allowing a truly end-to-end topology, with each domain being supported by its native tools, and in this respect I'm aligning with Srini.

This allows us to stay within the same design/validation/packaging toolchain while also allowing full expressivity of crucial technological features. It is possible to have our cake and eat it.

There is an obvious cost to this approach in that we would require vendors to align with TOSCA. But I would say that they already have a long list of requirements to align with, and having us publish clearly supported TOSCA types is more straightforward than publishing matrixes of different Helm, HOT, Azure ARM, CloudFormation, Ansible, etc. versions and features that we do or do not support (and of course we would have to test this matrix).

Writing descriptors is simply part of the job of VNF onboarding and certification, and sticking to one language could help us reduce complexity.

Fernando Oliveira

Hi Tal,

One of my goals (requirements??) for a VNF from a vendor is that it can be instantiated on multiple cloud management environments (VIMs?), e.g. K8S, OpenShift, Swarm, OpenStack, Azure, AWS, GCE, ... For this reason, I would rather that the VNF descriptor NOT be domain specific, but rather be an abstract (superset) description of the execution resources required by the VNF, the VNFC topology (internal connection points), the lifecycle management (LCM) policies for the various VNFCs and the external connection points that are exposed from the VNF. I am not opposed to creating and publishing ONAP supported TOSCA types as long as we can get all (most) of the VNF vendors to supply VNFs using those types (without charging an exorbitant amount to customize the VNF Descriptor to those types).

My ideal scenario would have the ONAP design time create a Service with a topology based on the externally exposed PNF/VNF connection points such that the ONAP runtime can

A nit on your last statement; I expect that writing VNF descriptors is part of the job of VNF creation and that the VNF descriptors are generally immutable so won't change during onboarding or certification. I agree that using one language could help us reduce complexity.

Chesla Wechsler

I think there are a few things to explore here:

I was also gratified to learn that the ETSI SOL001 spec, while using TOSCA that passes syntactic parsing, intentionally did not attempt to leverage all of the orchestration aspects of TOSCA language. To do so might have mandated an implementation. Given this task force has as its intent to explore a TOSCA-based implementation across the platform, this fact was even more validation that the internal model (which should leverage TOSCA as an orchestration language) is NOT the same as the onboarding model (which avoids it on purpose).

Tal Liron

I agree with all of this!

I will add two points about the orchestration aspects of TOSCA:

1) They are useful at the SO level for integration with OSS/BSS. So here we're not talking about VIM workflows (provisioning virtual machines) but rather integration with business processes. Proper separation indeed means that at this level we know nothing about the lower-level orchestration.

2) At the lower levels, orchestration aspects are sometimes useful and sometimes not. Truly cloud-native VNFs should self-orchestrate: once you deploy them to Kubernetes, they will have operators and controllers that handle that. You would not normally be running "workflows", "playbooks", "scripts", etc. All that is pre-cloud-native old-school orchestration and LCM. We're happy to throw that out! It's fragile, slow, and requires considerable resources to manage state and logging. Still, for "legacy" (pre-cloud-native) VNFs we might still find these orchestration aspects useful. If you compare with Heat templates, this is where TOSCA adds an important feature. Indeed, it's a reason why Heat is sometimes insufficient and must be complemented or replaced with Ansible or another orchestration tool. TOSCA could actually be a very powerful OpenStack orchestration solution. (I have a PoC that does exactly that.)

Tal Liron

Thanks for responding, this conversation is helping me find better ways to explain my approach. I realize that what's confusing is the word "descriptor".

In the situation I'm imagining "descriptor" is actually cleanly separated into two grammatically related parts. Consider a VNF packaged in a single CSAR file. Inside you'll find:

1) A descriptor.yaml file. This would have one or more TOSCA node types that represent the VNF, plus any new extended capability types or relationship types that might be used by the node type. This is the type information that could be imported into and used at the SO level to create a complete network service. All of these types would be inherited from a base profile, so that we can ensure a lowest-common-denominator ability to relate to other VNFs and components that do not recognize the extended capabilities and relationships. (This is the power of TOSCA's object-oriented approach.) And here's the important part for this discussion: all of this is platform-agnostic. At this level, it's all "logical". This means that even a "VDU" type should not exist here, because that would be considered a platform implementation detail (the concept is meaningless in the Kubernetes paradigm, and indeed LCM needs to be rethought).

2) Then we have a set of TOSCA files that are platform-specific: openstack.yaml, aws.yaml, kubernetes.yaml, etc. These would use TOSCA's substitution mapping grammar to grammatically connect to the logical types in descriptor.yaml.

The orchestrator would then know which of these platform-specific TOSCA templates to substitute according to the selected cloud technologies.
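The selection step, where the orchestrator picks the platform-specific template that substitutes a logical node type, might look roughly like this. It is a sketch under assumptions: the `PlatformTemplate` record, the platform keys and the `example.nodes.Firewall` type name are all hypothetical, and no real TOSCA parser (Puccini or otherwise) is involved.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class PlatformTemplate:
    file_name: str         # e.g. "openstack.yaml" inside the CSAR
    platform: str          # hypothetical platform key used by the orchestrator
    substitutes_type: str  # logical node type from descriptor.yaml it implements


def select_platform_template(templates: Iterable[PlatformTemplate],
                             node_type: str,
                             platform: str) -> PlatformTemplate:
    """Return the template that substitutes `node_type` on `platform`."""
    for template in templates:
        if template.substitutes_type == node_type and template.platform == platform:
            return template
    raise LookupError(f"no {platform} template substitutes {node_type}")
```

The logical service topology only ever names the node type; the platform-specific file is chosen late, once the target cloud technology is known.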

Srini's objection seems to be about #2: he claims that instead of openstack.yaml we should have a Heat template, and that instead of kubernetes.yaml we should have a Helm chart, because those are the appropriate technologies for those platforms. Sure, we can do that, but we lose the main benefits of using TOSCA, as I see it: validation, composition, and common management of our topology. The service orchestrator would have to treat Heat templates and Helm charts as opaque (VIM as black box), and thus would have to delegate management to other orchestration components, adding considerable complexity, and also requiring additional abstraction layers. But if it's all TOSCA the interface with the VIM would be managed.

(Also, there's no real one distribution technology per platform. Heat is very limited and some might have Ansible playbooks instead. Helm is popular, but it's not the only Kubernetes packager by any means. The list of artifact types we'd want to support can get very long indeed.)

Importantly, it would also be easier to avoid "orchestration lock-in" by going all-in on TOSCA: for example, conflicts between different versions of Heat on different OpenStack clouds, or different versions of Helm, etc. At least in Puccini it's very easy to handle these differences because it's just short and straightforward JavaScript scriptlets that handle the VIM interface. Another TOSCA solution could handle it in other ways. The point is that by sticking to TOSCA we are still in control of and manage the complete "descriptor" (here I use the word as a totality including both #1 and #2 above).

Chesla Wechsler

Tal Liron, I would like to know more about what you would include in your base profile.

Tal Liron

I would like to know, too. It's one area I personally have not worked on yet, as I'm trying to build from the bottom up (tech comes first) and be confident that TOSCA can handle the specific underlying platforms (part #2). And I'm quite confident indeed at this point, as I have a few successful PoCs.

My initial thoughts on the top layer:

1) We don't need any node types. Specifically, we don't need a "VirtualFunction". Derivative types like "vRouter" or "vEPC" are even more unnecessary. One reason is reality: it's very, very hard to encapsulate the huge variety of VNFs under such simplistic types. Indeed, we're seeing more and more VNFs that combine a lot of functionality. We definitely don't want to limit innovation or hide features. The second reason has to do with TOSCA's grammar, leading to point #2.

2) We need capability types and relationship types that abstractly model the data plane and SDN. The descriptor.yaml files will create special node types that are in effect assemblages of the networking functionality they provide. Remember that in TOSCA a requirement is the "plug" that connects to a capability "socket". It does not connect to the node itself. So, TOSCA grammar allows any node type to expose any such combination of such "sockets". And of course they can derive capability types in order to extend that functionality. That should be fine by us, because TOSCA's object-oriented contract ensures that the parent type should still be supported. (Something that SOL001 did not seem to appreciate when it derived from Compute and then "deprecated" some of the parent type features...)

The way it will work is that we'll use TOSCA's import functionality (not service composition) to bring in the descriptor.yaml files from all the VNFs we want to include into the service, and our topology template will have node templates of these types. Our work will just be to specify and configure their requirements.
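The "plug and socket" idea from point 2, including the rule that a derived capability type must still satisfy a requirement written against its parent, can be sketched as follows. The type names and helper functions are hypothetical and the sketch ignores everything else a TOSCA processor checks (relationship types, node filters, occurrences).

```python
from typing import Dict, Optional

# Hypothetical capability type hierarchy: child type -> parent type.
PARENTS: Dict[str, Optional[str]] = {
    "example.capabilities.Routable": None,
    "example.capabilities.Routable.IPv6": "example.capabilities.Routable",
    "example.capabilities.Storage": None,
}


def is_derived_from(type_name: str, ancestor: str,
                    parents: Dict[str, Optional[str]]) -> bool:
    """True if `type_name` equals `ancestor` or inherits from it."""
    current: Optional[str] = type_name
    while current is not None:
        if current == ancestor:
            return True
        current = parents.get(current)
    return False


def find_socket(required_capability_type: str,
                node_capabilities: Dict[str, str],
                parents: Dict[str, Optional[str]]) -> Optional[str]:
    """Return the name of the first capability that can accept the requirement.

    The requirement (the "plug") targets a capability (the "socket"),
    never the node itself.
    """
    for name, capability_type in node_capabilities.items():
        if is_derived_from(capability_type, required_capability_type, parents):
            return name
    return None
```

A node exposing only the extended IPv6 capability still satisfies a requirement for the base routable capability, which is the object-oriented contract the comment relies on.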

That's pretty much it in terms of the bare minimum required to design network functions.

Notice there's no VDUs or any kind of compute specification (that stuff should be in the lower levels). Also no "forwarding graphs": that should be extrapolated, if necessary, from the finally compiled relationship graph.

This can be extended beyond the bare minimum, possibly with extra (and optional) profiles that can also be imported. They can provide additional integration functionality with other and external orchestration systems:

1) OSS/BSS profile: We can use TOSCA's workflows and trigger policies (what Chesla called the "orchestration aspects") to allow this network service to integrate with business processes. This will likely mean integration with BPMN.

2) Composition policies: The VNFs (node types) might have various deployment options, depending on licensing, performance envelopes, etc. Essentially this provides hints for inventory management, and possibly actual licensing information.

3) Provisioning policies: Various TOSCA policy types that provide "hints" for lower level orchestrators, such as which one should be chosen and what it should do. For example, matching performance profiles with certain cloud IDs. How these policies will be modeled depends very much on what our orchestrators can do, and as usual I would lean towards a custom model that exposes all the functionality of that orchestrator. So, the policy could handle configuration of existing PNFs, or re-use of existing VNFs that support multi-tenancy. Obviously the decision-making process is complex – in the future it will surely involve machine learning and AI -- but here is where the network service designer can specify intent and limits.

4) Scaling policies: Cloud-native VNFs will change after being deployed. Here we can set limits and rules for how they are allowed to scale in/out. Again, we can only provide hints and limits: cloud-native means the VNF knows best.
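
As an illustration of how one of these optional profiles might look, here is a sketch of a scaling policy attached to a node template. The policy type example.policies.ScalingLimits, its properties and the node type example.nodes.Firewall are hypothetical; the normative tosca.policies.Scaling type defines no properties of its own, so the limits have to come from a derived type.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

node_types:
  example.nodes.Firewall:            # hypothetical stand-in for an imported VNF type
    derived_from: tosca.nodes.Root

policy_types:
  example.policies.ScalingLimits:    # hypothetical policy type
    derived_from: tosca.policies.Scaling
    properties:
      min_instances:
        type: integer
        default: 1
      max_instances:
        type: integer

topology_template:
  node_templates:
    firewall:
      type: example.nodes.Firewall
  policies:
    - firewall_scaling:
        type: example.policies.ScalingLimits
        targets: [ firewall ]
        properties:
          min_instances: 1
          max_instances: 4
```

The policy only states limits; how and when the VNF actually scales within them is left to the VNF and its orchestrator.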

General thought regarding TOSCA policy types: TOSCA doesn't normally let you specify whether a policy is "required" or not. That means that if some implementations are missing a certain orchestration component (they see a policy type that they do not recognize), they are free to simply ignore it. That might not be acceptable. So, it may be useful to add such a flag, either by creating a new root policy type, or suggesting this as a grammatical change with OASIS.
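
The "new root policy type" option could be sketched as below. This is an assumption about how such a flag might be modeled, not existing TOSCA grammar: the type names and the required property are hypothetical, and an orchestrator would still have to be written to honor the flag.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

policy_types:
  # Hypothetical root type; not part of the TOSCA Simple Profile.
  example.policies.Root:
    derived_from: tosca.policies.Root
    properties:
      required:
        type: boolean
        default: false
        description: >-
          If true, an orchestrator that does not recognize this policy type
          is expected to reject the template instead of ignoring the policy.

  # Hypothetical policy type that inherits the flag.
  example.policies.Licensing:
    derived_from: example.policies.Root

topology_template:
  policies:
    - licensing:
        type: example.policies.Licensing
        properties:
          required: true
```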

Michael Lando

please add me as well.

Michael Lando

Alexander Vul, a reminder since you probably missed my comment: please add me to the list.

Fernando Oliveira

Please add me too.

Andrei Kojukhov

Please add me too.

Ethan Lynn

Please add me as well.

ramki krishnan

+1 +2 +3 +4 +5 on Srini's comment. Besides Nokia, I hear the same from several VNF vendors. TOSCA representation for services should be the immediate priority.

Fernando Oliveira

I respectfully disagree. We have had good response from our key vendors that with the latest SOL001 (VNF-D in TOSCA) they can describe their VNF.

On the service side, I think that it will be difficult to completely describe some of the business logic in pure TOSCA and that we will need some BPMN-like extensions similar to what Tal described a few weeks ago using the Puccini code (https://github.com/tliron/puccini-bpmn/).

Phuoc Hoang

Hi team,

This topic is interesting to me. Can you add me as well? Thanks.

ramki krishnan

Please add me too.

Thanks,

Ramki

Chesla Wechsler

Are we all in agreement that, wherever we use TOSCA, we have some ground rules:

Fernando Oliveira

I certainly agree with the spirit of those ground rules. From my experience, the ETSI NFV SOL team has had difficulty getting OASIS to consider changes that were needed to support VNF description so I don't hold out much hope of getting OASIS to consider our changes quickly. I suggest that we consider ETSI NFV SOL group as a proxy for getting any new changes into OASIS TOSCA. If we can get the SOL group to consider the features that we feel are necessary and get those incorporated into the SOLXXX specifications, I think that we can make reasonable progress in getting agreement from an appropriate industry SDO.

Claude Noshpitz

SOL-001 may be sort of a special case wrt OASIS TOSCA Simple Profile, where the NFV group ended up with requirements for functionality that went beyond what the core Simple Profile had already defined. The input from NFV continues to drive progress in Simple Profile – so that's good synergy.

That said, the problem of SDOs each running on their own cadence and expressing needs that are potentially out of sync with one another isn't going away. It seems good to consolidate sources of requirements "close to" the group(s) defining standards that can meet them.

I would encourage the Task Force to engage as directly as possible with the Simple Profile effort – both to surface the TF's observations/concerns, and to ensure that potential requirements are injected effectively into SP workstreams.

Chris Lauwers

I don't believe that Fernando's characterization is entirely fair. In my opinion, there is a very healthy working relationship between OASIS TOSCA and ETSI NFV SOL. In fact, many of the feature enhancements that are currently being added to the TOSCA spec are motivated by NFV as a very relevant and very powerful use case. If there are any challenges, they fall in one of the following two buckets, in my opinion:

Fernando Oliveira

I was only relaying my experience. I would be happy if we can collaborate with the various SDOs to achieve consensus and move the standards forward. I agree that one of the challenges is differing release schedules and that will likely continue. I would be happy to participate in the N-way education, hopefully without slowing down the process. Any suggestions on how we can get this education started ASAP?

Chris Lauwers

I'm not sure of what the best forum would be, but I'll happily participate in any TOSCA tutorial sessions and/or exchange of information about planned TOSCA enhancements and ONAP requirements.

Chesla Wechsler

Chris, it might be useful to have an analysis of the current types to point out a few areas where the ETSI NFV types are orthogonal to TOSCA. The ones I think I've identified are the following:

I could also use an education on how I'm supposed to craft TOSCA to assign values to properties in a template that will be used in substitution (for the node type being substituted). That is, if my substitution mappings declare my type to be vendor.nodes.MyVNF, how do I assign properties to that VNF?
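
For readers following the question, one mechanism the Simple Profile offers here is a property mapping inside substitution_mappings, which ties a property of the substituted node type to an input of the substituting template. The sketch below assumes the 1.2 grammar; the provider property, its default value and the internal node are hypothetical, and this is not necessarily the answer given in the thread.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

node_types:
  vendor.nodes.MyVNF:
    derived_from: tosca.nodes.Root
    properties:
      provider:
        type: string

topology_template:
  inputs:
    provider:
      type: string
      default: Example Vendor        # hypothetical value
  substitution_mappings:
    node_type: vendor.nodes.MyVNF
    properties:
      # Property of the substituted node type mapped to a template input.
      provider: [ provider ]
  node_templates:
    internal_component:              # placeholder for the VNF's internal topology
      type: tosca.nodes.Root
```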

Also, if I have properties I would like to associate with an artifact, how do I do that? I see 1.3 is deprecating that. The ETSI NFV sw_image seems to belong more with the artifact than the node that's using the artifact (esp. if there is more than one artifact).

Finally, to what degree are the current normative types really considered normative as the virtualized world continues to change?

Chris Lauwers

Thanks Chesla, very good points:

I think there are actually two separate points here:

Yes. These types of issues are the result of using TOSCA as a language in which to describe data structures, rather than as an orchestration language.

Yes. Avoiding these types of issues will require a better appreciation for requirements and capabilities.

Are you referring to the node type hierarchy here? If so, this is probably related to your comment about TOSCA Normative Types. We've started to create better abstractions, but these types haven't had the benefit of a lot of practical use. I anticipate they will continue to evolve based on user feedback.

W.r.t. artifact properties, they will show up (again) in the final version of 1.3. While the current spec includes artifact properties, there is nothing that states how an orchestrator is expected to handle those properties. As a result, we considered removing them altogether, but after much discussion I believe we've come up with a rational explanation for how and where to use artifact properties.

These are great discussions. I'm wondering what the process is for turning the ideas floated here into something actionable?

Avi Chapnick

Please add me to the team

Thanks,

Avi

Tal Liron

Please add me, too.

By the way, I've recently added TOSCA for OpenStack support to Puccini. The idea is to have TOSCA models that are as similar as possible to those in Heat (after all, the TOSCA and HOT languages have superficial similarities and even share some history). Ansible is then used to do the orchestration, so you do not have to install the (very limited) Heat orchestration element into OpenStack. Other orchestrators can also be used instead of Ansible. So, it could be possible to remove even Heat from the list and really have 100% TOSCA without losing any Heat features (and actually allowing for more features and more powerful integrations: the Ansible roles can be included into larger playbooks that do more than just provision OpenStack resources).

This is a very different approach from that of the Tacker project, where the idea was to convert the TOSCA Simple Profile types to Heat. The Simple Profile types are so minimal that too many features are lost, and Tacker could never be a proper replacement for Heat.

Chris Lauwers

Yes, translating TOSCA to Heat is an interesting academic exercise, but not very helpful in practice. If your goal is to express your service description in Heat, then write it in Heat in the first place rather than translating from TOSCA.

Of course, that doesn't mean there isn't a very appropriate place to use Heat in a TOSCA-based environment. In my opinion, Heat fits in as follows:

Tal Liron

Well, if we can do everything in TOSCA that we can do in Heat and more, then there doesn't seem to be a necessity for Heat...

By the way, the HOT language is not the only limitation of Heat. Heat itself is a very limited orchestration/LCM component. It does some things, but not all things. That's why in the real world you see many products supplementing (or replacing) Heat with Ansible playbooks, Chef recipes, etc. Heat is too limited: sometimes you might want to include external tasks inside your orchestration graph (deploy some Kubernetes pods and make sure they're up before continuing), or sometimes you want to integrate your work into OSS/BSS, but not as a single big "black box". It's very hard to do these things with Heat, because it's really not what it was designed for.

So, TOSCA can open the door to a BYOO (bring your own orchestrator) approach that provides unified modeling but customizable implementations.

Chris Lauwers

Yes, I see TOSCA as the end-to-end (abstract) representation of the service. You can't create such an end-to-end representation in Heat. To instantiate the service, an orchestrator would "decompose and delegate": it would decompose the end-to-end service into sub-topologies (possibly recursively), and some parts of those sub-topologies could be delegated to Heat/HOT, Ansible, Kubernetes, etc. The reason one would "delegate" (rather than implement natively in TOSCA) is the features of the systems to which TOSCA is delegating (which presumably TOSCA doesn't have). To reiterate Tal's statement: TOSCA provides the unified (end-to-end) modeling and orchestration descriptions of the service, while leveraging third-party (customizable) implementations for localized orchestration of parts of the service.

Srinivasa Addepalli

Yes. There seems to be good consensus across the board on TOSCA for service orchestration. I think there is no disagreement on using TOSCA for nested service definition either.

Within the service, how VNFDs are represented is the actual debate. I think the majority of discussions are on this aspect.

How much of the VNFD is generic across various clouds, and how much of it needs to be specific to each cloud technology? That is the first answer we need to get, I think.

I guess there would be no disagreement on representing the generic portion of the VNFD in TOSCA.

Cloud-specific portion of the VNFD: Should it be in a cloud-specific format such as HOT, Helm, CloudFormation and ARM, or should it be represented the TOSCA way with cloud-technology-specific node types? This is the second answer we need to agree on. As Tal Liron mentioned, my leaning towards cloud-specific formats is due to the following reasons:

That said, if there is a way we can provide an (offline) tool to convert from Helm charts to TOSCA, some of the above challenges can be mitigated. But we need to consider whether it is worth the effort.

ramki krishnan

Thinking a bit radically ... how about K8S YAML as the standard for VNFD -

Srinivasa Addepalli

Yes. It is radical.

The ONAP team, after long discussions, decided on SOLxxx. So, it is good to stick to that.

SOLxxx for NSD and CSAR for sure. VNFD? I guess that is the discussion and I am hoping that this task force will provide guidance that is practical.

Tal Liron

Re point #1: It's true that Helm is common, but it's not the only package manager. Helm specifically has technical and architectural deficiencies: it uses text templating (terrible idea for YAML), and requires a custom orchestrating controller (Tiller), so it shares some of the problems that Heat has. We're at an early enough phase in the Kubernetes world that it's possible to make an impact. The fight for a common packaging is not over yet.

Re point #2: It's true that it would take time to create an alternative, but it's a much, much smaller task than anything else we're doing in ONAP. We have vast amounts of code already doing things that overlap with other open source projects. In this case, I think creating a TOSCA solution for Kubernetes would be an important contribution to the community at large, and I also think it fits very much with our goals.

Let me put it this way: if there already was a stable TOSCA solution for Kubernetes, wouldn't we have adopted it enthusiastically?

I'll point out another important advantage for TOSCA over something like Helm: service meshes. TOSCA's topological awareness makes modeling service meshes so, so much easier than with Istio. I'll add to this also the new efforts on network service mesh for the data plane.

Srinivasa Addepalli

Tal Liron

Attn: Kiran Kamineni, Victor Morales and Ritu Sood

On #1: In R3, we don't expect Tiller to be run. In the Multi-Cloud service, as part of the K8S plugin, Helm charts get converted to K8S YAML using overriding values, and then K8S API calls are made. That is, we don't expect Tiller to be installed in K8S-based cloud regions.

On #1: Can you elaborate on "it uses text templating (terrible idea for YAML)"?

On "Let me put it this way: if there already was a stable TOSCA solution for Kubernetes, wouldn't we have adopted it enthusiastically?": Yes. Helm has big momentum, hence the need for using it. That said, as I have been saying, we need to support multiple formats: pure TOSCA-based VNFD as well as Helm-based VNFD.

On "TOSCA's topological awareness makes modeling service meshes": Can you expand on this further? What is TOSCA topological awareness?

Tal Liron

Well, Helm without Tiller is extremely minimal, it's really not much "packaging" at all. It's very close to just using text templating for YAML. But you still write the actual Kubernetes resource specs.

Why is text templating a bad idea? Because YAML is structured (and painfully structured at that, with significant indentation). With text templating you can easily break the structure: imagine inserting an unescaped string, or a var returning the wrong type, etc. Despite validation tools, you really can't be sure the result will work or not until it gets applied to your Kubernetes cluster. You're really not doing any modeling with Helm, it's just a tool to manage a bunch of specs together. Of course it could be all you need, but it's important to know what it is and what it isn't. In Puccini I chose to use JavaScript as a way to generate and manipulate the data structures that become Kubernetes specs. Other "packagers" have chosen other approaches. In my view, almost anything is better than text templating.
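
To make the "wrong type" risk concrete, the fragment below shows what a text template could emit once raw values are pasted into it. The keys are hypothetical; the parsing behavior noted in the comments is that of common YAML 1.1 loaders.

```yaml
# Each line is the output of a text template after substituting a raw value.
version: 1.10      # loads as the float 1.1, not the string "1.10"
enabled: no        # YAML 1.1 loaders read this as boolean false, not the string "no"
replicas: "3"      # a quoted number stays a string where an integer was expected
# An unescaped value containing ": " would not even parse:
#   title: Release: candidate
```

None of these problems is visible in the template itself; they only appear after rendering, which is the point being made about text templating.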

And if you want to support Helm in Multi-Cloud COE, well, you might need to support it with Tiller, too, no? And multiple versions of Helm? If you start making limits and requirements for VNF packagers, I think you can go all the way. To me this is acceptable. Even within Linux, there are several packaging systems, and if you want to join an ecosystem, it's expected that you play by its rules.

What I mean by "topological awareness" is the inherent relationship graph created by TOSCA grammar. When you configure Istio, it's nothing like that. Think of it this way, from an example we've all done in the past: let's say you need to model a traditional IP network with many routers and hops. You might draw a whiteboard with the entire inter-network topology, with arrows of various kinds for the routing paths. Now, you need to make this a reality, which would end up being a bunch of IP routing tables for each hop. There is a vast disconnect between the whiteboard model and the routing tables. Another way to think about it is as the difference between architecture and engineering.

Istio is about configuring routing tables. There are visualization tools for Istio that do show nice graphs of your topology, but these are the results that are derived from collected data. They are definitely not definitive. What TOSCA can do here is let you design the service mesh topology using the familiar whiteboard-style drawing. It's all relationships of various kinds. The routing tables (Kubernetes specs for Istio CRDs) are then generated for you. It is a way to manage the service mesh all at once. And the nice thing is that it integrates with the Kubernetes concepts already modeled in TOSCA (pods, services, ingress routers) so that everything comes together as a complete picture, validated by TOSCA.
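
A rough sketch of what such a whiteboard-style mesh model could look like in TOSCA follows. All type names, the weight property and the service names are hypothetical and are not taken from Puccini's Kubernetes profile; generating the actual Istio resources from this graph would be up to the tooling.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

capability_types:
  example.capabilities.Routable:
    derived_from: tosca.capabilities.Root

relationship_types:
  example.relationships.RoutesTo:
    derived_from: tosca.relationships.Root
    properties:
      weight:                        # share of traffic, in percent
        type: integer
        default: 100

node_types:
  example.nodes.MeshService:
    derived_from: tosca.nodes.Root
    capabilities:
      inbound:
        type: example.capabilities.Routable
    requirements:
      - route:
          capability: example.capabilities.Routable
          relationship: example.relationships.RoutesTo
          occurrences: [ 0, UNBOUNDED ]

topology_template:
  node_templates:
    frontend:
      type: example.nodes.MeshService
      requirements:
        - route:
            node: reviews_v1
            relationship:
              type: example.relationships.RoutesTo
              properties:
                weight: 90
        - route:
            node: reviews_v2
            relationship:
              type: example.relationships.RoutesTo
              properties:
                weight: 10
    reviews_v1:
      type: example.nodes.MeshService
    reviews_v2:
      type: example.nodes.MeshService
```

The arrows on the whiteboard become relationships, and the per-hop routing configuration is what a tool would derive from them.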

Chris Lauwers

Technically speaking, TOSCA doesn't actually orchestrate anything itself. TOSCA provides the "generic" representation, but it also provides technology/cloud-specific formats through the use of Artifacts that are associated with TOSCA nodes or relationships. Artifacts provide the "implementation" of TOSCA lifecycle management operations, and TOSCA invokes those lifecycle management operations by having the corresponding Artifact "processed" by the appropriate processor/orchestrator. If the artifact is a Heat template, TOSCA would delegate to OpenStack. If the Artifact is a Helm chart, it could delegate to Kubernetes. Hopefully this shows how TOSCA could provide a generic representation, while at the same time providing a number of technology-specific artifacts to allow the orchestrators to decide (at deployment time) which technology to invoke based on the available cloud technologies.
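
A sketch of a node carrying technology-specific artifacts is shown below. The artifact types, node type and file paths are hypothetical (neither artifact type is normative), and the grammar has no built-in way to choose between the two artifacts; that selection is assumed to be left to the orchestrator.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

artifact_types:
  # Hypothetical artifact types; neither is normative.
  example.artifacts.HeatTemplate:
    derived_from: tosca.artifacts.Root
  example.artifacts.HelmChart:
    derived_from: tosca.artifacts.Root

node_types:
  example.nodes.Firewall:
    derived_from: tosca.nodes.Root

topology_template:
  node_templates:
    firewall:
      type: example.nodes.Firewall
      artifacts:
        heat_stack:
          type: example.artifacts.HeatTemplate
          file: artifacts/firewall.hot.yaml      # hypothetical path
        helm_chart:
          type: example.artifacts.HelmChart
          file: artifacts/firewall-1.0.0.tgz     # hypothetical path
      interfaces:
        Standard:
          create:
            # Names one of the artifacts above; an orchestrator targeting
            # Kubernetes would be expected to use helm_chart instead.
            implementation: heat_stack
```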

Chris Lauwers

By the way, I've built tools to translate YANG models to TOSCA. I haven't thought about translating Helm, but I imagine that would be equally feasible.

Tal Liron

Chris, check out the Puccini project, which has Kubernetes modeling in TOSCA. It is not only feasible, but I believe a good use case for TOSCA and hopefully a useful contribution to the Kubernetes ecosystem.

Chris Lauwers

I've looked at Puccini, but I believe Srini's question was about converting Helm charts to TOSCA, presumably to create a starting point for new TOSCA templates to be deployed on Kubernetes (possibly through Puccini).

Tal Liron

Oh, I misread your intention. I guess it would be possible. Helm charts are not interesting, they are really just regular old Kubernetes specs, just with some templating. In some cases the templating may be converted into TOSCA intrinsic functions. At least in Puccini's case, the Kubernetes profile deliberately models the specs very closely, so no information would be lost.
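
As a small illustration of templating turned into an intrinsic function, a Helm value reference could map to a TOSCA input read with get_input. The node type, property and input names are hypothetical and do not reflect Puccini's actual Kubernetes profile.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

# Helm template line being converted (a template, not final YAML):
#   replicas: {{ .Values.replicaCount }}

node_types:
  example.nodes.Deployment:          # hypothetical type
    derived_from: tosca.nodes.Root
    properties:
      replicas:
        type: integer

topology_template:
  inputs:
    replica_count:
      type: integer
      default: 1
  node_templates:
    web:
      type: example.nodes.Deployment
      properties:
        replicas: { get_input: replica_count }
```

Unlike the text template, the input is typed, so a non-integer value can be rejected before anything reaches the cluster.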

However, I wonder if such automation is the right way to go. When onboarding a VNF we need a lot of certainty that it is well modeled. Humans should be doing it – including documenting, verifying and testing it. Perhaps an automated tool could help in the initial stage to produce a draft.

Chris Lauwers

Yes, that's what I do with YANG: I automate the translation from YANG models into TOSCA data types, but then there is a manual process to "morph" the result into something that is useful for TOSCA, e.g. deciding how to aggregate data into node types, which data should be turned into capabilities, where to introduce requirements, etc. The automation just gets all the syntactical stuff out of the way.

I agree on the need for certainty in the models. That's where the value is.

Chris Lauwers

I agree with Tal that the main benefit of TOSCA is that it enables technology-independent service descriptions that can be mapped at deployment time into technology-specific "implementations". Real-world services may involve some VM-based components, some "cloud-native" components, and a bunch of network segments to interconnect those components and make them available to end-users. TOSCA is the only orchestration technology that can provide an end-to-end view of such services.

Of course, if we're serious about using TOSCA then we should start developing service templates that actually leverage TOSCA features. Those features are extremely powerful, but unfortunately are not frequently used in practice. I'm talking specifically about:

There are other TOSCA features that should be considered (such as "policies" to encode closed-loop automation) but the three I've listed are a good starting point IMHO.

Fernando Oliveira

I agree and would advocate for adding "policies" as one of the features that we should be considering in the TOSCA TF. I think that policy description is one of the gaps in the (P/G/V/C)NF descriptors as well as in the Network Service Descriptors. Some of the NF policies are being codified in the Helm charts in some of the new NFs. I think that this should be abstractly described and controlled through TOSCA orchestration.

Chesla Wechsler

If I'm interpreting Tal and Chris correctly, the following is what's being discussed (by analogy):

I have two entities, Human and Wall. Each has certain components (TOSCA capabilities).

Human

Wall

I have a requirement to put something that breathes into a box for an hour. I need something with a capability of Breathe and Lay still howLong: 1hr. Most Humans can do that but no Walls.

I have a requirement to stop balls that are thrown at it. I need something with a capability of StopForwardProgress with a howWell of 99%. A Wall and a good Human baseball shortstop would both suffice. If I increase that howWell to 100%, the Wall is the better choice. If I add a requirement of Catch, then only the Human will do.

Nowhere have I said that there is a nodetype of Human or Wall. The entities are just the sum of their capabilities.

I'm an instance person (i.e., my background is AAI). I know I have to support queries like "find me all the entities of type X that have this, that, and the other value." The aspect that concerns me is the "of type X". Do we have to namespace our capabilities somehow, so that if there are three capabilities that all Xs have in common, I can distinguish them from Ys which also have those same three capabilities in common as well as two more? So there would be x.breathe, x.layStill, x.walk and y.breathe, y.layStill, and y.walk? And perhaps there is a walk capability that both x.walk and y.walk derive from?

Tal Liron

This is close to TOSCA, but not quite.

One difference is that you actually do need a node type for Human and Wall, because that's how TOSCA currently works: every node template must have a "type" and these are custom assemblages of capabilities so they require a custom type. (I've been working on a draft for a new TOSCA grammar that doesn't need that. It is explicitly non-object-oriented.)

The other difference is that the requirement is not for a node, but for a capability. So you don't require a "thing" that breathes, instead you require "breathability", and the compiler will look for node templates that have it. Indeed the TOSCA grammar doesn't have a way to specify requirements as a group: each requirement can be satisfied by a different capability from a different node. What you can do is filter the target nodes, but even then you are working at the level of node templates and the instantiated relationships are up to the orchestrator.
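
The analogy, adjusted for these two differences, might be written as follows. Every name here is hypothetical, and how_well is modeled as an integer percentage. The requirement names a capability type, and the node_filter narrows the candidate node templates by a capability property.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

capability_types:
  example.capabilities.Breathe:
    derived_from: tosca.capabilities.Root
  example.capabilities.StopForwardProgress:
    derived_from: tosca.capabilities.Root
    properties:
      how_well:
        type: integer                # percent

node_types:
  example.nodes.Human:               # a node type is still needed for each entity
    derived_from: tosca.nodes.Root
    capabilities:
      breathe:
        type: example.capabilities.Breathe
      stop:
        type: example.capabilities.StopForwardProgress
  example.nodes.Wall:
    derived_from: tosca.nodes.Root
    capabilities:
      stop:
        type: example.capabilities.StopForwardProgress
  example.nodes.BallGame:
    derived_from: tosca.nodes.Root
    requirements:
      # The requirement is for a capability, not for a Human or a Wall.
      - backstop:
          capability: example.capabilities.StopForwardProgress

topology_template:
  node_templates:
    game:
      type: example.nodes.BallGame
      requirements:
        - backstop:
            node_filter:
              capabilities:
                - stop:
                    properties:
                      - how_well: { greater_or_equal: 99 }
    shortstop:
      type: example.nodes.Human
      capabilities:
        stop:
          properties:
            how_well: 99
    wall:
      type: example.nodes.Wall
      capabilities:
        stop:
          properties:
            how_well: 100
```

Both shortstop and wall pass the filter at 99; raising the constraint to 100 would leave only wall as a candidate.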

As for your thoughts on querying – I imagine any graph querying language would work here, though our topologies are so small that it's probably easier to crawl across them programmatically to find what you need. For the requirement filtering language the capability namespace is local to the target node template. TOSCA lets you use either the capability name or the capability type name. It definitely gets confusing.

If you haven't already, perhaps check out my online TOSCA course: part 1, part 2.

Chris Lauwers

I'm not sure types are much of a problem: they provide convenient groupings ("reusable components") and they allow for a lot of validation to prevent incorrect service templates. It could be useful, however, to support adding new capabilities to entities in an inventory without having to define those capabilities as part of the entity's type. We've discussed this type of "decoration" before in the TOSCA TC, and I think we should continue to explore this.

Of course, I don't think this decoration would be useful without addressing the more important issue that Tal brings up: in current TOSCA grammar, each requirement is for exactly one capability. I agree we should think about supporting "groups of capabilities" in requirements. While the result of fulfilling a requirement is a relationship between exactly two nodes, it should be possible to specify more than one required capability in the target node. You can sort of do this right now with node filters, but node filters are not the correct representation of the desired semantics in my opinion.

Tal Liron

Types are problematic due to single inheritance – what do you do with a VNF that functions both as a vRouter and as a Gateway? Just let each VNF be its own custom type. I really see no advantage in having a common basic node type, only disadvantages.
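
A sketch of the "each VNF is its own custom type" approach: the two roles become capabilities on one type instead of two parent types. The type and capability names are hypothetical.

```yaml
tosca_definitions_version: tosca_simple_yaml_1_2

capability_types:
  example.capabilities.Routing:
    derived_from: tosca.capabilities.Root
  example.capabilities.Gateway:
    derived_from: tosca.capabilities.Root

node_types:
  # Hypothetical VNF type: roles are expressed as capabilities, not as parent types.
  vendor.nodes.EdgeVNF:
    derived_from: tosca.nodes.Root
    capabilities:
      routing:
        type: example.capabilities.Routing
      gateway:
        type: example.capabilities.Gateway
```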

The result of fulfilling a requirement is specifically a relationship between one node to the capability "socket" of another node. I actually think this is one thing that TOSCA got right. If you have a requirement, it is fulfilled by a capability. The fact that the capability is provided by a node is an implementation detail.

Chris Lauwers

As it relates to types, I think the bigger problem with the NFV base type is that it creates an explicit distinction between VNFs and PNFs. I think models should be functional, and clearly a router provides routing functionality independent of whether it is a virtual or a physical router. The "virtualness" of a VNF is not an intrinsic aspect of the functionality of that VNF, it only describes its realization. So yes, the VNF base type is something that gets in the way rather than something that helps.

Multiple inheritance could help, but I personally believe that the additional complexity of implementing multiple inheritance isn't worth the potential benefits.

The relationship/capability aspects of TOSCA actually align well with the Component/System pattern as presented by Nigel Davis (although slightly pruned and refactored). They represent the "ports" of components that allow those components to be interconnected into systems.

Tal Liron

I have a whole plan for a "TOSCA 2.0" that does away with object-orientation and uses a more flexible and aggregated type system. It also flattens the distinction between type and template, another big source of confusion. If there's interest, I can present it to this Task Force.

Viswanath Kumar Skand Priya

Tal Liron - I'm interested to know more. Would you mind sending me any info, if you aren't going to present in the Arch / TOSCA TF in the near future? Thanks.

Tal Liron

I created a repository for it.

Chris Lauwers

Alexander Vul, I noticed you removed my name from the member list. Is there a formal membership process I failed to follow?

Gil Bullard

Here is the deck I shared today... (updated file to include just the slides I presented today)

Brinda Santh Muthuramalingam

Hi Team,

I work on the CCSDK, SDNC and related projects. In the Casablanca release we delivered the Controller Design Studio (CDS) and Controller Component Orchestration (using the SLI Directed Graph engine) through TOSCA V1.2 JSON definitions. We have a requirement to model operation definitions with policies (access control, re-trigger, etc.) and outputs.

Please suggest how to add those definitions in line with the TOSCA V1.2 standard, so that we do not end up with custom definitions.

I attach the TOSCA definitions we use and Models for reference.

Controller BluePrint Model

CDS Architecture, Design and TOSCA Definitions used:

Modification:

Alexander Vul

Folks,

I posted the presentation slides from today on the Wiki. Again, think of them as a straw man proposal that needs to be refined. What I would like to do is focus on the Dublin view of the world first, and the post-Dublin "utopia" second.

Best regards,

Alex

Chesla Wechsler

Has there been an effort to abstract out from the VNFD TOSCA what is common for NFs? For example, I could see creating the following and it could possibly replace both VNF and PNF with judicious use of capabilities:

tosca.nodes.nfv.NF:
  derived_from: tosca.nodes.Root
  properties:
    descriptor_id:
      type: string # GUID
      required: true
    descriptor_version:
      type: string
      required: true
    provider:
      type: string
      required: true
    product_name:
      type: string
      required: true
    software_version:
      type: string
      required: true
    product_info_name:
      type: string
      required: false
    product_info_description:
      type: string
      required: false
    localization_languages:
      type: list
      entry_schema:
        type: string
      required: false
    default_localization_language:
      type: string
      required: false
    flavour_id:
      type: string
      required: false # change from current type, should be attribute
    flavour_description:
      type: string
      required: false # change from current type, belongs in NFDF definition
    monitoring_parameters:
      type: list
      entry_schema:
        type: tosca.datatypes.nfv.NfMonitoringParameter
      description: Describes monitoring parameters applicable to the NF.
      required: false
  #capabilities:
    # monitoring_parameter:
      # modelled as ad hoc capabilities in the VNF node template
    # PNFs could be PhysicallyMoveable
    # VNFs could be VirtuallyMoveable
    # VNFs could be ManagedByVnfm
    # Both could be Configurable and/or Modifiable
  requirements:
    - virtual_link:
        capability: tosca.capabilities.nfv.VirtualLinkable
        relationship: tosca.relationships.nfv.VirtualLinksTo
        node: tosca.nodes.nfv.VirtualLink # Change
        occurrences: [ 0, UNBOUNDED ]

Work with OASIS on normative representations of compute, storage, architecture, etc. I'll just call them ExecutionEnvironmentAspects for this example.

tosca.nodes.nfv.ExecutionEnvironment: # may not need this, might just be composable

# Only VNFs could have this capability
tosca.capabilities.nfv.ManagedByVnfm:
  derived_from: tosca.capabilities.Root
  properties:
    vnfm_info:
      type: list
      entry_schema:
        type: string
      required: true

# Fraught with peril
tosca.capabilities.nfv.ManagedByPnfM:
  derived_from: tosca.capabilities.Root

# etc.

tosca.capabilities.nfv.LifeCycleManageable:
  derived_from: tosca.capabilities.Root
  properties:
    lcm_operations_configuration:
      type: tosca.datatypes.nfv.NfLcmOperationsConfiguration
      description: Describes the configuration parameters for the NF LCM operations
      required: true

tosca.capabilities.nfv.Configurable:
  derived_from: tosca.capabilities.Root
  properties:
    configurable_properties:
      type: tosca.datatypes.nfv.VnfConfigurableProperties # remove Vnf prefix?
      required: true

tosca.capabilities.nfv.Modifiable:
  derived_from: tosca.capabilities.Root
  properties:
    modifiable_attributes:
      type: tosca.datatypes.nfv.VnfInfoModifiableAttributes # remove Vnf prefix?
      required: true

tosca.capabilities.nfv.Moveable:
  derived_from: tosca.capabilities.Root

# Only PNFs could have this capability
tosca.capabilities.nfv.PhysicallyMoveable:
  derived_from: tosca.capabilities.nfv.Moveable

# Only VNFs could have this capability
tosca.capabilities.nfv.VirtuallyMoveable:
  derived_from: tosca.capabilities.nfv.Moveable

tosca.capabilities.nfv.PurposeBuiltPlatform:
  derived_from: tosca.capabilities.Root
  properties:
    provider:
      type: string
      required: true
    serial_number:
      type: string
      required: false
  attributes:
    serial_number:
      type: string
      required: true
  valid_source_types: []

Srinivasa Addepalli

What is TOSCA-X?

If the input is Helm, what does it look like in TOSCA-X? Is there a full conversion of Helm to TOSCA, or is TOSCA used to wrap the Helm chart?

Gaurav Mittal

Hi,

I am new to VNF packaging. I have a simple application containing two Docker containers in a single pod (a MariaDB container and a simple Spring Boot container).

Currently I use Helm commands to deploy this pod in the K8S cluster. I would like to bundle this as a VNF using TOSCA templates.

Could you kindly point me to some simple links on how to create a VNF package for a Docker (K8S pod) based application?

Regards,

Gaurav Mittal