AAI has a single instance of ONAP in the "A & AI" project in the windriver lab.

Once you are connected to the openlab via openvpn, use ssh to connect to the AAI rancher server as user 'ubuntu'.

Rancher server IP address is currently 10.12.6.205

$ ssh -i ~/.ssh/onap_key ubuntu@10.12.6.205

Become root:

$ sudo /bin/su

If you want to test out a change to one of AAI's charts, make the change and submit it to Gerrit.

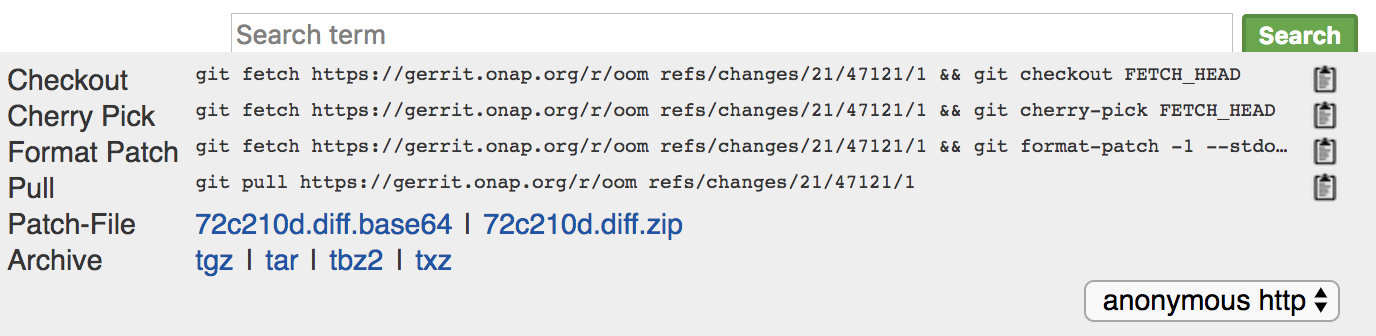

Cherry-pick your change (this example pulls patch set 1 of change 47121). Clicking "Download" on the Gerrit change you want to cherry-pick opens a dialog box with the command, which you can copy to your clipboard:

$ cd /root/oom

$ git fetch https://gerrit.onap.org/r/oom refs/changes/21/47121/1 && git cherry-pick FETCH_HEAD
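The ref in the fetch command follows Gerrit's naming scheme: refs/changes/, then the last two digits of the change number, then the change number, then the patch set. As a minimal sketch, a small helper can build that ref for any change. The function name `gerrit_ref` is hypothetical and is not part of OOM or Gerrit.

```shell
# Hypothetical helper: build the Gerrit ref for a change number and patch set.
gerrit_ref() {
  local change=$1 patchset=$2
  local shard
  # Gerrit groups change refs by the last two digits of the change number,
  # zero-padded to two characters.
  shard=$(printf '%02d' $((change % 100)))
  printf 'refs/changes/%s/%s/%s\n' "$shard" "$change" "$patchset"
}

# Usage on the rancher server:
#   cd /root/oom
#   git fetch https://gerrit.onap.org/r/oom "$(gerrit_ref 47121 1)" && git cherry-pick FETCH_HEAD
```

This only saves retyping the ref when you test a newer patch set of the same change.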

The change is now local to the rancher server, where you can try it out.

Run the following to get your change applied:

$ cd /root/oom/kubernetes

$ make all

$ cd /root

$ helm upgrade -i dev local/onap --namespace onap -f integration-override.yaml --set aai.enabled=false

$ helm upgrade -i dev local/onap --namespace onap -f integration-override.yaml --set aai.enabled=true
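The two helm upgrade calls first disable AAI and then enable it again, which appears intended to have the AAI pods recreated from the rebuilt charts. The sketch below wraps that toggle in a function that stops if either step fails. The name `redeploy_aai` and its default arguments are illustrative; the helm flags are the ones used in the commands above.

```shell
# Hypothetical wrapper: disable AAI, then re-enable it, stopping on the first failure.
redeploy_aai() {
  local release=${1:-dev} overrides=${2:-integration-override.yaml}
  local enabled
  for enabled in false true; do
    helm upgrade -i "$release" local/onap --namespace onap \
      -f "$overrides" --set "aai.enabled=$enabled" || return 1
  done
}

# Usage (run after rebuilding the charts):
#   (cd /root/oom/kubernetes && make all) && cd /root && redeploy_aai
```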

Look at the AAI pods:

$ kubectl get pods -n onap | grep aai

You'll see something similar to this if everything came up correctly:

root@rancher:/home/ubuntu# kubectl get pods -n onap | grep aai
dev-aai-77d54bc645-kkmb8                 1/1   Running   0   22h
dev-aai-babel-7cf9689996-jt866           2/2   Running   0   22h
dev-aai-cassandra-0                      1/1   Running   0   12h
dev-aai-cassandra-1                      1/1   Running   0   22h
dev-aai-cassandra-2                      1/1   Running   0   22h
dev-aai-champ-5dfdf9dfb5-7fc79           1/1   Running   0   15h
dev-aai-data-router-7989bcfcb4-p5qwn     1/1   Running   0   22h
dev-aai-elasticsearch-7fc496d745-tf67m   1/1   Running   0   22h
dev-aai-gizmo-5c46b4fc4c-8d42w           2/2   Running   0   22h
dev-aai-hbase-5d9f9b4595-fx82p           1/1   Running   5   22h
dev-aai-modelloader-787b7c9c54-jnd9j     2/2   Running   0   22h
dev-aai-resources-6c6f56cd86-6clpb       2/2   Running   0   22h
dev-aai-search-data-5f8465445f-l7j8n     2/2   Running   0   22h
dev-aai-sparky-be-687fc7487d-7mqzg       2/2   Running   1   15h
dev-aai-traversal-7955b55464-tz98p       2/2   Running   0   22h
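With this many pods it is easy to miss one that did not come up. As a rough sketch, the filter below prints only the rows whose STATUS is not Running or whose READY count is incomplete. The function name `pods_not_ready` is hypothetical, and it assumes the default column order of `kubectl get pods` (NAME, READY, STATUS, RESTARTS, AGE).

```shell
# Hypothetical filter: read `kubectl get pods` rows on stdin and print
# the name, READY and STATUS of every pod that is not fully up.
pods_not_ready() {
  awk '{
    split($2, ready, "/")   # READY looks like "1/2": ready containers / total
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
  }'
}

# Usage:
#   kubectl get pods -n onap --no-headers | grep aai | pods_not_ready
```

No output means every listed pod is Running with all of its containers ready. Pods of finished jobs would also be printed, since their status is not Running.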

Run a healthdist to check the AAI services:

$ cd /root/oom/kubernetes/robot; ./ete-k8s.sh onap healthdist

Log into the resources node:

$ kubectl exec -it -n onap $(kubectl get pods -n onap | grep dev-aai-resources | awk '{print $1}') -- /bin/bash
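The grep-based lookup returns every pod whose name contains the pattern, so the exec command breaks if more than one pod matches. A minimal sketch of a lookup that returns only the first pod whose name starts with a given prefix follows. The helper name `first_pod` is hypothetical.

```shell
# Hypothetical helper: print the name of the first pod in a namespace
# whose name starts with the given prefix.
first_pod() {
  local prefix=$1 ns=${2:-onap}
  kubectl get pods -n "$ns" --no-headers |
    awk -v p="$prefix" '!found && index($1, p) == 1 { print $1; found = 1 }'
}

# Usage:
#   kubectl exec -it -n onap "$(first_pod dev-aai-resources)" -- /bin/bash
```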

From the resources container you can look at logs to see application status, or send requests via curl to test the API.

13 Comments

Zi Li

Hi Jimmy,

How many resources are needed to set up AAI with helm? I tried to set it up on a k8s worker node with 12 vCPUs, 64 GB RAM and a 160 GB disk, but in the end the status of several pods was Evicted.

James Forsyth

Hi, Zi Li - that should work for you - which ones are evicted? Do you get any logs for the failed ones?

Zi Li

In my case, data-router, hbase and search-data are all evicted, and sparky-be and traversal stay in status "Init:0/1".

The details of the evicted pod are shown below:

ubuntu@k8s-master:~$ kubectl describe pod aai-aai-data-router-74b89f4449-ft6j5 -n onap
Name:               aai-aai-data-router-74b89f4449-ft6j5
Namespace:          onap
Priority:           0
PriorityClassName:  <none>
Node:               k8s-worker1/
Start Time:         Thu, 16 Aug 2018 09:09:17 +0000
Labels:             app=aai-data-router
                    pod-template-hash=3064590005
                    release=aai
Annotations:        <none>
Status:             Failed
Reason:             Evicted
Message:            The node was low on resource: ephemeral-storage. Container aai-data-router was using 136048Ki, which exceeds its request of 0.
IP:
Controlled By:      ReplicaSet/aai-aai-data-router-74b89f4449
Init Containers:
  init-sysctl:
    Image:      docker.io/busybox
    Port:       <none>
    Host Port:  <none>
    Command:
      /bin/sh
      -c
      mkdir -p /logroot/data-router/logs
      chmod -R 777 /logroot/data-router/logs
      chown -R root:root /logroot
    Environment:
      NAMESPACE:  onap (v1:metadata.namespace)
    Mounts:
      /logroot/ from aai-aai-data-router-logs (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-6wpx5 (ro)
Containers:
  aai-data-router:
    Image:      nexus3.onap.org:10001/onap/data-router:1.2.2
    Port:       9502/TCP
    Host Port:  0/TCP
    Liveness:   tcp-socket :9502 delay=10s timeout=1s period=10s #success=1 #failure=3
    Readiness:  tcp-socket :9502 delay=10s timeout=1s period=10s #success=1 #failure=3
    Environment:
      SERVICE_BEANS:         /opt/app/data-router/dynamic/conf
      CONFIG_HOME:           /opt/app/data-router/config/
      KEY_STORE_PASSWORD:    OBF:1y0q1uvc1uum1uvg1pil1pjl1uuq1uvk1uuu1y10
      DYNAMIC_ROUTES:        /opt/app/data-router/dynamic/routes
      KEY_MANAGER_PASSWORD:  OBF:1y0q1uvc1uum1uvg1pil1pjl1uuq1uvk1uuu1y10
      PATH:                  /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
      JAVA_HOME:             usr/lib/jvm/java-8-openjdk-amd64
    Mounts:
      /etc/localtime from localtime (ro)
      /logs/ from aai-aai-data-router-logs (rw)
      /opt/app/data-router/config/auth from aai-aai-data-router-auth (rw)
      /opt/app/data-router/config/data-router.properties from aai-aai-data-router-properties (rw)
      /opt/app/data-router/config/schemaIngest.properties from aai-aai-data-router-properties (rw)
      /opt/app/data-router/dynamic/conf/entity-event-policy.xml from aai-aai-data-router-dynamic-policy (rw)
      /opt/app/data-router/dynamic/routes/entity-event.route from aai-aai-data-router-dynamic-route (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-6wpx5 (ro)
Volumes:
  localtime:
    Type:          HostPath (bare host directory volume)
    Path:          /etc/localtime
    HostPathType:
  aai-aai-data-router-auth:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  aai-aai-data-router
    Optional:    false
  aai-aai-data-router-properties:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      aai-aai-data-router-prop
    Optional:  false
  aai-aai-data-router-dynamic-route:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      aai-aai-data-router-dynamic
    Optional:  false
  aai-aai-data-router-dynamic-policy:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      aai-aai-data-router-dynamic
    Optional:  false
  aai-aai-data-router-logs:
    Type:          HostPath (bare host directory volume)
    Path:          /dockerdata-nfs/aai/aai/data-router/logs
    HostPathType:
  default-token-6wpx5:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-6wpx5
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:          <none>
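In a long describe dump like this one, the fields that explain the eviction are the pod-level Status, Reason and Message. As a minimal sketch, the filter below pulls out just those three. The name `eviction_summary` is hypothetical, and the filter relies on kubectl's usual layout, in which pod-level fields start in the first column and container-level fields are indented.

```shell
# Hypothetical filter: read `kubectl describe pod` output on stdin and
# print only the pod-level Status, Reason and Message fields.
eviction_summary() {
  awk '/^(Status|Reason|Message):/ {
    key = $1                        # e.g. "Reason:"
    sub(/^[^:]*:[ \t]*/, "")        # drop the key and its padding from the line
    print key " " $0
  }'
}

# Usage:
#   kubectl describe pod aai-aai-data-router-74b89f4449-ft6j5 -n onap | eviction_summary
```

For the pod above, the Message field shows that the node ran low on ephemeral storage, which points at disk space on the worker node rather than at CPU or RAM.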

Zi Li

Hi James Forsyth, for the minimum function of AAI (to store/query/update data), I think I could remove some charts to reduce resource consumption. Maybe sparky/modelloader/elasticsearch could be removed? Any suggestions for a minimal AAI system?

James Forsyth

I think the bare minimum configuration to do store/query/update graph data would be resources, traversal, haproxy, Cassandra. Venkata Harish Kajur anything else?

Zi Li

Also, after I added a new worker node to the k8s cluster, I ran "helm del --purge aai" and then tried helm install again. I got the error "persistent volumes aai-aai-cassandra-0 already exists". It seems that the persistent volumes cannot be deleted with helm.

So, do you know how to handle re-installing AAI in the original k8s environment?

James Forsyth

Venkata Harish Kajur or Pavel Paroulek have you guys seen this before?

Pavel Paroulek

Hi James Forsyth, no I haven't.

I don't know what storage provider is used in openlab (by default AAI uses the default storage provider). If this is not a single occurrence of this error message (it might be, for example if someone had already installed AAI), it would be better to open a JIRA bug.

Zi Li

Hi James Forsyth, Pavel Paroulek, I ran "kubectl get pvc -n onap" and then deleted all the PVC items. After that, AAI could be re-installed.

Pavel Paroulek

Hi Zi Li, I am not sure where the error comes from but what you could do is:

Before deleting the volumes in step 1, could you please paste the output of the following command here: kubectl describe pv aai-aai-cassandra-0 -n onap

Thanks!

Brian Freeman

What I tend to do on a re-install of an entire project is to run kubectl -n onap get not only for pv but also for pvc, security, services, and make sure the project is gone.

Then I go to /dockerdata-nfs/dev and delete the subtending directory for that project.

Then I do the helm upgrade with --set project.enabled=true.

The page "K8S / helm basic commands for ONAP integration" has some of these commands.
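The check described above can be sketched as a single lookup that lists whatever is still left of a project before it is re-installed. The function name `leftover_resources` is hypothetical, and reading "security" as the `secrets` resource type is an assumption. The match is a plain substring match on the resource name, so review the list before deleting anything.

```shell
# Hypothetical helper: list PVs, PVCs, secrets and services whose names
# still contain the given project or release name.
# Assumption: "secrets" is the resource type meant by "security" above.
leftover_resources() {
  local name=$1 ns=${2:-onap}
  # -o name prints one "<type>/<name>" entry per line.
  # "|| true" keeps the exit status at 0 when nothing is left.
  kubectl get pv,pvc,secrets,services -n "$ns" -o name | grep -- "$name" || true
}

# Usage:
#   leftover_resources aai
```

An empty result suggests the project is gone from the cluster; the directory under /dockerdata-nfs still has to be checked separately.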

Pavel Paroulek

Maybe there should be a purging mechanism as these manual steps seem error prone. It even seems easier to spin up a new VM (j/k)


Brian Freeman

Feel free to submit a proposed script, perhaps to the integration project? It would be a good topic for the integration meeting on Wednesdays.