Current status (summer 2019)

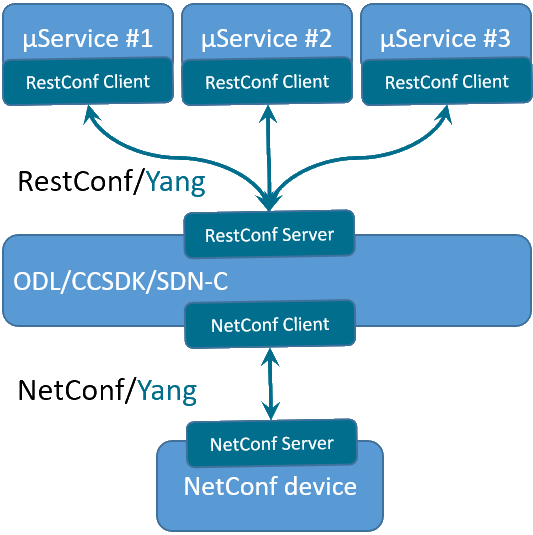

The figure above shows the current communication from any µService northbound of the controller towards NetConf-controlled devices. Each µService can use the RestConf interfaces that ODL autogenerates on the fly for device configuration. The exposed RestConf interface is derived from the same YANG modules that the device advertises during the NetConf `<hello-message>` exchange.

Perfect!!! ;)

… but,

- Experience showed that the independent µServices are not aligned (and should not be aligned), which leads to repeated GET requests to the devices. Especially after a session reconnect, the µServices update their internal databases and request very similar information (how many SFPs, how many traffic interfaces, status, ...).

- It also happened that concurrent configuration requests from the µServices led to errors and misbehavior (e.g. device is locked, …).

- µServices need to know which SDN-C instance is “responsible” for a certain NetConf session to the device.

- Other µServices cannot directly learn the result of a configuration, since the RestConf interface is a one-to-one communication channel.

Motivation

- ONAP components usually communicate via the message bus DMaaP.

- µServices should be informed about configuration results via DMaaP.

- Provide a generic way based on the schema information (model-driven, based on YANG modules) learned by ODL, avoiding specific DMaaP listener implementations for individual configurations. Note that more and more APIs are described in YANG (e.g. O-RAN, OpenROADM, TAPI, ...).

Future?

Using a message bus gives µServices the advantage that communication with a certain NetConf device can happen

- without requesting the same data from the device twice within a short time period (e.g. after a reconnect), because µService x can subscribe to the responses for µService y,

- without knowing the master controller of the NetConf connection for this device.

A new component in ONAP SDNC, called "(yang) model driven DMaaP Agent", could provide the required functionality.

Requirements

The existing RestConf communication is not affected.

All NetConf/RestConf operations should be supported.

SDNC-887

Use Cases

The following use cases should be considered when designing the functionality of the "model-driven DMaaP Agent".

- devices with well-known YANG modules (already in routine operation)

- a new device with "unknown" yangs

NetConf operations

| Operation | Description | Topic proposal | New proposal (based on topic NETCONF_TOPOLOGY_CHANGE) |
|---|---|---|---|
| `<get>` | Retrieve running configuration and device state information | `NETCONF_<node-id>` | n/a |
| `<get-config>` | Retrieve all or part of a specified configuration datastore | same as above | n/a |
| `<edit-config>` | Edit a configuration datastore by creating, deleting, merging or replacing content | same as above | n/a |
| `<copy-config>` | Copy an entire configuration datastore to another configuration datastore | same as above | n/a |
| `<delete-config>` | Delete a configuration datastore | same as above | n/a |
| `<lock>` | Lock an entire configuration datastore of a device | same as above | `NETCONF_LOCK` |
| `<unlock>` | Release a configuration datastore lock previously obtained with the `<lock>` operation | same as above | `NETCONF_LOCK` (deliberately not `NETCONF_UNLOCK`) |
| `<close-session>` | Request graceful termination of a NETCONF session | same as above | covered by `NETCONF_TOPOLOGY_CHANGE` |
| `<kill-session>` | Force the termination of a NETCONF session | same as above | covered by `NETCONF_TOPOLOGY_CHANGE` |

Pushback from the highstreet dev team:

- too much spam on DMaaP

- DMaaP is made for streaming, not for responses (not fully understood)

- adding operation RESPONSES on DMaaP: the context of the response is unknown to the µService → meaningless

- JSON messages become too big with the original topic proposal → a finer-grained topic name is required; only the µService should define the topic → an API is needed to instruct SDN-R to provide dynamic topics.

new proposal

- µServices should be informed about NetConf topology changes (connect, disconnect, YANG capabilities) → topic: NETCONF_TOPOLOGY_CHANGE

- The µService can then decide whether it is affected, and it knows the supported YANG modules, the node name and the responsible controller.

- With this information the µService can use RestConf
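A minimal sketch of how a µService might react to a NETCONF_TOPOLOGY_CHANGE message. It assumes a JSON payload with the hypothetical fields `node-id`, `status`, `controller` and `capabilities`; the notes do not define a schema yet, and capabilities are simplified to plain YANG module names.

```python
import json
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class TopologyChange:
    """Hypothetical payload of a NETCONF_TOPOLOGY_CHANGE message."""
    node_id: str
    status: str       # assumed values, e.g. "connected" / "disconnected"
    controller: str   # SDN-R instance responsible for the NetConf session
    capabilities: List[str] = field(default_factory=list)  # YANG modules from the hello exchange


def parse_event(raw: str) -> TopologyChange:
    data = json.loads(raw)
    return TopologyChange(
        node_id=data["node-id"],
        status=data["status"],
        controller=data["controller"],
        capabilities=data.get("capabilities", []),
    )


def is_relevant(event: TopologyChange, required_modules: Set[str]) -> bool:
    """The µService is affected if the node is connected and supports all modules it needs."""
    return event.status == "connected" and required_modules.issubset(event.capabilities)


if __name__ == "__main__":
    raw = ('{"node-id": "pnf-1", "status": "connected", "controller": "sdnc-0", '
           '"capabilities": ["ietf-interfaces", "o-ran-hardware"]}')
    event = parse_event(raw)
    if is_relevant(event, {"o-ran-hardware"}):
        # From here on the µService talks RestConf to the responsible controller.
        print(f"use RestConf on {event.controller} for {event.node_id}")
```

The filtering stays on the µService side, which matches the proposal: SDN-R only announces the change, and each µService decides for itself.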

Selected YANG statements

| YANG statement | Description | Topic proposal | New proposal (based on topic NETCONF_TOPOLOGY_CHANGE) |
|---|---|---|---|
| `rpc` | The "rpc" statement is used to define an RPC operation. | `NETCONF_<node-id>` | n/a |
| `action` | The "action" statement is used to define an operation connected to a specific container or list data node. | same as above | n/a |
| `notification` | The "notification" statement is used to define a notification. | `NETCONF_<node-id>_NOTIFICATION` | `NETCONF_NOTIFICATION` |
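The two tables above can be condensed into a small lookup that contrasts the original per-node topics with the new proposal. This is only an illustration of the tables; the helper names `original_topic` and `new_topic` are hypothetical.

```python
from typing import Optional

# New proposal: only locking, session termination and notifications get a topic.
# Everything else ("n/a" in the tables) stays on RestConf, represented here as None.
NEW_PROPOSAL = {
    "lock": "NETCONF_LOCK",
    "unlock": "NETCONF_LOCK",  # deliberately the same topic as <lock>
    "close-session": "NETCONF_TOPOLOGY_CHANGE",
    "kill-session": "NETCONF_TOPOLOGY_CHANGE",
    "notification": "NETCONF_NOTIFICATION",
}


def original_topic(kind: str, node_id: str) -> str:
    """Original proposal: one topic per node, plus a separate one for notifications."""
    if kind == "notification":
        return f"NETCONF_{node_id}_NOTIFICATION"
    return f"NETCONF_{node_id}"


def new_topic(kind: str) -> Optional[str]:
    """New proposal: a shared topic, or None when the operation stays on RestConf."""
    return NEW_PROPOSAL.get(kind)
```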

Notes from meetings (to be updated):

Overall Workflow

- A new NetConf device is attached.

Available: create a mount point in the ODL NetConf topology.

(Q: Is there an "odl mount" topic? A: Yes, e.g. PNF-PnP.)

Note: it may or may not be usable for this use case; at least it needs to be extended.

Assumption: a new topic, specific to the NetConf node but generic for all messages from and to the node.

topic-name: sdnc/node-id → makes it globally unique

[Dongho] Is node-id here something like a YANG file name for a device? [sko] node-id could be a device name, the FQDN of the device, ... SO → pnfName from AAI
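The proposed naming can be sketched as a helper that builds `sdnc/<node-id>` plus the optional sub-topics mentioned later in these notes (e.g. `notification`). The function `node_topic` and the conservative character set allowed for node-ids are assumptions, not part of the proposal.

```python
import re
from typing import Optional

# Assumption: restrict node-ids (device name, FQDN, pnfName) to a conservative character set.
NODE_ID_PATTERN = re.compile(r"^[A-Za-z0-9._-]+$")


def node_topic(node_id: str, suffix: Optional[str] = None, prefix: str = "sdnc") -> str:
    """Build 'sdnc/<node-id>' or 'sdnc/<node-id>/<suffix>' as proposed in the notes."""
    if not NODE_ID_PATTERN.match(node_id):
        raise ValueError(f"unsupported node-id: {node_id!r}")
    topic = f"{prefix}/{node_id}"
    return f"{topic}/{suffix}" if suffix else topic
```

For example, `node_topic("ru1.example.com")` yields `sdnc/ru1.example.com`, and `node_topic("pnf-1", "notification")` yields `sdnc/pnf-1/notification`. The `sdnc` prefix keeps the name unique across ONAP components, while the node-id keeps it unique within SDNC.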

Can we have an example? [sko] Here you go: https://docs.opendaylight.org/en/stable-oxygen/user-guide/netconf-user-guide.html#spawning-new-netconf-connectors
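Following the linked Oxygen user guide, a NetConf connector is spawned with a RESTCONF PUT on the `topology-netconf` node. The sketch below only builds the URL and XML body (it sends nothing); the base URL, host, port and credentials are placeholders, and only the mandatory fields from the guide are included.

```python
import xml.etree.ElementTree as ET

RESTCONF_BASE = "http://localhost:8181/restconf"  # assumption: local ODL, default RESTCONF port
TOPOLOGY_NS = "urn:TBD:params:xml:ns:yang:network-topology"
NETCONF_NODE_NS = "urn:opendaylight:netconf-node-topology"


def mount_request(node_id: str, host: str, port: int, username: str, password: str):
    """Return (url, xml_body) for a PUT that creates a NetConf mount point in ODL."""
    url = (f"{RESTCONF_BASE}/config/network-topology:network-topology/"
           f"topology/topology-netconf/node/{node_id}")
    node = ET.Element("node", xmlns=TOPOLOGY_NS)
    ET.SubElement(node, "node-id").text = node_id
    # Connection parameters live in the netconf-node-topology namespace.
    for tag, value in (("host", host), ("port", str(port)), ("username", username),
                       ("password", password), ("tcp-only", "false")):
        ET.SubElement(node, tag, xmlns=NETCONF_NODE_NS).text = value
    return url, ET.tostring(node, encoding="unicode")


if __name__ == "__main__":
    url, body = mount_request("pnf-1", "10.0.0.5", 830, "admin", "admin")
    print("PUT", url)  # send with Content-Type: application/xml
    print(body)
```

A "generic mount listener" would react to exactly this kind of new node in the NetConf topology.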

Let's call it the "generic mount listener".

- ODL gets a YANG model and creates the corresponding RESTCONF northbound (N/B) interface.

Available: NetConf hello-message, plus existing ODL functionality.

Note: RestConf already exists for known YANG modules of already mounted devices.

- A user configures something for the DMaaP interface of that new device (e.g. topic name, Message Router service name, etc.).

The user puts the changes on the message bus, and ODL needs to "grep" them (new functionality → generic DMaaP Listener).

(Q: Not sure whether we can automate this step?)

(A: There is nothing that cannot be automated. Q: What do you think we are missing?)

- Then our new module starts listening to that topic on the specified MR.

(This already happens in step 1, due to the change made there: the generic mount listener.)

- ‘Somehow’ the YANG model should be exposed to the µS developer.

(Q: Do you have any idea how a µS can see such a YANG model?

I know you already have a GUI in SDN-R; can we see the YANG model there?)

A: Look at the function used by the apidoc/explorer (i.e. the methodology Swagger uses for YANG modules, following the RFC mapping of YANG to RestConf).

Note: already known YANG modules (known devices, payloads, ...) don't need that discovery.

- Based on that YANG model, the µS sends a DMaaP message to a topic.

Four cases need to be defined:

a) config and one-time GET operations (happen only once)

> no need for its own (new) topic → topic is sdnc/node-id

By Sandeep: a topic per node-id might not be the best option → add the ability to be generic → more discussion needed.

> The operation (GET, PUT, DELETE), the URL and the payload are part of the message.
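A minimal sketch of how the agent might turn such a message into a RESTCONF request on the device's mount point. It assumes a hypothetical JSON message with `operation`, `url` (relative to the mount point) and `payload` fields, and ODL's `yang-ext:mount` path convention; the µService never has to address localhost/restconf itself.

```python
import json
from typing import NamedTuple, Optional

RESTCONF_BASE = "http://localhost:8181/restconf"  # assumption: local ODL, hidden from the µService
ALLOWED_OPERATIONS = {"GET", "PUT", "DELETE"}


class RestconfRequest(NamedTuple):
    method: str
    url: str
    body: Optional[str]


def translate(node_id: str, raw_message: str) -> RestconfRequest:
    """Map a (hypothetical) DMaaP config message to a RESTCONF request for one node."""
    msg = json.loads(raw_message)
    operation = msg["operation"].upper()
    if operation not in ALLOWED_OPERATIONS:
        raise ValueError(f"operation {operation!r} not supported for config messages")
    path = msg["url"].lstrip("/")
    url = (f"{RESTCONF_BASE}/config/network-topology:network-topology/topology/"
           f"topology-netconf/node/{node_id}/yang-ext:mount/{path}")
    payload = msg.get("payload")
    # Only PUT carries a body; GET and DELETE are sent without one.
    body = json.dumps(payload) if operation == "PUT" and payload is not None else None
    return RestconfRequest(operation, url, body)
```

The node-id would come from the topic (`sdnc/<node-id>`), so the message itself only needs to carry the operation, the path and the payload.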

Q: What should happen with the response (200, 201, 401, 500)? Do we need such responses on DMaaP? [Dongho] I think we might need some level of status of the config request in a response message; not sure how much detail we need. Did we reach any agreement about it on the call?

Jeff: such codes are already available in the Karaf logs and should be used.

b) operational data

TO BE CONTINUED

What is the main difference to a)? The topic and the responses.

no PUT/POST

changeEventNotifications

Topic is sdnc/node-id/specificIdToSubscribeTo (container, yang-module ???)

c) notifications

A subscription mechanism will drive the topic and whether the NetConf notification can be added as is to DMaaP.

topic: sdnc/node-id/notification (generic way, maybe in a second step more specific)

Whatever data structure comes from the device is reused and forwarded to DMaaP.
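A minimal sketch of the generic forwarding step: the device notification is passed through unchanged and published on `sdnc/<node-id>/notification`. The wrapper fields `node-id` and `received` are hypothetical additions, not something the notes define.

```python
import json
from datetime import datetime, timezone
from typing import Tuple


def forward_notification(node_id: str, device_notification: dict) -> Tuple[str, str]:
    """Return (topic, message) for a NetConf notification; the device payload is not modified."""
    topic = f"sdnc/{node_id}/notification"
    message = {
        "node-id": node_id,                                  # hypothetical metadata
        "received": datetime.now(timezone.utc).isoformat(),  # hypothetical metadata
        "notification": device_notification,                 # forwarded as-is
    }
    return topic, json.dumps(message)
```

Keeping the device structure untouched means the agent needs no per-model code; the open point is how µServices learn the notification schema.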

How to expose the schema of notifications: to be defined.

d) (yang-statement) rpc

Each RPC gets its own topic to add the response on the DMaaP.

These will be HTTP POSTs.

topic is sdnc/node-id (similar to HTTP-PUT, see a))
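For case d), a sketch of the HTTP POST the agent might issue for a YANG `rpc` on a mounted device, assuming ODL's `/restconf/operations/.../yang-ext:mount/<module>:<rpc>` path style. The module and RPC names are made up, and the exact JSON input wrapper may differ between RESTCONF versions.

```python
import json
from typing import Optional, Tuple

RESTCONF_BASE = "http://localhost:8181/restconf"  # assumption: local ODL


def rpc_request(node_id: str, module: str, rpc: str,
                payload: Optional[dict] = None) -> Tuple[str, str, str]:
    """Return (method, url, body) for invoking a device RPC through its mount point."""
    url = (f"{RESTCONF_BASE}/operations/network-topology:network-topology/topology/"
           f"topology-netconf/node/{node_id}/yang-ext:mount/{module}:{rpc}")
    # RPC input is wrapped in an "input" container; an RPC without input sends an empty one.
    body = json.dumps({"input": payload or {}})
    return "POST", url, body


if __name__ == "__main__":
    # "example-module" and "restart" are hypothetical names.
    print(rpc_request("pnf-1", "example-module", "restart"))
```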

[Dongho] One question: is it easy to translate a DMaaP message to RESTCONF? A:

- Based on that YANG model, the µS sends a DMaaP message to a topic.

- Our new module will get it and translate the received DMaaP (JSON) message to RESTCONF (Q: Will this be feasible?)

Yes. Target: Frankfurt; message body in JSON; trying to avoid addressing localhost/restconf.

- Once the new module gets a response from NetConf, it posts a response message to a topic.

Yes: the NetConf response goes via HTTP POST to DMaaP.

- The µS will get the response.

Yes, via DMaaP.
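A minimal sketch of the response message the agent could post back after the RESTCONF call, reusing the HTTP status codes discussed above (200, 201, 401, 500). The field names and the `request-id` correlation field are hypothetical.

```python
import json
from http import HTTPStatus
from typing import Optional


def build_response(request_id: str, status_code: int, body: Optional[str] = None) -> str:
    """Wrap a RESTCONF result into a (hypothetical) DMaaP response message."""
    return json.dumps({
        "request-id": request_id,                   # correlates with the original DMaaP request
        "status-code": status_code,
        "status": HTTPStatus(status_code).phrase,   # e.g. "Created", "Unauthorized"
        "success": 200 <= status_code < 300,
        "body": json.loads(body) if body else None,  # e.g. data returned by a GET
    })
```

Carrying a request identifier addresses the pushback that a response on DMaaP has no context for the µService.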

Question: how does the model-driven DMaaP Agent interwork with the VES-Collector?

Automated "topic" creation is needed - align with DMaaP.

How many topics are supported?

How do we integrate with AAF (security)?

[Dongho] One more topic to be discussed: do we need to define a generic set of headers for DMaaP messages, such as msgID, closedloopname, msgtype (e.g. request, response), etc.?

Yes. Assumption: a header inside the message content, in addition to the DMaaP header.
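A minimal sketch of such an in-message header, built from the fields Dongho lists (msgID, closedloopname, msgtype). The camel-case spelling, the `header`/`body` envelope and the helper names are assumptions.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, Optional

MSG_TYPES = {"request", "response"}


@dataclass
class AgentHeader:
    """Hypothetical header carried inside the message content (not the DMaaP header)."""
    msgType: str
    msgID: str = field(default_factory=lambda: str(uuid.uuid4()))
    closedLoopName: Optional[str] = None

    def __post_init__(self) -> None:
        if self.msgType not in MSG_TYPES:
            raise ValueError(f"unknown msgType: {self.msgType!r}")


def wrap(header: AgentHeader, body: Dict[str, Any]) -> str:
    return json.dumps({"header": asdict(header), "body": body})


def reply_header(request: AgentHeader) -> AgentHeader:
    # Reuse msgID so the µService can correlate the response with its request.
    return AgentHeader(msgType="response", msgID=request.msgID,
                       closedLoopName=request.closedLoopName)
```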

Comments

Herbert Eiselt

DMaaP specifies two roles: Provider and Consumer. The agent seems to be a "Consumer". Where is the provider implemented? (Referring to: https://onap.readthedocs.io/en/amsterdam/submodules/dmaap/messagerouter/messageservice.git/docs/message-router/message-router.)

Dongho Kim

This agent is a bidirectional bridge between DMaaP (other ONAP components such as µServices or Policy will send messages via DMaaP) and ODL (which is connected to the NetConf devices).

For the direction from DMaaP to ODL, it would be considered a consumer, as you pointed out. Examples would be the GET, PUT and DELETE requests mentioned above.

For the direction from ODL to DMaaP, it would be a provider (from the agent's point of view). Examples would be parameter values in response to a GET request, or a response message reporting success/failure of a PUT or DELETE request.
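The two roles map onto the two Message Router REST calls: a provider publishes with `POST /events/{topic}`, and a consumer polls with `GET /events/{topic}/{consumerGroup}/{consumerId}`, which returns a JSON array of messages. The sketch only builds the URLs. The Message Router address is an assumption. Topic names like `sdnc/pnf-1` are percent-encoded here, and whether Message Router accepts `/` in topic names would still need to be checked.

```python
from urllib.parse import quote, urlencode

MR_BASE = "http://message-router:3904"  # assumption: in-cluster Message Router address


def publish_url(topic: str) -> str:
    """Provider side (ODL → DMaaP): POST the message body to this URL."""
    return f"{MR_BASE}/events/{quote(topic, safe='')}"


def subscribe_url(topic: str, group: str, consumer_id: str,
                  timeout_ms: int = 15000, limit: int = 10) -> str:
    """Consumer side (DMaaP → ODL): long-poll this URL with GET."""
    query = urlencode({"timeout": timeout_ms, "limit": limit})
    parts = "/".join(quote(p, safe="") for p in (topic, group, consumer_id))
    return f"{MR_BASE}/events/{parts}?{query}"
```

For example, the agent would poll `subscribe_url("sdnc/pnf-1", "dmaap-agent", "agent-0")` for incoming requests and post responses to `publish_url(...)` of the response topic. The group and consumer ids here are hypothetical.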