TODO: Update this page and link it to "Running the ONAP Demos".

TODO (20171207):

- Finalize cloud_config.json for using MultiCloud to create the VF module.

MultiCloud Support in OOM

OOM-493

TODO (20171207):

OOM Challenges

- Compile a short list of the kubectl commands needed for OOM.

- When the OOM Kubernetes pods are restarted, all information in OOM is wiped. The complete service distribution, the A&AI steps, and the VNF/VF creation then have to be redone from scratch.

This page captures the information, challenges, and troubleshooting tricks needed to run the vFWCL demo successfully. It assumes that:

- OpenStack/VIO has been deployed successfully in a multi-node environment.

- Firewall rules on the Horizon dashboard have been set up to allow SSH, HTTP, and HTTPS.

- If your environment is behind a proxy, ports 10001 to 10005 towards the open Internet have been opened.
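Before deploying, it can help to confirm from each ONAP VM that these ports are reachable, since the Docker pull errors later on this page target nexus3.onap.org:10001. A minimal Python sketch, assuming a direct TCP connection is possible (it does not go through an HTTP proxy); the host is only an example:

```python
import socket

def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False

def check_ports(host: str, ports) -> dict:
    """Map each port to whether it is reachable from this machine."""
    return {port: port_reachable(host, port) for port in ports}

if __name__ == "__main__":
    # Example registry host; replace with the one your environment actually uses.
    for port, ok in check_ports("nexus3.onap.org", range(10001, 10006)).items():
        print(f"{port}: {'open' if ok else 'unreachable'}")
```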

Running the vFW demo requires two workflows: vFW onboarding and vFW instantiation.

a. Important points to take care of:

a.1 Use only the vFWCL zip images. For the Amsterdam release, vFW has been split into two packages, and the older vFW does not work.

a.2 The Robot VM and demo.sh init cannot be used to distribute vFWCL services, because Robot has not yet been updated to initialize and distribute the split firewall vFWCL.

a.3 All three VMs that are part of vFW must have the external network attached, because these VMs download data from the external/open Internet.

a.4 In a browser, open <private IP address of Sink VM>:667 and check whether the graphs are displayed.

a.5 vLB/vDNS are not split the way vFWCL is.

a.6 vFWCL/vDNS/vLB require some additional steps to make them pingable.

kubectl Commands (OOM Use)

1. Edit the mso-docker.json file

I see two ways of doing this:

- from inside the pod itself (replace the pod name with your own):

kubectl --namespace=onap-mso exec -it mso-3784963895-brdxx -- bash

vi /shared/mso-docker.json

- from the host running the containers:

vi /dockerdata-nfs/onap/mso/mso/mso-docker.json

2. Bounce the mso pod (delete it so that Kubernetes recreates it):

kubectl --namespace=onap-mso delete pod mso-3784963895-brdxx
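The pod name suffix (mso-3784963895-brdxx above) changes every time the pod is recreated, so looking it up first avoids copying it by hand. A minimal Python sketch, assuming kubectl is on the PATH and already configured for the OOM cluster, and that the pod is managed by a Deployment so that deleting it triggers a recreation; the helper names are hypothetical:

```python
import subprocess

def find_pod(kubectl_get_pods_output: str, prefix: str) -> str:
    """Return the first pod name in `kubectl get pods` output starting with prefix + '-'."""
    for line in kubectl_get_pods_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if fields and fields[0].startswith(prefix + "-"):
            return fields[0]
    raise LookupError(f"no pod starting with {prefix!r}")

def bounce_pod(namespace: str, prefix: str) -> str:
    """Look up the current pod name and delete the pod so it gets recreated."""
    out = subprocess.run(
        ["kubectl", "--namespace", namespace, "get", "pods"],
        check=True, capture_output=True, text=True,
    ).stdout
    pod = find_pod(out, prefix)
    subprocess.run(["kubectl", "--namespace", namespace, "delete", "pod", pod], check=True)
    return pod

if __name__ == "__main__":
    print("deleted", bounce_pod("onap-mso", "mso"))
```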

vFW onboarding covers steps 4 to 12. After the vFW service distribution, the vFW instantiation workflow is executed.

1. Deploy ONAP using the latest Heat template.

1.a Location of the ONAP Heat files (environment file and YAML template):

1.b Modify the environment file to match your OpenStack/VIO deployment.

1.c Our environment file with the values filled in:

1.d Heat command to execute on the controller node:

openstack stack create -t heat_ONAP_onap_openstack.yaml -e heat_ONAP_onap_openstack.env ONAP
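Stack creation takes a while, so a small poller saves checking Horizon by hand. A minimal Python sketch, assuming the OpenStack CLI is installed and the credentials are sourced; it reads the status with `openstack stack show ONAP -f value -c stack_status`. The 45-minute default timeout is an arbitrary margin over the 15 to 30 minutes noted below, and the status reader is injectable so the loop can be tried without a cloud:

```python
import subprocess
import time
from typing import Callable

def stack_status(name: str) -> str:
    """Read the stack status with the OpenStack CLI (requires sourced credentials)."""
    return subprocess.run(
        ["openstack", "stack", "show", name, "-f", "value", "-c", "stack_status"],
        check=True, capture_output=True, text=True,
    ).stdout.strip()

def wait_for_stack(name: str, get_status: Callable[[str], str] = stack_status,
                   timeout_s: float = 45 * 60, poll_s: float = 30,
                   sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the stack reaches *_COMPLETE; raise on *_FAILED or on timeout."""
    waited = 0.0
    while True:
        status = get_status(name)
        if status.endswith("_COMPLETE"):
            return status
        if status.endswith("_FAILED"):
            raise RuntimeError(f"stack {name} ended in {status}")
        if waited >= timeout_s:
            raise TimeoutError(f"stack {name} still {status} after {waited:.0f}s")
        sleep(poll_s)
        waited += poll_s

if __name__ == "__main__":
    print(wait_for_stack("ONAP"))
```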

1.e ONAP stack creation and ONAP VM deployment challenges

- The ONAP stack is created within 15 to 30 minutes.

- The docker pull performed as part of each ONAP VM creation is generally not clean.

Docker Pull Errors

a. Image-not-found errors on the Nexus repo for several different Docker images:

Error: image aaionap/hbase:1.0.0 not found

b. TLS handshake errors for a few Docker images:

Error response from daemon: Get https://nexus3.onap.org:10001/v2/openecomp/data-router/manifests/1.1-STAGING-latest: net/http: TLS handshake timeout

Error response from daemon: Get https://nexus3.onap.org:10001/v2/openecomp/aai-traversal/manifests/1.1-STAGING-latest: net/http: TLS handshake timeout

c. I/O timeout:

Error response from daemon: Get https://nexus3.onap.org:10001/v1/_ping: dial tcp 199.204.45.137:10001: i/o timeout

d. The curl command that pulls docker-compose.yaml inside the init scripts fails intermittently.

e. Directory creation fails in VID, SDNC, and SDC.

Issues d and e require creating the directory manually and relaunching the init scripts manually.

f. SDC/SDNC perform a git clone of roughly 800 MB, which hangs intermittently.
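The TLS handshake and I/O timeout errors above are transient, while "image not found" is not, so a retry loop should only retry the former. A minimal Python sketch, assuming the Docker CLI is available; treating exactly these two error fragments as transient is an assumption taken from the logs on this page, and the runner is injectable for testing:

```python
import subprocess
import time
from typing import Callable

# Error fragments from the pull failures above; treated as transient (assumption).
TRANSIENT = ("TLS handshake timeout", "i/o timeout")

def run_docker_pull(image: str) -> subprocess.CompletedProcess:
    return subprocess.run(["docker", "pull", image], capture_output=True, text=True)

def pull_with_retry(image: str, attempts: int = 5, backoff_s: float = 10,
                    pull: Callable[[str], subprocess.CompletedProcess] = run_docker_pull,
                    sleep: Callable[[float], None] = time.sleep) -> bool:
    """Retry transient network errors with linear backoff; return True on success."""
    for attempt in range(1, attempts + 1):
        result = pull(image)
        if result.returncode == 0:
            return True
        if not any(fragment in result.stderr for fragment in TRANSIENT):
            # e.g. "image ... not found": retrying will not help, so give up now
            return False
        if attempt < attempts:
            sleep(backoff_s * attempt)
    return False

if __name__ == "__main__":
    # Image names derived from the failing manifest URLs above.
    for image in ("nexus3.onap.org:10001/openecomp/data-router:1.1-STAGING-latest",
                  "nexus3.onap.org:10001/openecomp/aai-traversal:1.1-STAGING-latest"):
        print(image, "ok" if pull_with_retry(image) else "FAILED")
```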

Workarounds:

VID: remove the vid directory, place the docker-compose file inside /opt, and start /vid_install.sh.

SDC:

- Create the directory with mkdir -p /opt/sdc and trigger reinstall.sh.

Errors after the Docker download:

- AAI1 and AAI2 have init issues if a certain startup order is not followed.

- The SDC sanity container exits intermittently.

Workarounds:

- Rebooting AAI1 and AAI2 helps them recover.

2. Run the health check inside the Robot VM.

2.1 Log in to the Robot VM.

2.2 Go to the openecompete_container.

2.3 Run the health check:

root@onap-robot:/opt# ./ete.sh health

Starting Xvfb on display :88 with res 1280x1024x24

Executing robot tests at log level TRACE

==============================================================================

OpenECOMP ETE

==============================================================================

OpenECOMP ETE.Robot

==============================================================================

OpenECOMP ETE.Robot.Testsuites

==============================================================================

OpenECOMP ETE.Robot.Testsuites.Health-Check :: Testing ecomp components are...

==============================================================================

Basic DCAE Health Check [ WARN ] Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fd56db2bc90>: Failed to establish a new connection: [Errno 111] Connection refused',)': /healthcheck

[ WARN ] Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fd56f898c10>: Failed to establish a new connection: [Errno 111] Connection refused',)': /healthcheck

[ WARN ] Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fd56f542dd0>: Failed to establish a new connection: [Errno 111] Connection refused',)': /healthcheck

| FAIL |

ConnectionError: HTTPConnectionPool(host='10.0.4.1', port=8080): Max retries exceeded with url: /healthcheck (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fd56daab690>: Failed to establish a new connection: [Errno 111] Connection refused',))

------------------------------------------------------------------------------

Basic SDNGC Health Check | PASS |

------------------------------------------------------------------------------

Basic A&AI Health Check | PASS |

------------------------------------------------------------------------------

Basic Policy Health Check | PASS |

------------------------------------------------------------------------------

Basic MSO Health Check | PASS |

------------------------------------------------------------------------------

Basic ASDC Health Check | PASS |

------------------------------------------------------------------------------

Basic APPC Health Check | PASS |

------------------------------------------------------------------------------

Basic Portal Health Check | PASS |

------------------------------------------------------------------------------

Basic Message Router Health Check | PASS |

------------------------------------------------------------------------------

Basic VID Health Check | PASS |

------------------------------------------------------------------------------

Basic Microservice Bus Health Check | PASS |

------------------------------------------------------------------------------

Basic CLAMP Health Check | PASS |

------------------------------------------------------------------------------

catalog API Health Check | PASS |

------------------------------------------------------------------------------

emsdriver API Health Check | PASS |

------------------------------------------------------------------------------

gvnfmdriver API Health Check | PASS |

------------------------------------------------------------------------------

huaweivnfmdriver API Health Check | PASS |

------------------------------------------------------------------------------

multicloud API Health Check | PASS |

------------------------------------------------------------------------------

multicloud-ocata API Health Check | PASS |

------------------------------------------------------------------------------

multicloud-titanium_cloud API Health Check | PASS |

------------------------------------------------------------------------------

multicloud-vio API Health Check | PASS |

------------------------------------------------------------------------------

nokiavnfmdriver API Health Check | PASS |

------------------------------------------------------------------------------

nslcm API Health Check | PASS |

------------------------------------------------------------------------------

resmgr API Health Check | PASS |

------------------------------------------------------------------------------

usecaseui-gui API Health Check | PASS |

------------------------------------------------------------------------------

vnflcm API Health Check | PASS |

------------------------------------------------------------------------------

vnfmgr API Health Check | PASS |

------------------------------------------------------------------------------

vnfres API Health Check | PASS |

------------------------------------------------------------------------------

workflow API Health Check | PASS |

------------------------------------------------------------------------------

ztesdncdriver API Health Check | PASS |

------------------------------------------------------------------------------

ztevmanagerdriver API Health Check | PASS |

------------------------------------------------------------------------------

OpenECOMP ETE.Robot.Testsuites.Health-Check :: Testing ecomp compo... | FAIL |

30 critical tests, 29 passed, 1 failed

30 tests total, 29 passed, 1 failed

==============================================================================

OpenECOMP ETE.Robot.Testsuites | FAIL |

30 critical tests, 29 passed, 1 failed

30 tests total, 29 passed, 1 failed

==============================================================================

OpenECOMP ETE.Robot | FAIL |

30 critical tests, 29 passed, 1 failed

30 tests total, 29 passed, 1 failed

==============================================================================

OpenECOMP ETE | FAIL |

30 critical tests, 29 passed, 1 failed

30 tests total, 29 passed, 1 failed

==============================================================================
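With around 30 checks, scanning this output by eye is error-prone. A minimal Python sketch that extracts each check's verdict from saved `./ete.sh health` output; it assumes the layout shown above, where a check name ends in "Health Check" and its `| PASS |` or `| FAIL |` may appear on a later line after WARN/retry messages (as for DCAE):

```python
from __future__ import annotations

import re

# A check's name ends in "Health Check"; its verdict is a trailing "| PASS |" or "| FAIL |".
_TEST_NAME = re.compile(r"^(?P<name>[\w&.\- ]+?Health Check)\b")
_STATUS = re.compile(r"\|\s*(?P<status>PASS|FAIL)\s*\|\s*$")

def parse_health_check(output: str) -> dict[str, str]:
    """Map each individual health check in the output to PASS or FAIL."""
    results: dict[str, str] = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("OpenECOMP"):
            current = None  # suite headers and summaries are not individual checks
            continue
        name = _TEST_NAME.match(line)
        if name:
            current = name.group("name")
        status = _STATUS.search(line)
        if status and current:
            results[current] = status.group("status")
            current = None
    return results

def failed_checks(results: dict[str, str]) -> list[str]:
    return [name for name, status in results.items() if status == "FAIL"]

if __name__ == "__main__":
    import sys
    res = parse_health_check(sys.stdin.read())
    print(f"{len(res)} checks, failed: {failed_checks(res) or 'none'}")
```

For example, `./ete.sh health | python3 parse_health.py` (file name hypothetical) would list DCAE as the only failure for the run above.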

3. Continue once all health checks pass except DCAE.

TODO (20171128): Check and conclude whether steps 4, 5, 6, 11, and 12 apply to vLB/vDNS; they do not apply to vFWCL.

4. Log in to the Robot VM.

5. In the Robot VM, update CLOUD_OWNER in the following file:

/var/opt/OpenECOMP_ETE/robot/resources/global_properties.robot

Set it to the same value as in the ONAP Heat environment file. In our case, we used "openstack".
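Editing the file with a script makes the change repeatable after a Robot redeploy. A minimal Python sketch, assuming the value is declared in a Robot Framework variables table as `${NAME}` followed by two or more spaces (or a tab) and the value; the variable name GLOBAL_AAI_CLOUD_OWNER used below is a hypothetical placeholder, so check the actual declaration in your file first:

```python
import re

def set_robot_variable(text: str, name: str, value: str) -> str:
    """Replace the value of scalar ${name} in a Robot Framework variables table.

    Assumes one declaration per line: `${NAME}<2+ spaces or tab>value`.
    """
    pattern = re.compile(
        r"^(\$\{" + re.escape(name) + r"\}(?: {2,}|\t)[ \t]*)(\S.*)$", re.MULTILINE)
    new_text, count = pattern.subn(lambda m: m.group(1) + value, text)
    if count != 1:
        raise ValueError(f"expected exactly one ${{{name}}} declaration, found {count}")
    return new_text

if __name__ == "__main__":
    path = "/var/opt/OpenECOMP_ETE/robot/resources/global_properties.robot"
    with open(path) as f:
        text = f.read()
    # Hypothetical variable name; confirm it in the file before running.
    text = set_robot_variable(text, "GLOBAL_AAI_CLOUD_OWNER", "openstack")
    with open(path, "w") as f:
        f.write(text)
```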

6. Create the directory /share/heat/vFW inside the Robot VM.

7. Download the vFW files (YAML file, JSON file, and base file) from the git repo and place them in /share/heat/vFW/ on the Robot VM.

8. Create the cloud region (cloud owner openstack, region RegionOne) in A&AI.

Headers to use in the Postman REST requests to A&AI:

8.1 Create the cloud owner and region.

PUT https://<aai_ip>:8443/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/openstack/RegionOne

Request Body

{

"cloud-owner": "openstack",

"cloud-region-id": "RegionOne"

}

8.2 Verify with a GET command:

GET https://<aai_ip>:8443/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/openstack/RegionOne

The response contains the resource-version.

8.3 Create the tenant, putting the resource-version from 8.2 into the request body:

PUT https://<aai_ip>:8443/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/openstack/RegionOne

Request Body

{

"cloud-owner": "openstack",

"cloud-region-id": "RegionOne",

"resource-version": "1510199020715",

"cloud-type": "openstack",

"owner-defined-type": "owner type",

"cloud-region-version": "v2.5",

"cloud-zone": "cloud zone",

"tenants": {

"tenant": [{

"tenant-id": "74c7fa9e54f246f5878c902c346e590d",

"tenant-name": "onap"

}]

}

}

8.4 Important: "cloud-owner" and "cloud-region-id" must be the same as in the ONAP Heat environment file.
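Steps 8.1 to 8.3 can also be scripted instead of run from Postman. A minimal Python sketch using only the standard library; the headers are assumptions (Basic AAI:AAI credentials, as in the curl example later on this page, plus X-FromAppId/X-TransactionId values chosen arbitrarily), certificate verification is disabled because the lab A&AI uses a self-signed certificate, and the A&AI IP is an example:

```python
import json
import ssl
import urllib.request
from typing import List, Optional

def cloud_region_url(aai_ip: str, owner: str, region: str) -> str:
    return (f"https://{aai_ip}:8443/aai/v11/cloud-infrastructure/"
            f"cloud-regions/cloud-region/{owner}/{region}")

def cloud_region_body(owner: str, region: str, resource_version: Optional[str] = None,
                      tenants: Optional[List[dict]] = None, **extra) -> dict:
    """Build the 8.1 body (owner/region only) or the 8.3 body (resource-version, tenants)."""
    body = {"cloud-owner": owner, "cloud-region-id": region, **extra}
    if resource_version is not None:
        body["resource-version"] = resource_version
    if tenants:
        body["tenants"] = {"tenant": tenants}
    return body

def aai_request(method: str, url: str, body: Optional[dict] = None) -> dict:
    """Send a request to A&AI; the header values here are assumptions."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False          # lab A&AI uses a self-signed certificate
    ctx.verify_mode = ssl.CERT_NONE
    req = urllib.request.Request(
        url, method=method,
        data=json.dumps(body).encode() if body is not None else None,
        headers={"Content-Type": "application/json", "Accept": "application/json",
                 "Authorization": "Basic QUFJOkFBSQ==",
                 "X-FromAppId": "vfw-demo", "X-TransactionId": "vfw-demo-1"})
    with urllib.request.urlopen(req, context=ctx) as resp:
        raw = resp.read()
    return json.loads(raw) if raw else {}

if __name__ == "__main__":
    aai_ip = "10.0.1.1"  # example only; replace with your A&AI IP
    url = cloud_region_url(aai_ip, "openstack", "RegionOne")
    aai_request("PUT", url, cloud_region_body("openstack", "RegionOne"))   # 8.1
    version = aai_request("GET", url)["resource-version"]                   # 8.2
    tenant = {"tenant-id": "74c7fa9e54f246f5878c902c346e590d", "tenant-name": "onap"}
    aai_request("PUT", url, cloud_region_body(                              # 8.3
        "openstack", "RegionOne", resource_version=version, tenants=[tenant],
        **{"cloud-type": "openstack", "cloud-region-version": "v2.5"}))
```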

9. Create the service and the complex in A&AI.

9.1 Create the service.

9.1.1 Generate a version 4 UUID, for example at https://www.uuidgenerator.net/, such as f3fe1523-09ec-4d91-91ae-60ef9a2dd050.

9.1.2 Create the service with that UUID:

PUT https://aai_ip:8443/aai/v11/service-design-and-creation/services/service/f3fe1523-09ec-4d91-91ae-60ef9a2dd050

{

"service-id": "f3fe1523-09ec-4d91-91ae-60ef9a2dd050",

"service-description": "vFW"

}

9.1.3 GET https://aai_ip:8443/aai/v11/service-design-and-creation/services
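The version 4 UUID for step 9.1.1 can also be generated locally with Python's standard uuid module instead of a website. A minimal sketch that builds the 9.1.2 URL and body together so that the service-id in both always matches; the helper name is hypothetical:

```python
import json
import uuid

def new_service(description: str, aai_ip: str):
    """Return (url, body) for the 9.1.2 PUT, using a fresh version 4 UUID."""
    service_id = str(uuid.uuid4())
    url = (f"https://{aai_ip}:8443/aai/v11/service-design-and-creation/"
           f"services/service/{service_id}")
    body = {"service-id": service_id, "service-description": description}
    return url, body

if __name__ == "__main__":
    url, body = new_service("vFW", "aai_ip")  # replace aai_ip with your A&AI IP
    print("PUT", url)
    print(json.dumps(body, indent=2))
```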

TODO: 9.2 Create the complex.

9.2.1 PUT Command

PUT https://aai_ip:8443/aai/v11/cloud-infrastructure/complexes/complex/clli1

{

"physical-location-type":"Delhi",

"street1":"str1",

"city":"Delhi",

"postal-code":"110001",

"country":"India",

"region":"Asia"

}

9.2.2 GET Command

GET /aai/v11/cloud-infrastructure/complexes/complex/clli1

10. Create the customer in A&AI, with the region set to the same value as AAI_ZONE in /var/opt/OpenECOMP_ETE/robot/resources/global_properties.robot (note that this file is inside the openecompete_container Docker container).

10.1 Create the customer:

PUT https://aai_ip:8443/aai/v11/business/customers/customer/Demonstration3

{

"global-customer-id": "Demonstration3",

"subscriber-name": "Demonstration3",

"subscriber-type": "INFRA",

"service-subscriptions": {

"service-subscription": [

{

"service-type": "vFW",

"relationship-list": {

"relationship": [{

"related-to": "tenant",

"relationship-data": [

{"relationship-key": "cloud-region.cloud-owner", "relationship-value": "openstack"},

{"relationship-key": "cloud-region.cloud-region-id", "relationship-value": "RegionOne"},

{"relationship-key": "tenant.tenant-id", "relationship-value": "74c7fa9e54f246f5878c902c346e590d"}

]

}]

}

},

{

"service-type": "vLB",

"relationship-list": {

"relationship": [{

"related-to": "tenant",

"relationship-data": [

{"relationship-key": "cloud-region.cloud-owner", "relationship-value": "openstack"},

{"relationship-key": "cloud-region.cloud-region-id", "relationship-value": "RegionOne"},

{"relationship-key": "tenant.tenant-id", "relationship-value": "74c7fa9e54f246f5878c902c346e590d"}

]

}]

}

},

{

"service-type": "vIMS",

"relationship-list": {

"relationship": [{

"related-to": "tenant",

"relationship-data": [

{"relationship-key": "cloud-region.cloud-owner", "relationship-value": "openstack"},

{"relationship-key": "cloud-region.cloud-region-id", "relationship-value": "RegionOne"},

{"relationship-key": "tenant.tenant-id", "relationship-value": "74c7fa9e54f246f5878c902c346e590d"}

]

}]

}

}

]}

}
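The three subscriptions above differ only in service-type, so generating the body avoids copy-paste mistakes when the tenant or region changes. A minimal Python sketch that reproduces the structure of the 10.1 body; the function name is hypothetical:

```python
import json
from typing import Iterable

def customer_body(customer_id: str, service_types: Iterable[str], cloud_owner: str,
                  region_id: str, tenant_id: str, subscriber_type: str = "INFRA") -> dict:
    """Build the 10.1 customer body with one tenant relationship per service type."""
    def tenant_relationship() -> dict:
        return {"relationship": [{
            "related-to": "tenant",
            "relationship-data": [
                {"relationship-key": "cloud-region.cloud-owner", "relationship-value": cloud_owner},
                {"relationship-key": "cloud-region.cloud-region-id", "relationship-value": region_id},
                {"relationship-key": "tenant.tenant-id", "relationship-value": tenant_id},
            ],
        }]}
    return {
        "global-customer-id": customer_id,
        "subscriber-name": customer_id,
        "subscriber-type": subscriber_type,
        "service-subscriptions": {"service-subscription": [
            {"service-type": st, "relationship-list": tenant_relationship()}
            for st in service_types
        ]},
    }

if __name__ == "__main__":
    print(json.dumps(customer_body("Demonstration3", ["vFW", "vLB", "vIMS"], "openstack",
                                   "RegionOne", "74c7fa9e54f246f5878c902c346e590d"), indent=2))
```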

10.2 Important points:

10.2.1 "cloud-region-id" must be the same as AAI_ZONE in /var/opt/OpenECOMP_ETE/robot/resources/global_properties.robot.

10.2.2 Every time the Robot VM uses a new customer, that customer must first be created in A&AI.

TODO: 10.2.3 Should the tenant ID be taken from the Horizon dashboard or from the ONAP Heat environment file? This needs to be concluded.

11. Modify PREFIX_DEMO in the following file:

/var/opt/OpenECOMP_ETE/robot/resources/demo_preload.robot

12. Run demo.sh init inside the Robot VM.

TODO: Document the issues faced up to step 12 and the workarounds used.

13. Log in to the ONAP Portal as the demo user and check the existing services in the VID GUI.

14. From the VID GUI, deploy the service and create the service instance.

15. From the VID GUI, create the VNF instances.

16. Access the SDNC Admin Portal and add the VNF profile.

16.1 Create the user if it has not been created already: <sdnc_ip>:8843/signup

16.2 Once signed up, log in at <sdnc_ip>:8843/login

16.3 Add the VNF profile. Note that the VNF Type must be filled in.

17. Upload the VNF topology JSON file using the SDNC VNF API.

17.1 SDNC VM login/password for SDNC API access:

username: admin

password: Kp8bJ4SXszM0WXlhak3eHlcse2gAw84vaoGGmJvUy2U

17.2 Access <sdnc_ip>:8282/apidoc/explorer/index.html on the SDNC VM.

17.3 Click on VNF-API

17.4 Scroll down to the POST /operations/VNF-API:preload-vnf-topology-operation

17.5 Filled-in vFW JSON from our environment:

{

"input":

{

"request-information":

{

"notification-url":"openecomp.org",

"order-number":"1",

"order-version":"1",

"request-action":"PreloadVNFRequest",

"request-id": "robot20"

},

"sdnc-request-header":

{

"svc-action": "reserve",

"svc-notification-url": "http://openecomp.org:8080/adapters/rest/SDNCNotify",

"svc-request-id":"robot20"

},

"vnf-topology-information":

{

"vnf-assignments":

{

"availability-zones":[],

"vnf-networks":[],

"vnf-vms":[]

},

"vnf-parameters":

[

{"vnf-parameter-name":"vfw_private_ip_2","vnf-parameter-value": "10.0.100.4"},

{"vnf-parameter-name":"public_net_id","vnf-parameter-value": "87cdc31f-362f-4bdc-8b50-a7894ed759e9"},

{"vnf-parameter-name":"key_name","vnf-parameter-value":"onapviokey"},

{"vnf-parameter-name":"pub_key","vnf-parameter-value":"ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC4CegUDC7k2bqru0KkQ2HzSXZMZJ0cJBizQkt82CZ4Z8RlLFbxNwYhcuI67zEEB3PeVGzw6xsDDo0Su9OT1DxzFsLy14yxWI7+4K0kv/FYKw0ULT7UrBi3sjZI+e65Y/YL7tSZxiPHnPSncBFhMqXZT+WpKJF3BPDIpzbgnvbTH0O1OOQPYmN63Z87Alu8abZKCkClwbdmfl1dnEUoIve1/0f8jZTMC/qO1mQt04s59V7HNQyykZ6POSItH/cgjy3HI7e7gr8E/MseK/LOGu0mVPpcay/FcUKxI+u+sZ/GqY5+1nMQKKVnBWhc5P+cRoMMWjlNs7AiJmrnueAbNDLl Generated-by-Nova"},

{"vnf-parameter-name":"repo_url","vnf-parameter-value":"https://nexus.onap.org/content/sites/raw"}

],

"vnf-topology-identifier":

{

"service-type":"7a9ae3bc-caef-4200-a2f7-2afdbaa41e0d",

"generic-vnf-name":"demo4VFWVNF20",

"generic-vnf-type":"c38867a1-c1b8-422f-8808 0",

"vnf-name":"demo4VFWVNF20-1",

"vnf-type":"C38867a1C1b8422f8808..base_vfw..module-0"

}

}

}

}
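The preload body can be generated from a plain parameter dictionary, which keeps the request-id and svc-request-id in sync and makes per-environment values (public_net_id, key_name, and so on) easy to swap. A minimal Python sketch; the RESTCONF path in post_preload (`/restconf/operations/...` on port 8282) is an assumption about where the API explorer's POST /operations entry is served, so confirm it in the explorer first:

```python
import base64
import json
import urllib.request

def preload_body(request_id: str, params: dict, service_type: str,
                 generic_vnf_name: str, generic_vnf_type: str,
                 vnf_name: str, vnf_type: str) -> dict:
    """Build a VNF-API preload body shaped like the 17.5 example."""
    return {"input": {
        "request-information": {
            "notification-url": "openecomp.org", "order-number": "1",
            "order-version": "1", "request-action": "PreloadVNFRequest",
            "request-id": request_id,
        },
        "sdnc-request-header": {
            "svc-action": "reserve",
            "svc-notification-url": "http://openecomp.org:8080/adapters/rest/SDNCNotify",
            "svc-request-id": request_id,
        },
        "vnf-topology-information": {
            "vnf-assignments": {"availability-zones": [], "vnf-networks": [], "vnf-vms": []},
            "vnf-parameters": [{"vnf-parameter-name": k, "vnf-parameter-value": v}
                               for k, v in params.items()],
            "vnf-topology-identifier": {
                "service-type": service_type, "generic-vnf-name": generic_vnf_name,
                "generic-vnf-type": generic_vnf_type, "vnf-name": vnf_name,
                "vnf-type": vnf_type,
            },
        },
    }}

def post_preload(sdnc_ip: str, body: dict, user: str, password: str) -> int:
    # Assumed RESTCONF path behind the explorer's POST /operations/... entry.
    url = f"http://{sdnc_ip}:8282/restconf/operations/VNF-API:preload-vnf-topology-operation"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(url, data=json.dumps(body).encode(), method="POST",
                                 headers={"Content-Type": "application/json",
                                          "Authorization": f"Basic {token}"})
    with urllib.request.urlopen(req) as resp:
        return resp.status
```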

17.6 Filled-in vLB JSON from our environment:

{

"input":

{

"request-information":

{

"notification-url":"openecomp.org",

"order-number":"1",

"order-version":"1",

"request-action":"PreloadVNFRequest",

"request-id": "robot20"

},

"sdnc-request-header":

{

"svc-action": "reserve",

"svc-notification-url": "http://openecomp.org:8080/adapters/rest/SDNCNotify",

"svc-request-id":"robot20"

},

"vnf-topology-information":

{

"vnf-assignments":

{

"availability-zones":[],

"vnf-networks":[],

"vnf-vms":[]

},

"vnf-parameters":

[

{"vnf-parameter-name":"public_net_id","vnf-parameter-value": "aa83b3d9-dda6-4106-b776-9280799993ce"},

{"vnf-parameter-name":"vfw_private_ip_2","vnf-parameter-value": "10.0.100.4"},

{"vnf-parameter-name":"vfw_image_name","vnf-parameter-value": "ubuntu_16.04"},

{"vnf-parameter-name":"key_name","vnf-parameter-value":"onapkey"},

{"vnf-parameter-name":"pub_key","vnf-parameter-value":"ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC4CegUDC7k2bqru0KkQ2HzSXZMZJ0cJBizQkt82CZ4Z8RlLFbxNwYhcuI67zEEB3PeVGzw6xsDDo0Su9OT1DxzFsLy14yxWI7+4K0kv/FYKw0ULT7UrBi3sjZI+e65Y/YL7tSZxiPHnPSncBFhMqXZT+WpKJF3BPDIpzbgnvbTH0O1OOQPYmN63Z87Alu8abZKCkClwbdmfl1dnEUoIve1/0f8jZTMC/qO1mQt04s59V7HNQyykZ6POSItH/cgjy3HI7e7gr8E/MseK/LOGu0mVPpcay/FcUKxI+u+sZ/GqY5+1nMQKKVnBWhc5P+cRoMMWjlNs7AiJmrnueAbNDLl Generated-by-Nova"},

{"vnf-parameter-name":"repo_url","vnf-parameter-value":"https://nexus.onap.org/content/sites/raw"}

],

"vnf-topology-identifier":

{

"service-type":"7a9ae3bc-caef-4200-a2f7-2afdbaa41e0d",

"generic-vnf-name":"demo4VFWVNF10",

"generic-vnf-type":"c38867a1-c1b8-422f-8808 0",

"vnf-name":"demo4VFWVNF10-1",

"vnf-type":"C38867a1C1b8422f8808..base_vfw..module-0"

}

}

}

}

18. Go to the Portal GUI and, from VID, create the VF module.

18.a SO ↔ VIM (OpenStack/VIO): this path does not use MultiCloud.

a.1 cloud_config.json inside /etc/mso/config.d:

root@mso:/etc/mso/config.d# cat cloud_config.json

{

"cloud_config":

{

"identity_services":

{

"DEFAULT_KEYSTONE":

{

"identity_url": "KEYSTONE_URL",

"mso_id": "onap",

"mso_pass": "f8cf78bd37b4e258e85076eabb161977",

"admin_tenant": "service",

"member_role": "admin",

"tenant_metadata": true,

"identity_server_type": "KEYSTONE",

"identity_authentication_type": "USERNAME_PASSWORD"

}

},

"cloud_sites":

{

"nova":

{

"region_id": "nova",

"clli": "nova",

"aic_version": "2.5",

"identity_service_id": "DEFAULT_KEYSTONE"

}

}

}

}
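A quick consistency check on this file can catch problems before createVfModule fails, for instance a cloud site pointing at an identity service that is not defined, or an identity_url that is still a placeholder such as KEYSTONE_URL. A minimal Python sketch; the checks are illustrative and not an exhaustive validation of what SO expects:

```python
import json

def check_cloud_config(config: dict) -> list:
    """Return a list of problems found in an SO cloud_config.json structure."""
    problems = []
    cc = config.get("cloud_config", {})
    identities = cc.get("identity_services", {})
    for site_name, site in cc.get("cloud_sites", {}).items():
        ident = site.get("identity_service_id")
        if ident not in identities:
            problems.append(f"site {site_name}: unknown identity_service_id {ident!r}")
        if not site.get("region_id"):
            problems.append(f"site {site_name}: missing region_id")
    for name, ident in identities.items():
        url = ident.get("identity_url", "")
        if not url.startswith(("http://", "https://")):
            problems.append(f"identity {name}: identity_url {url!r} is not a URL")
    return problems

if __name__ == "__main__":
    with open("/etc/mso/config.d/cloud_config.json") as f:
        for problem in check_cloud_config(json.load(f)):
            print(problem)
```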

18.b SO ↔ MultiCloud ↔ VIM (OpenStack/VIO): this interaction goes through MultiCloud.

To be confirmed: MultiCloud configuration in SO.

{

"cloud_config":

{

"identity_services":

{

"DEFAULT_KEYSTONE":

{

"identity_url": "http://10.0.14.1/api/multicloud/v0/vmware_vio/identity/v2.0",

"mso_id": "onap",

"mso_pass": "f8cf78bd37b4e258e85076eabb161977",

"admin_tenant": "service",

"member_role": "admin",

"tenant_metadata": true,

"identity_server_type": "KEYSTONE",

"identity_authentication_type": "USERNAME_PASSWORD"

}

},

"cloud_sites":

{

"nova":

{

"region_id": "nova",

"clli": "nova",

"aic_version": "2.5",

"identity_service_id": "DEFAULT_KEYSTONE"

}

}

}

}
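The identity_url above points SO at the MultiCloud Keystone proxy rather than at Keystone itself. Its vmware_vio segment appears to combine the cloud owner "vmware" and region ID "vio" used in the A&AI registration in step 23. A minimal Python sketch that composes the URL under that assumption, so it can be regenerated for another cloud owner/region:

```python
def multicloud_identity_url(msb_host: str, cloud_owner: str, cloud_region_id: str,
                            api_version: str = "v0") -> str:
    """Compose a MultiCloud Keystone proxy URL of the form used above.

    Assumes the VIM id is "<cloud-owner>_<cloud-region-id>" (e.g. vmware_vio).
    """
    vim_id = f"{cloud_owner}_{cloud_region_id}"
    return f"http://{msb_host}/api/multicloud/{api_version}/{vim_id}/identity/v2.0"

if __name__ == "__main__":
    print(multicloud_identity_url("10.0.14.1", "vmware", "vio"))
```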

18.2 Registering the VIM with A&AI ESR (for MultiCloud)

There are two ways to register a VIM in A&AI:

1. Register the VIM from the ESR GUI at http://MSB_SERVER_IP:80/iui/aai-esr-gui/extsys/vim/vimView.html. For ESR usage details, refer to http://onap.readthedocs.io/en/latest/submodules/aai/esr-gui.git/docs/platform/installation.html.

2. Register the VIM through the A&AI API, for example:

PUT https://A&AI_SERVER_IP:8443/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/ZTE/region-one

Authorization:

header:

body:

{

"cloud-owner": "ZTE",

"cloud-region-id": "region-one",

"cloud-type": "openstack",

"owner-defined-type": "owner-defined-type",

"cloud-region-version": "ocata",

"cloud-zone": "cloud zone",

"complex-name": "complex name",

"sriov-automation": false,

"cloud-extra-info": "cloud-extra-info",

"esr-system-info-list": {

"esr-system-info": [

{

"esr-system-info-id": "432ac032-e996-41f2-84ed-9c7a1766eb29",

"service-url": "http://10.74.151.22:5000/v2.0",

"user-name": "admin",

"password": "admin",

"system-type": "VIM",

"ssl-insecure": true,

"cloud-domain": "cloud-domain"

}

]

}

}

19. vFW Network Topology

20. Additional steps for vFWCL on VIO (20171207):

1. For each network, create a router in Horizon.

2. Update /etc/resolv.conf in the firewall VM to point to an external DNS server (10.112.64.1) so that the VM can reach the open Internet.

3. In Horizon, under Networks → <Network Name> → Ports, check that the gateway IP address of the subnet/network is shown as "router:interface".

4. Because we are changing the networks given in the SDNC preload, log in to each VM (firewall VM, sink VM, and PktGen VM):

4.1 Log in over the tenant network and remove every other network.

4.2 Once logged in, update the IP address and CIDR files in /opt/config on each VM.

4.3 Also update /etc/network/interfaces for eth1 and eth2 on the sink and PktGen VMs, and for eth1, eth2, and eth3 on the firewall VM.

4.4 Attach the networks to each of these VMs via Horizon. On the firewall VM, try disabling v_firewall_install.sh and v_firewall_init.sh after running these scripts once.

5. The sink VM and PktGen VM can generally ping on the tenant and ONAP OOM networks, including the Robot VM.

6. The sink VM can ping the protected network gateway.

7. PktGen can ping the unprotected network gateway.

8. The firewall VM cannot ping the ONAP OOM gateway; the reason is still under investigation.
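Step 4.3 means hand-editing a static stanza per NIC on each VM, and a typo in a netmask is easy to miss. A minimal Python sketch that renders Debian ifupdown static stanzas from CIDR addresses; the addresses shown are examples only and must match the IP/CIDR values you wrote to /opt/config:

```python
import ipaddress

def interfaces_stanza(iface: str, cidr_address: str) -> str:
    """Render a static ifupdown stanza for /etc/network/interfaces."""
    net = ipaddress.ip_interface(cidr_address)  # e.g. "192.168.10.100/24"
    return (f"auto {iface}\n"
            f"iface {iface} inet static\n"
            f"    address {net.ip}\n"
            f"    netmask {net.network.netmask}\n")

def interfaces_file(assignments: dict) -> str:
    """Join stanzas for several NICs (e.g. eth1..eth3 on the firewall VM), sorted by name."""
    return "\n".join(interfaces_stanza(i, a) for i, a in sorted(assignments.items()))

if __name__ == "__main__":
    # Example addresses only; use the ones from your SDNC preload / Horizon networks.
    print(interfaces_file({"eth1": "192.168.10.100/24", "eth2": "192.168.20.100/24"}))
```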

For comparison (20171205):

1. Network topology example from a successful vFWCL lab on OpenStack/OOM.

2. None of the additional steps above were performed in that lab.

3. Port 667 was not open on the sink VM in the successful vFW demo.

21. Additional steps for vLB/vDNS on VIO (20171207):

1. For each network, create a router in Horizon.

2. Update /etc/resolv.conf in the firewall VM to point to an external DNS server so that the VMs can reach the open Internet.

3. In Horizon, under Networks → <Network Name> → Ports, check that the gateway IP address of the subnet/network is shown as "router:interface".

4. Because we are changing the networks given in the SDNC preload, log in to each VM (load balancer VM, DNS VM, and PktGen VM):

4.1 Log in over the tenant network and remove every other network.

4.2 Once logged in, update the IP address and CIDR files in /opt/config on each VM.

4.3 Also update /etc/network/interfaces for eth1 and eth2 on the DNS and PktGen VMs, and for eth1, eth2, and eth3 on the load balancer VM.

4.4 Attach the networks to each of these VMs via Horizon. On the load balancer VM, try disabling v_firewall_install.sh and v_firewall_init.sh after running these scripts once.

5. The DNS VM and PktGen VM can generally ping on the tenant and ONAP OOM networks, including the Robot VM.

6. The DNS VM can ping the protected network gateway.

7. PktGen can ping the unprotected network gateway.

8. The load balancer VM cannot ping the ONAP OOM gateway; the reason is still under investigation.

22. TODO: Issues faced from step 13 to step 18 and the workarounds used.

22.1 Challenges faced

22.1.1 The SDC sanity Docker container keeps exiting; a JIRA ticket needs to be raised for this. As a result, every operation from the Portal VID GUI returns a 500 or 400 error and no operation succeeds.

Workaround

This works as designed; it is a non-issue.

22.1.2 The SDNC VM root filesystem becomes 100% full, which makes the containers unstable and causes them to keep exiting. A JIRA ticket has been raised.
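Since a full root filesystem destabilizes the SDNC containers, a periodic check (for example from cron) can catch it before the containers start exiting. A minimal Python sketch using shutil.disk_usage; the 90% threshold is an arbitrary choice, and the percentage is computed against the total size, so df's Use% may read slightly higher because it excludes reserved blocks:

```python
import shutil

def usage_percent(path: str = "/") -> float:
    """Share of the total size of the filesystem containing `path` that is used."""
    total, used, _free = shutil.disk_usage(path)
    return 100.0 * used / total

def check_root(threshold: float = 90.0, path: str = "/") -> bool:
    """Return True (and print a warning) if usage is at or above threshold."""
    pct = usage_percent(path)
    if pct >= threshold:
        print(f"WARNING: {path} is {pct:.1f}% full")
        return True
    return False

if __name__ == "__main__":
    check_root()
```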

22.1.3 Once SDC starts returning 500 or 400 errors, the services get into an inconsistent state, and the service must be created and distributed all over again, including creating the customer in A&AI.

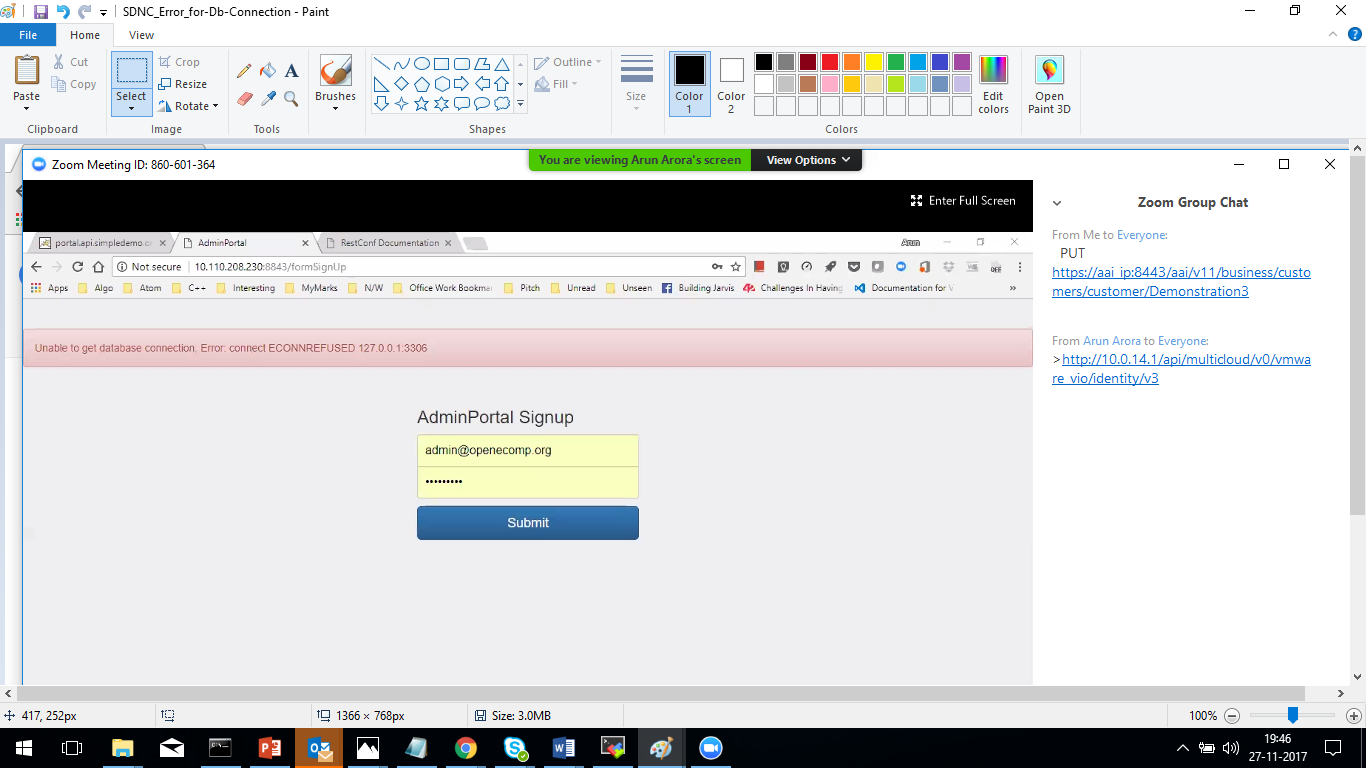

22.1.4 The SDNC login page throws the error "Unable to get database connection :Error :connect ECONNREFUSED 127.0.0.1:3306" (see the screenshot).

Workaround

- Remove the SDNC Docker container.

- Restart the SDNC Docker container.

TODO: Raise a JIRA ticket for the SDNC issue.

22.1.5 SO reports a certificate error when calling createVfModule, and createVfModule fails:

2017-11-22T13:31:17.150Z|19374e93-7461-4303-8de9-13d105ab519b|keystoneUrl=http://10.110.208.162:5000/v2.0

2017-11-22T13:31:17.478Z|19374e93-7461-4303-8de9-13d105ab519b|heatUrl=https://10.110.209.230:8004/v1/74c7fa9e54f246f5878c902c346e590d, region=nova

2017-11-22T13:31:17.478Z|19374e93-7461-4303-8de9-13d105ab519b|Caching HEAT Client for nova:74c7fa9e54f246f5878c902c346e590d

2017-11-22T13:31:17.478Z|19374e93-7461-4303-8de9-13d105ab519b|Found: com.woorea.openstack.heat.Heat@3ca4e80c

2017-11-22T13:31:17.487Z|19374e93-7461-4303-8de9-13d105ab519b|OpenstackConnectException at:org.openecomp.mso.openstack.utils.MsoHeatUtils.queryHeatStack request:StackResource.GetStack Retry indicated. Attempts remaining:2

2017-11-22T13:31:22.496Z|19374e93-7461-4303-8de9-13d105ab519b|OpenstackConnectException at:org.openecomp.mso.openstack.utils.MsoHeatUtils.queryHeatStack request:StackResource.GetStack Retry indicated. Attempts remaining:1

2017-11-22T13:31:27.508Z|19374e93-7461-4303-8de9-13d105ab519b|OpenstackConnectException at:org.openecomp.mso.openstack.utils.MsoHeatUtils.queryHeatStack request:StackResource.GetStack Retry indicated. Attempts remaining:0

2017-11-22T13:31:32.518Z|19374e93-7461-4303-8de9-13d105ab519b|MSO-RA-9202E Exception communicating with OpenStack: Openstack Heat connection error on QueryAllStack: com.woorea.openstack.base.client.OpenStackConnectException: sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

2017-11-22T13:31:32.518Z|19374e93-7461-4303-8de9-13d105ab519b|

Solution

We copied the VIO certificate from /usr/local/share/ca-certificates on the load balancer VM to /usr/local/share/ca-certificates inside the MSO_TestLab container.

Then we ran update-ca-certificates as root inside the mso_testlab container.

22.1.6 Adding a VNF from the VID GUI fails with the error "No Valid Catalogue Entry Specified".

Workaround

- Create a new user via the A&AI REST API and a new service using demo.sh init.

- Once the service has been distributed successfully, try creating the VNF from the VID GUI again; it should succeed.

23. Working with MultiCloud

- The MultiCloud project provides interfaces for working with a variety of VIMs. When MultiCloud is used, applications call its service interfaces instead of the VIM interfaces.

- To register your VIM and use it through the MultiCloud interfaces, do the following:

- Register the A&AI services with MSB. The REST requests are:

curl -X POST -H "Content-Type: application/json" -d '{"serviceName": "aai-cloudInfrastructure", "version": "v11", "url": "/aai/v11/cloud-infrastructure", "protocol": "REST", "enable_ssl": "true", "visualRange": "1", "nodes": [{"ip": "A&AI_SERVER_IP", "port": "8443"}]}' "http://MSB_SERVER_IP:10081/api/microservices/v1/services"

curl -X POST -H "Content-Type: application/json" -d '{"serviceName": "aai-externalSystem", "version": "v11", "url": "/aai/v11/external-system", "protocol": "REST", "enable_ssl": "true", "visualRange": "1", "nodes": [{"ip": "A&AI_SERVER_IP", "port": "8443"}]}' "http://MSB_SERVER_IP:10081/api/microservices/v1/services"

- Register the ESR services with MSB. The REST requests are:

curl -X POST -H "Content-Type: application/json" -d '{"serviceName": "aai-esr-server", "version": "v1", "url": "/api/aai-esr-server/v1", "protocol": "REST", "visualRange": "1", "nodes": [{"ip": "ESR_SERVER_IP", "port": "9518"}]}' "http://MSB_SERVER_IP:10081/api/microservices/v1/services"

curl -X POST -H "Content-Type: application/json" -d '{"serviceName": "aai-esr-gui", "url": "/esr-gui", "protocol": "UI", "visualRange": "1", "path": "/iui/aai-esr-gui", "nodes": [{"ip": "ESR_SERVER_IP", "port": "9519"}]}' "http://MSB_SERVER_IP:10081/api/microservices/v1/services"

- Register the MultiCloud framework services and your VIM-specific services with MSB. The REST requests are:

curl -X POST -H "Content-Type: application/json" -d '{"serviceName": "multicloud", "version": "v0", "url": "/api/multicloud/v0", "protocol": "REST", "nodes": [{"ip": "'$MultiCloud_IP'", "port": "9001"}]}' "http://$MSB_SERVER_IP:10081/api/microservices/v1/services"

curl -X POST -H "Content-Type: application/json" -d '{"serviceName": "multicloud-vio", "version": "v0", "url": "/api/multicloud-vio/v0", "protocol": "REST", "nodes": [{"ip": "'$MultiCloud_IP'", "port": "9004"}]}' "http://$MSB_SERVER_IP:10081/api/microservices/v1/services"

- Register the VIM information in A&AI with cloud owner "vmware" and cloud region ID "vio". The REST request is:

curl -X PUT -H "Authorization: Basic QUFJOkFBSQ==" -H "Content-Type: application/json" -H "X-TransactionId:get_aai_subcr" \
  https://aai_resource_docker_host_ip:30233/aai/v01/cloud-infrastructure/cloud-regions/cloud-region/vmware/vio \
  -d '{
    "cloud-owner": "vmware",
    "cloud-type": "vmware",
    "cloud-region-version": "4.0",
    "esr-system-info-list": {
      "esr-system-info": [
        {
          "esr-system-info-id": "123-456",
          "system-name": "vim-vio",
          "system-type": "vim",
          "type": "vim",
          "user-name": "admin",
          "password": "vmware",
          "service-url": "<keystone auth url>",
          "cloud-domain": "default",
          "default-tenant": "admin",
          "ssl-insecure": false
        }
      ]
    }
  }'

Please note: the IP addresses and port numbers differ between Heat-based and OOM-based ONAP setups. Take this into account when formulating the curl requests.
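Because the IPs and ports change between Heat and OOM setups, building the MSB registration bodies in code and substituting the addresses in one place can be less error-prone than editing each curl line. A minimal Python sketch that produces bodies shaped like the curl payloads above and posts them to the same MSB endpoint; the helper names are hypothetical and the IPs in the usage block are placeholders to replace:

```python
import json
import urllib.request
from typing import Optional

def msb_service(name: str, url: str, ip: str, port: str, version: Optional[str] = None,
                protocol: str = "REST", **extra) -> dict:
    """Build one MSB registration body shaped like the curl -d payloads above."""
    body = {"serviceName": name, "url": url, "protocol": protocol,
            "nodes": [{"ip": ip, "port": port}]}
    if version is not None:
        body["version"] = version
    body.update(extra)  # e.g. enable_ssl, visualRange, path
    return body

def register(msb_ip: str, body: dict) -> int:
    """POST a registration to the MSB services endpoint used by the curl commands."""
    req = urllib.request.Request(
        f"http://{msb_ip}:10081/api/microservices/v1/services",
        data=json.dumps(body).encode(), method="POST",
        headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return resp.status

if __name__ == "__main__":
    msb_ip, mc_ip = "MSB_SERVER_IP", "MULTICLOUD_IP"  # placeholders: replace before running
    for body in (
        msb_service("multicloud", "/api/multicloud/v0", mc_ip, "9001", version="v0"),
        msb_service("multicloud-vio", "/api/multicloud-vio/v0", mc_ip, "9004", version="v0"),
    ):
        print(body["serviceName"], register(msb_ip, body))
```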

Comment from Brian Freeman:

The cloud owner should be "CloudOwner" to match the vCPE GENERIC-RESOURCE-API assumptions.

Make sure the complex, zone, and availability zone are set up (including the availability zone for vCPE).

If you want to do closed loop for vFW, there is a new two-VNF service for Amsterdam (vFWCL, in the demo repo) that separates the traffic generator into a second VNF/Heat stack, so that Policy can associate the event on the LB with the VNF to be controlled (the traffic generator) through APPC. Contact Pam and Marco for details.

There is also dynamic policy definition for one of the closed loop policies that needs to be