...

The network between the BRG and the BNG is specific to a particular BNG instance, and thus it is reasonable to model it as part of the same Service (and Service instance) as the BNG.

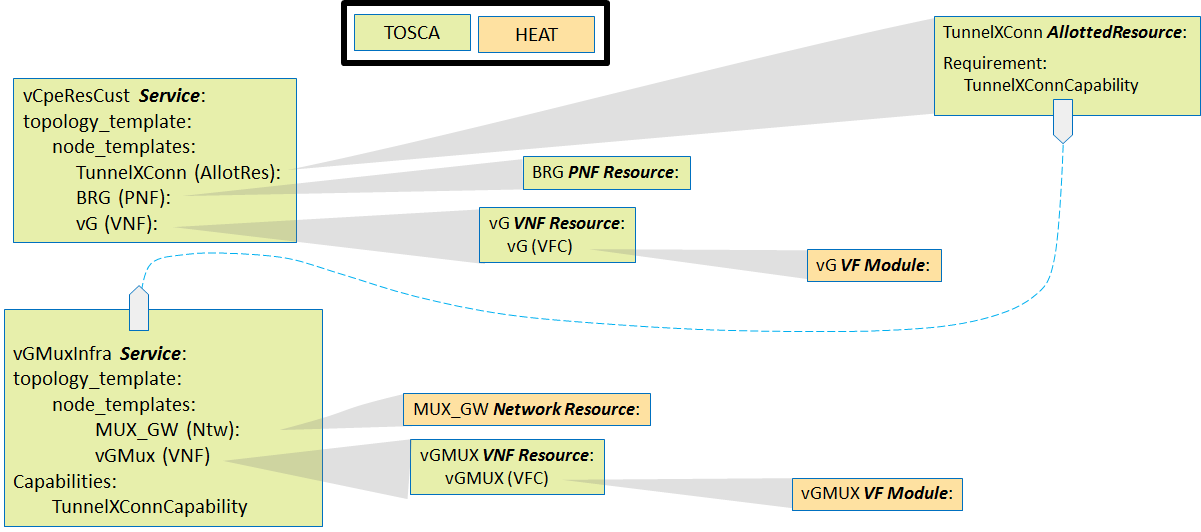

In the "real world", each instance of the Customer Service will consist of:

- A BRG, which will be a physical device (PNF) dedicated to the customer's service instance.

- A vG, which is a virtual network function that is dedicated to the customer's service instance.

- A "slice" of functionality of the vGMUX, which provides a "cross connect" function between the BRG and vG. We use the term "slice" informally to convey that something is consumed (capacity) and dedicated (a configuration record) in the vGMUX for this customer's service instance. SDC models a "slice" of functionality of a VNF through the "Allotted Resource" construct, because what the customer's service instance consumes is an "allotment" of capacity and configuration from the underlying vGMUX. We will refer to this as the TunnelXConn Allotted Resource.

Because the vGMUX is, in this sense, providing a "service" of which an allotment is consumed, we consider the TunnelXConn Allotted Resource to be taken from the vGMUX's Infrastructure Service, vGMuxInfra.
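The composition described above can be sketched as a small data model. This is only an illustration, not SDC's actual model format: the class names, the `provided_by` field, and the `build_customer_service` helper are hypothetical, and only the resource names (BRG, vG, TunnelXConn, vGMuxInfra) come from the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class ResourceKind(Enum):
    PNF = "PNF"                             # physical network function
    VNF = "VNF"                             # virtual network function
    ALLOTTED_RESOURCE = "AllottedResource"  # slice of another service's VNF


@dataclass(frozen=True)
class Resource:
    name: str
    kind: ResourceKind
    # Only set for an allotted resource: the service whose capacity is consumed.
    provided_by: Optional[str] = None


@dataclass
class ServiceInstance:
    name: str
    resources: List[Resource] = field(default_factory=list)


def build_customer_service(customer_id: str) -> ServiceInstance:
    """Assemble the three parts of one Customer Service instance (hypothetical helper)."""
    return ServiceInstance(
        name=f"CustomerService-{customer_id}",
        resources=[
            Resource("BRG", ResourceKind.PNF),
            Resource("vG", ResourceKind.VNF),
            # The vGMUX itself is shared; the customer only consumes an allotment of it.
            Resource("TunnelXConn", ResourceKind.ALLOTTED_RESOURCE,
                     provided_by="vGMuxInfra"),
        ],
    )
```

The point of the `provided_by` field in this sketch is that the customer's service instance never contains the vGMUX VNF; it only records which infrastructure service its allotment is drawn from.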

It is true that

Though in the "real world" the BRG will be a physical device,

...

for purposes of this use case we will emulate such a physical device via a VNF, the vBRG_EMU VNF. We will instantiate this VNF separately from the Customer's service instance, and model it as a separate service: BRG_EMU Service. Upon instantiation of a BRG_EMU service instance with its vBRG_EMU VNF, that VNF will begin acting like its "real world" PNF counterpart.

As a Release 1 ONAP "stretch goal", we will also attempt to demonstrate how a standalone general TOSCA Orchestrator can be incorporated into SO to provide the "cloud resource orchestration" functionality without relying on HEAT orchestration. As such, we will model the "vCpeCoreInfra" Service as pure TOSCA rather than with HEAT templates.

...

The specific infrastructure functions will be implemented as two separate services, one for vBNG and another for vG_MUX. This allows each to be scaled separately (not in R1), and enables one vBNG to connect to multiple vG_MUXs as well as one vG_MUX to connect to multiple vBNGs, thus supporting different deployment scenarios for these two functions.
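The many-to-many relationship between the two services can be illustrated with a minimal sketch. The `InfraTopology` class and the instance names used below are hypothetical and only show why keeping vBNG and vG_MUX as separate service instances permits arbitrary pairings; they are not part of any ONAP API.

```python
from collections import defaultdict
from typing import Dict, List, Set


class InfraTopology:
    """Tracks which vBNG service instances are connected to which vG_MUX instances."""

    def __init__(self) -> None:
        self._muxes_by_bng: Dict[str, Set[str]] = defaultdict(set)
        self._bngs_by_mux: Dict[str, Set[str]] = defaultdict(set)

    def connect(self, bng: str, mux: str) -> None:
        # Record the link in both directions so either side can be queried.
        self._muxes_by_bng[bng].add(mux)
        self._bngs_by_mux[mux].add(bng)

    def muxes_for(self, bng: str) -> List[str]:
        return sorted(self._muxes_by_bng.get(bng, set()))

    def bngs_for(self, mux: str) -> List[str]:
        return sorted(self._bngs_by_mux.get(mux, set()))


topology = InfraTopology()
topology.connect("vBNG-1", "vG_MUX-1")
topology.connect("vBNG-1", "vG_MUX-2")  # one vBNG, multiple vG_MUXs
topology.connect("vBNG-2", "vG_MUX-1")  # one vG_MUX, multiple vBNGs
```

Because each function is its own service instance in this sketch, adding another vG_MUX behind an existing vBNG is a single `connect` call and does not touch the vBNG instance itself.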

Note: All detailed flows (with diagrams) are for R1. Flows planned for subsequent releases are tagged as "aspiration" and are only mentioned; details will be added later.

Onboarding of vCPE VNFs

- Infrastructure instantiation
  - General infrastructure instantiation (vDHCP, vDNS, vAAA)
  - Specific infrastructure instantiation 1 (vBNG)
  - Specific infrastructure instantiation 2 (vG_MUX)
- Customer ordering
  - New vG ordering, instantiation and activation
  - Aspiration goal: Cut service
  - Aspiration goal: Per service activation (connectivity to Internet, IMS VoIP, IPTV)
  - Aspiration goal: Wholesale access (future)
- Self-service
  - Aspiration goal: Tiered bandwidth
  - Aspiration goal: Bandwidth on Demand
  - Aspiration goal: Change internet access bandwidth
- Software management
  - Aspiration goal: Upgrade service
  - Aspiration goal: Upgrade a specific element (VNF) of the service
  - Aspiration goal: Delete service
- Scaling
  - Aspiration goal: Storage (future)
- Auto-healing
  - Automatic reboot/restart
  - Aspiration goal: Rebuild
  - Aspiration goal: Migrate/evacuate to different CO Re-architected as DC (future)
  - Aspiration goal: Reconnect over an alternative path?

...