
What is Culprit Locator

Culprit Locator (or root-cause finder) is a set of ELK-based dashboards built on ONAP logs. It identifies, as efficiently and effectively as possible, where within a failed flow a problem originated. It is intended to help testers, developers, and DevOps engineers move issues from identification to diagnosis and fix more quickly, and it demonstrates how traceable logs can enhance their troubleshooting efforts. The current version relies on explicit ERROR logs to quickly locate the source of a problem in terms of components, subcomponents, or a series of significant log details.

Features

- Given a RequestId (or TransactionId), retrieve all related logs across all components for investigation

- Aggregate and visualize the logs by log level (highlighted), component/subcomponent, and error message pattern

- Highlight meaningful logs with color coding for an intuitive understanding of the process flows

- Guide the user quickly to the meaningful logs around the issues

- List all significant logs and their details

- Provide a drilldown link for further investigation of the logs at the subcomponent level
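The core idea behind the first features (collect every log for one RequestId, order it in time, and find where the first ERROR appeared) can be sketched in plain Ruby. This is a minimal illustration under stated assumptions, not how the Kibana dashboards are implemented: log records are assumed to be hashes carrying the RequestId, Timestamp, componentName, subComponent, and loglevel fields described in Data Requirements below, and the helper `locate_culprit` and the sample records are hypothetical.

```ruby
require 'time'

# Hypothetical helper: select the records for one RequestId, order them by
# Timestamp, and return them together with the first ERROR record (likely culprit)
def locate_culprit(records, request_id)
  related = records
            .select { |r| r['RequestId'] == request_id }
            .sort_by { |r| Time.parse(r['Timestamp']) }
  first_error = related.find { |r| r['loglevel'] == 'ERROR' }
  [related, first_error]
end

# Hypothetical sample records (not real ONAP logs)
records = [
  { 'RequestId' => 'req-1', 'Timestamp' => '2018-03-05T10:00:02Z',
    'componentName' => 'so', 'subComponent' => 'bpmn', 'loglevel' => 'ERROR' },
  { 'RequestId' => 'req-1', 'Timestamp' => '2018-03-05T10:00:00Z',
    'componentName' => 'aai', 'subComponent' => 'resources', 'loglevel' => 'INFO' },
  { 'RequestId' => 'req-2', 'Timestamp' => '2018-03-05T10:00:01Z',
    'componentName' => 'sdnc', 'subComponent' => 'sdnc', 'loglevel' => 'ERROR' }
]

related, culprit = locate_culprit(records, 'req-1')

# Count records per component_subComponent and log level
counts = related.group_by { |r| ["#{r['componentName']}_#{r['subComponent']}", r['loglevel']] }
                .transform_values(&:size)

puts related.map { |r| r['componentName'] }.join(' -> ') # prints: aai -> so
puts "culprit: #{culprit['componentName']}/#{culprit['subComponent']}" # prints: culprit: so/bpmn
p counts
```

The dashboards perform the equivalent filtering and aggregation inside Kibana; the sketch only makes the logic explicit.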

Data Requirements (logstash)

We assume the index pattern "logstash-*" has been created in Kibana, either by default or manually (refer to Logging User Guide Dashboard), and that the following data fields are available from the latest version of onap-pipeline.conf:

- Timestamp (date formatted)

- RequestId (UUID)

- componentName (string)

- subComponent (string)

- loglevel (one of INFO, DEBUG, WARN, ERROR)

- vLogLevel (integer value assigned to each loglevel => INFO:1, DEBUG:3, WARN:5, ERROR:10)

- detailmessage (equivalent to the message field, but aggregatable)

- source (log file path)

- Logger (logging object)
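To check whether indexed documents actually carry these fields, a quick sketch like the following can be run against a sample event exported as JSON (for example, copied from Kibana Discover). The sample event and the helpers `missing_fields` and `vloglevel_consistent?` are hypothetical; the field list and the vLogLevel mapping are the ones listed above.

```ruby
require 'json'

REQUIRED_FIELDS = %w[Timestamp RequestId componentName subComponent loglevel
                     vLogLevel detailmessage source Logger].freeze
# loglevel -> vLogLevel mapping listed above
VLOGLEVEL = { 'INFO' => 1, 'DEBUG' => 3, 'WARN' => 5, 'ERROR' => 10 }.freeze

# Hypothetical helper: list required fields that are absent or empty
def missing_fields(event)
  REQUIRED_FIELDS.reject { |f| event.key?(f) && !event[f].to_s.empty? }
end

# Hypothetical helper: true if vLogLevel matches the mapping for loglevel
def vloglevel_consistent?(event)
  VLOGLEVEL[event['loglevel']] == event['vLogLevel']
end

# Hypothetical sample event that lacks detailmessage and Logger
sample = JSON.parse(<<~EVENT)
  {
    "Timestamp": "2018-03-05T10:00:02.123Z",
    "RequestId": "5f2c1a7e-0000-0000-0000-000000000000",
    "componentName": "so",
    "subComponent": "bpmn",
    "loglevel": "ERROR",
    "vLogLevel": 10,
    "message": "sample error",
    "source": "/var/log/onap/so/bpmn/error.log"
  }
EVENT

p missing_fields(sample)        # prints: ["detailmessage", "Logger"]
p vloglevel_consistent?(sample) # prints: true
```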

If the subComponent, vLogLevel, and detailmessage fields do not appear, check for and add the following near the end of the filter section in onap-pipeline.conf. (Restarting Logstash is not necessary.)

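```
ruby {
  code => "
    # Derive subComponent from the log file path in 'source':
    # use path[4] when path[5] contains 'log' (e.g. a log file name), else path[5]
    path = event.get('source').to_s.split('/')
    if path[5].to_s.include?('log')
      event.set('subComponent', path[4])
    else
      event.set('subComponent', path[5])
    end

    # Combined key used by the dashboards: <componentName>_<subComponent>
    event.set('component_subComponent', event.get('componentName').to_s + '_' + event.get('subComponent').to_s)

    # Numeric weight per log level, used for aggregation and color coding
    case event.get('loglevel')
    when 'INFO'  then event.set('vLogLevel', 1)
    when 'DEBUG' then event.set('vLogLevel', 3)
    when 'WARN'  then event.set('vLogLevel', 5)
    when 'ERROR' then event.set('vLogLevel', 10)
    end
  "
}

# Copy message into an aggregatable field
mutate { add_field => { "detailmessage" => "%{message}" } }
```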
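The filter's logic can be exercised outside Logstash with a plain-Ruby sketch. Here a Hash stands in for the Logstash event (an assumption made only so the code runs standalone), the helper `enrich` is hypothetical, and the sample source paths are made up; the path-index rule and the vLogLevel values are copied from the filter above.

```ruby
# loglevel -> vLogLevel values used by the filter above
VLOGLEVEL = { 'INFO' => 1, 'DEBUG' => 3, 'WARN' => 5, 'ERROR' => 10 }.freeze

# Hypothetical standalone version of the ruby filter: takes and returns a Hash
def enrich(event)
  out = event.dup
  path = out['source'].to_s.split('/')
  # Same rule as the filter: use path[4] when path[5] contains 'log', else path[5]
  out['subComponent'] = path[5].to_s.include?('log') ? path[4] : path[5]
  out['component_subComponent'] = "#{out['componentName']}_#{out['subComponent']}"
  level = VLOGLEVEL[out['loglevel']]
  out['vLogLevel'] = level unless level.nil?
  # Equivalent of the mutate add_field step
  out['detailmessage'] = out['message']
  out
end

bpmn_event = enrich({ 'source' => '/var/log/onap/so/bpmn/debug.log', 'componentName' => 'so',
                      'loglevel' => 'DEBUG', 'message' => 'x' })
aai_event = enrich({ 'source' => '/var/log/onap/aai/error.log', 'componentName' => 'aai',
                     'loglevel' => 'ERROR', 'message' => 'y' })

p bpmn_event.values_at('subComponent', 'component_subComponent', 'vLogLevel') # prints: ["bpmn", "so_bpmn", 3]
p aai_event.values_at('subComponent', 'component_subComponent', 'vLogLevel')  # prints: ["aai", "aai_aai", 10]
```

Running it against a few real `source` values from your deployment is a cheap way to confirm the path indexes match your directory layout before editing onap-pipeline.conf.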

Create Kibana Scripted Fields

The following two fields must be created manually against the index pattern "logstash-*" on the Kibana scripted fields page (Management → Index Patterns → scripted fields):

- LogLevel: equivalent to "loglevel", with colors assigned as shown below (the actual color values need not match these)

- Drill Down: URL link to the subcomponent-level dashboard (see below for details)

Script

Put the code below into the 'Script' box of the scripted field page.

The drill-down time range is preset to span from 60 seconds before to 5 seconds after the log's timestamp; the user can change these values as necessary.

- Change the IP address in the URL to that of the user's ONAP Kibana server.

- The dashboard ID (in this case, "cl_Culprit_Locator_Subcomponent_Level" in the code) must match the ID of the imported dashboard "Culprit Locator (Subcomponent Level)"; importing the Kibana objects is the next step. If the IDs do not match, update the script code accordingly.

```
// Drill Down scripted field: for ERROR logs, build a link to the subcomponent-level
// dashboard covering 60 s before to 5 s after the log timestamp
if (doc['loglevel.keyword'].value == "ERROR") {
  // "yyyy" is the calendar year ("YYYY" would be the week-based year)
  DateTimeFormatter ft = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS");
  Instant from_instant = Instant.ofEpochMilli(doc['Timestamp'].value - 60000);
  Instant to_instant = Instant.ofEpochMilli(doc['Timestamp'].value + 5000);
  ZonedDateTime from_z = from_instant.atZone(ZoneId.of("UTC"));
  ZonedDateTime to_z = to_instant.atZone(ZoneId.of("UTC"));
  String from_ts = from_z.format(ft);
  String to_ts = to_z.format(ft);
  String ReqId = doc['RequestId.keyword'].value;
  // Replace the IP address and dashboard ID to match your environment
  String URL = "http://10.147.58.138:5601/app/kibana#/dashboard/cl_Culprit_Locator_Subcomponent_Level?_g=(refreshInterval:(display:Off,pause:!f,value:0),time:(from:'" + from_ts + "',mode:absolute,to:'" + to_ts + "'))&_a=(description:'',filters:!(),options:(darkTheme:!f),query:(query_string:(analyze_wildcard:!t,query:'RequestId:%20%22" + ReqId + "%22')),timeRestore:!f,viewMode:view)";
  return URL;
}
```
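To check a drill-down link without editing the scripted field, the following plain-Ruby sketch builds the same URL from a timestamp in epoch milliseconds and a RequestId, using the same -60 s / +5 s window and a UTC timestamp format. The Kibana address and dashboard ID are placeholders to replace, the helper names `fmt_utc` and `drill_down_url` are hypothetical, and the sample RequestId is made up.

```ruby
# Placeholders (assumptions): replace with your Kibana address and the ID of the
# imported "Culprit Locator (Subcomponent Level)" dashboard
KIBANA = 'http://10.147.58.138:5601'
DASHBOARD_ID = 'cl_Culprit_Locator_Subcomponent_Level'

# Same window as the scripted field: 60 s before to 5 s after the log timestamp
BEFORE_MS = 60_000
AFTER_MS = 5_000

# Format epoch milliseconds as a UTC string such as 2018-03-05 10:00:02.123
def fmt_utc(ms)
  Time.at(ms / 1000, (ms % 1000) * 1000).utc.strftime('%Y-%m-%d %H:%M:%S.%L')
end

# Hypothetical helper mirroring the scripted field's URL construction
def drill_down_url(timestamp_ms, request_id)
  from_ts = fmt_utc(timestamp_ms - BEFORE_MS)
  to_ts = fmt_utc(timestamp_ms + AFTER_MS)
  "#{KIBANA}/app/kibana#/dashboard/#{DASHBOARD_ID}" \
    "?_g=(refreshInterval:(display:Off,pause:!f,value:0)," \
    "time:(from:'#{from_ts}',mode:absolute,to:'#{to_ts}'))" \
    "&_a=(description:'',filters:!(),options:(darkTheme:!f)," \
    "query:(query_string:(analyze_wildcard:!t,query:'RequestId:%20%22#{request_id}%22'))," \
    "timeRestore:!f,viewMode:view)"
end

# 1520244002123 ms is 2018-03-05 10:00:02.123 UTC, so the window is
# 2018-03-05 09:59:02.123 to 2018-03-05 10:00:07.123
puts drill_down_url(1520244002123, '5f2c1a7e-0000-0000-0000-000000000000')
```

Opening the printed URL should show the same dashboard view as clicking the Drill Down link for a log with that timestamp and RequestId.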

Import Kibana Objects

Use one of the two approaches below to import the Kibana objects (dashboards, visualizations, and searches).

Import on User's Computer

Download the file Culprit Locator Kibana Objects to the user's computer (desktop/laptop).

1. In Kibana, import the file: Management → Saved Objects → Import.

2. Refresh the Kibana browser and check if the objects are properly loaded.

Import on ELK Server

Download the tar file culprit_locator.tar and uncompress it into any directory on the ELK server from which Elasticsearch is accessible; it is not necessary to move it into the containers.

1. The default host and port are "localhost" and "30254". If yours differ, open the shell script "cl_insert_kibana_objects.sh" and set the correct HOST and PORT values.

2. Run the script on the command line.

3. Refresh the Kibana browser and check if the objects are properly loaded.

How To Use

This section explains the basic features of the current version of Culprit Locator. Users are encouraged to take advantage of Kibana's full flexibility to modify and enhance the dashboards for their own purposes and preferences.

...

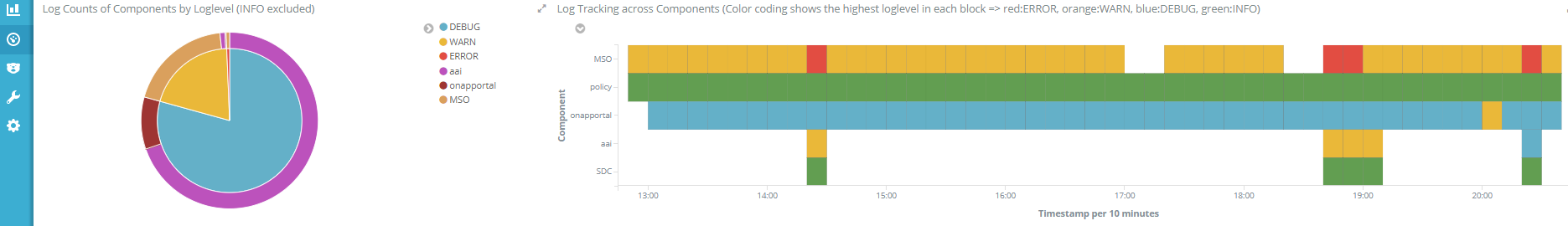

1. Open the dashboard "Culprit Locator (Component Level)" and set the time picker to the period you are interested in, or choose a preset such as "Last 7 days". The dashboard features:

...

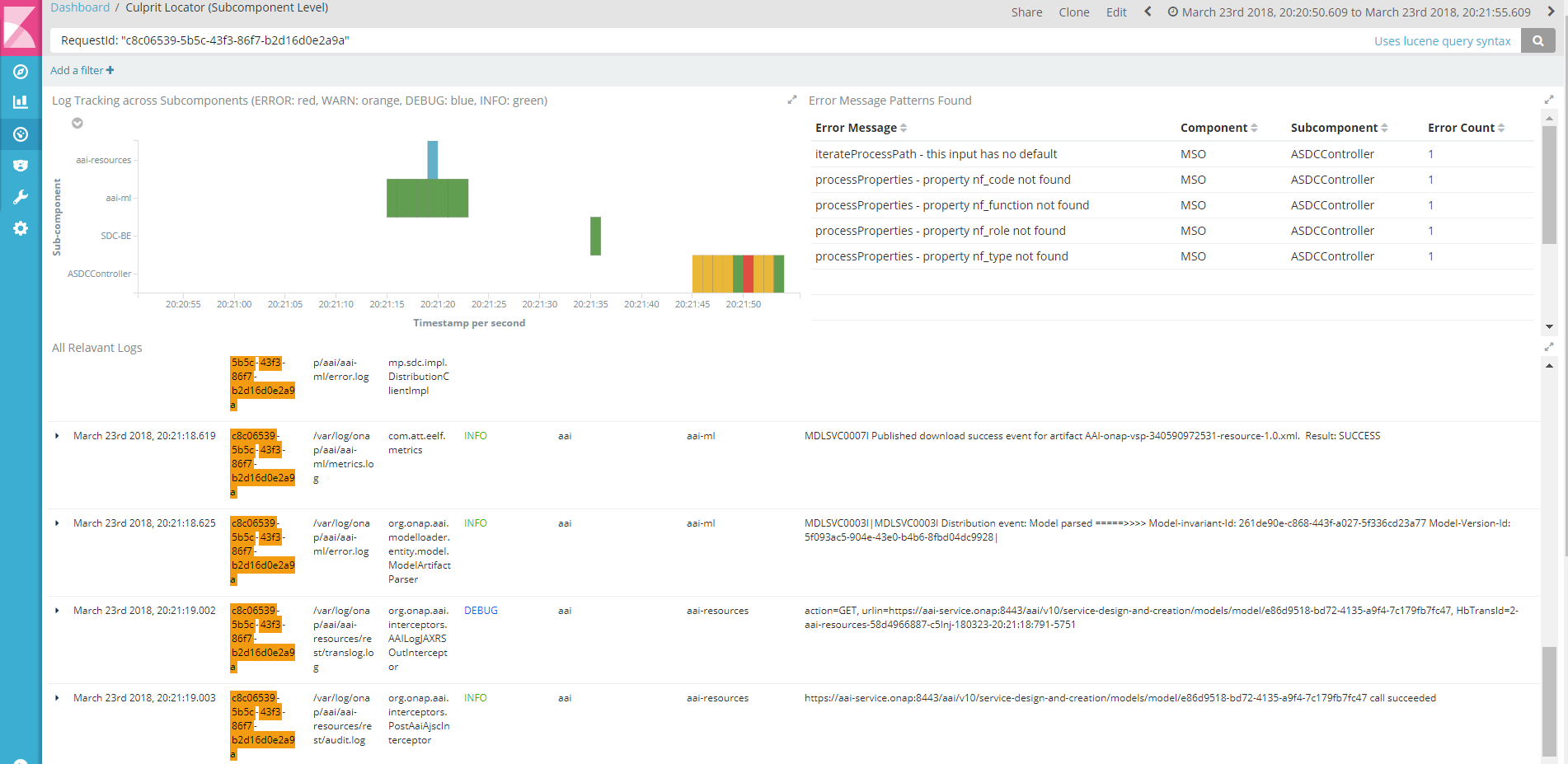

2. The drill-down (more focused) dashboard opens automatically in a separate tab, with the time period set from 60 seconds before the error to 5 seconds after it. The user can continue to zoom in or click the boxes. The dashboard features:

- Log tracking by subcomponent over time, using the same color scheme as the previous dashboard

- Table of error message patterns categorizing all the different error messages (to be improved)

- Table of all relevant logs at all log levels, scrollable, for investigating the logs in the specified time period
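One simple way to approximate the error message pattern table offline is to mask the variable parts of each message (UUIDs and numbers) and count the identical results. This is a sketch of that idea only, not how the dashboard's visualization categorizes messages; the masking rules, the helpers `pattern_of` and `error_patterns`, and the sample messages are all hypothetical.

```ruby
# Hypothetical masking rules: replace UUIDs first, then any remaining digit runs
UUID_RE = /\h{8}-\h{4}-\h{4}-\h{4}-\h{12}/
NUM_RE = /\d+/

# Reduce a message to its pattern by masking its variable parts
def pattern_of(message)
  message.gsub(UUID_RE, '<uuid>').gsub(NUM_RE, '<n>')
end

# Count messages per pattern, most frequent first (ties broken alphabetically)
def error_patterns(messages)
  counts = messages.each_with_object(Hash.new(0)) { |m, h| h[pattern_of(m)] += 1 }
  counts.sort_by { |pattern, n| [-n, pattern] }
end

# Hypothetical sample ERROR messages
messages = [
  'Timeout after 3000 ms calling AAI for request 5f2c1a7e-1234-4abc-9def-000000000001',
  'Timeout after 5000 ms calling AAI for request 5f2c1a7e-1234-4abc-9def-000000000002',
  'Failed to assign VNF vnf-17'
]

error_patterns(messages).each { |pattern, n| puts "#{n}  #{pattern}" }
```

Masking before counting is what lets messages that differ only in IDs or durations collapse into one row, which a plain terms aggregation on detailmessage would keep separate.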

Below is a sample zoomed-in view of the red box in the previous view:

Feature Improvements

The next step for Culprit Locator is to automate the identification of root causes with more intelligent features:

- Implicit error detection, not based only on explicit ERROR logs

- Pattern recognition of issues, e.g., a series of transactions across the components over time

- Identification of precursors to failures

- Identification of issues based on metrics, e.g., elapsed time for specific API calls between components

- Customized features for specific use cases, e.g., VoLTE, vFW, vCPE