Warning: Draft Content

This wiki is under construction: content here may be incomplete or missing.

TODO: determine/fix containers not ready, get DCAE yamls working, fix health tracking issues for healing

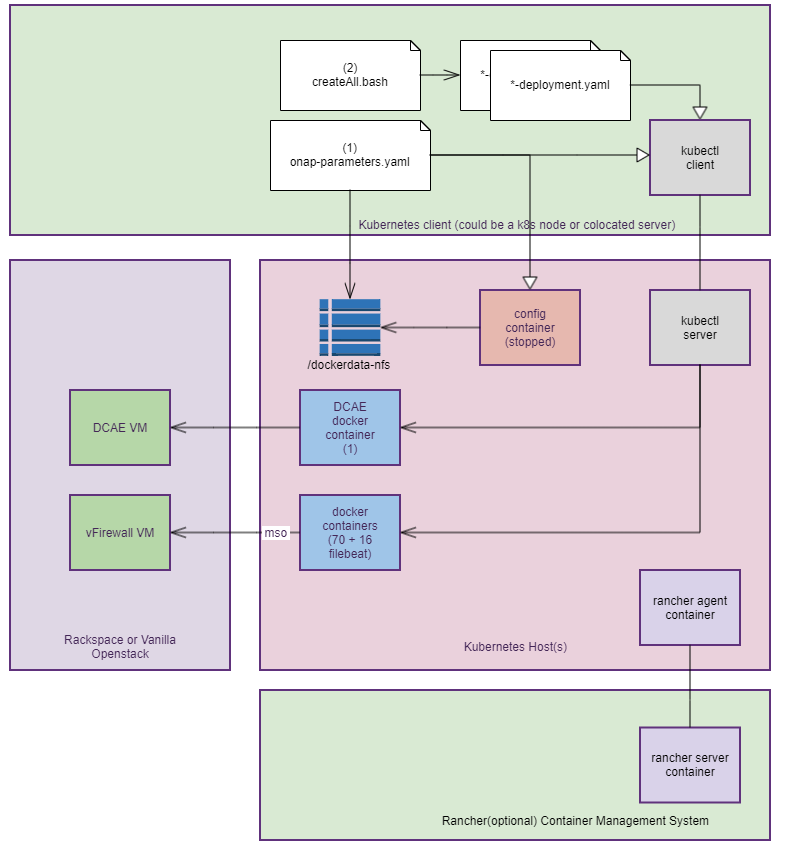

The OOM (ONAP Operations Manager) project has pushed Kubernetes-based deployment code to the oom repository, based on ONAP 1.0 (not 1.1 yet). This page details getting ONAP running (specifically the vFirewall demo) on Kubernetes for various virtual and native environments.

Architectural details of the OOM project are described in the OOM User Guide.

Reviews

https://gerrit.onap.org/r/#/c/6179/

Undercloud Installation

Requirements

| Metric | Min | Notes |
|---|---|---|
| RAM | 37G without DCAE, 50G with DCAE | Note: you need at least 37G of RAM (34G for ONAP services); this is without DCAE and without running the vFirewall. |
| HD | 100G without DCAE | |
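The RAM minimum above can be checked before deploying. The following is a minimal sketch, assuming a Linux host that exposes `MemTotal` in `/proc/meminfo`; the helper name `has_enough_ram` is hypothetical and not part of the oom repository. Note that the kernel usually reports slightly less than the installed RAM, so a host with exactly 37G installed may fail this strict check.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of oom): succeed if the given total RAM in kB
# is at least the given minimum in GB. The default of 37 is the no-DCAE
# minimum from the requirements table.
has_enough_ram() {
  local total_kb="$1" min_gb="${2:-37}"
  # 1 GB is treated as 1024*1024 kB here.
  [ "$total_kb" -ge $(( min_gb * 1024 * 1024 )) ]
}

# Usage on a Linux host:
#   has_enough_ram "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" 37 \
#     && echo "RAM ok" || echo "not enough RAM for ONAP without DCAE"
```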

We need a Kubernetes installation, either a base installation or one with a thin API wrapper like Rancher or Redhat.

There are several options. Currently the focus is Rancher on Ubuntu 16.04 as a thin wrapper on Kubernetes; alternative platforms are listed in the subpage ONAP on Kubernetes (Alternatives).

| OS | VIM | Description | Status | Nodes | Links |
|---|---|---|---|---|---|
| Ubuntu 16.04.2 | Bare Metal, VMWare | Rancher | Recommended approach. Issue with kubernetes support only in 1.12 (obsolete docker-machine) on OSX | 1-4 | http://rancher.com/docs/rancher/v1.6/en/quick-start-guide/ |

ONAP Installation

Quickstart Installation

1) install rancher, clone oom, run config-init pod, run one or all onap components

Note: uninstall Docker if it is already installed, as Kubernetes only supports 1.12.x (as of 20170809).

ONAP deployment in kubernetes is modelled in the oom project as a 1:1 set of service:pod sets (1 pod per docker container). The fastest way to get ONAP Kubernetes up is via Rancher.

Primary platform is virtual Ubuntu 16.04 VMs on VMWare Workstation 12.5 on up to two separate 64GB/6-core 5820K Windows 10 systems.

Secondary platform is 4 bare-metal NUCs (i7/i5/i3 with 16G each).

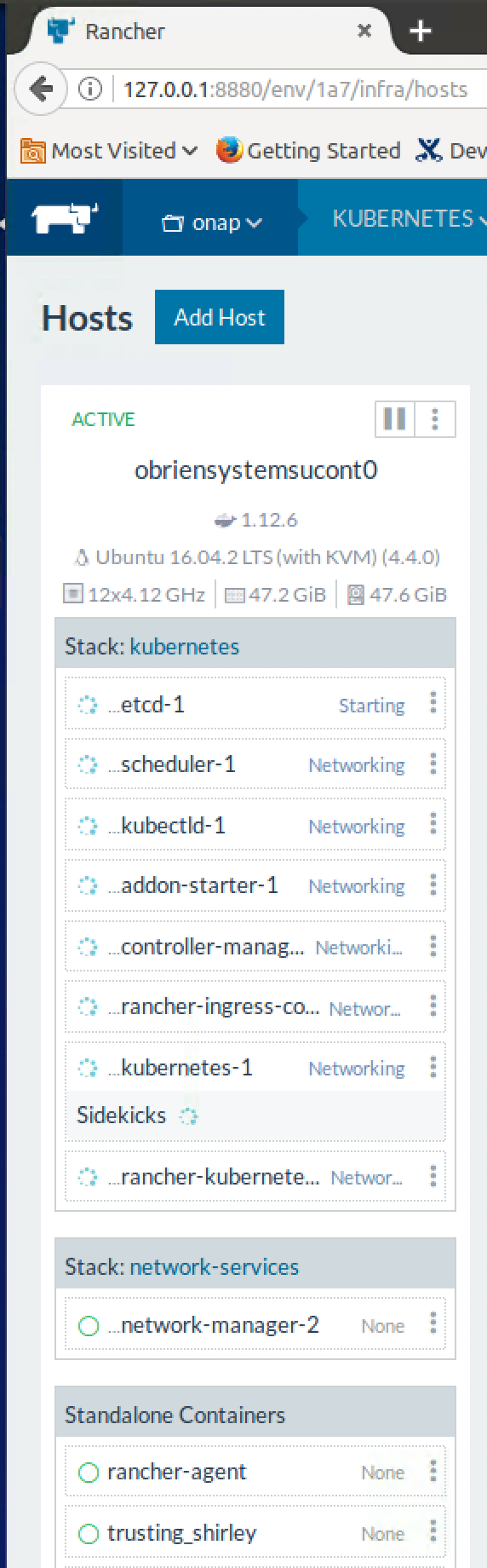

(on each host) Fix your /etc/hosts to point localhost/127.0.0.1 to your hostname (add your hostname to the end):

    sudo vi /etc/hosts
    127.0.0.1 localhost your-hostname

Try to use root. If you use the ubuntu user, you will need to enable docker separately for that user.

    sudo su -
    apt-get update

(the update fixes a possible "modprobe: FATAL: Module aufs not found in directory /lib/modules/4.4.0-59-generic")

(on each host) Install only the 1.12.x (currently 1.12.6) version of Docker (the only version that works with Kubernetes in Rancher 1.6).

(on the master) Install Rancher (use port 8880 instead of 8080).

In the Rancher UI, do not use http://127.0.0.1:8880; use the real IP address so the client configs are populated correctly with callbacks.

You must deactivate the default CATTLE environment: add a KUBERNETES environment, then deactivate the older default CATTLE one.

Register your host(s): run the following on each host (get the command from the "add host" menu). Install docker 1.12 if it is not already on the host (note that the host can be the same machine as the master).

    curl https://releases.rancher.com/install-docker/1.12.sh | sh

Wait for the Kubernetes menu to populate with the CLI.

Install kubectl, then create its config file:

    mkdir ~/.kube
    vi ~/.kube/config

Paste the kubectl config from Rancher (you will see the CLI menu under Rancher | Kubernetes after the k8s pods are up on your host). Click on "Generate Config" to get the content to add into .kube/config.

Verify that the Kubernetes config is good:

    root@obrien-kube11-1:~# kubectl cluster-info

Undercloud done - move to ONAP.

Clone oom (scp your onap_rsa private key first, or clone anonymously; ideally you get a full gerrit account and join the community). See the ssh/http access links at https://gerrit.onap.org/r/#/admin/projects/oom

    git clone -b release-1.0.0 ssh://michaelobrien@gerrit.onap.org:29418/oom

or use https:

    root@obrienk-1:~$ git clone -b release-1.0.0 https://michaelnnnn:uHaBPMvR47nnnnnnnnRR3Keer6vatjKpf5A@gerrit.onap.org/r/oom

Wait until all the hosts show green in Rancher, then run the script that wraps all the kubectl commands.

OOM-115


Run the setenv.bash script in /oom/kubernetes/oneclick/ (new since 20170817):

    source setenv.bash

Run the one-time config pod, which mounts the volume /dockerdata/ contained in the pod config-init. This mount is required for all other ONAP pods to function. Note: the pod will stop after NFS creation; this is normal.

    cd oom/kubernetes/config
    chmod 777 createConfig.sh
    ./createConfig.sh -n onap
    **** Creating configuration for ONAP instance: onap

(Note: in the release-1.0.0 branch, do a chmod 755 on createConfig.sh for now.)

(Only if you are planning on closed-loop) Before running createConfig.sh, make sure your config for openstack is set up correctly, so you can deploy the vFirewall VMs for example:

    vi oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json

and replace, for example, "identity_services": [{

Wait until the config-init pod is gone before trying to bring up a component or all of ONAP.

Note: use only the hardcoded "onap" namespace prefix, as URLs in the config pod are set as follows:

    "workflowSdncadapterCallback": "http://mso.onap-mso:8080/mso/SDNCAdapterCallbackService"

Do not run all the pods unless you have at least 40G (without DCAE) or 50G allocated. If you have a laptop/VM with 16G, you can only run enough pods to fit in around 11G.

Ignore errors introduced around 20170816; these are non-blocking and will allow the create to proceed (OOM-146).

To bring up a single service at a time:

    cd ../oneclick
    ./createAll.bash -n onap -a robot|appc|aai

Only if you have more than 50G, run the following (all namespaces):

    ./createAll.bash -n onap

Wait until the containers are all up - you should see...

List of Containers

Total pods is 47

Docker container list - source of truth: https://git.onap.org/integration/tree/packaging/docker/docker-images.csv

Below, any coloured container had issues getting to the running state (currently 32 of 33 come up after 45 min). There are an additional 11 DCAE pods, bringing the total to 44, with an additional 4 for appc/sdnc and the config-init pod.

| NAMESPACE (master:20170715) | NAME | READY | Image | STATUS | Notes |
|---|---|---|---|---|---|
| default | config-init | 0/0 | | Terminated (Succeeded) | The mount "config-init-root" is in the following location (user configurable VF parameter file below) /dockerdata-nfs/onapdemo/mso/mso/mso-docker.json |
| onap-aai | aai-service-346921785-624ss | 1/1 | | Running | |
| onap-aai | hbase-139474849-7fg0s | 1/1 | | Running | |
| onap-aai | model-loader-service-1795708961-wg19w | 0/1 | | Init:1/2 | |
| onap-appc | appc-2044062043-bx6tc | 1/1 | | Running | |
| onap-appc | appc-dbhost-2039492951-jslts | 1/1 | | Running | |
| onap-appc | appc-dgbuilder-2934720673-mcp7c | 1/1 | | Running | |
| onap-appc | sdntldb01 (internal) | | | | |
| onap-appc | sdnctldb02 (internal) | | | | |
| onap-dcae | dcae-zookeeper | 1/1 | wurstmeister/zookeeper:latest | Running | |
| onap-dcae | dcae-kafka | | dockerfiles_kafka:latest | debugging | Note: currently there are no DCAE containers running yet (we are missing 6 yaml files: 1 for the controller and 5 for the collector, staging and 3 cdap pods). Therefore DMaaP, VES collectors and APPC actions as the result of policy actions (closed loop) will not function yet. In review: https://gerrit.onap.org/r/#/c/7287/ (OOM-5, OOM-62) |
| onap-dcae | dcae-dmaap | | attos/dmaap:latest | debugging | |
| onap-dcae | pgaas | 1/1 | obrienlabs/pgaas | | https://hub.docker.com/r/oomk8s/pgaas/tags/ |
| onap-dcae | dcae-collector-common-event | 1/1 | | Running | persistent volume: dcae-collector-pvs |
| onap-dcae | dcae-collector-dmaapbc | 1/1 | | Running | |
| onap-dcae | dcae-ves-collector | | | debugging | |
| onap-dcae | cdap-0 | | | debugging | |
| onap-dcae | cdap-1 | | | debugging | |
| onap-dcae | cdap-2 | | | debugging | |
| onap-message-router | dmaap-3842712241-gtdkp | 0/1 | | CrashLoopBackOff | |
| onap-message-router | global-kafka-89365896-5fnq9 | 1/1 | | Running | |
| onap-message-router | zookeeper-1406540368-jdscq | 1/1 | | Running | |
| onap-mso | mariadb-2638235337-758zr | 1/1 | | Running | |
| onap-mso | mso-3192832250-fq6pn | 0/1 | | CrashLoopBackOff | fixed by config-init and resolv.conf |
| onap-policy | brmsgw-568914601-d5z71 | 0/1 | | Init:0/1 | fixed by config-init and resolv.conf |
| onap-policy | drools-1450928085-099m2 | 0/1 | | Init:0/1 | fixed by config-init and resolv.conf |
| onap-policy | mariadb-2932363958-0l05g | 1/1 | | Running | |
| onap-policy | nexus-871440171-tqq4z | 0/1 | | Running | |
| onap-policy | pap-2218784661-xlj0n | 1/1 | | Running | |
| onap-policy | pdp-1677094700-75wpj | 0/1 | | Init:0/1 | fixed by config-init and resolv.conf |
| onap-policy | pypdp-3209460526-bwm6b | 0/1 | | Init:0/1 | fixed by config-init and resolv.conf; 1.0.0 only |
| onap-portal | portalapps-1708810953-trz47 | 0/1 | | Init:CrashLoopBackOff | Initial dockerhub mariadb download issue - fixed |
| onap-portal | portaldb-3652211058-vsg8r | 1/1 | | Running | |
| onap-portal | vnc-portal-948446550-76kj7 | 0/1 | | Init:0/5 | fixed by config-init and resolv.conf |
| onap-robot | robot-964706867-czr05 | 1/1 | | Running | |
| onap-sdc | sdc-be-2426613560-jv8sk | 0/1 | | Init:0/2 | fixed by config-init and resolv.conf |
| onap-sdc | sdc-cs-2080334320-95dq8 | 0/1 | | CrashLoopBackOff | fixed by config-init and resolv.conf |
| onap-sdc | sdc-es-3272676451-skf7z | 1/1 | | Running | |
| onap-sdc | sdc-fe-931927019-nt94t | 0/1 | | Init:0/1 | fixed by config-init and resolv.conf |
| onap-sdc | sdc-kb-3337231379-8m8wx | 0/1 | | Init:0/1 | fixed by config-init and resolv.conf |
| onap-sdnc | sdnc-1788655913-vvxlj | 1/1 | | Running | |
| onap-sdnc | sdnc-dbhost-240465348-kv8vf | 1/1 | | Running | |
| onap-sdnc | sdnc-dgbuilder-4164493163-cp6rx | 1/1 | | Running | |
| onap-sdnc | sdnctlbd01 (internal) | | | | |
| onap-sdnc | sdnctlb02 (internal) | | | | |
| onap-sdnc | sdnc-portal-2324831407-50811 | 0/1 | | Running | root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-sdnc logs -f sdnc-portal-3375812606-01s1d \| grep ERR npm ERR! fetch failed https://registry.npmjs.org/is-utf8/-/is-utf8-0.2.1.tgz |
| onap-vid | vid-mariadb-4268497828-81hm0 | 0/1 | | CrashLoopBackOff | fixed by config-init and resolv.conf |
| onap-vid | vid-server-2331936551-6gxsp | 0/1 | | Init:0/1 | fixed by config-init and resolv.conf |

List of Docker Images

    root@obriensystemskub0:~/oom/kubernetes/dcae# docker images

missing: (can be replaced by public dockerHub link)

Verifying Container Startup

Run VID if required to verify that config-init has mounted properly (and to verify resolv.conf)

Cloning details

Install the latest version of the OOM (ONAP Operations Manager) project repo - specifically the ONAP on Kubernetes work just uploaded June 2017

https://gerrit.onap.org/r/gitweb?p=oom.git

    git clone ssh://yourgerrituserid@gerrit.onap.org:29418/oom
    cd oom/kubernetes/oneclick

Versions

oom: master (1.1.0-SNAPSHOT)

onap deployments: 1.0.0

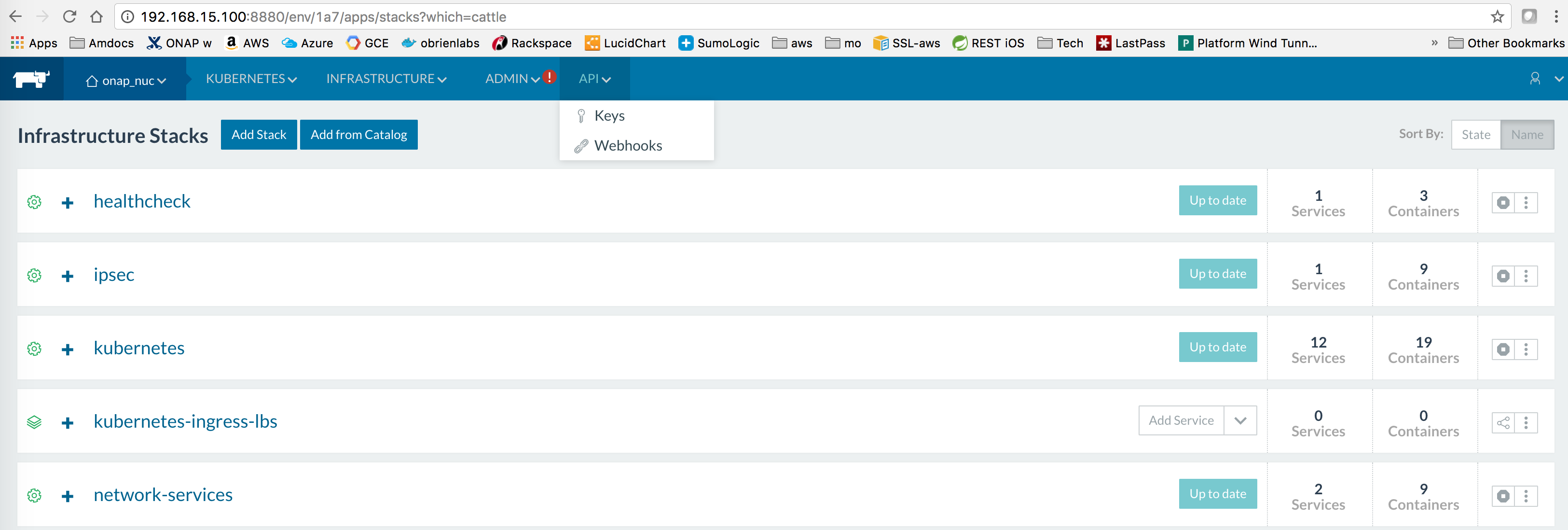

Rancher environment for Kubernetes

Set up a separate onap Kubernetes environment and disable the existing default environment.

Adding hosts to the Kubernetes environment will kick in the k8s containers.

Rancher kubectl config

To be able to run the kubectl scripts - install kubectl

Nexus3 security settings

Fix nexus3 security for each namespace

In createAll.bash, add the following two lines (marked with +) right after the namespace is created, to create a secret and attach it to the namespace (thanks to Jason Hunt of IBM for helping us attach it last Friday, when we were all getting our pods to come up). A better fix for the future will be to pass these in as parameters from a prod/stage/dev ecosystem config.

    create_namespace() {
      kubectl create namespace $1-$2
    + kubectl --namespace $1-$2 create secret docker-registry regsecret --docker-server=nexus3.onap.org:10001 --docker-username=docker --docker-password=docker --docker-email=email@email.com
    + kubectl --namespace $1-$2 patch serviceaccount default -p '{"imagePullSecrets": [{"name": "regsecret"}]}'
    }

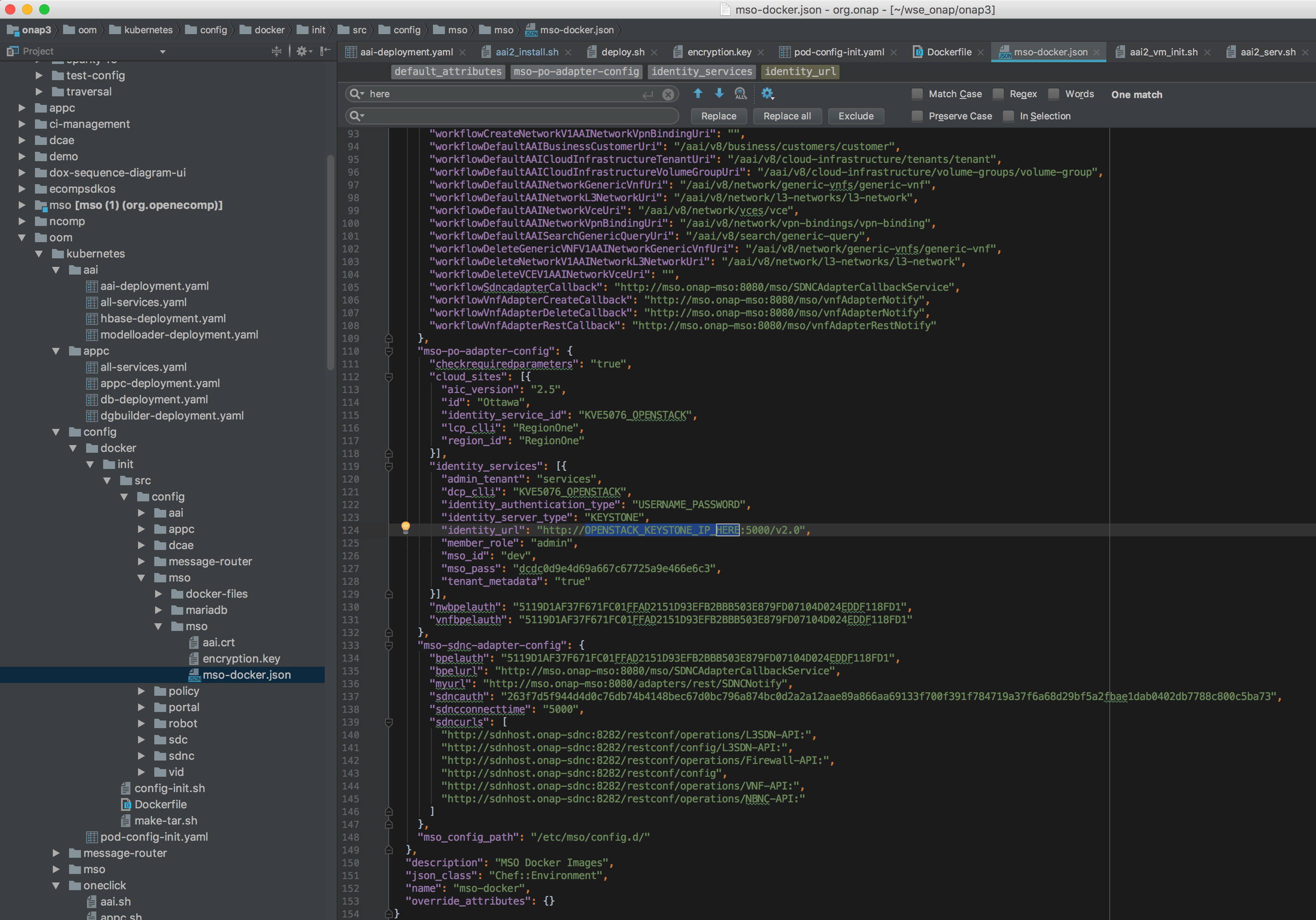

Fix MSO mso-docker.json

Before running pod-config-init.yaml - make sure your config for openstack is setup correctly - so you can deploy the vFirewall VMs for example

vi oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json

| Original | Replacement for Rackspace |
|---|---|
| "mso-po-adapter-config": { | "mso-po-adapter-config": { |

Delete/recreate the config pod:

    root@obriensystemskub0:~/oom/kubernetes/config# kubectl --namespace default delete -f pod-config-init.yaml
    pod "config-init" deleted
    root@obriensystemskub0:~/oom/kubernetes/config# kubectl create -f pod-config-init.yaml
    pod "config-init" created

or copy over your changes directly to the mount:

    root@obriensystemskub0:~/oom/kubernetes/config# cp docker/init/src/config/mso/mso/mso-docker.json /dockerdata-nfs/onapdemo/mso/mso/mso-docker.json

Use only "onap" namespace

Note: use only the hardcoded "onap" namespace prefix - as URLs in the config pod are set as follows "workflowSdncadapterCallback": "http://mso.onap-mso:8080/mso/SDNCAdapterCallbackService",

Monitor Container Deployment

first verify your kubernetes system is up

Then wait 25-45 min for all pods to attain 1/1 state
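Waiting for every pod to reach the 1/1 state can be checked from the command line. The sketch below assumes the default column layout of `kubectl get pods --all-namespaces` (NAMESPACE, NAME, READY, STATUS, ...); the helper name `pods_not_ready` is hypothetical and not part of the oom scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on stdin
# and print "namespace/name READY STATUS" for every pod whose READY column
# is not n/n (for example 0/1). Header and malformed lines are ignored.
pods_not_ready() {
  awk '$3 ~ /^[0-9]+\/[0-9]+$/ {
         split($3, r, "/")
         if (r[1] != r[2]) print $1 "/" $2, $3, $4
       }'
}

# Usage:
#   kubectl get pods --all-namespaces | pods_not_ready
# Poll once a minute until nothing is listed:
#   while [ -n "$(kubectl get pods --all-namespaces | pods_not_ready)" ]; do sleep 60; done
```

A pod that is Running but 0/1 (such as nexus in the table above) is still reported, which matches the "attain 1/1 state" criterion.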

Kubernetes specific config

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Nexus Docker repo Credentials

Checking out use of a kubectl secret in the yaml files via - https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

Container Endpoint access

Check the services view in the Kubernetes API under robot:

robot.onap-robot:88 TCP

robot.onap-robot:30209 TCP

    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get services --all-namespaces -o wide
    onap-vid vid-mariadb None <none> 3306/TCP 1h app=vid-mariadb
    onap-vid vid-server 10.43.14.244 <nodes> 8080:30200/TCP 1h app=vid-server
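The external (node) port of a service can be pulled out of that listing with a small filter. This is a sketch only: the helper name `node_ports` is hypothetical, and it assumes the PORT(S) field has the `port:nodePort/PROTO` form shown above, with multiple mappings separated by commas.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get services --all-namespaces` output on
# stdin and print "internalPort nodePort" for each mapped port of the named
# service. Ports without a node port (e.g. 3306/TCP) are skipped.
node_ports() {
  local svc="$1"
  awk -v svc="$svc" '
    $2 == svc {
      for (i = 3; i <= NF; i++) {
        if ($i ~ /^[0-9]+(:[0-9]+)?\/(TCP|UDP)/) {
          n = split($i, maps, ",")
          for (j = 1; j <= n; j++) {
            sub(/\/.*/, "", maps[j])      # drop the "/TCP" suffix
            if (split(maps[j], p, ":") == 2) print p[1], p[2]
          }
        }
      }
    }'
}

# Usage:
#   kubectl get services --all-namespaces -o wide | node_ports vid-server
```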

Container Logs

    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-vid logs -f vid-server-248645937-8tt6p
    16-Jul-2017 02:46:48.707 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 22520 ms
    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide
    NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE
    onap-robot robot-44708506-dgv8j 1/1 Running 0 36m 10.42.240.80 obriensystemskub0
    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl --namespace onap-robot logs -f robot-44708506-dgv8j
    2017-07-16 01:55:54: (log.c.164) server started

SSH into ONAP containers

Normally I would get a shell via https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

Get the pod name via

    kubectl get pods --all-namespaces -o wide

then bash into the pod via

    kubectl -n onap-mso exec -it mso-1648770403-8hwcf /bin/bash
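Because pod names carry generated suffixes, looking the name up can be scripted. A minimal sketch follows, assuming pod names of the form `<deployment>-<hash>-<hash>` as in the listings on this page; the helper name `pod_name` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on stdin
# and print the first pod in namespace $1 whose name is "$2-<hash>-<hash>".
# The two-hash pattern keeps "sdnc" from also matching "sdnc-dbhost-...".
pod_name() {
  local ns="$1" app="$2"
  awk -v ns="$ns" -v re="^${app}-[a-z0-9]+-[a-z0-9]+\$" \
    '$1 == ns && $2 ~ re && !seen++ { print $2 }'
}

# Usage:
#   pod="$(kubectl get pods --all-namespaces | pod_name onap-mso mso)"
#   kubectl -n onap-mso exec -it "$pod" /bin/bash
```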

Push Files to Pods

Trying to get an authorization file into the robot pod:

    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl cp authorization onap-robot/robot-44708506-nhm0n:/home/ubuntu

above works?

Running ONAP Portal UI Operations

see Installing and Running the ONAP Demos

Get the mapped external port by checking the service in kubernetes - here 30200 for VID on a particular node in our cluster.

or run a kube

fix /etc/hosts as usual

    192.168.163.132 portal.api.simpledemo.openecomp.org
    192.168.163.132 sdc.api.simpledemo.openecomp.org
    192.168.163.132 policy.api.simpledemo.openecomp.org
    192.168.163.132 vid.api.simpledemo.openecomp.org
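The four entries differ only in the component name, so they can be generated for whatever node IP your cluster uses. This is a sketch with a hypothetical helper name (`onap_hosts_entries`); the hostnames are the ones listed above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the /etc/hosts lines for the ONAP demo hostnames
# listed above, all pointing at the given node IP.
onap_hosts_entries() {
  local ip="$1" name
  for name in portal sdc policy vid; do
    printf '%s %s.api.simpledemo.openecomp.org\n' "$ip" "$name"
  done
}

# Usage (appends to /etc/hosts; review the output first):
#   onap_hosts_entries 192.168.163.132 | sudo tee -a /etc/hosts
```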

In order to map internal 8989 ports to external ones like 30215 - we will need to reconfigure the onap config links as below.

Kubernetes Installation Options

Rancher on Ubuntu 16.04

Install Rancher

http://rancher.com/docs/rancher/v1.6/en/quick-start-guide/

http://rancher.com/docs/rancher/v1.6/en/installing-rancher/installing-server/#single-container

Install a docker version that Rancher and Kubernetes support which is currently 1.12.6

http://rancher.com/docs/rancher/v1.5/en/hosts/#supported-docker-versions

    curl https://releases.rancher.com/install-docker/1.12.sh | sh

Verify your Rancher admin console is up on the external port you configured above

Wait for the docker container to finish DB startup

http://rancher.com/docs/rancher/v1.6/en/hosts/

Registering Hosts in Rancher

If you have issues registering a combined single VM (controller + host), use your real IP, not localhost.

In Settings | Host Configuration, set your IP:

    [root@obrien-b2 etcd]# sudo docker run -e CATTLE_AGENT_IP="192.168.163.128" --rm --privileged -v /var/run/docker.sock:/var/run/docker.sock -v /var/lib/rancher:/var/lib/rancher rancher/agent:v1.2.2 http://192.168.163.128:8080/v1/scripts/A9487FC88388CC31FB76:1483142400000:IypSDQCtA4SwkRnthKqH53Vxoo

See your host registered

Troubleshooting

Rancher fails to restart on server reboot

Having issues after a reboot of a colocated server/agent

Installing Clean Ubuntu

    apt-get install ssh
    apt-get install ubuntu-desktop

Docker Nexus Config

OOM-3

Out of the box we can't pull images. Currently working on a config step based on https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

    kubectl create secret docker-registry regsecret --docker-server=nexus3.onap.org:10001 --docker-username=docker --docker-password=docker --docker-email=someone@amdocs.com

    imagePullSecrets:
      - name: regsecret

Failed to pull image "nexus3.onap.org:10001/openecomp/testsuite:1.0-STAGING-latest": image pull failed for nexus3.onap.org:10001/openecomp/testsuite:1.0-STAGING-latest, this may be because there are no credentials on this request. details: (unauthorized: authentication required)

kubelet 172.17.4.99

OOM Repo changes

20170629: fix on 20170626 on a hardcoded proxy - (for those who run outside the firewall) - https://gerrit.onap.org/r/gitweb?p=oom.git;a=commitdiff;h=131c2a42541fb807f395fe1f39a8482a53f92c60

DNS resolution

add "service.ns.svc.cluster.local" to fix

Search Line limits were exceeded, some dns names have been omitted, the applied search line is: default.svc.cluster.local svc.cluster.local cluster.local kubelet.kubernetes.rancher.internal kubernetes.rancher.internal rancher.internal

https://github.com/rancher/rancher/issues/9303

    root@obriensystemskub0:~/oom/kubernetes/oneclick# cat /etc/resolv.conf
    # Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)
    # DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN
    nameserver 192.168.241.2
    search localdomain service.ns.svc.cluster.local
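To see how many search domains a resolv.conf carries, something like the following can help. It is a sketch with a hypothetical helper name (`search_domain_count`); it assumes the usual resolver behaviour that the last `search` line wins. The "Search Line limits were exceeded" warning above is, to my understanding, raised when the combined host and cluster search list goes past the resolver's limit (commonly 6 entries), so treat the number as a hint only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read resolv.conf content on stdin and print the number
# of domains on the last "search" line (0 if there is none).
search_domain_count() {
  awk '$1 == "search" { n = NF - 1 } END { print n + 0 }'
}

# Usage:
#   search_domain_count < /etc/resolv.conf
```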

OOM-1

Design Issues

DI 10: 20170724: DCAE Integration

OOM-5

todo:

docker images need to be pushed to nexus

from: registry.stratlab.local:30002/onap/dcae/cdap:1.0.7

to: nexus3.onap.org:10001/openecomp

OOM-62

2 persistent volumes also created (controller-pvs, collector-pvs)

    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get pods --all-namespaces -o wide | grep dcae
    onap-dcae cdap0-801098998-1j83b 0/1 Init:ImagePullBackOff 0 7m 10.42.170.56 obriensystemskub0
    onap-dcae cdap1-1109312935-sv8g0 0/1 Init:ImagePullBackOff 0 7m 10.42.184.43 obriensystemskub0
    onap-dcae cdap2-2495595959-fxnmg 0/1 Init:ImagePullBackOff 0 7m 10.42.69.133 obriensystemskub0
    onap-dcae dcae-collector-common-event-2687859322-jcv7n 1/1 Running 0 7m 10.42.219.171 obriensystemskub0
    onap-dcae dcae-collector-dmaapbc-2087600858-sb98v 1/1 Running 0 7m 10.42.225.93 obriensystemskub0
    onap-dcae dcae-controller-1960065296-95xxx 0/1 ContainerCreating 0 7m <none> obriensystemskub0
    onap-dcae dcae-pgaas-3690783998-v4w60 0/1 ImagePullBackOff 0 7m 10.42.28.81 obriensystemskub0
    onap-dcae dcae-ves-collector-1184035059-t0t7s 0/1 ImagePullBackOff 0 7m 10.42.223.26 obriensystemskub0
    onap-dcae dmaap-3637563410-2s7b2 0/1 CrashLoopBackOff 6 7m 10.42.7.93 obriensystemskub0
    onap-dcae kafka-2923495538-tb218 0/1 CrashLoopBackOff 6 7m 10.42.49.109 obriensystemskub0
    onap-dcae zookeeper-2122426841-2n6h5 1/1 Running 0 7m 10.42.192.205 obriensystemskub0
    root@obriensystemskub0:~/oom/kubernetes/oneclick# kubectl get services --all-namespaces -o wide | grep dcae
    onap-dcae dcae-collector-common-event 10.43.97.177 <nodes> 8080:30236/TCP,8443:30237/TCP,9999:30238/TCP 8m app=dcae-collector-common-event
    onap-dcae dcae-collector-dmaapbc 10.43.100.153 <nodes> 8080:30239/TCP,8443:30240/TCP 8m app=dcae-collector-dmaapbc
    onap-dcae dcae-controller 10.43.117.220 <nodes> 8000:30234/TCP,9998:30235/TCP 7m app=dcae-controller
    onap-dcae dcae-ves-collector 10.43.215.194 <nodes> 8080:30241/TCP,9999:30242/TCP 8m app=dcae-ves-collector
    onap-dcae zldciad4vipstg00 10.43.110.169 <nodes> 5432:30245/TCP 8m app=dcae-pgaas

Pushing Docker Images to ONAP

Other projects have a docker-maven-plugin - need to see if I can run this locally.

Questions

https://lists.onap.org/pipermail/onap-discuss/2017-July/002084.html

Links

https://kubernetes.io/docs/user-guide/kubectl-cheatsheet/

Content to edit/merge

Viswa,

Missed that you have config-init in your mail - so covered OK.

I stood up robot and aai on a clean VM to verify the docs (this is inside an openstack lab behind a firewall that has its own proxy).

Let me know if you have docker proxy issues - I would also like to reference/doc this for others like yourselves.

Also, just verifying that you added the security workaround step (or make your docker repo trust all repos):

    vi oom/kubernetes/oneclick/createAll.bash

    create_namespace() {
      kubectl create namespace $1-$2
    + kubectl --namespace $1-$2 create secret docker-registry regsecret --docker-server=nexus3.onap.org:10001 --docker-username=docker --docker-password=docker --docker-email=email@email.com
    + kubectl --namespace $1-$2 patch serviceaccount default -p '{"imagePullSecrets": [{"name": "regsecret"}]}'
    }

I just happen to be standing up a clean deployment on openstack - let's try just robot - it pulls the testsuite image.

    root@obrienk-1:/home/ubuntu/oom/kubernetes/config# kubectl create -f pod-config-init.yaml
    pod "config-init" created
    root@obrienk-1:/home/ubuntu/oom/kubernetes/config# cd ../oneclick/
    root@obrienk-1:/home/ubuntu/oom/kubernetes/oneclick# ./createAll.bash -n onap -a robot
    ********** Creating up ONAP: robot
    Creating namespaces **********
    namespace "onap-robot" created
    secret "regsecret" created
    serviceaccount "default" patched
    Creating services **********
    service "robot" created
    ********** Creating deployments for robot **********
    Robot....
    deployment "robot" created
    **** Done ****
    root@obrienk-1:/home/ubuntu/oom/kubernetes/oneclick# kubectl get pods --all-namespaces | grep onap
    onap-robot robot-4262359493-k84b6 1/1 Running 0 4m

One nexus3 onap specific image downloaded for robot:

    root@obrienk-1:/home/ubuntu/oom/kubernetes/oneclick# docker images
    REPOSITORY TAG IMAGE ID CREATED SIZE
    nexus3.onap.org:10001/openecomp/testsuite 1.0-STAGING-latest 3a476b4fe0d8 2 hours ago 1.16 GB

Next try one with 3 onap images, like aai uses:

    nexus3.onap.org:10001/openecomp/ajsc-aai 1.0-STAGING-latest c45b3a0ca00f 2 hours ago 1.352 GB
    aaidocker/aai-hbase-1.2.3 latest aba535a6f8b5 7 months ago 1.562 GB

hbase is first

    root@obrienk-1:/home/ubuntu/oom/kubernetes/oneclick# ./createAll.bash -n onap -a aai
    root@obrienk-1:/home/ubuntu/oom/kubernetes/oneclick# kubectl get pods --all-namespaces | grep onap
    onap-aai aai-service-3351257372-tlq93 0/1 PodInitializing 0 2m
    onap-aai hbase-1381435241-ld56l 1/1 Running 0 2m
    onap-aai model-loader-service-2816942467-kh1n0 0/1 Init:0/2 0 2m
    onap-robot robot-4262359493-k84b6 1/1 Running 0 8m

aai-service is next

    root@obrienk-1:/home/ubuntu/oom/kubernetes/oneclick# kubectl get pods --all-namespaces | grep onap
    onap-aai aai-service-3351257372-tlq93 1/1 Running 0 5m
    onap-aai hbase-1381435241-ld56l 1/1 Running 0 5m
    onap-aai model-loader-service-2816942467-kh1n0 0/1 Init:1/2 0 5m
    onap-robot robot-4262359493-k84b6 1/1 Running 0 11m

model-loader-service takes the longest

Subject: Re: [onap-discuss] [OOM] Using OOM kubernetes based seed code over Rancher

Hi,

Search line limits turn out to be a red herring - this is a non-fatal bug in Rancher you can ignore - they put too many entries in the search domain list (I'll update the wiki).

Verify you have a stopped config-init pod - it won't show in the get pods command - go to the Rancher or Kubernetes GUI.

The fact that you have a non-working robot suggests you may be having issues pulling images from docker - verify your image list and that docker can pull from nexus3:

    >docker images

This should show some nexus3.onap.org images if the pull was OK.

If not, try an image pull to verify this:

    ubuntu@obrienk-1:~/oom/kubernetes/config$ docker login -u docker -p docker nexus3.onap.org:10001
    Login Succeeded
    ubuntu@obrienk-1:~/oom/kubernetes/config$ docker pull nexus3.onap.org:10001/openecomp/mso:1.0-STAGING-latest
    1.0-STAGING-latest: Pulling from openecomp/mso
    23a6960fe4a9: Extracting [===================================> ] 32.93 MB/45.89 MB
    e9e104b0e69d: Download complete

If so, then you need to set the docker proxy - or run outside the firewall like I do.

Also, to be able to run the 34 pods (without DCAE) you will need 37G+ (33 + 4 for rancher/k8s + some for the OS) - also plan for over 100G of HD space.

/michael