Difference between running vCPE and vCPE + OOF - Beijing

The following steps are in addition to the standard vCPE use case setup steps.

1. We need to create an updated vCPE service CSAR as follows:

When we add nfType and nfRole during vCPE service creation in SDC, we also add an nfFunction to the subcomponents in the vCPE CSAR:

- For vG, set nf_function to 'vGW'

- For vgMuxAR, set nf_function to 'vgMuxAR'

- For vBRG, set nf_function to 'vBRG'

2. We need to configure OOF and OOF used Policy

How to configure OOF is TBD, pending input from the OOF team.

How to upload the policy models is TBD, pending input from the OOF team.

3. When we create the service instance we need to add the following to the request for userParams

"userParams": [

{

"name": "BRG_WAN_MAC_Address",

"value": "fa:16:3e:be:9a:b0"

},

{

"name": "Customer_Location",

"value": {

"customerLatitude": "32.897480",

"customerLongitude": "97.040443",

"customerName": "some_company"

}

},

{

"name": "Homing_Model_Ids",

"value": [

{

"resourceModuleName": "vgMuxAR",

"resourceModelInvariantId": "565d5b75-11b8-41be-9991-ee03a0049159",

"resourceModelVersionId": "61414c6c-6082-4e03-9824-bf53c3582b78"

}

]

}

]

}
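A fragment like the one above can also be assembled in code, which avoids hand-editing nested JSON. The following is a minimal sketch only: the helper name `build_user_params` is hypothetical, the values are the sample values from the fragment above, and the rest of the service instance request is not shown on this page and is therefore left out.

```python
import json


def build_user_params(brg_mac, latitude, longitude, customer_name, homing_models):
    """Return the userParams list in the shape shown above (hypothetical helper)."""
    return [
        {"name": "BRG_WAN_MAC_Address", "value": brg_mac},
        {
            "name": "Customer_Location",
            "value": {
                "customerLatitude": latitude,
                "customerLongitude": longitude,
                "customerName": customer_name,
            },
        },
        {"name": "Homing_Model_Ids", "value": homing_models},
    ]


user_params = build_user_params(
    brg_mac="fa:16:3e:be:9a:b0",
    latitude="32.897480",
    longitude="97.040443",
    customer_name="some_company",
    homing_models=[
        {
            "resourceModuleName": "vgMuxAR",
            "resourceModelInvariantId": "565d5b75-11b8-41be-9991-ee03a0049159",
            "resourceModelVersionId": "61414c6c-6082-4e03-9824-bf53c3582b78",
        }
    ],
)

# Serialize only the userParams member; the enclosing request is not built here.
print(json.dumps({"userParams": user_params}, indent=2))
```

The model IDs must be replaced with the invariant and version IDs of your own vgMuxAR resource.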

Limitation of vCPE + OOF in Beijing

- vCPE service instance name should match policy setup in OOF

Difference between running vCPE and vCPE + OOF + HPA - Beijing

1. We need to create an updated vCPE service CSAR that has flavor_label for vGW set to vcpe.vgw

We can use the following zip when creating the vgw CSAR: vgw-sb01-hpa1.zip

2. We need to create OOF used HPA Policy

This is a basic HPA policy. What we found during testing is that formatting can cause OOF not to work, so caution is in order.

{ "configBody":"{\"service\":\"hpaPolicy\",\"policyName\":\"OSDF_R2.hpa_policy_vGW_1\",\"description\":\"HPA policy for vGW\",\"templateVersion\":\"OpenSource.version.1\",\"version\":\"test1\",\"priority\":\"3\",\"riskType\":\"test\",\"riskLevel\":\"2\",\"guard\":\"False\",\"content\":{\"resources\":[\"vGW\"],\"identity\":\"hpaPolicy_vGW\",\"policyScope\":[\"vcpe\",\"us\",\"international\",\"ip\",\"vgw\"],\"policyType\":\"hpaPolicy\",\"flavorFeatures\":[{\"flavorLabel\":\"vcpe.vgw\",\"flavorProperties\":[{\"hpa-feature\":\"basicCapabilities\",\"mandatory\":\"True\",\"architecture\":\"generic\",\"hpa-feature-attributes\":[{\"hpa-attribute-key\":\"numVirtualCpu\",\"hpa-attribute-value\":\"2\",\"operator\":\">=\",\"unit\":\"\"},{\"hpa-attribute-key\":\"virtualMemSize\",\"hpa-attribute-value\":\"8\",\"operator\":\"=\",\"unit\":\"MB\"}]}]},{\"flavorLabel\":\"vcpe.vgw.02\",\"flavorProperties\":[{\"hpa-feature\":\"basicCapabilities\",\"mandatory\":\"True\",\"architecture\":\"generic\",\"hpa-feature-attributes\":[{\"hpa-attribute-key\":\"numVirtualCpu\",\"hpa-attribute-value\":\"4\",\"operator\":\">=\",\"unit\":\"\"},{\"hpa-attribute-key\":\"virtualMemSize\",\"hpa-attribute-value\":\"8\",\"operator\":\"=\",\"unit\":\"MB\"}]}]},{\"flavorLabel\":\"vcpe.vgw.03\",\"flavorProperties\":[{\"hpa-feature\":\"basicCapabilities\",\"mandatory\":\"True\",\"architecture\":\"generic\",\"hpa-feature-attributes\":[{\"hpa-attribute-key\":\"numVirtualCpu\",\"hpa-attribute-value\":\"4\",\"operator\":\"=\",\"unit\":\"\"},{\"hpa-attribute-key\":\"virtualMemSize\",\"hpa-attribute-value\":\"8\",\"operator\":\"=\",\"unit\":\"MB\"}]}]}]}}", "policyName": "OSDF_R2.hpa_policy_vGW_1", "policyConfigType":"Optimization", "ecompName":"DCAE", "policyScope":"OSDF_R2" }
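Because configBody is a JSON document embedded as a string inside another JSON document, one way to reduce formatting mistakes is to build the inner document as a data structure and let a JSON library do the escaping. The sketch below reproduces the three flavors of the policy above under that approach. The helper names are hypothetical, and whether compact separators are exactly what OOF expects is an assumption that this page does not confirm.

```python
import json


def basic_flavor(label, num_vcpu, vcpu_operator):
    """One flavorFeatures entry with the basicCapabilities feature (hypothetical helper)."""
    return {
        "flavorLabel": label,
        "flavorProperties": [
            {
                "hpa-feature": "basicCapabilities",
                "mandatory": "True",
                "architecture": "generic",
                "hpa-feature-attributes": [
                    {"hpa-attribute-key": "numVirtualCpu", "hpa-attribute-value": num_vcpu,
                     "operator": vcpu_operator, "unit": ""},
                    {"hpa-attribute-key": "virtualMemSize", "hpa-attribute-value": "8",
                     "operator": "=", "unit": "MB"},
                ],
            }
        ],
    }


def build_hpa_policy(policy_name, scope, flavor_features):
    """Build the outer payload; configBody is the inner document encoded as a string."""
    config_body = {
        "service": "hpaPolicy",
        "policyName": policy_name,
        "description": "HPA policy for vGW",
        "templateVersion": "OpenSource.version.1",
        "version": "test1",
        "priority": "3",
        "riskType": "test",
        "riskLevel": "2",
        "guard": "False",
        "content": {
            "resources": ["vGW"],
            "identity": "hpaPolicy_vGW",
            "policyScope": ["vcpe", "us", "international", "ip", "vgw"],
            "policyType": "hpaPolicy",
            "flavorFeatures": flavor_features,
        },
    }
    return {
        # Assumption: compact separators, matching the single-line policy above.
        "configBody": json.dumps(config_body, separators=(",", ":")),
        "policyName": policy_name,
        "policyConfigType": "Optimization",
        "ecompName": "DCAE",
        "policyScope": scope,
    }


policy = build_hpa_policy(
    "OSDF_R2.hpa_policy_vGW_1",
    "OSDF_R2",
    [
        basic_flavor("vcpe.vgw", "2", ">="),
        basic_flavor("vcpe.vgw.02", "4", ">="),
        basic_flavor("vcpe.vgw.03", "4", "="),
    ],
)
payload = json.dumps(policy)
print(payload)
```

Decoding the payload twice (outer document, then configBody) is a quick way to confirm that the escaping is intact before uploading the policy.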

Limitation of vCPE + OOF + HPA in Beijing

- HPA will not work with multiple clouds due to a bug: https://jira.onap.org/browse/SO-671

This bug also requires the cloud region to be RegionOne.

Preparation

Install ONAP

Make sure that you've installed the ONAP R2 release. For installation instructions, please refer to ONAP Installation in Vanilla OpenStack.

Make sure that all components pass health check when you do the following:

- ssh to the robot vm, run '/opt/ete.sh health'

You will need to update your /etc/hosts so that you can access the ONAP Portal in your browser. You may also want to add IP addresses of so, sdnc, aai, etc so that you can easily ssh to those VMs. Below is a sample just for your reference:

10.12.5.159 aai-inst2
10.12.5.162 portal
10.12.5.162 portal.api.simpledemo.onap.org
10.12.5.173 dns-server
10.12.5.178 aai
10.12.5.178 aai.api.simpledemo.onap.org
10.12.5.178 aai1
10.12.5.183 dcaecdap00
10.12.5.184 multi-service
10.12.5.189 sdc
10.12.5.189 sdc.api.simpledemo.onap.org
10.12.5.194 robot
10.12.5.2 so
10.12.5.204 dmaap
10.12.5.207 appc
10.12.5.208 dcae-bootstrap
10.12.5.211 dcaeorcl00
10.12.5.214 sdnc
10.12.5.219 dcaecdap02
10.12.5.224 dcaecnsl02
10.12.5.225 dcaecnsl00
10.12.5.227 dcaedokp00
10.12.5.229 dcaecnsl01
10.12.5.238 dcaepgvm00
10.12.5.239 dcaedoks00
10.12.5.241 dcaecdap03
10.12.5.247 dcaecdap04
10.12.5.248 dcaecdap05
10.12.5.249 dcaecdap06
10.12.5.38 policy
10.12.5.38 policy.api.simpledemo.onap.org
10.12.5.48 vid
10.12.5.48 vid.api.simpledemo.onap.org
10.12.5.51 clamp
10.12.5.62 dcaecdap01
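If you keep the address list in a script, the entries can be rendered in /etc/hosts format instead of being typed by hand. This is a minimal sketch with a hypothetical helper name and only a few of the sample addresses above; it relies on the fact that a hosts line may carry several names for one IP address.

```python
def render_hosts(entries):
    """Render (ip, [hostnames]) pairs as /etc/hosts lines, one IP per line."""
    return "\n".join(f"{ip} {' '.join(names)}" for ip, names in entries)


sample = [
    ("10.12.5.162", ["portal", "portal.api.simpledemo.onap.org"]),
    ("10.12.5.178", ["aai", "aai.api.simpledemo.onap.org", "aai1"]),
    ("10.12.5.194", ["robot"]),
]

hosts_text = render_hosts(sample)
print(hosts_text)
```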

You can try to log in to the portal at http://portal.api.simpledemo.onap.org:8989/ONAPPORTAL/login.htm as one of the following users. The password is demo123456 for all users.

| User | Role |
|---|---|
| demo | Operator |
| cs0008 | DESIGNER |
| jm0007 | TESTER |
| op0001 | OPS |
| gv0001 | GOVERNOR |

Create images for vBRG, vBNG, vGMUX, and vG

Follow these instructions to build an image for each VNF and save the images in your OpenStack: ONAP vCPE VPP-based VNF Installation and Usage Information

To avoid unexpected mistakes, you may want to give each image a meaningful name and also be careful when mixing upper case and lower case characters. After this you should see images like below.

VNF Onboarding

Create license model in SDC

Log in to SDC portal as designer. Create a license that will be used by the subsequent steps. The detailed steps are here: Creating a Licensing Model

Prepare HEAT templates

vCPE uses five VNFs: Infra, vBRG, vBNG, vGMUX, and vG, which are described using five HEAT templates. For each HEAT template, you will need to fill the env file with appropriate parameters. The HEAT templates can be obtained from gerrit: [demo.git] / heat / vCPE /

Note that for each VNF, the env file name and yaml file name are tied together by the file MANIFEST.json. If for any reason you change the env and yaml file names, please remember to change MANIFEST.json accordingly.

For each VNF, compress the three env, yaml, and json files into a zip package, which will be used for onboarding. If you want to get the zip packages I used for reference, download them here: infra-sb01.zip, vbng-sb01.zip, vbrg-sb01.zip, vgmux-sb01.zip, vgw-sb01-hpa.zip

VNF onboarding in SDC

Onboard the VNFs in SDC one by one. The process is the same for all VNFs. The suggested names for the VNFs are given below (all lower case). The suffix can be a date plus a sequence letter, e.g., 1222a.

- vcpevsp_infra_[suffix]

- vcpevsp_vbrg_[suffix]

- vcpevsp_vbng_[suffix]

- vcpevsp_vgmux_[suffix]

- vcpevsp_vgw_[suffix]_hpa
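The naming scheme above can be generated rather than typed, which helps keep the lower-case convention consistent across all five VSPs. This is a small sketch with hypothetical helper names; reading the example suffix 1222a as month and day (MMDD) plus a sequence letter is an assumption.

```python
from datetime import date

VNFS = ["infra", "vbrg", "vbng", "vgmux", "vgw"]


def make_suffix(day, letter="a"):
    """Date as MMDD plus a sequence letter, e.g. 1222a (format is an assumption)."""
    return f"{day:%m%d}{letter}"


def vsp_names(suffix):
    """Return the suggested VSP names; the vGW entry carries the _hpa marker."""
    names = []
    for vnf in VNFS:
        name = f"vcpevsp_{vnf}_{suffix}"
        if vnf == "vgw":
            name += "_hpa"
        names.append(name)
    return names


suffix = make_suffix(date(2017, 12, 22))
for name in vsp_names(suffix):
    print(name)
```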

Below is an example for onboarding infra.

Sign into SDC as cs0008, choose ONBOARD and then click 'CREATE NEW VSP'.

Now enter the name of the vsp. For naming, I'd suggest all lower case with format vcpevsp_[vnf]_[suffix], see example below.

After clicking 'Create', click 'missing' and then select to use the license model created previously.

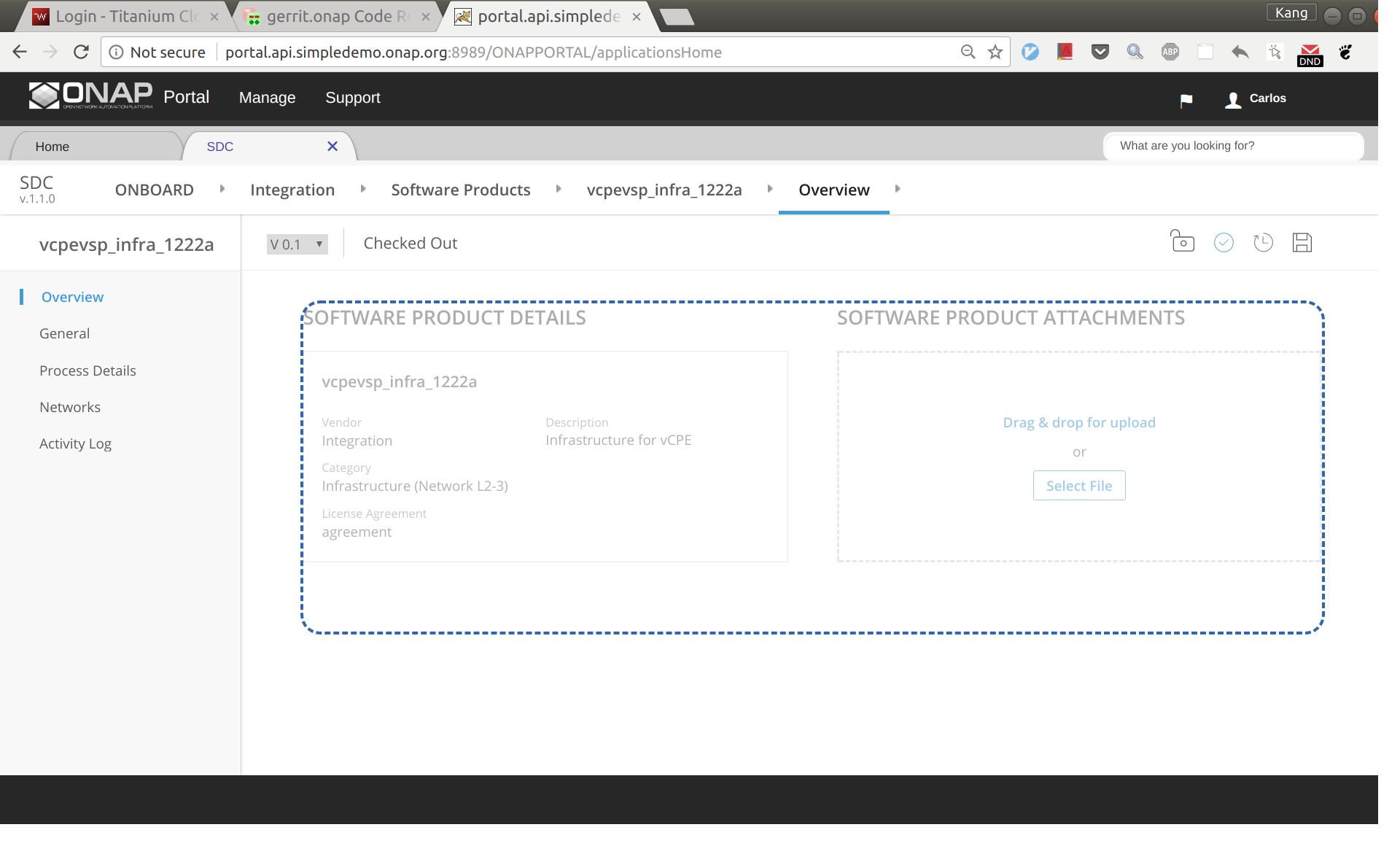

Click 'Overview' on the left side panel, then drag and drop infra.zip to the webpage to upload the HEAT.

Now click 'Proceed To Validation' to validate the HEAT template.

You may see a lot of warnings. In most cases, you can ignore those warnings.

Click 'Check in', and then 'Submit'

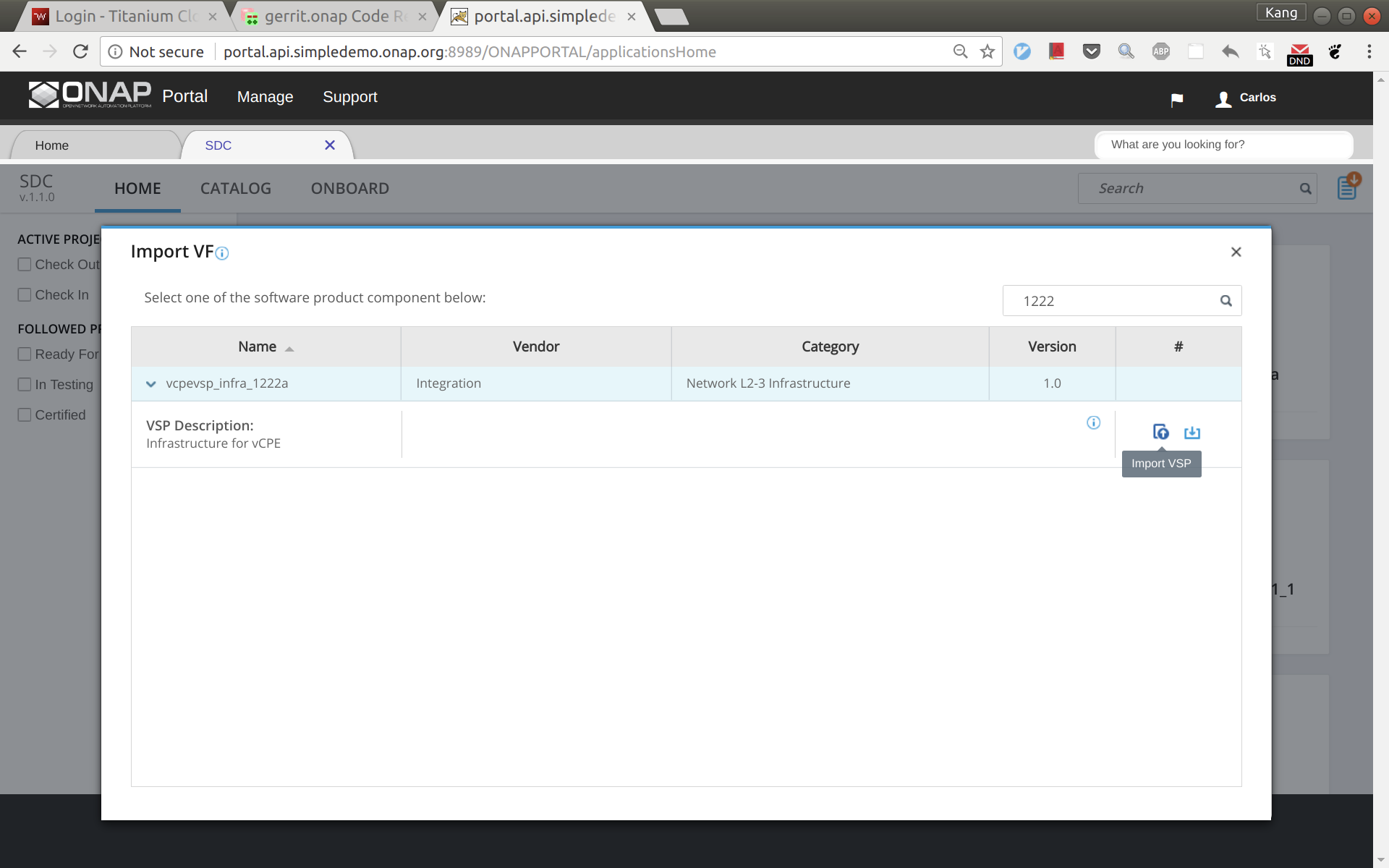

Go to SDC home, and then click 'Import VSP'.

In the search box, type in suffix of the vsp you onboarded a moment ago to easily locate the vsp. Then click 'Import VSP'.

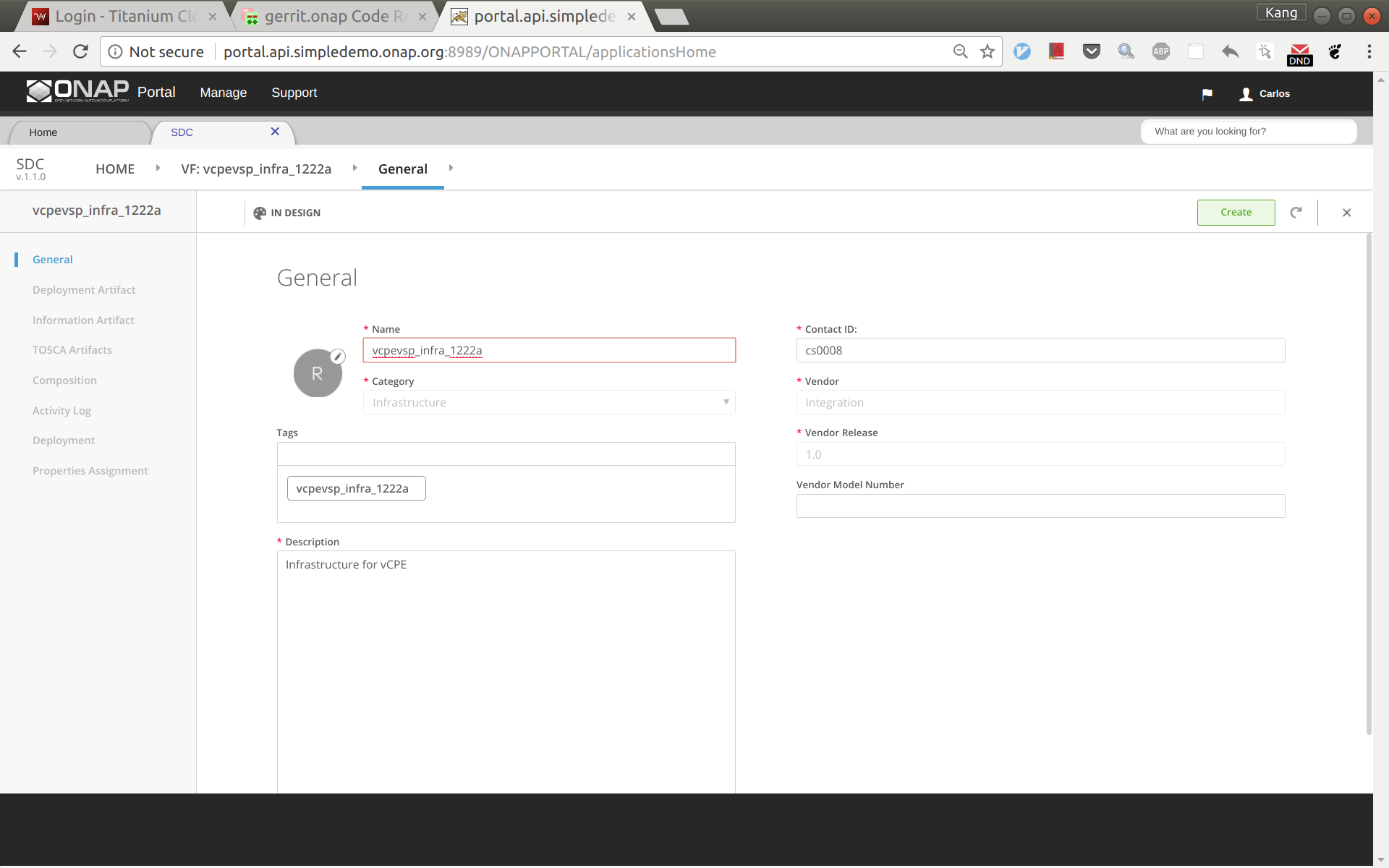

Click 'Create' without changing anything.

Now a VF based on the HEAT is created successfully. Click 'Submit for Testing'.

Sign out and sign back in as tester: jm0007, select the VF you created a moment ago, test and accept it.

Service Design and Creation

The entire vCPE use case is divided into five services as shown below. Each service is described below with suggested names.

- vcpesvc_infra_[suffix]: includes two generic neutron networks named cpe_signal and cpe_public (all names are lower case) and a VNF infra.

- vcpesvc_vbng_[suffix]: includes two generic neutron networks named brg_bng and bng_mux and a VNF vBNG.

- vcpesvc_vgmux_[suffix]: includes a generic neutron network named mux_gw and a VNF vGMUX

- vcpesvc_vbrg_[suffix]: includes a VNF vBRG.

- vcpesvc_rescust_[suffix]_hpa: includes a VNF vGW and two allotted resources that will be explained shortly.

Service design and distribution for infra, vBNG, vGMUX, and vBRG

The process for creating these four services is the same; however, make sure to use the VNFs and networks as described above. Below are the steps to create vcpesvc_infra_1222a. Follow the same process to create the other three services, changing the networks and VNFs according to the list above. Log back in as designer (username: cs0008).

In SDC, click 'Add Service' to create a new service

Enter name, category, description, product code, and click 'Create'.

Click 'Composition' from left side panel. Drag and drop VF vcpevsp_infra_[suffix] to the design.

Drag and drop a generic neutron network to the design, click to select the icon in the design, then click the pen in the upper right corner (next to the trash bin icon), a window will pop up as shown below. Now change the instance name to 'cpe_signal'.

Click and select the network icon in the design again. From the right side panel, click the icon and then select 'network_role'. In the pop up window, enter 'cpe_signal' as shown below.

Add another generic neutron network the same way. This time change the instance name and network role to 'cpe_public'. Now the service design is completed. Click 'Submit for Testing'.

Sign out and sign back in as tester 'jm0007'. Test and approve this service.

Sign out and sign back in as governor 'gv0001'. Approve this service.

Sign out and sign back in as operator 'op0001'. Distribute this service. Click monitor to see the results. After some time (could take 30 seconds or more), you should see the service being distributed to AAI, SO, SDNC.

Service design and distribution for customer service

First of all, make sure that all the previous four services have been created and distributed successfully.

The customer service includes a VNF vGW and two allotted resources: tunnelxconn and brg. We will need to create the two allotted resources first and then use them together with vG (which was already onboarded and imported as a VF previously) to compose the service.

Create allotted resource tunnelxconn

This allotted resource depends on the previously created service vcpesvc_vgmux_[suffix]. The dependency is described by filling the allotted resource with the UUID, invariant UUID, and service name of vcpesvc_vgmux_[suffix]. So for preparation, we first download the csar file of vcpesvc_vgmux_[suffix] from SDC.

Sign into SDC as designer cs0008, click create a new VF, select 'Tunnel XConnect' as category and enter other information as needed. See below for an example. I'm using vcpear_tunnelxconn_[suffix] as the name of this allotted resource.

Click create. And then click 'Composition', drag an 'AllottedResource' from the left side panel to the design.

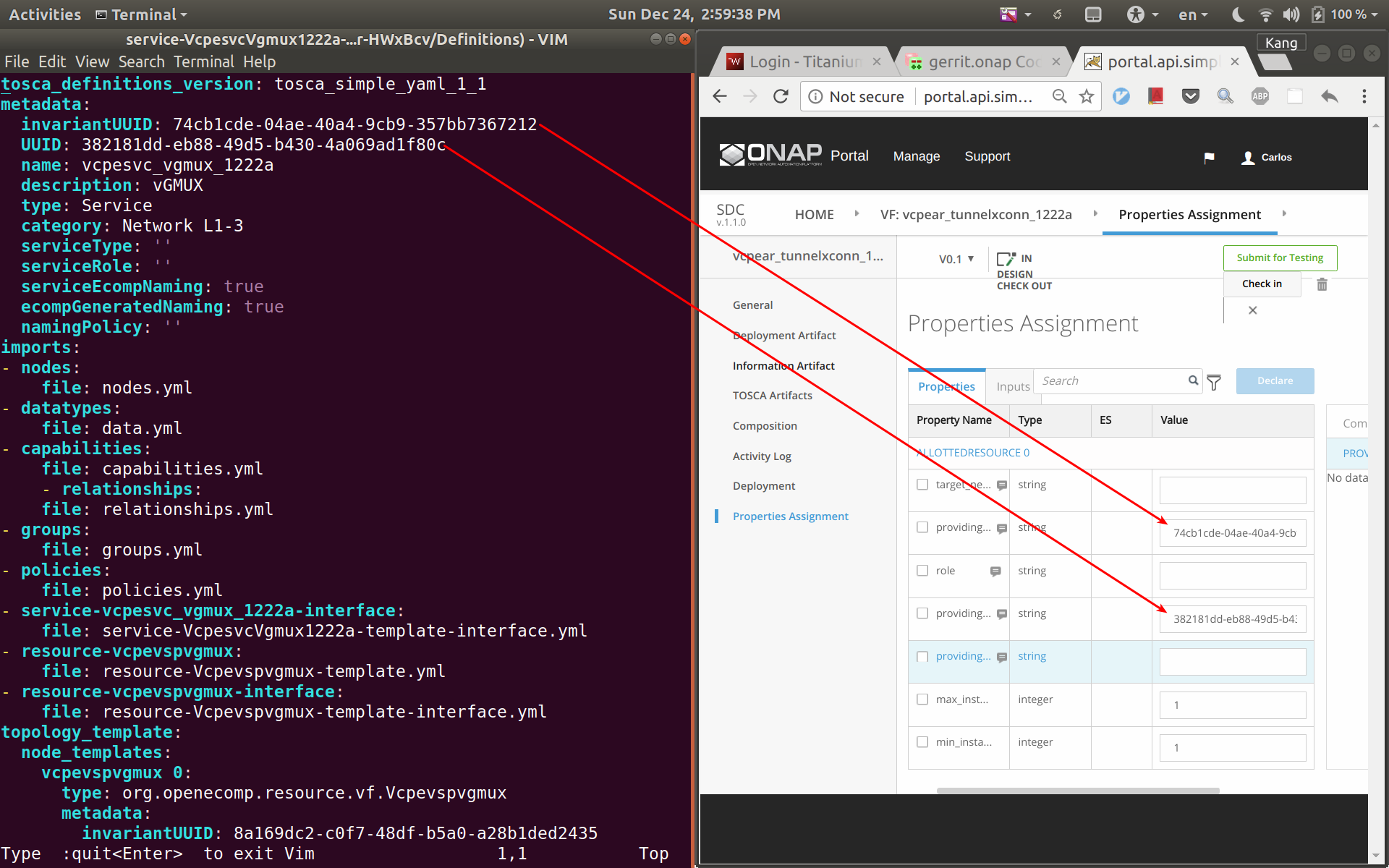

Click the VF name link between the HOME link and Composition in the top menu, then click Properties Assignment in the left-hand menu. Now open the csar file for vcpesvc_vgmux_1222a and, under 'Definitions', open the file 'service-VcpesvcVgmux1222a-template.yml'. (Note that the actual file name depends on what you named the service in the first place.) Put the yml file and the SDC window side by side, then copy and paste the invariantUUID, UUID, and node name into the corresponding fields in SDC. See the two screenshots below. Save and then submit for testing.
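The three values can also be pulled out of the csar with a short script instead of opening the archive by hand. The sketch below is an illustration under stated assumptions: that the service template is named Definitions/service-...-template.yml, and that it has a top-level metadata block made of simple 'key: value' lines. The function name is hypothetical and the identifiers in the demo are placeholders, not real model IDs.

```python
import io
import re
import zipfile

WANTED = ("invariantUUID", "UUID", "name")


def read_service_metadata(csar, template_suffix="-template.yml"):
    """Return invariantUUID, UUID and name from the service template in a CSAR."""
    with zipfile.ZipFile(csar) as archive:
        candidates = [
            entry for entry in archive.namelist()
            if entry.startswith("Definitions/service-") and entry.endswith(template_suffix)
        ]
        if not candidates:
            raise FileNotFoundError("no service template found under Definitions/")
        text = archive.read(candidates[0]).decode("utf-8")

    found = {}
    in_metadata = False
    for line in text.splitlines():
        if line.startswith("metadata:"):
            in_metadata = True
            continue
        if in_metadata:
            if line and not line.startswith(" "):
                break  # reached the next top-level key, metadata block is over
            match = re.match(r"\s+(\w+):\s*(.*)$", line)
            if match and match.group(1) in WANTED:
                found[match.group(1)] = match.group(2).strip("'\"")
    return found


# Demo with an in-memory archive and placeholder (not real) identifiers.
demo = io.BytesIO()
with zipfile.ZipFile(demo, "w") as out:
    out.writestr(
        "Definitions/service-VcpesvcVgmux1222a-template.yml",
        "tosca_definitions_version: tosca_simple_yaml_1_1\n"
        "metadata:\n"
        "  invariantUUID: 00000000-0000-0000-0000-000000000001\n"
        "  UUID: 00000000-0000-0000-0000-000000000002\n"
        "  name: vcpesvc_vgmux_1222a\n"
        "topology_template:\n"
        "  node_templates: {}\n",
    )
demo.seek(0)
metadata = read_service_metadata(demo)
print(metadata)
```

For a real csar, pass the path of the downloaded file instead of the in-memory buffer.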

Create allotted resource brg

This allotted resource depends on the previously created service vcpesvc_vbrg_1222a. The dependency is described by filling the allotted resource with the UUID, invariant UUID, and service name of vcpesvc_vbrg_1222a. So for preparation, we first download the csar file of vcpesvc_vbrg_1222a from SDC.

We name this allotted resource vcpear_brg_1222a. The process to create it is the same as that for vcpear_tunnelxconn_1222a above; use the category 'Tunnel XConnect' again. The only differences are the UUID, invariant UUID, and service name parameters being used. Therefore, I will not repeat the steps and screenshots here.

Sign out and sign back in as tester 'jm0007'. Test and approve both Allotted Resources.

Create customer service

Log back in as Designer username: cs0008

We name the service vcpesvc_rescust_1222a and follow the steps below to create it.

Sign into SDC as designer, add a new service and fill in parameters as below. Then click 'Create'.

Click 'Composition' from the left side panel. Drag and drop the following three components to the design.

- vcpevsp_vgw_[suffix]

- vcpear_tunnelxconn_[suffix]

- vcpear_brg_[suffix]

Point your mouse to the arrow next to 'Composition' and then click 'Properties Assignment' (see below).

First select tunnelxconn from the right side panel, then fill nf_role and nf_type with value 'TunnelXConn' and the nf_function with 'vgMuxAR'

For vG, set nf_function to 'vGW'.

Next select brg from the right side panel, then fill nf_role and nf_type with value 'BRG'.

Click 'Submit for Testing'.

Now sign out and sign back in as tester 'jm0007' to complete test of vcpesvc_rescust_1222a.

Sign out and sign back in as governor 'gv0001'. Approve this service.

Distribute the customer service to AAI, SO, and SDNC

Before distributing the customer service, make sure that the other four services for infra, vBNG, vGMUX, and vBRG all have been successfully distributed.

ssh to the sdnc VM and execute 'docker stop sdnc_ueblistener_container'. This is a temporary operation and should not be needed in the future after a little problem in the sdnc ueb listener is fixed.

Now distribute the customer service: sign out and sign back in as operator 'op0001', distribute this service, and check the status to ensure the distribution succeeds. It may take tens of seconds to complete. The results should look like below.

Initial Configuration of ONAP to Deploy vCPE

ssh to the robot VM, execute:

- /opt/demo.sh init_robot

- /opt/demo.sh init

Add an availability zone to AAI by executing the following:

curl -X PUT \
    https://aai:8443/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/CloudOwner/RegionOne/availability-zones/availability-zone/nova \
    -H 'accept: application/json' \
    -u 'AAI:AAI' \
    -H 'content-type: application/json' \
    -d '{
        "availability-zone-name": "nova",
        "hypervisor-type": "KVM",
        "operational-status": "Active"
    }'
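The same PUT can be prepared from Python when the step is part of a larger script. This is a rough equivalent that only builds the request and does not send it; the function name is hypothetical, and sending it would additionally require that your Python TLS setup trusts the AAI certificate, which is not covered here.

```python
import base64
import json
import urllib.request


def aai_put_request(url, body, user="AAI", password="AAI"):
    """Build (but do not send) a PUT request with basic auth and a JSON body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Accept": "application/json",
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


AZ_URL = (
    "https://aai:8443/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/"
    "CloudOwner/RegionOne/availability-zones/availability-zone/nova"
)

az_request = aai_put_request(
    AZ_URL,
    {
        "availability-zone-name": "nova",
        "hypervisor-type": "KVM",
        "operational-status": "Active",
    },
)

# Sending is left to the caller, e.g. urllib.request.urlopen(az_request).
print(az_request.get_method(), az_request.full_url)
```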

Add operation user ID to AAI. Note that you will need to replace the tenant ID 087050388b204c73a3e418dd2c1fe30b and tenant name with the values you use.

curl -X PUT \
    'https://aai1:8443/aai/v11/business/customers/customer/SDN-ETHERNET-INTERNET' \
    -H 'accept: application/json' \
    -H 'cache-control: no-cache' \
    -H 'content-type: application/json' \
    -u 'AAI:AAI' \
    -d '{
        "global-customer-id": "SDN-ETHERNET-INTERNET",
        "subscriber-name": "SDN-ETHERNET-INTERNET",
        "subscriber-type": "INFRA",
        "service-subscriptions": {
            "service-subscription": [
                {
                    "service-type": "vCPE",
                    "relationship-list": {
                        "relationship": [
                            {
                                "related-to": "tenant",
                                "related-link": "/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/CloudOwner/RegionOne/tenants/tenant/087050388b204c73a3e418dd2c1fe30b",
                                "relationship-data": [
                                    {
                                        "relationship-key": "cloud-region.cloud-owner",
                                        "relationship-value": "CloudOwner"
                                    },
                                    {
                                        "relationship-key": "cloud-region.cloud-region-id",
                                        "relationship-value": "RegionOne"
                                    },
                                    {
                                        "relationship-key": "tenant.tenant-id",
                                        "relationship-value": "087050388b204c73a3e418dd2c1fe30b"
                                    }
                                ],
                                "related-to-property": [
                                    {
                                        "property-key": "tenant.tenant-name",
                                        "property-value": "Integration-SB-01"
                                    }
                                ]
                            }
                        ]
                    }
                }
            ]
        }
    }'
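Since the tenant ID appears in two places in this body and the tenant name in a third, generating the document from parameters makes it harder to update one occurrence and miss another. A minimal sketch, assuming the same structure as the curl body above; the function name is hypothetical.

```python
import json


def customer_body(tenant_id, tenant_name, cloud_owner="CloudOwner", region="RegionOne"):
    """Return the customer document from the curl call above for a given tenant."""
    tenant_link = (
        "/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/"
        f"{cloud_owner}/{region}/tenants/tenant/{tenant_id}"
    )
    relationship = {
        "related-to": "tenant",
        "related-link": tenant_link,
        "relationship-data": [
            {"relationship-key": "cloud-region.cloud-owner", "relationship-value": cloud_owner},
            {"relationship-key": "cloud-region.cloud-region-id", "relationship-value": region},
            {"relationship-key": "tenant.tenant-id", "relationship-value": tenant_id},
        ],
        "related-to-property": [
            {"property-key": "tenant.tenant-name", "property-value": tenant_name}
        ],
    }
    return {
        "global-customer-id": "SDN-ETHERNET-INTERNET",
        "subscriber-name": "SDN-ETHERNET-INTERNET",
        "subscriber-type": "INFRA",
        "service-subscriptions": {
            "service-subscription": [
                {
                    "service-type": "vCPE",
                    "relationship-list": {"relationship": [relationship]},
                }
            ]
        },
    }


# Sample values from this page; replace them with your own tenant ID and name.
body = customer_body("087050388b204c73a3e418dd2c1fe30b", "Integration-SB-01")
print(json.dumps(body, indent=2))
```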

ssh to the SO VM and do the following

- Enter the mso docker: docker exec -it testlab_mso_1 bash

- Inside the docker, execute: update-ca-certificates -f

- Edit /etc/mso/config.d/mso.bpmn.urn.properties, find the following line

mso.workflow.default.aai.v11.tenant.uri=/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/CloudOwner/DFW/tenants/tenant

and change it to the following

mso.workflow.default.aai.v11.tenant.uri=/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/CloudOwner/RegionOne/tenants/tenant

- Exit from the docker
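The property change above (DFW replaced by RegionOne in the tenant URI) can be applied to the file contents with a few lines of code if you prefer not to edit it by hand. The sketch below works on text only and leaves reading and writing the file to the caller; the function name and the `some.other.key` line in the demo are hypothetical.

```python
TENANT_URI_KEY = "mso.workflow.default.aai.v11.tenant.uri"


def set_tenant_region(properties_text, region="RegionOne", cloud_owner="CloudOwner"):
    """Return the properties text with the tenant URI pointing at the given region."""
    new_value = (
        "/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/"
        f"{cloud_owner}/{region}/tenants/tenant"
    )
    lines = []
    for line in properties_text.splitlines(keepends=True):
        key = line.split("=", 1)[0].strip()
        if key == TENANT_URI_KEY:
            # Replace the whole line but keep its trailing newline, if any.
            ending = "\n" if line.endswith("\n") else ""
            line = f"{TENANT_URI_KEY}={new_value}{ending}"
        lines.append(line)
    return "".join(lines)


before = (
    "some.other.key=value\n"
    "mso.workflow.default.aai.v11.tenant.uri=/aai/v11/cloud-infrastructure/"
    "cloud-regions/cloud-region/CloudOwner/DFW/tenants/tenant\n"
)
after = set_tenant_region(before)
print(after)
```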

ssh to the SDNC VM and do the following:

- Add a route: ip route add 10.3.0.0/24 via 10.0.101.10 dev eth0

- Enter the sdnc controller docker: docker exec -it sdnc_controller_container bash

In the container, run the following to create IP address pool: /opt/sdnc/bin/addIpAddresses.sh VGW 10.5.0 22 250

Deploy Infrastructure

Download and modify automation code

I have developed a python program to automate the deployment. The code will be added to our gerrit repo later. For now you can download from this link: vcpe.zip

Unzip it and then modify vcpecommon.py. You will need to enter your cloud and network information into the following two dictionaries.

cloud = {

'--os-auth-url': 'http://10.12.25.2:5000',

'--os-username': 'kxi',

'--os-user-domain-id': 'default',

'--os-project-domain-id': 'default',

'--os-tenant-id': '087050388b204c73a3e418dd2c1fe30b',

'--os-region-name': 'RegionOne',

'--os-password': 'yourpassword',

'--os-project-domain-name': 'Integration-SB-01',

'--os-identity-api-version': '3'

}

common_preload_config = {

'oam_onap_net': 'oam_onap_oTA1',

'oam_onap_subnet': 'oam_onap_oTA1',

'public_net': 'external',

'public_net_id': '971040b2-7059-49dc-b220-4fab50cb2ad4'

}
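The keys of the cloud dictionary have the same names as global options of the openstack command-line client, so one plausible use is to flatten the dictionary into an argument list. How vcpe.zip actually consumes the dictionary is not shown on this page; the sketch below is an assumption for illustration, with a hypothetical function name and a shortened dictionary.

```python
def openstack_command(cloud, *subcommand):
    """Flatten an option dictionary into an argument list for the openstack CLI."""
    args = ["openstack"]
    for option, value in cloud.items():
        args.extend([option, value])
    args.extend(subcommand)
    return args


# Shortened sample; use the full dictionary from vcpecommon.py in practice.
sample_cloud = {
    "--os-auth-url": "http://10.12.25.2:5000",
    "--os-username": "kxi",
    "--os-region-name": "RegionOne",
    "--os-identity-api-version": "3",
}

command = openstack_command(sample_cloud, "stack", "list")
print(" ".join(command))
```

The resulting list can be passed to subprocess.run once the password and the remaining options are filled in.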

Run automation program to deploy services

Sign into SDC as designer and download five csar files for infra, vbng, vgmux, vbrg, and rescust. Copy all the csar files to directory csar.

Now you can simply run 'vcpe.py' to see the instructions.

To get ready for service deployment, first run 'vcpe.py init'. This will modify the SO and SDNC databases to add service-related information.

Once it is done, run 'vcpe.py infra'. This will deploy the following services. It may take 7-10 minutes to complete depending on the cloud infrastructure.

- Infra

- vBNG

- vGMUX

- vBRG

If the deployment succeeds, you will see a summary of the deployment from the program.

Validate deployed VNFs

By now you will be able to see 7 VMs in Horizon. However, this does not mean all the VNFs are functioning properly. In many cases we found that a VNF may need to be restarted multiple times to make it function properly. We perform validation as follows:

- Run healthcheck.py. It checks for three things:

  - vGMUX honeycomb server is running

  - vBRG honeycomb server is running

  - vBRG has obtained an IP address and its MAC/IP data has been captured by SDNC

If this healthcheck passes, then skip the following and start to deploy customer service. Otherwise do the following and redo healthcheck.

- If vGMUX check does not pass, restart vGMUX, make sure it can be connected using ssh.

- If vBRG check does not pass, restart vBRG, make sure it can be connected using ssh.
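After restarting a VNF, a quick way to see whether it is reachable again is to test whether its SSH port accepts TCP connections. The following sketch is not what healthcheck.py does; it is a hypothetical helper that only checks that the port is open, not that a login would succeed.

```python
import socket


def ssh_port_open(host, port=22, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `ssh_port_open("10.12.5.214")` would probe the sdnc address from the sample hosts list; substitute the address of your vGMUX or vBRG VM.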

(Please note that the four VPP-based VNFs (vBRG, vBNG, vGMUX, and vGW) were developed by the ONAP community on a tight schedule. We are aware that vBRG may not be stable and sometimes needs to be restarted multiple times to get it to work. The team is investigating the problem and hopes to improve it in the near future. Your patience is appreciated.)

Deploy Customer Service and Test Data Plane

After passing healthcheck, we can deploy customer service by running 'vcpe.py customer'. This will take around 3 minutes depending on the cloud infrastructure. Once finished, the program will print the next few steps to test data plane connection from the vBRG to the web server. If you check Horizon you should be able to see a stack for vgw created a moment ago.

Tips for troubleshooting:

- There could be situations where the vGW is not fully functioning and cannot be reached using ssh. Try restarting the VM to solve this problem.

- isc-dhcp-server is supposed to be installed on vGW after it is instantiated. But it could happen that the server is not properly installed. If this happens, you can simply ssh to the vGW VM and manually install it with 'apt install isc-dhcp-server'.
