Overview

Prerequisites for OpenStack

- At least 140 GB of RAM and around 60 CPU cores in total across all compute nodes

- Keystone v2 authentication is required

- Ability to create a 100 GB Cinder volume in the OpenStack cloud

Installing ONAP in vanilla OpenStack from the command line is very similar to installing ONAP in Rackspace (see Tutorial: Configuring and Starting Up the Base ONAP Stack). The Heat templates that install ONAP in Rackspace and vanilla OpenStack are similar too. The main difference is the way resource-intensive VMs are defined. Unlike OpenStack, Rackspace requires a local disk to be created explicitly for memory- or CPU-intensive VMs.

Currently, there are three different Heat templates for ONAP installation in vanilla OpenStack:

- onap_openstack.yaml / onap_openstack.env: ONAP VMs created out of this template have one vNIC. The vNIC has a private IP towards the Private Management Network and a floating IP. The floating IP addresses are assigned by OpenStack during ONAP installation, based on floating IP availability. This template can be used when users do not know the set of floating IPs assigned to them or do not have OpenStack admin rights.

- onap_openstack_float.yaml / onap_openstack_float.env: ONAP VMs created out of this template have one vNIC. The vNIC has a private IP towards the Private Management Network and a floating IP. The floating IP addresses are specified ahead of time in the environment file. This template can be used when users always want to assign the same floating IP to an ONAP VM across different ONAP installations. NOTES: 1) Running this template requires OpenStack admin rights; 2) Fixed floating IP assignment via Heat template is not supported in OpenStack Kilo and older releases.

- onap_openstack_nofloat.yaml / onap_openstack_nofloat.env: ONAP VMs created out of this template have two vNICs: one has a private IP towards the Private Management Network and the other one has a public IP address towards the external network. From a network resource perspective, this template is similar to the Heat template used to build ONAP in Rackspace.

All templates above support installation of the DCAE data collection and analytics platform. With onap_openstack.yaml / onap_openstack.env and onap_openstack_float.yaml / onap_openstack_float.env, you must manually allocate five (5) floating IP addresses to your OpenStack project. To allocate those addresses, from the OpenStack Horizon dashboard, click on Compute -> Access & Security -> Floating IPs. Then click “Allocate IP To Project” five times. OpenStack will assign five IPs to your project. Then assign the allocated floating IPs to the following VMs defined in the Heat environment file:

dcae_coll_float_ip

dcae_hdp1_float_ip

dcae_hdp2_float_ip

dcae_hdp3_float_ip

dcae_db_float_ip

At run time, the DCAE controller will fetch those IPs from the underlying OpenStack platform and assign them to the VMs defined above. The "dcae_base_environment" parameter is set to 1-NIC-FLOATING-IPS.

For onap_openstack_nofloat.yaml / onap_openstack_nofloat.env no floating IP address allocation is required. OpenStack will assign public addresses to the DCAE VMs based on availability. In onap_openstack_nofloat.env, the "dcae_base_environment" parameter is set to 2-NIC.

Note that the DCAE controller handles only one set of IP addresses, either floating IPs or private IPs. As such, in the onap_openstack.yaml and onap_openstack_float.yaml templates, which use floating IPs, the private IP addresses assigned to the five additional VMs that DCAE creates off the Heat template are not considered. OpenStack will assign them five private IPs in the Private Management Network based on availability. More details about the DCAE setup are available here: DCAE Controller Development Guide.
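The relationship between the chosen template, the "dcae_base_environment" value, and the floating IPs that must be allocated by hand can be summarized in a small lookup. This is only an illustrative sketch: the names DCAE_SETTINGS, DCAE_FLOAT_PARAMS and dcae_settings_for are hypothetical and are not part of the ONAP demo repository.

```python
# Hypothetical lookup summarizing the text above; not part of ONAP itself.
DCAE_FLOAT_PARAMS = [
    "dcae_coll_float_ip",
    "dcae_hdp1_float_ip",
    "dcae_hdp2_float_ip",
    "dcae_hdp3_float_ip",
    "dcae_db_float_ip",
]

# Template name -> value of the "dcae_base_environment" parameter.
DCAE_SETTINGS = {
    "onap_openstack.yaml": "1-NIC-FLOATING-IPS",
    "onap_openstack_float.yaml": "1-NIC-FLOATING-IPS",
    "onap_openstack_nofloat.yaml": "2-NIC",
}


def dcae_settings_for(template):
    """Return (dcae_base_environment, floating IP parameters to pre-allocate)."""
    base_env = DCAE_SETTINGS[template]
    needed = DCAE_FLOAT_PARAMS if base_env == "1-NIC-FLOATING-IPS" else []
    return base_env, list(needed)


if __name__ == "__main__":
    for name in DCAE_SETTINGS:
        env, ips = dcae_settings_for(name)
        print(f"{name}: dcae_base_environment={env}, floating IPs to allocate={len(ips)}")
```

With the two-NIC template the list of floating IPs to allocate is empty, which matches the statement that no floating IP allocation is required in that case.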

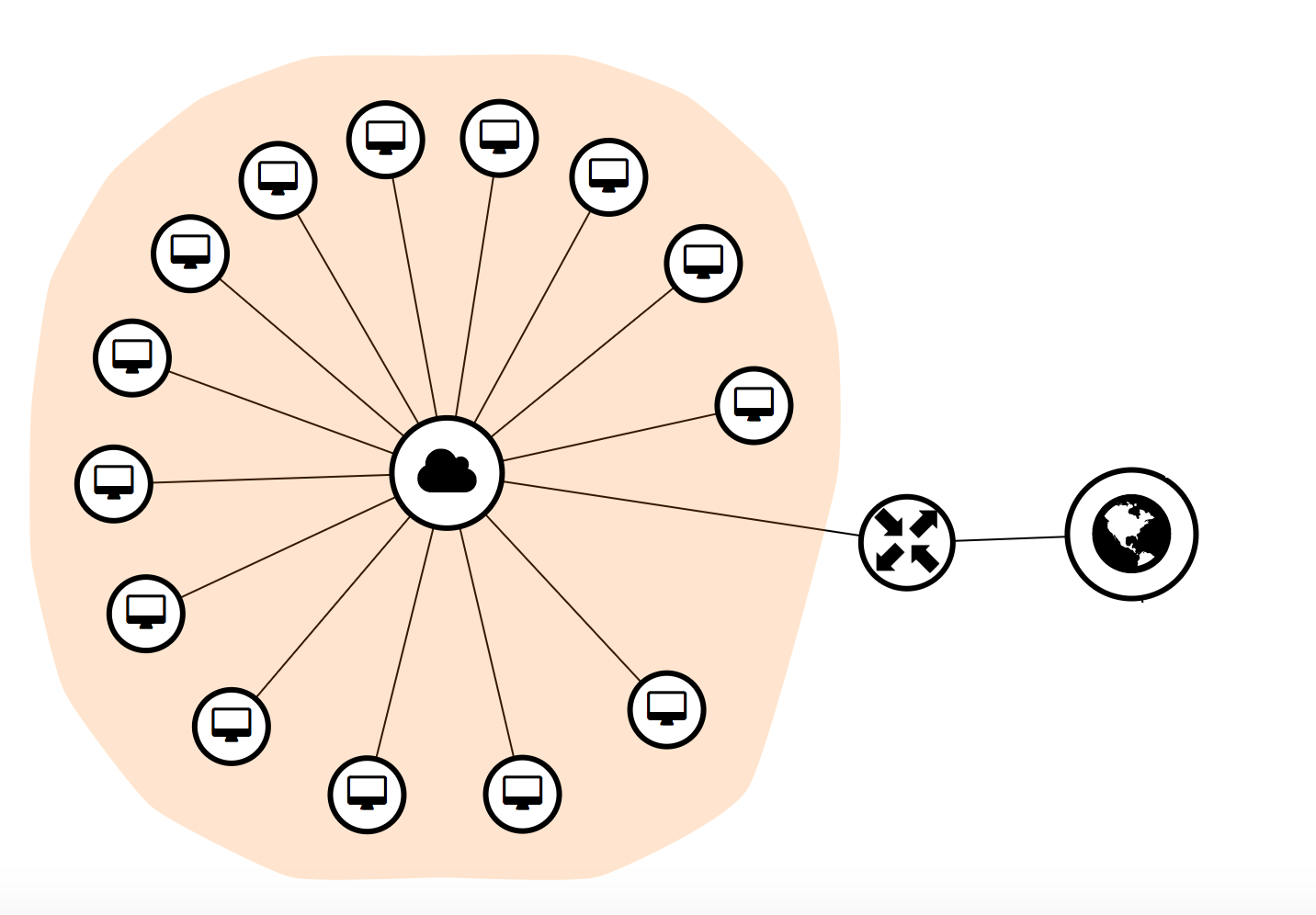

The figure above shows the network setup created with onap_openstack_float.yaml / onap_openstack_float.env. As described above, ONAP VMs have a private IP address in the ONAP Private Management Network space and use floating IP addresses for remote access and connection to Gerrit and Nexus repositories. A router that connects the ONAP Private Management Network to the external network is also created.

Heat Template

In this section, we describe the structure of onap_openstack_float.yaml, which assigns fixed floating IPs to ONAP VMs. However, because the different Heat templates have a similar structure (except for the network setup), the same considerations apply also to onap_openstack.yaml and onap_openstack_nofloat.yaml.

The Heat template contains the parameters and resources (i.e. vCPUs, vNICs, RAM, local and external volumes, ...) necessary to build ONAP components. After ONAP VMs have been created, a post-instantiation bash script that installs software dependencies is run in every VM. In release 1.0.0, this script was part of the Heat template. Since release 1.1.0, the bash script has been simplified: it only contains the operations necessary to pass configuration parameters to a VM, while the logic that downloads software dependencies and sets up the execution environment has been moved to a new bash script that is downloaded from Nexus.

The rationale behind this change is that ONAP will need to support multiple platforms (currently it supports Rackspace and vanilla OpenStack), which may require different Heat templates, while a VM installation script will remain unchanged. This avoids having to change a script multiple times, once for each Heat template.

Find below an example of the simplified post-instantiation bash script for MSO, with the list of all the configuration parameters passed to the VM and available in /opt/config:

The post-instantiation bash script downloads (from Nexus) and runs an install script, mso_install.sh in the example above, which installs software dependencies like Git, Java, NTP daemon, docker-engine, docker-compose, etc. Finally, the install script downloads (from Nexus) and runs an init script, e.g. mso_vm_init.sh, which downloads and runs docker images. The rationale for having two bash scripts is that the install script runs only once, after a VM is created, while the init script runs after every VM (re)boot to pick up the latest changes in Gerrit and download and run the latest docker image for a specific ONAP component.

Heat Environment File

In order to correctly install ONAP in vanilla OpenStack, it is necessary to set the following parameters in the Heat environment file, based on the specific OpenStack environment:

- public_net_id: PUT YOUR NETWORK ID/NAME HERE

- ubuntu_1404_image: PUT THE UBUNTU 14.04 IMAGE NAME HERE

- ubuntu_1604_image: PUT THE UBUNTU 16.04 IMAGE NAME HERE

- flavor_small: PUT THE SMALL FLAVOR NAME HERE

- flavor_medium: PUT THE MEDIUM FLAVOR NAME HERE

- flavor_large: PUT THE LARGE FLAVOR NAME HERE

- flavor_xlarge: PUT THE XLARGE FLAVOR NAME HERE

- pub_key: PUT YOUR PUBLIC KEY HERE

- openstack_tenant_id: PUT YOUR OPENSTACK PROJECT ID HERE

- openstack_username: PUT YOUR OPENSTACK USERNAME HERE

- openstack_api_key: PUT YOUR OPENSTACK PASSWORD HERE

- horizon_url: PUT THE HORIZON URL HERE

- keystone_url: PUT THE KEYSTONE URL HERE

- dns_list: PUT THE ADDRESS OF THE EXTERNAL DNS HERE (e.g. a comma-separated list of IP addresses in your /etc/resolv.conf in UNIX-based Operating Systems)

- external_dns: PUT THE FIRST ADDRESS OF THE EXTERNAL DNS LIST HERE

- aai1_float_ip: PUT A&AI INSTANCE 1 FLOATING IP HERE

- aai2_float_ip: PUT A&AI INSTANCE 2 FLOATING IP HERE

- appc_float_ip: PUT APP-C FLOATING IP HERE

- dcae_float_ip: PUT DCAE FLOATING IP HERE

- dcae_coll_float_ip: PUT DCAE COLLECTOR FLOATING IP HERE

- dcae_db_float_ip: PUT DCAE DATABASE FLOATING IP HERE

- dcae_hdp1_float_ip: PUT DCAE HADOOP VM1 FLOATING IP HERE

- dcae_hdp2_float_ip: PUT DCAE HADOOP VM2 FLOATING IP HERE

- dcae_hdp3_float_ip: PUT DCAE HADOOP VM3 FLOATING IP HERE

- dns_float_ip: PUT DNS FLOATING IP HERE

- mso_float_ip: PUT MSO FLOATING IP HERE

- mr_float_ip: PUT MESSAGE ROUTER FLOATING IP HERE

- policy_float_ip: PUT POLICY FLOATING IP HERE

- portal_float_ip: PUT PORTAL FLOATING IP HERE

- robot_float_ip: PUT ROBOT FLOATING IP HERE

- sdc_float_ip: PUT SDC FLOATING IP HERE

- sdnc_float_ip: PUT SDN-C FLOATING IP HERE

- vid_float_ip: PUT VID FLOATING IP HERE

Note:

A&AI now has two (2) instances. One has the docker containers that run the A&AI logic and one has databases and third-party software dependencies.

Getting ENV options

Image list

$ glance image-list | grep buntu

| c60d00b6-d03e-492b-9eed-e6580b438619 | Ubuntu_16.04_xenial |

Instance flavors

$ openstack flavor list | grep onap

| 123 | onap_small | 4192 | 0 | 0 | 1 | True |

| 124 | onap_medium | 8192 | 0 | 0 | 1 | True |

| 125 | onap_large | 8192 | 0 | 0 | 2 | True |

| 126 | onap_xlarge | 16192 | 0 | 0 | 2 | True |

List floating ips

$ openstack floating ip list

+--------------------------------------+---------------------+------------------+------+--------------------------------------+----------------------------------+

| ID | Floating IP Address | Fixed IP Address | Port | Floating Network | Project |

+--------------------------------------+---------------------+------------------+------+--------------------------------------+----------------------------------+

| 03fffffff0-07f5-4172-a71d-fb1394e1f7b4 | 44.253.254.255 | None | None | fffffff-59f0-415c-880d-d05ec86ff8f0 | fffffc6575bda4525b49433a38e697e22 | etc...

Public network

$ openstack network list | grep public

| b4f41afd-1f08-47f8-851b-ca536bffffff | public | d4902035-2d4c-40cc-8a05-e916401fffff |

Note:

Be careful not to use the private address space 172.18.0.0/16 for the setup of vanilla OpenStack and/or the provider network and the floating addresses therein.

Some containers create a route in the hosting VM from 172.18.0.0/16 to a br-<some-hex-string> bridge, which means these containers cannot connect to any other VM and/or container in the 172.18.0.0/16 space. DCAE might be the most prominent victim of that. See also "Undocumented network 172.18.0.0/16 in some ONAP VMs - can it be re-configured" in https://wiki.onap.org/questions
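A quick way to check a planned network or address against the 172.18.0.0/16 range mentioned in this note is Python's standard ipaddress module. The sketch below is an illustration only; the helper name conflicts_with_container_bridge is hypothetical.

```python
import ipaddress

# Range that some ONAP containers route to a local bridge (see the note above).
RESERVED_RANGE = ipaddress.ip_network("172.18.0.0/16")


def conflicts_with_container_bridge(cidr_or_ip):
    """Return True if the given network or single address overlaps 172.18.0.0/16."""
    # strict=False lets a plain address such as "172.18.0.7" be read as a /32 network.
    network = ipaddress.ip_network(cidr_or_ip, strict=False)
    return network.overlaps(RESERVED_RANGE)


if __name__ == "__main__":
    for candidate in ["10.0.0.0/8", "172.16.0.0/12", "172.18.5.0/24"]:
        print(candidate, conflicts_with_container_bridge(candidate))
```

Note that a wider range such as 172.16.0.0/12 also overlaps, because it contains 172.18.0.0/16.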

Note:

Neutron may require that the dns_nameservers property of an OS::Neutron::Subnet resource be explicitly set, in order to allow VMs in the subnet to access the external network. dns_nameservers is a list of DNS IP addresses. However, some OpenStack installations do not support lists in the "user_data" section of a Heat template, which is used to build configuration files that are used during ONAP installation. As such, we define two different parameters: 1) dns_list is a list of DNS IP addresses that is provided to Neutron; 2) external_dns is a string that contains one of the DNS IP addresses in the list and can be used in the "user_data" section. In many cases, when only one DNS address is provided, these two parameters will be set to the same value.
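The split between the two DNS parameters can be sketched as follows. This is a minimal illustration, assuming the comma-separated format given for dns_list in the parameter list above; the function name dns_parameters and the sample addresses are hypothetical.

```python
def dns_parameters(nameservers):
    """Build the two DNS-related values from one list of DNS IP addresses.

    dns_list     -> comma-separated string of all addresses (provided to Neutron)
    external_dns -> the first address only (usable in the "user_data" section)
    """
    if not nameservers:
        raise ValueError("at least one DNS address is required")
    return {
        "dns_list": ",".join(nameservers),
        "external_dns": nameservers[0],
    }


if __name__ == "__main__":
    # Sample addresses for illustration only.
    print(dns_parameters(["8.8.8.8", "8.8.4.4"]))
    print(dns_parameters(["8.8.8.8"]))
```

With a single DNS address, both values come out identical, which is the common case described in the note.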

All the other parameters in the environment file are set and can be left untouched.

Note that the IP addresses in the ONAP Private Management Network are also set. They can be changed at will, provided that they remain consistent with the private network CIDR.

- oam_network_cidr: 10.0.0.0/8

- aai1_ip_addr: 10.0.1.1

- aai2_ip_addr: 10.0.1.2

- appc_ip_addr: 10.0.2.1

- dcae_ip_addr: 10.0.4.1

- dns_ip_addr: 10.0.100.1

- mso_ip_addr: 10.0.5.1

- mr_ip_addr: 10.0.11.1

- policy_ip_addr: 10.0.6.1

- portal_ip_addr: 10.0.9.1

- robot_ip_addr: 10.0.10.1

- sdc_ip_addr: 10.0.3.1

- sdnc_ip_addr: 10.0.7.1

- vid_ip_addr: 10.0.8.1
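If the private addresses are changed, their consistency with oam_network_cidr can be checked before creating the stack. The following sketch uses Python's standard ipaddress module; the helper name addresses_outside_cidr is hypothetical, and the values are taken from the list above.

```python
import ipaddress


def addresses_outside_cidr(cidr, addresses):
    """Return the parameter names whose IP address is not inside the given CIDR."""
    network = ipaddress.ip_network(cidr)
    return sorted(
        name
        for name, ip in addresses.items()
        if ipaddress.ip_address(ip) not in network
    )


if __name__ == "__main__":
    private_ips = {
        "aai1_ip_addr": "10.0.1.1",
        "dns_ip_addr": "10.0.100.1",
        "vid_ip_addr": "10.0.8.1",
    }
    # An empty list means every address is consistent with the CIDR.
    print(addresses_outside_cidr("10.0.0.0/8", private_ips))
```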

Finally, the Heat environment file contains some DCAE-specific parameters. Some of them are worth mentioning:

- dcae_base_environment: 1-NIC-FLOATING-IPS

- dcae_zone, dcae_state: The location in which DCAE is deployed

- openstack_region: The OpenStack Region in which DCAE is deployed (also used by MSO)

dcae_base_environment specifies the DCAE networking configuration and shouldn't be modified (see above for a description of the DCAE network configuration, i.e. 1-NIC-FLOATING-IPS vs. 2-NIC). openstack_region must reflect the OpenStack OS_REGION_NAME environment variable (please refer to Tutorial: Configuring and Starting Up the Base ONAP Stack for cloud environment variables), while dcae_zone and dcae_state can contain any meaningful location information that helps the user distinguish between different DCAE deployments. For example, if an instance of DCAE is deployed in a data center in New York City, the two parameters can assume the following values:

- dcae_zone: nyc01

- dcae_state: ny

How to use both v2 and v3 OpenStack Keystone API

As some ONAP components still use the v2 Keystone API, you should have both v2 and v3 configured in your environment. This means that the endpoint URLs must not have any version at the end.
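To illustrate what an endpoint URL "without any version at the end" looks like, the sketch below strips a trailing version segment such as /v2.0 or /v3. The helper name unversioned_endpoint and the example host name are hypothetical; this only manipulates strings and does not talk to Keystone.

```python
import re

# Matches a trailing version segment such as "/v2.0", "/v3" or "/v3/".
_VERSION_SUFFIX = re.compile(r"/v\d+(\.\d+)?/?$")


def unversioned_endpoint(url):
    """Return the URL with a trailing Keystone version segment removed."""
    return _VERSION_SUFFIX.sub("", url)


if __name__ == "__main__":
    for url in [
        "http://keystone.example:5000/v2.0",
        "http://keystone.example:5000/v3/",
        "http://keystone.example:5000",
    ]:
        print(url, "->", unversioned_endpoint(url))
```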

If you have already installed the Keystone identity component and created endpoints, you can modify them directly in MariaDB. To do this, run the following commands (id should refer to the three endpoints you have created: internal, public, and admin):

107 Comments

arvind sharma

hi Josef Reisinger,

Kindly let me know if the ONAP Installation can be done on Vanilla OpenStack. Is this dependent on Base ONAP stack installation?

Regards

Josef Reisinger

Hi arvind sharma,

in theory yes, and I think there are some people on the list who have successfully done this. I would really like to learn how "complex" this exercise was. I have a setup on Ocata; unfortunately I have some issues getting the docker containers to run correctly.

See for example my current baby, the SDC: SDC - sdc-BE startup failed

Amit Gupta

Can I install ONAP components without docker containers?

I have tried to install using the OpenStack VMs but failed to install 8 components.

Story:

I am installing ONAP using the VMs, without docker. I am not using a docker VM as such for the ONAP installation (I ran the latest 1.2.0 snapshot artifact, faced an issue related to docker, so I moved to an older version and tried to install the ONAP components using VMs).

I am running the env and Heat template with the August release artifact 1.1.0 snapshot. The 8 VMs below are not coming up and the ONAP installation fails with a create failure.

dcae_coll_float_ip: 192.168.30.153

dcae_db_float_ip: 192.168.30.150

dcae_hdp1_float_ip: 192.168.30.170

dcae_hdp2_float_ip: 192.168.30.211

dcae_hdp3_float_ip: 192.168.30.212

sdc_float_ip: 192.168.30.202

policy_float_ip: 192.168.30.162

portal_float_ip: 192.168.30.164

I have pasted the links for the env and Heat template files.

Heat template:

https://gerrit.onap.org/r/gitweb?p=demo.git;a=blob;f=heat/OpenECOMP/onap_openstack_float.yaml;h=024dd2f90a2f36b09497a5d93dda52ae58294dc9;hb=refs/heads/master

Env file creation link for floating ip mentioned in the env file:

https://gerrit.onap.org/r/gitweb?p=demo.git;a=blob;f=heat/OpenECOMP/onap_openstack_float.env;h=1dfc7f81eec1985aa20c8bd36a87d3ae34d0f361;hb=refs/heads/master

Please help with how to do this.

Thanks

Andreas Geissler

Hi all,

could someone explain why each created VM requires its own floating IP address?

As a router to the external network is created, the VMs have access to the Internet and the Git repositories, but why is access from outside to each VM required?

Regards

Andreas

Marco Platania

Andreas,

At this time, some ONAP components in vanilla OpenStack require floating (or public) IPs for internal configuration. As for access to VMs from an external location, this is mainly done for test and debug purposes.

Marco

Andreas Geissler

Hi Marco,

I tried to install the ONAP 1.1.0 with the fixed floating IPs, but faced another problem.

The installation can only work if the OpenStack user has administrative rights, which is not the case in our test environment.

So I think it would be better to let the user choose whether a fixed or a randomly chosen floating IP is used.

I removed the fixed ones from the YAML, but the DCAE deployment still fails (Hadoop VMs...).

Andreas

Manish Sharma

Marco,

As you said, the floating IPs are only for accessing VMs to enable test and debug. So we should be able to skip assigning any floating IP during installation and attach a floating IP later to the VM that needs debugging. This way we could avoid this configuration until necessary and also skip this prerequisite (a pool of floating IPs).

Regards

Marco Platania

Hi Manish,

That is technically true, but not for DCAE. The DCAE controller maintains those IPs in the internal state to keep track of the 5 additional VMs that it spins up.

Marco

RAMANJANEYUL AKULA

It looks like there is a bug in MSO cloud_config.json reloading.

If we make any changes to the JSON file, it is not loaded properly.

To overcome that issue we need to edit the docker init config file, /opt/test_lab/volumes/mso/chef-config/mso-docker.json, and edit the cloud config params as needed. For example, the identity_service_id in the cloud_sites was pointing to RACK_SPACE whereas the actual ID in the identity_services is set to DEFAULT_KEYSTONE. Because of this problem MSO was not able to load the cloud config.

To fix this problem, one needs to edit the file /opt/test_lab/volumes/mso/chef-config/mso-docker.json and correct the identity_service_id.

After that, restart the docker container by using the command: "docker restart testlab_mso_1"

Snap of the correct config:

"mso-po-adapter-config": {

"checkrequiredparameters": "true",

"cloud_sites": [

{

"aic_version": "2.5",

"id": "Dallas",

"identity_service_id": "DEFAULT_KEYSTONE",

"lcp_clli": "DFW",

"region_id": "DFW"

},

{

"aic_version": "2.5",

"id": "Northern Virginia",

"identity_service_id": "DEFAULT_KEYSTONE",

"lcp_clli": "IAD",

"region_id": "IAD"

},

{

"aic_version": "2.5",

"id": "Chicago",

"identity_service_id": "DEFAULT_KEYSTONE",

"lcp_clli": "ORD",

"region_id": "ORD"

}

],

"identity_services": [

{

"admin_tenant": "service",

"dcp_clli": "DEFAULT_KEYSTONE",

"identity_authentication_type": "USERNAME_PASSWORD",

"identity_server_type": "KEYSTONE",

"identity_url": "http://10.203.107.104:5000/v2.0",

"member_role": "admin",

"mso_id": "tools",

"mso_pass": "197f79bfd3c750fcaad07c6816bc82c9",

"tenant_metadata": "true"

}

],

How to test it:

curl http://localhost:8080/networks/rest/cloud/showConfig
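The mismatch described in this comment can also be detected before restarting the container. The sketch below is only an illustration: it assumes, based on the snippet above, that an identity service is identified by its "dcp_clli" field and that the contents of "mso-po-adapter-config" have already been loaded into a dictionary. The function name dangling_identity_refs is hypothetical.

```python
def dangling_identity_refs(adapter_config):
    """Return ids of cloud sites whose identity_service_id matches no identity service.

    Assumption: identity services are keyed by "dcp_clli", as in the snippet above.
    """
    known = {svc["dcp_clli"] for svc in adapter_config.get("identity_services", [])}
    return [
        site["id"]
        for site in adapter_config.get("cloud_sites", [])
        if site["identity_service_id"] not in known
    ]


if __name__ == "__main__":
    # Reduced example showing the broken state described in the comment.
    broken = {
        "cloud_sites": [
            {"id": "Dallas", "identity_service_id": "RACK_SPACE"},
            {"id": "Chicago", "identity_service_id": "DEFAULT_KEYSTONE"},
        ],
        "identity_services": [{"dcp_clli": "DEFAULT_KEYSTONE"}],
    }
    print(dangling_identity_refs(broken))
```

An empty result means every cloud site points at an identity service that exists in the same configuration.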

Marco Platania

Ramu,

Could you please raise this issue in the mailing list? This way, MSO team can be notified more quickly and look at it.

Thanks

RAMANJANEYUL AKULA

Marco, the MSO team helped me here. I just want to share the workaround with the rest of the team who are facing this problem. Hence the comment.

Marco Platania

Hello Ramu,

This problem has now been fixed. The MSO VM is expected to come up with the correct OpenStack configuration automatically, no manual intervention required. Note that changes above fix the issue only partially. Parts of the cloud_sites objects, such as region names and IDs, still reflect the Rackspace environment. The new code merge in the demo repo solves this issue.

Marco

Viswanath KSP

Hello,

I'm a newbie to ONAP and I'm trying to install ONAP following the instructions @ https://nexus.onap.org/content/sites/raw/org.openecomp.demo/README.md . Currently I'm stuck with error :

Does the OpenStack client need "Admin" privileges in order to instantiate ONAP?

BR,

Viswa

David Rhodes

Make sure all your OS_env vars are set correctly, and that there aren't any extra. At one point I had the OS_PROJECT_ID (iirc) set to an old value (from prior startup) and got this error. Basically, just set the ones as described in the Base ONAP setup (you don't need the project id, but I was experimenting).

Marco Platania

No it doesn't. But you need to authenticate with the OpenStack platform first. Please look at section "Create a Script to Configure the Local Environment" here: Tutorial: Configuring and Starting Up the Base ONAP Stack

Viswanath KSP

Hi Marco,

Yes I did that. Still I'm hitting the same error. Googling the error, many have suggested to set admin token v.z

That's where I got puzzled whether Admin rights are necessary for ONAP installation.

Andreas Geissler

Hi Marco,

as I already mentioned above, you need at least the enhanced rights to reserve floating IP addresses (floating_ip_address) from your pool.

At least in our environment, this requires administrative rights, which we currently do not have. See:

https://docs.openstack.org/developer/heat/template_guide/openstack.html#OS::Neutron::FloatingIP-prop-floating_ip_address

Best regards

Andreas

Marco Platania

Hi Andreas,

We plan to have a similar template soon, which however doesn't require floating IPs preallocation. It uses two NICs, one towards the private network and one towards the external network. This scenario is similar to the one we use in Rackspace. I'll release the template soon.

Thanks

Viswanath KSP

Thanks Marco. Looking forward to it.

Marco Platania

Hi Viswanath, Andreas,

The Heat template without fixed floating IP pre-allocation has been released. It is available here: onap_openstack.yaml and onap_openstack.env

Thanks

Viswanath KSP

Hey Marco Platania,

I just used onap_openstack_nofloat.yaml and hit the error below. It looks like "Admin" access is still needed to create an interface on the external network.

Could you please take a look & let me know what I'm missing here.

BR,

Viswa

Marco Platania

Hello Viswa,

OpenStack configuration may change from deployment to deployment, as well as permissions to execute some operations. Rather than ONAP installation configuration, my guess is that you should look at the way OpenStack has been installed and setup in your lab. Perhaps you are not allowed to attach network interfaces directly to the external network.

My suggestion is to use onap_openstack.yaml, if that fits the bill. That will create a private interface and assign a floating IP to each VM. This is the standard OpenStack approach to building stacks, so it should work in your environment too.

The configuration described in onap_openstack_nofloat.yaml mimics the scenario we tested in Rackspace, but as I said before, you may need to properly configure your OpenStack lab to use it.

Marco

Viswanath KSP

Thanks Marco Platania. I requested my OpenStack team to bump user privileges. With the renewed privileges, I used onap_openstack_nofloat.yaml to create the stack. Stack creation was successful; however, for some reason, the post-installation script did not run successfully for portal-vm.

Hence I logged into portal-vm and executed the part below manually.

# Download and run install script

curl -k __nexus_repo__/org.openecomp.demo/boot/__artifacts_version__/portal_install.sh -o /opt/portal_install.sh

cd /opt

chmod +x portal_install.sh

./portal_install.sh

I had to change some lines w.r.t our environment, http_proxy for docker, dns nameserver change etc. After all these steps, I could point to portal-vm:8989 successfully. However I'm unable to locate ECOMPPORTAL/login.htm .

Could you please point to me where I have to look to troubleshoot this issue further?

I understand posting questions in this page might not be a good approach. Please advise me on how to take up this thread.

I am testing ONAP extensively and I would reach-out to this forum often to get some assistance.

BR,

Viswa

Marco Platania

Hello Viswa,

I'm glad to see that you managed to install ONAP. To get support from the community, you should subscribe to the discuss mailing list, as described here: Mailing Lists

You can also post your question in this forum, much like you would do in stackoverflow: https://wiki.onap.org/display/DW/questions/all

Regarding the issues with Portal, have you added Portal, VID, SDC, and Policy IP addresses to your /etc/hosts file? This file should look like:

104.130.31.32 portal.api.simpledemo.openecomp.org

104.239.249.76 policy.api.simpledemo.openecomp.org

104.239.249.58 sdc.api.simpledemo.openecomp.org

104.130.12.222 vid.api.simpledemo.openecomp.org

When done, you should be able to reach portal at: portal.api.simpledemo.openecomp.org:8989/ECOMPPORTAL/login.htm

Note that the FQDN is required instead of the IP address.
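The /etc/hosts lines shown above follow a simple pattern and can be generated from the IP addresses in your own environment. The sketch below is an illustration only; the function name hosts_entries is hypothetical, and the IP addresses are the sample values from this comment.

```python
def hosts_entries(ip_by_component, domain="api.simpledemo.openecomp.org"):
    """Return /etc/hosts lines of the form '<ip> <component>.<domain>'."""
    return [f"{ip} {name}.{domain}" for name, ip in ip_by_component.items()]


if __name__ == "__main__":
    sample = {
        "portal": "104.130.31.32",
        "policy": "104.239.249.76",
        "sdc": "104.239.249.58",
        "vid": "104.130.12.222",
    }
    print("\n".join(hosts_entries(sample)))
```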

Marco

Viswanath KSP

Thanks Marco Platania . Yes I had added above lines ( with IPs as per my environment), still I'm facing same issue.

I have posted more details at Unable to access ECOMPPORTAL . Please take a look at the exception logs and advise me how to proceed.

BR,

Viswa

Viswanath KSP

Hi Marco Platania,

Is there any update on my query. I'm literally stuck here and would appreciate if you could help me.

BR,

Viswa

Marco Platania

Manoop Talasila, could somebody from your team help here?

Thanks,

Marco

Gaurav Mittal

Hi,

I am getting a problem where portal_install is giving the error below. ONAP stack creation was successful on an installed vanilla OpenStack. Kindly help.

Gaurav Mittal

Can you kindly share what was the contents of this script. In mine it shows as:

ubuntu@vm1-portal:/opt$ cat portal_install.sh

<html>

<head><title>504 Gateway Time-out</title></head>

<body bgcolor="white">

<center><h1>504 Gateway Time-out</h1></center>

<hr><center>nginx/1.12.0</center>

</body>

</html>

ubuntu@vm1-portal:/opt$

Antony Prabhu M

Some of the packages are not downloaded properly, i believe. You may need to run the install script inside each VM and then try xxxx_install.sh

Gaurav Mittal

Thanks a lot Antony for the reply. Can you kindly guide me where can i find this install script inside the VM and what is the script name.

Antony Prabhu M

For portal VM, you can re-run the below script in the VM once again...(it can be captured from heat xxx.yaml files)

# Download and run install script

curl -k __nexus_repo__/org.onap.demo/boot/__artifacts_version__/portal_install.sh -o /opt/portal_install.sh

cd /opt

chmod +x portal_install.sh

./portal_install.sh

If the internet connection is bad, some of the artifacts won't be downloaded properly. Hence you may need to re-run it until it succeeds.

If the install script succeeds, all the docker containers will be running without any issues.
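A failed download can leave an HTML error page in place of the script, as in the 504 output quoted earlier in this thread. A simple heuristic check of the downloaded file, sketched below, can catch that case before the file is executed. The function name is_html_error_page is hypothetical, and the check only looks at how the file starts.

```python
def is_html_error_page(text):
    """Heuristic: True if the content starts like an HTML page rather than a script."""
    head = text.lstrip().lower()
    return head.startswith(("<html", "<!doctype html"))


if __name__ == "__main__":
    bad_download = "<html>\n<head><title>504 Gateway Time-out</title></head>\n</html>\n"
    good_download = "#!/bin/bash\necho installing\n"
    print(is_html_error_page(bad_download))
    print(is_html_error_page(good_download))
```

If the check returns True for /opt/portal_install.sh, the download should be repeated rather than running the file.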

How to verify whether my portal or other VM is running successfully?

Refer to the deployment diagram below for each VM (it indicates all the packages and DBs running inside):

Overall Deployment Architecture

On my first ONAP installation, I got one VM working per day.

Gaurav Mittal

Sir,

I did the same way you told. on executing portal_install.sh I see the error at the end as:

Reading package lists... Done

W: GPG error: https://apt.dockerproject.org ubuntu-trusty InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY F76221572C52609D

...

...

...

Building dependency tree

Reading state information... Done

docker-engine is already the newest version.

0 upgraded, 0 newly installed, 0 to remove and 14 not upgraded.

mkdir: cannot create directory ‘/opt/docker’: File exists

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 617 0 617 0 0 482 0 --:--:-- 0:00:01 --:--:-- 482

100 7857k 100 7857k 0 0 144k 0 0:00:54 0:00:54 --:--:-- 111k

docker stop/waiting

docker start/running, process 17871

You must specify a repository to clone.

usage: git clone [options] [--] <repo> [<dir>]

-v, --verbose be more verbose

-q, --quiet be more quiet

--progress force progress reporting

-n, --no-checkout don't create a checkout

--bare create a bare repository

--mirror create a mirror repository (implies bare)

-l, --local to clone from a local repository

--no-hardlinks don't use local hardlinks, always copy

-s, --shared setup as shared repository

--recursive initialize submodules in the clone

--recurse-submodules initialize submodules in the clone

--template <template-directory>

directory from which templates will be used

--reference <repo> reference repository

-o, --origin <name> use <name> instead of 'origin' to track upstream

-b, --branch <branch>

checkout <branch> instead of the remote's HEAD

-u, --upload-pack <path>

path to git-upload-pack on the remote

--depth <depth> create a shallow clone of that depth

--single-branch clone only one branch, HEAD or --branch

--separate-git-dir <gitdir>

separate git dir from working tree

-c, --config <key=value>

set config inside the new repository

++ cat /opt/config/nexus_username.txt

+ NEXUS_USERNAME=docker

++ cat /opt/config/nexus_password.txt

+ NEXUS_PASSWD=docker

++ cat /opt/config/nexus_docker_repo.txt

+ NEXUS_DOCKER_REPO=nexus3.onap.org:10001

++ cat /opt/config/docker_version.txt

+ DOCKER_IMAGE_VERSION=1.1-STAGING-latest

+ cd /opt/portal

./portal_vm_init.sh: line 15: cd: /opt/portal: No such file or directory

+ git pull

fatal: Not a git repository (or any of the parent directories): .git

+ cd deliveries

./portal_vm_init.sh: line 17: cd: deliveries: No such file or directory

+ source .env

./portal_vm_init.sh: line 20: .env: No such file or directory

+ ETC=/PROJECT/OpenSource/UbuntuEP/etc

+ mkdir -p /PROJECT/OpenSource/UbuntuEP/etc

+ cp -r 'properties_rackspace/*' /PROJECT/OpenSource/UbuntuEP/etc

cp: cannot stat ‘properties_rackspace/*’: No such file or directory

+ docker login -u docker -p docker nexus3.onap.org:10001

Login Succeeded

+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest

invalid reference format

+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest

invalid reference format

+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest

invalid reference format

+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :

Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format

+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :

Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format

+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :

Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format

+ /opt/docker/docker-compose down

ERROR:

Can't find a suitable configuration file in this directory or any

parent. Are you in the right directory?

Supported filenames: docker-compose.yml, docker-compose.yaml

+ /opt/docker/docker-compose up -d

ERROR:

Can't find a suitable configuration file in this directory or any

parent. Are you in the right directory?

Supported filenames: docker-compose.yml, docker-compose.yaml

root@vm1-portal:/opt#

You must specify a repository to clone.

usage: git clone [options] [--] <repo> [<dir>]

-v, --verbose be more verbose -q, --quiet be more quiet --progress force progress reporting -n, --no-checkout don't create a checkout --bare create a bare repository --mirror create a mirror repository (implies bare) -l, --local to clone from a local repository --no-hardlinks don't use local hardlinks, always copy -s, --shared setup as shared repository --recursive initialize submodules in the clone --recurse-submodules initialize submodules in the clone --template <template-directory> directory from which templates will be used --reference <repo> reference repository -o, --origin <name> use <name> instead of 'origin' to track upstream -b, --branch <branch> checkout <branch> instead of the remote's HEAD -u, --upload-pack <path> path to git-upload-pack on the remote --depth <depth> create a shallow clone of that depth --single-branch clone only one branch, HEAD or --branch --separate-git-dir <gitdir> separate git dir from working tree -c, --config <key=value> set config inside the new repository

++ cat /opt/config/nexus_username.txt+ NEXUS_USERNAME=docker++ cat /opt/config/nexus_password.txt+ NEXUS_PASSWD=docker++ cat /opt/config/nexus_docker_repo.txt+ NEXUS_DOCKER_REPO=nexus3.onap.org:10001++ cat /opt/config/docker_version.txt+ DOCKER_IMAGE_VERSION=1.1-STAGING-latest+ cd /opt/portal./portal_vm_init.sh: line 15: cd: /opt/portal: No such file or directory+ git pullfatal: Not a git repository (or any of the parent directories): .git+ cd deliveries./portal_vm_init.sh: line 17: cd: deliveries: No such file or directory+ source .env./portal_vm_init.sh: line 20: .env: No such file or directory+ ETC=/PROJECT/OpenSource/UbuntuEP/etc+ mkdir -p /PROJECT/OpenSource/UbuntuEP/etc+ cp -r 'properties_rackspace/*' /PROJECT/OpenSource/UbuntuEP/etccp: cannot stat âproperties_rackspace/*â: No such file or directory+ docker login -u docker -p docker nexus3.onap.org:10001Login Succeeded+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latestinvalid reference format+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latestinvalid reference format+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latestinvalid reference format+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format+ /opt/docker/docker-compose downERROR: Can't find a suitable configuration file in this directory or any parent. Are you in the right directory?

Supported filenames: docker-compose.yml, docker-compose.yaml

+ /opt/docker/docker-compose up -d

ERROR: Can't find a suitable configuration file in this directory or any parent. Are you in the right directory?

Supported filenames: docker-compose.yml, docker-compose.yaml

root@vm1-portal:/opt#

Antony Prabhu M

I also got the same error initially. It is mainly due to internet connection speed or some issue with the Docker repository. Maybe you can try again later, or next week?

You don't need to run portal_install.sh every time. You can run just 'portal_vm_init.sh' from the /opt directory.

Gaurav Mittal

Thanks a lot, sir. I executed the ./portal_vm_init.sh script and saw the error below. I hope this is an internet issue.

I suspect the problem is caused by the line below:

+ source .env

./portal_vm_init.sh: line 20: .env: No such file or directory

========

root@vm1-portal:/opt# ./portal_vm_init.sh

++ cat /opt/config/nexus_username.txt

+ NEXUS_USERNAME=docker

++ cat /opt/config/nexus_password.txt

+ NEXUS_PASSWD=docker

++ cat /opt/config/nexus_docker_repo.txt

+ NEXUS_DOCKER_REPO=nexus3.onap.org:10001

++ cat /opt/config/docker_version.txt

+ DOCKER_IMAGE_VERSION=1.1-STAGING-latest

+ cd /opt/portal

+ git pull

fatal: Not a git repository (or any of the parent directories): .git

+ cd deliveries

./portal_vm_init.sh: line 17: cd: deliveries: No such file or directory

+ source .env

./portal_vm_init.sh: line 20: .env: No such file or directory

+ ETC=/PROJECT/OpenSource/UbuntuEP/etc

+ mkdir -p /PROJECT/OpenSource/UbuntuEP/etc

+ cp -r 'properties_rackspace/*' /PROJECT/OpenSource/UbuntuEP/etc

cp: cannot stat ‘properties_rackspace/*’: No such file or directory

+ docker login -u docker -p docker nexus3.onap.org:10001

Login Succeeded

+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest

invalid reference format

+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest

invalid reference format

+ docker pull nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest

invalid reference format

+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :

Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format

+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :

Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format

+ docker tag nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest :

Error parsing reference: "nexus3.onap.org:10001/openecomp/:1.1-STAGING-latest" is not a valid repository/tag: invalid reference format

+ /opt/docker/docker-compose down

ERROR: Can't find a suitable configuration file in this directory or any parent. Are you in the right directory?

Supported filenames: docker-compose.yml, docker-compose.yaml

+ /opt/docker/docker-compose up -d

ERROR: Can't find a suitable configuration file in this directory or any parent. Are you in the right directory?

Supported filenames: docker-compose.yml, docker-compose.yaml

root@vm1-portal:/opt# ./portal_vm_init.sh
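The `invalid reference format` errors in the trace above are consistent with an empty image name: the reference reads `openecomp/:1.1-STAGING-latest`, with nothing between the slash and the colon, which is what one would expect if the variables normally loaded by `source .env` were never set. A minimal sketch of a guard that refuses to build a reference from empty parts follows. The helper name `image_ref` is hypothetical and is not part of portal_vm_init.sh.

```shell
#!/bin/sh
# Hypothetical helper (not taken from portal_vm_init.sh): build a Docker image
# reference of the form REPO/openecomp/NAME:VERSION and fail early if any
# component is empty, instead of letting "docker pull" report
# "invalid reference format".
image_ref() {
    repo=$1 name=$2 version=$3
    if [ -z "$repo" ] || [ -z "$name" ] || [ -z "$version" ]; then
        echo "image_ref: empty component (repo='$repo' name='$name' version='$version')" >&2
        return 1
    fi
    printf '%s/openecomp/%s:%s\n' "$repo" "$name" "$version"
}
```

For example, `image_ref nexus3.onap.org:10001 appc-image 1.1-STAGING-latest` prints a complete reference, while an empty name makes the function return non-zero so the calling script can stop before the pull.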

Josef Reisinger

Hi Gaurav, that's strange, and I agree with Antony that this might be related to network connectivity, speed, time of year, weather (just kidding). I had the same issue, which I reported in ONAP master branch Stabilization yesterday. Guess what: I downloaded and executed portal_install.sh, and voila, as of today I have a portal that I can log in to.

By the way, would you mind continuing the discussion at https://wiki.onap.org/questions? On my screen, the space for comments on comments in this wiki gets quite narrow.

Gaurav Mittal

Thanks to everyone for all the support. I was able to bring up the portal. I really appreciate the team, especially Antony, for the guidance.

Antony Prabhu M

You're welcome, Gaurav. I got good support from the community too.

David Rhodes

I've been making some progress bringing this up on devstack ... but now have this error:

Are the requested Ubuntu images the full images from https://cloud-images.ubuntu.com/? When I set, for example, ubuntu_1404_image in the env file, am I setting the name of the image, the ID, or something else?

From what I have looked up, a glance version downgrade to 2.0.0 might help with this (https://ask.openstack.org/en/question/102116/heat-cannot-validate-glanceimage-constraint/). Has anyone else run into this problem?

THANKS! Dave

Marco Platania

Hi Dave,

Regarding the images in the env file, you can either pass the full names or the IDs.

Marco

David Rhodes

I am still stuck on this ... I've tried quite a few things and just don't see why the images do not validate. What is it trying to validate exactly? Am I using the wrong images?

EDIT: some progress ... if I restart the heat engine (devstack@h-eng) after devstack is started then I don't get this error, even though it appeared that this service was running fine before.

Marco Platania

I wouldn't be surprised if something in your OpenStack lab is preventing a correct ONAP installation. We also had issues with uploading Ubuntu images in the past, and we solved them by restarting the glance service (if I'm not mistaken...).

Mark Beierl

Hey, David Rhodes, I came across this comment while trying to resolve the exact same issue using Heat with DevStack, and nothing to do with ONAP. It appears to be some form of problem with the Heat installation where it does not have the hook (or code, or ...?) installed that allows it to validate an image name against Glance, hence the constraint not found. If you have found a solution, please let me know.

Likewise, if I find one, I'll post back here.

Chak Chau

Hi,

In the environment file, how do I find or generate the value for "pub_key: PUT YOUR PUBLIC KEY HERE" in my cloud environment? I have Red Hat in the cloud environment.

Also, how do I find the values for the following environment parameters?

horizon_url: PUT THE HORIZON URL HERE

keystone_url: PUT THE KEYSTONE URL HERE

Thanks,

Chak

Michael O'Brien

Chak, hi

SSH key generation is covered at Setting Up Your Development Environment#OSX

OSX, Red Hat, and Ubuntu all use ssh-keygen -t rsa

You can also read the public key in OpenStack under /dashboard/project/access_and_security/keypair

The Horizon and Keystone URLs can be found by logging into your OpenStack and exporting your admin.rc from /dashboard/project/access_and_security/ under the API Access tab:

horizon : IP/dashboard

keystone: IP:5000/v2.0

/michael
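As a rough illustration of the reply above, the sketch below builds the two URLs from the OpenStack console IP and generates an RSA key pair whose public half can be pasted into `pub_key`. The function names are hypothetical, and the `http://` scheme is an assumption; a cloud served over TLS would use `https://` instead.

```shell
#!/bin/sh
# Hypothetical helpers illustrating the URL patterns given above
# (IP/dashboard and IP:5000/v2.0). The http:// scheme is an assumption.
horizon_url()  { printf 'http://%s/dashboard\n' "$1"; }
keystone_url() { printf 'http://%s:5000/v2.0\n' "$1"; }

# Generate an RSA key pair at the given path (no passphrase) and print the
# public key, which is the value expected by pub_key in the env file.
make_pub_key() {
    keyfile=$1
    ssh-keygen -t rsa -N '' -f "$keyfile" -q && cat "$keyfile.pub"
}
```

For example, `keystone_url 10.0.0.5` prints `http://10.0.0.5:5000/v2.0`, and `make_pub_key ~/.ssh/onap_key` writes `~/.ssh/onap_key` and `~/.ssh/onap_key.pub` and prints the latter.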

Chak Chau

Hi Michael,

Thank you very much, let me try to do that.

Best Regards,

Chak

Chak Chau

Hi Michael,

For

horizon : IP/dashboard

keystone: IP:5000/v2.0

Did you mean the IP of my private cloud environment, or some other IP?

Thanks,

Chak

Michael O'Brien

Yes, the IP of your OpenStack console, where you run stacks in the GUI below

Chak Chau

Thank you very much!

Chak

Chak Chau

Hi,

From the Orchestration menu, I tried to launch a stack with the input files onap_openstack_float.env and onap_openstack_float.yaml. It asked me to fill in all the parameters again. I wonder why?

But when I looked at the stack preview, I saw the values assigned. What did I do wrong?

Thanks,

Chak

Michael O'Brien

Yes, there seems to be a bug in the OpenStack UI that ignores the env file (I ran into this myself). Try running from the command line, where the env file takes effect.

Follow the same instructions as for Rackspace (install the OpenStack client and export/source your admin.rc):

Tutorial: Configuring and Starting Up the Base ONAP Stack#InstallPythonvirtualenvTools(optional,butrecommended)

then type

sudo apt install python-pip

sudo pip install virtualenv virtualenvwrapper

pip install python-openstackclient python-heatclient

openstack stack create -t onap_openstack_float.yaml -e onap_openstack_float.env ONAP

/michael

David Rhodes

Yes, there are problems with reading the ENV file in horizon: e.g. https://access.redhat.com/solutions/2438871

Chak Chau

Hi Michael,

Thank you very much for the advice.

Since I am using a cloud environment, how would I do that? Do I need to log on to a command terminal on one of the VMs created in the cloud in the Red Hat environment and run the command line from there, or is there another way? I'm running a cloud with the OpenStack dashboard on my HP laptop, not on a physical server.

Thanks,

Chak

Michael O'Brien

You can run openstack commands from an ssh session on any machine or VM (wherever you keep your ssh key). You can run them from your host laptop (I assume you are running packstack OpenStack on a VM on your laptop?), or you can spin up a VM on your OpenStack and install an OpenStack client there instead, as long as you have connectivity.

/michael

Chak Chau

Hi Michael,

Thank you!

I ran into several issues when I issued the command below:

openstack stack create -t onap_openstack_float.yaml -e onap_openstack_float.env ONAP

It said "Missing value auth-url required for auth plugin password".

In addition, I can ping from one VM to another VM, and to an external client VM I created, but I cannot ssh for some reason. I would appreciate any advice.

By the way, I created my admin.rc file in Notepad++ on Windows. How do I export it to the client VM created in OpenStack? Am I doing something wrong?

Thanks,

Chak

Michael O'Brien

You can move files between systems/VMs with the scp command. If you are going to work on Windows, I would suggest installing git bash using the developer guide page.

Setting Up Your Development Environment#InstallingGit

For the auth file, verify that you are using the credentials exported from your OpenStack security page.

For ssh between machines/VMs, you need to either reference the private key with the -i option to ssh or, on OSX for example, run ssh-add. On git-bash or your Linux box it may be easier to just rename the key to ~/.ssh/id_rsa, which is the default key and is loaded by default (assuming you use the same key everywhere).

For an example see the vFirewall demo ssh into the snk vm - where you would need the specific key listed below

Verifying your ONAP Deployment#sshkeys

/michael
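The "Missing value auth-url required for auth plugin password" message reported earlier is what the OpenStack client prints when `OS_AUTH_URL` is not set in the shell, which typically means the admin.rc file was not sourced in that session. A minimal pre-flight sketch follows. The function name is hypothetical, and the variable list assumes a Keystone v2 style rc file (`OS_TENANT_NAME`); a v3 rc file would use `OS_PROJECT_NAME` and domain variables instead.

```shell
#!/bin/sh
# Hypothetical pre-flight check: report which of the usual OpenStack client
# variables are unset or empty. Returns 0 only when all of them are present.
# The list assumes a Keystone v2 style rc file.
check_openstack_env() {
    missing=0
    for var in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_TENANT_NAME; do
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

A typical use would be `. ./admin.rc && check_openstack_env && openstack stack create ...`, so that the stack command only runs once the credentials are actually loaded.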

Chak Chau

Thank You Michael, let me try to do that per your suggestion.

Chak

Chak Chau

Hi Michael,

When I ran the stack command I noticed this error, do you have any suggestion?

Many thanks,

Chak

cloud@ubuntu:~$ openstack stack create -t onap_openstack_float.env -e onap_openstack_float.yaml ONAP

Password:

Error parsing template file:///home/cloud/onap_openstack_float.env Template format version not found.

cloud@ubuntu:~$
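In the command above, the `.env` file is passed with `-t` and the `.yaml` file with `-e`, the reverse of the `openstack stack create -t onap_openstack_float.yaml -e onap_openstack_float.env ONAP` form given earlier in this thread. A HOT template declares a `heat_template_version` key, so parsing the environment file as a template would plausibly produce "Template format version not found". A minimal sketch of a check for this mix-up, with hypothetical function names:

```shell
#!/bin/sh
# Returns 0 if the file declares a HOT version key at the start of a line.
# A Heat (HOT) template has this key; an environment file normally does not.
looks_like_heat_template() {
    grep -q '^heat_template_version:' "$1"
}

# Hypothetical wrapper: verify that the file intended for -t looks like a
# template and the file intended for -e does not, before creating the stack.
check_stack_args() {
    template=$1 env=$2
    if ! looks_like_heat_template "$template"; then
        echo "'$template' has no heat_template_version; were -t and -e swapped?" >&2
        return 1
    fi
    if looks_like_heat_template "$env"; then
        echo "'$env' looks like a template, not an environment file" >&2
        return 1
    fi
}
```

With this in place, `check_stack_args onap_openstack_float.yaml onap_openstack_float.env` succeeds, while the swapped order is rejected before Heat is contacted.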

Stephen Moffitt

I downloaded the onap_openstack_float.* files and tried an installation on my local OpenStack. The AAI installation failed for me. The /opt/test-config directory is pulled from Gerrit. I didn't change the gerrit_branch in the env file; it is set to release-1.0.0. But this branch does not seem to exist for http://gerrit.onap.org/r/aai/test-config. Any suggestions what the gerrit_branch should be set to?

I manually changed the branch to "master", just for the AAI components, and reran the installation script. It got further, but then the next error was...

There do not appear to be any tags for sparky-be.

1.0-STAGING-latest is taken from the docker_version in the env file. Any suggestions what the docker_version should be set to, or is this error resulting from using the wrong gerrit_branch?

Thanks

Stephen

PS: Not sure if it is mentioned anywhere, but the user appears to need the admin role in the tenant for the scripts to work (it needs the ability to set floating IPs).

Antony Prabhu M

Hi,

I have also been facing the same issue since yesterday.

$ git clone -b $GERRIT_BRANCH http://gerrit.onap.org/r/aai/test-config (this command fails continuously, where GERRIT_BRANCH is release-1.1.0)

Also, I noticed that the ONAP installation doesn't trigger the 'xxxx_install.sh' files automatically.

Please help.

Regards,

Antony Prabhu

Marco Platania

Hello Antony,

AAI configuration has changed in ONAP 1.1.0 (current master branch). Please use these parameters in the heat environment file:

artifacts_version: 1.1.0-SNAPSHOT

docker_version: 1.1-STAGING-latest

gerrit_branch: master

dcae_base_environment: 1-NIC-FLOATING-IPS

gitlab_branch: master

dcae_code_version: 1.1.0

I updated the env files in Gerrit.

Marco
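For anyone carrying a locally edited env file rather than re-downloading it, the parameters listed above can be applied in place. The sketch below is one way to do that, assuming each parameter sits on its own `key: value` line. The helper name `set_env_param` is hypothetical, and values containing `|` or `&` would need escaping before being passed to it.

```shell
#!/bin/sh
# Hypothetical helper: replace the value of "KEY:" in a Heat environment file,
# preserving the line's indentation. The result is written through a temporary
# file so the function does not depend on a particular sed -i dialect.
# Lines that do not start with KEY: are left untouched.
set_env_param() {
    file=$1 key=$2 value=$3
    scratch=$(mktemp) || return 1
    sed "s|^\([[:space:]]*\)$key:.*|\1$key: $value|" "$file" > "$scratch" \
        && cat "$scratch" > "$file"
    status=$?
    rm -f "$scratch"
    return "$status"
}
```

For example, `set_env_param onap_openstack_float.env gerrit_branch master` followed by `set_env_param onap_openstack_float.env docker_version 1.1-STAGING-latest` applies two of the values from the list above.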

Antony Prabhu M

Thanks Marco.

The installation went fine. I was able to access the portal.

However, 2 VMs are not complete.

root@vm1-sdnc:/opt# git clone -v -b $GERRIT_BRANCH --single-branch http://gerrit.onap.org/r/sdnc/oam.git sdnc

Cloning into 'sdnc'...

POST git-upload-pack (152 bytes)

remote: Finding sources: 100% (2367/2367)

fatal: The remote end hung up unexpectedly MiB | 41.00 KiB/s

fatal: early EOF

fatal: index-pack failed

root@vm1-sdnc:/opt#

Please help.

Regards,

Antony Prabhu

Marco Platania

Antony,

I didn't experience the same issue, but after reading your comment I tried to execute the sdnc clone operation. It is very slow... Probably (hopefully) some temporary issue. Normally, it works well.

Marco

Antony Prabhu M

Hi Marco,

Thanks for your reply.

The 'git clone' for sdnc fails exactly after reaching 94% download.

Also, the APPC docker pull always fails with the following error.

root@vm1-appc:/opt# ./appc_vm_init.sh

Already up-to-date.

Login Succeeded

1.1-STAGING-latest: Pulling from openecomp/appc-image

23a6960fe4a9: Already exists

e9e104b0e69d: Extracting 822B/822B

cd33d2ea7970: Download complete

534ff7b7d120: Download complete

7d352ac0c7f5: Download complete

caba0c2d2abc: Download complete

f85491e881df: Download complete

6353bd72d74d: Download complete

229be354bfe5: Download complete

b7437d96d990: Download complete

694345f88910: Download complete

467a80086a6f: Download complete

b8106eaa4fb2: Download complete

b388cbf87c16: Download complete

9e60e60381b3: Download complete

failed to register layer: open /var/lib/docker/aufs/layers/19cab3f6e6e11b9aa30fc68698292044104b1cdf8fa4bee385952d271f3aa065: no such file or directory

Error response from daemon: No such image: nexus3.onap.org:10001/openecomp/appc-image:1.1-STAGING-latest

1.1-STAGING-latest: Pulling from openecomp/dgbuilder-sdnc-image

23a6960fe4a9: Already exists

e9e104b0e69d: Extracting 822B/822B

cd33d2ea7970: Download complete

534ff7b7d120: Download complete

7d352ac0c7f5: Download complete

ab500c13f231: Download complete

88b7a4955fc5: Download complete

failed to register layer: open /var/lib/docker/aufs/layers/19cab3f6e6e11b9aa30fc68698292044104b1cdf8fa4bee385952d271f3aa065: no such file or directory

Error response from daemon: No such image: nexus3.onap.org:10001/openecomp/dgbuilder-sdnc-image:1.1-STAGING-latest

Pulling appc (openecomp/appc-image:latest)...

ERROR: Get https://registry-1.docker.io/v2/: net/http: TLS handshake timeout

root@vm1-appc:/opt#

Please help.

Regards,

Antony Prabhu

Marco Platania

I experienced the same issues yesterday. Did you try today? I just spun up a new ONAP instance in my lab and it works fine

Antony Prabhu M

Hi Marco,

I tried today. These 2 are still failing (sdnc git clone and appc docker pull).

I also tried the following command on a normal system, and it fails there too.

It reaches 94% and then gets stuck.

$git clone -b master --single-branch http://gerrit.onap.org/r/sdnc/oam.git sdnc

Can you please help?.

Regards,

Antony Prabhu

Marco Platania

Can you please send an email to the ONAP mailing list? I want to see if other people are experiencing the same... I just spun up a new instance and everything was fine. If you write to the mailing list, it will also catch the attention of SDNC and APPC folks

Antony Prabhu M

Hi Marco,

I tried the sdnc cloning on Monday. It was successful.

For APPC, I stopped docker, removed the 'docker' folder, and re-triggered 'appc_vm_init.sh'. It was successful.

Thanks.
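Since the clone and pull failures in this exchange turned out to be transient, a small retry wrapper can save some manual re-running. This is only a sketch; the function name `retry` and the attempt and delay values in the usage note are illustrative choices, not part of the ONAP install scripts.

```shell
#!/bin/sh
# Run a command up to ATTEMPTS times, sleeping DELAY seconds between tries.
# Usage: retry ATTEMPTS DELAY command [args...]
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
    attempts=$1 delay=$2
    shift 2
    n=1
    while ! "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            echo "giving up after $n attempts: $*" >&2
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}
```

For example, `retry 5 60 git clone -b master --single-branch http://gerrit.onap.org/r/sdnc/oam.git sdnc` tries the clone up to five times, a minute apart. Adding `--depth 1` (the shallow-clone option of git clone) reduces the amount of data transferred and may help on a slow link, at the cost of not having the full history.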

Michael O'Brien

Marco,

I just switched Rackspace from 1.0 to 1.1, since I need to test DCAE 1.0 in 1.1 for k8s. I should have searched Confluence first, because I ran into the fact that aai/test-config has no branch in 1.0. I am retesting the stack now.

I'll close AAI-136.

With gerrit_branch: master, AAI comes up OK as expected:

root@vm1-aai-inst1:~# docker ps
CONTAINER ID  IMAGE  COMMAND  CREATED  STATUS  PORTS  NAMES
990cafe29c8a  nexus3.onap.org:10001/openecomp/aai-resources  "/bin/sh -c ./dock..."  6 minutes ago  Up 6 minutes  0.0.0.0:8447->8447/tcp  testconfig_aai-resources.api.simpledemo.openecomp.org_1
903b776aefff  attos/dmaap  "/bin/sh -c ./star..."  7 minutes ago  Up 7 minutes  0.0.0.0:3904-3905->3904-3905/tcp  dockerfiles_dmaap_1
3464d7b32ad0  dockerfiles_kafka  "start-kafka.sh"  7 minutes ago  Up 7 minutes  0.0.0.0:9092->9092/tcp  dockerfiles_kafka_1
cd7951896575  wurstmeister/zookeeper  "/bin/sh -c '/usr/..."  7 minutes ago  Up 7 minutes  22/tcp, 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp  dockerfiles_zookeeper_1

root@vm1-aai-inst2:~# docker ps
CONTAINER ID  IMAGE  COMMAND  CREATED  STATUS  PORTS  NAMES
ccd0f430b336  elasticsearch:2.4.1  "/docker-entrypoin..."  13 minutes ago  Up 13 minutes  0.0.0.0:9200->9200/tcp, 9300/tcp  elasticsearch
f4b7018f0805  aaionap/gremlin-server  "/bin/sh -c \"/dock..."  14 minutes ago  Up 14 minutes  0.0.0.0:8182->8182/tcp  testconfig_aai.gremlinserver.simpledemo.openecomp.org_1
0f415ac5d17a  harisekhon/hbase  "/bin/sh -c \"/entr..."  15 minutes ago  Up 15 minutes  0.0.0.0:2181->2181/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:8085->8085/tcp, 0.0.0.0:9090->9090/tcp, 0.0.0.0:16000->16000/tcp, 0.0.0.0:16010->16010/tcp, 9095/tcp, 0.0.0.0:16201->16201/tcp, 16301/tcp  testconfig_aai.hbase.simpledemo.openecomp.org_1

I checked the history: this was fixed on June 30 by you. My clone is from Aug 3rd though, so for some reason I was still seeing the openecomp folder instead of the renamed onap.

docker moves from 1.0 to 1.1, dcae moves to 1.1, gerrit moves to master from 1.0

Another pull fixed it:

obrienbiometrics:demo michaelobrien$ git pull

Warning: Permanently added '[gerrit.openecomp.org]:29418,[198.145.29.92]:29418' (RSA) to the list of known hosts.

First, rewinding head to replay your work on top of it...

Fast-forwarded master to a94e309943d51a2d56e9aa19a582ac9eb40966e6.

Olga S

Hi guys, @Marco Platania,

I installed ONAP using onap_openstack_float.yaml / onap_openstack_float.env, but unfortunately the DCAE VMs weren't created, except vm1-dcae-controller. I created 5 floating IPs and assigned them to the following VMs defined in the Heat environment file:

dcae_coll_float_ip

dcae_hdp1_float_ip

dcae_hdp2_float_ip

dcae_hdp3_float_ip

dcae_db_float_ip.

The ONAP installation completed successfully, but these 5 VMs are missing.

Did anybody encounter this issue, and how did you solve it?

Thank you in advance,

Olga

Josef Reisinger

It seems as if the DCAE controller has issues telling OpenStack to spin up further servers.

When we installed OpenStack, we followed the tutorial quite closely and gave the controller the host name "controller". This is not very wise, as we do not have a name server to serve this name and have to add it to the /etc/hosts file as needed. Be aware that the same configuration appears in the API URLs; changing the URL in /opt/config/keystone_url.txt from host name to IP address did not work for me.

If you used a host name for the controller in the OpenStack configuration, you may want to check the following:

I added the following line to the end of /opt/dcae_vm_init.sh

where the controller name and controller IP need to be adapted to your installation. After a restart of the DCAE controller, you should be able to check that the controller host is accessible from the dcae container.
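As a general illustration of the hosts-file approach described above, and not the line that was added to /opt/dcae_vm_init.sh, the sketch below appends a name-to-IP mapping to a hosts file only if the name is not already present. The function name, the IP 192.0.2.10, and the name `controller` in the usage note are placeholders to adapt to your installation.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already
# appears there as a host name. Pass /etc/hosts (as root) for real use, or the
# hosts file of whichever environment needs to resolve the controller.
add_host_entry() {
    hosts_file=$1 ip=$2 name=$3
    if grep -Eq "[[:space:]]$name([[:space:]]|\$)" "$hosts_file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}
```

For example, `add_host_entry /etc/hosts 192.0.2.10 controller` adds the mapping once, and running it again leaves the file unchanged.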

Carsten Lund

This does work, but it should be considered an OpenStack installation issue. The service APIs from OpenStack should use either IPs or DNS names that resolve. Otherwise the OpenStack CLI tools will not work either, and MSO will then have similar issues creating VMs.

Olga S

Thank you!

Carsten Lund

Which errors do you see when running 'docker logs XX' for the one docker container on vm1-dcae-controller?

Olga S

There is no docker container on my vm1-dcae-controller, it seems that something went wrong here.

If I try to run manually /opt/dcae_install.sh, I receive:

docker login -u docker -p docker nexus3.onap.org:10001

Error response from daemon: Get https://nexus3.onap.org:10001/v1/users/: dial tcp 199.204.45.137:10001: i/o timeout

make: *** [up] Error 1

Zi Li

Hi Olga,

Have you solved the docker login issue? How did you fix it? I have the same problem.

Olga S

Hi,

The problem with missing docker container was solved. Please see docker logs: http://paste.openstack.org/show/615810/.

subhash kumar singh

We are also getting the same error in the DCAE logs: http://paste.openstack.org/show/615625/

A JIRA issue has been filed for it: https://jira.onap.org/projects/DCAEGEN2/issues/DCAEGEN2-69

Olga S

Ok. Thanks!

Marco Platania

Olga,

In addition to what Carsten says below, could you also paste the content of /opt/app/dcae-controller/config.yaml in the DCAE controller VM (apparently, the only one that came up)?

Thanks

Olga S

BASE: 1-NIC-FLOATING-IPS

ZONE: ZONE

DNS-IP-ADDR: 10.0.100.1

STATE: STATE

DCAE-VERSION: 1.1.0

HORIZON-URL: http://10.6.140.7/dashboard//onap

KEYSTONE-URL: http://10.6.140.7:5000/v2.0

OPENSTACK-TENANT-ID: onap

OPENSTACK-TENANT-NAME: OPEN-ECOMP

OPENSTACK-REGION: RegionOne

OPENSTACK-PRIVATE-NETWORK: oam_ecomp_sZ6G

OPENSTACK-USER: onap

OPENSTACK-PASSWORD: onap123

OPENSTACK-AUTH-METHOD: password

OPENSTACK-KEYNAME: onap_key_sZ6G_dcae

OPENSTACK-PUBKEY: ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDVBCcv3fzMNwTtXXkkLucVrzr4Iws9elXDKpykwKMCyI70ZY2w271B5SFRZ8krSNzUf4H6WlrG4VcJdKZgBgOsjRX8ZAuuahSSjF229iwYWkmF3OrmHvYjbbNn0z4z+StWZxip8lm9w+MiWRso9Juchk58oD8rPjtGYF+Uadu4yJ6hSASDaqwnlGp1wLKj/e41VcHn0sdipvP0y8f/C+XV9qkgOp0t1ZWD8Q2csU/xYdsqzdP5Z1tj08vZcrZ5zzYPQh5G8uNjd/s3/y7xFo0nfjH6tz29x8z9Zchb+BRoM0Tcr6grUk/uvKewPUXJ/uS9X+VN4EnuWRV4ccKyALaN onap@overcloud-controller-0.localdomain

NEXUS-URL-ROOT: https://nexus.onap.org

NEXUS-USER: docker

NEXUS-PASSWORD: docker

NEXUS-URL-SNAPSHOTS: https://nexus.onap.org/content/repositories/snapshots

NEXUS-RAWURL: https://nexus.onap.org/content/sites/raw

DOCKER-REGISTRY: nexus3.onap.org:10001

DOCKER-VERSION: 1.1-STAGING-latest

GIT-MR-REPO: http://gerrit.onap.org/r/dcae/demo/startup/message-router.git

public_net_id: e303aa39-47cb-45dc-b718-991465fcdbf4

dcae_ip_addr: 10.0.4.1

dcae_pstg00_ip_addr: 10.0.4.101

dcae_coll00_ip_addr: 10.0.4.102

dcae_cdap00_ip_addr: 10.0.4.103

dcae_cdap01_ip_addr: 10.0.4.104

dcae_cdap02_ip_addr: 10.0.4.105

UBUNTU-1404-IMAGE: ubuntu-14.04-server-cloudimg-amd64-disk1.img

UBUNTU-1604-IMAGE: ubuntu-16.04-server-cloudimg-amd64-disk1.img

FLAVOR-SMALL: m1.small

FLAVOR-MEDIUM: m1.medium

FLAVOR-LARGE: m1.large

FLAVOR-XLARGE: m1.xlarge

dcae_float_ip_addr: 10.6.140.34

dcae_pstg00_float_ip_addr: 10.6.140.52

dcae_coll00_float_ip_addr: 10.6.140.57

dcae_cdap00_float_ip_addr: 10.6.140.55

dcae_cdap01_float_ip_addr: 10.6.140.59

dcae_cdap02_float_ip_addr: 10.6.140.61

subhash kumar singh

We are also facing the same issue. I tried to re-initialize DCAE after allocating floating IPs, but we are still not able to see these 5 VMs.

Docker logs: http://paste.openstack.org/show/615625/

Amit Gupta

Hi Olga S,

I am installing ONAP using the VMs, without docker. I am not using a docker VM as such for the ONAP installation (I ran the latest 1.2.0 snapshot artifact and faced an issue related to docker, so I moved to an older version and am trying to install the ONAP components using VMs).

I am running the env file and Heat template with the August release artifact 1.1.0 snapshot. The 8 VMs below are not coming up, and the ONAP stack creation fails.

dcae_coll_float_ip: 192.168.30.153

dcae_db_float_ip: 192.168.30.150

dcae_hdp1_float_ip: 192.168.30.170

dcae_hdp2_float_ip: 192.168.30.211

dcae_hdp3_float_ip: 192.168.30.212

sdc_float_ip: 192.168.30.202

policy_float_ip: 192.168.30.162

portal_float_ip: 192.168.30.164

I have pasted the links to the env and Heat template files below.

Heat template:

https://gerrit.onap.org/r/gitweb?p=demo.git;a=blob;f=heat/OpenECOMP/onap_openstack_float.yaml;h=024dd2f90a2f36b09497a5d93dda52ae58294dc9;hb=refs/heads/master

Env file containing the floating IPs mentioned above:

https://gerrit.onap.org/r/gitweb?p=demo.git;a=blob;f=heat/OpenECOMP/onap_openstack_float.env;h=1dfc7f81eec1985aa20c8bd36a87d3ae34d0f361;hb=refs/heads/master

Please help me with how to do this.

Thanks

Pranjal

GOKUL SINGARAJU

ONAP nofloat.env and yaml stack startup fails on sdc_volume_data

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resource.py", line 713, in _action_recorder

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource yield

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resource.py", line 784, in _do_action

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource yield self.action_handler_task(action, args=handler_args)

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/scheduler.py", line 328, in wrapper

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource step = next(subtask)

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resource.py", line 758, in action_handler_task

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource while not check(handler_data):

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resources/openstack/cinder/volume.py", line 298, in check_create_complete

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource complete = super(CinderVolume, self).check_create_complete(vol_id)

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resources/volume_base.py", line 53, in check_create_complete

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource resource_status=vol.status)

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource ResourceInError: Went to status error due to "Unknown"

2017-07-20 20:18:20.477 12249 ERROR heat.engine.resource

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource Traceback (most recent call last):

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resource.py", line 713, in _action_recorder

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource yield

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resource.py", line 784, in _do_action

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource yield self.action_handler_task(action, args=handler_args)

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/scheduler.py", line 328, in wrapper

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource step = next(subtask)

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resource.py", line 758, in action_handler_task

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource while not check(handler_data):

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resources/openstack/cinder/volume.py", line 298, in check_create_complete

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource complete = super(CinderVolume, self).check_create_complete(vol_id)

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource File "/usr/lib/python2.7/dist-packages/heat/engine/resources/volume_base.py", line 53, in check_create_complete

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource resource_status=vol.status)

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource ResourceInError: Went to status error due to "Unknown"

2017-07-20 20:18:59.387 12249 ERROR heat.engine.resource

2017-07-20 20:19:00.434 12249 DEBUG oslo_messaging._drivers.amqpdriver [-] CAST unique_id: 4093e76649ba4ae99774bf146c90f7e7 size: 11403 NOTIFY exchange: heat topic: notifications.error _send /usr/lib/python2.7/dist-packages/oslo_messaging/_drivers/amqpdriver.py:480

2017-07-20 20:19:00.445 12249 INFO heat.engine.stack [-] Stack CREATE FAILED (ONAP): Resource CREATE failed: ResourceInError: resources.sdc_volume_data: Went to status error due to "Unknown"

Antony Prabhu M

I think your OpenStack doesn't have the resources to create the 100 GB sdc volume. Can you check this?
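When a stack fails like this, the heat-engine log usually names the resource that went into error, as in the "Stack CREATE FAILED" line above. A small sketch for pulling that name out of a log follows. The function name is hypothetical, and the pattern is based only on the message format shown in this thread.

```shell
#!/bin/sh
# Read heat-engine log lines on stdin and print the name of each resource
# reported in a "Resource CREATE failed: <Error>: resources.<name>: ..." message.
# The pattern follows the log line quoted above; other Heat versions may differ.
failed_resources() {
    sed -n 's/.*Resource CREATE failed: [A-Za-z]*: resources\.\([A-Za-z0-9_]*\):.*/\1/p'
}
```

Feeding the heat-engine log through `failed_resources` for the failure above prints `sdc_volume_data`. To test the capacity theory directly, one could then try creating a volume of the same size by hand, for example with `openstack volume create --size 100 <name>`, and see whether Cinder accepts it.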

David Rhodes

Having auth trouble trying to bring this up at Cloudlab ...

1) I selected Mitaka (is that right?)

2) openstack itself seems to be running fine

4) I had to change /etc/nova/nova.conf :: adding a section [neutron] with setting: user_domain_name = default

5) But I still get auth problems :: tail of a --debug stack create attempt:

Suggestions??? I think I have all the passwords and env stuff set ok, but maybe not?

Marco Platania

David,

Which template are you using? If you are using onap_openstack_float.yaml, that requires OpenStack admin access to statically allocate floating IPs to VMs. That would explain the authorization issue.

David Rhodes

Using _nofloat .... but I also have admin/su rights on the deployed nodes.

Edmund Haselwanter

Hi,

Is there a JIRA project to work on issues / feature requests for the Demo project?

I was able to deploy ONAP approximately two weeks ago, but I'm failing to do so now.

Nothing really changed from my side. Main issue right now is that I'm not able to bring up the portal correctly.

Did something in the portal-apps docker container change? Maybe this is a misleading error, but I get:

Marco Platania

Hi Edmund,

You can use the Integration project in JIRA for Demo, although the problem you mention is related to Portal, not to the way ONAP is installed. Manoop Talasila, are you and the Portal team aware of this issue with the DB?

Marco

Edmund Haselwanter

and one other thing: the wait in the portal-apps/portal-wms image is too short:

Antony Prabhu M

I hope your network doesn't use a 172.x.x.x/24 range anywhere. I faced a similar issue with a 172.x.x.x range; once I corrected it, everything was fine.

Edmund Haselwanter

no. no change in that:

root@vm1-portal:~# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast state UP group default qlen 1000
    link/ether fa:16:3e:de:27:60 brd ff:ff:ff:ff:ff:ff
    inet 10.11.12.8/24 brd 10.11.12.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fede:2760/64 scope link
       valid_lft forever preferred_lft forever
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast state UP group default qlen 1000
    link/ether fa:16:3e:96:f2:24 brd ff:ff:ff:ff:ff:ff
    inet 10.0.9.1/16 brd 10.0.255.255 scope global eth1
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fe96:f224/64 scope link
       valid_lft forever preferred_lft forever
4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:4d:a1:c7:d3 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 scope global docker0
       valid_lft forever preferred_lft forever
6: br-403c025dd082: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c4:a3:0a:93 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.1/16 scope global br-403c025dd082
       valid_lft forever preferred_lft forever
    inet6 fe80::42:c4ff:fea3:a93/64 scope link
       valid_lft forever preferred_lft forever
8: veth24ca1da: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-403c025dd082 state UP group default
    link/ether a2:3f:fb:8a:05:26 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::a03f:fbff:fe8a:526/64 scope link
       valid_lft forever preferred_lft forever
10: veth9a5e442: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-403c025dd082 state UP group default
    link/ether 22:fe:b4:c3:c3:c6 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::20fe:b4ff:fec3:c3c6/64 scope link
       valid_lft forever preferred_lft forever
16: vetheed0692: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-403c025dd082 state UP group default
    link/ether 16:2f:ed:27:82:21 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::142f:edff:fe27:8221/64 scope link
       valid_lft forever preferred_lft forever
18: veth9e030ce: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-403c025dd082 state UP group default
    link/ether 9a:8a:20:7e:a7:c0 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::988a:20ff:fe7e:a7c0/64 scope link
       valid_lft forever preferred_lft forever
root@vm1-portal:~# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         10.11.12.1      0.0.0.0         UG    0      0        0 eth0
10.0.0.0        0.0.0.0         255.255.0.0     U     0      0        0 eth1
10.11.12.0      0.0.0.0         255.255.255.0   U     0      0        0 eth0
169.254.169.254 10.11.12.1      255.255.255.255 UGH   0      0        0 eth0
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
172.18.0.0      0.0.0.0         255.255.0.0     U     0      0        0 br-403c025dd082

Antony Prabhu M

It looks fine to me. I meant that this applies to any of the OpenStack networks too (controller or compute provider network, etc.).

Did you use the float or nofloat.yaml for the installation?

I advise you to follow the deployment architecture and check whether any Docker container is missing. This sometimes happens because of a bad internet connection.

Overall Deployment Architecture

If everything is available, you may need to restart all the VMs from the /opt/ directory (run only ./portal_vm_init.sh).

This looks like a sync-up issue with the other VMs.
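
The check for missing containers suggested above can be sketched in a few lines of Python. This is only a rough illustration: the expected container names are hypothetical placeholders and must be taken from the deployment architecture for the VM being checked. The helper parses the output of `docker ps --format '{{.Names}}'`, which prints one running container name per line.

```python
from __future__ import annotations

import subprocess


def running_containers(docker_ps_output: str) -> set[str]:
    """Parse `docker ps --format '{{.Names}}'` output into a set of names."""
    return {line.strip() for line in docker_ps_output.splitlines() if line.strip()}


def missing_containers(expected: set[str], docker_ps_output: str) -> list[str]:
    """Return the expected container names that are not currently running."""
    return sorted(expected - running_containers(docker_ps_output))


def query_docker() -> str:
    """Ask the local Docker daemon for the names of running containers."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout
```

On a VM, `missing_containers({"example-db", "example-app"}, query_docker())` would list whichever of the two (hypothetical) containers is not running.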

Edmund Haselwanter

Antony Prabhu M OK, I'll double check. I think I'll also invest some time in a distributed 're/install' script. Is there any documented order/dependency, like the one with the wait for the DB?

I guess I'll try to raise or comment on JIRA tickets in the various projects. I am thinking, for example, about a 'more meaningful exception for db connection/startup issues'. If this is not a problem with the container, then the stack trace I'm seeing is super misleading. It makes no sense to see a 'java.sql.SQLException: No suitable driver' exception if there is 'just' an orchestration issue.
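
A "wait for the DB" dependency like the one mentioned above could look roughly like the following in an install script. This is a minimal sketch, not what the ONAP init scripts actually do: it only polls until a TCP port accepts connections, and the host name in the usage note is a hypothetical placeholder. An open port is only a rough proxy for readiness; the database may still be initializing behind it.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout_s: float = 60.0,
                  interval_s: float = 1.0) -> bool:
    """Poll until host:port accepts a TCP connection or timeout_s elapses.

    Returns True once a connection succeeds, False if the deadline passes.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # create_connection raises OSError on refusal or timeout.
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)
```

A script could then call `wait_for_port("db-host.example", 3306)` (3306 being the default MariaDB port) and only start the dependent containers when it returns True, logging a clear "database not reachable" message otherwise.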

Edmund Haselwanter

Manoop Talasila How can I follow the updates of, e.g., the portal containers? Is there a documented image tagging strategy or a history?

I would rather pin distinct tags than be point-in-time dependent.

Currently it seems that the deployment pulls a generic '1.1-STAGING-latest' tag for all containers, which is point-in-time dependent.
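
To make the concern concrete, here is a small sketch of how a script might flag image references that depend on the point in time at which they are pulled. The rule used is a heuristic of my own, not an ONAP convention: a reference counts as floating if it has no tag or its tag ends in "latest", and as pinned if it carries a sha256 digest. The image names in the usage note are hypothetical.

```python
from __future__ import annotations


def image_tag(ref: str) -> str | None:
    """Return the tag of an image reference, or None if it has none.

    Only the last path component is inspected, so a registry port such as
    'registry.example:10001/...' is not mistaken for a tag.
    """
    name = ref.split("@", 1)[0]
    last_component = name.rsplit("/", 1)[-1]
    if ":" in last_component:
        return last_component.rsplit(":", 1)[1]
    return None


def is_point_in_time_dependent(ref: str) -> bool:
    """Heuristic: True if pulling this reference may yield different images over time."""
    if "@sha256:" in ref:
        return False  # a digest identifies exactly one image
    tag = image_tag(ref)
    return tag is None or tag.lower().endswith("latest")
```

For example, `is_point_in_time_dependent("registry.example:10001/example/portal-app:1.1-STAGING-latest")` returns True, while the same image with tag `1.1.0` returns False. Note that a version-like tag can still be overwritten in a registry; only a digest is truly immutable.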

Manoop Talasila

Until now, every component tried to maintain the same tag across ONAP. However, per the ONAP TSC, teams are now allowed to use their own version tags based on the pace of their internal releases: Independent Versioning and Release Process.

Edmund Haselwanter

I see floating IPs in https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/ONAP/1.1.0-SNAPSHOT/onap_openstack_nofloat.yaml

This is a copy-and-paste error, I guess?

dcae_c_floating_ip:
  type: OS::Neutron::FloatingIP
  properties:
    floating_network_id: { get_param: public_net_id }
    port_id: { get_resource: dcae_c_private_port }

Marco Platania

Yes, it's a copy/paste mistake
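
Leftovers like this can be spotted with a quick scan of the template text. The sketch below is a deliberately simple line-based check rather than a real YAML parser: it assumes resources are declared with two-space indentation directly under a top-level `resources:` key, as in the snippet quoted above. The sample template is illustrative and not copied from the ONAP file.

```python
from __future__ import annotations

import re

RESOURCE_NAME = re.compile(r"^  ([A-Za-z0-9_]+):\s*$")
FLOATING_TYPE = re.compile(r"^\s+type:\s*OS::Neutron::FloatingIP\s*$")


def floating_ip_resources(template_text: str) -> list[str]:
    """Return names of resources whose type is OS::Neutron::FloatingIP."""
    found: list[str] = []
    current = None
    in_resources = False
    for line in template_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # ignore blank lines and comments
        if not line[0].isspace():
            # A new top-level key starts; track whether it is `resources:`.
            in_resources = line.startswith("resources:")
            current = None
            continue
        if not in_resources:
            continue
        name_match = RESOURCE_NAME.match(line)
        if name_match:
            current = name_match.group(1)
        elif current and FLOATING_TYPE.match(line):
            found.append(current)
    return found


SAMPLE = """\
heat_template_version: 2015-10-15

parameters:
  public_net_id:
    type: string

resources:
  dcae_c_private_port:
    type: OS::Neutron::Port
    properties:
      network: { get_param: public_net_id }

  dcae_c_floating_ip:
    type: OS::Neutron::FloatingIP
    properties:
      floating_network_id: { get_param: public_net_id }
      port_id: { get_resource: dcae_c_private_port }
"""
```

Calling `floating_ip_resources(SAMPLE)` reports `dcae_c_floating_ip`; running the same function over a nofloat template should ideally return an empty list.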

Edmund Haselwanter

Which CentOS 7 version (http://cloud.centos.org/centos/7/images/) should be used as the base for https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/ONAP/1.1.0-SNAPSHOT/onap_openstack_nofloat.yaml?

centos_7_image:
  type: string
  description: Name of the CentOS 7 image

David Rhodes

I think my problem may be related. I am using the onap_openstack.env/yaml version, and the build is vanilla. The portal doesn't come up, and I see this in the vm1-portal logs:

Processing triggers for ureadahead (0.100.0-16) ...
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0
100 617 0 617 0 0 3815 0 --:--:-- --:--:-- --:--:-- 3832
[ 588.922631] aufs au_opts_parse:1155:dockerd[18934]: unknown option dirperm1
59 7857k 59 4640k 0 0 5352k 0 0:00:01 --:--:-- 0:00:01 5352k
100 7857k 100 7857k 0 0 8347k 0 --:--:-- --:--:-- --:--:-- 42.4M
docker stop/waiting
docker start/running, process 19061
Cloning into 'portal'...
[ 591.540839] aufs au_opts_parse:1155:dockerd[19108]: unknown option dirperm1
Already up-to-date.
chmod: cannot access 'portal/deliveries/new_start.sh': No such file or directory
chmod: cannot access 'portal/deliveries/new_stop.sh': No such file or directory
chmod: cannot access 'portal/deliveries/dbstart.sh': No such file or directory
unzip: cannot find or open portal/deliveries/etc.zip, portal/deliveries/etc.zip.zip or portal/deliveries/etc.zip.ZIP.

And then later in the log:

And only the mariadb container is running:

Suggestions? Are these the correct settings?

Marco Platania

Portal has been updated recently. Please look at the latest portal_vm_init.sh script. The artifact_version and the docker_version are correct.

Edmund Haselwanter

sorry to say, but neither

nor

nor the script itself will yield a deterministic deployment.

It depends on the point in time when you run it. That is just the way it is right now, so asking for version settings has no point. By the way, I created INT-284 to fix this.

jinwoo jung

Do I need to have a private DNS server and put its IP address into dns_forwarder in the Heat template?

Also, in the GUI, Network > public > Port > status is N/A. Is that OK?

pranjal sharma

I am not able to run the curl command below in any of the generated ONAP VMs.

##########

root@onap-dns-server:/etc/bind# curl -k https://nexus.onap.org/content/sites/raw/org.onap.demo/boot/1.1.1/dns_install.sh -o /opt/dns_install.sh

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- 0:00:48 --:--:-- 0^C

root@onap-dns-server:/etc/bind#

root@onap-dns-server:/etc/bind# wget https://nexus.onap.org/content/sites/raw/org.onap.demo/boot/1.1.1/dns_install.sh

--2018-01-12 10:32:41-- https://nexus.onap.org/content/sites/raw/org.onap.demo/boot/1.1.1/dns_install.sh

Resolving nexus.onap.org (nexus.onap.org)... 199.204.45.137, 2604:e100:1:0:f816:3eff:fefb:56ed

Connecting to nexus.onap.org (nexus.onap.org)|199.204.45.137|:443... connected.

########

wget www.google.com works fine and downloads the index.html page, but wget against the Nexus repo shows "connected" and then fails to download the file.

Can you help me resolve this?

Thanks,

pranjal

Mohit Agarwal

Hi,

I am a complete beginner. I need help with setting up ONAP on a bare metal machine I have at my workplace. I am trying to install OpenStack on it. Can anyone tell me which OpenStack components need to be configured before I can move ahead with setting up ONAP?

Eric Debeau

Hello Mohit and welcome to ONAP

The full ONAP installation for the Amsterdam release using the Heat template is described here:

https://onap.readthedocs.io/en/amsterdam/guides/onap-developer/settingup/fullonap.html

We need the following OpenStack components: Cinder, Designate, Glance, Heat, Horizon, Keystone, Neutron, Nova
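
As a rough aid, the component list above can be compared against the service types registered in a cloud's Keystone catalog (for example, as shown by `openstack service list`). The mapping below reflects the commonly used service type names and is an assumption about a given deployment, not something taken from the ONAP guide. Horizon is left out because the dashboard is normally not registered in the catalog.

```python
from __future__ import annotations

# Component name -> service types under which it is commonly registered.
REQUIRED_SERVICES = {
    "Cinder": {"volume", "volumev2", "volumev3", "block-storage"},
    "Designate": {"dns"},
    "Glance": {"image"},
    "Heat": {"orchestration"},
    "Keystone": {"identity"},
    "Neutron": {"network"},
    "Nova": {"compute"},
}


def missing_components(available_types: set[str]) -> list[str]:
    """Return required components with no matching service type in the catalog."""
    return sorted(
        name
        for name, types in REQUIRED_SERVICES.items()
        if not (types & available_types)
    )
```

For a catalog containing `identity`, `compute`, `network`, `image`, `volumev2` and `orchestration`, the function reports only `Designate` as missing.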

To deploy OpenStack, you can use various solutions:

There is also a Kubernetes based installation described here:

http://onap.readthedocs.io/en/latest/submodules/oom.git/docs/OOM%20Project%20Description/oom_project_description.html#onap-operations-manager-project

Please also use the onap-discuss list for such exchanges.

Eric