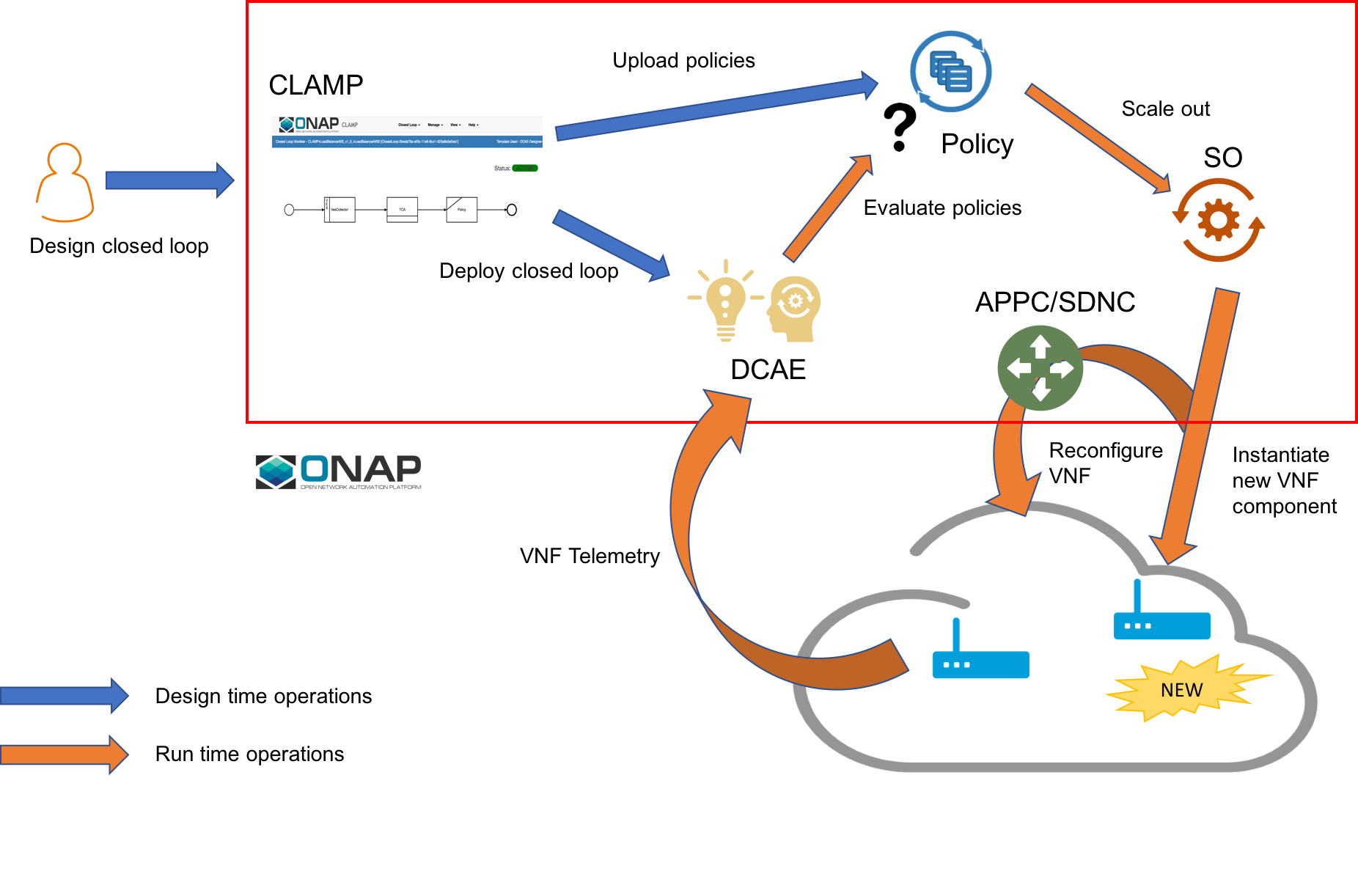

The ONAP Casablanca release delivers platform capabilities that support scaling out resources. The use case has been designed and implemented to take full advantage of existing functionality, using newer APIs and technologies whenever possible. Compared to the previous version delivered for Beijing, which supported only a manual trigger and a single VNF type, the new use case adds:

- A design phase in which users define and deploy policies and closed-loops from CLAMP;

- Closed-loop- and Policy-based automation;

- Guard policies that allow or deny scale out operations based on frequency limits or the maximum number of instances already scaled;

- A new and improved workflow that leverages SO's ability to compose building blocks on the fly;

- Use of Generic Resource APIs for VNF instantiation and lifecycle management (as opposed to the old VNF APIs);

- VNF health check;

- Support for multiple VNF types;

- Support for multiple VNF controllers, i.e. APPC (officially supported) and SDNC (experimental);

- Ability to define lifecycle management configuration from CLAMP (automated trigger) or VID (manual trigger).
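Guard policies are enforced by the ONAP Policy framework, not by user code. To make the two kinds of limits listed above concrete, here is a minimal, hypothetical Python sketch of a guard that combines a frequency limit (at most N operations in a sliding time window) with a cap on the number of scaled instances. The class name, fields and decision logic are illustrative assumptions, not the Policy framework's API or the guard policy format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List


@dataclass
class ScaleOutGuard:
    """Hypothetical guard combining a frequency limit and a max-instance limit.

    Illustrates the idea behind scale out guard policies; it is NOT the
    ONAP Policy framework's implementation or policy schema.
    """
    max_ops_per_window: int   # frequency limit: operations allowed per window
    window: timedelta         # length of the sliding time window
    max_instances: int        # upper bound on VF module instances already scaled
    history: List[datetime] = field(default_factory=list)

    def permit(self, now: datetime, current_instances: int) -> bool:
        # Forget operations that fall outside the sliding window.
        self.history = [t for t in self.history if now - t < self.window]
        if len(self.history) >= self.max_ops_per_window:
            return False  # deny: frequency limit reached
        if current_instances >= self.max_instances:
            return False  # deny: too many instances already scaled
        self.history.append(now)  # permit and record this operation
        return True
```

With `max_ops_per_window=1` and a 10-minute window, a second scale out request five minutes after the first is denied, while one eleven minutes after the first is permitted, provided the instance cap has not been reached.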

This is a different kind of use case for ONAP: it integrates many of the projects to show how they can work together to perform a complex but essential activity.

The use case has been successfully demonstrated against docker images released for Casablanca, using the vLB/vDNS VNFs available in the Demo repository in Gerrit (https://gerrit.onap.org/r/gitweb?p=demo.git;a=tree;f=heat/vLBMS;h=37f803e33c6e1e41c3e7c5b009feeb7e3e1953d0;hb=refs/heads/casablanca).

Scale out with manual trigger

Closed loop-enabled scale out

Known Issues and Resolutions

1) When running closed loop-enabled scale out, the closed loop designed in CLAMP conflicts with the default closed loop defined for the old vLB/vDNS use case

Resolution: Change TCA configuration for the old vLB/vDNS use case

- Connect to Consul: http://<ANY K8S VM IP ADDRESS>:30270 and click on "Key/Value" → "dcae-tca-analytics"

- Change "eventName" in the vLB default policy to something different, for example "vLB" instead of the default value "vLoadBalancer"

- Change "subscriberConsumerGroup" in the TCA configuration to something different, for example "OpenDCAE-c13" instead of the default value "OpenDCAE-c12"

- Click "UPDATE" to upload the new TCA configuration
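The same change can be scripted against Consul's key/value HTTP API (`GET` and `PUT` on `/v1/kv/<key>`). The Python sketch below assumes that port 30270 also serves Consul's HTTP API, and that the `dcae-tca-analytics` value is JSON with `app_preferences.subscriberConsumerGroup` and an `app_preferences.tca_policy` entry (a JSON string or object) holding a `metricsPerEventName` list. Inspect your own value in the Consul UI before relying on these assumptions; the function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict

CONSUL_KEY = "dcae-tca-analytics"  # key named in the steps above


def retarget_tca_config(config: Dict[str, Any], old_event: str, new_event: str,
                        new_group: str) -> Dict[str, Any]:
    """Return a copy of the TCA config with one eventName and the consumer group changed.

    ASSUMPTION: layout is app_preferences.subscriberConsumerGroup and
    app_preferences.tca_policy -> metricsPerEventName[*].eventName.
    """
    cfg = json.loads(json.dumps(config))  # deep copy via a JSON round-trip
    prefs = cfg["app_preferences"]
    prefs["subscriberConsumerGroup"] = new_group
    policy = prefs["tca_policy"]
    is_string = isinstance(policy, str)  # tca_policy may be stored as a JSON string
    policy_obj = json.loads(policy) if is_string else policy
    for metric in policy_obj.get("metricsPerEventName", []):
        if metric.get("eventName") == old_event:
            metric["eventName"] = new_event
    prefs["tca_policy"] = json.dumps(policy_obj) if is_string else policy_obj
    return cfg


def update_consul(base_url: str, old_event: str = "vLoadBalancer",
                  new_event: str = "vLB", new_group: str = "OpenDCAE-c13") -> None:
    """Read, modify and write back the key through Consul's KV HTTP API."""
    url = f"{base_url}/v1/kv/{CONSUL_KEY}"
    with urllib.request.urlopen(url + "?raw") as resp:  # ?raw returns the bare value
        config = json.loads(resp.read())
    new_cfg = retarget_tca_config(config, old_event, new_event, new_group)
    req = urllib.request.Request(url, data=json.dumps(new_cfg).encode(), method="PUT")
    with urllib.request.urlopen(req) as resp:
        resp.read()
```

For example, `update_consul("http://<ANY K8S VM IP ADDRESS>:30270")` applies the example values used in the steps above. Keeping the transformation in a separate pure function makes it easy to compare the old and new configuration before writing it back.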

2) When running closed loop-enabled scale out, the permitAll guard policy conflicts with the scale out guard policy

Resolution: Undeploy the permitAll guard policy

- Connect to the Policy GUI, either through the ONAP Portal (https://portal.api.simpledemo.onap.org:30225/ONAPPORTAL/login.htm) or directly (https://policy.api.simpledemo.onap.org:30219/onap/login.htm)

- If using the ONAP Portal, log in with demo/demo123456!; if accessing the Policy GUI directly, use demo/demo

- Click "Policy" → "Push" on the left panel

- Click the pencil symbol next to "default" in the PDP Groups table

- Select "Decision_AllPermitGuard"

- Click "Remove"

3) When distributing the closed loop from CLAMP to Policy, distribution fails with the error "policy send failed PE300: Invalid template, the template name closedLoopControlName was not found in the dictionary."

Resolution: For proper operation via CLAMP, the rules template needs to be uploaded to Policy. This can be achieved by executing the push-policies.sh script in the PAP pod before creating the Control Loop via CLAMP:

```shell
# Identify the PAP pod
kubectl get pods -n onap -o wide | grep policy | grep pap

# Run push-policies.sh inside the pap container of that pod
kubectl exec -it <pap-pod> -c pap -n onap -- bash -c "/tmp/policy-install/config/push-policies.sh"
```
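If you run this step often, the two commands can be wrapped in a small Python helper. This is a sketch: the function names are hypothetical, the pod lookup mimics the `grep policy | grep pap` filter, and the `-it` flags are dropped on the assumption that push-policies.sh needs no interactive terminal.

```python
import subprocess
from typing import List, Optional

NAMESPACE = "onap"


def find_pap_pod(kubectl_output: str) -> Optional[str]:
    """Mimic `grep policy | grep pap` on `kubectl get pods` output; return the first pod name."""
    for line in kubectl_output.splitlines():
        if "policy" in line and "pap" in line:
            return line.split()[0]  # the first column is the pod name
    return None


def push_policies_cmd(pod: str) -> List[str]:
    """Build the kubectl exec argv that runs push-policies.sh in the pap container."""
    return ["kubectl", "exec", pod, "-c", "pap", "-n", NAMESPACE, "--",
            "bash", "-c", "/tmp/policy-install/config/push-policies.sh"]


def main() -> None:
    out = subprocess.run(["kubectl", "get", "pods", "-n", NAMESPACE, "-o", "wide"],
                         capture_output=True, text=True, check=True).stdout
    pod = find_pap_pod(out)
    if pod is None:
        raise SystemExit("no PAP pod found in namespace " + NAMESPACE)
    subprocess.run(push_policies_cmd(pod), check=True)
```

Call `main()` from a machine where `kubectl` is configured for the ONAP cluster.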

Videos:

These videos document in detail how to run the scale out use case with a manual or automated (i.e., closed loop-enabled) trigger:

Material Used for the Demo

Heat templates for the vLB/vDNS VNFs: vLBMS.zip

DCAE Blueprint: tca_docker_k8s_v4.yaml

SDNC preload for the base VNF: base_preload.txt

SDNC preload of the scaling VF module (must be updated for each VF module): scaleout_preload.txt

Example of SDNC VF module topology to define a JSON path to configuration parameters for scale out: sdnc_resource_example.txt

Configuration parameters for CDT: parameters_vLB.yaml

VNF interface for CDT: Vloadbalancerms..dnsscaling..module-1.xml

AAI API to set Availability Zone:

- CloudOwner is the cloud-owner object in AAI

- RegionOne is the cloud-region-id in AAI

- AZ1 is the name of the Availability Zone

```shell
curl -X PUT \
  https://<ANY K8S VM IP ADDRESS>:30233/aai/v14/cloud-infrastructure/cloud-regions/cloud-region/CloudOwner/RegionOne/availability-zones/availability-zone/AZ1 \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json' \
  -H 'X-FromAppId: AAI' \
  -H 'X-TransactionId: get_aai_subscr' \
  -H 'cache-control: no-cache' \
  -d '{
    "availability-zone-name": "AZ1",
    "hypervisor-type": "hypervisor"
  }'
```
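For scripted setups, the same AAI request can be built in Python. The sketch below only constructs the request so that it can be inspected; the helper name is hypothetical, and it deliberately omits TLS and authentication settings, which depend on your deployment and would have to be supplied before sending it with `urllib.request.urlopen`.

```python
import json
import urllib.request


def az_request(host: str, cloud_owner: str, region: str, az: str,
               hypervisor_type: str = "hypervisor") -> urllib.request.Request:
    """Build (but do not send) the AAI v14 PUT that creates an availability zone."""
    url = (f"https://{host}:30233/aai/v14/cloud-infrastructure/cloud-regions/"
           f"cloud-region/{cloud_owner}/{region}/availability-zones/availability-zone/{az}")
    body = {"availability-zone-name": az, "hypervisor-type": hypervisor_type}
    headers = {
        "Accept": "application/json",
        "Content-Type": "application/json",
        "X-FromAppId": "AAI",
        "X-TransactionId": "get_aai_subscr",
    }
    return urllib.request.Request(url, data=json.dumps(body).encode(),
                                  headers=headers, method="PUT")
```

Parameterizing the cloud owner, region and zone name makes it straightforward to register the same zone in several cloud regions.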

VID API to enable scale out for a given VNF:

- UUID is the VNF model version ID in the CSAR file

- invariantUUID is the VNF model invariant ID in the CSAR file

```shell
curl -X POST \
  https://<ANY K8S VM IP ADDRESS>:30200/vid/change-management/vnf_workflow_relation \
  -H 'Accept-Encoding: gzip, deflate' \
  -H 'Content-Type: application/json' \
  -H 'cache-control: no-cache' \
  -d '{
    "workflowsDetails": [
      {
        "workflowName": "VNF Scale Out",
        "vnfDetails": {
          "UUID": "d5c2aff0-d2bc-401e-8277-fd90c1351b0e",
          "invariantUUID": "cdc1ac36-a3c9-41e8-872b-10d02a6eded1"
        }
      }
    ]
  }'
```
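Because `workflowsDetails` is a list, a script may be able to register the scale out workflow for several VNF models in one call, which is convenient given the support for multiple VNF types. The Python sketch below builds that body from (model version ID, model invariant ID) pairs taken from each CSAR. The helper names are hypothetical, and sending more than one entry per request is an assumption based on the shape of the payload rather than documented behavior.

```python
from typing import Any, Dict, List, Tuple


def vid_url(host: str) -> str:
    """URL of the VID endpoint used in the curl example above."""
    return f"https://{host}:30200/vid/change-management/vnf_workflow_relation"


def workflow_relations(models: List[Tuple[str, str]],
                       workflow: str = "VNF Scale Out") -> Dict[str, Any]:
    """One workflowsDetails entry per (UUID = model version ID, invariantUUID) pair."""
    return {
        "workflowsDetails": [
            {"workflowName": workflow,
             "vnfDetails": {"UUID": uuid, "invariantUUID": invariant}}
            for uuid, invariant in models
        ]
    }
```

Serialized with `json.dumps`, a single pair reproduces the body of the curl example.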

Configuration parameters:

Define a JSON path to "vdns_private_ip_0", "vdns_private_ip_1", and "enabled". Use the following formats for VID and Policy:

VID:

```json
[{"ip-addr":"$.vf-module-topology.vf-module-parameters.param[10].value","oam-ip-addr":"$.vf-module-topology.vf-module-parameters.param[15].value","enabled":"$.vf-module-topology.vf-module-parameters.param[22].value"}]
```

POLICY:

```yaml
requestParameters: '{"usePreload":true,"userParams":[]}'
configurationParameters: '[{"ip-addr":"$.vf-module-topology.vf-module-parameters.param[10].value","oam-ip-addr":"$.vf-module-topology.vf-module-parameters.param[15].value","enabled":"$.vf-module-topology.vf-module-parameters.param[22].value"}]'
```
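Because these paths select parameters by position (`param[10]`, `param[15]`, `param[22]`), it is worth checking what each index resolves to in your own SDNC VF module topology (see sdnc_resource_example.txt). The following Python sketch resolves the restricted form of JSONPath used here (dotted keys plus list indices). It is an illustrative subset, not the JSONPath implementation used by the ONAP components, and the function names are hypothetical.

```python
import json
import re
from typing import Any, Dict

# One path segment, e.g. "vf-module-topology" or "param[10]".
SEGMENT = re.compile(r"^([^\[\]]+)(?:\[(\d+)\])?$")


def resolve(doc: Any, path: str) -> Any:
    """Resolve a simple JSONPath like $.a.b.param[10].value (keys and indices only)."""
    if not path.startswith("$."):
        raise ValueError(f"unsupported path: {path}")
    node = doc
    for part in path[2:].split("."):
        m = SEGMENT.match(part)
        if m is None:
            raise ValueError(f"unsupported segment: {part}")
        key, index = m.group(1), m.group(2)
        node = node[key]
        if index is not None:
            node = node[int(index)]
    return node


def resolve_config(doc: Any, mapping_json: str) -> Dict[str, Any]:
    """Apply a configuration-parameters mapping (JSON list of {name: path}) to a topology."""
    result: Dict[str, Any] = {}
    for entry in json.loads(mapping_json):
        for name, path in entry.items():
            result[name] = resolve(doc, path)
    return result
```

If a resolved value is not the IP address or flag you expect, the parameter order in your topology differs from the one these indices were written for, and the indices must be adjusted.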

6 Comments

Jorge Hernandez

For proper operation via CLAMP, the rules template needs to be uploaded to Policy. This can be achieved by executing the push-policies.sh script before creating the Control Loop via CLAMP:

samir kumar

Marco Platania Jorge Hernandez

Good Folks,

I was following the steps and everything was in sync except at distribution time: CLAMP reports deployment errors related to a CSAR exception.

Please share any advice. Also, how can we run the vFirewall use case? Is there a source for the DCAE blueprint, etc., for Casablanca?

Marco Platania

Which kind of CLAMP errors did you see? If CLAMP complains about missing tables, that is an error we have seen in the past: for some reason, a table sometimes doesn't get created, and you need to rebuild CLAMP. The vFirewall use case uses a different procedure, so this wiki page doesn't apply to it (it will later, in Dublin). You can find videos about the vFW here: Running the ONAP Demos. They are a little dated but mostly good.

samir kumar

Marco Platania Thanks a lot, Marco. I have shut down the instance today; I will share the logs again.

samir kumar

Marco Platania

Please see below; any help will be appreciated.

I followed the steps exactly as explained in the video.

AAI deployment error

CLAMP error: CSAR installation error

Marco Platania

You have a problem with distribution in general, not only CLAMP, so you may want to post this to the onap-discuss mailing list. You can find larger support there. Can you please attach the logs of AAI model loader and SO sdc-controller in your email?