This long-winded page name will revert to "Running the ONAP vFirewall Demo...." when we are finished (before 9 Dec), and the page will be moved out of the wiki root

Please join and post "validated" actions/config/results - but do not move or edit this page until we get a complete vFW run, ideally before the 4 Dec KubeCon conference and at worst before the 11 Dec ONAP conference - thank you

Under construction - this page is a consolidation of all details in getting the vFirewall running over the next 2 weeks in prep of anyone that would like to demo it for the F2F in Dec.

ADD content ONLY when verified - with evidence (screen-cap, JSON output etc.). DO paste any questions and unverified config/actions in the comment section at the end - for the team to verify.

HEAT Daily meeting at 1200 EDT noon Nov 27 to 8 Dec 2017 - https://zoom.us/j/7939937123 see schedule at https://lists.onap.org/pipermail/onap-discuss/2017-November/006483.html

Statement of Work

Ideally we provide this page as the draft that will go into ReadTheDocs.io - where this page gets deleted and referenced there.

The details needed to get a running vFirewall up "Step by Step" are currently spread across 3 or more distinct pages, email threads, presentations, phone calls and meetings.

We would like to get to the point where we were before Aug 2017 where an individual with an Openstack environment (OOM as well now) - could follow each instruction point (action - and expected/documented result/output) and end up with our current minimal sanity usecase - the vFirewall

If you have any details on configuration of getting up the vFirewall post them to the comments section and it will be tested and incorporated

Ideally any action added to this page itself - is fully tested with resulting output (text/screencap) - pasted as a reference.

JIRAs: OOM-459 for OOM and INT-106 for HEAT

Output

1- This set of instructions below - to go from an empty OOM host or OpenStack lab - all the way to closed loop running.

2 - A set of videos - the vFirewall from an already deployed OOM and HEAT deployment - see the reference videos from Running the ONAP Demos#ONAPDeploymentVideos and INT-333

3- Secondary videos on bringing up OOM and HEAT deployments

Running the vFirewall Demo

TODO: check for JIRA on appc demo.robot working : 20171128 (worked in 1.0.0)

Prerequisites

| Artifact | Location | Notes |

|---|---|---|

| private key (ssh-add) | obrienbiometrics:onap_public michaelobrien$ ssh-keygen SHA256:YzLggI8nGXna0Ssx0DMpLvZKSPTGZJ1mXwj2XZ+c8Gg michaelobrien@obrienbiometrics.local - then paste onap_public.pub into the pub_key: sections of all the onap_openstack and vFW env files | |

| openstack yaml and env | https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/ONAP/1.1.0-SNAPSHOT/ demo/heat/onap/onap-openstack.* | |

| vFirewall yaml and env (unverified) | We will use the split vFWCL (vFW closed loop) in demo/heat/vFWCL: demo/heat/vFWCL/vFWPKG/base_vpkg.env and demo/heat/vFWCL/vFWSNK/base_vfw.env with image_name: ubuntu-14-04-cloud-amd64 flavor_name: m1.medium public_net_id: 971040b2-7059-49dc-b220-4fab50cb2ad4 cloud_env: openstack onap_private_net_id: oam_onap_6Gve onap_private_subnet_id: oam_onap_6Gve - not the older https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/vFW/1.1.0-SNAPSHOT/ or the deprecated https://nexus.onap.org/content/sites/raw/org.openecomp.demo/heat/vFW/1.1.0-SNAPSHOT/ | |

| demo/heat/vFWCL/vFWPKG/base_vpkg.env | ||

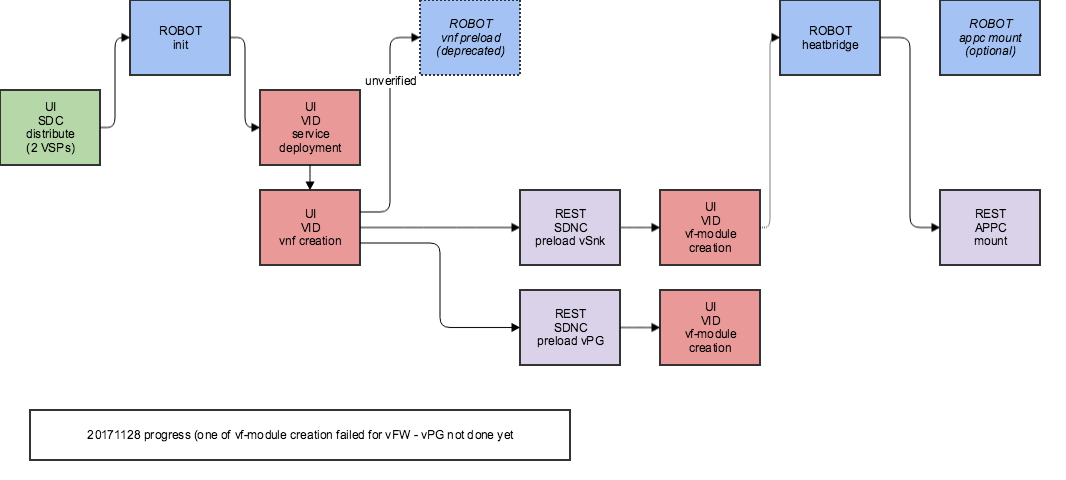

vFirewall Tasks

Ideally we have an automated one-click vFW deployment - in the works -

| T# | Task | Action Rest URL+JSON payload | Result JSON / Text / Screencap | Artifacts Link or attach file | Env OOM HEAT or both | Verify Read | Last run | Notes |

|---|---|---|---|---|---|---|---|---|

| Before robot init (init_customer and distribute) | ||||||||

| cloud region PUT to AAI | From Postman: PUT /aai/v11/cloud-infrastructure/cloud-regions/cloud-region/Openstack/RegionOne HTTP/1.1 { | 201 Created | OOM | GET /aai/v11/cloud-infrastructure/cloud-regions/cloud-region/Openstack/RegionOne HTTP/1.1 200 OK { | 20171126 | |||

| 1 | TBD - cloud region PUT to AAI | Verify: cloud-region is not set by robot ./demo.sh init (only the customer is) - we need to run the REST call for cloud region ourselves. Watch for intermittent issues bringing up the aai1 containers in AAI-513 | HEAT | TBD 201711xx | ||||

| TBD Customer creation | Note: robot ./demo.sh | |||||||

| TBD SDC Distribution | ||||||||

| TBD VID Service creation | ||||||||

| TBD VID Service Instance deployment | ||||||||

| TBD VID Create VNF | ||||||||

| TBD VNF preload | curl here | |||||||

| SDNC VNF Preload (Integration-Jenkins lab) | ||||||||

| TBD VID Create VF-Module | ||||||||

| TBD Robot Heatbridge | ||||||||

| TBD APPC mountpoint (Robot or REST) | ||||||||

| APPC mountpoint for vFW closed-loop (Integration-Jenkins lab) |
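The cloud region PUT listed in the task table can be prepared outside of Postman. The following is only a minimal sketch: it builds the request without sending it. The host name, the function name and the two body fields are assumptions for illustration (the JSON payload on this page is truncated, so the real body must come from your own verified run); only the /aai/v11/... path is taken from the table above.

```python
import json
import urllib.request


def build_cloud_region_put(base_url, cloud_owner, region_id, body):
    """Return an unsent PUT request for the AAI v11 cloud-region resource."""
    path = (
        "/aai/v11/cloud-infrastructure/cloud-regions/cloud-region/"
        f"{cloud_owner}/{region_id}"
    )
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json", "Accept": "application/json"},
    )


# Hypothetical host and placeholder body - replace with your verified payload.
placeholder_body = {"cloud-owner": "Openstack", "cloud-region-id": "RegionOne"}
req = build_cloud_region_put(
    "https://aai.example.invalid:8443", "Openstack", "RegionOne", placeholder_body
)
print(req.get_method(), req.full_url)
```

Authentication and any AAI-specific headers are deliberately left out here, since they are not documented on this page yet.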

Verifying the vFirewall

Original/Ongoing Doc References

running vFW Demo on ONAP Amsterdam Release

Clearwater vIMS Onboarding and Instantiation

Integration Test - could not find vFW content here

ONAP master branch Stabilization

OOM-1

INT-106

INT-284

List of ONAP Implementations under Test by Environment

Please add yourself to the list so we can target EPIC work based on environment affinity

| Environment | Branch | Deployer | Contacts | vFW status | Notes |

|---|---|---|---|---|---|

| Intel Openlab | master | HEAT | none | cloud: http://10.12.25.2/auth/login/?next=/project/instances/ servers Starting up (20171123) - not ready yet | |

| Intel Openlab | master | OOM Kubernetes | none | cloud: http://10.12.25.2/auth/login/?next=/project/instances/ server: 10.12.25.117 key: openlab_oom_key (pass by mail) (non-DCAE ONAP components only) partial 16g only until quota increased or we cluster 4 - see OOM-461 | |

| Intel Openlab | release-1.1.0 | OOM Kubernetes | none | cloud: http://10.12.25.2/auth/login/?next=/project/instances/ server: 10.12.25.119 key: openlab_oom_key (pass by mail) watch INT-344 | |

| Rackspace | master | OOM Kubernetes | none | (non-DCAE ONAP components only) DCAEGEN2 not tested yet for R1 Running CD jobs hourly | |

| Amazon AWS EC2 | master | OOM Kubernetes | none | (non-DCAE ONAP components only) - spot node terminated | |

| Amazon AWS ECS | OOM Kubernetes | pending test | n/a | (non-DCAE ONAP components only) - node terminated | |

| Google GCE | master | OOM Kubernetes | (non-DCAE ONAP components only) - node closed | ||

| Google GCE CaaS | OOM Kubernetes | pending test | n/a | (non-DCAE ONAP components only) | |

| Rackspace | HEAT | not supported yet | n/a | ||

| Alibaba VM | OOM Kubernetes | none | not tested yet |

Continuous Deployment References

| Tech | Servers | Details |

|---|---|---|

| HEAT | ||

| Kubernetes | Jobs (AWS) Analytics (AWS) CD servers (AWS) dev.onap.info | OOM R2 Master (Beijing) http://jenkins.onap.info/job/oom-cd-release-110-branch/ OOM R1 (Amsterdam) |

Formal Recordings

Put all daily and ongoing vFW formal run videos here - in the lead-up to the 2 conferences.

| Recording details | Recording embedded (currently limited to 30 min for the 100mb limit) or link | |

|---|---|---|

| ONAP installation of OOM from clean VM to Healthcheck | ||

| OOM vFirewall SDC distribution to VF-Module creation | pending | |

| ONAP installation of HEAT from empty OPENSTACK to Healthcheck | Review the 20171128 videos from Marco via https://lists.onap.org/pipermail/onap-discuss/2017-November/006572.html on https://wiki.onap.org/display/DW/Running+the+ONAP+Demos (INT-333) | |

| HEAT vFirewall SDC distribution to VF-Module creation | pending |

Daily Working Recordings

| Date | Video | Notes / TODO |

|---|---|---|

| 20171127 | HEAT: get back to the vnf preload - continue to the 3 vFW VMs coming up. todo: use the split template (abandon the single VNF). todo: stop using robot for all except customer creation - essentially everything is REST and VID. todo: fix DNS of the onap env file. OOM: go over master status, get a 1.1.0 branch up separately. CHAT: From Brian to Everyone: (12:06) | |

| 20171128 | HEAT: error on vf-module creation (MSO Heat issue) | |

| 20171129 OOM | OOM OOM | |

| 20171129 HEAT | HEAT |

Generated JIRAs

OOM-461

AAI-513

INT-346

TODO: JIRA: Medium: SDNC preload - fix confusion: vnf-name=VF-Module, generic-vnf-name=VNF

Access and Deployment Configuration

Openlab VNC and CLI

The following is missing some sections and is a bit out of date (v2 deprecated in favor of v3) - Integration Testing Schedule, 10-09-2017

| Get an openlab account - Integration / Developer Lab Access | Stephen Gooch provides excellent/fast service - raise a JIRA like OPENLABS-75 |

| Install openVPN - Using Lab POD-ONAP-01 Environment. For OSX both Viscosity and TunnelBlick work fine | |

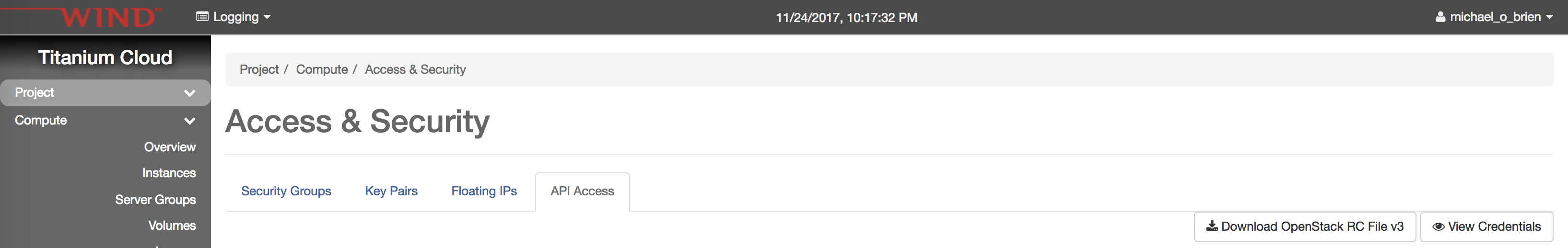

| Login to Openstack | |

| Install openstack command line tools | Tutorial: Configuring and Starting Up the Base ONAP Stack#InstallPythonvirtualenvTools(optional,butrecommended) |

| get your v3 rc file | |

| verify your openstack cli access (or just use the jumpbox) | obrienbiometrics:aws michaelobrien$ source logging-openrc.sh obrienbiometrics:aws michaelobrien$ openstack server list +--------------------------------------+---------+--------+-------------------------------+------------+ | ID | Name | Status | Networks | Image Name | +--------------------------------------+---------+--------+-------------------------------+------------+ | 1ed28213-62dd-4ef6-bdde-6307e0b42c8c | jenkins | ACTIVE | admin-private-mgmt=10.10.2.34 | | +--------------------------------------+---------+--------+-------------------------------+------------+ |

| get 15 elastic IPs | You may need to release unused IPs from other tenants - as we have 4 pools of 50 |

| fill in your stack env parameters | onap_openstack.env public_net_id: 971040b2-7059-49dc-b220-4fab50cb2ad4 public_net_name: external ubuntu_1404_image: ubuntu-14-04-cloud-amd64 ubuntu_1604_image: ubuntu-16-04-cloud-amd64 flavor_small: m1.small flavor_medium: m1.medium flavor_large: m1.large flavor_xlarge: m1.large flavor_xxlarge: m1.xlarge vm_base_name: onap key_name: onap_key pub_key: ssh-rsa AAAAobrienbiometrics nexus_repo: https://nexus.onap.org/content/sites/raw nexus_docker_repo: nexus3.onap.org:10001 nexus_username: docker nexus_password: docker dmaap_topic: AUTO artifacts_version: 1.1.0-SNAPSHOT openstack_tenant_id: a85a07a5f34d4yyyyyyy openstack_tenant_name: Logyyyyyyy openstack_username: michaelyyyyyy openstack_api_key: Wyyyyyyy openstack_auth_method: password openstack_region: RegionOne horizon_url: http://10.12.25.2:5000/v3 keystone_url: http://10.12.25.2:5000 dns_list: ["10.12.25.5", "8.8.8.8"] external_dns: 8.8.8.8 dns_forwarder: 110.12.25.5 oam_network_cidr: 10.0.0.0/16 dnsaas_config_enabled: PUT WHETHER TO USE PROXYED DESIGNATE dnsaas_region: PUT THE DESIGNATE PROVIDING OPENSTACK'S REGION HERE dnsaas_keystone_url: PUT THE DESIGNATE PROVIDING OPENSTACK'S KEYSTONE URL HERE dnsaas_tenant_name: PUT THE TENANT NAME IN THE DESIGNATE PROVIDING OPENSTACK HERE (FOR R1 USE THE SAME AS openstack_tenant_name) dnsaas_username: PUT THE DESIGNATE PROVIDING OPENSTACK'S USERNAME HERE dnsaas_password: PUT THE DESIGNATE PROVIDING OPENSTACK'S PASSWORD HERE dcae_keystone_url: PUT THE MULTIVIM PROVIDED KEYSTONE API URL HERE dcae_centos_7_image: PUT THE CENTOS7 VM IMAGE NAME HERE FOR DCAE LAUNCHED CENTOS7 VM dcae_domain: PUT THE NAME OF DOMAIN THAT DCAE VMS REGISTER UNDER dcae_public_key: PUT THE PUBLIC KEY OF A KEYPAIR HERE TO BE USED BETWEEN DCAE LAUNCHED VMS dcae_private_key: PUT THE SECRET KEY OF A KEYPAIR HERE TO BE USED BETWEEN DCAE LAUNCHED VMS |

| Run the HEAT stack | obrienbiometrics:openlab michaelobrien$ openstack stack create -t onap_openstack.yaml -e onap_openstack.env ONAP1125_6 | id | 9b026354-c071-4e31-8611-11fef2f408f5 | | stack_name | ONAP1125_6 | | description | Heat template to install ONAP components | | creation_time | 2017-11-26T02:16:57Z | | updated_time | 2017-11-26T02:16:57Z | | stack_status | CREATE_IN_PROGRESS | | stack_status_reason | Stack CREATE started | obrienbiometrics:openlab michaelobrien$ openstack stack list | 9b026354-c071-4e31-8611-11fef2f408f5 | ONAP1125_6 | CREATE_IN_PROGRESS | 2017-11-26T02:16:57Z | 2017-11-26T02:16:57Z |

| Wait for deployment | DCAE and several multi-service VMs down obrienbiometrics:openlab michaelobrien$ openstack server list | db5388c0-9fa5-4359-ad21-689dd0ce8955 | onap-multi-service | ERROR | | ubuntu-16-04-cloud-amd64 | | d712dce1-d39d-4c6e-8d21-d9da9aa40ea1 | onap-dcae-bootstrap | ACTIVE | oam_onap_awsf=10.0.4.1, 10.12.5.197 | ubuntu-16-04-cloud-amd64 | | 4724fa8e-e10b-46cb-a81d-e7a9a7df041e | onap-aai-inst1 | ACTIVE | oam_onap_awsf=10.0.1.1, 10.12.5.118 | ubuntu-14-04-cloud-amd64 | | bc4ef1f3-422d-4e66-a21b-8c5a3d206938 | onap-portal | ACTIVE | oam_onap_awsf=10.0.9.1, 10.12.5.241 | ubuntu-14-04-cloud-amd64 | | 0f9edb8e-a379-4ab1-a6b1-c24763b69ecd | onap-policy | ACTIVE | oam_onap_awsf=10.0.6.1, 10.12.5.17 | ubuntu-14-04-cloud-amd64 | | bd1f29e3-e05e-4570-9f41-94af83aec7d6 | onap-aai-inst2 | ACTIVE | oam_onap_awsf=10.0.1.2, 10.12.5.252 | ubuntu-14-04-cloud-amd64 | | 57e90b08-d69e-4770-a298-97f64387e60d | onap-dns-server | ACTIVE | oam_onap_awsf=10.0.100.1, 10.12.5.237 | ubuntu-14-04-cloud-amd64 | | e9dd8800-0f77-4658-90b0-db98f4689485 | onap-message-router | ACTIVE | oam_onap_awsf=10.0.11.1, 10.12.5.234 | ubuntu-14-04-cloud-amd64 | | af6120d8-419a-45f9-ae32-b077b9ace407 | onap-sdnc | ACTIVE | oam_onap_awsf=10.0.7.1, 10.12.5.226 | ubuntu-14-04-cloud-amd64 | | b6daf774-dc6a-4c9b-aaa3-ca8fc5734ac3 | onap-clamp | ACTIVE | oam_onap_awsf=10.0.12.1, 10.12.5.128 | ubuntu-16-04-cloud-amd64 | | 31524fcb-d1b2-427b-b0bf-29e8fc65fded | onap-sdc | ACTIVE | oam_onap_awsf=10.0.3.1, 10.12.5.92 | ubuntu-16-04-cloud-amd64 | | 31f8c1e7-a7e7-417d-a9df-cc5d65d7777c | onap-vid | ACTIVE | oam_onap_awsf=10.0.8.1, 10.12.5.218 | ubuntu-14-04-cloud-amd64 | | 482befc8-2a6a-4da7-8e05-8f8b294f80d2 | onap-robot | ACTIVE | oam_onap_awsf=10.0.10.1, 10.12.6.21 | ubuntu-16-04-cloud-amd64 | | 8ea76387-aadf-46da-8257-5e9c2f80fa48 | onap-appc | ACTIVE | oam_onap_awsf=10.0.2.1, 10.12.5.222 | ubuntu-14-04-cloud-amd64 | | 43b90061-885f-454b-8830-9da3338fca56 | onap-so | ACTIVE | oam_onap_awsf=10.0.5.1, 10.12.5.230 | ubuntu-16-04-cloud-amd64 | |

| configure local vi /etc/hosts | 10.12.5.214 policy.api.simpledemo.onap.org 10.12.5.118 portal.api.simpledemo.onap.org 10.12.5.141 sdc.api.simpledemo.onap.org 10.12.5.92 vid.api.simpledemo.onap.org |

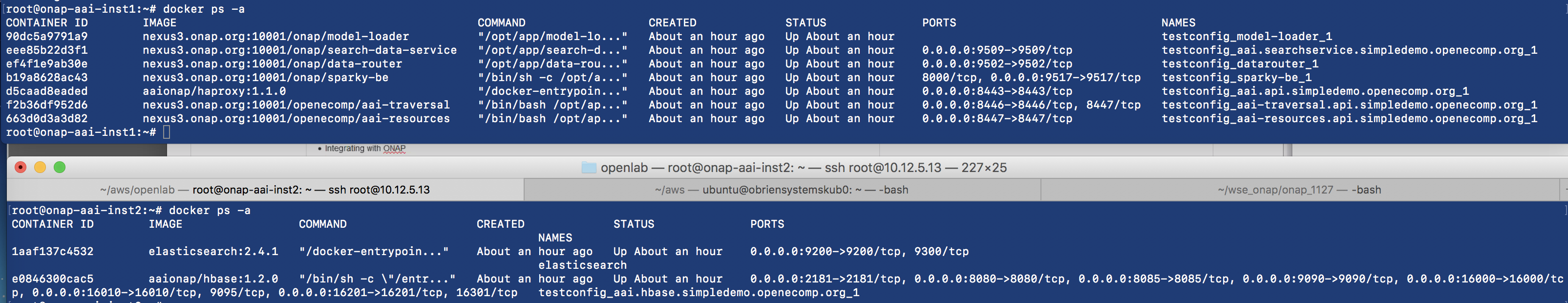

| Verify AAI_VM1 DNS | Intermittently AAI1 does not fully initialize: docker will get installed and the test-config dir will get pulled - but the 6 docker containers in the compose file will not be up. Log in to aai immediately after stack startup and add the following before test-config: root@onap-aai-inst1:~# cat /etc/hosts 10.0.1.2 aai.hbase.simpledemo.openecomp.org 10.12.5.213 aai.hbase.simpledemo.openecomp.org |

| Spot check containers | |

check robot health Core components are PASS so lets continue with the vFW | root@onap-robot:/opt# ./ete.sh health ------------------------------------------------------------------------------ Basic SDNGC Health Check | PASS | ------------------------------------------------------------------------------ Basic A&AI Health Check [ WARN ] Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.VerifiedHTTPSConnection object at 0x7fc24a256d50>: Failed to establish a new connection: [Errno 111] Connection refused',)': /aai/util/echo?action=long [ WARN ] Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.VerifiedHTTPSConnection object at 0x7fc24a256d10>: Failed to establish a new connection: [Errno 111] Connection refused',)': /aai/util/echo?action=long [ WARN ] Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.VerifiedHTTPSConnection object at 0x7fc24a23b690>: Failed to establish a new connection: [Errno 111] Connection refused',)': /aai/util/echo?action=long | FAIL | ConnectionError: HTTPSConnectionPool(host='10.0.1.1', port=8443): Max retries exceeded with url: /aai/util/echo?action=long (Caused by NewConnectionError('<urllib3.connection.VerifiedHTTPSConnection object at 0x7fc24a172710>: Failed to establish a new connection: [Errno 111] Connection refused',)) ------------------------------------------------------------------------------ Basic Policy Health Check | PASS | ------------------------------------------------------------------------------ Basic MSO Health Check | PASS | ------------------------------------------------------------------------------ Basic ASDC Health Check | PASS | ------------------------------------------------------------------------------ Basic APPC Health 
Check | PASS | ------------------------------------------------------------------------------ Basic Portal Health Check | PASS | ------------------------------------------------------------------------------ Basic Message Router Health Check | PASS | ------------------------------------------------------------------------------ Basic VID Health Check | PASS | ------------------------------------------------------------------------------ |
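When the ete.sh health output is long (as with the A&AI retries above) it helps to reduce it to a pass/fail summary per check. The following is a rough sketch only, assuming the output keeps the "Basic ... Health Check ... | PASS |" shape pasted above; the function name and the shortened sample text are made up for illustration.

```python
import re

# A check name, anything in between (warnings, retries), then the verdict cell.
CHECK_RE = re.compile(
    r"(Basic .+? Health Check).*?\|\s*(PASS|FAIL)\s*\|", re.DOTALL
)


def summarize_health(output):
    """Map each 'Basic ... Health Check' name to PASS or FAIL."""
    return {name: status for name, status in CHECK_RE.findall(output)}


# Shortened sample in the same shape as the robot output pasted above.
sample = (
    "Basic SDNGC Health Check | PASS | "
    "------ "
    "Basic A&AI Health Check [ WARN ] Retrying after connection broken "
    "| FAIL | ConnectionError: Max retries exceeded "
    "------ "
    "Basic Policy Health Check | PASS | "
)

summary = summarize_health(sample)
failed = [name for name, status in summary.items() if status == "FAIL"]
print(failed)
```

In the run above this would single out the A&AI check, which matches the AAI1 startup issue tracked in AAI-513.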

Design/Runtime Issues

20171122: Do we run the older robot preload or do we do the SDNC rest PUT manually

Older Tutorial: Creating a Service Instance from a Design Model#RunRobotdemo.shpreloadofDemoModule

20171122: Do we use the older June vFW zip (yaml + env) or must we use a new split template

Investigate Brian's comment on running vFW Demo on ONAP Amsterdam Release - "If you want to do closed loop for vFW there is a new two VNF service for Amsterdam (vFWCL - it is in the demo repo) that separates the traffic generator into a second VNF/Heat stack so that Policy can associate the event on the LB with the VNF to be controlled (the traffic generator) through APPC. Contact Pam and Marco for details."

INT-342

20171128: we are using the split vFWCL version

20171122: Do we run the older robot appc mountpoint or do we do the APPC rest PUT manually

20171125: Do we need R1 components to run the vFirewall like MultiVIM

There was a question about this from several developers - specifically is MSO wrapped now - or can we run with a minimal set of VM's to run the vFW.

INT-346

20171125: Workaround for intermittent AAI-vm1 failure in HEAT

https://lists.onap.org/pipermail/onap-discuss/2017-November/006508.html

AAI-513

For now my internal DNS was not working - AAI1 did not see AAI2 - thanks Venkata - hardcoded the following in aai1 /etc/hosts

root@onap-aai-inst1:~# cat /etc/hosts 10.0.1.2 aai.hbase.simpledemo.openecomp.org 10.12.5.213 aai.hbase.simpledemo.openecomp.org root@onap-aai-inst1:/opt/test-config# docker ps CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES 603e85af586f nexus3.onap.org:10001/onap/model-loader "/opt/app/model-lo..." About a minute ago Up About a minute testconfig_model-loader_1 9826995b7ad5 nexus3.onap.org:10001/onap/data-router "/opt/app/data-rou..." About a minute ago Up About a minute 0.0.0.0:9502->9502/tcp testconfig_datarouter_1 19dd8614b767 nexus3.onap.org:10001/onap/search-data-service "/opt/app/search-d..." About a minute ago Up About a minute 0.0.0.0:9509->9509/tcp testconfig_aai.searchservice.simpledemo.openecomp.org_1 89b93577733f nexus3.onap.org:10001/onap/sparky-be "/bin/sh -c /opt/a..." About a minute ago Up About a minute 8000/tcp, 0.0.0.0:9517->9517/tcp testconfig_sparky-be_1 c13e604e1fdc aaionap/haproxy:1.1.0 "/docker-entrypoin..." About a minute ago Up About a minute 0.0.0.0:8443->8443/tcp testconfig_aai.api.simpledemo.openecomp.org_1 00aa79860bd5 nexus3.onap.org:10001/openecomp/aai-traversal "/bin/bash /opt/ap..." 4 minutes ago Up 4 minutes 0.0.0.0:8446->8446/tcp, 8447/tcp testconfig_aai-traversal.api.simpledemo.openecomp.org_1 54747c3594fc nexus3.onap.org:10001/openecomp/aai-resources "/bin/bash /opt/ap..." 7 minutes ago Up 7 minutes 0.0.0.0:8447->8447/tcp testconfig_aai-resources.api.simpledemo.openecomp.org_1

oot@onap-aai-inst1:~# docker ps CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES root@onap-aai-inst1:/opt# ./aai_vm_init.sh Waiting for 'testconfig_aai-resources.api.simpledemo.openecomp.org_1' deployment to finish ... Waiting for 'testconfig_aai-resources.api.simpledemo.openecomp.org_1' deployment to finish ... ERROR: testconfig_aai-resources.api.simpledemo.openecomp.org_1 deployment failed root@onap-aai-inst1:/opt# docker ps -a CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES 1f1476cbd6f5 nexus3.onap.org:10001/openecomp/aai-resources "/bin/bash /opt/ap..." 14 minutes ago Up 14 minutes 0.0.0.0:8447->8447/tcp testconfig_aai-resources.api.simpledemo.openecomp.org_1 root@onap-aai-inst1:/opt# docker logs -f testconfig_aai-resources.api.simpledemo.openecomp.org_1 aai.hbase.simpledemo.openecomp.org: forward host lookup failed: Unknown host Waiting for hbase to be up FIX: reboot and add /etc/hosts entry right after startup before or after aai_install.sh but before test-config/deploy_vm1.sh root@onap-aai-inst1:~# cat /etc/hosts 10.0.1.2 aai.hbase.simpledemo.openecomp.org 10.12.5.213 aai.hbase.simpledemo.openecomp.org root@onap-aai-inst2:~# docker ps -a CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES 1aaf137c4532 elasticsearch:2.4.1 "/docker-entrypoin..." About an hour ago Up About an hour 0.0.0.0:9200->9200/tcp, 9300/tcp elasticsearch e0846300cac5 aaionap/hbase:1.2.0 "/bin/sh -c \"/entr..." About an hour ago Up About an hour 0.0.0.0:2181->2181/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:8085->8085/tcp, 0.0.0.0:9090->9090/tcp, 0.0.0.0:16000->16000/tcp, 0.0.0.0:16010->16010/tcp, 9095/tcp, 0.0.0.0:16201->16201/tcp, 16301/tcp testconfig_aai.hbase.simpledemo.openecomp.org_1 root@onap-aai-inst1:~# docker ps -a CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES 90dc5a9791a9 nexus3.onap.org:10001/onap/model-loader "/opt/app/model-lo..." 
36 minutes ago Up 35 minutes testconfig_model-loader_1 eee85b22d3f1 nexus3.onap.org:10001/onap/search-data-service "/opt/app/search-d..." 36 minutes ago Up 35 minutes 0.0.0.0:9509->9509/tcp testconfig_aai.searchservice.simpledemo.openecomp.org_1 ef4f1e9ab30e nexus3.onap.org:10001/onap/data-router "/opt/app/data-rou..." 36 minutes ago Up 35 minutes 0.0.0.0:9502->9502/tcp testconfig_datarouter_1 b19a8628ac43 nexus3.onap.org:10001/onap/sparky-be "/bin/sh -c /opt/a..." 36 minutes ago Up 36 minutes 8000/tcp, 0.0.0.0:9517->9517/tcp testconfig_sparky-be_1 d5caad8eaded aaionap/haproxy:1.1.0 "/docker-entrypoin..." 36 minutes ago Up 36 minutes 0.0.0.0:8443->8443/tcp testconfig_aai.api.simpledemo.openecomp.org_1 f2b36df952d6 nexus3.onap.org:10001/openecomp/aai-traversal "/bin/bash /opt/ap..." 38 minutes ago Up 38 minutes 0.0.0.0:8446->8446/tcp, 8447/tcp testconfig_aai-traversal.api.simpledemo.openecomp.org_1 663d0d3a3d82 nexus3.onap.org:10001/openecomp/aai-resources "/bin/bash /opt/ap..." 40 minutes ago Up 40 minutes 0.0.0.0:8447->8447/tcp testconfig_aai-resources.api.simpledemo.openecomp.org_1

Still need to verify the DNS setting for the other VMs
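To check the same workaround on the other VMs, a small script can report which expected /etc/hosts entries are missing. This is a sketch under assumptions: the function name is made up, the sample file content is invented, and the only required entry shown is the aai.hbase one from the workaround above.

```python
def missing_host_entries(hosts_text, required):
    """Return the (ip, hostname) pairs from `required` absent in hosts_text."""
    present = set()
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, split on spaces
        if len(fields) >= 2:
            ip, names = fields[0], fields[1:]
            present.update((ip, name) for name in names)
    return [pair for pair in required if pair not in present]


# Entry taken from the aai1 workaround above.
required = [("10.0.1.2", "aai.hbase.simpledemo.openecomp.org")]

before = "127.0.0.1 localhost\n"
after = before + "10.0.1.2 aai.hbase.simpledemo.openecomp.org\n"

print(missing_host_entries(before, required))
print(missing_host_entries(after, required))
```

On a real VM the text would come from reading /etc/hosts; the sketch only reports and does not modify the file.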

20171127: Running Heatbridge from robot

20171127: key management in the single/split vFW

POLICY-409

20171127: Which template is supported - vFW old, new-split or both

Use the newer split one in vFWCL as documented in POLICY-409 since 4th Nov.

20171128: VMware VIO Requirements for vFW Deployment

TODO: expand on requirement of MultiCloud for VF-Module creation on VMware VIO.

At the final step of VF-Module creation, SO can use VIO in 2 modes.

(a) SO ↔ VIO

In this case certificate challenges showed up in the SO logs and were resolved with the following steps.

a.1 Picked up the VIO certificate from the load balancer VM at path: /usr/local/share/ca-certificates

a.2 Copied the certificate to /usr/local/share/ca-certificates inside the MSO_TestLab container.

a.3 Ran update-ca-certificates as root inside the mso_testlab docker container.

(b) SO ↔ MultiCloud ↔ VIO

b.1 Need to update the identity url in cloud-config.json in the SO TestLab container to point to the MultiCloud endpoint.

b.2 MultiCloud needs to register the VIM with ESR.

20171128: SDNC VM HD fills up - controller container shuts down 24h after a failed VNF preload

See SDNC-204 and SDNC-156.

vFW status: 20171129: (Note CL videos from Marco are on the main demo page)

[12:50]

oom = SDC onboarding OK (master) - will do robot init tomorrow in 1.1

[12:51]

heat = reworked the vnf preload with the right network id - but the SDNC VM filled to 100% HD after 3 days - bringing down the controller (will raise a jira) - need a log rotation strategy - refreshing the VM or the stack for tomorrow at 12

| onap-sdnc | ubuntu-14-04-cloud-amd64 | oam_onap_Ze9k | m1.large | onap_key_Ze9k | Active | nova | None | Running | 1 day, 5 hours |
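Until there is a log rotation strategy, a periodic disk check on the SDNC VM could give some warning before the controller goes down. The following is a minimal sketch only; the 90% threshold and the function names are assumptions, not something agreed in the JIRAs above.

```python
import shutil


def disk_percent_used(path="/"):
    """Return the percentage of the filesystem holding `path` that is used."""
    usage = shutil.disk_usage(path)
    if usage.total == 0:
        return 0.0
    return 100.0 * usage.used / usage.total


def needs_attention(percent_used, threshold=90.0):
    """True when usage is at or above the (assumed) warning threshold."""
    return percent_used >= threshold


percent = disk_percent_used("/")
print(f"/ is {percent:.1f}% used, needs attention: {needs_attention(percent)}")
```

This could be run from cron on the VM; it only reports and does not clean up any logs.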

Test Deployments

20171125:2100: HEAT

Ran out of ram on

| Ran out of ram for onap-multi-service | No valid host was found. There are not enough hosts available. compute-08: (RamFilter) Insufficient usable RAM: req:16384, avail:3297.0 MB, compute-09: (RamFilter) Insufficient usable RAM: req:16384, avail:13537.0 MB, compute-06: (RamFilter) Insufficient robot vm docker containers down root@onap-robot:/opt# ./robot_vm_init.sh Already up-to-date. Already up-to-date. Login Succeeded 1.1-STAGING-latest: Pulling from openecomp/testsuite Digest: sha256:5f48706ba91a4bb805bff39e67bb52b26011d59f690e53dfa1d803745939c76a Status: Image is up to date for nexus3.onap.org:10001/openecomp/testsuite:1.1-STAGING-latest Error response from daemon: No such container: openecompete_container fix: wait for them CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES 77c6eba8c641 nexus3.onap.org:10001/onap/sniroemulator:latest "/docker-entrypoin..." 30 seconds ago Up 29 seconds 8080-8081/tcp, 0.0.0.0:8080->9999/tcp sniroemulator 903964fc8fe1 nexus3.onap.org:10001/openecomp/testsuite:1.1-STAGING-latest "lighttpd -D -f /e..." 30 seconds ago Up 29 seconds 0.0.0.0:88->88/tcp openecompete_container |

| Wait for deployment | AAI-513 |
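The "No valid host was found" message for onap-multi-service above lists requested and available RAM per compute host, which is easier to read as a shortfall per host. The following is a sketch only, assuming the RamFilter text keeps the format pasted above; the function name is made up and the sample is cut down to the two complete entries from that message.

```python
import re

RAM_RE = re.compile(
    r"(\S+): \(RamFilter\) Insufficient usable RAM: req:(\d+), avail:([\d.]+) MB"
)


def ram_shortfalls(message):
    """Return (host, requested_mb, available_mb, shortfall_mb) per host."""
    rows = []
    for host, req, avail in RAM_RE.findall(message):
        req_mb, avail_mb = int(req), float(avail)
        rows.append((host, req_mb, avail_mb, req_mb - avail_mb))
    return rows


# The two complete entries from the error message above.
message = (
    "No valid host was found. There are not enough hosts available. "
    "compute-08: (RamFilter) Insufficient usable RAM: req:16384, avail:3297.0 MB, "
    "compute-09: (RamFilter) Insufficient usable RAM: req:16384, avail:13537.0 MB,"
)

for host, req_mb, avail_mb, short_mb in ram_shortfalls(message):
    print(host, req_mb, avail_mb, short_mb)
```

A host with a small shortfall (compute-09 here) is the likeliest place to free up room for the VM.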