20181220 - update for casablanca -TODO: review the vFW automation in https://github.com/garyiwu/onap-lab-ci - thanks Yang Xu

This long-winded page name will revert to "Running the ONAP vFirewall Demo...." when we are finished before 9 Dec - and moved out of the wiki root

Please join and post "validated" actions/config/results - but do not move or edit this page until we get a complete vFW run, ideally before the 4 Dec KubeCon conference and at worst before the 11 Dec ONAP conference - thank you

Under construction - this page is a consolidation of all details in getting the vFirewall running over the next 2 weeks in prep of anyone that would like to demo it for the F2F in Dec.

ADD content ONLY when verified - with evidence (screen-cap, JSON output etc.). DO paste any questions and unverified config/actions in the comment section at the end - for the team to verify.

HEAT Daily meeting at 1200 EDT noon Nov 27 to 8 Dec 2017 - https://zoom.us/j/7939937123 see schedule at https://lists.onap.org/pipermail/onap-discuss/2017-November/006483.html

Statement of Work

Ideally this page serves as the draft that will go into ReadTheDocs.io, after which this page is deleted and replaced by a reference to it.

The details needed to get a running vFirewall up "Step by Step" are currently scattered across 3 or more distinct pages, plus email threads, presentations, phone calls and meetings.

We would like to get to the point where we were before Aug 2017 where an individual with an Openstack environment (OOM as well now) - could follow each instruction point (action - and expected/documented result/output) and end up with our current minimal sanity usecase - the vFirewall

If you have any details on configuration of getting up the vFirewall post them to the comments section and it will be tested and incorporated

Ideally any action added to this page itself - is fully tested with resulting output (text/screencap) - pasted as a reference.

JIRAs: OOM-459 for OOM and INT-106 for HEAT

Output

1- This set of instructions below - to go from an empty OOM host or OpenStack lab - all the way to closed loop running.

2 - A set of videos - the vFirewall from an already deployed OOM and HEAT deployment - see the reference videos from Running the ONAP Demos#ONAPDeploymentVideos, see INT-333

3- Secondary videos on bringing up OOM and HEAT deployments

Running the vFirewall Demo

sync with Running the ONAP Demos#QuickstartInstructions

TODO: check for JIRA on appc demo.robot working : 20171128 (worked in 1.0.0)

20180307 - SDC 503 - see pod reordering in amsterdam https://lists.onap.org/pipermail/onap-discuss/2018-March/008403.html - need to raise jira

Prerequisites

| Artifact | Location | Notes |
|---|---|---|
| private key (ssh-add) | obrienbiometrics:onap_public michaelobrien$ ssh-keygen SHA256:YzLggI8nGXna0Ssx0DMpLvZKSPTGZJ1mXwj2XZ+c8Gg michaelobrien@obrienbiometrics.local paste onap_public.pub into the pub_key: sections of all the onap_openstack and vFW env files | |
| openstack yaml and env | https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/ONAP/1.1.0-SNAPSHOT/ demo/heat/onap/onap-openstack.* | |
| vFirewall yaml and env unverified | We will use the split vFWCL (vFW closed loop) in demo/heat/vFWCL demo/heat/vFWCL/vFWPKG/base_vpkg.env demo/heat/vFWCL/vFWSNK/base_vfw.env image_name: ubuntu-14-04-cloud-amd64 flavor_name: m1.medium public_net_id: 971040b2-7059-49dc-b220-4fab50cb2ad4 cloud_env: openstack onap_private_net_id: oam_onap_6Gve onap_private_subnet_id: oam_onap_6Gve Note: the network must be the one that shows on the instances page - or the only non-shared one in the network list not the older https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/vFW/1.1.0-SNAPSHOT/ or the deprecated https://nexus.onap.org/content/sites/raw/org.openecomp.demo/heat/vFW/1.1.0-SNAPSHOT/ | |
| demo/heat/vFWCL/vFWPKG/base_vpkg.env | | |
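Before uploading the vFWCL env files it can help to check that the parameters listed in the table above are actually filled in. The following is a minimal sketch, not part of the demo repo: the function names are hypothetical, and it assumes the values appear as flat `key: value` lines as quoted above.

```python
# Hypothetical helper: check that the env parameters named in the
# prerequisites table are present and non-empty.
REQUIRED_KEYS = [
    "image_name", "flavor_name", "public_net_id",
    "cloud_env", "onap_private_net_id", "onap_private_subnet_id",
]


def parse_flat_env(text):
    """Parse 'key: value' lines; skip blanks, comments and lines without a colon."""
    params = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, _, value = line.partition(":")  # split on the first colon only
        params[key.strip()] = value.strip()
    return params


def missing_keys(params, required=REQUIRED_KEYS):
    """Return the required keys that are absent or have an empty value."""
    return [key for key in required if not params.get(key)]


# Values copied from the table above.
SAMPLE = """
image_name: ubuntu-14-04-cloud-amd64
flavor_name: m1.medium
public_net_id: 971040b2-7059-49dc-b220-4fab50cb2ad4
cloud_env: openstack
onap_private_net_id: oam_onap_6Gve
onap_private_subnet_id: oam_onap_6Gve
"""

if __name__ == "__main__":
    print("missing:", missing_keys(parse_flat_env(SAMPLE)))
```

The check only confirms that a value exists; whether the network name matches the one shown on the instances page still has to be verified by hand.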

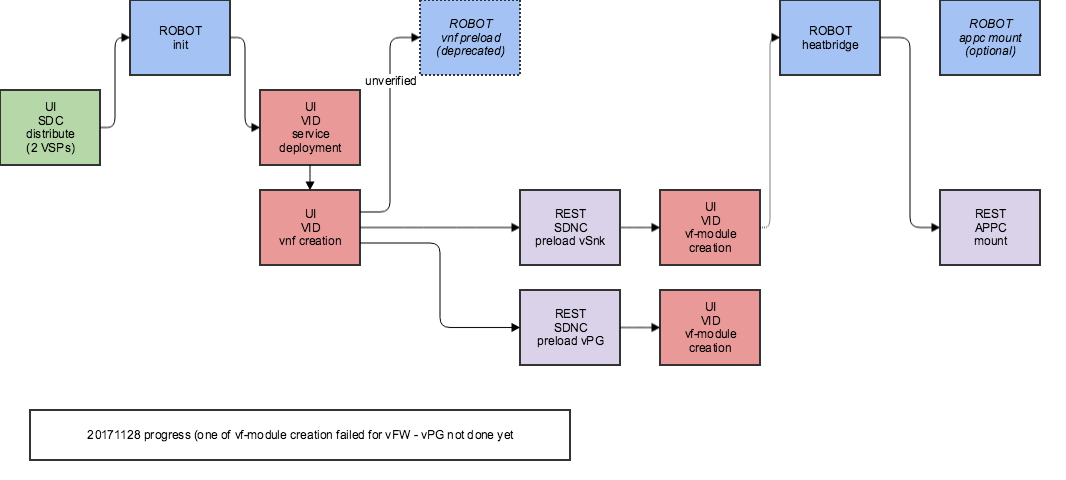

vFirewall Tasks

Ideally we have an automated one-click vFW deployment - in the works -

sync with Running the ONAP Demos#QuickstartInstructions

| T# | Task | Action Rest URL+JSON payload | Result JSON / Text / Screencap | Artifacts Link or attach file | Env OOM HEAT or both | Verify Read | Last run | Notes |

|---|---|---|---|---|---|---|---|---|

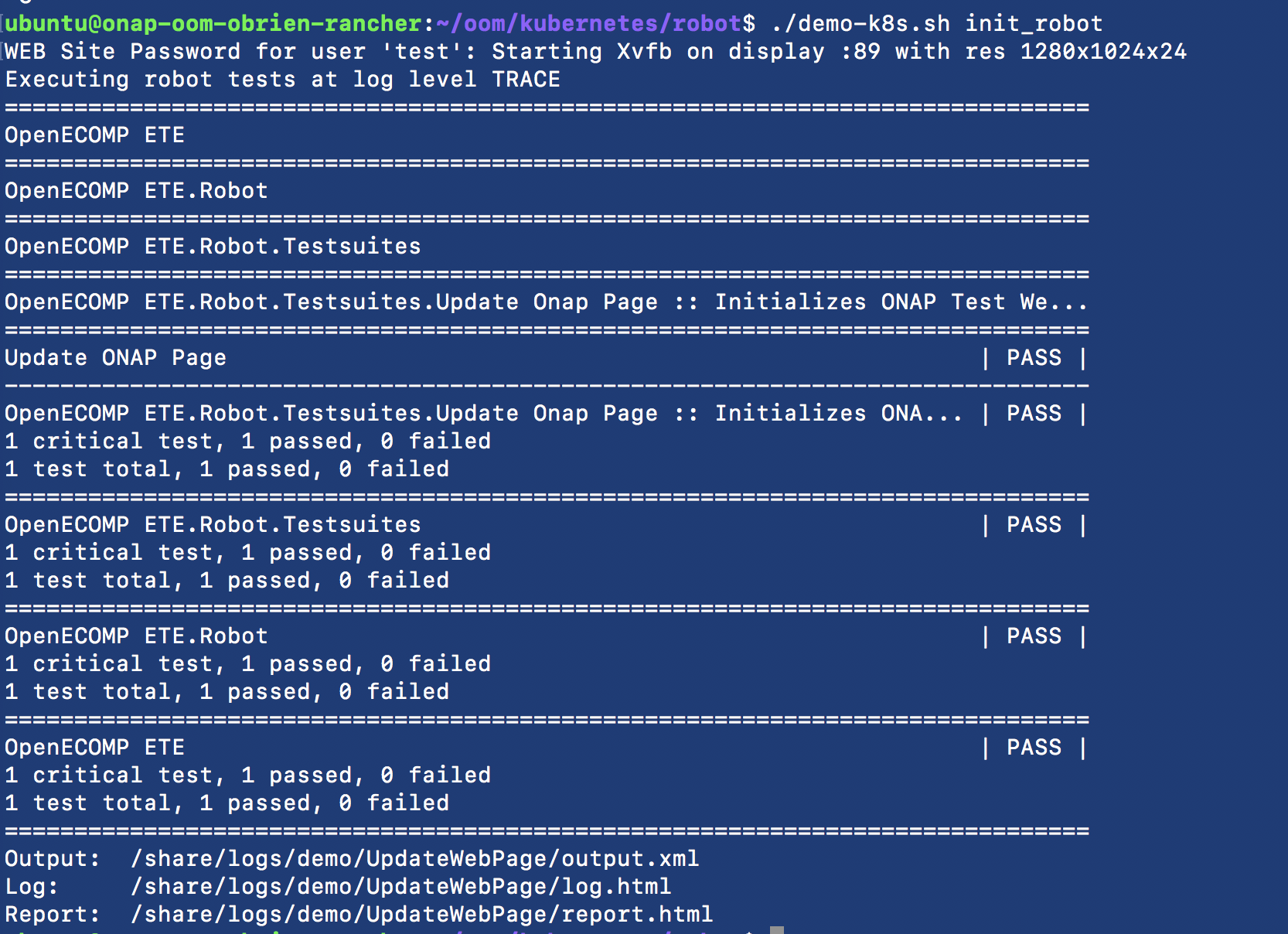

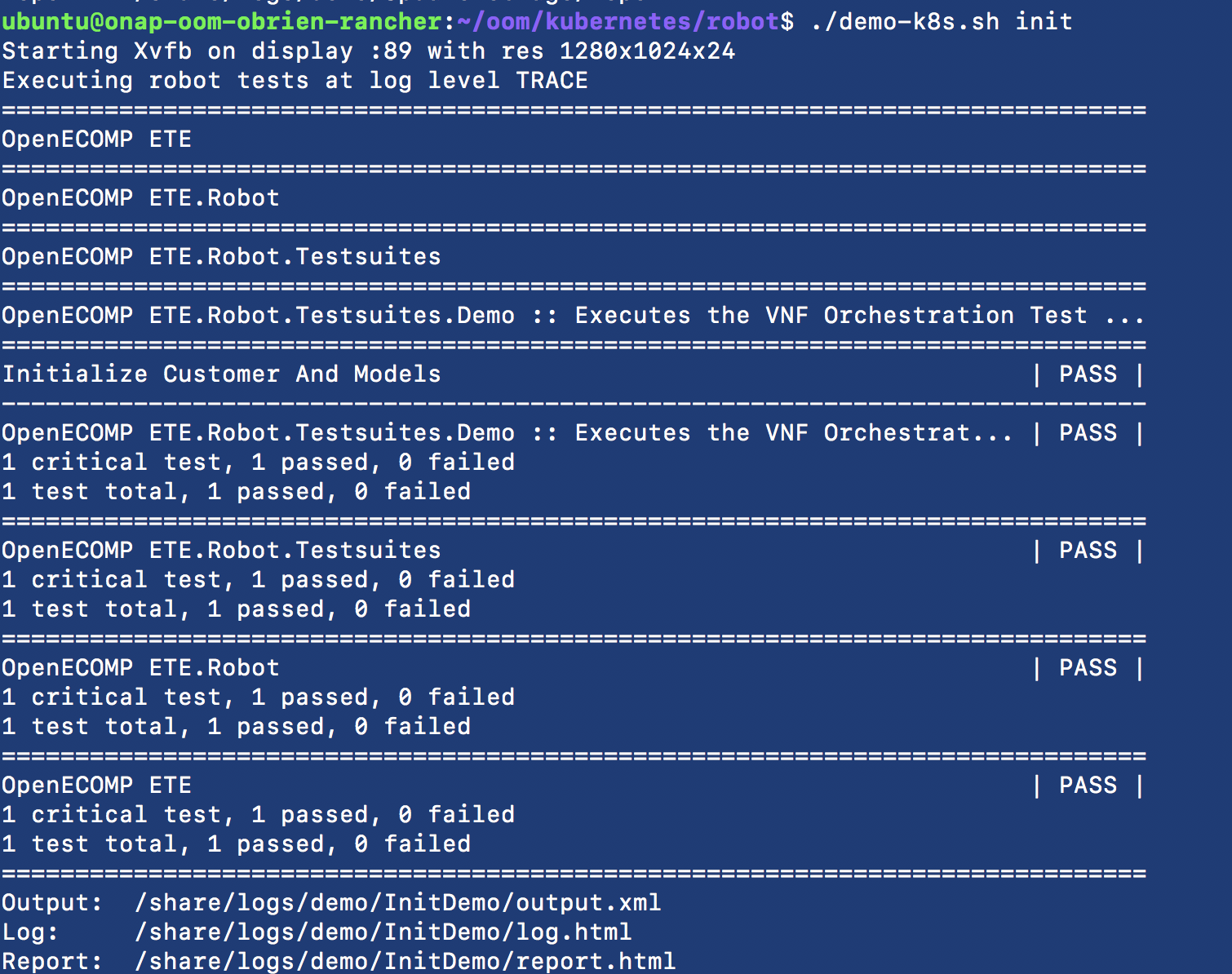

./demo-k8s.sh onap init_robot ./demo-k8s.sh init | start with a full DCAE deploy (amsterdam) via OOM ubuntu@a-onap-devopscd:~/oom/kubernetes/robot$ ./demo-k8s.sh onap init_robot Number of parameters: 2 KEY: init_robot WEB Site Password for user 'test': ++ ETEHOME=/var/opt/OpenECOMP_ETE ++ VARIABLEFILES='-V /share/config/vm_properties.py -V /share/config/integration_robot_properties.py -V /share/config/integration_preload_parameters.py' +++ kubectl --namespace onap get pods +++ sed 's/ .*//' +++ grep robot No resources found. ++ POD= ++ kubectl --namespace onap exec -- /var/opt/OpenECOMP_ETE/runTags.sh -V /share/config/vm_properties.py -V /share/config/integration_robot_properties.py -V /share/config/integration_preload_parameters.py -v WEB_PASSWORD:test -d /share/logs/demo/UpdateWebPage -i UpdateWebPage --display 89 | |||||||

| optional | Before robot init (init_customer and distribute | |||||||

| optional | cloud region PUT to AAI | from postman:code PUT /aai/v11/cloud-infrastructure/cloud-regions/cloud-region/Openstack/RegionOne HTTP/1.1 { | 201 created | OOM | GET /aai/v11/cloud-infrastructure/cloud-regions/cloud-region/Openstack/RegionOne HTTP/1.1 200 OK { | 20171126 | ||

1 optional | TBD - cloud region PUT to AAI | Verify: cloud-region is not set by robot ./demo.sh init (only the customer is) - we need to run the REST call for cloud region ourselves. Watch for intermittent issues bringing up aai1 containers in AAI-513 | HEAT | TBD 201711xx | ||||

SDC Distribution (manual) | HEAT http://portal.api.simpledemo.onap.org:8989/ONAPPORTAL/login.htm OOM: http://<host>:30211 License Model as cs0008 on SDC onboard | new license model | license key groups (network wide / Universal) | Entitlement pools (network wide / absolute 100 / CPU / 000001 / Other tbd / Month) | Feature Groups (123456) manuf ref # | Available Entitlement Pools (push right) | License Agreements | Add license agreement (unlimited) - push right / save / check-in / submit | Onboard breadcrumb VF Onboard | new Vendor (not Virtual) Software Product (FWL App L4+) - select network package not manual checkbox | select LA (Lversion 1, LA, then FG) save | upload zip | proceed to validation | checkin | submit Onboard home | drop vendor software prod repo | select, import vsp | create | icon | submit for testing Distributing as jm0007 | start testing | accept as cs0008 | sdc home | see firewall | add service | cat=l4, 123456 create | icon | composition, expand left app L4 - drag | submit for testing as jm0007 | start testing | accept as gv0001 | approve as op0001 | distribute | |||||||

TBD Customer creation | Note: robot ./demo.sh oom: oom/kubernetes/robot/demo-k8s.sh | |||||||

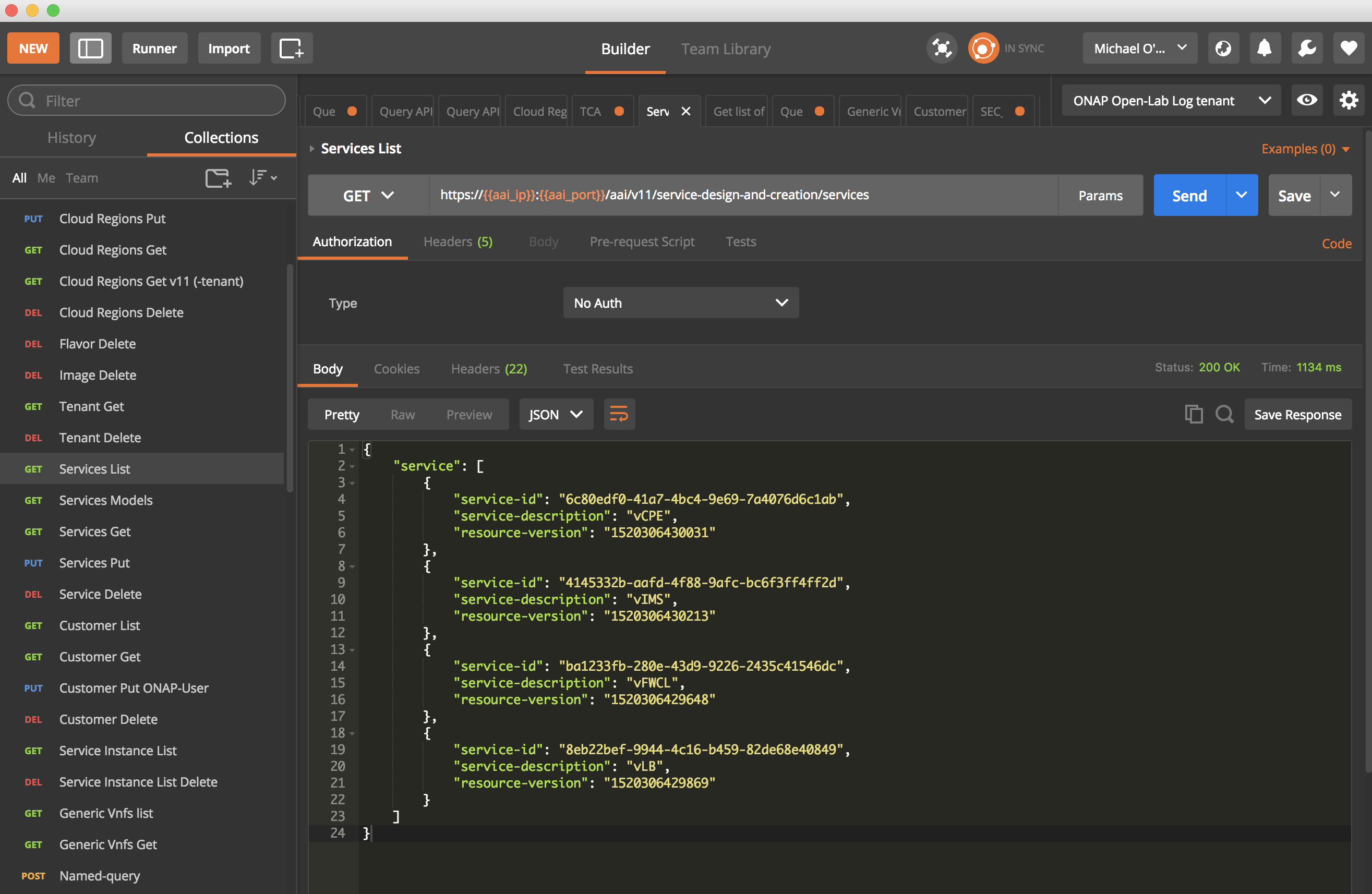

| SDC Model Distribution | If you are at this step - switch over to Alexis de Talhouët's page on vFWCL instantiation, testing, and debuging | |||||||

| TBD VID Service creation | ||||||||

| TBD VID Service Instance deployment | ||||||||

| TBD VID Create VNF | ||||||||

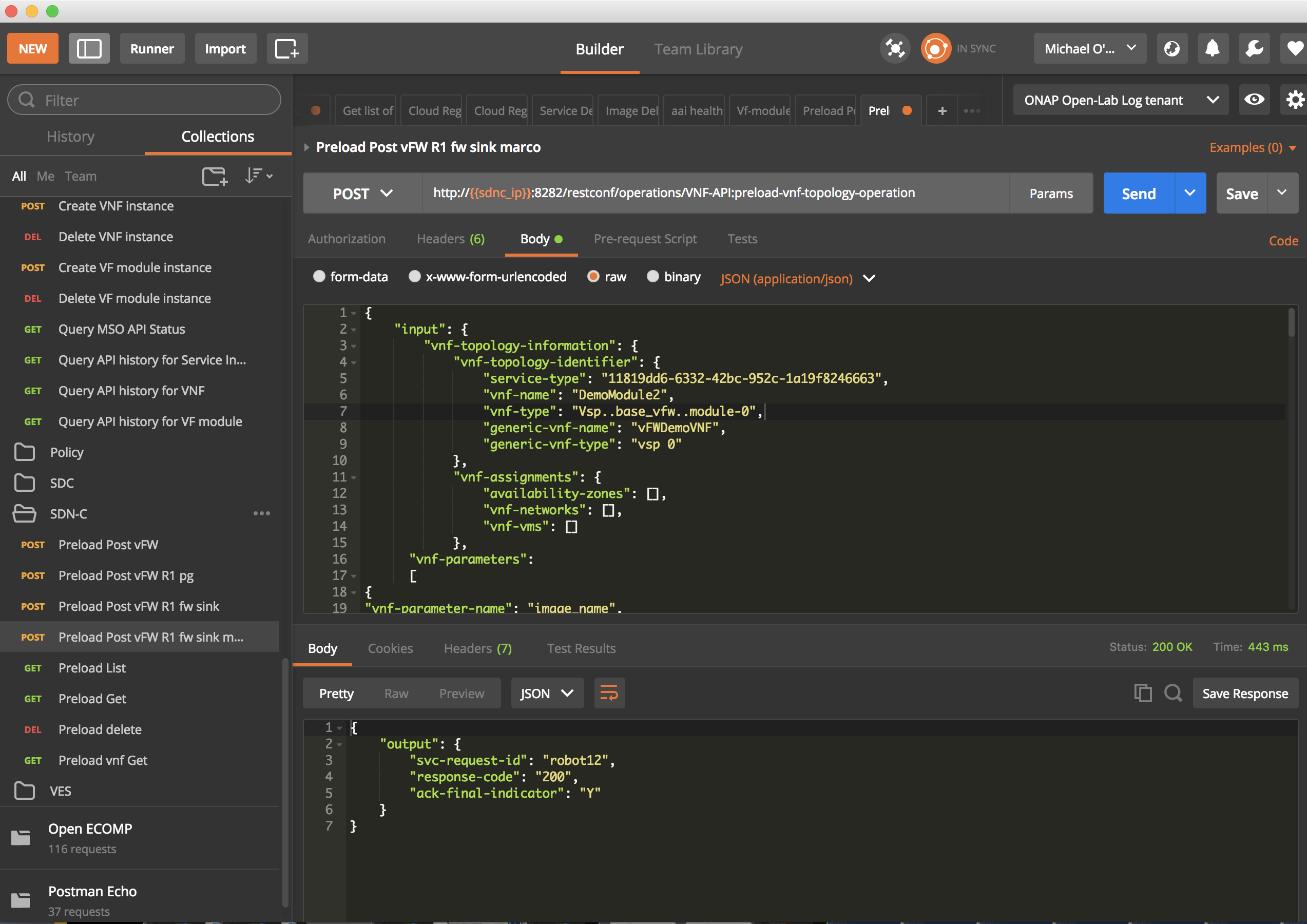

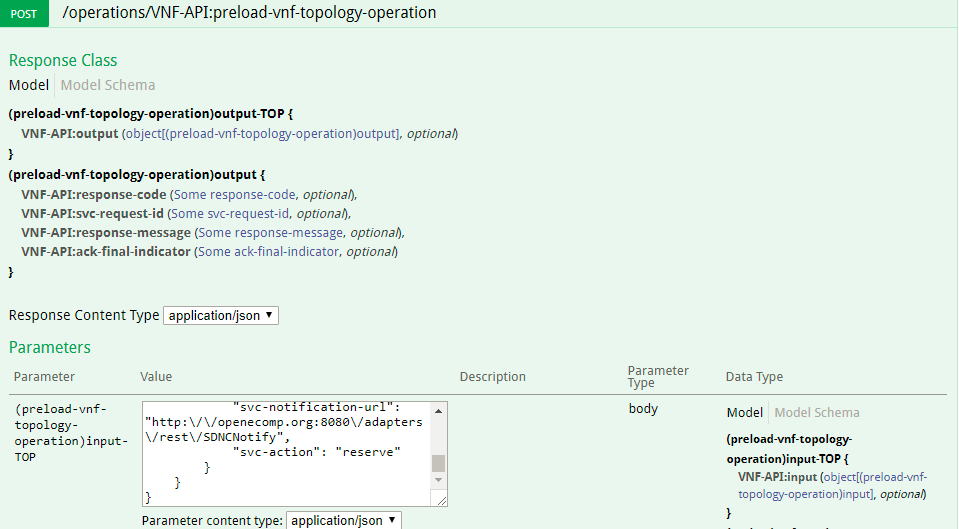

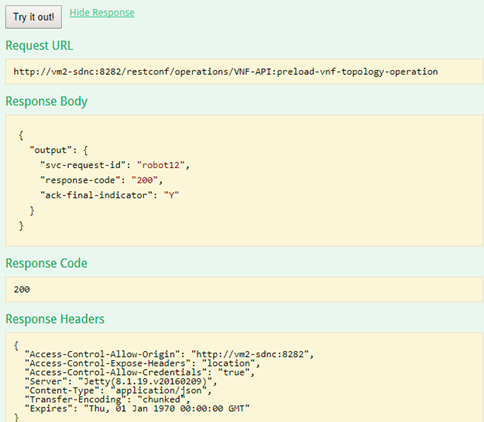

VNF preload OK (REST) | http://{{sdnc_ip}}:8282/restconf/operations/VNF-API:preload-vnf-topology-operation note the service-type change - see gui top right POST /restconf/operations/VNF-API:preload-vnf-topology-operation HTTP/1.1

Host: 10.12.5.92:8282

Accept: application/json

Content-Type: application/json

X-TransactionId: 0a3f6713-ba96-4971-a6f8-c2da85a3176e

X-FromAppId: API client

Authorization: Basic YWRtaW46S3A4Yko0U1hzek0wV1hsaGFrM2VIbGNzZTJnQXc4NHZhb0dHbUp2VXkyVQ==

Cache-Control: no-cache

Postman-Token: e1c8d1ec-4cd9-5744-3ac9-f83f0d3c71d4

{

"input": {

"vnf-topology-information": {

"vnf-topology-identifier": {

"service-type": "11819dd6-6332-42bc-952c-1a19f8246663",

"vnf-name": "DemoModule2",

"vnf-type": "Vsp..base_vfw..module-0",

"generic-vnf-name": "vFWDemoVNF",

"generic-vnf-type": "vsp 0"

},

"vnf-assignments": {

"availability-zones": [],

"vnf-networks": [],

"vnf-vms": []

},

"vnf-parameters":

[

{

"vnf-parameter-name": "image_name",

"vnf-parameter-value": "ubuntu-14-04-cloud-amd64"

},

{

"vnf-parameter-name": "flavor_name",

"vnf-parameter-value": "m1.medium"

},

{

"vnf-parameter-name": "public_net_id",

"vnf-parameter-value": "971040b2-7059-49dc-b220-4fab50cb2ad4"

},

{

"vnf-parameter-name": "unprotected_private_net_id",

"vnf-parameter-value": "zdfw1fwl01_unprotected"

},

{

"vnf-parameter-name": "unprotected_private_subnet_id",

"vnf-parameter-value": "zdfw1fwl01_unprotected_sub"

},

{

"vnf-parameter-name": "protected_private_net_id",

"vnf-parameter-value": "zdfw1fwl01_protected"

},

{

"vnf-parameter-name": "protected_private_subnet_id",

"vnf-parameter-value": "zdfw1fwl01_protected_sub"

},

{

"vnf-parameter-name": "onap_private_net_id",

"vnf-parameter-value": "oam_onap_Ze9k"

},

{

"vnf-parameter-name": "onap_private_subnet_id",

"vnf-parameter-value": "oam_onap_Ze9k"

},

{

"vnf-parameter-name": "unprotected_private_net_cidr",

"vnf-parameter-value": "192.168.10.0/24"

},

{

"vnf-parameter-name": "protected_private_net_cidr",

"vnf-parameter-value": "192.168.20.0/24"

},

{

"vnf-parameter-name": "onap_private_net_cidr",

"vnf-parameter-value": "10.0.0.0/16"

},

{

"vnf-parameter-name": "vfw_private_ip_0",

"vnf-parameter-value": "192.168.10.100"

},

{

"vnf-parameter-name": "vfw_private_ip_1",

"vnf-parameter-value": "192.168.20.100"

},

{

"vnf-parameter-name": "vfw_private_ip_2",

"vnf-parameter-value": "10.0.100.5"

},

{

"vnf-parameter-name": "vpg_private_ip_0",

"vnf-parameter-value": "192.168.10.200"

},

{

"vnf-parameter-name": "vsn_private_ip_0",

"vnf-parameter-value": "192.168.20.250"

},

{

"vnf-parameter-name": "vsn_private_ip_1",

"vnf-parameter-value": "10.0.100.4"

},

{

"vnf-parameter-name": "vfw_name_0",

"vnf-parameter-value": "vFWDemoVNF"

},

{

"vnf-parameter-name": "vsn_name_0",

"vnf-parameter-value": "zdfw1fwl01snk01"

},

{

"vnf-parameter-name": "vnf_id",

"vnf-parameter-value": "vFirewall_vSink_demo_app"

},

{

"vnf-parameter-name": "vf_module_id",

"vnf-parameter-value": "vFirewall_vSink"

},

{

"vnf-parameter-name": "dcae_collector_ip",

"vnf-parameter-value": "127.0.0.1"

},

{

"vnf-parameter-name": "dcae_collector_port",

"vnf-parameter-value": "8080"

},

{

"vnf-parameter-name": "repo_url_blob",

"vnf-parameter-value": "https://nexus.onap.org/content/sites/raw"

},

{

"vnf-parameter-name": "repo_url_artifacts",

"vnf-parameter-value": "https://nexus.onap.org/content/groups/staging"

},

{

"vnf-parameter-name": "demo_artifacts_version",

"vnf-parameter-value": "1.1.0"

},

{

"vnf-parameter-name": "install_script_version",

"vnf-parameter-value": "1.1.0-SNAPSHOT"

},

{

"vnf-parameter-name": "key_name",

"vnf-parameter-value": "onapkey"

},

{

"vnf-parameter-name": "pub_key",

"vnf-parameter-value": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDlc+Lkkd6qK4yrhwgyEXmDuseZihbdYk3Dd90p4/TTDCenGVdfdPU9r4KuCrn8nhjjhVvOx8s1hSi03NI9qHQasLcNCVavzse04kq/RlrkmEvSnqI0/HYNOMYASBQAxgF/pocbANnERcfzXrWiymK5Aqm3U8P25EkeKp9tQmSiijki8ywA5iXuBDWiPQxE5gtxotGMUH5EhElHXlQ2lWRc3IlHghfoh8sI3auz7Bimma3vEUd64e6uuZR5oxCdv3ybZBkYnOcgiGaeP7sWDpjggpI40bfoQ/PbZh4u9maLPmY8vm1HKebZgfwkgEXSi0B4QgUHlRcVWV7lNo+418Tt michaelobrien@obrienbiometrics"

},

{

"vnf-parameter-name": "cloud_env",

"vnf-parameter-value": "openstack"

}

]

},

"request-information": {

"request-id": "robot12",

"order-version": "1",

"notification-url": "openecomp.org",

"order-number": "1",

"request-action": "PreloadVNFRequest"

},

"sdnc-request-header": {

"svc-request-id": "robot12",

"svc-notification-url": "http:\/\/openecomp.org:8080\/adapters\/rest\/SDNCNotify",

"svc-action": "reserve"

}

}

}

Result 200 {

"output": {

"svc-request-id": "robot12",

"response-code": "200",

"ack-final-indicator": "Y"

}

}

| |||||||

VNF preload (alternative, no postman) | (hope I got it right) references to video are like "X-mm:ss some text" where X is 0..5 and the video is 20171128_1200_X_of_5_daily_session.mp4 |

| ||||||

SDNC VNF Preload (Integration-Jenkins lab) | (from Marco 20171128) | |||||||

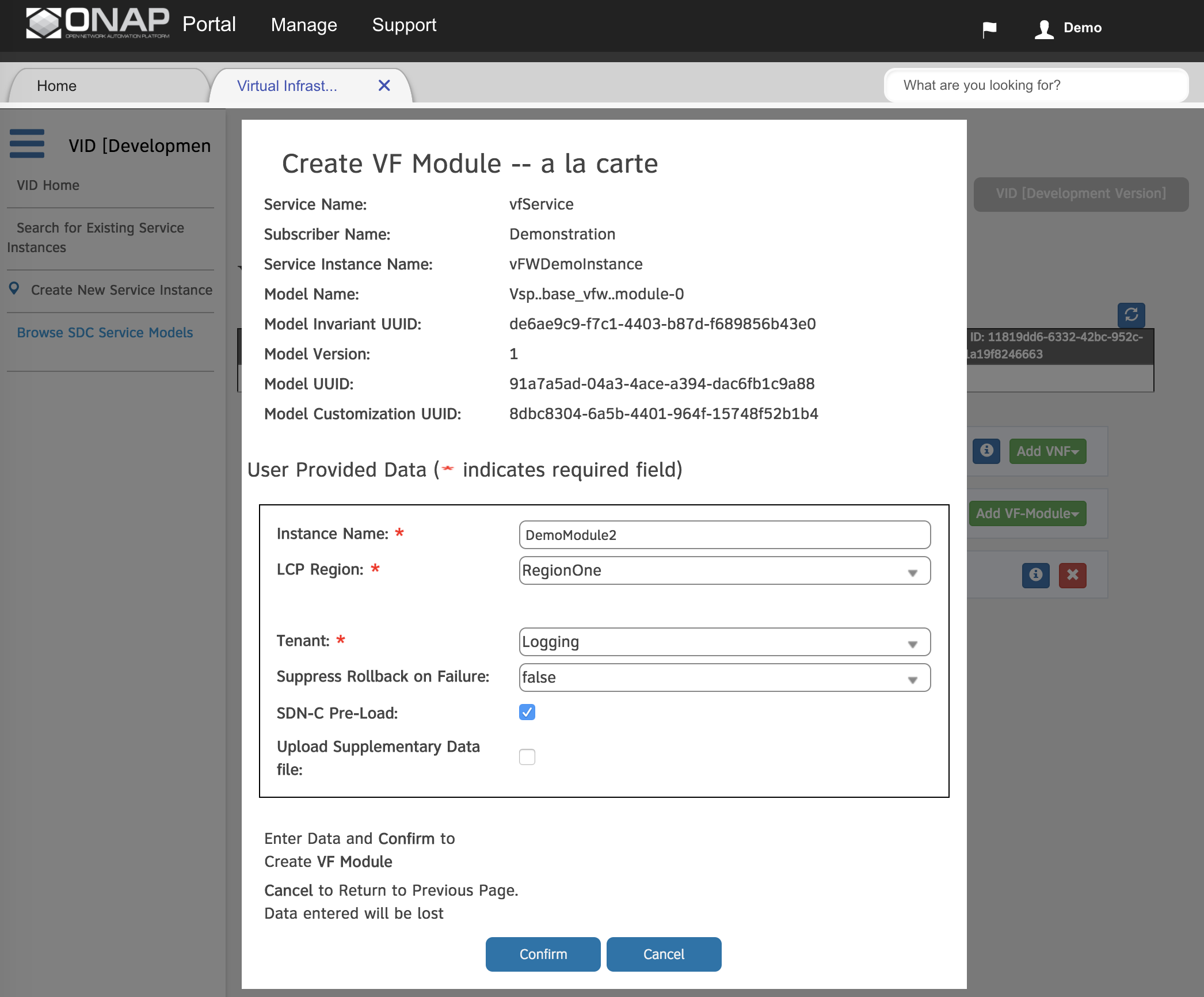

TBD VID Create VF-Module (vSNK) | Need to delete the previous failure first - raise a JIRA on the error; for now, add a postfix to the name and recreate | |||||||

| TBD VID Create VF-Module (vPG) | ||||||||

| TBD Robot Heatbridge | ||||||||

| TBD APPC mountpoint (Robot or REST) | ||||||||

APPC mountpoint for vFW closed-loop (Integration-Jenkins lab) |
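The VNF preload payload in the table above repeats the same name/value structure for every parameter, and the expected result is a 200 with `ack-final-indicator` set to `Y`. The following is a minimal sketch of generating the `vnf-parameters` list from a dictionary and checking the response body. The function names are hypothetical; the field names and sample values are taken from the payload and result shown above, and sending the request to SDNC is left out.

```python
import json


def build_vnf_parameters(values):
    """Turn {name: value} into the list layout used under "vnf-parameters"."""
    return [
        {"vnf-parameter-name": name, "vnf-parameter-value": value}
        for name, value in values.items()
    ]


def preload_succeeded(response_text):
    """True when the preload response reports code 200 and a final ack."""
    output = json.loads(response_text).get("output", {})
    return (
        output.get("response-code") == "200"
        and output.get("ack-final-indicator") == "Y"
    )


if __name__ == "__main__":
    # A few of the parameters from the payload above.
    params = build_vnf_parameters({
        "image_name": "ubuntu-14-04-cloud-amd64",
        "flavor_name": "m1.medium",
        "onap_private_net_id": "oam_onap_Ze9k",
    })
    print(json.dumps(params, indent=2))

    # The result body recorded above.
    result = (
        '{"output": {"svc-request-id": "robot12", '
        '"response-code": "200", "ack-final-indicator": "Y"}}'
    )
    print("preload ok:", preload_succeeded(result))
```

Keeping the parameters in one dictionary makes it easier to spot values that must change per lab, such as the onap_private_net_id suffix.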

Verifying the vFirewall

Original/Ongoing Doc References

running vFW Demo on ONAP Amsterdam Release

Clearwater vIMS Onboarding and Instantiation

Vetted vFirewall Demo - Full draft how-to for F2F and ReadTheDocs

Integration Use Case Test Cases - could not find vFW content here

ONAP master branch Stabilization

OOM-1

INT-106

INT-284

List of ONAP Implementations under Test by Environment

Please add yourself to the list so we can target EPIC work based on environment affinity

| Environment | Branch | Deployer | Contacts | vFW status | Notes |

|---|---|---|---|---|---|

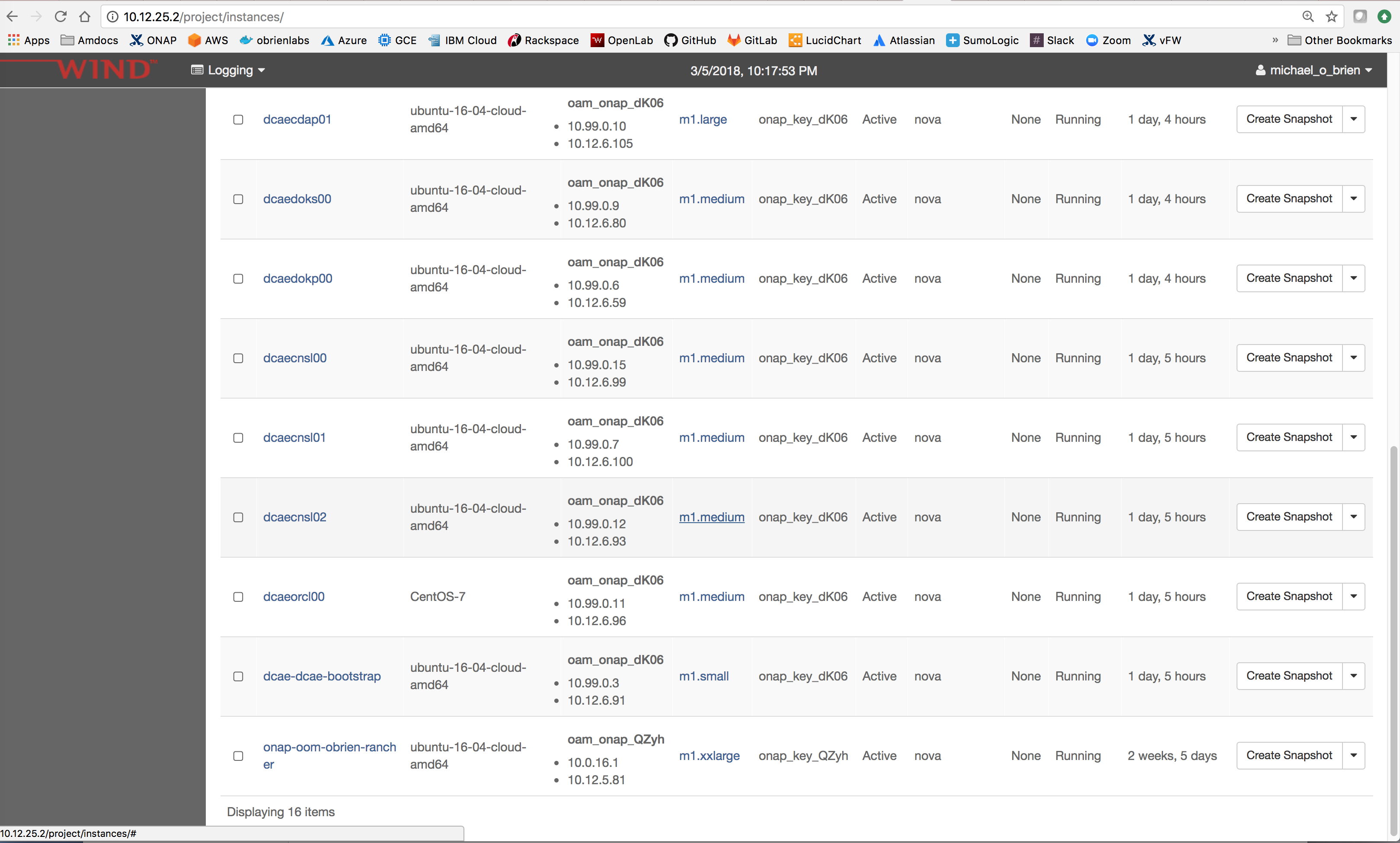

| Intel Openlab | master | HEAT | none | cloud: http://10.12.25.2/auth/login/?next=/project/instances/ servers Starting up (20171123) - not ready yet | |

| Intel Openlab | master | OOM Kubernetes | none | cloud: http://10.12.25.2/auth/login/?next=/project/instances/ server: 10.12.25.117 key: openlab_oom_key (pass by mail) (non-DCAE ONAP components only) partial 16g only until quota increased or we cluster 4 OOM-461 | |

| Intel Openlab | release-1.1.0 | OOM Kubernetes | none | cloud: http://10.12.25.2/auth/login/?next=/project/instances/ server: 10.12.25.119 key: openlab_oom_key (pass by mail) watch INT-344 | |

| Rackspace | master | OOM Kubernetes | none | (non-DCAE ONAP components only) DCAEGEN2 not tested yet for R1 | |

| Amazon AWS EC2 | master | OOM Kubernetes | none | (non-DCAE ONAP components only) - spot node terminated | |

| Amazon AWS ECS | OOM Kubernetes | pending test | n/a | (non-DCAE ONAP components only) - node terminated | |

| Google GCE | master | OOM Kubernetes | (non-DCAE ONAP components only) - node closed | ||

| Google GCE CaaS | OOM Kubernetes | pending test | n/a | (non-DCAE ONAP components only) | |

| Rackspace | HEAT | not supported yet | n/a | ||

| Alibaba VM | OOM Kubernetes | none | not tested yet |

Continuous Deployment References

| Tech | Servers | Details |

|---|---|---|

| HEAT | ||

| Kubernetes | Jobs (AWS) Analytics (AWS) CD servers (AWS) dev.onap.info | OOM R2 Master (Beijing) http://jenkins.onap.info/job/oom-cd-release-110-branch/ OOM R1 (Amsterdam) |

Formal Recordings

put all daily and ongoing vFW formal run videos here - in the leadup to the 2 conferences.

| Recording details | Recording embedded (currently limited to 30 min for the 100mb limit) or link | |

|---|---|---|

| ONAP installation of OOM from clean VM to Healthcheck | ONAP R1 OOM from clean AWS VM to deployed ONAP 3 videos - reuse for OOM-395 20171208 : GUI only for SDC onboarding in OOM 20171208 release-1.1.0 - no devops screens in this one so it can be used for demos | |

| OOM vFirewall SDC distribution to VF-Module creation | See Alexis' vFWCL instantiation, testing, and debuging | |

| ONAP installation of HEAT from empty OPENSTACK to Healthcheck | Review the 20171128 videos from Marco via https://lists.onap.org/pipermail/onap-discuss/2017-November/006572.html on https://wiki.onap.org/display/DW/Running+the+ONAP+Demos INT-333 | |

| HEAT vFirewall SDC distribution to VF-Module creation | see Alexis' vFWCL instantiation, testing, and debuging |

Daily Working Recordings

| Date | Video | Notes / TODO |

|---|---|---|

| 20171127 | HEAT: get back to the vnf preload - continue to the 3 vFW VMs coming up todo: use the split template (abandon the single VNF) todo: stop using robot for all except customer creation - essentially everything is REST and VID todo: fix DNS of the onap env file OOM: go over master status, get a 1.1.0 branch up separately CHAT: From Brian to Everyone: (12:06) | |

| 20171128 | HEAT: error on vf-module creation (MSO Heat issue) 12:23:15 From Eric Debeau : The API for licence model creation are not documented in R1 ================================================================= Time markers in the videos to the left. The "Part"-number represents part 0..4 in the file name Part Marker comment | |

| 20171129 OOM | chat minimal OOM/HEAT deployment for vFW 11:04:28 From Michael O'Brien : ./createAll.bash -n onap -a mso | |

| 20171129 HEAT | chat | |

20171130 OOM | chat 11:06:25 From Alexis de Talhouët : /dockerdata-nfs/onap/robot/eteshare/config | |

| 20171201 OOM | Agenda Pull master and release-1.1.0 patches (merged) fixed yesterday by Alexis de T. https://gerrit.onap.org/r/#/q/status:merged+project:+oom Servers amsterdam.onap.info = 1.1.0 oom cd.onap.info = master onap-parameters.yaml points to my personal Rackspace in case we get to VF-Module creation. The 2 vFWCL zips require a network predefined on Rackspace. Results: robot init passed, but later Alexis tested the extra SDNC call from Marco's video and got all the way to vf-module creation for the first vFW template and saw the 2 VMs up in openstack - a very big thank you to Alexis for all the work in the last 4 days, the 15+ commits, the new config docker image .... retrofitting details over the weekend. Also our friends at VMware under Ranki are running OK under OOM release-1.1.0 in prep of their demo of ONAP Amsterdam R1 OOM at KubeCon on Tuesday morning - one week before our ONAP F2F in Santa Clara on the 11th. |

Generated JIRAs

OOM-461

AAI-513

INT-346

OOM-475

SDNC-208

VID-96

SDC-716

OOM-478

OOM-482

OOM-483

OOM-484

Fixes to Pull and Test

https://gerrit.onap.org/r/#/c/25277/

https://gerrit.onap.org/r/#/c/25257/

https://gerrit.onap.org/r/#/c/25263/

https://gerrit.onap.org/r/#/c/25279/1

https://gerrit.onap.org/r/#/c/25283/

https://gerrit.onap.org/r/#/c/25289/1

Access and Deployment Configuration

OOM Deployment

Follow instructions at ONAP on Kubernetes#AutomatedInstallation

Openlab VNC and CLI

The following is missing some sections and is a bit out of date (v2 deprecated in favor of v3) - Integration Testing Schedule, 10-09-2017

| Get an openlab account - Integration / Developer Lab Access | Stephen Gooch provides excellent/fast service - raise a JIRA like the following OPENLABS-75 |

| Install openVPN - Using Lab POD-ONAP-01 Environment. For OSX both Viscosity and TunnelBlick work fine | |

| Login to Openstack | |

| Install openstack command line tools | Tutorial: Configuring and Starting Up the Base ONAP Stack#InstallPythonvirtualenvTools(optional,butrecommended) |

| get your v3 rc file | |

| verify your openstack cli access (or just use the jumpbox) | obrienbiometrics:aws michaelobrien$ source logging-openrc.sh obrienbiometrics:aws michaelobrien$ openstack server list +--------------------------------------+---------+--------+-------------------------------+------------+ | ID | Name | Status | Networks | Image Name | +--------------------------------------+---------+--------+-------------------------------+------------+ | 1ed28213-62dd-4ef6-bdde-6307e0b42c8c | jenkins | ACTIVE | admin-private-mgmt=10.10.2.34 | | +--------------------------------------+---------+--------+-------------------------------+------------+ |

| get 15 elastic IPs | You may need to release unused IPs from other tenants - as we have 4 pools of 50 |

| fill in your stack env parameters | onap_openstack.env public_net_id: 971040b2-7059-49dc-b220-4fab50cb2ad4 public_net_name: external ubuntu_1404_image: ubuntu-14-04-cloud-amd64 ubuntu_1604_image: ubuntu-16-04-cloud-amd64 flavor_small: m1.small flavor_medium: m1.medium flavor_large: m1.large flavor_xlarge: m1.xlarge flavor_xxlarge: m1.xxlarge vm_base_name: onap key_name: onap_key pub_key: ssh-rsa AAAAobrienbiometrics nexus_repo: https://nexus.onap.org/content/sites/raw nexus_docker_repo: nexus3.onap.org:10001 nexus_username: docker nexus_password: docker dmaap_topic: AUTO artifacts_version: 1.1.0-SNAPSHOT openstack_tenant_id: a85a07a5f34d4yyyyyyy openstack_tenant_name: Logyyyyyyy openstack_username: michaelyyyyyy openstack_api_key: Wyyyyyyy openstack_auth_method: password openstack_region: RegionOne horizon_url: http://10.12.25.2:5000/v3 keystone_url: http://10.12.25.2:5000 dns_list: ["10.12.25.5", "8.8.8.8"] external_dns: 8.8.8.8 dns_forwarder: 10.12.25.5 oam_network_cidr: 10.0.0.0/16 follow http://onap.readthedocs.io/en/latest/submodules/dcaegen2.git/docs/sections/installation_heat.html dnsaas_config_enabled: true dnsaas_region: RegionOne dnsaas_keystone_url: http://10.12.25.5:5000/v3 dnsaas_tenant_name: Logging dnsaas_username: demo dnsaas_password: onapdemo dcae_keystone_url: http://10.12.25.5:5000/v2 dcae_centos_7_image: CentOS-7 dcae_domain: dcaeg2.onap.org dcae_public_key: PUT THE PUBLIC KEY OF A KEYPAIR HERE TO BE USED BETWEEN DCAE LAUNCHED VMS dcae_private_key: PUT THE SECRET KEY OF A KEYPAIR HERE TO BE USED BETWEEN DCAE LAUNCHED VMS |

| Run the HEAT stack | obrienbiometrics:openlab michaelobrien$ openstack stack create -t onap_openstack.yaml -e onap_openstack.env ONAP1125_6 | id | 9b026354-c071-4e31-8611-11fef2f408f5 | | stack_name | ONAP1125_6 | | description | Heat template to install ONAP components | | creation_time | 2017-11-26T02:16:57Z | | updated_time | 2017-11-26T02:16:57Z | | stack_status | CREATE_IN_PROGRESS | | stack_status_reason | Stack CREATE started | obrienbiometrics:openlab michaelobrien$ openstack stack list | 9b026354-c071-4e31-8611-11fef2f408f5 | ONAP1125_6 | CREATE_IN_PROGRESS | 2017-11-26T02:16:57Z | 2017-11-26T02:16:57Z |

| Wait for deployment | DCAE and several multi-service VMs down obrienbiometrics:openlab michaelobrien$ openstack server list | db5388c0-9fa5-4359-ad21-689dd0ce8955 | onap-multi-service | ERROR | | ubuntu-16-04-cloud-amd64 | | d712dce1-d39d-4c6e-8d21-d9da9aa40ea1 | onap-dcae-bootstrap | ACTIVE | oam_onap_awsf=10.0.4.1, 10.12.5.197 | ubuntu-16-04-cloud-amd64 | | 4724fa8e-e10b-46cb-a81d-e7a9a7df041e | onap-aai-inst1 | ACTIVE | oam_onap_awsf=10.0.1.1, 10.12.5.118 | ubuntu-14-04-cloud-amd64 | | bc4ef1f3-422d-4e66-a21b-8c5a3d206938 | onap-portal | ACTIVE | oam_onap_awsf=10.0.9.1, 10.12.5.241 | ubuntu-14-04-cloud-amd64 | | 0f9edb8e-a379-4ab1-a6b1-c24763b69ecd | onap-policy | ACTIVE | oam_onap_awsf=10.0.6.1, 10.12.5.17 | ubuntu-14-04-cloud-amd64 | | bd1f29e3-e05e-4570-9f41-94af83aec7d6 | onap-aai-inst2 | ACTIVE | oam_onap_awsf=10.0.1.2, 10.12.5.252 | ubuntu-14-04-cloud-amd64 | | 57e90b08-d69e-4770-a298-97f64387e60d | onap-dns-server | ACTIVE | oam_onap_awsf=10.0.100.1, 10.12.5.237 | ubuntu-14-04-cloud-amd64 | | e9dd8800-0f77-4658-90b0-db98f4689485 | onap-message-router | ACTIVE | oam_onap_awsf=10.0.11.1, 10.12.5.234 | ubuntu-14-04-cloud-amd64 | | af6120d8-419a-45f9-ae32-b077b9ace407 | onap-sdnc | ACTIVE | oam_onap_awsf=10.0.7.1, 10.12.5.226 | ubuntu-14-04-cloud-amd64 | | b6daf774-dc6a-4c9b-aaa3-ca8fc5734ac3 | onap-clamp | ACTIVE | oam_onap_awsf=10.0.12.1, 10.12.5.128 | ubuntu-16-04-cloud-amd64 | | 31524fcb-d1b2-427b-b0bf-29e8fc65fded | onap-sdc | ACTIVE | oam_onap_awsf=10.0.3.1, 10.12.5.92 | ubuntu-16-04-cloud-amd64 | | 31f8c1e7-a7e7-417d-a9df-cc5d65d7777c | onap-vid | ACTIVE | oam_onap_awsf=10.0.8.1, 10.12.5.218 | ubuntu-14-04-cloud-amd64 | | 482befc8-2a6a-4da7-8e05-8f8b294f80d2 | onap-robot | ACTIVE | oam_onap_awsf=10.0.10.1, 10.12.6.21 | ubuntu-16-04-cloud-amd64 | | 8ea76387-aadf-46da-8257-5e9c2f80fa48 | onap-appc | ACTIVE | oam_onap_awsf=10.0.2.1, 10.12.5.222 | ubuntu-14-04-cloud-amd64 | | 43b90061-885f-454b-8830-9da3338fca56 | onap-so | ACTIVE | oam_onap_awsf=10.0.5.1, 10.12.5.230 | ubuntu-16-04-cloud-amd64 | |

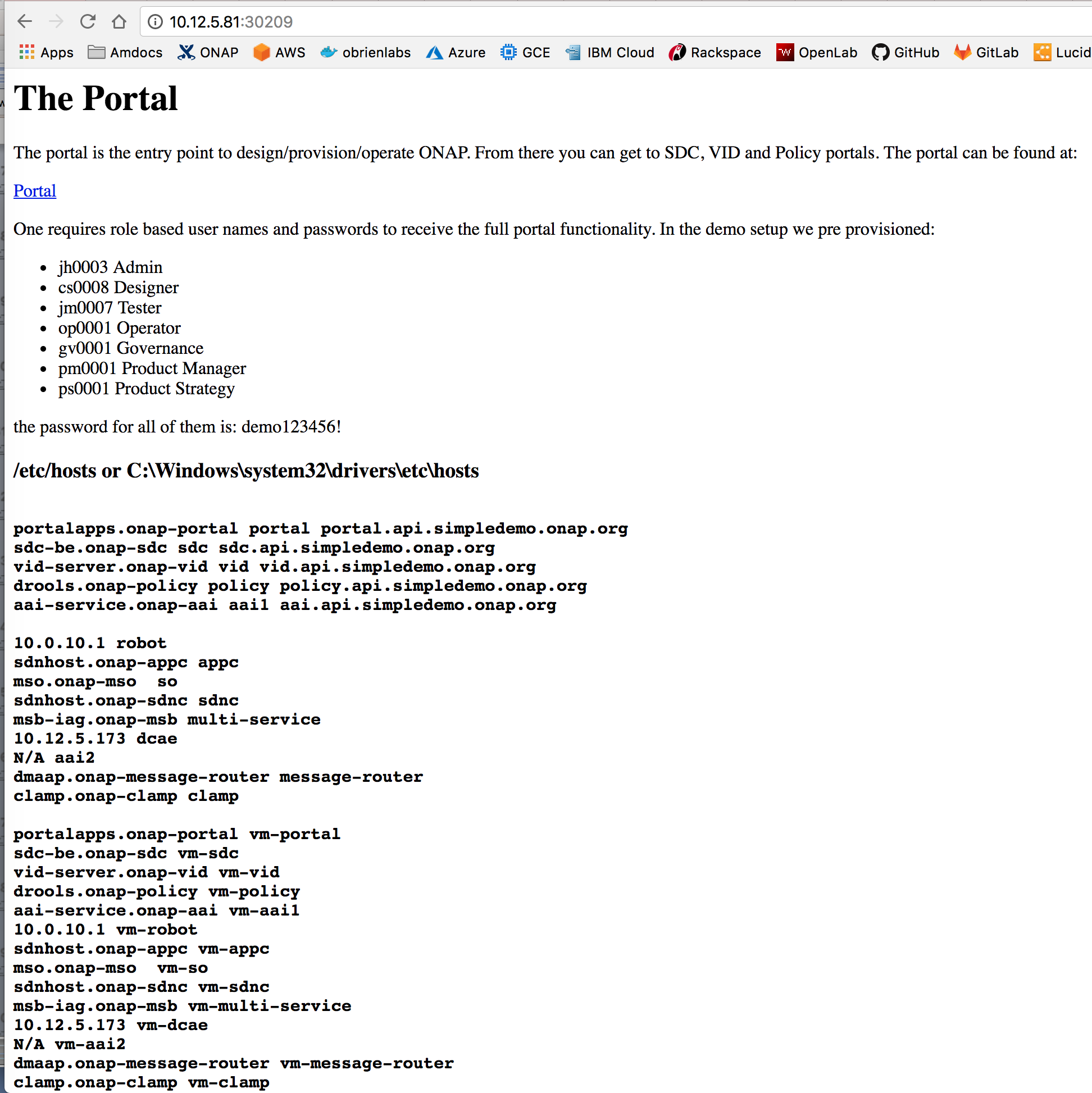

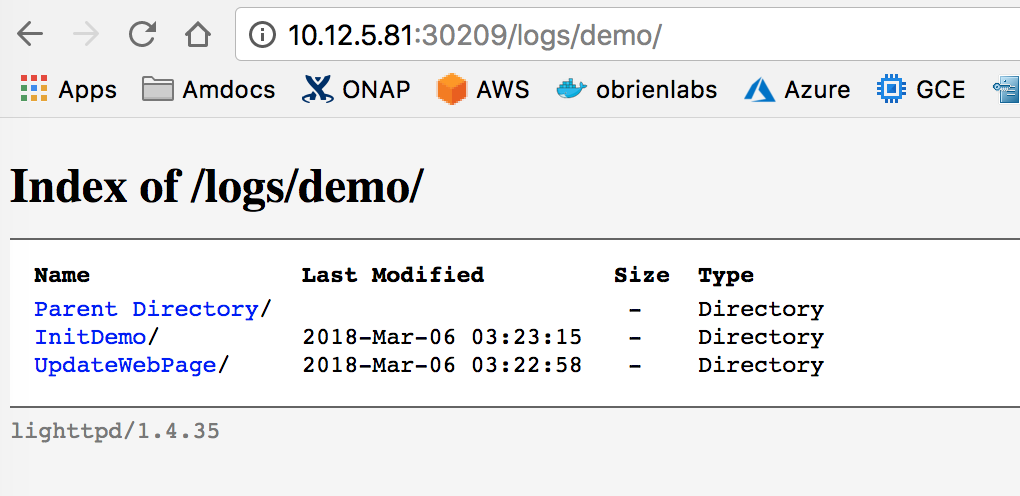
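While waiting for the stack, the `openstack server list` output from the "Wait for deployment" step can be scanned for VMs that are not ACTIVE instead of reading the table by eye. The following is a minimal sketch with hypothetical function names; it assumes the CLI's default table output with one row per line, and the two sample rows are copied from the listing above.

```python
def parse_server_rows(table_text):
    """Extract id, name and status from 'openstack server list' table output."""
    rows = []
    for line in table_text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip +----+ border lines and anything else
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) < 3 or cells[0] == "ID":
            continue  # skip the header row
        rows.append({"id": cells[0], "name": cells[1], "status": cells[2]})
    return rows


def not_active(rows):
    """Names of servers whose status is anything other than ACTIVE."""
    return [row["name"] for row in rows if row["status"] != "ACTIVE"]


SAMPLE = """\
+--------------------------------------+--------------------+--------+----------+--------------------------+
| ID | Name | Status | Networks | Image Name |
+--------------------------------------+--------------------+--------+----------+--------------------------+
| db5388c0-9fa5-4359-ad21-689dd0ce8955 | onap-multi-service | ERROR | | ubuntu-16-04-cloud-amd64 |
| 4724fa8e-e10b-46cb-a81d-e7a9a7df041e | onap-aai-inst1 | ACTIVE | oam_onap_awsf=10.0.1.1, 10.12.5.118 | ubuntu-14-04-cloud-amd64 |
+--------------------------------------+--------------------+--------+----------+--------------------------+
"""

if __name__ == "__main__":
    print("not active:", not_active(parse_server_rows(SAMPLE)))
```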

configure local vi /etc/hosts | Enable the robot webserver to see error logs and get /etc/hosts values HEAT root@onap-robot:/opt# ./demo.sh init_robot OOM oom/kubernetes/robot/demo-k8s.sh init_robot 10.12.5.214 policy.api.simpledemo.onap.org 10.12.5.118 portal.api.simpledemo.onap.org 10.12.5.141 sdc.api.simpledemo.onap.org 10.12.5.92 vid.api.simpledemo.onap.org |

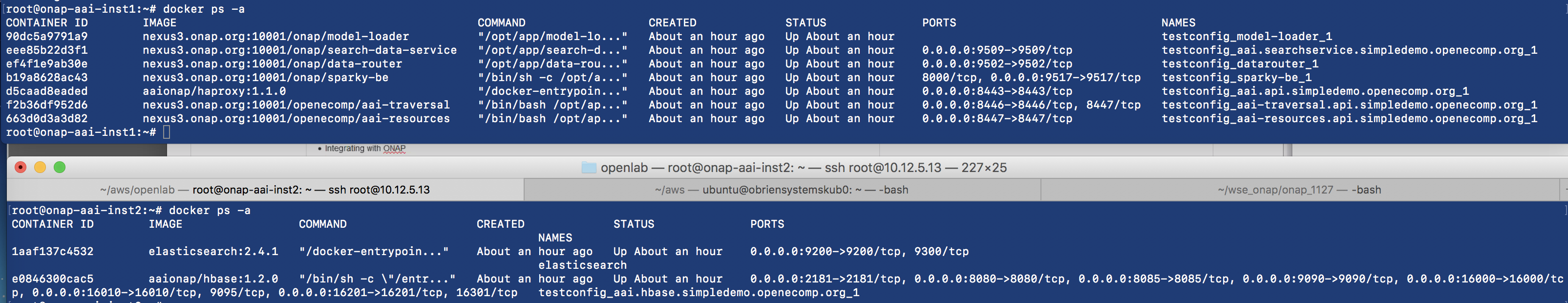

| Verify AAI_VM1 DNS | Intermittently AAI1 does not fully initialize: docker gets installed and the test-config dir gets pulled, but the 6 docker containers in the compose file do not come up. Log in to aai1 immediately after stack startup and add the following before test-config runs root@onap-aai-inst1:~# cat /etc/hosts 10.0.1.2 aai.hbase.simpledemo.openecomp.org 10.12.5.213 aai.hbase.simpledemo.openecomp.org |

| Enable robot webserver | |

| Spot check containers | | 1fe78720-e418-47f7-bcfd-b6b93c791448 | oom-cd-obrien-cd0 | ACTIVE | admin-private-mgmt=10.10.2.15, 10.12.25.117 |

check robot health Core components are PASS so lets continue with the vFW | Thanks Alexis for the 20171130 changes http://jenkins.onap.info/job/oom-cd/528/console 15:39:15 Basic SDNGC Health Check | PASS | 15:39:15 Basic A&AI Health Check | PASS | 15:39:15 Basic Policy Health Check | PASS | 15:39:15 Basic MSO Health Check | PASS | 15:39:15 Basic ASDC Health Check | PASS | 15:39:15 Basic APPC Health Check | PASS | 15:39:15 Basic Portal Health Check | PASS | 15:39:15 Basic Message Router Health Check | PASS | 15:39:15 Basic VID Health Check | PASS | 15:39:16 Basic Microservice Bus Health Check | PASS | 15:39:16 Basic CLAMP Health Check | PASS | 15:39:16 catalog API Health Check | PASS | 15:39:16 emsdriver API Health Check | PASS | 15:39:16 gvnfmdriver API Health Check | PASS | 15:39:16 huaweivnfmdriver API Health Check | PASS | 15:39:16 multicloud API Health Check | PASS | 15:39:16 multicloud-ocata API Health Check | PASS | 15:39:16 multicloud-titanium_cloud API Health Check | PASS | 15:39:16 multicloud-vio API Health Check | PASS | 15:39:16 nokiavnfmdriver API Health Check | PASS | 15:39:16 nslcm API Health Check | PASS | 15:39:16 resmgr API Health Check | PASS | 15:39:16 usecaseui-gui API Health Check | PASS | 15:39:16 vnflcm API Health Check | PASS | 15:39:16 vnfmgr API Health Check | PASS | 15:39:16 vnfres API Health Check | PASS | 15:39:16 workflow API Health Check | PASS | 15:39:16 ztesdncdriver API Health Check | PASS | 15:39:16 ztevmanagerdriver API Health Check | PASS | 15:39:16 OpenECOMP ETE.Robot.Testsuites.Health-Check :: Testing ecomp compo... | FAIL | 15:39:16 30 critical tests, 29 passed, 1 failed 15:39:16 30 tests total, 29 passed, 1 failed |
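The robot health check console output above is long enough that a failing component is easy to miss. The following is a minimal sketch of pulling the individual results out of such a log; the function names are hypothetical and the pattern assumes the `HH:MM:SS name | PASS |` layout shown in the Jenkins console excerpt above.

```python
import re

# One result looks like: "15:39:15 Basic SDNGC Health Check | PASS |"
CHECK_RE = re.compile(r"(\d{2}:\d{2}:\d{2}) (.+?) \| (PASS|FAIL) \|")


def parse_health_checks(log_text):
    """Return (check name, status) pairs in the order they appear."""
    return [
        (name.strip(), status)
        for _timestamp, name, status in CHECK_RE.findall(log_text)
    ]


def failed_checks(log_text):
    """Names of every entry reported as FAIL (including suite summary lines)."""
    return [name for name, status in parse_health_checks(log_text) if status == "FAIL"]


# Three entries copied from the console output above.
SAMPLE = (
    "15:39:15 Basic SDNGC Health Check | PASS | "
    "15:39:15 Basic A&AI Health Check | PASS | "
    "15:39:16 OpenECOMP ETE.Robot.Testsuites.Health-Check :: Testing ecomp compo... | FAIL | "
    "15:39:16 30 critical tests, 29 passed, 1 failed"
)

if __name__ == "__main__":
    for name, status in parse_health_checks(SAMPLE):
        print(status, name)
    print("failed:", failed_checks(SAMPLE))
```

Because the pattern does not depend on line breaks, it works on the flattened excerpt above as well as on a raw console log.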

Design/Runtime Issues

20171122: Do we run the older robot preload or do we do the SDNC rest PUT manually

Older Tutorial: Creating a Service Instance from a Design Model#RunRobotdemo.shpreloadofDemoModule

20171122: Do we use the older June vFW zip (yaml + env) or must we use a new split template

investigate Brian's comment on running vFW Demo on ONAP Amsterdam Release - "If you want to do closed loop for vFW there is a new two VNF service for Amsterdam (vFWCL - it is in the demo repo) that separates the traffic generator into a second VNF/Heat stack so that Policy an associate the event on the LB with the VNF to be controlled (the traffic generator) through APPC. Contact Pam and Marco for details."

INT-342

20171128: we are using the split vFWCL version

20171122: Do we run the older robot appc mountpoint or do we do the APPC rest PUT manually

20171125: Do we need R1 components to run the vFirewall like MultiVIM

There was a question about this from several developers - specifically, is MSO wrapped now, or can we run the vFW with a minimal set of VMs?

INT-346

20171125: Workaround for intermittent AAI-vm1 failure in HEAT

https://lists.onap.org/pipermail/onap-discuss/2017-November/006508.html

AAI-513

For now my internal DNS was not working - AAI1 did not see AAI2 - thanks Venkata - hardcoded the following in aai1 /etc/hosts

root@onap-aai-inst1:~# cat /etc/hosts

10.0.1.2 aai.hbase.simpledemo.openecomp.org

10.12.5.213 aai.hbase.simpledemo.openecomp.org

root@onap-aai-inst1:/opt/test-config# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

603e85af586f nexus3.onap.org:10001/onap/model-loader "/opt/app/model-lo..." About a minute ago Up About a minute testconfig_model-loader_1

9826995b7ad5 nexus3.onap.org:10001/onap/data-router "/opt/app/data-rou..." About a minute ago Up About a minute 0.0.0.0:9502->9502/tcp testconfig_datarouter_1

19dd8614b767 nexus3.onap.org:10001/onap/search-data-service "/opt/app/search-d..." About a minute ago Up About a minute 0.0.0.0:9509->9509/tcp testconfig_aai.searchservice.simpledemo.openecomp.org_1

89b93577733f nexus3.onap.org:10001/onap/sparky-be "/bin/sh -c /opt/a..." About a minute ago Up About a minute 8000/tcp, 0.0.0.0:9517->9517/tcp testconfig_sparky-be_1

c13e604e1fdc aaionap/haproxy:1.1.0 "/docker-entrypoin..." About a minute ago Up About a minute 0.0.0.0:8443->8443/tcp testconfig_aai.api.simpledemo.openecomp.org_1

00aa79860bd5 nexus3.onap.org:10001/openecomp/aai-traversal "/bin/bash /opt/ap..." 4 minutes ago Up 4 minutes 0.0.0.0:8446->8446/tcp, 8447/tcp testconfig_aai-traversal.api.simpledemo.openecomp.org_1

54747c3594fc nexus3.onap.org:10001/openecomp/aai-resources "/bin/bash /opt/ap..." 7 minutes ago Up 7 minutes 0.0.0.0:8447->8447/tcp testconfig_aai-resources.api.simpledemo.openecomp.org_1

root@onap-aai-inst1:~# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
root@onap-aai-inst1:/opt# ./aai_vm_init.sh
Waiting for 'testconfig_aai-resources.api.simpledemo.openecomp.org_1' deployment to finish ...
Waiting for 'testconfig_aai-resources.api.simpledemo.openecomp.org_1' deployment to finish ...
ERROR: testconfig_aai-resources.api.simpledemo.openecomp.org_1 deployment failed
root@onap-aai-inst1:/opt# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
1f1476cbd6f5 nexus3.onap.org:10001/openecomp/aai-resources "/bin/bash /opt/ap..." 14 minutes ago Up 14 minutes 0.0.0.0:8447->8447/tcp testconfig_aai-resources.api.simpledemo.openecomp.org_1
root@onap-aai-inst1:/opt# docker logs -f testconfig_aai-resources.api.simpledemo.openecomp.org_1
aai.hbase.simpledemo.openecomp.org: forward host lookup failed: Unknown host
Waiting for hbase to be up

FIX: reboot and add the /etc/hosts entry right after startup, either before or after aai_install.sh but before test-config/deploy_vm1.sh

root@onap-aai-inst1:~# cat /etc/hosts
10.0.1.2 aai.hbase.simpledemo.openecomp.org
10.12.5.213 aai.hbase.simpledemo.openecomp.org
root@onap-aai-inst2:~# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
1aaf137c4532 elasticsearch:2.4.1 "/docker-entrypoin..." About an hour ago Up About an hour 0.0.0.0:9200->9200/tcp, 9300/tcp elasticsearch
e0846300cac5 aaionap/hbase:1.2.0 "/bin/sh -c \"/entr..." About an hour ago Up About an hour 0.0.0.0:2181->2181/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:8085->8085/tcp, 0.0.0.0:9090->9090/tcp, 0.0.0.0:16000->16000/tcp, 0.0.0.0:16010->16010/tcp, 9095/tcp, 0.0.0.0:16201->16201/tcp, 16301/tcp testconfig_aai.hbase.simpledemo.openecomp.org_1
root@onap-aai-inst1:~# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
90dc5a9791a9 nexus3.onap.org:10001/onap/model-loader "/opt/app/model-lo..." 36 minutes ago Up 35 minutes testconfig_model-loader_1
eee85b22d3f1 nexus3.onap.org:10001/onap/search-data-service "/opt/app/search-d..." 36 minutes ago Up 35 minutes 0.0.0.0:9509->9509/tcp testconfig_aai.searchservice.simpledemo.openecomp.org_1
ef4f1e9ab30e nexus3.onap.org:10001/onap/data-router "/opt/app/data-rou..." 36 minutes ago Up 35 minutes 0.0.0.0:9502->9502/tcp testconfig_datarouter_1
b19a8628ac43 nexus3.onap.org:10001/onap/sparky-be "/bin/sh -c /opt/a..." 36 minutes ago Up 36 minutes 8000/tcp, 0.0.0.0:9517->9517/tcp testconfig_sparky-be_1
d5caad8eaded aaionap/haproxy:1.1.0 "/docker-entrypoin..." 36 minutes ago Up 36 minutes 0.0.0.0:8443->8443/tcp testconfig_aai.api.simpledemo.openecomp.org_1
f2b36df952d6 nexus3.onap.org:10001/openecomp/aai-traversal "/bin/bash /opt/ap..." 38 minutes ago Up 38 minutes 0.0.0.0:8446->8446/tcp, 8447/tcp testconfig_aai-traversal.api.simpledemo.openecomp.org_1
663d0d3a3d82 nexus3.onap.org:10001/openecomp/aai-resources "/bin/bash /opt/ap..." 40 minutes ago Up 40 minutes 0.0.0.0:8447->8447/tcp testconfig_aai-resources.api.simpledemo.openecomp.org_1
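The failure above comes down to aai.hbase.simpledemo.openecomp.org not resolving when the containers start. A minimal Python sketch of a pre-flight check over /etc/hosts-style text follows; the helper name hosts_entries is hypothetical and is not part of any ONAP script.

```python
def hosts_entries(hosts_text, hostname):
    """Return the IP addresses that an /etc/hosts-style text maps to hostname."""
    addresses = []
    for line in hosts_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        # First field is the address, the rest are names and aliases.
        if hostname in fields[1:]:
            addresses.append(fields[0])
    return addresses


# Entries copied from the console capture on this page.
SAMPLE = """\
10.0.1.2 aai.hbase.simpledemo.openecomp.org
10.12.5.213 aai.hbase.simpledemo.openecomp.org
"""

if __name__ == "__main__":
    found = hosts_entries(SAMPLE, "aai.hbase.simpledemo.openecomp.org")
    print(found or "no entry: add one before running deploy_vm1.sh")
```

On a VM, the same function could be fed the contents of /etc/hosts before deploy_vm1.sh is run.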

Still need to verify the DNS setting for the other VMs

20171127: Running Heatbridge from robot

20171127: key management in the single/split vFW

POLICY-409

20171127 Which template is supported vFW old/new-split or both

Use the newer split one in vFWCL, as documented in POLICY-409 since 4th Nov.

20171128: VMware VIO Requirements for vFW Deployment

TODO: expand on requirement of MultiCloud for VF-Module creation on VMware VIO.

At the final step of VF-Module creation, SO can use VIO in two modes.

(a) SO ↔ VIO

In this case, certificate challenges appeared in the SO logs and were resolved with the following steps.

a.1 Pick up the VIO certificate from the load balancer VM, at the path /usr/local/share/ca-certificates.

a.2 Copy the certificate to /usr/local/share/ca-certificates inside the MSO_TestLab container.

a.3 Run update-ca-certificates as root inside the mso_testlab container.

(b) SO ↔ MultiCloud ↔ VIO

b.1 Update the identity URL in cloud-config.json, present in the SO test lab container, to point to the MultiCloud endpoint.

b.2 MultiCloud needs to register the VIM to ESR.
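Step b.1 can be sketched in Python as below. This is only an illustration: the key name identity_url, the sample JSON layout and both URLs are assumptions, since the real cloud-config.json is not shown on this page. The function walks the whole tree so that it does not depend on where the key sits.

```python
import json


def set_identity_url(config, new_url, key="identity_url"):
    """Return a copy of a parsed JSON tree with every `key` value replaced."""
    if isinstance(config, dict):
        return {
            k: new_url if k == key else set_identity_url(v, new_url, key)
            for k, v in config.items()
        }
    if isinstance(config, list):
        return [set_identity_url(item, new_url, key) for item in config]
    return config


# Hypothetical fragment; the real cloud-config.json layout may differ.
EXAMPLE = json.loads(
    '{"identity_services": {"DEFAULT": '
    '{"identity_url": "http://keystone.example:5000/v2.0"}}}'
)

if __name__ == "__main__":
    # Placeholder endpoint, not a documented MultiCloud URL.
    updated = set_identity_url(EXAMPLE, "http://multicloud.example/placeholder")
    print(json.dumps(updated, indent=2))
```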

20171128: SDNC VM HD fills up - controller container shuts down 24h after a failed VNF preload

See SDNC-204 and SDNC-156.

vFW status: 20171129: (Note CL videos from Marco are on the main demo page)

[12:50]

oom = SDC onboarding OK (master) - will do robot init tomorrow in 1.1

[12:51]

heat = reworked the vnf preload with the right network id - but the SDNC VM filled to 100% HD after 3 days - bringing down the controller (will raise a jira) - need a log rotation strategy - refreshing the VM or the stack for tomorrow at 12

| onap-sdnc | ubuntu-14-04-cloud-amd64 | oam_onap_Ze9k | m1.large | onap_key_Ze9k | Active | nova | None | Running | 1 day, 5 hours |

Fix: reboot the instance to get back to 8%

root@onap-aai-inst1:~# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
90dc5a9791a9 nexus3.onap.org:10001/onap/model-loader "/opt/app/model-lo..." 37 hours ago Up 37 hours testconfig_model-loader_1
eee85b22d3f1 nexus3.onap.org:10001/onap/search-data-service "/opt/app/search-d..." 37 hours ago Up 37 hours 0.0.0.0:9509->9509/tcp testconfig_aai.searchservice.simpledemo.openecomp.org_1
ef4f1e9ab30e nexus3.onap.org:10001/onap/data-router "/opt/app/data-rou..." 37 hours ago Up 37 hours 0.0.0.0:9502->9502/tcp testconfig_datarouter_1
b19a8628ac43 nexus3.onap.org:10001/onap/sparky-be "/bin/sh -c /opt/a..." 37 hours ago Up 37 hours 8000/tcp, 0.0.0.0:9517->9517/tcp testconfig_sparky-be_1
d5caad8eaded aaionap/haproxy:1.1.0 "/docker-entrypoin..." 37 hours ago Up 37 hours 0.0.0.0:8443->8443/tcp testconfig_aai.api.simpledemo.openecomp.org_1
f2b36df952d6 nexus3.onap.org:10001/openecomp/aai-traversal "/bin/bash /opt/ap..." 37 hours ago Up 37 hours 0.0.0.0:8446->8446/tcp, 8447/tcp testconfig_aai-traversal.api.simpledemo.openecomp.org_1
663d0d3a3d82 nexus3.onap.org:10001/openecomp/aai-resources "/bin/bash /opt/ap..." 37 hours ago Up 37 hours 0.0.0.0:8447->8447/tcp testconfig_aai-resources.api.simpledemo.openecomp.org_1
root@onap-aai-inst1:~# df
Filesystem 1K-blocks Used Available Use% Mounted on
/dev/vda1 82536112 6153472 72996520 8% /
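Since a full disk is what brought the controller down, it may help to watch the Use% column between reboots. The following Python sketch reads that value from df output; the function name and the 90% threshold are illustrative choices, not something the ONAP scripts provide.

```python
def usage_percent(df_output, mount="/"):
    """Return Use% (as an int) for `mount` from `df` output, or None if absent."""
    for line in df_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 6 and fields[5] == mount:
            return int(fields[4].rstrip("%"))
    return None


def needs_attention(percent, threshold=90):
    """True when a usage percentage is known and at or above the threshold."""
    return percent is not None and percent >= threshold


# The df output captured on this page after the reboot.
SAMPLE = """\
Filesystem 1K-blocks Used Available Use% Mounted on
/dev/vda1 82536112 6153472 72996520 8% /
"""

if __name__ == "__main__":
    percent = usage_percent(SAMPLE)
    print(percent, needs_attention(percent))
```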

Test Deployments

20171125:2100: HEAT

Ran out of RAM for onap-multi-service:

No valid host was found. There are not enough hosts available. compute-08: (RamFilter) Insufficient usable RAM: req:16384, avail:3297.0 MB, compute-09: (RamFilter) Insufficient usable RAM: req:16384, avail:13537.0 MB, compute-06: (RamFilter) Insufficient

robot VM docker containers down:

root@onap-robot:/opt# ./robot_vm_init.sh
Already up-to-date.
Already up-to-date.
Login Succeeded
1.1-STAGING-latest: Pulling from openecomp/testsuite
Digest: sha256:5f48706ba91a4bb805bff39e67bb52b26011d59f690e53dfa1d803745939c76a
Status: Image is up to date for nexus3.onap.org:10001/openecomp/testsuite:1.1-STAGING-latest
Error response from daemon: No such container: openecompete_container

fix: wait for them

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
77c6eba8c641 nexus3.onap.org:10001/onap/sniroemulator:latest "/docker-entrypoin..." 30 seconds ago Up 29 seconds 8080-8081/tcp, 0.0.0.0:8080->9999/tcp sniroemulator
903964fc8fe1 nexus3.onap.org:10001/openecomp/testsuite:1.1-STAGING-latest "lighttpd -D -f /e..." 30 seconds ago Up 29 seconds 0.0.0.0:88->88/tcp openecompete_container
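The RamFilter message lists, per compute host, the requested and available RAM. A small Python sketch that turns such a message into a per-host shortfall is shown here; the regular expression is written against the message format seen on this page only and may not match other OpenStack releases.

```python
import re

# Matches e.g. "compute-08: (RamFilter) Insufficient usable RAM: req:16384, avail:3297.0 MB"
RAM_RE = re.compile(
    r"(?P<host>[\w.-]+): \(RamFilter\) Insufficient usable RAM: "
    r"req:(?P<req>\d+), avail:(?P<avail>[\d.]+) MB"
)


def ram_shortfalls(message):
    """Map each rejected host to the RAM in MB it lacks for the request."""
    return {
        m["host"]: int(m["req"]) - float(m["avail"])
        for m in RAM_RE.finditer(message)
    }


# The two complete host entries from the scheduler error on this page.
MESSAGE = (
    "compute-08: (RamFilter) Insufficient usable RAM: req:16384, avail:3297.0 MB, "
    "compute-09: (RamFilter) Insufficient usable RAM: req:16384, avail:13537.0 MB"
)

if __name__ == "__main__":
    for host, missing in ram_shortfalls(MESSAGE).items():
        print(f"{host}: short by {missing} MB")
```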

| Wait for deployment | AAI-513 |

20171201: OOM release-1.1.0

Filling in over the weekend | See daily videos and Alexis Videos on the vFWCL and his expanded wiki |

48 Comments

Ran Pollak

Great Page Michael,

I've also added the link to "ONAP master branch Stabilization", so the community can more easily find the current issues and workarounds.

ramki krishnan

Really cool, Michael - we will also add VMware VIO details shortly.

Michael O'Brien

Incorporate answers to questions in https://lists.onap.org/pipermail/onap-discuss/2017-November/006537.html

Gaurav Gupta

Updated the VIO Requirement Section

Michael O'Brien

vFW status: 20171129: (Note CL videos from Marco are on the main demo page)

[12:50]

oom = SDC onboarding OK (master) - will do robot init tomorrow in 1.1

[12:51]

heat = reworked the vnf preload with the right network id - but the SDNC VM filled to 100% HD after 3 days - bringing down the controller (will raise a jira) - need a log rotation strategy - refreshing the VM or the stack for tomorrow at 12

Brian Freeman

The SDNC unknown.log fill is because of a bug in the delete processing (and the wrong configuration in log4j that doesn't roll unknown.log). Both are fixed.

Remove unknown.log and restart the sdnc_controller_container

https://gerrit.onap.org/r/#/c/24805/

https://gerrit.onap.org/r/#/c/24927/

Check with Dan to see if you are getting the right container when you run sdnc_vm_init.sh

Michael O'Brien

Will do, I will redeploy the stack today - but 24805/24927 were merged on the 22nd - my stack is from the 26th - so likely we have not updated the sh script to pull the latest image yet.

SDNC-156

SDNC-204

/michael

Brian Freeman

Michael,

A way to check whether you have the latest SDNC container is to check

.../current/etc/org.ops4j.pax.logging.cfg to look for directedGraphNode instead of unknown as the default appender log file.

Brian

log4j.appender.directed-graph-node-id.appender.layout.ConversionPattern=%d{ISO8601} | %-5.5p | %X{nodeId} | %m%n
log4j.appender.directed-graph-node-id.appender.file=${karaf.data}/log/$\\{nodeId\\}.log

Brian Freeman

I removed unknown.log and rebooted the VM. The sdnc_controller_container is 13 days old and the logging.cfg doesn't have the changes for log roll on unknown.log. Need to work with Dan to get the updated container.
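The check discussed in this thread (does the logging config still write to unknown.log?) could be scripted roughly as follows. This Python sketch assumes the file uses properties-style key=value lines and that appender log files are set by keys ending in .file; the two sample lines are made up for illustration and are not copied from a real container.

```python
def appender_files(cfg_text):
    """Collect {key: value} for properties-style keys ending in '.file'."""
    files = {}
    for line in cfg_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.strip().endswith(".file"):
            files[key.strip()] = value.strip()
    return files


def still_logs_to_unknown(cfg_text):
    """True if any appender file value still ends in unknown.log."""
    return any(v.endswith("unknown.log") for v in appender_files(cfg_text).values())


# Hypothetical sample lines; the key name "example" is not a real appender.
OLD_STYLE = "log4j.appender.example.file=${karaf.data}/log/unknown.log\n"
NEW_STYLE = "log4j.appender.example.file=${karaf.data}/log/directedGraphNode.log\n"

if __name__ == "__main__":
    print(still_logs_to_unknown(OLD_STYLE), still_logs_to_unknown(NEW_STYLE))
```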

Brian Freeman

I tried to do apt-get update in the container and got failures, so I am not sure what's wrong on that VM.

Michael O'Brien

I rebooted the SDNC VM and was able to do the preload that Marco and we set up. I had a liveness issue with the SDC VM; after a reboot it was OK. When creating the VF-Module for the vFW sink, I ran into the issue where we cannot delete the previously failed VF-Module on the VNF, so I will rerun from the beginning on a new service.

http://{{sdnc_ip}}:8282/restconf/operations/VNF-API:preload-vnf-topology-operation

{"output": {"svc-request-id":"robot12","response-code":"200","ack-final-indicator":"Y"}}

Will test the same on OOM today, on the 1.1.0 branch.

thank you

/michael
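The preload reply shown in this comment can be checked programmatically. The Python sketch below treats response-code "200" together with ack-final-indicator "Y" as success; that rule is an assumption drawn from this single reply, not from SDNC documentation.

```python
import json


def preload_succeeded(response_text):
    """True when the reply reports response-code 200 and a final ack of Y."""
    output = json.loads(response_text).get("output", {})
    return (
        output.get("response-code") == "200"
        and output.get("ack-final-indicator") == "Y"
    )


# The reply body quoted in the comment above.
REPLY = (
    '{"output": {"svc-request-id":"robot12",'
    '"response-code":"200","ack-final-indicator":"Y"}}'
)

if __name__ == "__main__":
    print(preload_succeeded(REPLY))
```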

Michael O'Brien

TODO: post OOM release-1.1.0 status 201711

https://gerrit.onap.org/r/#/c/25283/

ubuntu@ip-172-31-82-11:~/oom$ git status

On branch release-1.1.0

ubuntu@ip-172-31-82-11:~/oom$ kubectl get pods --all-namespaces | grep 0/

onap-aaf aaf-1993711932-39jbg 0/1 Running 0 2h

onap-aai data-router-2899278992-hwlbj 0/1 ImagePullBackOff 0 2h

ubuntu@ip-172-31-82-11:~/oom$ kubectl get pods --all-namespaces | grep 1/2

onap-aai model-loader-service-4017625447-gq81h 1/2 ImagePullBackOff 0 2h

onap-aai search-data-service-403696673-dm2r6 1/2 ImagePullBackOff 0 2h

onap-aai sparky-be-154298787-3h73z 1/2 ImagePullBackOff 0 2h

onap-mso mso-3784963895-lxz27 1/2 CrashLoopBackOff 29 2h

ubuntu@ip-172-31-82-11:~/oom$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system heapster-4285517626-4ghv8 1/1 Running 0 3h

kube-system kube-dns-638003847-x4qmc 3/3 Running 0 3h

kube-system kubernetes-dashboard-716739405-0h0pw 1/1 Running 0 3h

kube-system monitoring-grafana-2360823841-7h3zq 1/1 Running 0 3h

kube-system monitoring-influxdb-2323019309-s9pzl 1/1 Running 0 3h

kube-system tiller-deploy-737598192-0wjcb 1/1 Running 0 3h

onap-aaf aaf-1993711932-39jbg 0/1 Running 0 2h

onap-aaf aaf-cs-1310404376-bjr36 1/1 Running 0 2h

onap-aai aai-resources-1412762642-22cg7 2/2 Running 0 2h

onap-aai aai-service-749944520-w93c8 1/1 Running 0 2h

onap-aai aai-traversal-3084029645-nwj8h 2/2 Running 0 2h

onap-aai data-router-2899278992-hwlbj 0/1 ImagePullBackOff 0 2h

onap-aai elasticsearch-622738319-4rc06 1/1 Running 0 2h

onap-aai hbase-1949550546-03chp 1/1 Running 0 2h

onap-aai model-loader-service-4017625447-gq81h 1/2 ImagePullBackOff 0 2h

onap-aai search-data-service-403696673-dm2r6 1/2 ImagePullBackOff 0 2h

onap-aai sparky-be-154298787-3h73z 1/2 ImagePullBackOff 0 2h

onap-appc appc-1828810488-wz1w3 2/2 Running 0 2h

onap-appc appc-dbhost-2793739621-b0smf 1/1 Running 0 2h

onap-appc appc-dgbuilder-2298093128-mdghw 1/1 Running 0 2h

onap-clamp clamp-2211988013-246ps 1/1 Running 0 2h

onap-clamp clamp-mariadb-1812977665-7m17r 1/1 Running 0 2h

onap-cli cli-595710742-tfvtq 1/1 Running 0 2h

onap-consul consul-agent-3312409084-mk468 1/1 Running 2 2h

onap-consul consul-server-1173049560-7g9fl 1/1 Running 3 2h

onap-consul consul-server-1173049560-92l2t 1/1 Running 2 2h

onap-consul consul-server-1173049560-q8pqn 1/1 Running 3 2h

onap-kube2msb kube2msb-registrator-4293827076-w1z17 1/1 Running 0 2h

onap-log elasticsearch-1942187295-98bcl 1/1 Running 0 2h

onap-log kibana-3372627750-x184p 1/1 Running 0 2h

onap-log logstash-1708188010-f75gp 1/1 Running 0 2h

onap-message-router dmaap-3126594942-m2txb 1/1 Running 0 2h

onap-message-router global-kafka-666408702-11pmc 1/1 Running 0 2h

onap-message-router zookeeper-624700062-f8r0s 1/1 Running 0 2h

onap-msb msb-consul-3334785600-p9sj9 1/1 Running 0 2h

onap-msb msb-discovery-196547432-w92nd 1/1 Running 0 2h

onap-msb msb-eag-1649257109-tj6cd 1/1 Running 0 2h

onap-msb msb-iag-1033096170-1spj9 1/1 Running 0 2h

onap-mso mariadb-829081257-zh907 1/1 Running 0 2h

onap-mso mso-3784963895-lxz27 1/2 CrashLoopBackOff 28 2h

onap-multicloud framework-2273343137-4whh2 1/1 Running 0 2h

onap-multicloud multicloud-ocata-1517639325-6v7zl 1/1 Running 0 2h

onap-multicloud multicloud-vio-4239509896-05p6h 1/1 Running 0 2h

onap-multicloud multicloud-windriver-3629763724-37s6j 1/1 Running 0 2h

onap-policy brmsgw-1909438199-83tdx 1/1 Running 0 2h

onap-policy drools-2600956298-h683r 2/2 Running 0 2h

onap-policy mariadb-2660273324-3rk3w 1/1 Running 0 2h

onap-policy nexus-3663640793-d894d 1/1 Running 0 2h

onap-policy pap-466625067-76vp8 2/2 Running 0 2h

onap-policy pdp-2354817903-vx2vb 2/2 Running 0 2h

onap-portal portalapps-1783099045-mwv5f 2/2 Running 0 2h

onap-portal portaldb-3181004999-n5n8t 2/2 Running 0 2h

onap-portal portalwidgets-2060058548-w0bk2 1/1 Running 0 2h

onap-portal vnc-portal-3680188324-90dvg 1/1 Running 0 2h

onap-robot robot-2551980890-wl0s1 1/1 Running 0 2h

onap-sdc sdc-be-2336519847-96xd2 2/2 Running 0 2h

onap-sdc sdc-cs-1151560586-16vp5 1/1 Running 0 2h

onap-sdc sdc-es-2438522492-sn98n 1/1 Running 0 2h

onap-sdc sdc-fe-2862673798-cpxl5 2/2 Running 0 2h

onap-sdc sdc-kb-1258596734-124hw 1/1 Running 0 2h

onap-sdnc sdnc-1395102659-2xftv 2/2 Running 0 2h

onap-sdnc sdnc-dbhost-3029711096-w1qdk 1/1 Running 0 2h

onap-sdnc sdnc-dgbuilder-4267203648-rl1fj 1/1 Running 0 2h

onap-sdnc sdnc-portal-2558294154-9lfxz 1/1 Running 0 2h

onap-uui uui-4267149477-q6ns5 1/1 Running 0 2h

onap-uui uui-server-3441797946-wtwgn 1/1 Running 0 2h

onap-vfc vfc-catalog-840807183-p1b2v 1/1 Running 0 2h

onap-vfc vfc-emsdriver-2936953408-j9j6d 1/1 Running 0 2h

onap-vfc vfc-gvnfmdriver-2866216209-h928w 1/1 Running 0 2h

onap-vfc vfc-hwvnfmdriver-2588350680-7d9ml 1/1 Running 0 2h

onap-vfc vfc-jujudriver-406795794-tl75p 1/1 Running 0 2h

onap-vfc vfc-nokiavnfmdriver-1760240499-4ztkx 1/1 Running 0 2h

onap-vfc vfc-nslcm-3756650867-t9pvl 1/1 Running 0 2h

onap-vfc vfc-resmgr-1409642779-gjth8 1/1 Running 0 2h

onap-vfc vfc-vnflcm-3340104471-z5v0g 1/1 Running 0 2h

onap-vfc vfc-vnfmgr-2823857741-ssc20 1/1 Running 0 2h

onap-vfc vfc-vnfres-1792029715-j7t54 1/1 Running 0 2h

onap-vfc vfc-workflow-3450325534-42kjr 1/1 Running 0 2h

onap-vfc vfc-workflowengineactiviti-4110617986-6vbxv 1/1 Running 0 2h

onap-vfc vfc-ztesdncdriver-1452986549-zwqqq 1/1 Running 0 2h

onap-vfc vfc-ztevmanagerdriver-2080553526-82cpm 1/1 Running 0 2h

onap-vid vid-mariadb-3318685446-grr0k 1/1 Running 0 2h

onap-vid vid-server-248757250-b04tk 2/2 Running 0 2h

onap-vnfsdk postgres-436836560-tp4d0 1/1 Running 0 2h

onap-vnfsdk refrepo-1924147637-bfwrb 1/1 Running 0 2h

with the aai openecomp to onap change by Alexis - fixes 3 of the 4 aai containers

ubuntu@ip-172-31-82-11:~/oom/kubernetes/oneclick$ kubectl get pods --all-namespaces | grep onap-aai

onap-aai aai-resources-888548294-w907q 2/2 Running 0 7m

onap-aai aai-service-749944520-lp1rh 1/1 Running 0 7m

onap-aai aai-traversal-37564605-hp4tz 2/2 Running 0 7m

onap-aai data-router-3434587794-jmts3 0/1 CrashLoopBackOff 5 7m

onap-aai elasticsearch-622738319-wq95z 1/1 Running 0 7m

onap-aai hbase-1949550546-9dxqf 1/1 Running 0 7m

onap-aai model-loader-service-4144225433-qjcbf 2/2 Running 0 7m

onap-aai search-data-service-378072033-32fj4 2/2 Running 0 7m

onap-aai sparky-be-3094577325-cq6v5 2/2 Running 0 7m
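The grep 0/ and grep 1/2 filters used above catch the common cases but miss others, such as a 1/3 or 2/3 pod. A Python sketch that compares the two halves of the READY column is given here; it assumes the column layout of kubectl get pods --all-namespaces exactly as captured on this page.

```python
def not_ready(pods_output):
    """Return (namespace, pod, ready, status) rows whose READY count is short."""
    rows = []
    for line in pods_output.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[0] == "NAMESPACE":
            continue  # blank line, short line, or the header row
        namespace, name, ready, status = fields[:4]
        have, _, want = ready.partition("/")
        if not (have.isdigit() and want.isdigit()):
            continue  # not a READY value such as 1/2
        if int(have) < int(want):
            rows.append((namespace, name, ready, status))
    return rows


# Two rows taken from the onap-aai listing above.
SAMPLE = """\
NAMESPACE NAME READY STATUS RESTARTS AGE
onap-aai aai-resources-888548294-w907q 2/2 Running 0 7m
onap-aai data-router-3434587794-jmts3 0/1 CrashLoopBackOff 5 7m
"""

if __name__ == "__main__":
    for row in not_ready(SAMPLE):
        print(*row)
```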

Michael O'Brien

Adding Alexis, Mandeep

Marco, good catch – looking at it now.

A lot of changes in today – testing these from Alexis.

https://gerrit.onap.org/r/#/c/25287/1/kubernetes/config/docker/init/src/config/aai/data-router/dynamic/conf/entity-event-policy.xml

https://gerrit.onap.org/r/#/c/25277/

https://gerrit.onap.org/r/#/c/25257/

https://gerrit.onap.org/r/#/c/25263/

https://gerrit.onap.org/r/#/c/25279/1

https://gerrit.onap.org/r/#/c/25283/

From: PLATANIA, MARCO (MARCO) [mailto:platania@research.att.com]

Sent: Thursday, November 30, 2017 16:30

To: Michael O'Brien <Frank.Obrien@amdocs.com>; Mike Elliott <Mike.Elliott@amdocs.com>; Roger Maitland <Roger.Maitland@amdocs.com>

Subject: Possible error in SO configuration

Michael and Team,

I noticed something that may not work in SO in k8s. I see you have a config directory that contains all the configuration for ONAP components. This is put in a container that drops that config before the other containers are actually created.

In the SO case, in oom/kubernetes/config/docker/init/src/config/mso/mso/mso-docker.json, you have this line:

"admin_tenant": "OPENSTACK_SERVICE_TENANT_NAME_HERE",

OPENSTACK_SERVICE_TENANT_NAME_HERE gets replaced by OPENSTACK_SERVICE_TENANT_NAME defined in oom/kubernetes/config/onap-parameters.yaml. If you are using the same values as in onap-parameters-template.yaml, you have:

OPENSTACK_SERVICE_TENANT_NAME: "services" and, hence, "admin_tenant": "services" in mso-docker.json (eventually in the SO container), which is not what we have in SO deployments via Heat. It should be “service”, not “services”.

Hope this helps.

OOM-478
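The substitution Marco describes can be modelled in a few lines of Python. This is only a model of the behaviour in his email (a NAME_HERE token replaced by the value of NAME); it is not the actual OOM config-init code, and the function name is hypothetical.

```python
def render_config(template, parameters, suffix="_HERE"):
    """Replace each NAME + suffix token in template with parameters[NAME]."""
    rendered = template
    for name, value in parameters.items():
        rendered = rendered.replace(name + suffix, value)
    return rendered


# The line quoted from mso-docker.json in the email above.
TEMPLATE = '"admin_tenant": "OPENSTACK_SERVICE_TENANT_NAME_HERE",'

if __name__ == "__main__":
    # Value from onap-parameters-template.yaml, as reported in the email.
    print(render_config(TEMPLATE, {"OPENSTACK_SERVICE_TENANT_NAME": "services"}))
    # Value used by the Heat deployments, as reported in the email.
    print(render_config(TEMPLATE, {"OPENSTACK_SERVICE_TENANT_NAME": "service"}))
```

Comparing the two rendered lines makes the one-letter difference between the OOM and Heat configurations easy to spot.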

Arun Arora

Hi Michael,

The ONAP template link mentioned here is https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/ONAP/1.1.0-SNAPSHOT/ which points to the master branch which is ever changing.

I tried deploying it today and faced some issues. Shouldn't it be https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/ONAP/1.1.1/ i.e. the Amsterdam release?

I just re-deployed with the Amsterdam release template. Will update if it succeeds or not.

Thanks,

Arun

Arun Arora

Hi Michael,

Amsterdam deployment was good with the templates in https://nexus.onap.org/content/sites/raw/org.onap.demo/heat/ONAP/1.1.1/

Thanks,

Arun

Alexis de Talhouët

Good news all, vFW instantiation is working with latest OOM 1.1

Michael O'Brien

Alexis, excellent work as usual. Thank you for all your 15+ merges for release-1.1.0, the config docker image changes and the procedural changes. The next step is to retrofit all these flows back to the wiki, to aid future E2E oneclick orchestration efforts on these use cases.

You were instrumental in all the OOM hands-on over the last 3 days.

I thought we would need to do another session starting at service creation again after ./demo-k8s.sh init, but you ran all the way to vf-module creation just in time - thanks for that.

For community reference - the details of Alexis's VMs up in openstack orchestrated from OOM Kubernetes are at 06:30 on video 14 of 20171201

20171201_1100_14_of_14_daily_session_oom_Alexis_VMs_in_openstack_from_OOM_kubernetes.mp4

Vetted vFirewall Demo - Full draft how-to for F2F and ReadTheDocs

Arun Arora

Hi Michael,

Can you please put a note in the SDC steps to repeat them for the 2nd VNF as well? It's not clear currently.

Thanks,

Arun

Michael O'Brien

correct, was editing the quick steps from the other demo page - I think I will move them here as we retrofit what we actually did - the wiki is playing catch up with the videos on us.

Running the ONAP Demos#QuickstartInstructions

"Onboard home | drop vendor software prod repo on "top right" | select, import vsp | create | icon | submit for testing - do twice for each vFWSNK, vFWPG"

Gaurav Gupta

Hi Michael

There are a couple of pieces of information that should be added for vFWCL. Please note that all of this also applies to vLB/vDNS.

a - At least in vFWCL, we know there are 4 networks (Tenant Network, ONAP OAM Network, Protected Network and Unprotected Network) that are needed by the PktGen, Sink and Firewall VMs. These networks are specified in the SDNC preload, meaning they should be pre-created in Horizon.

b - What should the network topology/router look like, as indicated in Horizon?

c - How many networks are needed in vLB and vDNS respectively?

d - I see multiple devices attached to the Linux bridge in the firewall VM, such as the tap device and the ethernet interfaces eth1 and eth2. A pictorial representation of what each device's ingress/egress looks like would surely help.

Brian Freeman

Gaurav,

The only network that needs to be pre-created before running vFWCL is the ONAP_OAM network, which is created by the ONAP heat/oom install.

The other networks are created dynamically by the vFWCL heat templates. SDNC preload never means that the network has to be pre-created in Horizon. SDNC preload is simply a way to override the heat .env file assignments manually.
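The override relationship described in this reply can be pictured as a dictionary merge. In the Python sketch below, the function name and all parameter names and values are placeholders chosen for illustration; it does not reproduce how SDNC or Heat apply the values internally.

```python
def effective_parameters(env_defaults, preload):
    """Heat .env values, with any SDNC preload values taking precedence."""
    merged = dict(env_defaults)
    merged.update(preload)
    return merged


# Hypothetical parameter names and values, for illustration only.
ENV_DEFAULTS = {"example_network_name": "from_env", "example_version": "1.0"}
PRELOAD = {"example_network_name": "from_preload"}

if __name__ == "__main__":
    print(effective_parameters(ENV_DEFAULTS, PRELOAD))
```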

Gaurav Gupta

Thanks Brian for the info, and also Alex for replying on onap-discuss. I am doing some additional steps around networking for vFWCL. Let me work out why they are needed.

Shlomi Tsadok

Cloud region PUT to AAI fails with 500 Server Error:

PUT URL:

Auth:

Payload:

See here as well: AAI calls failing with 500

Arun Arora

Hi Shlomi,

You need to create Region first and then you can create a tenant entry in AAI.

Follow 8.1, 8.2 and 8.3 in https://wiki.onap.org/display/DW/running+vFW+Demo+on+ONAP+Amsterdam+Release

Thanks,

Arun

Shlomi Tsadok

Hi Arun,

I'm getting the same server error while trying to do so:

Arun Arora

OK, Before that did you import AAI certificate? If not please do it.

Apart from that make sure your AAI health check passes.

Try these and lets see if you can move forward.

Shlomi Tsadok

SSL cert is imported (client is postman btw) and AAI passed health check. Now I'm getting '400 bad request' and the following error:

Request is:

Shlomi Tsadok

Reported as bug: AAI-551

Brian Freeman

Did you notice that your cloud owner is Openstack in the URI and CloudOwner in the PUT buffer? It should be CloudOwner in both cases. Maybe you copy/pasted the wrong URI?

Shlomi Tsadok

Thanks for catching it. I tried with both 'CloudOwner' and 'cloudowner' in the URI and got the exact same error.

Shlomi Tsadok

James Forsyth nailed it. I missed the comma after "RegionOne", on line 3. Removing it solves the issue. Thanks!
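A stray trailing comma like this one can be caught before the request is sent by parsing the payload locally. A minimal Python sketch follows; the BAD payload is a hypothetical reconstruction, since the original request body is not shown on this page.

```python
import json


def json_error(text):
    """Return None if text is valid JSON, else the parser's error message."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return str(err)
    return None


# Hypothetical payload with a trailing comma after "RegionOne" on line 3.
BAD = """{
  "cloud-owner": "CloudOwner",
  "cloud-region-id": "RegionOne",
}"""

GOOD = """{
  "cloud-owner": "CloudOwner",
  "cloud-region-id": "RegionOne"
}"""

if __name__ == "__main__":
    print(json_error(BAD))
    print(json_error(GOOD))
```

The exact wording and position in the error message vary between Python versions, so the sketch only distinguishes valid from invalid.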

Michael O'Brien

This will work - note the CloudOwner, not Openstack - you will get a 201 Created.

PUT /aai/v11/cloud-infrastructure/cloud-regions/cloud-region/CloudOwner/RegionOne HTTP/1.1
Host: :30233
Accept: application/json
Content-Type: application/json
X-FromAppId: AAI
X-TransactionId: get_aai_subscr
Authorization: Basic QUFJOkFBSQ==
Cache-Control: no-cache
Postman-Token: 86c89bfa-9297-99e9-09c3-a5773bf7ae23

{
    "cloud-owner": "CloudOwner",
    "cloud-region-id": "RegionOne",
    "cloud-region-version": "v2",
    "cloud-type": "SharedNode",
    "cloud-zone": "CloudZone",
    "owner-defined-type": "OwnerType",
    "tenants": {
        "tenant": [{
            "tenant-id": "1035021",
            "tenant-name": "ecomp-dev"
        }]
    }
}

Huang Haibin

Where can I get the SSL cert? I use the curl tool. How do I use the SSL cert?

curl -X PUT https://10.96.0.34:8443/aai/v11/cloud-infrastructure/cloud-regions/CloudOwner/RegionOne --user AAI:AAI -H "Content-Type:application/json" -H "Accept:application/json" -k -d '{"cloud-owner": "CloudOwner", "cloud-region-id": "RegionOne"}'

{"requestError":{"serviceException":{"messageId":"SVC3002","text":"Error writing output performing %1 on %2 (msg=%3) (ec=%4)","variables":["GET","v11/cloud-infrastructure/cloud-regions/CloudOwner/RegionOne","Internal Error:java.lang.NullPointerException","ERR.5.4.4000"]}}}
vagrant@worker1:~$

Shlomi Tsadok

Cloud region configuration works now. I needed to add '"resource-version": "1512575879454"' to the request though (exact version could be determined by GET-ing the URI).
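Pulling the points from this thread together (same cloud owner in the URI and the body, the cloud-region path segment, and resource-version on updates), the request could be assembled as in this Python sketch. It only builds the URL, headers and body and sends nothing; the function name and the base URL in the usage are hypothetical, while the headers and path are copied from the working request above.

```python
import base64
import json


def cloud_region_put(base_url, cloud_owner, region_id, resource_version=None,
                     user="AAI", password="AAI"):
    """Build (url, headers, body) for an AAI v11 cloud-region PUT; nothing is sent."""
    url = (f"{base_url}/aai/v11/cloud-infrastructure/cloud-regions/"
           f"cloud-region/{cloud_owner}/{region_id}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {
        "Accept": "application/json",
        "Content-Type": "application/json",
        "X-FromAppId": "AAI",
        "X-TransactionId": "get_aai_subscr",
        "Authorization": f"Basic {token}",
    }
    # The same arguments feed the URI and the body, so the two cannot disagree.
    payload = {"cloud-owner": cloud_owner, "cloud-region-id": region_id}
    if resource_version is not None:
        # Reported above as needed for an existing region; read it with a GET first.
        payload["resource-version"] = resource_version
    return url, headers, json.dumps(payload)


if __name__ == "__main__":
    # Hypothetical host and port.
    url, headers, body = cloud_region_put(
        "https://aai.example:8443", "CloudOwner", "RegionOne", "1512575879454")
    print(url)
    print(body)
```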

Karen Joseph

I’m trying to reproduce the vFW creation process with release 1.1.0 branch of oom, following the video.

I’m failing on creating service instance in VID

any ideas/leads guys?

Shlomi Tsadok

IIRC on my deployment it was solved by running '/opt/demo.sh init' from robot VM

Gaurav Gupta

Karen Joseph, please refer to the wiki and the steps around the AAI PUT request: running vFW Demo on ONAP Amsterdam Release

Michael O'Brien

verify you have a cloud-region set - this will also set a tenant - then during robot init (init_customer) - the customer will be set in aai - same instructions as in heat except different ports.

/michael

Karen Joseph

I successfully deployed vFW using k8s client v1.7.3 server v1.7.11 and helm v2.7.2 client and server on Openstack

I had an issue with mso-docker.json and needed to change references to the mso service to localhost - to my understanding this is due to changes in hairpin configuration between the k8s versions. please let me know if you have a more elegant way to solve the issue...

I also needed to restart sdc and mso sometimes, so SDC issue is still happening, but is not consistent.

Shlomi Tsadok

All applications on Portal's 'Application Catalog' are grayed out, except for SDC. Health check for the grayed out applications is OK. What can cause that?

Michael O'Brien

verify you are using the right user - follow Tutorial: Creating a Service Instance from a Design Model

Michael O'Brien

BTW, welcome to ONAP it is good to see more of the team in the public space

/michael

Shlomi Tsadok

Thanks for all the help so far, glad to join a great community

pranjal sharma

Hi Michael,

Is the JIRA below still open?

https://jira.onap.org/browse/AAI-513

I am getting the same error in aai-vm2, but aai-vm1 is running now.

My DNS settings are as below:

dns_list: 192.168.20.5

external_dns: 8.8.8.8

dns_forwarder: 192.168.122.182

oam_network_cidr: 10.0.0.0/16

Please help.

Thanks

Pranjal

Michael O'Brien

In answer to a question from Eric Maye about bringing vFW zip files into the vnc-portal container

I find it easier if you are using the vnc-portal container to post the VNF zip files to a wiki page or anything accessible via browser from inside the Ubuntu image – then download them into the container there

Or scp the files to the kubernetes host – then copy them into the container via kubectl.
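For the second option, kubectl cp accepts a destination of the form namespace/pod:path. The Python sketch below only assembles the command line and does not run it; the local file name and the target directory are placeholders, and the pod name is the vnc-portal pod from the listing earlier on this page.

```python
def kubectl_cp_command(local_path, namespace, pod, container_path):
    """Build the argv for copying a local file into a pod with `kubectl cp`."""
    return ["kubectl", "cp", local_path, f"{namespace}/{pod}:{container_path}"]


if __name__ == "__main__":
    # Placeholder file name and target path.
    argv = kubectl_cp_command(
        "vfw.zip", "onap-portal", "vnc-portal-3680188324-90dvg", "/tmp/vfw.zip")
    print(" ".join(argv))
```

The resulting list can be passed to subprocess.run on the kubernetes host once the names are adjusted to the actual deployment.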

Nikita Chouhan

I am trying to run vFW service on Amsterdam.

At the time of service distribution in SDC, I am getting the error 500.

It States in the logs that "Distribution Engine is DOWN".

any ideas?

Shriganesh Thokal

Hi,

We have deployed all VNFs for the vFirewall use case on Beijing. All VNFs are getting instantiated, but we are unable to see traffic on the vSink portal. ONAP and Openstack are deployed on two different VMs on AWS. We found many errors in the instance logs, like:

W: GPG error: http://ppa.launchpad.net trusty InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY EB9B1D8886F44E2A

----------------------------------------------------------------

interface tap111 does not exist!

interface tap222 does not exist!

./v_firewall_init.sh: line 67: /var/lib/honeycomb/persist/context/data.json: No such file or directory

./v_firewall_init.sh: line 68: /var/lib/honeycomb/persist/config/data.json: No such file or directory

We have used following in pre-load,

{

"vnf-parameter-name": "demo_artifacts_version",

"vnf-parameter-value": "1.2.0-SNAPSHOT"

},

{

"vnf-parameter-name": "install_script_version",

"vnf-parameter-value": "1.2.0"

}

Can anyone help here.

Let me know if complete logs are required.

Suryansh Pratap

Nikita Chouhan, have you resolved this problem? If yes, can you please let me know the solution?